This article was first published on my personal blog at https://kezunlin.me/post/b90033a9/. Welcome to read!

Install and Configure Caffe on ubuntu 16.04

Series

- Part 1: Install and Configure Caffe on windows 10

- Part 2: Install and Configure Caffe on ubuntu 16.04

Guide

requirements:

- NVIDIA driver 396.54

- CUDA 8.0 + cudnn 6.0.21

- CUDA 9.2 + cudnn 7.1.4

- opencv 3.1.0 --->3.3.0

- python 2.7 + numpy 1.15.1

- python 3.5.2 + numpy 1.16.2

- protobuf 3.6.1 (static)

- caffe latest

The default protobuf, 2.6.1, has been tested and works.

The latest 3.6.1 also works here, but caffe must then be compiled with -std=c++11.

install CUDA + cudnn

see install and configure cuda 9.2 with cudnn 7.1 on ubuntu 16.04

tips: we need to recompile caffe with cudnn 7.1

Before compiling caffe, move the caffe/python/caffe/selective_search_ijcv_with_python folder outside the caffe source folder; otherwise an error occurs.

install protobuf

see Part 1: compile protobuf-cpp on ubuntu 16.04

which protoc

/usr/local/bin/protoc

protoc --version

libprotoc 3.6.1

caffe uses the static libprotoc 3.6.1.

install opencv

see compile opencv on ubuntu 16.04

which opencv_version

/usr/local/bin/opencv_version

opencv_version

3.3.0
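The version checks above can also be scripted as a quick sanity check before building. This is a minimal Python sketch, not part of the original workflow: the helper names parse_version and tool_version are hypothetical, and it assumes each tool prints a dotted version number somewhere in its output, as in the outputs shown above.

```python
import re
import shutil
import subprocess


def parse_version(output):
    """Extract the first dotted version (e.g. 'libprotoc 3.6.1' -> (3, 6, 1))."""
    match = re.search(r"\d+(?:\.\d+)*", output)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(0).split("."))


def tool_version(tool, flag=None):
    """Return the tool's version tuple, or None if the tool is not on PATH."""
    if shutil.which(tool) is None:
        return None
    cmd = [tool] + ([flag] if flag else [])
    out = subprocess.check_output(cmd, universal_newlines=True)
    return parse_version(out)


if __name__ == "__main__":
    print("protoc:", tool_version("protoc", "--version"))
    print("opencv:", tool_version("opencv_version"))
```

A result of None means the tool was not found on PATH, which usually points at a missing install or a missing PATH entry.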

python

python --version

Python 2.7.12

check numpy version

import numpy

numpy.__version__

'1.15.1'

import numpy

import inspect

inspect.getfile(numpy)

'/usr/local/lib/python2.7/dist-packages/numpy/__init__.pyc'
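The interactive check above can be wrapped in a small helper, which is handy when several interpreters (python 2.7 and python 3.5) are installed side by side. This is only a sketch; module_info is a hypothetical helper name, and it simply reports whatever the current interpreter resolves.

```python
import importlib


def module_info(name):
    """Return (version, file) for an importable module, or None if missing."""
    try:
        module = importlib.import_module(name)
    except ImportError:
        return None
    version = getattr(module, "__version__", "unknown")
    location = getattr(module, "__file__", "built-in")
    return version, location


if __name__ == "__main__":
    # Run this with each interpreter you plan to build pycaffe against.
    print(module_info("numpy"))
```

Running it once with python and once with python3 shows which numpy each interpreter picks up.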

compile caffe

clone repo

git clone https://github.com/BVLC/caffe.git

cd caffe

update repo

Updated on 20180822.

If you have changed your local Makefile and git pull origin master reports a merge conflict, discard the local change first and then pull again:

git checkout HEAD Makefile

git pull origin master

configure

mkdir build && cd build && cmake-gui ..

cmake-gui options

USE_CUDNN ON

USE_OPENCV ON

BUILD_python ON

BUILD_python_layer ON

BLAS atlas

CMAKE_CXX_FLAGS -std=c++11

CMAKE_INSTALL_PREFIX /home/kezunlin/program/caffe/build/install

Use -std=c++11.
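The same options can be passed on the command line instead of through cmake-gui. The sketch below builds such a command from a dictionary; cmake_command is a hypothetical helper, and the spellings BUILD_python and BUILD_python_layer are my reading of Caffe's CMake option names, so check them against what cmake-gui shows on your checkout.

```python
def cmake_command(options, source_dir=".."):
    """Build a cmake argument list from a dict of cache options."""
    cmd = ["cmake"]
    for key, value in options.items():
        cmd.append("-D{}={}".format(key, value))
    cmd.append(source_dir)
    return cmd


# Values copied from the cmake-gui options listed above.
CAFFE_OPTIONS = {
    "USE_CUDNN": "ON",
    "USE_OPENCV": "ON",
    "BUILD_python": "ON",          # assumed spelling, see note above
    "BUILD_python_layer": "ON",    # assumed spelling, see note above
    "BLAS": "atlas",
    "CMAKE_CXX_FLAGS": "-std=c++11",
    "CMAKE_INSTALL_PREFIX": "/home/kezunlin/program/caffe/build/install",
}

if __name__ == "__main__":
    # Printed for inspection; pass the list to subprocess.run to execute it.
    print(" ".join(cmake_command(CAFFE_OPTIONS)))
```

Passing the list form to subprocess avoids shell quoting problems with values such as -std=c++11.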

configure output

Dependencies:

BLAS : Yes (Atlas)

Boost : Yes (ver. 1.66)

glog : Yes

gflags : Yes

protobuf : Yes (ver. 3.6.1)

lmdb : Yes (ver. 0.9.17)

LevelDB : Yes (ver. 1.18)

Snappy : Yes (ver. 1.1.3)

OpenCV : Yes (ver. 3.1.0)

CUDA : Yes (ver. 9.2)

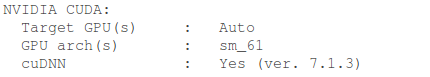

NVIDIA CUDA:

Target GPU(s) : Auto

GPU arch(s) : sm_61

cuDNN : Yes (ver. 7.1.4)

Python:

Interpreter : /usr/bin/python2.7 (ver. 2.7.12)

Libraries : /usr/lib/x86_64-linux-gnu/libpython2.7.so (ver 2.7.12)

NumPy : /usr/lib/python2.7/dist-packages/numpy/core/include (ver 1.51.1)

Documentaion:

Doxygen : /usr/bin/doxygen (1.8.11)

config_file : /home/kezunlin/program/caffe/.Doxyfile

Install:

Install path : /home/kezunlin/program/caffe-wy/build/install

Configuring done

We can also use python 3.5 and numpy 1.16.2:

Python:

Interpreter : /usr/bin/python3 (ver. 3.5.2)

Libraries : /usr/lib/x86_64-linux-gnu/libpython3.5m.so (ver 3.5.2)

NumPy : /home/kezunlin/.local/lib/python3.5/site-packages/numpy/core/include (ver 1.16.2)

use -std=c++11, otherwise errors occur

make -j8

[ 1%] Running C++/Python protocol buffer compiler on /home/kezunlin/program/caffe-wy/src/caffe/proto/caffe.proto

Scanning dependencies of target caffeproto

[ 1%] Building CXX object src/caffe/CMakeFiles/caffeproto.dir/__/__/include/caffe/proto/caffe.pb.cc.o

In file included from /usr/include/c++/5/mutex:35:0,

from /usr/local/include/google/protobuf/stubs/mutex.h:33,

from /usr/local/include/google/protobuf/stubs/common.h:52,

from /home/kezunlin/program/caffe-wy/build/include/caffe/proto/caffe.pb.h:9,

from /home/kezunlin/program/caffe-wy/build/include/caffe/proto/caffe.pb.cc:4:

/usr/include/c++/5/bits/c++0x_warning.h:32:2: error: #error This file requires compiler and library support for the ISO C++ 2011 standard. This support must be enabled with the -std=c++11 or -std=gnu++11 compiler options.

#error This file requires compiler and library support

fix gcc error

vim /usr/local/cuda/include/host_config.h

# comment out line 115:

#error-- unsupported GNU version! gcc versions later than 4.9 are not supported!

======>

//#error-- unsupported GNU version! gcc versions later than 4.9 are not supported!
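The manual edit above can be expressed as a small text transformation. This sketch matches the line by its content rather than by line number, on the assumption that the line number differs between CUDA versions; comment_out_gcc_check is a hypothetical helper and operates on the file contents as a string.

```python
def comment_out_gcc_check(text):
    """Comment out '#error' lines that mention 'unsupported GNU version'."""
    patched = []
    for line in text.splitlines(True):  # keep the original line endings
        stripped = line.lstrip()
        if stripped.startswith("#error") and "unsupported GNU version" in stripped:
            line = "//" + line
        patched.append(line)
    return "".join(patched)


if __name__ == "__main__":
    sample = "#error-- unsupported GNU version! gcc versions later than 4.9 are not supported!\n"
    print(comment_out_gcc_check(sample), end="")
```

Applying it twice leaves the text unchanged, because an already commented line no longer starts with #error. Back up host_config.h before writing the result, and note that writing under /usr/local/cuda normally requires root.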

fix gflags error

- caffe/include/caffe/common.hpp

- caffe/examples/mnist/convert_mnist_data.cpp

Comment out the ifndef

// #ifndef GFLAGS_GFLAGS_H_

namespace gflags = google;

// #endif // GFLAGS_GFLAGS_H_

compile

make clean

make -j8

make pycaffe

output

[ 1%] Running C++/Python protocol buffer compiler on /home/kezunlin/program/caffe-wy/src/caffe/proto/caffe.proto

Scanning dependencies of target caffeproto

[ 1%] Building CXX object src/caffe/CMakeFiles/caffeproto.dir/__/__/include/caffe/proto/caffe.pb.cc.o

[ 1%] Linking CXX static library ../../lib/libcaffeproto.a

[ 1%] Built target caffeproto

libcaffeproto.a is a static library.

install

make install

ls build/install

bin include lib python share

This installs caffe to the build/install folder.

ls build/install/lib

libcaffeproto.a libcaffe.so libcaffe.so.1.0.0

advanced

- INTERFACE_INCLUDE_DIRECTORIES

- INTERFACE_LINK_LIBRARIES

Target "caffe" has an INTERFACE_LINK_LIBRARIES property which differs from its LINK_INTERFACE_LIBRARIES properties.

Play with Caffe

python caffe

fix python caffe

fix ipython 6.1 version conflict

vim caffe/python/requirements.txt

ipython>=3.0.0

====>

ipython==5.4.1

reinstall ipython

pip install -r requirements.txt

cd caffe/python

python

>>>import caffe

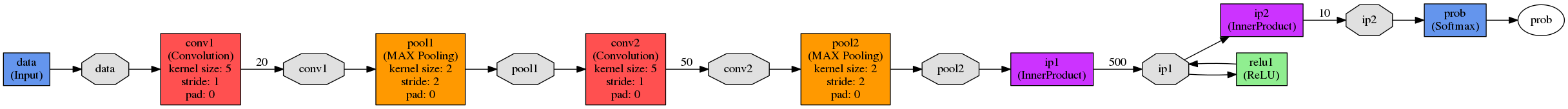
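To import pycaffe from any working directory instead of cd caffe/python, the python folder can be added to sys.path first. A minimal sketch follows; try_import_caffe is a hypothetical helper and the checkout path in the usage part is an assumption to adjust.

```python
import importlib
import sys


def try_import_caffe(caffe_python_dir):
    """Add caffe/python to sys.path and import pycaffe; return None on failure."""
    if caffe_python_dir not in sys.path:
        sys.path.insert(0, caffe_python_dir)
    try:
        return importlib.import_module("caffe")
    except ImportError:
        return None


if __name__ == "__main__":
    # Assumed checkout location; adjust to your own path.
    caffe = try_import_caffe("/home/kezunlin/program/caffe/python")
    if caffe is None:
        print("pycaffe is not importable; was `make pycaffe` run?")
    else:
        caffe.set_mode_cpu()  # or caffe.set_mode_gpu()
        print("pycaffe loaded from", caffe.__file__)
```

Setting PYTHONPATH to the same folder in .bashrc achieves the same effect without code changes.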

python draw net

sudo apt-get install graphviz

sudo pip install theano==0.9

# for theano d3viz

sudo pip install pydot==1.1.0

sudo pip install pydot-ng

# other useful tools

sudo pip install jupyter

sudo pip install seaborn

we need to install graphviz, otherwise we get ERROR:"dot" not found in path

draw net

cd $CAFFE_HOME

./python/draw_net.py ./examples/mnist/lenet.prototxt ./examples/mnist/lenet.png

eog ./examples/mnist/lenet.png

cpp caffe

train net

cd caffe

./examples/mnist/create_mnist.sh

./examples/mnist/train_lenet.sh

cat ./examples/mnist/train_lenet.sh

./build/tools/caffe train --solver=examples/mnist/lenet_solver.prototxt $@

output results

I0912 15:57:28.812655 14094 solver.cpp:327] Iteration 10000, loss = 0.00272129

I0912 15:57:28.812675 14094 solver.cpp:347] Iteration 10000, Testing net (#0)

I0912 15:57:28.891481 14100 data_layer.cpp:73] Restarting data prefetching from start.

I0912 15:57:28.893678 14094 solver.cpp:414] Test net output #0: accuracy = 0.9904

I0912 15:57:28.893707 14094 solver.cpp:414] Test net output #1: loss = 0.0276084 (* 1 = 0.0276084 loss)

I0912 15:57:28.893714 14094 solver.cpp:332] Optimization Done.

I0912 15:57:28.893719 14094 caffe.cpp:250] Optimization Done.
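When training runs are compared, it helps to pull the final test metrics out of the log automatically. The sketch below parses lines of the form shown above; parse_test_outputs is a hypothetical helper, and the regular expression assumes the "Test net output #k: name = value" layout seen in this log.

```python
import re

# Matches e.g. "Test net output #0: accuracy = 0.9904"
TEST_OUTPUT = re.compile(r"Test net output #\d+: (\w+) = ([-+.\deE]+)")


def parse_test_outputs(log_text):
    """Return {name: value}; later occurrences overwrite earlier ones."""
    results = {}
    for name, value in TEST_OUTPUT.findall(log_text):
        results[name] = float(value)
    return results


if __name__ == "__main__":
    log = (
        "I0912 15:57:28.893678 14094 solver.cpp:414] Test net output #0: accuracy = 0.9904\n"
        "I0912 15:57:28.893707 14094 solver.cpp:414] Test net output #1: loss = 0.0276084 (* 1 = 0.0276084 loss)\n"
    )
    print(parse_test_outputs(log))
```

Because later matches overwrite earlier ones, feeding the whole training log returns the metrics of the last test pass.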

Tip: caffe fails with the following error when the LMDB data is missing, for example when create_mnist.sh has not been run:

I0417 13:28:17.764714 35030 layer_factory.hpp:77] Creating layer mnist

F0417 13:28:17.765067 35030 db_lmdb.hpp:15] Check failed: mdb_status == 0 (2 vs. 0) No such file or directory
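A quick pre-flight check can catch this before training starts. The sketch below only tests that the database directories exist; missing_lmdb_dirs is a hypothetical helper, and the two directory names are an assumption about what the LeNet example reads, so verify them against the data_param source entries in your prototxt.

```python
import os


def missing_lmdb_dirs(caffe_root, relative_dirs):
    """Return the LMDB directories that do not exist under caffe_root."""
    return [d for d in relative_dirs
            if not os.path.isdir(os.path.join(caffe_root, d))]


# Assumed data sources of the LeNet example; check lenet_train_test.prototxt.
MNIST_LMDBS = [
    "examples/mnist/mnist_train_lmdb",
    "examples/mnist/mnist_test_lmdb",
]

if __name__ == "__main__":
    missing = missing_lmdb_dirs(".", MNIST_LMDBS)
    if missing:
        print("Missing LMDB data, run ./examples/mnist/create_mnist.sh:", missing)
    else:
        print("LMDB data found.")
```

Run it from the caffe root folder, since the example scripts use paths relative to it.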


upgrade net

./tools/upgrade_net_proto_text old.prototxt new.prototxt

./tools/upgrade_net_proto_binary old.caffemodel new.caffemodel

caffe time

- yolov3

./build/tools/caffe time --model='det/yolov3/yolov3.prototxt' --iterations=100 --gpu=0

I0313 10:15:41.888208 12527 caffe.cpp:408] Average Forward pass: 49.7012 ms.

I0313 10:15:41.888213 12527 caffe.cpp:410] Average Backward pass: 84.946 ms.

I0313 10:15:41.888248 12527 caffe.cpp:412] Average Forward-Backward: 134.85 ms.

- yolov3 autotrain

./build/tools/caffe time --model='det/autotrain/yolo3-autotrain-mbn-416-5c.prototxt' --iterations=100 --gpu=0

I0313 10:19:27.283625 12894 caffe.cpp:408] Average Forward pass: 38.4823 ms.

I0313 10:19:27.283630 12894 caffe.cpp:410] Average Backward pass: 74.1656 ms.

I0313 10:19:27.283638 12894 caffe.cpp:412] Average Forward-Backward: 112.732 ms.
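To compare two models, the average timings can be extracted from the caffe time output and turned into a ratio. This is a sketch under the assumption that the log uses the "Average <name>: <value> ms" layout shown above; parse_timings is a hypothetical helper.

```python
import re

# Matches e.g. "Average Forward pass: 49.7012 ms"
TIMING = re.compile(r"Average ([^:]+): ([\d.]+) ms")


def parse_timings(log_text):
    """Return {'Forward pass': ms, 'Backward pass': ms, 'Forward-Backward': ms}."""
    return {name: float(ms) for name, ms in TIMING.findall(log_text)}


if __name__ == "__main__":
    yolov3 = parse_timings(
        "caffe.cpp:408] Average Forward pass: 49.7012 ms. "
        "caffe.cpp:410] Average Backward pass: 84.946 ms. "
        "caffe.cpp:412] Average Forward-Backward: 134.85 ms."
    )
    autotrain = parse_timings(
        "caffe.cpp:408] Average Forward pass: 38.4823 ms. "
        "caffe.cpp:410] Average Backward pass: 74.1656 ms. "
        "caffe.cpp:412] Average Forward-Backward: 112.732 ms."
    )
    ratio = yolov3["Forward pass"] / autotrain["Forward pass"]
    print("forward pass ratio (yolov3 / autotrain): {:.2f}".format(ratio))
```

With the numbers above, the autotrain model's forward pass is roughly 1.3 times faster than yolov3's.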

Example

Caffe Classifier

#include <caffe/caffe.hpp>

#ifdef USE_OPENCV

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#endif // USE_OPENCV

#include <algorithm>

#include <fstream>

#include <iomanip>

#include <iosfwd>

#include <iostream>

#include <memory>

#include <string>

#include <utility>

#include <vector>

#ifdef USE_OPENCV

using namespace caffe; // NOLINT(build/namespaces)

using std::string;

/* Pair (label, confidence) representing a prediction. */

typedef std::pair<string, float> Prediction;

class Classifier {

public:

Classifier(const string& model_file,

const string& trained_file,

const string& mean_file,

const string& label_file);

std::vector<Prediction> Classify(const cv::Mat& img, int N = 5);

private:

void SetMean(const string& mean_file);

std::vector<float> Predict(const cv::Mat& img);

void WrapInputLayer(std::vector<cv::Mat>* input_channels);

void Preprocess(const cv::Mat& img,

std::vector<cv::Mat>* input_channels);

private:

shared_ptr<Net<float> > net_;

cv::Size input_geometry_;

int num_channels_;

cv::Mat mean_;

std::vector<string> labels_;

};

Classifier::Classifier(const string& model_file,

const string& trained_file,

const string& mean_file,

const string& label_file) {

#ifdef CPU_ONLY

Caffe::set_mode(Caffe::CPU);

#else

Caffe::set_mode(Caffe::GPU);

#endif

/* Load the network. */

net_.reset(new Net<float>(model_file, TEST));

net_->CopyTrainedLayersFrom(trained_file);

CHECK_EQ(net_->num_inputs(), 1) << "Network should have exactly one input.";

CHECK_EQ(net_->num_outputs(), 1) << "Network should have exactly one output.";

Blob<float>* input_layer = net_->input_blobs()[0];

num_channels_ = input_layer->channels();

CHECK(num_channels_ == 3 || num_channels_ == 1)

<< "Input layer should have 1 or 3 channels.";

input_geometry_ = cv::Size(input_layer->width(), input_layer->height());

/* Load the binaryproto mean file. */

SetMean(mean_file);

/* Load labels. */

std::ifstream labels(label_file.c_str());

CHECK(labels) << "Unable to open labels file " << label_file;

string line;

while (std::getline(labels, line))

labels_.push_back(string(line));

Blob<float>* output_layer = net_->output_blobs()[0];

CHECK_EQ(labels_.size(), output_layer->channels())

<< "Number of labels is different from the output layer dimension.";

}

static bool PairCompare(const std::pair<float, int>& lhs,

const std::pair<float, int>& rhs) {

return lhs.first > rhs.first;

}

/* Return the indices of the top N values of vector v. */

static std::vector<int> Argmax(const std::vector<float>& v, int N) {

std::vector<std::pair<float, int> > pairs;

for (size_t i = 0; i < v.size(); ++i)

pairs.push_back(std::make_pair(v[i], i));

std::partial_sort(pairs.begin(), pairs.begin() + N, pairs.end(), PairCompare);

std::vector<int> result;

for (int i = 0; i < N; ++i)

result.push_back(pairs[i].second);

return result;

}

/* Return the top N predictions. */

std::vector<Prediction> Classifier::Classify(const cv::Mat& img, int N) {

std::vector<float> output = Predict(img);

N = std::min<int>(labels_.size(), N);

std::vector<int> maxN = Argmax(output, N);

std::vector<Prediction> predictions;

for (int i = 0; i < N; ++i) {

int idx = maxN[i];

predictions.push_back(std::make_pair(labels_[idx], output[idx]));

}

return predictions;

}

/* Load the mean file in binaryproto format. */

void Classifier::SetMean(const string& mean_file) {

BlobProto blob_proto;

ReadProtoFromBinaryFileOrDie(mean_file.c_str(), &blob_proto);

/* Convert from BlobProto to Blob<float> */

Blob<float> mean_blob;

mean_blob.FromProto(blob_proto);

CHECK_EQ(mean_blob.channels(), num_channels_)

<< "Number of channels of mean file doesn't match input layer.";

/* The format of the mean file is planar 32-bit float BGR or grayscale. */

std::vector<cv::Mat> channels;

float* data = mean_blob.mutable_cpu_data();

for (int i = 0; i < num_channels_; ++i) {

/* Extract an individual channel. */

cv::Mat channel(mean_blob.height(), mean_blob.width(), CV_32FC1, data);

channels.push_back(channel);

data += mean_blob.height() * mean_blob.width();

}

/* Merge the separate channels into a single image. */

cv::Mat mean;

cv::merge(channels, mean);

/* Compute the global mean pixel value and create a mean image

* filled with this value. */

cv::Scalar channel_mean = cv::mean(mean);

mean_ = cv::Mat(input_geometry_, mean.type(), channel_mean);

}

std::vector<float> Classifier::Predict(const cv::Mat& img) {

Blob<float>* input_layer = net_->input_blobs()[0];

input_layer->Reshape(1, num_channels_,

input_geometry_.height, input_geometry_.width);

/* Forward dimension change to all layers. */

net_->Reshape();

std::vector<cv::Mat> input_channels;

WrapInputLayer(&input_channels);

Preprocess(img, &input_channels);

net_->Forward();

/* Copy the output layer to a std::vector */

Blob<float>* output_layer = net_->output_blobs()[0];

const float* begin = output_layer->cpu_data();

const float* end = begin + output_layer->channels();

return std::vector<float>(begin, end);

}

/* Wrap the input layer of the network in separate cv::Mat objects

* (one per channel). This way we save one memcpy operation and we

* don't need to rely on cudaMemcpy2D. The last preprocessing

* operation will write the separate channels directly to the input

* layer. */

void Classifier::WrapInputLayer(std::vector<cv::Mat>* input_channels) {

Blob<float>* input_layer = net_->input_blobs()[0];

int width = input_layer->width();

int height = input_layer->height();

float* input_data = input_layer->mutable_cpu_data();

for (int i = 0; i < input_layer->channels(); ++i) {

cv::Mat channel(height, width, CV_32FC1, input_data);

input_channels->push_back(channel);

input_data += width * height;

}

}

void Classifier::Preprocess(const cv::Mat& img,

std::vector<cv::Mat>* input_channels) {

/* Convert the input image to the input image format of the network. */

cv::Mat sample;

if (img.channels() == 3 && num_channels_ == 1)

cv::cvtColor(img, sample, cv::COLOR_BGR2GRAY);

else if (img.channels() == 4 && num_channels_ == 1)

cv::cvtColor(img, sample, cv::COLOR_BGRA2GRAY);

else if (img.channels() == 4 && num_channels_ == 3)

cv::cvtColor(img, sample, cv::COLOR_BGRA2BGR);

else if (img.channels() == 1 && num_channels_ == 3)

cv::cvtColor(img, sample, cv::COLOR_GRAY2BGR);

else

sample = img;

cv::Mat sample_resized;

if (sample.size() != input_geometry_)

cv::resize(sample, sample_resized, input_geometry_);

else

sample_resized = sample;

cv::Mat sample_float;

if (num_channels_ == 3)

sample_resized.convertTo(sample_float, CV_32FC3);

else

sample_resized.convertTo(sample_float, CV_32FC1);

cv::Mat sample_normalized;

cv::subtract(sample_float, mean_, sample_normalized);

/* This operation will write the separate BGR planes directly to the

* input layer of the network because it is wrapped by the cv::Mat

* objects in input_channels. */

cv::split(sample_normalized, *input_channels);

CHECK(reinterpret_cast<float*>(input_channels->at(0).data)

== net_->input_blobs()[0]->cpu_data())

<< "Input channels are not wrapping the input layer of the network.";

}

int main(int argc, char** argv) {

if (argc != 6) {

std::cerr << "Usage: " << argv[0]

<< " deploy.prototxt network.caffemodel"

<< " mean.binaryproto labels.txt img.jpg" << std::endl;

return 1;

}

::google::InitGoogleLogging(argv[0]);

string model_file = argv[1];

string trained_file = argv[2];

string mean_file = argv[3];

string label_file = argv[4];

Classifier classifier(model_file, trained_file, mean_file, label_file);

string file = argv[5];

std::cout << "---------- Prediction for "

<< file << " ----------" << std::endl;

cv::Mat img = cv::imread(file, -1);

CHECK(!img.empty()) << "Unable to decode image " << file;

std::vector<Prediction> predictions = classifier.Classify(img);

/* Print the top N predictions. */

for (size_t i = 0; i < predictions.size(); ++i) {

Prediction p = predictions[i];

std::cout << std::fixed << std::setprecision(4) << p.second << " - \""

<< p.first << "\"" << std::endl;

}

}

#else

int main(int argc, char** argv) {

LOG(FATAL) << "This example requires OpenCV; compile with USE_OPENCV.";

}

#endif // USE_OPENCV
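The top-N selection done by Argmax and Classify in the C++ example can be sketched in a few lines of Python, which is convenient for checking results against pycaffe output. The function top_n is a hypothetical helper; like the C++ code, it assumes labels and scores have the same length.

```python
import heapq


def top_n(labels, scores, n=5):
    """Return the n (label, score) pairs with the highest scores, best first."""
    n = min(n, len(labels))
    # Pick the indices of the n largest scores without sorting the whole list.
    best = heapq.nlargest(n, range(len(scores)), key=lambda i: scores[i])
    return [(labels[i], scores[i]) for i in best]


if __name__ == "__main__":
    for label, score in top_n(["cat", "dog", "fox"], [0.1, 0.7, 0.2], n=2):
        print('{:.4f} - "{}"'.format(score, label))
```

heapq.nlargest plays the role of std::partial_sort here: only the top n entries are ordered.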

CMakeLists.txt

find_package(OpenCV REQUIRED)

set(Caffe_DIR "/home/kezunlin/program/caffe-wy/build/install/share/Caffe") # caffe-wy caffe

# for CaffeConfig.cmake/ caffe-config.cmake

find_package(Caffe)

# official caffe: there is no Caffe_INCLUDE_DIRS and Caffe_DEFINITIONS

# refinedet caffe: OK.

add_definitions(${Caffe_DEFINITIONS})

MESSAGE( [Main] " Caffe_INCLUDE_DIRS = ${Caffe_INCLUDE_DIRS}")

MESSAGE( [Main] " Caffe_DEFINITIONS = ${Caffe_DEFINITIONS}")

MESSAGE( [Main] " Caffe_LIBRARIES = ${Caffe_LIBRARIES}") # caffe

MESSAGE( [Main] " Caffe_CPU_ONLY = ${Caffe_CPU_ONLY}")

MESSAGE( [Main] " Caffe_HAVE_CUDA = ${Caffe_HAVE_CUDA}")

MESSAGE( [Main] " Caffe_HAVE_CUDNN = ${Caffe_HAVE_CUDNN}")

include_directories(${Caffe_INCLUDE_DIRS})

# assumed source file name; adjust to your own

add_executable(demo classification.cpp)

target_link_libraries(demo

${OpenCV_LIBS}

${Caffe_LIBRARIES}

)

run

ldd demo

if error occurs:

libcaffe.so.1.0.0 => not found

fix

vim .bashrc

# for caffe

export LD_LIBRARY_PATH=/home/kezunlin/program/caffe-wy/build/install/lib:$LD_LIBRARY_PATH
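After editing .bashrc (and opening a new shell or sourcing the file), it is easy to confirm that the install directory really is on the loader path. A small sketch follows; in_library_path is a hypothetical helper, and it only inspects the LD_LIBRARY_PATH variable, not ldconfig caches or rpath entries.

```python
import os


def in_library_path(directory, env=None):
    """True if directory is listed in LD_LIBRARY_PATH of the given environment."""
    env = os.environ if env is None else env
    entries = env.get("LD_LIBRARY_PATH", "").split(os.pathsep)
    target = os.path.normpath(directory)
    return any(os.path.normpath(entry) == target for entry in entries if entry)


if __name__ == "__main__":
    lib_dir = "/home/kezunlin/program/caffe-wy/build/install/lib"
    print(lib_dir, "on LD_LIBRARY_PATH:", in_library_path(lib_dir))
```

If this prints False in a fresh shell, ldd demo will most likely still report libcaffe.so.1.0.0 as not found.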


History

- 20180807: created.

- 20180822: update cmake-gui for caffe

Copyright

- Post author: kezunlin

- Post link: https://kezunlin.me/post/b90033a9/

- Copyright Notice: All articles in this blog are licensed under CC BY-NC-SA 3.0 unless stated otherwise.