keras 自适应分配显存 & 清理不用的变量释放 GPU 显存

Intro

Are you running out of GPU memory when using keras or tensorflow deep learning models, but only some of the time?

Are you curious about exactly how much GPU memory your tensorflow model uses during training?

Are you wondering if you can run two or more keras models on your GPU at the same time?

Background

By default, tensorflow pre-allocates nearly all of the available GPU memory, which is bad for a variety of use cases, especially production and memory profiling.

When keras uses tensorflow for its back-end, it inherits this behavior.

Setting tensorflow GPU memory options

For new models

Thankfully, tensorflow allows you to change how it allocates GPU memory, and to set a limit on how much GPU memory it is allowed to allocate.

Let’s set GPU options on keras‘s example Sequence classification with LSTM network

## keras example imports from keras.models import Sequential from keras.layers import Dense, Dropout from keras.layers import Embedding from keras.layers import LSTM ## extra imports to set GPU options import tensorflow as tf from keras import backend as k ################################### # TensorFlow wizardry config = tf.ConfigProto() # Don't pre-allocate memory; allocate as-needed config.gpu_options.allow_growth = True # Only allow a total of half the GPU memory to be allocated #config.gpu_options.per_process_gpu_memory_fraction = 0.5 # Create a session with the above options specified. k.tensorflow_backend.set_session(tf.Session(config=config)) ################################### model = Sequential() model.add(Embedding(max_features, output_dim=256)) model.add(LSTM(128)) model.add(Dropout(0.5)) model.add(Dense(1, activation='sigmoid')) model.compile(loss='binary_crossentropy', optimizer='rmsprop', metrics=['accuracy']) model.fit(x_train, y_train, batch_size=16, epochs=10) score = model.evaluate(x_test, y_test, batch_size=16)

After the above, when we create the sequence classification model, it won’t use half the GPU memory automatically, but rather will allocate GPU memory as-needed during the calls to model.fit() and model.evaluate().

Additionally, with the per_process_gpu_memory_fraction = 0.5, tensorflow will only allocate a total of half the available GPU memory.

If it tries to allocate more than half of the total GPU memory, tensorflow will throw a ResourceExhaustedError, and you’ll get a lengthy stack trace.

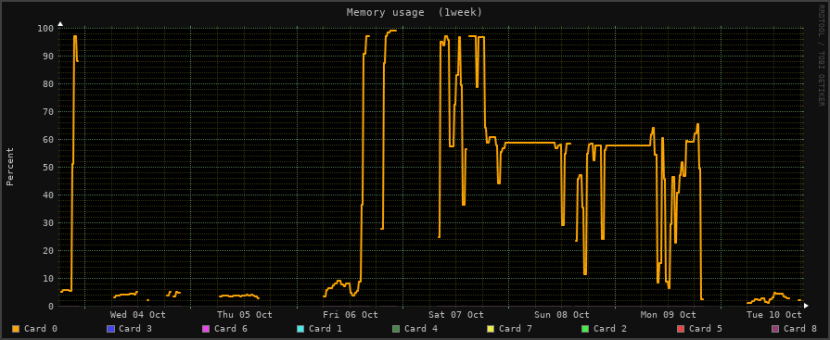

If you have a Linux machine and an nvidia card, you can watch nvidia-smi to see how much GPU memory is in use, or can configure a monitoring tool like monitorix to generate graphs for you.

GPU memory usage, as shown in Monitorix for Linux

For a model that you’re loading

We can even set GPU memory management options for a model that’s already created and trained, and that we’re loading from disk for deployment or for further training.

For that, let’s tweak keras‘s load_model example:

# keras example imports from keras.models import load_model ## extra imports to set GPU options import tensorflow as tf from keras import backend as k ################################### # TensorFlow wizardry config = tf.ConfigProto() # Don't pre-allocate memory; allocate as-needed config.gpu_options.allow_growth = True # Only allow a total of half the GPU memory to be allocated config.gpu_options.per_process_gpu_memory_fraction = 0.5 # Create a session with the above options specified. k.tensorflow_backend.set_session(tf.Session(config=config)) ################################### # returns a compiled model # identical to the previous one model = load_model('my_model.h5') # TODO: classify all the things

Now, with your loaded model, you can open your favorite GPU monitoring tool and watch how the GPU memory usage changes under different loads.

Conclusion

Good news everyone! That sweet deep learning model you just made doesn’t actually need all that memory it usually claims!

And, now that you can tell tensorflow not to pre-allocate memory, you can get a much better idea of what kind of rig(s) you need in order to deploy your model into production.

Is this how you’re handling GPU memory management issues with tensorflow or keras?

Did I miss a better, cleaner way of handling GPU memory allocation with tensorflow and keras?

Let me know in the comments!

How to remove stale models from GPU memory

import gc m = Model(.....) m.save(tmp_model_name) del m K.clear_session() gc.collect() m = load_model(tmp_model_name)

参考: https://michaelblogscode.wordpress.com/2017/10/10/reducing-and-profiling-gpu-memory-usage-in-keras-with-tensorflow-backend/