Today's content:

Method 1: scraping Wandoujia with bs4 (BeautifulSoup)

Scraping Wandoujia:

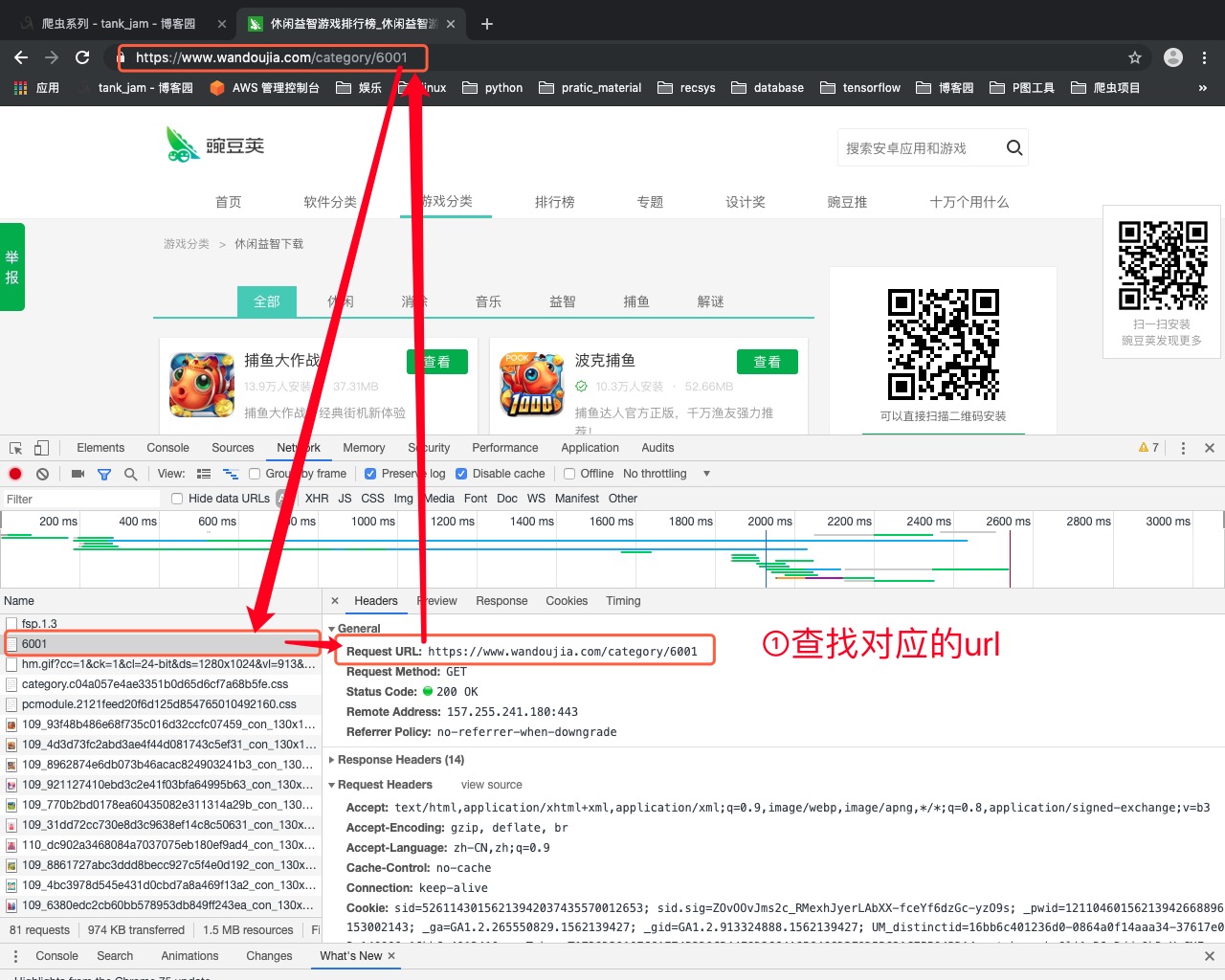

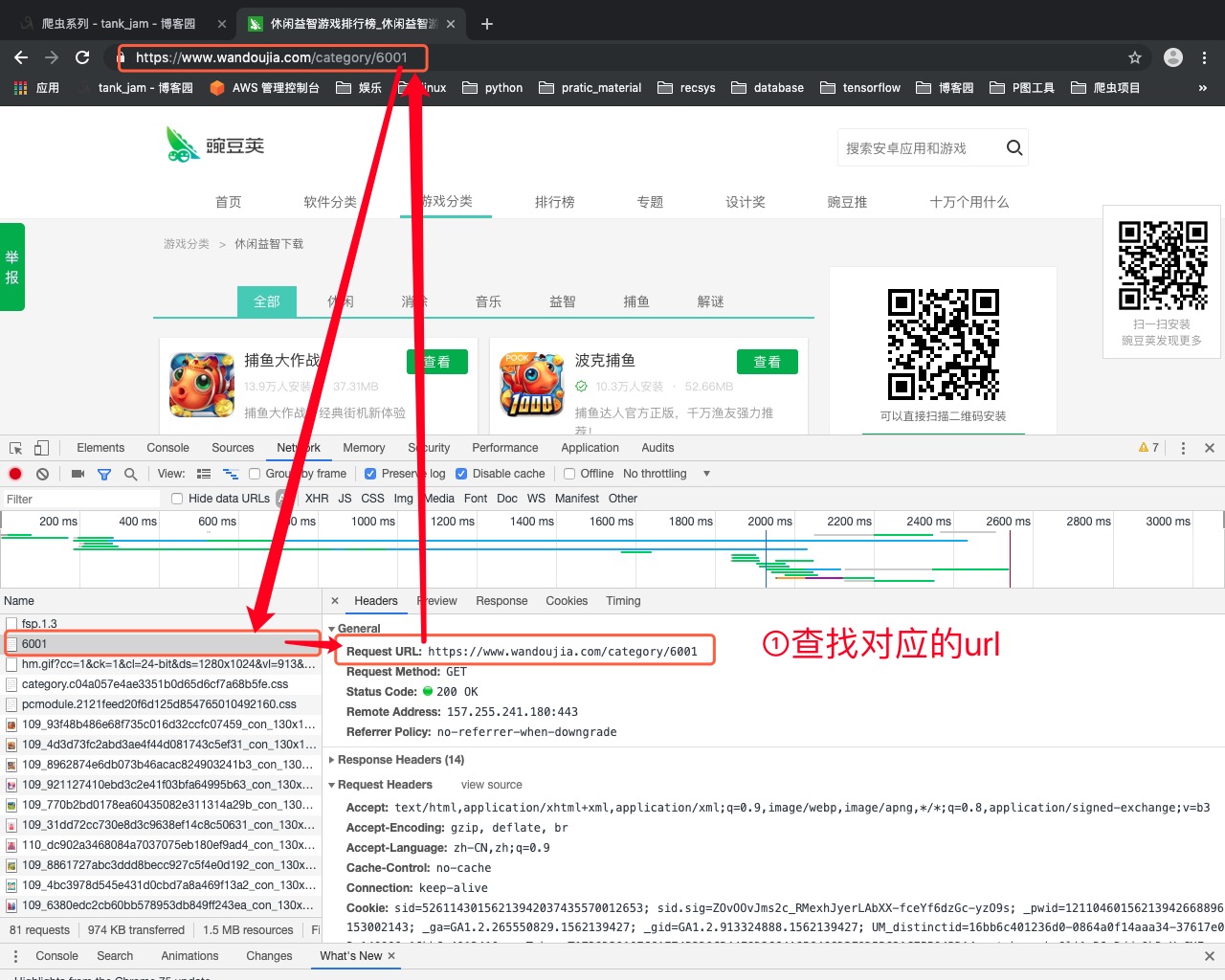

1. Visit the game category page

https://www.wandoujia.com/category/6001

2. Click "查看更多" (View more) and watch the requests in the browser's Network panel

- Request URLs

page2:

https://www.wandoujia.com/wdjweb/api/category/more?catId=6001&subCatId=0&page=2&ctoken=vbw9lj1sRQsRddx0hD-XqCNF

page3:

https://www.wandoujia.com/wdjweb/api/category/more?catId=6001&subCatId=0&page=3&ctoken=vbw9lj1sRQsRddx0hD-XqCNF

page4:

https://www.wandoujia.com/wdjweb/api/category/more?catId=6001&subCatId=0&page=4&ctoken=vbw9lj1sRQsRddx0hD-XqCNF

Only the page parameter changes from one request to the next.

3. Build the URLs for 30 API pages in a loop

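4. Parse the returned data to get each app's details. The JSON field data.content holds an HTML fragment of <li class="card"> items.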
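Steps 3 and 4 can be sketched with only the standard library. This minimal sketch rests on two assumptions. First, the ctoken captured above is assumed to stay valid for every page. Second, the API is assumed to answer with JSON shaped like {"data": {"content": "<li ...>"}}, which is the shape the code below reads with data.get('data').get('content'). The helper names build_page_urls and extract_content are hypothetical, and so is the sample response.

```python
import json
from urllib.parse import urlencode

# Endpoint and ctoken as captured in the Network panel (ctoken assumed still valid)
API = 'https://www.wandoujia.com/wdjweb/api/category/more'
CTOKEN = 'vbw9lj1sRQsRddx0hD-XqCNF'


def build_page_urls(pages=30):
    """Build one API URL per page; only the page parameter changes (hypothetical helper)."""
    urls = []
    for page in range(1, pages + 1):
        # Keys are listed in the same order as in the captured URLs
        query = urlencode({'catId': 6001, 'subCatId': 0,
                           'page': page, 'ctoken': CTOKEN})
        urls.append(f'{API}?{query}')
    return urls


def extract_content(raw_json):
    """Return data -> content from an (assumed) JSON response, or '' if absent."""
    data = json.loads(raw_json)
    return data.get('data', {}).get('content', '')


if __name__ == '__main__':
    urls = build_page_urls()
    print(len(urls))  # 30
    print(urls[1])    # the page=2 URL
    # Hypothetical response body, for illustration only
    sample = '{"data": {"content": "<li class=\\"card\\">...</li>"}}'
    print(extract_content(sample))
```

urlencode walks the dict in insertion order, so the generated page=2 URL is identical to the one copied from the Network panel.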

'''
Scrape app data from Wandoujia

- Request URL
page2:
https://www.wandoujia.com/wdjweb/api/category/more?catId=6001&subCatId=0&page=2&ctoken=vbw9lj1sRQsRddx0hD-XqCNF
'''

import re

import requests
from bs4 import BeautifulSoup

'''
The three steps of a crawler: 1. send the request, 2. parse the data, 3. save the data
'''

# 1. Send the request
def get_page(url):
    response = requests.get(url)
    return response

# 2. Parse the data
def parse_data(text):
    # 'lxml' needs the lxml package; 'html.parser' works without extra installs
    soup = BeautifulSoup(text, 'lxml')
    # Each app is rendered as an <li class="card"> element
    li_list = soup.find_all(name='li', class_="card")
    for li in li_list:
        name_tag = li.find(name='a', class_="name")
        app_name = name_tag.text
        app_url = name_tag.attrs.get('href')
        download_num = li.find(name='span', class_="install-count").text
        # The size <span> has a title like "23MB"; r'\d+MB' = digits followed by MB
        size_tag = li.find(name='span', attrs={"title": re.compile(r'\d+MB')})
        # find() returns None when no title matches (e.g. a size not given in MB)
        app_size = size_tag.text if size_tag else ''

        app_data = f'''
Game name: {app_name}
Game URL: {app_url}
Downloads: {download_num}
Game size: {app_size}
'''
        print(app_data)
        # 3. Save the data
        save_data(app_data)

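# 3. Save the data: append each app's text to wandoujia.txt
def save_data(app_data):
    # The with block closes (and flushes) the file automatically
    with open('wandoujia.txt', 'a', encoding='utf-8') as f:
        f.write(app_data)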

if __name__ == '__main__':
    # Loop over the 30 API pages; only the page parameter changes
    for page in range(1, 31):
        url = f'https://www.wandoujia.com/wdjweb/api/category/more?catId=6001&subCatId=0&page={page}&ctoken=vbw9lj1sRQsRddx0hD-XqCNF'
        print(url)

        # 1. Send the request: call the API and get the response
        response = get_page(url)

        # The API returns JSON; response.json() turns it into a Python dict
        # (same as json.loads(response.text))
        data = response.json()

        # Read the <li> HTML fragment out of the dict
        text = data.get('data').get('content')

        # 2. Parse the data
        parse_data(text)
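In method 1 the size pattern 'd+MB' has lost its backslash. As written, it only matches a literal letter d before MB. Beautiful Soup tests a compiled regex against attribute values with the regex's search() method, so the quick check below uses search() as well. The title values are hypothetical. The DECIMAL pattern is only an illustrative variant and is not something the notes use.

```python
import re

BROKEN = re.compile('d+MB')               # backslash lost: needs a literal "d" before MB
FIXED = re.compile(r'\d+MB')              # one or more digits followed by MB
DECIMAL = re.compile(r'\d+(?:\.\d+)?MB')  # illustrative variant that keeps "45.6MB" whole


def size_matches(title):
    """Return (BROKEN matches?, FIXED matches?, DECIMAL's matched text or None)."""
    m = DECIMAL.search(title)
    return (BROKEN.search(title) is not None,
            FIXED.search(title) is not None,
            m.group() if m else None)


if __name__ == '__main__':
    for title in ['23MB', '45.6MB', '1.2GB']:  # hypothetical title values
        print(title, size_matches(title))
```

r'\d+MB' still accepts a title like 45.6MB, but only because its 6MB tail matches. That is enough here, because the title is used purely as a filter and the span's full text is what gets read. A size given in GB matches neither pattern, so li.find(...) returns None for such a card.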

Method 2: scraping Wandoujia game info with the requests library and regular expressions (re)

import re

import requests

# The three steps of a crawler

# 1. Send the request
def get_page(url):
    response = requests.get(url)
    return response

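# 2. Parse the data
def parse_index(html):
    # The key is writing the regex correctly. Each match is a tuple of
    # (url, name, installs, size). "万人安装" ("10,000 people installed") must
    # stay as-is because it is the literal text on the page.
    # re.S lets "." match newlines too, since the card HTML spans several lines.
    game_list = re.findall(
        '<li data-pn=".*?" class="card" data-suffix="">.*?<a href="(.*?)" >'
        '.*?class="name">(.*?)</a>.*?<span class="install-count">(.*?)万人安装</span>'
        '.*?<span title=".*?">(.*?)MB',
        html,
        re.S)
    return game_list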

# 3. Save the data
def save_data(game):
    url, name, download, size = game
    data = f'''
======== Welcome ========
Game URL: {url}
Game name: {name}
Installs: {download} x 10,000
Game size: {size}MB
======== See you next time ========
'''
    print(data)
    # Append each game to wandoujiagame.txt
    with open('wandoujiagame.txt', 'a', encoding='utf-8') as f:
        f.write(data)
    print(f'Game: {name} saved...')

if __name__ == '__main__':
    url = 'https://www.wandoujia.com/category/6001'

    # 1. Send a request to the category home page
    index_res = get_page(url)

    # 2. Parse the home page HTML to get the game info
    game_list = parse_index(index_res.text)
    print(game_list)

    # 3. Save each game
    for game in game_list:
        save_data(game)
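You can see what the method-2 pattern returns without hitting the site by running it on a small HTML string. The snippet below is hypothetical: it was written only to fit the pattern and is not copied from wandoujia.com. The name find_games is also hypothetical. The sketch shows why re.S matters. Without it, "." does not match newlines, so a pattern that spans several lines of HTML finds nothing.

```python
import re

# Same pattern as parse_index; adjacent string literals are joined by Python
GAME_PATTERN = (
    '<li data-pn=".*?" class="card" data-suffix="">.*?<a href="(.*?)" >'
    '.*?class="name">(.*?)</a>.*?<span class="install-count">(.*?)万人安装</span>'
    '.*?<span title=".*?">(.*?)MB'
)

# Hypothetical HTML written to fit the pattern; NOT copied from wandoujia.com
SAMPLE_HTML = '''
<li data-pn="com.example.game" class="card" data-suffix="">
  <a href="https://www.wandoujia.com/apps/example" >
    <img src="icon.png">
  </a>
  <a href="https://www.wandoujia.com/apps/example" class="name">Example Game</a>
  <span class="install-count">12.3万人安装</span>
  <span title="45.6MB">45.6MB</span>
</li>
'''


def find_games(html, flags=re.S):
    """Return (url, name, installs in units of 10,000, size in MB) string tuples."""
    return re.findall(GAME_PATTERN, html, flags)


if __name__ == '__main__':
    print(find_games(SAMPLE_HTML))           # one 4-tuple for the one card
    print(find_games(SAMPLE_HTML, flags=0))  # []: without re.S, "." stops at "\n"
```

Each tuple holds strings in the order of the four capture groups. That order is why save_data can unpack a match as url, name, download, size.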