Time: about 1 hour

Code: about 200 lines

Blog posts: 2

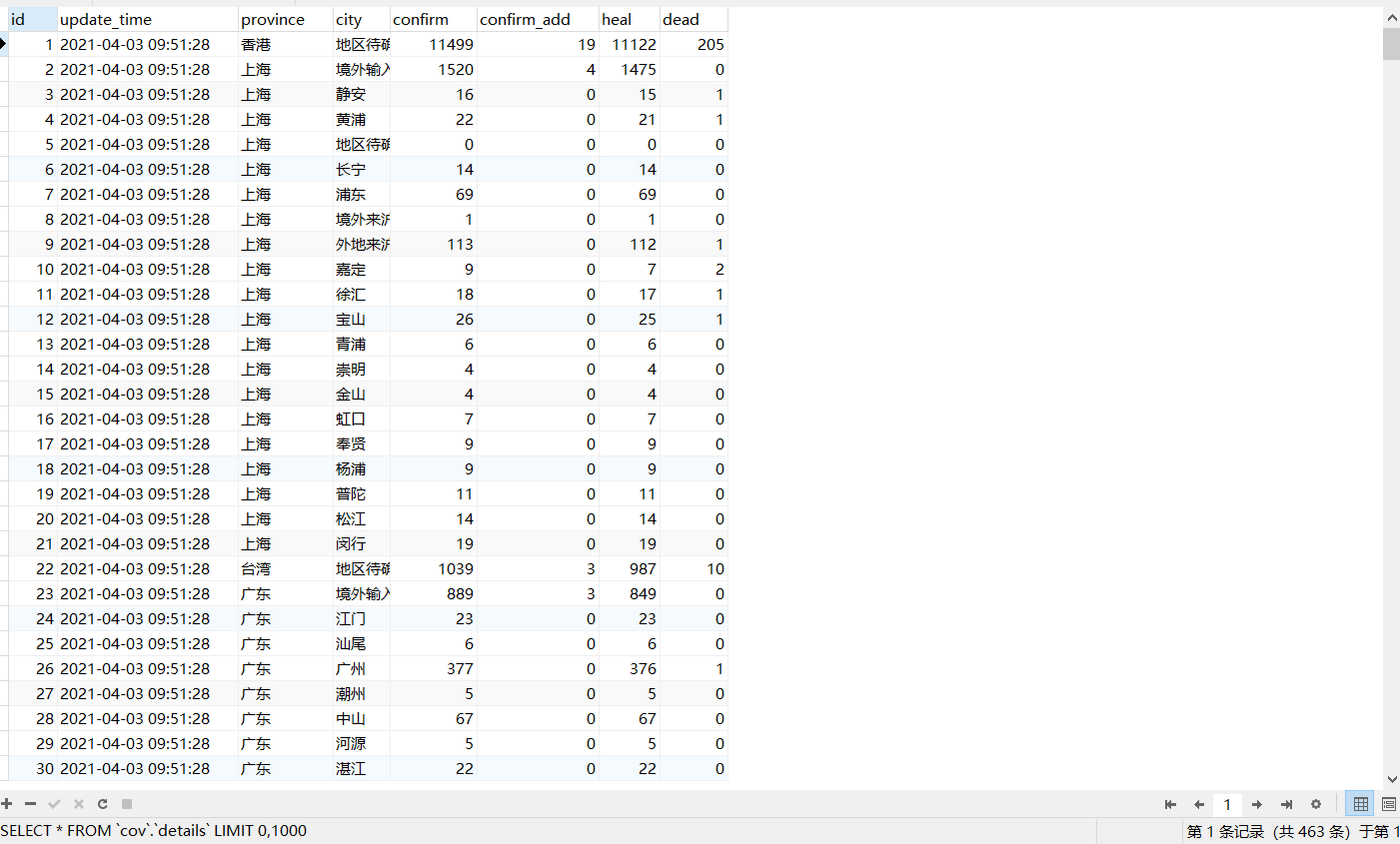

Topic: scraping epidemic data with Python and storing it in a database

```python
import json

import requests

# Data sources:
# https://view.inews.qq.com/g2/getOnsInfo?name=disease_h5
# https://view.inews.qq.com/g2/getOnsInfo?name=disease_foreign

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.114 Safari/537.36"
}

# Historical data
url_history = "https://view.inews.qq.com/g2/getOnsInfo?name=disease_foreign"
# headers must be passed by keyword: the second positional argument of requests.get is params
resp = requests.get(url_history, headers=headers)
# The response body is a JSON string
json_data = resp.text
print(type(json_data))
print(json_data)
# Convert the JSON string to a dict
d_data = json.loads(json_data)
print(type(d_data))
print(d_data)
print(d_data['data'])
# The 'data' field is itself a JSON-encoded string, so decode it a second time
data_history = json.loads(d_data['data'])
for item in data_history.keys():
    print(item)
    print(data_history[item])

# Details: detailed data
url = "https://view.inews.qq.com/g2/getOnsInfo?name=disease_h5"
resp = requests.get(url, headers=headers)
json_data = resp.text
print(json_data)
# Convert to a dict, then decode the nested 'data' string
dict_data = json.loads(json_data)
print(dict_data)
data = json.loads(dict_data['data'])
for item in data.keys():
    print(item)
    print(data[item])
print(data['chinaTotal'])
print(data['chinaAdd'])
print(data['areaTree'])
print("*******")
# Iterating over the first areaTree node (a dict) yields its keys
for item in data['areaTree'][0]:
    print(item)
# Each entry in 'children' is a sub-region node
for item in data['areaTree'][0]['children']:
    print(item)
```
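The code above only fetches and prints the data; the database step named in the topic is not shown. The following is a minimal sketch of that step, assuming each entry in `areaTree[0]['children']` has a `name` and a `total` dict with `confirm` and `dead` counts (these field names are assumptions, not confirmed by the code above). It decodes the double-encoded JSON, flattens the sub-region nodes into rows, and stores them with the standard-library `sqlite3` module. The sample response, the `province` table, and the helper names `decode_payload`, `province_rows`, and `save_rows` are all hypothetical.

```python
import json
import sqlite3

# Hypothetical sample payload shaped like the responses handled above:
# the outer JSON object carries its real content as a JSON *string* under "data".
# Field names "total", "confirm", "dead" are assumptions for illustration.
SAMPLE_RESPONSE = json.dumps({
    "ret": 0,
    "data": json.dumps({
        "areaTree": [{
            "name": "China",
            "children": [
                {"name": "Hubei", "total": {"confirm": 100, "dead": 5}},
                {"name": "Guangdong", "total": {"confirm": 20, "dead": 0}},
            ],
        }]
    }),
})


def decode_payload(text: str) -> dict:
    """Decode the outer JSON, then the JSON string stored under 'data'."""
    outer = json.loads(text)
    return json.loads(outer["data"])


def province_rows(data: dict) -> list:
    """Flatten areaTree[0]['children'] into (name, confirm, dead) tuples."""
    country = data["areaTree"][0]
    return [
        (p["name"], p["total"]["confirm"], p["total"]["dead"])
        for p in country["children"]
    ]


def save_rows(conn: sqlite3.Connection, rows: list) -> None:
    """Create the table if needed, then upsert rows using ? placeholders."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS province "
        "(name TEXT PRIMARY KEY, confirm INTEGER, dead INTEGER)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO province (name, confirm, dead) VALUES (?, ?, ?)",
        rows,
    )
    conn.commit()


if __name__ == "__main__":
    # In-memory database for the demo; a file path would persist the data
    conn = sqlite3.connect(":memory:")
    save_rows(conn, province_rows(decode_payload(SAMPLE_RESPONSE)))
    for row in conn.execute(
        "SELECT name, confirm, dead FROM province ORDER BY confirm DESC"
    ):
        print(row)
    conn.close()
```

With live data, one would pass `resp.text` from the `disease_h5` request to `decode_payload` (after checking the real field names) and give `sqlite3.connect` a file path instead of `":memory:"`. The `?` placeholders keep the values out of the SQL string, and `INSERT OR REPLACE` keyed on the region name lets the script be rerun without duplicating rows.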