Lab environment

| Hostname | IP address | Roles |
| --- | --- | --- |
| sht-sgmhadoopnn-01 | 172.16.101.55 | namenode, resourcemanager |
| sht-sgmhadoopnn-02 | 172.16.101.56 | namenode, resourcemanager |
| sht-sgmhadoopdn-01 | 172.16.101.58 | datanode, nodemanager, journalnode, zookeeper |
| sht-sgmhadoopdn-02 | 172.16.101.59 | datanode, nodemanager, journalnode, zookeeper |
| sht-sgmhadoopdn-03 | 172.16.101.60 | datanode, nodemanager, journalnode, zookeeper |

Unified installation directories (all hosts): `/usr/local/hadoop` (symlink to `/usr/local/hadoop-2.7.4`) and `/usr/local/zookeeper` (symlink to `/usr/local/zookeeper-3.4.9`).

Unified installation user (all hosts): root

Preparation

Software

- Apache Hadoop

http://archive.apache.org/dist/hadoop/common/hadoop-2.7.4/hadoop-2.7.4.tar.gz

- Apache Zookeeper

https://archive.apache.org/dist/zookeeper/zookeeper-3.4.9/zookeeper-3.4.9.tar.gz

- Java

https://download.oracle.com/otn/java/jdk/8u45-b14/jdk-8u45-linux-x64.tar.gz

System packages

- psmisc

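This package is needed mainly to avoid the following error during HDFS failover. The `sshfence` fencing method runs `fuser` on the other NameNode over SSH. Without psmisc that command does not exist, so fencing fails: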

```text
2019-03-26 22:48:29,200 WARN org.apache.hadoop.ha.SshFenceByTcpPort: PATH=$PATH:/sbin:/usr/sbin fuser -v -k -n tcp 8020 via ssh: bash: fuser: command not found
2019-03-26 22:48:29,201 INFO org.apache.hadoop.ha.SshFenceByTcpPort: rc: 127
2019-03-26 22:48:29,201 INFO org.apache.hadoop.ha.SshFenceByTcpPort.jsch: Disconnecting from sht-sgmhadoopnn-02 port 22
2019-03-26 22:48:29,201 WARN org.apache.hadoop.ha.NodeFencer: Fencing method org.apache.hadoop.ha.SshFenceByTcpPort(null) was unsuccessful.
2019-03-26 22:48:29,201 ERROR org.apache.hadoop.ha.NodeFencer: Unable to fence service by any configured method.
2019-03-26 22:48:29,201 INFO org.apache.hadoop.ha.SshFenceByTcpPort.jsch: Caught an exception, leaving main loop due to Socket closed
2019-03-26 22:48:29,201 WARN org.apache.hadoop.ha.ActiveStandbyElector: Exception handling the winning of election
java.lang.RuntimeException: Unable to fence NameNode at sht-sgmhadoopnn-02/172.16.101.56:8020
```
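The log shows that `sshfence` runs `fuser` on the NameNode being fenced. Both NameNodes therefore need `fuser`, and installing psmisc on every node is the simplest way to guarantee it. Below is a minimal sketch. It assumes a yum-based system, such as the CentOS 7 hosts implied by the `el7` kernel in the logs. `ensure_fuser` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Minimal sketch: install psmisc (the package that provides fuser) only if fuser is missing.
# Assumption: a yum-based distribution; run as root on every node.
ensure_fuser() {
  if command -v fuser >/dev/null 2>&1; then
    echo "fuser already installed: $(command -v fuser)"
  else
    yum -y install psmisc
  fi
}
```

Source the snippet and call `ensure_fuser` as root on each node.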

I. Configure hostname-to-IP resolution for all hosts (identical on all nodes)

```shell
# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
172.16.101.55 sht-sgmhadoopnn-01
172.16.101.56 sht-sgmhadoopnn-02
172.16.101.58 sht-sgmhadoopdn-01
172.16.101.59 sht-sgmhadoopdn-02
172.16.101.60 sht-sgmhadoopdn-03
```
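Because `/etc/hosts` must be identical everywhere, a re-runnable way to add the five cluster entries avoids duplicates when the step is repeated. This is a sketch. `add_cluster_hosts` and the `HOSTS_FILE` override are illustrative names, not part of any tool.

```bash
#!/usr/bin/env bash
# Sketch: append the cluster entries to a hosts file only when the hostname is not there yet.
# HOSTS_FILE defaults to /etc/hosts; point it at a scratch copy to try the function safely.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

CLUSTER_HOSTS=(
  "172.16.101.55 sht-sgmhadoopnn-01"
  "172.16.101.56 sht-sgmhadoopnn-02"
  "172.16.101.58 sht-sgmhadoopdn-01"
  "172.16.101.59 sht-sgmhadoopdn-02"
  "172.16.101.60 sht-sgmhadoopdn-03"
)

add_cluster_hosts() {
  local entry name
  for entry in "${CLUSTER_HOSTS[@]}"; do
    name="${entry#* }"   # hostname part, after the first space
    # -w: whole-word match, -F: treat the hostname as a fixed string
    if ! grep -qwF -- "$name" "$HOSTS_FILE"; then
      echo "$entry" >> "$HOSTS_FILE"
    fi
  done
}
```

Run `add_cluster_hosts` as root on each node. A second run adds nothing.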

II. Configure passwordless SSH login between all hosts

1. Generate a public/private key pair (run on every node)

```shell
# ssh-keygen -t rsa
```

2. Copy the public key to every node; the list below also includes the local node (run on every node)

```shell
# ssh-copy-id root@sht-sgmhadoopnn-01
# ssh-copy-id root@sht-sgmhadoopnn-02
# ssh-copy-id root@sht-sgmhadoopdn-01
# ssh-copy-id root@sht-sgmhadoopdn-02
# ssh-copy-id root@sht-sgmhadoopdn-03
```

3. Test passwordless login (run on every node)

```shell
# ssh root@sht-sgmhadoopnn-01 date
# ssh root@sht-sgmhadoopnn-02 date
# ssh root@sht-sgmhadoopdn-01 date
# ssh root@sht-sgmhadoopdn-02 date
# ssh root@sht-sgmhadoopdn-03 date
```
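The manual `ssh ... date` checks can also be run as a loop. This sketch uses `BatchMode=yes`, so a host that would still prompt for a password reports FAIL instead of blocking. BatchMode also fails on a host key that has never been accepted, which is arguably the right result here. `check_passwordless_ssh` is an illustrative name.

```bash
#!/usr/bin/env bash
# Sketch: verify passwordless SSH from the current node to every cluster node.
CLUSTER_NODES=(
  sht-sgmhadoopnn-01 sht-sgmhadoopnn-02
  sht-sgmhadoopdn-01 sht-sgmhadoopdn-02 sht-sgmhadoopdn-03
)

check_passwordless_ssh() {
  local host failed=0
  for host in "${CLUSTER_NODES[@]}"; do
    # BatchMode=yes: never prompt; ConnectTimeout bounds the wait for unreachable hosts
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@${host}" date >/dev/null 2>&1; then
      echo "OK   ${host}"
    else
      echo "FAIL ${host}"
      failed=1
    fi
  done
  return "$failed"   # non-zero if any host failed
}
```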

III. Configure system environment variables in .bash_profile (run on every node)

```shell
# .bash_profile

# Get the aliases and functions
if [ -f ~/.bashrc ]; then
    . ~/.bashrc
fi

# User specific environment and startup programs
ZOOKEEPER_HOME=/usr/local/zookeeper
JAVA_HOME=/usr/java/jdk1.8.0_45
JRE_HOME=$JAVA_HOME/jre
HADOOP_HOME=/usr/local/hadoop
CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar:$JRE_HOME/lib
PATH=$ZOOKEEPER_HOME/bin:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH:$HOME/bin

# Export all variables so child processes (Hadoop/ZooKeeper scripts) can see them
export ZOOKEEPER_HOME JAVA_HOME JRE_HOME HADOOP_HOME CLASSPATH PATH
```
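After editing the profile, run `source ~/.bash_profile` or log in again. A quick check then confirms that the variables are set and that the commands used later in this article resolve through `PATH`. This is a sketch. `check_env` is a hypothetical helper, and the command list simply mirrors the tools called in later steps.

```bash
#!/usr/bin/env bash
# Sketch: report unset variables and commands that are not found on PATH.
check_env() {
  local var cmd missing=0
  for var in JAVA_HOME HADOOP_HOME ZOOKEEPER_HOME; do
    # ${!var} is bash indirect expansion: the value of the variable named by $var
    if [[ -z "${!var:-}" ]]; then
      echo "unset: ${var}"
      missing=1
    fi
  done
  for cmd in java hdfs hadoop-daemon.sh zkServer.sh zkCli.sh; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "not on PATH: ${cmd}"
      missing=1
    fi
  done
  return "$missing"
}
```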

IV. Install Java (identical on all nodes)

```shell
# which java
/usr/java/jdk1.8.0_45/bin/java
```

V. Install the ZooKeeper cluster

1. Configuration file zoo.cfg (identical on all ZooKeeper nodes)

```shell
# cat /usr/local/zookeeper/conf/zoo.cfg
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/usr/local/zookeeper/data
clientPort=2181
server.1=sht-sgmhadoopdn-01:2888:3888
server.2=sht-sgmhadoopdn-02:2888:3888
server.3=sht-sgmhadoopdn-03:2888:3888
```

2. Make sure the following file exists on each node. Its value must match that node's server.N entry from the previous step.

sht-sgmhadoopdn-01

```shell
# cat /usr/local/zookeeper/data/myid
1
```

sht-sgmhadoopdn-02

```shell
# cat /usr/local/zookeeper/data/myid
2
```

sht-sgmhadoopdn-03

```shell
# cat /usr/local/zookeeper/data/myid
3
```
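Since `myid` must match the `server.N` line for the local hostname, it can be derived from `zoo.cfg` instead of being typed by hand. This is a sketch. It assumes `hostname` returns exactly the names used in `zoo.cfg` (sht-sgmhadoopdn-0X). `write_myid` is an illustrative name.

```bash
#!/usr/bin/env bash
# Sketch: write dataDir/myid from the server.N=<host>:2888:3888 line that matches this host.
# Arguments (both optional): path to zoo.cfg, hostname to look up.
write_myid() {
  local cfg="${1:-/usr/local/zookeeper/conf/zoo.cfg}"
  local host="${2:-$(hostname)}"
  local data_dir id
  # dataDir=<path>: take the value after '='
  data_dir=$(awk -F= '$1 == "dataDir" {print $2}' "$cfg")
  # Split on '=' and ':' so $1 is "server.N" and $2 is the hostname; print N on a match
  id=$(awk -F'[=:]' -v h="$host" '$1 ~ /^server\.[0-9]+$/ && $2 == h { sub(/^server\./, "", $1); print $1 }' "$cfg")
  if [[ -z "$id" || -z "$data_dir" ]]; then
    echo "no server.N entry for ${host} (or no dataDir) in ${cfg}" >&2
    return 1
  fi
  mkdir -p "$data_dir"
  echo "$id" > "${data_dir}/myid"
  echo "${data_dir}/myid = ${id}"
}
```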

3. Start ZooKeeper (run on each node in turn)

```shell
# zkServer.sh start
```

4. Check the role and processes of each node in the cluster

sht-sgmhadoopdn-01

```shell
# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Mode: follower
# jps
5273 QuorumPeerMain
5678 Jps
```

sht-sgmhadoopdn-02

```shell
# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Mode: leader
# jps
22720 QuorumPeerMain
25180 Jps
```

sht-sgmhadoopdn-03

```shell
# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Mode: follower
# jps
592 Jps
32527 QuorumPeerMain
```
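Instead of running `zkServer.sh status` on each node, the role can be polled from one place with ZooKeeper's `srvr` four-letter command. Its reply includes a `Mode:` line. This is a sketch. It assumes `nc` is installed and that four-letter commands are not restricted on the 3.4.9 servers used here. `zk_modes` is an illustrative name.

```bash
#!/usr/bin/env bash
# Sketch: print each server's Mode and succeed only if the ensemble has exactly one leader.
ZK_SERVERS=(sht-sgmhadoopdn-01 sht-sgmhadoopdn-02 sht-sgmhadoopdn-03)

zk_modes() {
  local host mode leaders=0
  for host in "${ZK_SERVERS[@]}"; do
    # Send "srvr" to the client port and extract the value of the "Mode: ..." line
    mode=$(echo srvr | nc "$host" 2181 2>/dev/null | awk -F': ' '/^Mode/ {print $2}')
    echo "${host}: ${mode:-unreachable}"
    if [[ "$mode" == "leader" ]]; then
      leaders=$((leaders + 1))
    fi
  done
  [[ $leaders -eq 1 ]]
}
```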

5. Test connecting to the ZooKeeper cluster

```shell
# zkCli.sh -server sht-sgmhadoopdn-01:2181
# zkCli.sh -server sht-sgmhadoopdn-02:2181
# zkCli.sh -server sht-sgmhadoopdn-03:2181
```

VI. Configure HDFS HA

1. Edit the following configuration files on namenode sht-sgmhadoopnn-01

1). hadoop-env.sh

```shell
export JAVA_HOME=/usr/java/jdk1.8.0_45
```

2). core-site.xml

```xml
<configuration>
  <property><name>hadoop.tmp.dir</name><value>/usr/local/hadoop/data</value></property>
  <property><name>fs.defaultFS</name><value>hdfs://mycluster</value></property>
  <property><name>hadoop.http.staticuser.user</name><value>admin</value></property>
  <property><name>dfs.permissions.superusergroup</name><value>admingroup</value></property>
  <property><name>fs.trash.interval</name><value>1440</value></property>
  <property><name>fs.trash.checkpoint.interval</name><value>0</value></property>
  <property><name>io.file.buffer.size</name><value>65536</value></property>
  <property><name>hadoop.proxyuser.hduser.groups</name><value>*</value></property>
  <property><name>hadoop.proxyuser.hduser.hosts</name><value>*</value></property>
  <property><name>ha.zookeeper.quorum</name><value>sht-sgmhadoopdn-01:2181,sht-sgmhadoopdn-02:2181,sht-sgmhadoopdn-03:2181</value></property>
  <property><name>ha.zookeeper.session-timeout.ms</name><value>5000</value></property>
  <property><name>ha.zookeeper.parent-znode</name><value>/hadoop-ha</value></property>
</configuration>
```

3). hdfs-site.xml

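Create the include and exclude host files that `dfs.hosts` and `dfs.hosts.exclude` point to:

```shell
# touch /usr/local/hadoop/etc/hadoop/hdfs_includes
# touch /usr/local/hadoop/etc/hadoop/hdfs_excludes
```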

```xml
<configuration>
  <property><name>dfs.webhdfs.enabled</name><value>true</value></property>
  <property><name>dfs.permissions.enabled</name><value>false</value></property>
  <property><name>dfs.namenode.name.dir</name><value>/usr/local/hadoop/data/dfs/name</value></property>
  <property><name>dfs.namenode.edits.dir</name><value>${dfs.namenode.name.dir}</value></property>
  <property><name>dfs.datanode.data.dir</name><value>/usr/local/hadoop/data/dfs/data</value></property>
  <property><name>dfs.replication</name><value>3</value></property>
  <property><name>dfs.blocksize</name><value>134217728</value></property>
  <property><name>dfs.nameservices</name><value>mycluster</value></property>
  <property><name>dfs.ha.namenodes.mycluster</name><value>nn1,nn2</value></property>
  <property><name>dfs.namenode.rpc-address.mycluster.nn1</name><value>sht-sgmhadoopnn-01:8020</value></property>
  <property><name>dfs.namenode.rpc-address.mycluster.nn2</name><value>sht-sgmhadoopnn-02:8020</value></property>
  <property><name>dfs.namenode.http-address.mycluster.nn1</name><value>sht-sgmhadoopnn-01:50070</value></property>
  <property><name>dfs.namenode.http-address.mycluster.nn2</name><value>sht-sgmhadoopnn-02:50070</value></property>
  <property><name>dfs.journalnode.http-address</name><value>0.0.0.0:8480</value></property>
  <property><name>dfs.journalnode.https-address</name><value>0.0.0.0:8481</value></property>
  <property><name>dfs.journalnode.rpc-address</name><value>0.0.0.0:8485</value></property>
  <property><name>dfs.ha.fencing.methods</name><value>sshfence</value></property>
  <property><name>dfs.ha.fencing.ssh.private-key-files</name><value>/root/.ssh/id_rsa</value></property>
  <property><name>dfs.ha.fencing.ssh.connect-timeout</name><value>30000</value></property>
  <property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://sht-sgmhadoopdn-01:8485;sht-sgmhadoopdn-02:8485;sht-sgmhadoopdn-03:8485/mycluster</value></property>
  <property><name>dfs.journalnode.edits.dir</name><value>/usr/local/hadoop/data/dfs/jn</value></property>
  <property><name>dfs.client.failover.proxy.provider.mycluster</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>
  <property><name>dfs.ha.automatic-failover.enabled</name><value>true</value></property>
  <property><name>ha.failover-controller.cli-check.rpc-timeout.ms</name><value>20000</value></property>
  <property><name>ipc.client.connect.timeout</name><value>20000</value></property>
  <property><name>dfs.hosts</name><value>/usr/local/hadoop/etc/hadoop/hdfs_includes</value></property>
  <property><name>dfs.hosts.exclude</name><value>/usr/local/hadoop/etc/hadoop/hdfs_excludes</value></property>
  <property><name>dfs.namenode.heartbeat.recheck-interval</name><value>30000</value></property>
  <property><name>dfs.heartbeat.interval</name><value>1</value></property>
  <property><name>dfs.blockreport.intervalMsec</name><value>3600000</value></property>
  <property><name>dfs.datanode.balance.bandwidthPerSec</name><value>67108864</value></property>
  <property><name>dfs.datanode.balance.max.concurrent.moves</name><value>1024</value></property>
  <property><name>dfs.datanode.handler.count</name><value>100</value></property>
</configuration>
```
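Before distributing the files, `hdfs getconf -confKey` can print how Hadoop actually resolves the HA-related keys. This catches typos in property names or values early. The sketch below uses a selection of the properties above as its key list. `show_ha_conf` is an illustrative name.

```bash
#!/usr/bin/env bash
# Sketch: print the effective value of each HA-related key as seen by the local Hadoop config.
HA_KEYS=(
  fs.defaultFS
  dfs.nameservices
  dfs.ha.namenodes.mycluster
  dfs.namenode.rpc-address.mycluster.nn1
  dfs.namenode.rpc-address.mycluster.nn2
  dfs.namenode.shared.edits.dir
  dfs.ha.automatic-failover.enabled
  ha.zookeeper.quorum
)

show_ha_conf() {
  local key
  for key in "${HA_KEYS[@]}"; do
    # 2>&1 so an error for a missing key shows up next to the key name
    printf '%-45s %s\n' "$key" "$(hdfs getconf -confKey "$key" 2>&1)"
  done
}
```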

4). slaves

```text
sht-sgmhadoopdn-01
sht-sgmhadoopdn-02
sht-sgmhadoopdn-03
```

2. Copy the configuration files above to the same directory on all other nodes

```shell
# rsync -az --progress /usr/local/hadoop/etc/hadoop/* root@sht-sgmhadoopnn-02:/usr/local/hadoop/etc/hadoop
# rsync -az --progress /usr/local/hadoop/etc/hadoop/* root@sht-sgmhadoopdn-01:/usr/local/hadoop/etc/hadoop
# rsync -az --progress /usr/local/hadoop/etc/hadoop/* root@sht-sgmhadoopdn-02:/usr/local/hadoop/etc/hadoop
# rsync -az --progress /usr/local/hadoop/etc/hadoop/* root@sht-sgmhadoopdn-03:/usr/local/hadoop/etc/hadoop
```
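The four rsync commands can be written as one loop. In this sketch, the trailing slash on the source copies the directory's contents, roughly like the `hadoop/*` glob above (it also includes dotfiles). `--delete` is deliberately left out, so extra files on the targets are kept. `sync_hadoop_conf` is an illustrative name.

```bash
#!/usr/bin/env bash
# Sketch: push the Hadoop config directory from this node to the other four nodes.
CONF_DIR=/usr/local/hadoop/etc/hadoop
TARGETS=(sht-sgmhadoopnn-02 sht-sgmhadoopdn-01 sht-sgmhadoopdn-02 sht-sgmhadoopdn-03)

sync_hadoop_conf() {
  local host rc=0
  for host in "${TARGETS[@]}"; do
    echo "==> ${host}"
    # Keep going if one host fails, but report failure through the return code
    rsync -az "${CONF_DIR}/" "root@${host}:${CONF_DIR}/" || rc=1
  done
  return "$rc"
}
```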

3. On namenode sht-sgmhadoopnn-01, create the Hadoop HA znode in the ZooKeeper cluster

```shell
# hdfs zkfc -formatZK
```

Output log:

```text
# hdfs zkfc -formatZK
19/03/27 23:28:51 INFO tools.DFSZKFailoverController: Failover controller configured for NameNode NameNode at sht-sgmhadoopnn-01/172.16.101.55:8020
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:host.name=sht-sgmhadoopnn-01
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:java.version=1.8.0_45
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:java.home=/usr/java/jdk1.8.0_45/jre
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:java.class.path=/usr/local/hadoop-2.7.4/etc/hadoop:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-compress-1.4.1.jar:...:/contrib/capacity-scheduler/*.jar
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/usr/local/hadoop-2.7.4/lib/native
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA>
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:os.version=3.10.0-514.el7.x86_64
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:user.name=root
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:user.home=/root
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Client environment:user.dir=/usr/local/hadoop-2.7.4/data
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=sht-sgmhadoopdn-01:2181,sht-sgmhadoopdn-02:2181,sht-sgmhadoopdn-03:2181 sessionTimeout=5000 watcher=org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef@74fe5c40
19/03/27 23:28:51 INFO zookeeper.ClientCnxn: Opening socket connection to server sht-sgmhadoopdn-01/172.16.101.58:2181. Will not attempt to authenticate using SASL (unknown error)
19/03/27 23:28:51 INFO zookeeper.ClientCnxn: Socket connection established to sht-sgmhadoopdn-01/172.16.101.58:2181, initiating session
19/03/27 23:28:51 INFO zookeeper.ClientCnxn: Session establishment complete on server sht-sgmhadoopdn-01/172.16.101.58:2181, sessionid = 0x169bfa59c6a0001, negotiated timeout = 5000
19/03/27 23:28:51 INFO ha.ActiveStandbyElector: Session connected.
19/03/27 23:28:51 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/mycluster in ZK.
19/03/27 23:28:51 INFO zookeeper.ZooKeeper: Session: 0x169bfa59c6a0001 closed
19/03/27 23:28:51 INFO zookeeper.ClientCnxn: EventThread shut down
```

Log in to ZooKeeper to verify:

```text
# zkCli.sh -server sht-sgmhadoopdn-03:2181
Connecting to sht-sgmhadoopdn-03:2181
2019-03-27 23:30:43,433 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.9-1757313, built on 08/23/2016 06:50 GMT
2019-03-27 23:30:43,440 [myid:] - INFO [main:Environment@100] - Client environment:host.name=sht-sgmhadoopdn-03
2019-03-27 23:30:43,441 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.8.0_45
2019-03-27 23:30:43,445 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation
2019-03-27 23:30:43,446 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/usr/java/jdk1.8.0_45/jre
2019-03-27 23:30:43,446 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/usr/local/zookeeper/bin/../build/classes:/usr/local/zookeeper/bin/../build/lib/*.jar:/usr/local/zookeeper/bin/../lib/slf4j-log4j12-1.6.1.jar:/usr/local/zookeeper/bin/../lib/slf4j-api-1.6.1.jar:/usr/local/zookeeper/bin/../lib/netty-3.10.5.Final.jar:/usr/local/zookeeper/bin/../lib/log4j-1.2.16.jar:/usr/local/zookeeper/bin/../lib/jline-0.9.94.jar:/usr/local/zookeeper/bin/../zookeeper-3.4.9.jar:/usr/local/zookeeper/bin/../src/java/lib/*.jar:/usr/local/zookeeper/bin/../conf:
2019-03-27 23:30:43,446 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib
2019-03-27 23:30:43,446 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp
2019-03-27 23:30:43,447 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>
2019-03-27 23:30:43,447 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux
2019-03-27 23:30:43,447 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64
2019-03-27 23:30:43,447 [myid:] - INFO [main:Environment@100] - Client environment:os.version=3.10.0-514.el7.x86_64
2019-03-27 23:30:43,448 [myid:] - INFO [main:Environment@100] - Client environment:user.name=root
2019-03-27 23:30:43,448 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/root
2019-03-27 23:30:43,448 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/usr/local/zookeeper-3.4.9/conf
2019-03-27 23:30:43,451 [myid:] - INFO [main:ZooKeeper@438] - Initiating client connection, connectString=sht-sgmhadoopdn-03:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@3eb07fd3
Welcome to ZooKeeper!
2019-03-27 23:30:43,520 [myid:] - INFO [main-SendThread(sht-sgmhadoopdn-03:2181):ClientCnxn$SendThread@1032] - Opening socket connection to server sht-sgmhadoopdn-03/172.16.101.60:2181. Will not attempt to authenticate using SASL (unknown error)
JLine support is enabled
2019-03-27 23:30:43,691 [myid:] - INFO [main-SendThread(sht-sgmhadoopdn-03:2181):ClientCnxn$SendThread@876] - Socket connection established to sht-sgmhadoopdn-03/172.16.101.60:2181, initiating session
2019-03-27 23:30:43,720 [myid:] - INFO [main-SendThread(sht-sgmhadoopdn-03:2181):ClientCnxn$SendThread@1299] - Session establishment complete on server sht-sgmhadoopdn-03/172.16.101.60:2181, sessionid = 0x369bfa5e6bf0002, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null
[zk: sht-sgmhadoopdn-03:2181(CONNECTED) 0] ls /
[zookeeper, hadoop-ha]
[zk: sht-sgmhadoopdn-03:2181(CONNECTED) 1] stat /hadoop-ha
cZxid = 0x100000008
ctime = Wed Mar 27 23:28:51 CST 2019
mZxid = 0x100000008
mtime = Wed Mar 27 23:28:51 CST 2019
pZxid = 0x100000009
cversion = 1
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 0
numChildren = 1
```

4. Start the JournalNode role on each JournalNode host (sht-sgmhadoopdn-01, sht-sgmhadoopdn-02, sht-sgmhadoopdn-03)

```shell
# hadoop-daemon.sh start journalnode
```

Check the status:

sht-sgmhadoopdn-01

```shell
# netstat -antlp | grep -E ':8480|:8481|:8485'
tcp 0 0 0.0.0.0:8480 0.0.0.0:* LISTEN 5614/java
tcp 0 0 0.0.0.0:8485 0.0.0.0:* LISTEN 5614/java
# jps
5735 Jps
5273 QuorumPeerMain
5614 JournalNode
```

sht-sgmhadoopdn-02

```shell
# netstat -antlp | grep -E ':8480|:8481|:8485'
tcp 0 0 0.0.0.0:8480 0.0.0.0:* LISTEN 24917/java
tcp 0 0 0.0.0.0:8485 0.0.0.0:* LISTEN 24917/java
# jps
22720 QuorumPeerMain
25681 Jps
24917 JournalNode
```

sht-sgmhadoopdn-03

```shell
# netstat -antlp | grep -E ':8480|:8481|:8485'
tcp 0 0 0.0.0.0:8480 0.0.0.0:* LISTEN 530/java
tcp 0 0 0.0.0.0:8485 0.0.0.0:* LISTEN 530/java
# jps
530 JournalNode
677 Jps
32527 QuorumPeerMain
```
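JournalNode availability can also be checked from a single node, for example one of the NameNodes, by opening a TCP connection to the RPC port 8485 configured in `dfs.journalnode.rpc-address`. This sketch relies on bash's `/dev/tcp` redirection plus coreutils `timeout`. `check_journalnodes` is an illustrative name.

```bash
#!/usr/bin/env bash
# Sketch: report UP/DOWN for each JournalNode's RPC port; returns non-zero if any is DOWN.
JOURNALNODES=(sht-sgmhadoopdn-01 sht-sgmhadoopdn-02 sht-sgmhadoopdn-03)

check_journalnodes() {
  local port=${1:-8485} host rc=0
  for host in "${JOURNALNODES[@]}"; do
    # The child bash opens fd 3 as a TCP socket; success means the port accepted the connection
    if timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      echo "UP   ${host}:${port}"
    else
      echo "DOWN ${host}:${port}"
      rc=1
    fi
  done
  return "$rc"
}
```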

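5. Format the HDFS filesystem on namenode sht-sgmhadoopnn-01. The `hadoop namenode` form is deprecated in Hadoop 2.x; as the log below notes, the `hdfs` command (`hdfs namenode -format`) is the preferred equivalent.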

```shell
# hadoop namenode -format mycluster
```

Output log:

# hadoop namenode -format mycluster DEPRECATED: Use of this script to execute hdfs command is deprecated. Instead use the hdfs command for it. 19/03/27 23:52:51 INFO namenode.NameNode: STARTUP_MSG: /************************************************************ STARTUP_MSG: Starting NameNode STARTUP_MSG: host = sht-sgmhadoopnn-01/172.16.101.55 STARTUP_MSG: args = [-format, mycluster] STARTUP_MSG: version = 2.7.4 STARTUP_MSG: classpath = /usr/local/hadoop-2.7.4/etc/hadoop:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/curator-framework-2.7.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/slf4j-api-1.7.10.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/httpclient-4.2.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/curator-client-2.7.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/l
ib/log4j-1.2.17.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/gson-2.2.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/hamcrest-core-1.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-io-2.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jets3t-0.9.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-collections-3.2.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/zookeeper-3.4.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jsch-0.1.54.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jsr305-3.0.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jetty-sslengine-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/curator-recipes-2.7.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/httpcore-4.2.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/hadoop-auth-2.7.4.jar:/usr/local/h
adoop-2.7.4/share/hadoop/common/lib/junit-4.11.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/stax-api-1.0-2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/hadoop-annotations-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/hadoop-nfs-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/hadoop-common-2.7.4-tests.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/hadoop-common-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/netty-all-4.0.23.Final.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-io-2.4.jar:/usr/local/hadoop-2.7.4/share/hadoo
p/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/hadoop-hdfs-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/hadoop-hdfs-2.7.4-tests.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/hadoop-hdfs-nfs-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jettison-1.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jersey-json-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/loca
l/hadoop-2.7.4/share/hadoop/yarn/lib/commons-io-2.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/activation-1.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jersey-client-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jsr305-3.0.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/guava-11.0.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/zookeeper-3.4.6-tests.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-lang-2.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-client-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-common-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.7.4.jar:/usr/local/hadoop-2.
7.4/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-api-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-tests-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-common-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-registry-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/li
b/junit-4.11.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/hadoop-annotations-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.4-tests.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.7.4.jar:/contrib/capacity-scheduler/*.jar:/contrib/capacity-scheduler/*.jar STARTUP_MSG: build = https://shv@git-wip-us.apache.org/repos/asf/hadoop.git -r cd915e1e8d9d0131462a0b7301586c175728a282; compiled by 'kshvachk' on 2017-08-01T00:29Z STARTUP_MSG: java = 1.8.0_45 ************************************************************/ 19/03/27 23:52:51 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT] 19/03/27 23:52:51 INFO namenode.NameNode: createNameNode [-format, mycluster] 19/03/27 23:52:52 WARN common.Util: Path /usr/local/hadoop/data/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration. 19/03/27 23:52:52 WARN common.Util: Path /usr/local/hadoop/data/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration. 
Formatting using clusterid: CID-2f443fae-4570-40e1-a09d-936ebcc203e3
19/03/27 23:52:52 INFO namenode.FSNamesystem: No KeyProvider found.
19/03/27 23:52:52 INFO namenode.FSNamesystem: fsLock is fair: true
19/03/27 23:52:52 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false
19/03/27 23:52:52 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
19/03/27 23:52:52 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
19/03/27 23:52:52 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
19/03/27 23:52:52 INFO blockmanagement.BlockManager: The block deletion will start around 2019 Mar 27 23:52:52
19/03/27 23:52:52 INFO util.GSet: Computing capacity for map BlocksMap
19/03/27 23:52:52 INFO util.GSet: VM type = 64-bit
19/03/27 23:52:52 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB
19/03/27 23:52:52 INFO util.GSet: capacity = 2^21 = 2097152 entries
19/03/27 23:52:52 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
19/03/27 23:52:52 INFO blockmanagement.BlockManager: defaultReplication = 3
19/03/27 23:52:52 INFO blockmanagement.BlockManager: maxReplication = 512
19/03/27 23:52:52 INFO blockmanagement.BlockManager: minReplication = 1
19/03/27 23:52:52 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
19/03/27 23:52:52 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
19/03/27 23:52:52 INFO blockmanagement.BlockManager: encryptDataTransfer = false
19/03/27 23:52:52 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
19/03/27 23:52:52 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
19/03/27 23:52:52 INFO namenode.FSNamesystem: supergroup = supergroup
19/03/27 23:52:52 INFO namenode.FSNamesystem: isPermissionEnabled = false
19/03/27 23:52:52 INFO namenode.FSNamesystem: Determined nameservice ID: mycluster
19/03/27 23:52:52 INFO namenode.FSNamesystem: HA Enabled: true
19/03/27 23:52:52 INFO namenode.FSNamesystem: Append Enabled: true
19/03/27 23:52:52 INFO util.GSet: Computing capacity for map INodeMap
19/03/27 23:52:52 INFO util.GSet: VM type = 64-bit
19/03/27 23:52:52 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB
19/03/27 23:52:52 INFO util.GSet: capacity = 2^20 = 1048576 entries
19/03/27 23:52:52 INFO namenode.FSDirectory: ACLs enabled? false
19/03/27 23:52:52 INFO namenode.FSDirectory: XAttrs enabled? true
19/03/27 23:52:52 INFO namenode.FSDirectory: Maximum size of an xattr: 16384
19/03/27 23:52:52 INFO namenode.NameNode: Caching file names occuring more than 10 times
19/03/27 23:52:52 INFO util.GSet: Computing capacity for map cachedBlocks
19/03/27 23:52:52 INFO util.GSet: VM type = 64-bit
19/03/27 23:52:52 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB
19/03/27 23:52:52 INFO util.GSet: capacity = 2^18 = 262144 entries
19/03/27 23:52:52 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
19/03/27 23:52:52 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
19/03/27 23:52:52 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
19/03/27 23:52:52 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
19/03/27 23:52:52 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
19/03/27 23:52:52 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
19/03/27 23:52:52 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
19/03/27 23:52:52 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
19/03/27 23:52:52 INFO util.GSet: Computing capacity for map NameNodeRetryCache
19/03/27 23:52:52 INFO util.GSet: VM type = 64-bit
19/03/27 23:52:52 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB
19/03/27 23:52:52 INFO util.GSet: capacity = 2^15 = 32768 entries
19/03/27 23:52:53 INFO namenode.FSImage: Allocated new BlockPoolId: BP-698223843-172.16.101.55-1553701973789
19/03/27 23:52:53 INFO common.Storage: Storage directory /usr/local/hadoop-2.7.4/data/dfs/name has been successfully formatted.
19/03/27 23:52:54 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop-2.7.4/data/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
19/03/27 23:52:54 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop-2.7.4/data/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 321 bytes saved in 0 seconds.
19/03/27 23:52:54 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
19/03/27 23:52:54 INFO util.ExitUtil: Exiting with status 0
19/03/27 23:52:54 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at sht-sgmhadoopnn-01/172.16.101.55
************************************************************/

6. Start the NameNode role on the NameNode host sht-sgmhadoopnn-01

# hadoop-daemon.sh start namenode
starting namenode, logging to /usr/local/hadoop-2.7.4/logs/hadoop-root-namenode-sht-sgmhadoopnn-01.out
# jps
5041 NameNode
5116 Jps
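Reading the jps listing by eye works for one host, but it is easy to misread once the check is scripted across several nodes. A minimal sketch, assuming bash and that jps (shipped with the JDK) is on PATH: the helper reads jps-style "PID Name" lines on stdin and succeeds only when a JVM with exactly the given name is listed. The name has_jvm_process is hypothetical and not part of Hadoop.

```bash
#!/usr/bin/env bash
# Succeed (exit 0) if jps-style input on stdin lists a JVM whose name is
# exactly $1; fail (exit 1) otherwise. has_jvm_process is a hypothetical helper.
has_jvm_process() {
  # jps prints "<pid> <name>" per line. Comparing the second field exactly
  # keeps "SecondaryNameNode" from matching a search for "NameNode".
  awk -v want="$1" '$2 == want { found = 1 } END { exit !found }'
}

# Usage on sht-sgmhadoopnn-01 after "hadoop-daemon.sh start namenode":
#   jps | has_jvm_process NameNode && echo "NameNode is running"
```

An exact field match is used rather than grep so that similarly named daemons do not produce a false positive.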

7. On the NameNode host sht-sgmhadoopnn-02, sync the HDFS metadata from sht-sgmhadoopnn-01

# hdfs namenode -bootstrapStandby

Output log:

# hdfs namenode -bootstrapStandby

19/03/27 23:58:01 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = sht-sgmhadoopnn-02/172.16.101.56

STARTUP_MSG: args = [-bootstrapStandby]

STARTUP_MSG: version = 2.7.4

STARTUP_MSG: classpath = /usr/local/hadoop-2.7.4/etc/hadoop:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/slf4j-api-1.7.10.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-collections-3.2.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jetty-sslengine-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/stax-api-1.0-2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop-2.7.
4/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-io-2.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/zookeeper-3.4.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/curator-client-2.7.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/curator-framework-2.7.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/curator-recipes-2.7.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/gson-2.2.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/hadoop-annotations-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/hadoop-auth-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/hamcrest-core-1.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/httpclient-4.2.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/httpcore-4.2.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop-2.7.4/share/hadoop/co
mmon/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jets3t-0.9.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jsch-0.1.54.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/jsr305-3.0.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/lib/junit-4.11.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/hadoop-common-2.7.4-tests.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/hadoop-common-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/common/hadoop-nfs-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-io-2.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop-2.7.4/share/hadoop
/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/netty-all-4.0.23.Final.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/hadoop-hdfs-2.7.4-tests.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/hadoop-hdfs-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/hdfs/hadoop-hdfs-nfs-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/activation-1.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-io-2.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-lang-2.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/guava-11.0.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/usr/local/ha
doop-2.7.4/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jersey-client-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jersey-json-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jettison-1.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/jsr305-3.0.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/zookeeper-3.4.6-tests.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-api-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-client-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-common-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-registry-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-common-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.7.4.jar:/usr/local/hadoop-2.7.4
/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-tests-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/hadoop-annotations-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/junit-4.11.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapr
educe/hadoop-mapreduce-client-app-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.4-tests.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.7.4.jar:/usr/local/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar:/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://shv@git-wip-us.apache.org/repos/asf/hadoop.git -r cd915e1e8d9d0131462a0b7301586c175728a282; compiled by 'kshvachk' on 2017-08-01T00:29Z

STARTUP_MSG: java = 1.8.0_45

************************************************************/

19/03/27 23:58:01 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

19/03/27 23:58:01 INFO namenode.NameNode: createNameNode [-bootstrapStandby]

19/03/27 23:58:02 WARN common.Util: Path /usr/local/hadoop/data/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.

19/03/27 23:58:02 WARN common.Util: Path /usr/local/hadoop/data/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.

=====================================================

About to bootstrap Standby ID nn2 from:

Nameservice ID: mycluster

Other Namenode ID: nn1

Other NN's HTTP address: http://sht-sgmhadoopnn-01:50070

Other NN's IPC address: sht-sgmhadoopnn-01/172.16.101.55:8020

Namespace ID: 789891431

Block pool ID: BP-698223843-172.16.101.55-1553701973789

Cluster ID: CID-2f443fae-4570-40e1-a09d-936ebcc203e3

Layout version: -63

isUpgradeFinalized: true

=====================================================

19/03/27 23:58:03 INFO common.Storage: Storage directory /usr/local/hadoop-2.7.4/data/dfs/name has been successfully formatted.

19/03/27 23:58:03 WARN common.Util: Path /usr/local/hadoop/data/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.

19/03/27 23:58:03 WARN common.Util: Path /usr/local/hadoop/data/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.

19/03/27 23:58:04 INFO namenode.TransferFsImage: Opening connection to http://sht-sgmhadoopnn-01:50070/imagetransfer?getimage=1&txid=0&storageInfo=-63:789891431:0:CID-2f443fae-4570-40e1-a09d-936ebcc203e3

19/03/27 23:58:04 INFO namenode.TransferFsImage: Image Transfer timeout configured to 60000 milliseconds

19/03/27 23:58:04 INFO namenode.TransferFsImage: Transfer took 0.04s at 0.00 KB/s

19/03/27 23:58:04 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000000 size 321 bytes.

19/03/27 23:58:04 INFO util.ExitUtil: Exiting with status 0

19/03/27 23:58:04 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at sht-sgmhadoopnn-02/172.16.101.56

************************************************************/
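Because bootstrapStandby copies the namespace identity from nn1, both NameNodes should afterwards carry the same clusterID, namespaceID and blockpoolID (in the log above: CID-2f443fae-4570-40e1-a09d-936ebcc203e3, 789891431 and BP-698223843-172.16.101.55-1553701973789). A minimal sketch for comparing them, assuming bash, that dfs.namenode.name.dir is /usr/local/hadoop/data/dfs/name as the log warnings indicate, and that each NameNode stores these fields as key=value lines in current/VERSION under that directory. version_ids and same_namespace are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Compare the namespace identity of two NameNode VERSION files, e.g.
#   /usr/local/hadoop/data/dfs/name/current/VERSION   (assumed location)

# Print the identity fields that must agree between nn1 and nn2, sorted so the
# comparison does not depend on the order of lines in the file.
version_ids() {
  grep -E '^(clusterID|namespaceID|blockpoolID)=' "$1" | sort
}

# Exit 0 when both files carry the same identity fields, 1 otherwise.
# An empty result (missing file, or none of the fields present) never matches.
same_namespace() {
  local a b
  a=$(version_ids "$1")
  b=$(version_ids "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Usage on sht-sgmhadoopnn-02 (assumes passwordless ssh to sht-sgmhadoopnn-01):
#   ssh sht-sgmhadoopnn-01 cat /usr/local/hadoop/data/dfs/name/current/VERSION > /tmp/nn1.VERSION
#   same_namespace /tmp/nn1.VERSION /usr/local/hadoop/data/dfs/name/current/VERSION \
#     && echo "nn2 matches nn1"
```

Fields such as cTime or the timestamp comment line are deliberately ignored, since only the identity fields are expected to match.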

8. Start the NameNode role on the NameNode host sht-sgmhadoopnn-02

# hadoop-daemon.sh start namenode
starting namenode, logging to /usr/local/hadoop-2.7.4/logs/hadoop-root-namenode-sht-sgmhadoopnn-02.out
# jps
30180 NameNode
30255 Jps

9. Stop the NameNode role on both NameNode hosts (run the command below on sht-sgmhadoopnn-01 and on sht-sgmhadoopnn-02)

# hadoop-daemon.sh stop namenode
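The stop command has to be issued on each NameNode host. A minimal sketch for doing both from one shell, assuming bash, passwordless root SSH to both hosts (the sshfence method already depends on it), Hadoop under /usr/local/hadoop on each host, and JAVA_HOME set in hadoop-env.sh. The full path to hadoop-daemon.sh is used because a non-interactive ssh session may not have Hadoop's sbin directory on PATH. REMOTE, NN_HOSTS and stop_namenodes are hypothetical names.

```bash
#!/usr/bin/env bash
# Stop the NameNode daemon on both NameNode hosts from one shell.
# Assumptions: passwordless root SSH, Hadoop under /usr/local/hadoop,
# JAVA_HOME configured in hadoop-env.sh on each host.

HADOOP_DAEMON=/usr/local/hadoop/sbin/hadoop-daemon.sh
NN_HOSTS=(sht-sgmhadoopnn-01 sht-sgmhadoopnn-02)

# Command used to reach a host (hypothetical variable). With REMOTE=echo the
# function only prints "<host> <command>" instead of connecting.
REMOTE=${REMOTE:-ssh}

# Run "hadoop-daemon.sh stop namenode" on every host in NN_HOSTS.
stop_namenodes() {
  local host
  for host in "${NN_HOSTS[@]}"; do
    "$REMOTE" "$host" "$HADOOP_DAEMON stop namenode"
  done
}

# Usage:
#   stop_namenodes               # stop both NameNodes over ssh
#   REMOTE=echo stop_namenodes   # dry run: print what would be executed
```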

10. Start the HDFS cluster services from the NameNode host sht-sgmhadoopnn-01

# start-dfs.sh
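Once the daemons are up, a healthy HA pair should have exactly one active and one standby NameNode. A minimal sketch, assuming bash and that the NameNode IDs are nn1 and nn2 as shown in the bootstrapStandby log; it validates "serviceId state" lines such as those built from `hdfs haadmin -getServiceState`. Right after start-up both NameNodes may still report standby until ZKFC elects one (or an administrator runs `hdfs haadmin -transitionToActive`), so an immediate failure is not necessarily an error. check_ha_states is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Validate NameNode HA states given as "<serviceId> <state>" lines on stdin.
# Prints a one-line summary; exits 0 only for exactly one active NameNode with
# all others in standby. check_ha_states is a hypothetical helper.
check_ha_states() {
  awk '
    { n++ }                          # every input line is one NameNode
    $2 == "active"  { active++ }
    $2 == "standby" { standby++ }
    END {
      ok = (n > 0 && active == 1 && active + standby == n)
      printf "namenodes=%d active=%d standby=%d\n", n, active, standby
      exit !ok
    }'
}

# Usage on a NameNode host once the cluster is up:
#   for id in nn1 nn2; do
#     echo "$id $(hdfs haadmin -getServiceState "$id")"
#   done | check_ha_states
```

An unreachable NameNode yields an empty state field, which is counted but never as active or standby, so the check fails in that case too.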

Output log:

# start-dfs.sh
Starting namenodes on [sht-sgmhadoopnn-01 sht-sgmhadoopnn-02]
sht-sgmhadoopnn-01: ********************************************************************
sht-sgmhadoopnn-01: * *
sht-sgmhadoopnn-01: * This system is for the use of authorized users only. Usage of *
sht-sgmhadoopnn-01: * this system may be monitored and recorded by system personnel. *
sht-sgmhadoopnn-01: * *
sht-sgmhadoopnn-01: * Anyone using this system expressly consents to such monitoring *
sht-sgmhadoopnn-01: * and they are advised that if such monitoring reveals possible *
sht-sgmhadoopnn-01: * evidence of criminal activity, system personnel may provide the *
sht-sgmhadoopnn-01: * evidence from such monitoring to law enforcement officials. *
sht-sgmhadoopnn-01: * *
sht-sgmhadoopnn-01: ********************************************************************
sht-sgmhadoopnn-01: starting namenode, logging to /usr/local/hadoop-2.7.4/logs/hadoop-root-namenode-sht-sgmhadoopnn-01.out
sht-sgmhadoopnn-02: starting namenode, logging to /usr/local/hadoop-2.7.4/logs/hadoop-root-namenode-sht-sgmhadoopnn-02.out
sht-sgmhadoopdn-02: starting datanode, logging to /usr/local/hadoop-2.7.4/logs/hadoop-root-datanode-sht-sgmhadoopdn-02.telenav.cn.out
sht-sgmhadoopdn-01: starting datanode, logging to /usr/local/hadoop-2.7.4/logs/hadoop-root-datanode-sht-sgmhadoopdn-01.out
sht-sgmhadoopdn-03: starting datanode, logging to /usr/local/hadoop-2.7.4/logs/hadoop-root-datanode-sht-sgmhadoopdn-03.telenav.cn.out
Starting journal nodes [sht-sgmhadoopdn-01 sht-sgmhadoopdn-02 sht-sgmhadoopdn-03]
sht-sgmhadoopdn-02: journalnode running as process 24917. Stop it first.
sht-sgmhadoopdn-01: journalnode running as process 5614. Stop it first.
sht-sgmhadoopdn-03: journalnode running as process 530. Stop it first.
Starting ZK Failover Controllers on NN hosts [sht-sgmhadoopnn-01 sht-sgmhadoopnn-02]
sht-sgmhadoopnn-01: starting zkfc, logging to /usr/local/hadoop-2.7.4/logs/hadoop-root-zkfc-sht-sgmhadoopnn-01.out
sht-sgmhadoopnn-02: starting zkfc, logging to /usr/local/hadoop-2.7.4/logs/hadoop-root-zkfc-sht-sgmhadoopnn-02.out
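You can confirm that every host runs the daemons start-dfs.sh is responsible for by running jps over SSH. Per the role table, that means NameNode and DFSZKFailoverController on the NameNode hosts, and DataNode and JournalNode on the DataNode hosts. This sketch makes the same SSH assumption as above. It also assumes a login shell on each host has the JDK's bin directory on PATH. `check_host` and `check_cluster` are hypothetical names. ZooKeeper (QuorumPeerMain) and the YARN daemons are not checked, because start-dfs.sh does not start them.

```shell
#!/usr/bin/env bash
# Hypothetical post-start check: confirm that the daemons start-dfs.sh should have
# launched show up in `jps` on each host. Assumes passwordless root SSH and that a
# login shell on each host has the JDK's bin directory on PATH.

# check_host HOST DAEMON... : print each expected daemon missing from HOST's jps output
check_host() {
  local host=$1; shift
  local procs d missing=0
  # jps prints "<pid> <MainClassName>"; keep only the class names
  procs=$(ssh "$host" 'bash -lc jps' | awk '{print $2}')
  for d in "$@"; do
    # -x: the whole line must equal the class name
    if ! printf '%s\n' "$procs" | grep -qx "$d"; then
      echo "$host: $d is NOT running"
      missing=1
    fi
  done
  return $missing
}

# Roles taken from the environment table at the top of this post
check_cluster() {
  local h rc=0
  for h in sht-sgmhadoopnn-01 sht-sgmhadoopnn-02; do
    check_host "$h" NameNode DFSZKFailoverController || rc=1
  done
  for h in sht-sgmhadoopdn-01 sht-sgmhadoopdn-02 sht-sgmhadoopdn-03; do
    check_host "$h" DataNode JournalNode || rc=1
  done
  [ $rc -eq 0 ] && echo "all HDFS daemons are up"
  return $rc
}
```

When everything is up, `check_cluster` prints only the final confirmation. Otherwise it prints one line per missing daemon and returns non-zero.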

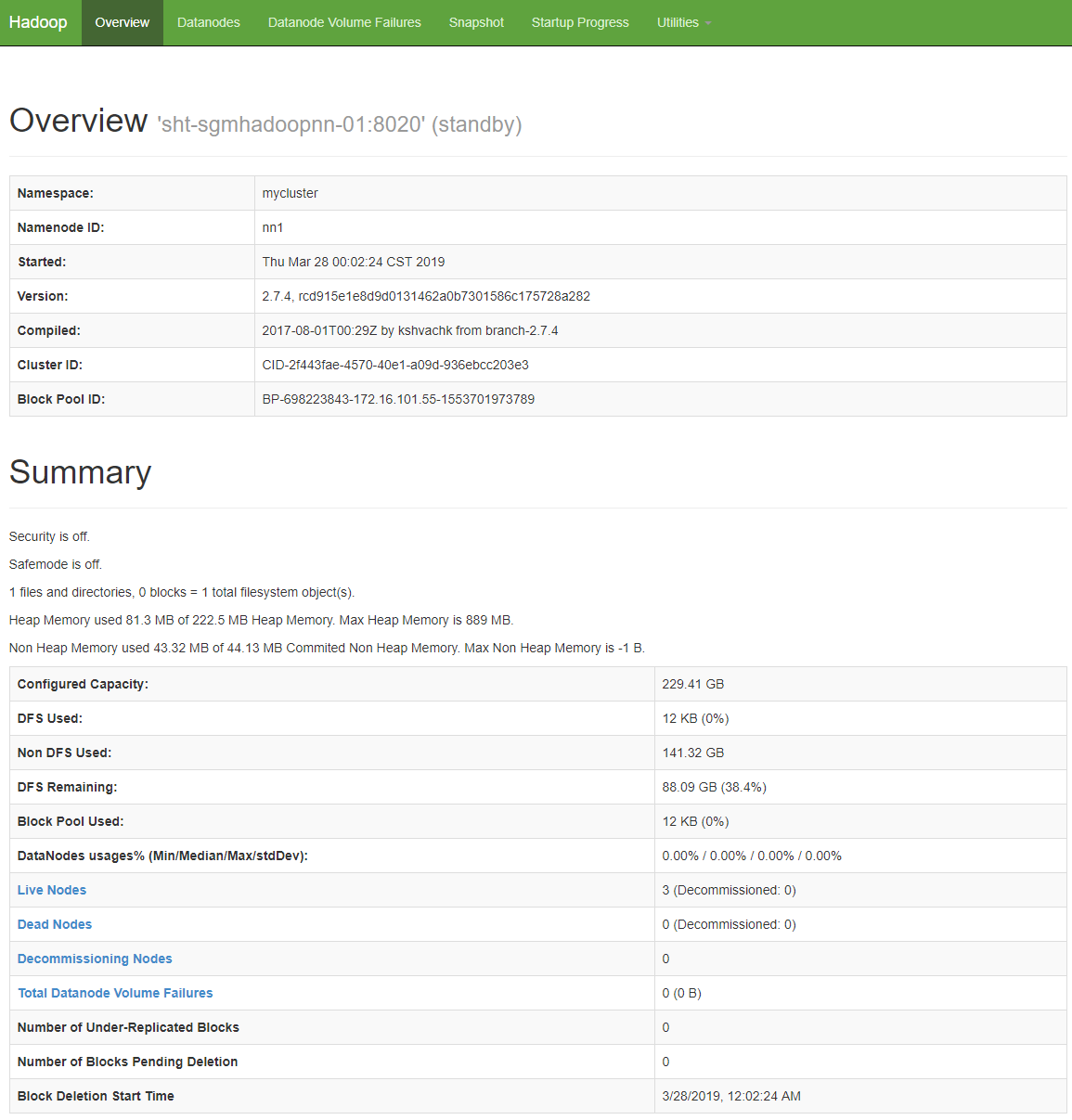

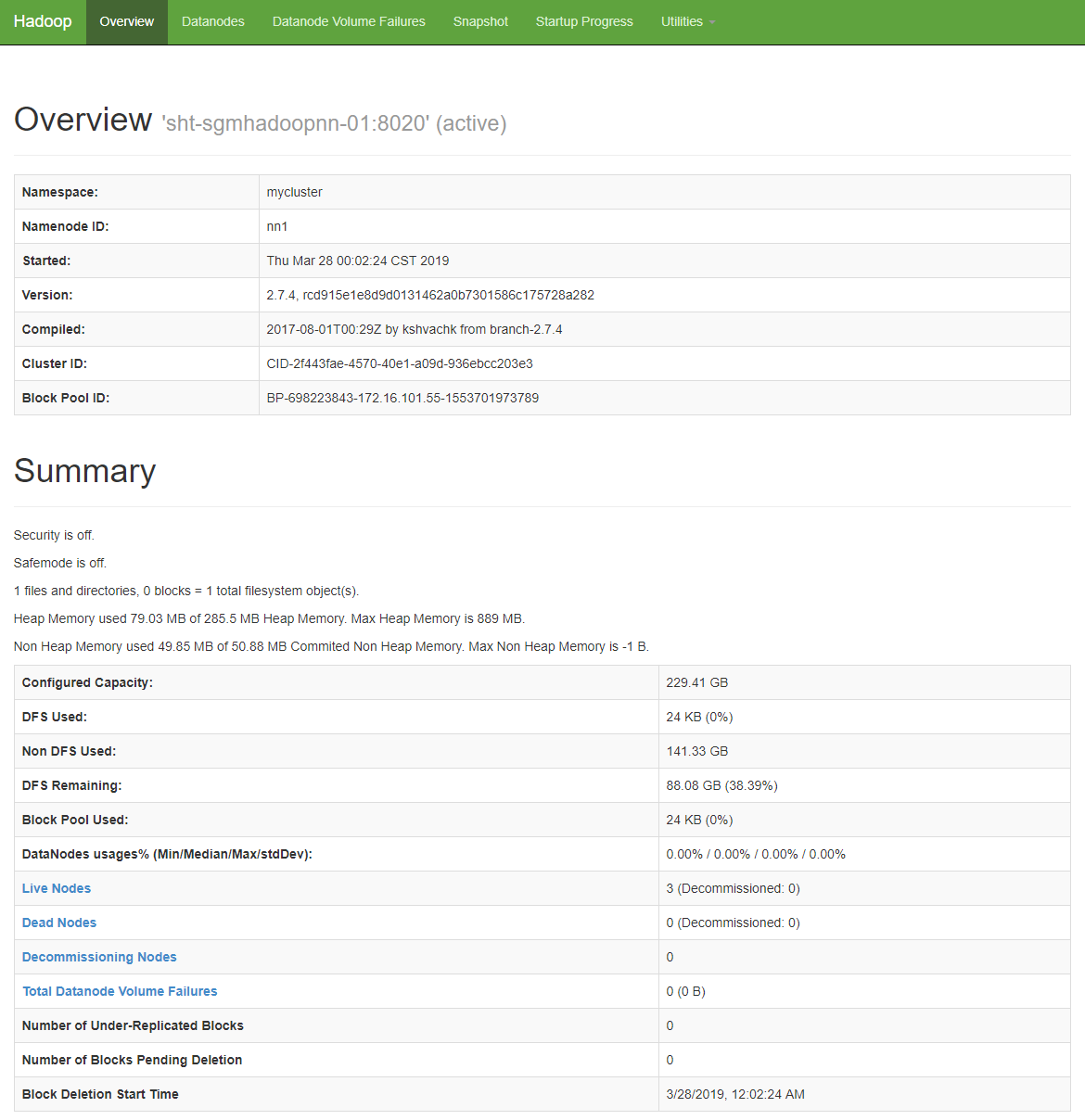

11. Verify HDFS through the NameNode web UIs

NameNode 1 (sht-sgmhadoopnn-01)

http://172.16.101.55:50070

NameNode 2 (sht-sgmhadoopnn-02)

http://172.16.101.56:50070
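You can also fetch the HA state without a browser. This sketch assumes the default Hadoop 2.x NameNode HTTP port 50070 used above. It also assumes the `NameNodeStatus` bean served by the NameNode's `/jmx` endpoint, whose `State` attribute reads `active` or `standby`. The JSON is parsed with grep and sed only, to avoid depending on jq. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read each NameNode's HA state from its web UI's JMX servlet.
# Assumes the Hadoop 2.x default HTTP port 50070 and the NameNodeStatus bean.

# Extract the value of the "State" attribute from JMX JSON read on stdin
parse_state() {
  grep -o '"State" *: *"[^"]*"' | head -n 1 | sed 's/.*"\([^"]*\)"$/\1/'
}

# nn_state HOST : print "active", "standby", or nothing if the UI is unreachable
nn_state() {
  curl -s --max-time 5 \
    "http://$1:50070/jmx?qry=Hadoop:service=NameNode,name=NameNodeStatus" | parse_state
}
```

Usage: `for ip in 172.16.101.55 172.16.101.56; do echo "$ip: $(nn_state "$ip")"; done`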

12. Automatic failover test

Note: at this point the active NameNode is sht-sgmhadoopnn-02 and the standby is sht-sgmhadoopnn-01.

The states can also be checked from the command line:

# hdfs haadmin -getServiceState nn1
standby
# hdfs haadmin -getServiceState nn2
active
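When you check repeatedly during the failover test, a loop over the NameNode IDs saves some typing. This is a minimal sketch. It assumes `nn1`/`nn2` are the IDs configured under `dfs.ha.namenodes.<nameservice>` in hdfs-site.xml, as the commands above imply, and that `hdfs` is on PATH. `find_active_nn` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: report the HA state of each NameNode and print the ID of the active one.
# nn1/nn2 are the NameNode IDs used above (dfs.ha.namenodes.* in hdfs-site.xml).
NN_IDS="nn1 nn2"

find_active_nn() {
  local id state active=""
  for id in $NN_IDS; do
    # Prints "active" or "standby"; errors from an unreachable NameNode are discarded
    state=$(hdfs haadmin -getServiceState "$id" 2>/dev/null) || true
    echo "$id: ${state:-unreachable}" >&2
    [ "$state" = "active" ] && active=$id
  done
  # Print the active ID on stdout; return non-zero if no NameNode is active
  [ -n "$active" ] && echo "$active"
}
```

The per-NameNode states go to stderr and the active ID goes to stdout, so `active=$(find_active_nn)` captures just the ID. A NameNode that cannot be reached is reported as `unreachable`.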

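Manually kill the NameNode process on the active host (sht-sgmhadoopnn-02), then verify that the standby NameNode automatically switches to the active state:

# jps
30339 NameNode
30435 DFSZKFailoverController
30601 Jps
# kill -9 30339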
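Instead of copying the PID from the jps listing by hand, you can look it up and kill it in one go on the active NameNode host. This is a sketch; `namenode_pid` and `kill_namenode` are hypothetical helpers. SIGKILL is kept on purpose so the NameNode gets no chance to shut down cleanly, which mimics a crash as in the manual test.

```shell
#!/usr/bin/env bash
# Sketch: run on the currently active NameNode host. Finds the NameNode PID via jps
# and sends SIGKILL to simulate a crash (no clean shutdown), as in the test above.

namenode_pid() {
  # jps lines look like "30339 NameNode"; match the main class name exactly
  jps | awk '$2 == "NameNode" { print $1 }'
}

kill_namenode() {
  local pid
  pid=$(namenode_pid)
  if [ -z "$pid" ]; then
    echo "no NameNode process found" >&2
    return 1
  fi
  echo "killing NameNode pid $pid"
  kill -9 "$pid"
}
```

The awk filter matches the class name exactly. It therefore ignores other Java processes on the same host, such as DFSZKFailoverController.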

Meanwhile, watch the ZKFC log on the standby host:

2019-03-28 00:13:15,460 INFO org.apache.hadoop.ha.ZKFailoverController: Trying to make NameNode at sht-sgmhadoopnn-01/172.16.101.55:8020 active...
2019-03-28 00:13:17,059 INFO org.apache.hadoop.ha.ZKFailoverController: Successfully transitioned NameNode at sht-sgmhadoopnn-01/172.16.101.55:8020 to active state
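To wait for the failover programmatically instead of watching the log by hand, poll the standby's ZKFC log for the "Successfully transitioned ... to active state" message shown above. This sketch assumes the log4j log lives next to the .out file reported by start-dfs.sh, i.e. /usr/local/hadoop-2.7.4/logs/hadoop-root-zkfc-<hostname>.log. That path and the function names are assumptions. The script counts matching lines when it starts and only succeeds on a new one, so older transitions already in the log are ignored.

```shell
#!/usr/bin/env bash
# Sketch: wait for a NEW "transitioned ... to active state" line in a ZKFC log.
# The log path below is an assumption derived from the .out name printed by start-dfs.sh.
ZKFC_LOG=/usr/local/hadoop-2.7.4/logs/hadoop-root-zkfc-$(uname -n).log
PATTERN='Successfully transitioned NameNode at .* to active state'

# Number of matching lines in the log (0 if the file is missing)
count_transitions() {
  local n
  n=$(grep -c "$PATTERN" "$1" 2>/dev/null) || true
  echo "${n:-0}"
}

# wait_for_failover LOG [TIMEOUT_SECONDS] : print the new transition line, or fail on timeout
wait_for_failover() {
  local log=$1 timeout=${2:-60} base waited=0
  base=$(count_transitions "$log")
  while [ "$waited" -lt "$timeout" ]; do
    if [ "$(count_transitions "$log")" -gt "$base" ]; then
      grep "$PATTERN" "$log" | tail -n 1
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "no new transition in $log after ${timeout}s" >&2
  return 1
}
```

Start it on the standby host before running `kill -9`, for example with `wait_for_failover "$ZKFC_LOG" 60` in a second terminal. It prints the new transition line, or gives up after the timeout.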

Full log output: