starRocks安装测试报告

下载安装部署

StarRocks的集群部署分为两种模式,第一种是使用命令部署,第二种是使用 StarRocksManager 自动化部署。自动部署的版本只需要在页面上简单进行配置、选择、输入后批量完成,并且包含Supervisor进程管理、滚动升级、备份、回滚等功能。因 StarRocksManager并未开源,因此我们只能使用命令部署。

生产环境使用官方推荐配置:BE推荐16核64GB以上(StarRocks的元数据都在内存中保存),FE推荐8核16GB以上

下载地址:

https://www.starrocks.com/zh-CN/download/request-download/4

服务器配置

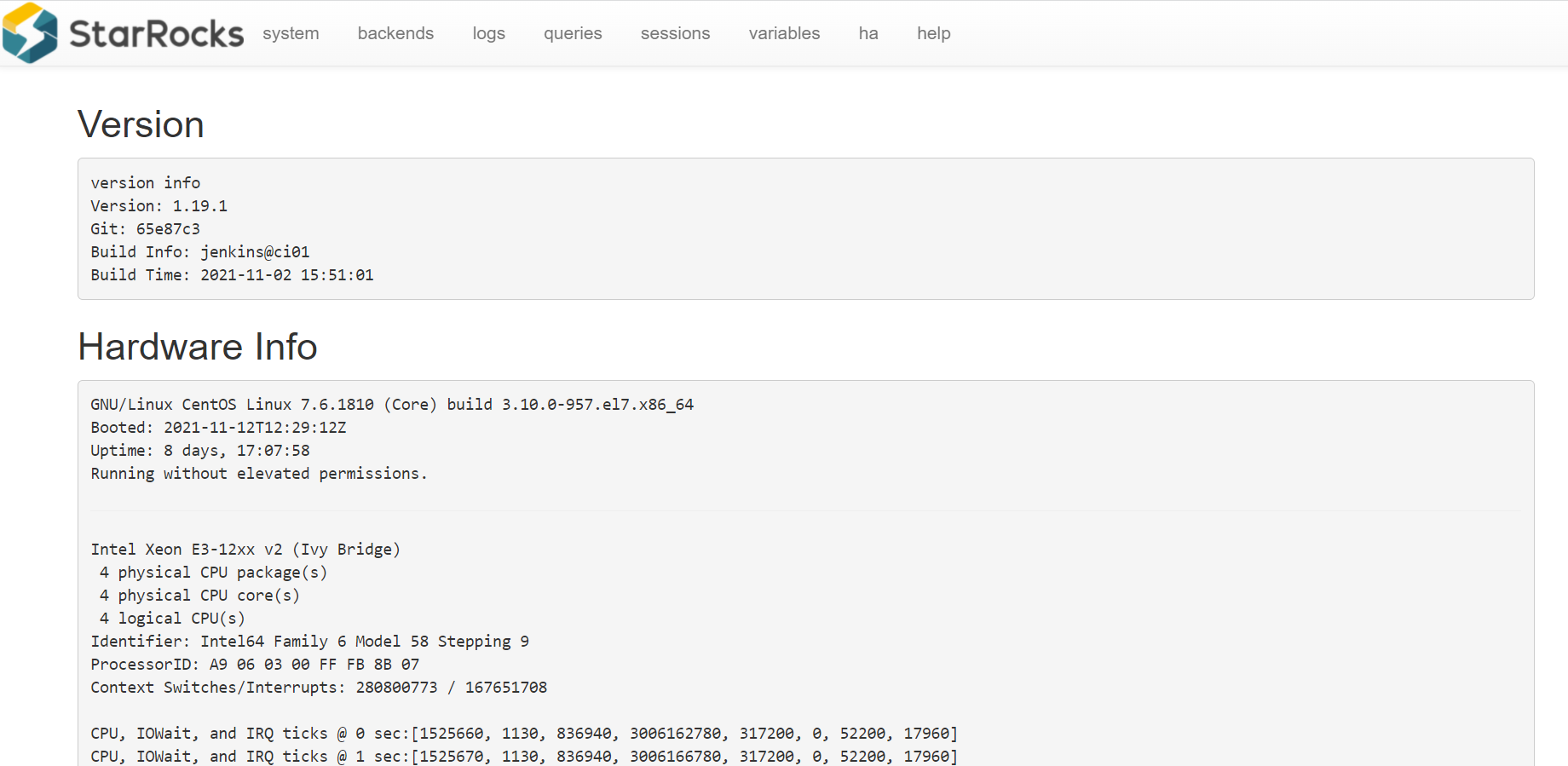

下载社区版最新版本:StarRocks-1.19.1,因资源限制(使用4台虚机),FE采取单实例部署,BE部署三个实例。

| IP | host | 配置 | 部署 |

|---|---|---|---|

| 192.168.130.178 | fe1 | 4C16G | FE主节点 |

| 192.168.130.183 | be1 | 4C8G | BE节点 |

| 192.168.130.36 | be2 | 4C8G | BE节点 |

| 192.168.130.149 | be3 | 4C8G | BE节点 |

FE单实例部署

# 修改fe配置

cd StarRocks-1.19.1/fe

# 配置文件conf/fe.conf

# 根据FE内存大小调整 -Xmx4096m,为了避免GC建议16G以上,StarRocks的元数据都在内存中保存。

# 创建元数据目录

mkdir -p meta

# 启动进程

bin/start_fe.sh --daemon

# 使用浏览器访问8030端口, 打开StarRocks的WebUI, 用户名为root, 密码为空

StarRocks UI

http://192.168.130.178:8030/ 打开StarRocks的WebUI,,用户名为root, 密码为空。

使用MySQL客户端访问FE

第一步: 安装mysql客户端(如果已经安装,可忽略此步):

Ubuntu:sudo apt-get install mysql-client

Centos:sudo yum install mysql-client

wget http://repo.mysql.com/mysql57-community-release-sles12.rpm

rpm -ivh mysql57-community-release-sles12.rpm

# 安装mysql

yum install mysql-server

# 启动mysql

service mysqld start

第二步: 使用mysql客户端连接:

mysql -h 127.0.0.1 -P9030 -uroot

注意:这里默认root用户密码为空,端口为fe/conf/fe.conf中的query_port配置项,默认为9030

第三步: 查看FE状态:

MySQL [(none)]> SHOW PROC '/frontends'\G

*************************** 1. row ***************************

Name: 192.168.130.178_9010_1636811945380

IP: 192.168.130.178

HostName: fe1

EditLogPort: 9010

HttpPort: 8030

QueryPort: 9030

RpcPort: 9020

Role: FOLLOWER

IsMaster: true

ClusterId: 985620692

Join: true

Alive: true

ReplayedJournalId: 43507

LastHeartbeat: 2021-11-15 14:41:48

IsHelper: true

ErrMsg:

1 row in set (0.05 sec)

Role为FOLLOWER说明这是一个能参与选主的FE;IsMaster为true,说明该FE当前为主节点。

如果MySQL客户端连接不成功,请查看log/fe.warn.log日志文件,确认问题。由于是初次启动,如果在操作过程中遇到任何意外问题,都可以删除并重新创建FE的元数据目录,再从头开始操作。

BE部署

BE的基本配置

BE的配置文件为StarRocks-1.19.1/be/conf/be.conf,默认配置已经足以启动集群,不建议初尝用户修改配置, 有经验的用户可以查看手册的系统配置章节,为生产环境定制配置。 为了让用户更好的理解集群的工作原理, 此处只列出基础配置。

192.168.130.183

# 修改fe配置

cd StarRocks-1.19.1/be

# 配置文件conf/fe.conf

# 调整BE参数,默认配置已经足以启动集群,暂不做调整。

# 创建元数据目录

mkdir -p storage

# 通过mysql客户端添加BE节点。

# 这里IP地址为和priority_networks设置匹配的IP,portheartbeat_service_port,默认为9050

mysql> ALTER SYSTEM ADD BACKEND "be1:9050";

# 启动be

bin/start_be.sh --daemon

添加BE节点如出现错误,需要删除BE节点,应用下列命令:

-

alter system decommission backend "be_host:be_heartbeat_service_port"; -

alter system dropp backend "be_host:be_heartbeat_service_port";

# 查看防火墙状态

[root@be1 be]# systemctl status firewalld

# 如已开启,需关闭防火墙,防止网络不通,导致BE和FE无法连接

[root@be1 be]# systemctl stop firewalld

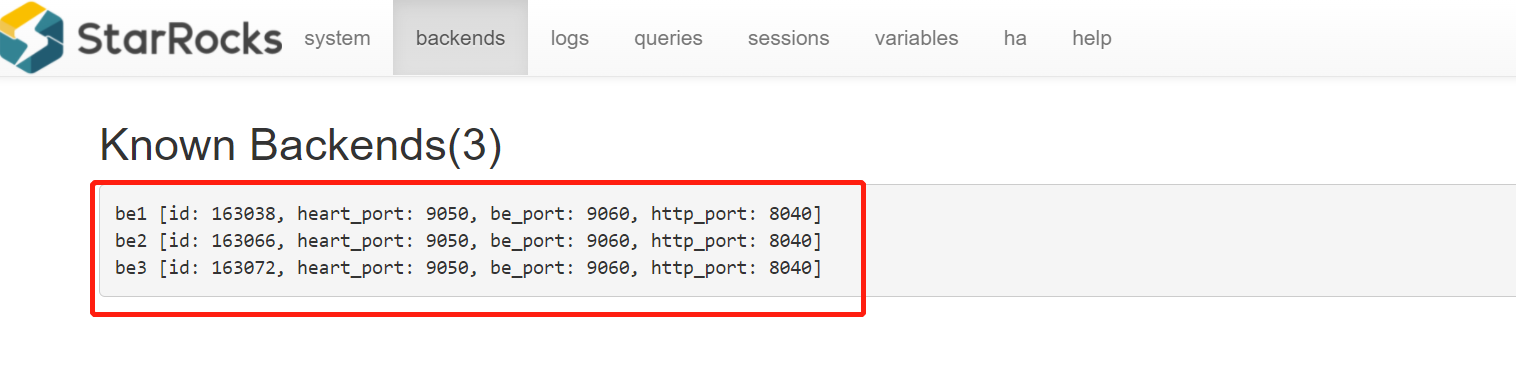

第四步: 查看BE状态, 确认BE就绪。同样步骤添加另外两个BE节点,mysql客户端中执行

MySQL [(none)]> SHOW PROC '/backends' \G;

*************************** 1. row ***************************

BackendId: 163038

Cluster: default_cluster

IP: 192.168.130.183

HostName: be1

HeartbeatPort: 9050

BePort: 9060

HttpPort: 8040

BrpcPort: 8060

LastStartTime: 2021-11-21 13:56:47

LastHeartbeat: 2021-11-21 14:58:35

Alive: true

SystemDecommissioned: false

ClusterDecommissioned: false

TabletNum: 5

DataUsedCapacity: .000

AvailCapacity: 33.451 GB

TotalCapacity: 36.974 GB

UsedPct: 9.53 %

MaxDiskUsedPct: 9.53 %

ErrMsg:

Version: 1.19.1-65e87c3

Status: {"lastSuccessReportTabletsTime":"2021-11-21 14:57:48"}

DataTotalCapacity: 33.451 GB

DataUsedPct: 0.00 %

*************************** 2. row ***************************

BackendId: 163066

Cluster: default_cluster

IP: 192.168.130.36

HostName: be2

HeartbeatPort: 9050

BePort: 9060

HttpPort: 8040

BrpcPort: 8060

LastStartTime: 2021-11-21 14:56:34

LastHeartbeat: 2021-11-21 14:58:35

Alive: true

SystemDecommissioned: false

ClusterDecommissioned: false

TabletNum: 5

DataUsedCapacity: .000

AvailCapacity: 33.452 GB

TotalCapacity: 36.974 GB

UsedPct: 9.53 %

MaxDiskUsedPct: 9.53 %

ErrMsg:

Version: 1.19.1-65e87c3

Status: {"lastSuccessReportTabletsTime":"2021-11-21 14:58:35"}

DataTotalCapacity: 33.452 GB

DataUsedPct: 0.00 %

*************************** 3. row ***************************

BackendId: 163072

Cluster: default_cluster

IP: 192.168.130.149

HostName: be3

HeartbeatPort: 9050

BePort: 9060

HttpPort: 8040

BrpcPort: 8060

LastStartTime: 2021-11-21 14:58:15

LastHeartbeat: 2021-11-21 14:58:35

Alive: true

SystemDecommissioned: false

ClusterDecommissioned: false

TabletNum: 3

DataUsedCapacity: .000

AvailCapacity: 33.521 GB

TotalCapacity: 36.974 GB

UsedPct: 9.34 %

MaxDiskUsedPct: 9.34 %

ErrMsg:

Version: 1.19.1-65e87c3

Status: {"lastSuccessReportTabletsTime":"2021-11-21 14:58:16"}

DataTotalCapacity: 33.521 GB

DataUsedPct: 0.00 %

如果isAlive为true,则说明BE正常接入集群。如果BE没有正常接入集群,请查看log目录下的be.WARNING日志文件确定原因。

至此,安装完成。

参数设置

- Swappiness

关闭交换区,消除交换内存到虚拟内存时对性能的扰动。

echo 0 | sudo tee /proc/sys/vm/swappiness

- Compaction相关

当使用聚合表或更新模型,导入数据比较快的时候,可在配置文件 be.conf 中修改下列参数以加速compaction。

cumulative_compaction_num_threads_per_disk = 4

base_compaction_num_threads_per_disk = 2

cumulative_compaction_check_interval_seconds = 2

- 并行度

在客户端执行命令,修改StarRocks的并行度(类似clickhouse set max_threads= 8)。并行度可以设置为当前机器CPU核数的一半。

set global parallel_fragment_exec_instance_num = 8;

使用MySQL客户端访问StarRocks

Root用户登录

使用MySQL客户端连接某一个FE实例的query_port(9030), StarRocks内置root用户,密码默认为空:

mysql -h fe_host -P9030 -u root

清理环境:

mysql > drop database if exists example_db;

mysql > drop user test;

创建新用户

通过下面的命令创建一个普通用户:

mysql > create user 'test_xxx' identified by 'xxx123456';

创建数据库

StarRocks中root账户才有权建立数据库,使用root用户登录,建立example_db数据库:

mysql > create database test_xxx_db;

数据库创建完成之后,可以通过show databases查看数据库信息:

MySQL [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| _statistics_ |

| information_schema |

| test_xxx_db |

+--------------------+

3 rows in set (0.00 sec)

information_schema是为了兼容mysql协议而存在,实际中信息可能不是很准确,所以关于具体数据库的信息建议通过直接查询相应数据库而获得。

账户授权

example_db创建完成之后,可以通过root账户example_db读写权限授权给test账户,授权之后采用test账户登录就可以操作example_db数据库了:

mysql > grant all on test_xxx_db to test_xxx;

退出root账户,使用test登录StarRocks集群:

mysql > exit

mysql -h 127.0.0.1 -P9030 -utest_xxx -pxxx123456

建表

StarRocks支持支持单分区和复合分区两种建表方式。

在复合分区中:

- 第一级称为Partition,即分区。用户可以指定某一维度列作为分区列(当前只支持整型和时间类型的列),并指定每个分区的取值范围。

- 第二级称为Distribution,即分桶。用户可以指定某几个维度列(或不指定,即所有KEY列)以及桶数对数据进行HASH分布。

以下场景推荐使用复合分区:

- 有时间维度或类似带有有序值的维度:可以以这类维度列作为分区列。分区粒度可以根据导入频次、分区数据量等进行评估。

- 历史数据删除需求:如有删除历史数据的需求(比如仅保留最近N 天的数据)。使用复合分区,可以通过删除历史分区来达到目的。也可以通过在指定分区内发送DELETE语句进行数据删除。

- 解决数据倾斜问题:每个分区可以单独指定分桶数量。如按天分区,当每天的数据量差异很大时,可以通过指定分区的分桶数,合理划分不同分区的数据,分桶列建议选择区分度大的列。

用户也可以不使用复合分区,即使用单分区。则数据只做HASH分布。

下面分别演示两种分区的建表语句:

- 首先切换数据库:mysql > use test_xxx_db;

- 建立单分区表建立一个名字为table1的逻辑表。使用全hash分桶,分桶列为siteid,桶数为10。这个表的schema如下:

- siteid:类型是INT(4字节), 默认值为10

- city_code:类型是SMALLINT(2字节)

- username:类型是VARCHAR, 最大长度为32, 默认值为空字符串

- pv:类型是BIGINT(8字节), 默认值是0; 这是一个指标列, StarRocks内部会对指标列做聚合操作, 这个列的聚合方法是求和(SUM)。这里采用了聚合模型,除此之外StarRocks还支持明细模型和更新模型,具体参考数据模型介绍。

建表语句如下:

mysql >

CREATE TABLE table1

(

siteid INT DEFAULT '10',

citycode SMALLINT,

username VARCHAR(32) DEFAULT '',

pv BIGINT SUM DEFAULT '0'

)

AGGREGATE KEY(siteid, citycode, username)

DISTRIBUTED BY HASH(siteid) BUCKETS 10

PROPERTIES("replication_num" = "1");

- 建立复合分区表

建立一个名字为table2的逻辑表。这个表的 schema 如下:

- event_day:类型是DATE,无默认值

- siteid:类型是INT(4字节), 默认值为10

- city_code:类型是SMALLINT(2字节)

- username:类型是VARCHAR, 最大长度为32, 默认值为空字符串

- pv:类型是BIGINT(8字节), 默认值是0; 这是一个指标列, StarRocks 内部会对指标列做聚合操作, 这个列的聚合方法是求和(SUM)

我们使用event_day列作为分区列,建立3个分区: p1, p2, p3

- p1:范围为 [最小值, 2017-06-30)

- p2:范围为 [2017-06-30, 2017-07-31)

- p3:范围为 [2017-07-31, 2017-08-31)

每个分区使用siteid进行哈希分桶,桶数为10。

建表语句如下:

CREATE TABLE table2

(

event_day DATE,

siteid INT DEFAULT '10',

citycode SMALLINT,

username VARCHAR(32) DEFAULT '',

pv BIGINT SUM DEFAULT '0'

)

AGGREGATE KEY(event_day, siteid, citycode, username)

PARTITION BY RANGE(event_day)

(

PARTITION p1 VALUES LESS THAN ('2017-06-30'),

PARTITION p2 VALUES LESS THAN ('2017-07-31'),

PARTITION p3 VALUES LESS THAN ('2017-08-31')

)

DISTRIBUTED BY HASH(siteid) BUCKETS 10

PROPERTIES("replication_num" = "1");

表建完之后,可以查看example_db中表的信息:

mysql> show tables;

+-------------------------+

| Tables_in_example_db |

+-------------------------+

| table1 |

| table2 |

+-------------------------+

2 rows in set (0.01 sec)

<br/>

mysql> desc table1;

+----------+-------------+------+-------+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+----------+-------------+------+-------+---------+-------+

| siteid | int(11) | Yes | true | 10 | |

| citycode | smallint(6) | Yes | true | N/A | |

| username | varchar(32) | Yes | true | | |

| pv | bigint(20) | Yes | false | 0 | SUM |

+----------+-------------+------+-------+---------+-------+

4 rows in set (0.00 sec)

<br/>

mysql> desc table2;

+-----------+-------------+------+-------+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+-----------+-------------+------+-------+---------+-------+

| event_day | date | Yes | true | N/A | |

| siteid | int(11) | Yes | true | 10 | |

| citycode | smallint(6) | Yes | true | N/A | |

| username | varchar(32) | Yes | true | | |

| pv | bigint(20) | Yes | false | 0 | SUM |

+-----------+-------------+------+-------+---------+-------+

5 rows in set (0.00 sec)

MySQL [(none)]> use test_xxx_db;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MySQL [test_xxx_db]> insert into table1 values(1,3708,'zhaop',0);

Query OK, 1 row affected (0.20 sec)

{'label':'insert_d7aee4d4-52a4-11ec-be98-fa163e7a663b', 'status':'VISIBLE', 'txnId':'2005'}

MySQL [test_xxx_db]> select * from table1;

+--------+----------+----------+------+

| siteid | citycode | username | pv |

+--------+----------+----------+------+

| 1 | 3708 | zhaop | 0 |

+--------+----------+----------+------+

1 row in set (0.03 sec)

导入数据

curl --location-trusted -u test_xxx:xxx123456 -T table1_data -H "label: table1_20211121" \

-H "column_separator:," \

http://127.0.0.1:8030/api/test_xxx_db/table1/_stream_load

## 报错

curl: Can't open 'table1_data'!

curl: try 'curl --help' or 'curl --manual' for more information

问题

- 执行sql报错

MySQL [test_xxx_db]> select * from table1;

ERROR 1064 (HY000): Could not initialize class com.starrocks.rpc.BackendServiceProxy

后台日志:

2021-11-21 16:03:49,835 WARN (starrocks-mysql-nio-pool-31|379) [StmtExecutor.execute():456] execute Exception, sql select * from table1

java.lang.NoClassDefFoundError: Could not initialize class com.starrocks.rpc.BackendServiceProxy

at com.starrocks.qe.Coordinator$BackendExecState.execRemoteFragmentAsync(Coordinator.java:1695) ~[starrocks-fe.jar:?]

at com.starrocks.qe.Coordinator.exec(Coordinator.java:522) ~[starrocks-fe.jar:?]

at com.starrocks.qe.StmtExecutor.handleQueryStmt(StmtExecutor.java:771) ~[starrocks-fe.jar:?]

at com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:371) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:248) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:397) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:633) ~[starrocks-fe.jar:?]

at com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$480(ReadListener.java:54) ~[starrocks-fe.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_312]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_312]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_312]

解决方案:openjdk改为

[root@fe1 jre-1.8.0-openjdk]# java -version

openjdk version "1.8.0_312"

OpenJDK Runtime Environment (build 1.8.0_312-b07)

OpenJDK 64-Bit Server VM (build 25.312-b07, mixed mode)

# 修改为

[root@fe1 java]# java -version

java version "1.8.0_192"

Java(TM) SE Runtime Environment (build 1.8.0_192-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.192-b12, mixed mod

- BE启动失败,java.net.NoRouteToHostException: No route to host (Host unreachable)

# 报错信息

W1128 23:13:56.493264 24083 utils.cpp:90] Fail to get master client from cache. host= port=0 code=THRIFT_RPC_ERROR

# 查看防火墙状态

[root@be1 be]# systemctl status firewalld

# 如已开启,需关闭防火墙,防止网络不通,导致BE和FE无法连接

[root@be1 be]# systemctl stop firewalld

至此,starRocks可以进行正常的增删改查操作。

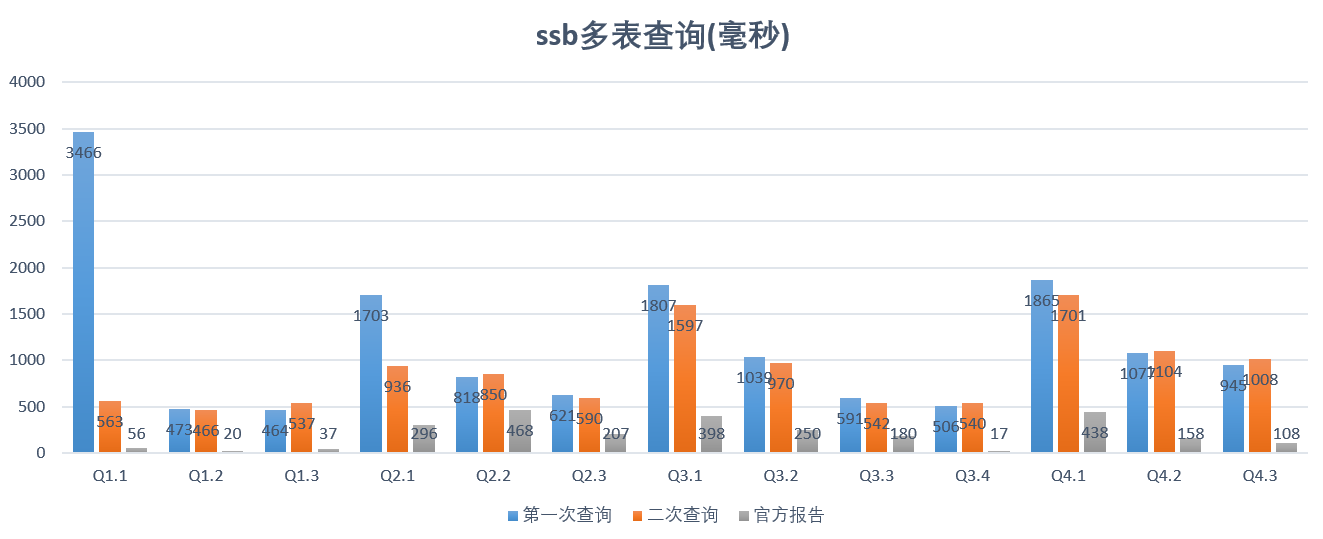

性能测试

共执行了4类场景,13条查询语句,分别为单表查询和多表查询,结果为毫秒,并发为1。

数据创建

下载ssb-poc工具包并编译

mkdir poc

wget https://starrocks-public.oss-cn-zhangjiakou.aliyuncs.com/ssb-poc-0.9.2.zip

unzip ssb-poc-0.9.2.zip

cd ssb-poc

make && make install

# 编译成功之后,可以看到output目录

[root@fe1 ssb-poc]# cd output/

[root@fe1 output]# ll

total 0

drwxr-xr-x. 2 root root 197 Nov 28 21:01 bin

drwxr-xr-x. 2 root root 37 Nov 28 21:01 conf

drwxr-xr-x. 3 root root 22 Nov 28 21:01 lib

drwxr-xr-x. 4 root root 39 Nov 28 21:01 share

所有相关工具都会安装到output目录

生成数据

bin/gen-ssb.sh 30 data_dir

这里会在data_dir目录下生成30GB规模的数据

导入数据

安装python3

压测脚本需要使用python3,先安装python3,参考https://www.jianshu.com/p/a916a22de3eb。详细步骤不再赘述。

确定测试目录

[root@fe1 output]# pwd

/root/starrocks/poc/ssb-poc/output

修改配置文件conf/doris.conf

[doris]

# for mysql cmd

mysql_host: fe1

mysql_port: 9030

mysql_user: root

mysql_password:

doris_db: ssb

# cluster ports

http_port: 8030

be_heartbeat_port: 9050

broker_port: 8000

...

执行建表脚本

bin/create_db_table.sh ddl_100

完成后我们在ssb下创建了6张表:lineorder, supplier, dates, customer, part, lineorder_flat

MySQL [ssb]> show tables;

+----------------+

| Tables_in_ssb |

+----------------+

| customer |

| dates |

| lineorder |

| lineorder_flat |

| part |

| supplier |

+----------------+

6 rows in set (0.00 sec)

数据导入

通过stream load导入数据

bin/stream_load.sh data_dir

data_dir是之前生成的数据目录,最大表数据量1.8亿

| 表名 | 数据量 | 备注 |

|---|---|---|

| lineorder | 179998372 | SSB商品订单表 |

| supplier | 60000 | SSB客户表 |

| dates | 2556 | SSB 零部件表 |

| customer | 900000 | SSB 供应商表 |

| part | 1000000 | 日期表 |

| lineorder_flat | 54675488 | 宽表 |

测试单表查询

[root@fe1 output]# time bin/benchmark.sh -p -d ssb

------ dataset: ssb, concurrency: 1 ------

sql\time(ms)\parallel_num 1

q1 3466.0

q2 473.0

q3 464.0

q4 1703.0

q5 818.0

q6 621.0

q7 1807.0

q8 1039.0

q9 591.0

q10 506.0

q11 1865.0

q12 1077.0

q13 945.0

# 二次执行

------ dataset: ssb, concurrency: 1 ------

sql\time(ms)\parallel_num 1

q1 563.0

q2 466.0

q3 537.0

q4 936.0

q5 850.0

q6 590.0

q7 1597.0

q8 970.0

q9 542.0

q10 540.0

q11 1701.0

q12 1104.0

q13 1008.0

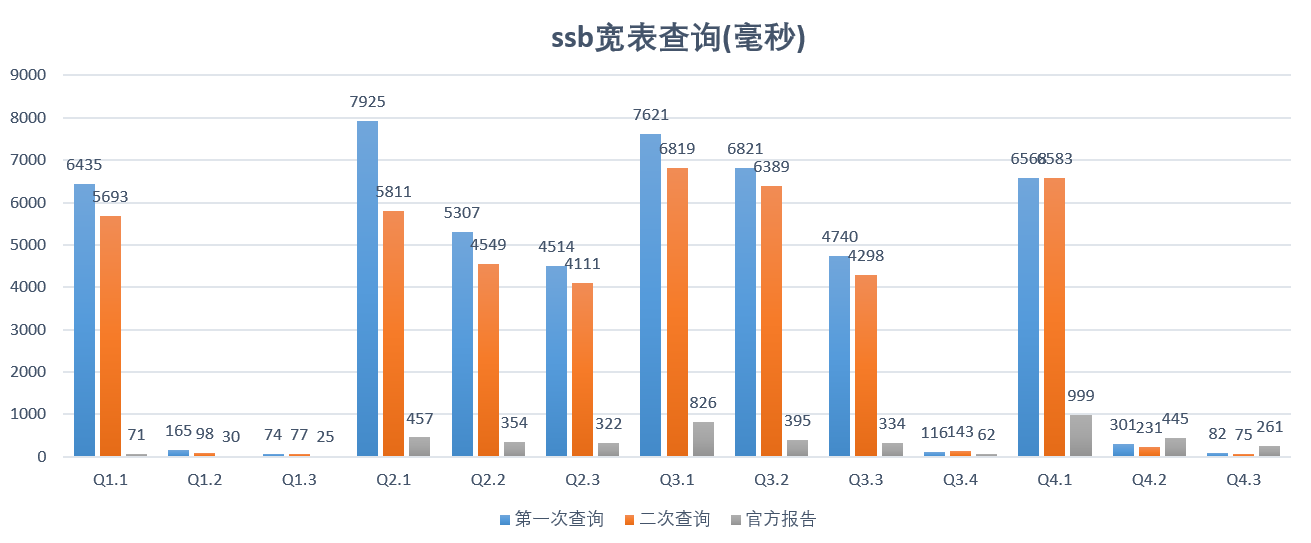

测试ssb宽表查询

# 生成宽表数据

[root@fe1 output]# bin/flat_insert.sh

sql: ssb_flat_insert start

sql: ssb_flat_insert. flat insert error, msg: (1064, 'index channel has intoleralbe failure')

# 测试宽表性能

time bin/benchmark.sh -p -d ssb-flat

[root@fe1 output]# bin/benchmark.sh -p -d ssb-flat

------ dataset: ssb-flat, concurrency: 1 ------

sql\time(ms)\parallel_num 1

q1 6435.0

q2 165.0

q3 74.0

q4 7925.0

q5 5307.0

q6 4514.0

q7 7621.0

q8 6821.0

q9 4740.0

q10 116.0

q11 6568.0

q12 301.0

q13 82.0

[root@fe1 output]# time bin/benchmark.sh -p -d ssb-flat

------ dataset: ssb-flat, concurrency: 1 ------

sql\time(ms)\parallel_num 1

q1 5693.0

q2 98.0

q3 77.0

q4 5811.0

q5 4549.0

q6 4111.0

q7 6819.0

q8 6389.0

q9 4298.0

q10 143.0

q11 6583.0

q12 231.0

q13 75.0

real 0m51.565s

user 0m0.192s

sys 0m0.152s

结论

在1.8亿条单表记录,5500万条宽表记录情况下,通过SSB测试,多表join查询性能较高,实测性能和官方提供的测试报告有所差距。主要原因可能有以下几点:

- 测试使用为虚机,配置低(官方使用16核64G ESSD高效云盘 10Gbits/s网络带宽)

- 未经过参数调优

测试SQL附录

单表测试SQL

--Q1.1

SELECT sum(lo_extendedprice * lo_discount) AS `revenue`

FROM lineorder_flat

WHERE lo_orderdate >= '1993-01-01' and lo_orderdate <= '1993-12-31' AND lo_discount BETWEEN 1 AND 3 AND lo_quantity < 25;

--Q1.2

SELECT sum(lo_extendedprice * lo_discount) AS revenue FROM lineorder_flat

WHERE lo_orderdate >= '1994-01-01' and lo_orderdate <= '1994-01-31' AND lo_discount BETWEEN 4 AND 6 AND lo_quantity BETWEEN 26 AND 35;

--Q1.3

SELECT sum(lo_extendedprice * lo_discount) AS revenue

FROM lineorder_flat

WHERE weekofyear(lo_orderdate) = 6 AND lo_orderdate >= '1994-01-01' and lo_orderdate <= '1994-12-31'

AND lo_discount BETWEEN 5 AND 7 AND lo_quantity BETWEEN 26 AND 35;

--Q2.1

SELECT sum(lo_revenue), year(lo_orderdate) AS year, p_brand

FROM lineorder_flat

WHERE p_category = 'MFGR#12' AND s_region = 'AMERICA'

GROUP BY year, p_brand

ORDER BY year, p_brand;

--Q2.2

SELECT

sum(lo_revenue), year(lo_orderdate) AS year, p_brand

FROM lineorder_flat

WHERE p_brand >= 'MFGR#2221' AND p_brand <= 'MFGR#2228' AND s_region = 'ASIA'

GROUP BY year, p_brand

ORDER BY year, p_brand;

--Q2.3

SELECT sum(lo_revenue), year(lo_orderdate) AS year, p_brand

FROM lineorder_flat

WHERE p_brand = 'MFGR#2239' AND s_region = 'EUROPE'

GROUP BY year, p_brand

ORDER BY year, p_brand;

--Q3.1

SELECT c_nation, s_nation, year(lo_orderdate) AS year, sum(lo_revenue) AS revenue FROM lineorder_flat

WHERE c_region = 'ASIA' AND s_region = 'ASIA' AND lo_orderdate >= '1992-01-01' AND lo_orderdate <= '1997-12-31'

GROUP BY c_nation, s_nation, year

ORDER BY year ASC, revenue DESC;

--Q3.2

SELECT c_city, s_city, year(lo_orderdate) AS year, sum(lo_revenue) AS revenue

FROM lineorder_flat

WHERE c_nation = 'UNITED STATES' AND s_nation = 'UNITED STATES' AND lo_orderdate >= '1992-01-01' AND lo_orderdate <= '1997-12-31'

GROUP BY c_city, s_city, year

ORDER BY year ASC, revenue DESC;

--Q3.3

SELECT c_city, s_city, year(lo_orderdate) AS year, sum(lo_revenue) AS revenue

FROM lineorder_flat

WHERE c_city in ( 'UNITED KI1' ,'UNITED KI5') AND s_city in ( 'UNITED KI1' ,'UNITED KI5') AND lo_orderdate >= '1992-01-01' AND lo_orderdate <= '1997-12-31'

GROUP BY c_city, s_city, year

ORDER BY year ASC, revenue DESC;

--Q3.4

SELECT c_city, s_city, year(lo_orderdate) AS year, sum(lo_revenue) AS revenue

FROM lineorder_flat

WHERE c_city in ('UNITED KI1', 'UNITED KI5') AND s_city in ( 'UNITED KI1', 'UNITED KI5') AND lo_orderdate >= '1997-12-01' AND lo_orderdate <= '1997-12-31'

GROUP BY c_city, s_city, year

ORDER BY year ASC, revenue DESC;

--Q4.1

SELECT year(lo_orderdate) AS year, c_nation, sum(lo_revenue - lo_supplycost) AS profit FROM lineorder_flat

WHERE c_region = 'AMERICA' AND s_region = 'AMERICA' AND p_mfgr in ( 'MFGR#1' , 'MFGR#2')

GROUP BY year, c_nation

ORDER BY year ASC, c_nation ASC;

--Q4.2

SELECT year(lo_orderdate) AS year,

s_nation, p_category, sum(lo_revenue - lo_supplycost) AS profit

FROM lineorder_flat

WHERE c_region = 'AMERICA' AND s_region = 'AMERICA' AND lo_orderdate >= '1997-01-01' and lo_orderdate <= '1998-12-31' AND p_mfgr in ( 'MFGR#1' , 'MFGR#2')

GROUP BY year, s_nation, p_category

ORDER BY year ASC, s_nation ASC, p_category ASC;

--Q4.3

SELECT year(lo_orderdate) AS year, s_city, p_brand,

sum(lo_revenue - lo_supplycost) AS profit

FROM lineorder_flat

WHERE s_nation = 'UNITED STATES' AND lo_orderdate >= '1997-01-01' and lo_orderdate <= '1998-12-31' AND p_category = 'MFGR#14'

GROUP BY year, s_city, p_brand

ORDER BY year ASC, s_city ASC, p_brand ASC;

多表测试SQL

--Q1.1

select sum(lo_revenue) as revenue

from lineorder join dates on lo_orderdate = d_datekey

where d_year = 1993 and lo_discount between 1 and 3 and lo_quantity < 25;

--Q1.2

select sum(lo_revenue) as revenue

from lineorder

join dates on lo_orderdate = d_datekey

where d_yearmonthnum = 199401

and lo_discount between 4 and 6

and lo_quantity between 26 and 35;

--Q1.3

select sum(lo_revenue) as revenue

from lineorder

join dates on lo_orderdate = d_datekey

where d_weeknuminyear = 6 and d_year = 1994

and lo_discount between 5 and 7

and lo_quantity between 26 and 35;

--Q2.1

select sum(lo_revenue) as lo_revenue, d_year, p_brand

from lineorder

inner join dates on lo_orderdate = d_datekey

join part on lo_partkey = p_partkey

join supplier on lo_suppkey = s_suppkey

where p_category = 'MFGR#12' and s_region = 'AMERICA'

group by d_year, p_brand

order by d_year, p_brand;

--Q2.2

select sum(lo_revenue) as lo_revenue, d_year, p_brand

from lineorder

join dates on lo_orderdate = d_datekey

join part on lo_partkey = p_partkey

join supplier on lo_suppkey = s_suppkey

where p_brand between 'MFGR#2221' and 'MFGR#2228' and s_region = 'ASIA'

group by d_year, p_brand

order by d_year, p_brand;

--Q2.3

select sum(lo_revenue) as lo_revenue, d_year, p_brand

from lineorder

join dates on lo_orderdate = d_datekey

join part on lo_partkey = p_partkey

join supplier on lo_suppkey = s_suppkey

where p_brand = 'MFGR#2239' and s_region = 'EUROPE'

group by d_year, p_brand

order by d_year, p_brand;

--Q3.1

select c_nation, s_nation, d_year, sum(lo_revenue) as lo_revenue

from lineorder

join dates on lo_orderdate = d_datekey

join customer on lo_custkey = c_custkey

join supplier on lo_suppkey = s_suppkey

where c_region = 'ASIA' and s_region = 'ASIA'and d_year >= 1992 and d_year <= 1997

group by c_nation, s_nation, d_year

order by d_year asc, lo_revenue desc;

--Q3.2

select c_city, s_city, d_year, sum(lo_revenue) as lo_revenue

from lineorder

join dates on lo_orderdate = d_datekey

join customer on lo_custkey = c_custkey

join supplier on lo_suppkey = s_suppkey

where c_nation = 'UNITED STATES' and s_nation = 'UNITED STATES'

and d_year >= 1992 and d_year <= 1997

group by c_city, s_city, d_year

order by d_year asc, lo_revenue desc;

--Q3.3

select c_city, s_city, d_year, sum(lo_revenue) as lo_revenue

from lineorder

join dates on lo_orderdate = d_datekey

join customer on lo_custkey = c_custkey

join supplier on lo_suppkey = s_suppkey

where (c_city='UNITED KI1' or c_city='UNITED KI5')

and (s_city='UNITED KI1' or s_city='UNITED KI5')

and d_year >= 1992 and d_year <= 1997

group by c_city, s_city, d_year

order by d_year asc, lo_revenue desc;

--Q3.4

select c_city, s_city, d_year, sum(lo_revenue) as lo_revenue

from lineorder

join dates on lo_orderdate = d_datekey

join customer on lo_custkey = c_custkey

join supplier on lo_suppkey = s_suppkey

where (c_city='UNITED KI1' or c_city='UNITED KI5') and (s_city='UNITED KI1' or s_city='UNITED KI5') and d_yearmonth

= 'Dec1997'

group by c_city, s_city, d_year

order by d_year asc, lo_revenue desc;

--Q4.1

select d_year, c_nation, sum(lo_revenue) - sum(lo_supplycost) as profit

from lineorder

join dates on lo_orderdate = d_datekey

join customer on lo_custkey = c_custkey

join supplier on lo_suppkey = s_suppkey

join part on lo_partkey = p_partkey

where c_region = 'AMERICA' and s_region = 'AMERICA' and (p_mfgr = 'MFGR#1' or p_mfgr = 'MFGR#2')

group by d_year, c_nation

order by d_year, c_nation;

--Q4.2

select d_year, s_nation, p_category, sum(lo_revenue) - sum(lo_supplycost) as profit

from lineorder

join dates on lo_orderdate = d_datekey

join customer on lo_custkey = c_custkey

join supplier on lo_suppkey = s_suppkey

join part on lo_partkey = p_partkey

where c_region = 'AMERICA'and s_region = 'AMERICA'

and (d_year = 1997 or d_year = 1998)

and (p_mfgr = 'MFGR#1' or p_mfgr = 'MFGR#2')

group by d_year, s_nation, p_category

order by d_year, s_nation, p_category;

--Q4.3

select d_year, s_city, p_brand, sum(lo_revenue) - sum(lo_supplycost) as profit

from lineorder

join dates on lo_orderdate = d_datekey

join customer on lo_custkey = c_custkey

join supplier on lo_suppkey = s_suppkey

join part on lo_partkey = p_partkey

where c_region = 'AMERICA'and s_nation = 'UNITED STATES'

and (d_year = 1997 or d_year = 1998)

and p_category = 'MFGR#14'

group by d_year, s_city, p_brand

order by d_year, s_city, p_brand;