#################

TiDB Lightning is mainly used to import SQL files and CSV files.

Installing it with tiup is the preferred route:

root@shell>> tiup install tidb-lightning

I. Download and install:

Address: https://docs.pingcap.com/zh/tidb/dev/download-ecosystem-tools#tidb-lightning

# The download URL follows this format: https://download.pingcap.org/tidb-toolkit-{version}-linux-amd64.tar.gz

# where version takes the form v5.2.1, for example:

shell>> curl -o tidb-toolkit-v5.2.1-linux-amd64.tar.gz https://download.pingcap.org/tidb-toolkit-v5.2.1-linux-amd64.tar.gz
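The URL convention above can be wrapped in a small helper so the version is typed only once. This is a minimal sketch: the function name `toolkit_url` is hypothetical, and the version check only enforces the rough `v5.2.1` shape described in this note.

```shell
# Build the toolkit download URL for a given version,
# using the URL format quoted in this note.
toolkit_url() {
    version="$1"   # expected form: v5.2.1
    case "$version" in
        v[0-9]*.[0-9]*.[0-9]*) ;;
        *) echo "version must look like v5.2.1, got: $version" >&2; return 1 ;;
    esac
    echo "https://download.pingcap.org/tidb-toolkit-${version}-linux-amd64.tar.gz"
}

toolkit_url v5.2.1
# To download, something like: curl -fL -O "$(toolkit_url v5.2.1)"
```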

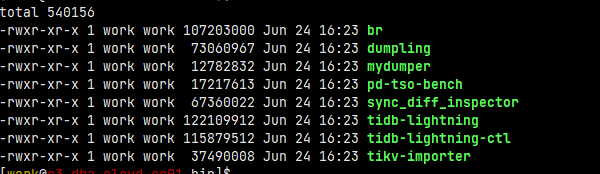

# Extract the archive:

shell>> tar -xzvf tidb-toolkit-v5.2.1-linux-amd64.tar.gz

II. Configure the import:

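Suppose a large number of SQL files in the /home/work/sql_dir directory on machine 10.10.10.40 need to be imported into the TiDB cluster reachable at 10.10.10.10:4000.

Target cluster information:

tidb:

10.10.10.10:4000

10.10.10.11:4000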

pd:

10.10.10.20:2379

10.10.10.21:2379

10.10.10.22:2379

tikv:

10.10.10.30:20160

10.10.10.31:20160

10.10.10.32:20160

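[work@10.10.10.40 bin]$ cat tidb-lightning.toml

    [lightning]
    # Logging
    level = "info"
    file = "tidb-lightning.log"

    [tikv-importer]
    # Backend to use: "local" for the Local-backend, or "tidb" for the TiDB-backend
    backend = "tidb"
    # Temporary location for the sorted key-value pairs; the path must be an empty directory, so any empty scratch directory will do
    # sorted-kv-dir = "/home/work/tmp/sorted-kv-dir"

    [mydumper]
    # Source data directory, i.e. the directory that holds your SQL or CSV files
    data-source-dir = "/home/work/sql_dir/"
    # Wildcard filter rules. The default rule filters out every table in the system databases
    # mysql, sys, INFORMATION_SCHEMA, PERFORMANCE_SCHEMA, METRICS_SCHEMA and INSPECTION_SCHEMA.
    # If this item is not configured, importing system tables fails with a "schema not found" error.
    # filter = ['*.*', '!mysql.*', '!sys.*', '!INFORMATION_SCHEMA.*', '!PERFORMANCE_SCHEMA.*', '!METRICS_SCHEMA.*', '!INSPECTION_SCHEMA.*']

    [tidb]
    # Entry point of a tidb component in the cluster: the four basics host, port, user and password
    host = "10.10.10.10"
    port = 4000
    user = "root"
    password = "123456"
    # Table schema information is fetched from TiDB's status port
    #status-port = 10080
    # Address of any one PD instance in the cluster; several PD addresses can also be given
    pd-addr = "10.10.10.20:2379"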
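Before starting the import, it can help to confirm that `data-source-dir` really points at a directory containing SQL or CSV files. The following is only a sketch: both function names are hypothetical, and the config is read with a simple line match rather than a real TOML parser, so it assumes the value sits on one line in double quotes as in the config above.

```shell
# Read data-source-dir from a Lightning config file.
# Simple line-based match, not a TOML parser; commented-out lines are ignored.
data_source_dir() {
    sed -n 's/^[[:space:]]*data-source-dir[[:space:]]*=[[:space:]]*"\([^"]*\)".*$/\1/p' "$1" | head -n 1
}

# Count the .sql and .csv files found under a directory (recursively).
count_source_files() {
    echo $(( $(find "$1" -type f \( -name '*.sql' -o -name '*.csv' \) | wc -l) ))
}

# Usage (file name taken from this note):
#   dir=$(data_source_dir tidb-lightning.toml)
#   echo "$dir holds $(count_source_files "$dir") data files"
```

A count of 0 usually means the path in the config is wrong or the files have a different extension.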

III. Import the data:

work@shell>> ./tidb-lightning -config tidb-lightning.toml --check-requirements=false
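Once the command exits, the result can be checked from the log file named in the config. The sketch below assumes the completion message documented for TiDB Lightning, `the whole procedure completed`, appears in the last few lines of the log on success; the exact wording may differ between versions, and the function name `import_succeeded` is hypothetical.

```shell
# Return success if the last lines of the log contain the completion message.
# The message text is an assumption based on the TiDB Lightning documentation.
import_succeeded() {
    tail -n 5 "$1" | grep -q 'the whole procedure completed'
}

# Usage (log file name from the config in this note):
#   nohup ./tidb-lightning -config tidb-lightning.toml --check-requirements=false > nohup.out 2>&1 &
#   import_succeeded tidb-lightning.log && echo "import finished"
```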

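Issues:

1) The v5.1.0 tidb-lightning installed through tiup failed a pre-check, and its error message suggested --check-requirement=false. The flag actually has to be written as --check-requirements=false (with a trailing "s").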

    +---+-------------------------------------------------------------------------------------------+-------------+--------+
    | # | CHECK ITEM                                                                                | TYPE        | PASSED |
    +---+-------------------------------------------------------------------------------------------+-------------+--------+
    | 1 | Cluster is available                                                                      | critical    | true   |
    +---+-------------------------------------------------------------------------------------------+-------------+--------+
    | 2 | Cluster has no other loads                                                                | performance | true   |
    +---+-------------------------------------------------------------------------------------------+-------------+--------+
    | 3 | Lightning has the correct storage permission                                              | critical    | true   |
    +---+-------------------------------------------------------------------------------------------+-------------+--------+
    | 4 | Cluster resources are rich for this import task                                           | critical    | true   |
    +---+-------------------------------------------------------------------------------------------+-------------+--------+
    | 5 | large csv: event.event.000000000.sql file exists and it will slow down import performance | performance | false  |
    +---+-------------------------------------------------------------------------------------------+-------------+--------+
    | 6 | checkpoints are valid                                                                     | critical    | true   |
    +---+-------------------------------------------------------------------------------------------+-------------+--------+
    | 7 | table schemas are valid                                                                   | critical    | true   |
    +---+-------------------------------------------------------------------------------------------+-------------+--------+
    1 performance check failed
    Error: lightning pre check failed.please fix the check item and make check passed or set --check-requirement=false to avoid this check
    tidb lightning encountered error: lightning pre check failed.please fix the check item and make check passed or set --check-requirement=false to avoid this check
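The only failed item in this output is the performance check about a large data file. To see in advance which files might trigger it, the source directory can be scanned for oversized files. This is a sketch under stated assumptions: the function name `find_large_files` is hypothetical, the 256M default is an assumed cutoff rather than a value confirmed by this note, and the `M`/`k` size suffixes rely on GNU or BSD `find`.

```shell
# List .sql/.csv files larger than a threshold.
# The 256M default is an assumed cutoff, not a documented pre-check value.
find_large_files() {
    dir="$1"
    threshold="${2:-256M}"   # find(1) size spec, e.g. 256M or 1k
    find "$dir" -type f \( -name '*.sql' -o -name '*.csv' \) -size "+$threshold"
}

# Usage (directory from this note):
#   find_large_files /home/work/sql_dir
```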

Reference: https://githubmemory.com/repo/pingcap/tiup/issues/1474

################

####################