一。基于requests模块的cookie操作(session处理cookie)

cookie概念:当用户通过浏览器首次访问一个域名时,访问的web服务器会给客户端发送数据,以保持web服务器与客户端之间的状态保持,这些数据就是cookie。

cookie作用:我们在浏览器中,经常涉及到数据的交换,比如你登录邮箱,登录一个页面。我们经常会在此时设置30天内记住我,或者自动登录选项。那么它们是怎么记录信息的呢,答案就是今天的主角cookie了,Cookie是由HTTP服务器设置的,保存在浏览器中,但HTTP协议是一种无状态协议,在数据交换完毕后,服务器端和客户端的链接就会关闭,每次交换数据都需要建立新的链接。就像我们去超市买东西,没有积分卡的情况下,我们买完东西之后,超市没有我们的任何消费信息,但我们办了积分卡之后,超市就有了我们的消费信息。cookie就像是积分卡,可以保存积分,商品就是我们的信息,超市的系统就像服务器后台,http协议就是交易的过程。

import requests from lxml import etree headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36' } #创建一个会话对象:可以像requests模块一样发起请求。如果请求过程中会产生cookie的话,则cookie会被会话自动处理 s = requests.Session() first_url = 'https://xueqiu.com/' #请求过程中会产生cookie,cookie就会被存储到session对象中 s.get(url=first_url,headers=headers) url = 'https://xueqiu.com/v4/statuses/public_timeline_by_category.json?since_id=-1&max_id=-1&count=10&category=-1' json_obj = s.get(url=url,headers=headers).json() print(json_obj)

二。打码验证识别验证码实现模拟登陆

云打码使用方法:https://i.cnblogs.com/EditPosts.aspx?postid=10839009&update=1

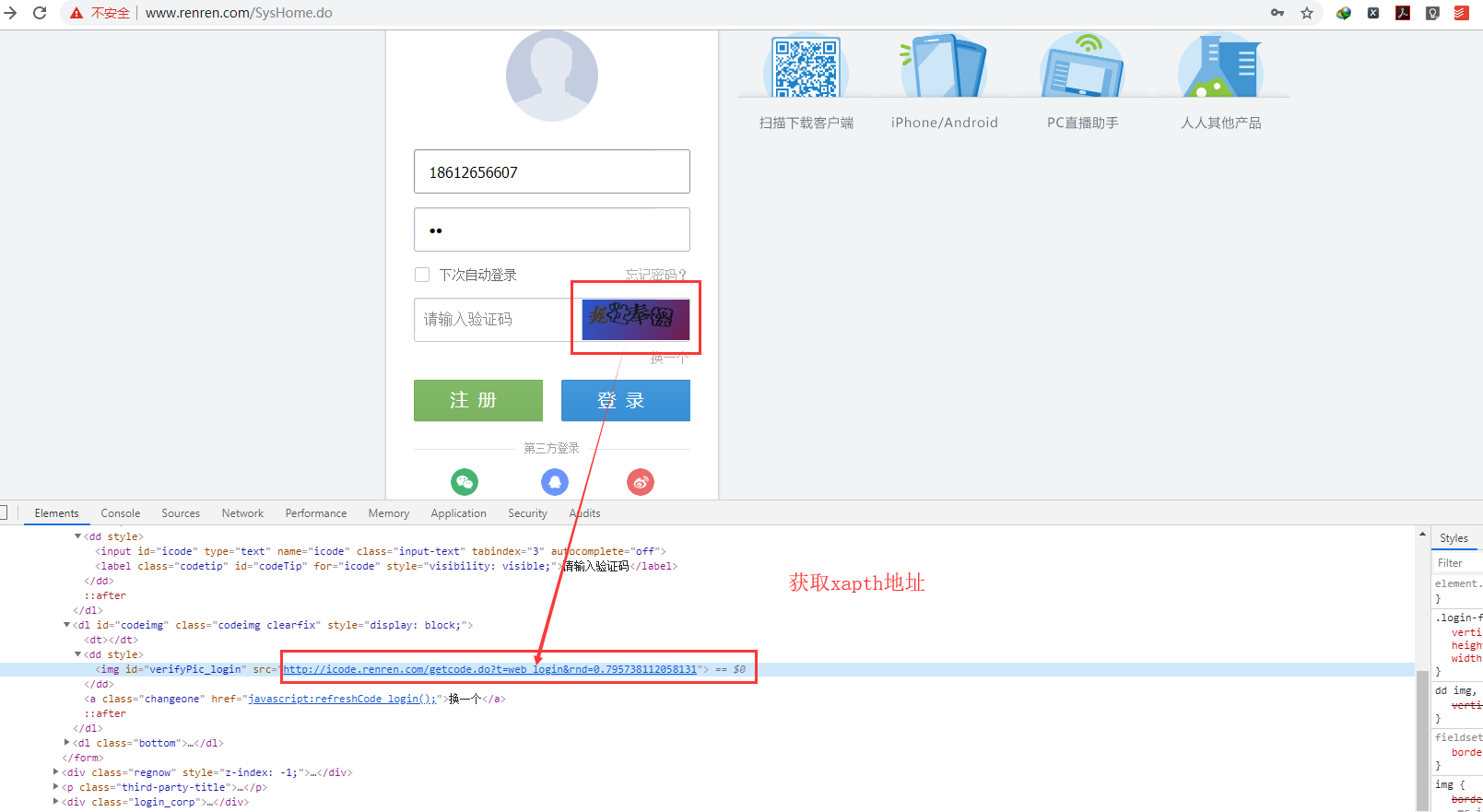

获取验证码图片:code_img_src = tree.xpath('//*[@id="verifyPic_login"]/@src')[0]

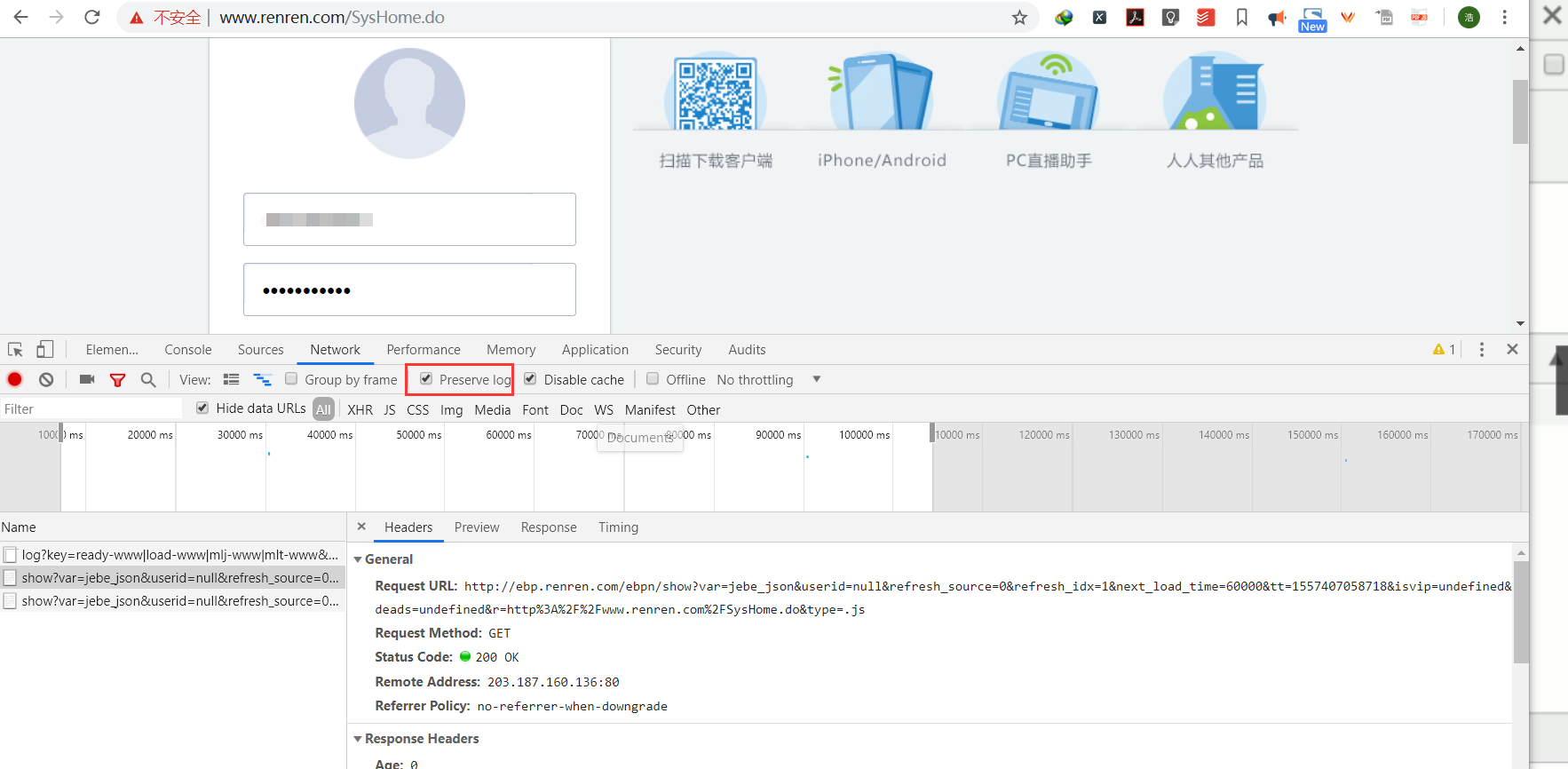

点击登录会进行页面跳转,Preserve log选项,这个选项是保留跳转与跳转之前所有记录

import http.client, mimetypes, urllib, json, time, requests ###################################################################### class YDMHttp: apiurl = 'http://api.yundama.com/api.php' username = '' password = '' appid = '' appkey = '' def __init__(self, username, password, appid, appkey): self.username = username self.password = password self.appid = str(appid) self.appkey = appkey def request(self, fields, files=[]): response = self.post_url(self.apiurl, fields, files) response = json.loads(response) return response def balance(self): data = {'method': 'balance', 'username': self.username, 'password': self.password, 'appid': self.appid, 'appkey': self.appkey} response = self.request(data) if (response): if (response['ret'] and response['ret'] < 0): return response['ret'] else: return response['balance'] else: return -9001 def login(self): data = {'method': 'login', 'username': self.username, 'password': self.password, 'appid': self.appid, 'appkey': self.appkey} response = self.request(data) if (response): if (response['ret'] and response['ret'] < 0): return response['ret'] else: return response['uid'] else: return -9001 def upload(self, filename, codetype, timeout): data = {'method': 'upload', 'username': self.username, 'password': self.password, 'appid': self.appid, 'appkey': self.appkey, 'codetype': str(codetype), 'timeout': str(timeout)} file = {'file': filename} response = self.request(data, file) if (response): if (response['ret'] and response['ret'] < 0): return response['ret'] else: return response['cid'] else: return -9001 def result(self, cid): data = {'method': 'result', 'username': self.username, 'password': self.password, 'appid': self.appid, 'appkey': self.appkey, 'cid': str(cid)} response = self.request(data) return response and response['text'] or '' def decode(self, filename, codetype, timeout): cid = self.upload(filename, codetype, timeout) if (cid > 0): for i in range(0, timeout): result = self.result(cid) if (result != ''): return cid, result else: time.sleep(1) return -3003, '' else: return cid, '' def report(self, cid): data = {'method': 'report', 'username': self.username, 'password': self.password, 'appid': self.appid, 'appkey': self.appkey, 'cid': str(cid), 'flag': '0'} response = self.request(data) if (response): return response['ret'] else: return -9001 def post_url(self, url, fields, files=[]): for key in files: files[key] = open(files[key], 'rb'); res = requests.post(url, files=files, data=fields) return res.text

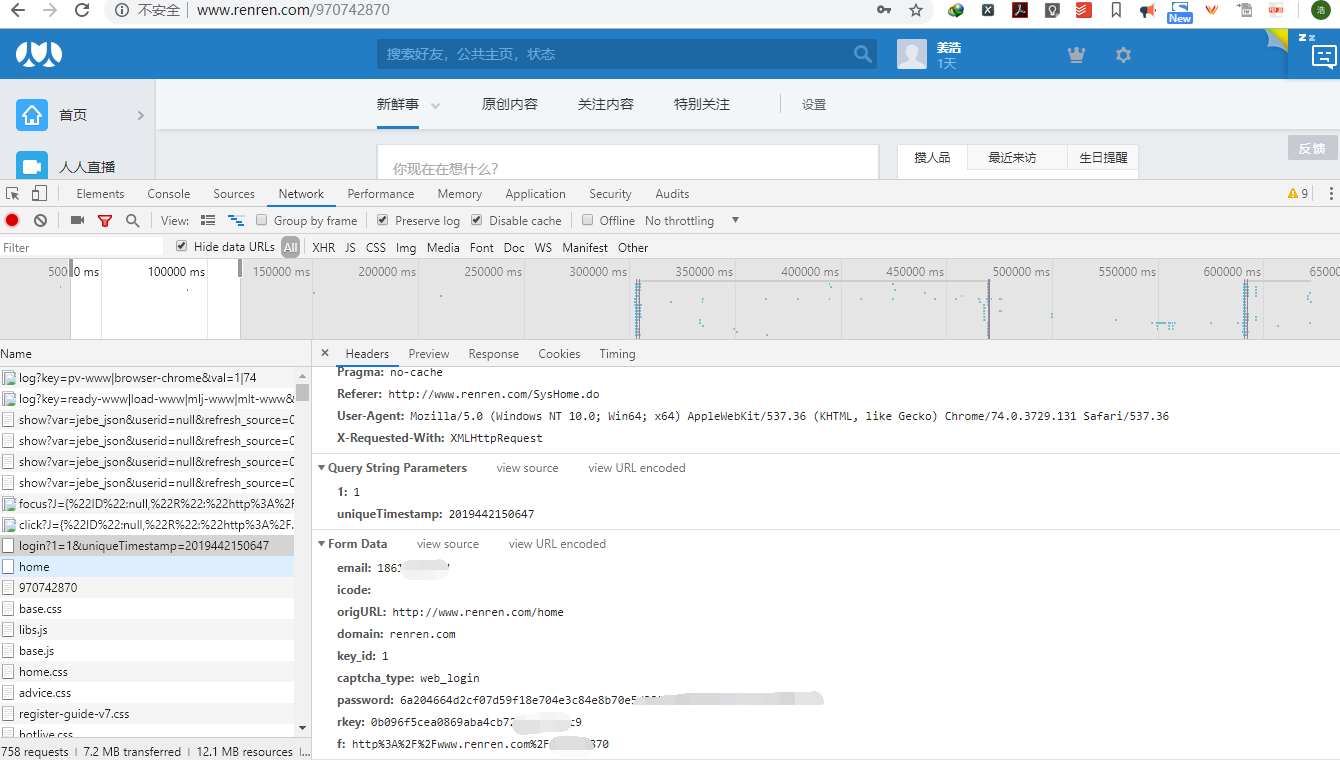

import requests from lxml import etree from YunCode import YDMHttp #该函数是用来返回验证码图片显示的数据值 def getCodeText(codeType,imgPath): result = None # 普通者用户名 username = '用户名' # 普通者密码 password = '密码' # 软件ID,开发者分成必要参数。登录开发者后台【我的软件】获得! appid = id # 软件密钥,开发者分成必要参数。登录开发者后台【我的软件】获得! appkey = '软件密钥' # 图片文件 filename = imgPath # 验证码类型,# 例:1004表示4位字母数字,不同类型收费不同。请准确填写,否则影响识别率。在此查询所有类型 http://www.yundama.com/price.html codetype = codeType # 超时时间,秒 timeout = 30 # 检查 if (username == 'username'): print('请设置好相关参数再测试') else: # 初始化 yundama = YDMHttp(username, password, appid, appkey) # 登陆云打码 uid = yundama.login(); print('uid: %s' % uid) # 查询余额 balance = yundama.balance(); print('balance: %s' % balance) # 开始识别,图片路径,验证码类型ID,超时时间(秒),识别结果 cid, result = yundama.decode(filename, codetype, timeout); print('cid: %s, result: %s' % (cid, result)) return result headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36' } #进行验证码的识别: #1.将验证码进行本地下载,将其提交给打码平台进行识别 url = 'http://www.renren.com/' page_text = requests.get(url=url,headers=headers).text #解析出验证码图片的url tree = etree.HTML(page_text) code_img_src = tree.xpath('//*[@id="verifyPic_login"]/@src')[0] code_img_data = requests.get(url=code_img_src,headers=headers).content with open('./code.jpg','wb') as fp: fp.write(code_img_data) #将本地保存好的验证码图片交给打码平台识别 code_text = getCodeText(2004,'./code.jpg') #模拟登录(发送post请求) post_url = 'http://www.renren.com/ajaxLogin/login?1=1&uniqueTimestamp=2019361852954' data = { 'email': 'www.zhangbowudi@qq.com', 'icode': code_text, 'origURL': 'http://www.renren.com/home', 'domain': 'renren.com', 'key_id': '1', 'captcha_type': 'web_login', 'password': '784601bfcb6b9e78d8519a3885c4a3de0aa7c3f597477e00d26a7aa6598e83bf', 'rkey': '00313a9752665df609d455d36edfbe94', 'f':'', } page_text = requests.post(url=post_url,headers=headers,data=data).text with open('./renren.html','w',encoding='utf-8') as fp: fp.write(page_text)

三。proxies参数设置请求代理ip

类型:http与https

#!/usr/bin/env python # -*- coding:utf-8 -*- import requests import random if __name__ == "__main__": # 不同浏览器的UA header_list = [ # 遨游 {"user-agent": "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)"}, # 火狐 {"user-agent": "Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1"}, # 谷歌 { "user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11"} ] # 不同的代理IP proxy_list = [ {"http": "111.206.6.101:80"}, {'http': '39.137.107.98:80'} ] # 随机获取UA和代理IP header = random.choice(header_list) proxy = random.choice(proxy_list) url = 'http://www.baidu.com/s?ie=UTF-8&wd=ip' # 参数3:设置代理 response = requests.get(url=url, headers=header, proxies=proxy) response.encoding = 'utf-8' with open('daili.html', 'wb') as fp: fp.write(response.content) # 切换成原来的IP requests.get(url, proxies={"http": ""})

四。基于multiprocessing.dummy线程池的数据爬取

安装fake-useragent库: pip install fake-useragent

需求:爬取梨视频的视频信息,并计算其爬取数据的耗时

import requests import random from lxml import etree import re from fake_useragent import UserAgent #随机生成UA模块 # 安装fake-useragent库:pip install fake-useragent url = 'http://www.pearvideo.com/category_1' # 随机产生UA,如果报错则可以添加如下参数: # ua = UserAgent(verify_ssl=False,use_cache_server=False).random # 禁用服务器缓存: # ua = UserAgent(use_cache_server=False) # 不缓存数据: # ua = UserAgent(cache=False) # 忽略ssl验证: # ua = UserAgent(verify_ssl=False) ua = UserAgent().random headers = { 'User-Agent': ua } # 获取首页页面数据 page_text = requests.get(url=url, headers=headers).text # 对获取的首页页面数据中的相关视频详情链接进行解析 tree = etree.HTML(page_text) li_list = tree.xpath('//div[@id="listvideoList"]/ul/li') detail_urls = [] for li in li_list: detail_url = 'http://www.pearvideo.com/' + li.xpath('./div/a/@href')[0] title = li.xpath('.//div[@class="vervideo-title"]/text()')[0] detail_urls.append(detail_url) for url in detail_urls: page_text = requests.get(url=url, headers=headers).text vedio_url = re.findall('srcUrl="(.*?)"', page_text, re.S)[0] data = requests.get(url=vedio_url, headers=headers).content fileName = str(random.randint(1, 10000)) + '.mp4' # 随机生成视频文件名称 with open(fileName, 'wb') as fp: fp.write(data) print(fileName + ' is over')

import requests import random from lxml import etree import re from fake_useragent import UserAgent # 安装fake-useragent库:pip install fake-useragent # 导入线程池模块 from multiprocessing.dummy import Pool # 实例化线程池对象 pool = Pool() url = 'http://www.pearvideo.com/category_1' # 随机产生UA ua = UserAgent().random headers = { 'User-Agent': ua } # 获取首页页面数据 page_text = requests.get(url=url, headers=headers).text # 对获取的首页页面数据中的相关视频详情链接进行解析 tree = etree.HTML(page_text) li_list = tree.xpath('//div[@id="listvideoList"]/ul/li') detail_urls = [] # 存储二级页面的url for li in li_list: detail_url = 'http://www.pearvideo.com/' + li.xpath('./div/a/@href')[0] title = li.xpath('.//div[@class="vervideo-title"]/text()')[0] detail_urls.append(detail_url) vedio_urls = [] # 存储视频的url for url in detail_urls: page_text = requests.get(url=url, headers=headers).text vedio_url = re.findall('srcUrl="(.*?)"', page_text, re.S)[0] vedio_urls.append(vedio_url) # 使用线程池进行视频数据下载 func_request = lambda link: requests.get(url=link, headers=headers).content video_data_list = pool.map(func_request, vedio_urls) # 使用线程池进行视频数据保存 func_saveData = lambda data: save(data) pool.map(func_saveData, video_data_list) def save(data): fileName = str(random.randint(1, 10000)) + '.mp4' with open(fileName, 'wb') as fp: fp.write(data) print(fileName + '已存储') pool.close() pool.join()