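1. Minimum lab environment requirements

- This lab is resource-hungry: each node needs at least 2 CPU cores and 4 GB of memory.

- Kubernetes 1.19 or later; the snapshot feature requires a separately installed snapshot controller.

- Use a Rook version later than 1.3. Do not build the cluster on directories; use dedicated raw disks instead: attach a new disk to the host and use it as-is, without formatting it. The disks on the storage nodes look like this: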

[root@k8s-master01 ~]# fdisk -l

Disk /dev/sda: 42.9 GB, 42949672960 bytes, 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x000d76eb

Device Boot Start End Blocks Id System

/dev/sda1 * 2048 2099199 1048576 83 Linux

/dev/sda2 2099200 83886079 40893440 8e Linux LVM

Disk /dev/sdb: 10.7 GB, 10737418240 bytes, 20971520 sectors # the new disk

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes
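
Because the disk must carry no filesystem, it can be worth checking it before going further. The following is a minimal sketch, assuming the new disk is /dev/sdb as in the listing above; `is_raw_disk` is a hypothetical helper name and not part of Rook.

```shell
#!/bin/sh
# Hypothetical helper (not part of Rook): succeeds only when lsblk reports
# no filesystem signature on the device or on any of its partitions.
is_raw_disk() {
  # -n drops the header line, -o FSTYPE prints only the filesystem-type column
  out=$(lsblk -n -o FSTYPE "$1") || return 1
  # Any non-whitespace output means some filesystem type was detected
  [ -z "$(printf '%s' "$out" | tr -d '[:space:]')" ]
}

# Usage (assumes the new disk is /dev/sdb):
#   is_raw_disk /dev/sdb && echo "sdb is raw" || echo "sdb is not usable as a raw disk"
```

The helper treats an lsblk failure (for example a missing device) as "not raw", so a typo in the device name does not pass the check by accident.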

2. Deploy Rook

2.1 Official Rook documentation

https://rook.io/docs/rook/v1.5/ceph-quickstart.html

2.2 Download the Rook installation files

[root@k8s-master01 app]# git clone --single-branch --branch v1.5.3 https://github.com/rook/rook.git

2.3 Configuration changes

[root@k8s-master01 app]# cd rook/cluster/examples/kubernetes/ceph

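# Change the Rook CSI image addresses. The defaults may point to gcr images, which cannot be pulled from mainland China, so they have to be mirrored to an Alibaba Cloud registry. The images for the version used in this document are already mirrored, so the file can be edited directly as follows:

[root@k8s-master01 ceph]# vim operator.yaml

## Change lines 47-52 to: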

ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.1.2"

ROOK_CSI_REGISTRAR_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-node-driver-registrar:v2.0.1"

ROOK_CSI_RESIZER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-resizer:v1.0.0"

ROOK_CSI_PROVISIONER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-provisioner:v2.0.0"

ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-snapshotter:v3.0.0"

ROOK_CSI_ATTACHER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-attacher:v3.0.0"

##

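# For other versions you need to mirror the images yourself; guides for doing so are easy to find online.

# Still in operator.yaml: newer Rook versions no longer deploy the discovery daemon by default. Find ROOK_ENABLE_DISCOVERY_DAEMON and set it to true:

- name: ROOK_ENABLE_DISCOVERY_DAEMON
  value: "true"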

2.4 Deploy Rook

# 1. Change to the rook/cluster/examples/kubernetes/ceph directory

[root@k8s-master01 ceph]# pwd

/app/rook/cluster/examples/kubernetes/ceph

# 2. Deploy

[root@k8s-master01 ceph]# kubectl create -f crds.yaml -f common.yaml -f operator.yaml

# 3. Wait for the operator and discover pods to start (all of them must be 1/1 Running before the Ceph cluster can be created)

[root@k8s-master01 ceph]# kubectl get pod -n rook-ceph -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

rook-ceph-operator-7d569f655-6bcjv 1/1 Running 0 6m37s 10.244.195.13 k8s-master03

rook-discover-bdk7k 1/1 Running 0 4m2s 10.244.32.148 k8s-master01

rook-discover-j6w4m 1/1 Running 0 4m2s 10.244.58.247 k8s-node02

rook-discover-pnp52 1/1 Running 0 4m2s 10.244.122.136 k8s-master02

rook-discover-spw8l 1/1 Running 0 4m2s 10.244.195.21 k8s-master03

rook-discover-vcqh2 1/1 Running 0 4m2s 10.244.85.248 k8s-node01
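
Instead of polling the pod list by hand, the wait can be scripted. This is a minimal sketch: `wait_for_rook_operator` is a hypothetical helper name, and the label selectors `app=rook-ceph-operator` and `app=rook-discover` are assumptions based on the stock operator.yaml, so verify them with `kubectl -n rook-ceph get pod --show-labels` first.

```shell
#!/bin/sh
# Sketch: block until the operator and discover pods report Ready.
# The label selectors are assumptions; adjust them if your manifests differ.
wait_for_rook_operator() {
  kubectl -n rook-ceph wait --for=condition=Ready pod \
    -l app=rook-ceph-operator --timeout=300s &&
  kubectl -n rook-ceph wait --for=condition=Ready pod \
    -l app=rook-discover --timeout=300s
}

# Usage:
#   wait_for_rook_operator && kubectl create -f cluster.yaml
```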

3. Create the Ceph cluster

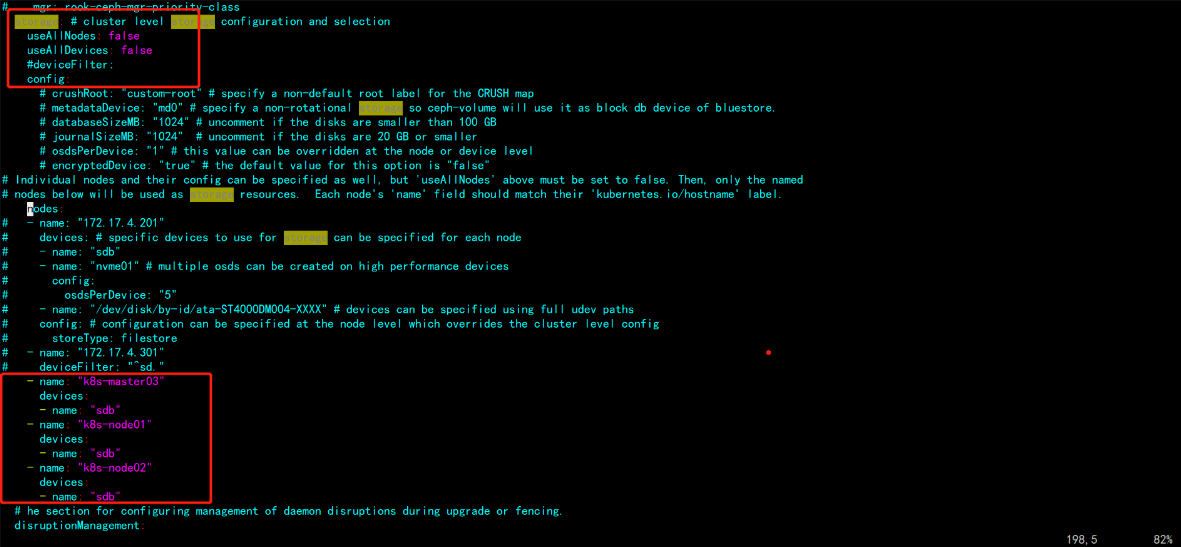

3.1 Configuration changes

The main change is specifying which nodes host the OSDs.

[root@k8s-master01 ceph]# vim cluster.yaml

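# 1. Change storage (specify the nodes and disks to use yourself)

###

Original configuration:

  storage: # cluster level storage configuration and selection
    useAllNodes: true
    useAllDevices: true

Change it to:

  storage: # cluster level storage configuration and selection
    useAllNodes: false
    useAllDevices: false

###

    nodes: # the node list belongs under storage.nodes
    - name: "k8s-master03"
      devices:
      - name: "sdb"
    - name: "k8s-node01"
      devices:
      - name: "sdb"
    - name: "k8s-node02"
      devices:
      - name: "sdb"

###

Note: newer versions require raw disks, that is, unformatted disks. Here k8s-master03, k8s-node01 and k8s-node02 each have one newly added disk; run lsblk -f to find the name of the new disk. Use at least three nodes, otherwise later experiments may run into problems.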

3.2 Create the Ceph cluster

[root@k8s-master01 ceph]# kubectl create -f cluster.yaml

cephcluster.ceph.rook.io/rook-ceph created

# After creation, check the pod status

[root@k8s-master01 ceph]# kubectl -n rook-ceph get pod

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-2gp6j 3/3 Running 0 31m

csi-cephfsplugin-5bqp2 3/3 Running 0 17m

csi-cephfsplugin-df5xq 3/3 Running 0 31m

csi-cephfsplugin-gk8f8 3/3 Running 0 31m

csi-cephfsplugin-provisioner-785798bc8f-fcdng 6/6 Running 0 31m

csi-cephfsplugin-provisioner-785798bc8f-mkjpt 6/6 Running 4 31m

csi-cephfsplugin-xdw2t 3/3 Running 0 31m

csi-rbdplugin-8cs79 3/3 Running 0 31m

csi-rbdplugin-d4mrr 3/3 Running 0 31m

csi-rbdplugin-jg77k 3/3 Running 0 31m

csi-rbdplugin-ksq66 3/3 Running 0 21m

csi-rbdplugin-provisioner-75cdf8cd6d-gvwmn 6/6 Running 0 31m

csi-rbdplugin-provisioner-75cdf8cd6d-nqwrn 6/6 Running 5 31m

csi-rbdplugin-wqxbm 3/3 Running 0 31m

rook-ceph-crashcollector-k8s-master03-6f7c7b5fbc-rv4tc 1/1 Running 0 31m

rook-ceph-crashcollector-k8s-node01-6769bf677f-bsr7c 1/1 Running 0 31m

rook-ceph-crashcollector-k8s-node02-7c97d7b8d4-6xgkb 1/1 Running 0 31m

rook-ceph-mgr-a-75fc775496-cqjmh 1/1 Running 1 32m

rook-ceph-mon-a-67cbdcd6d6-hpttq 1/1 Running 0 33m

rook-ceph-operator-7d569f655-6bcjv 1/1 Running 0 69m

rook-ceph-osd-0-9c67b5cb4-729r6 1/1 Running 0 31m

rook-ceph-osd-1-56cd8467fc-bbwcc 1/1 Running 0 31m

rook-ceph-osd-2-74f5c9f8d8-fwlw7 1/1 Running 0 31m

rook-ceph-osd-prepare-k8s-master03-kzgbd 0/1 Completed 0 94s

rook-ceph-osd-prepare-k8s-node01-hzcdw 0/1 Completed 0 92s

rook-ceph-osd-prepare-k8s-node02-pxfcc 0/1 Completed 0 90s

rook-discover-bdk7k 1/1 Running 0 67m

rook-discover-j6w4m 1/1 Running 0 67m

rook-discover-pnp52 1/1 Running 0 67m

rook-discover-spw8l 1/1 Running 0 67m

rook-discover-vcqh2 1/1 Running 0 67m

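3.3 Install the Ceph snapshot controller

Kubernetes 1.19 and later needs a separately installed snapshot controller for PVC snapshots to work, so install it now in advance. Versions below 1.19 do not need a separate installation; just follow the video.

# 1. The snapshot controller manifests are in the k8s-ha-install project used when installing the cluster; switch to the 1.20.x branch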

[root@k8s-master01 ~]# cd /root/k8s-ha-install/

[root@k8s-master01 k8s-ha-install]# git checkout manual-installation-v1.20.x

# 2. Create the snapshot controller

[root@k8s-master01 k8s-ha-install]# kubectl create -f snapshotter/ -n kube-system

# 3. Check the snapshot controller status

[root@k8s-master01 k8s-ha-install]# kubectl get po -n kube-system -l app=snapshot-controller

NAME READY STATUS RESTARTS AGE

snapshot-controller-0 1/1 Running 0 15s

# 4. Documentation

Details: https://rook.io/docs/rook/v1.5/ceph-csi-snapshot.html

4. Install the Ceph client tools

# 1. Install

[root@k8s-master01 ceph]# pwd

/app/rook/cluster/examples/kubernetes/ceph

[root@k8s-master01 ceph]# kubectl create -f toolbox.yaml -n rook-ceph

deployment.apps/rook-ceph-tools created

# 2. Once the pod is Running, Ceph commands can be run in it

[root@k8s-master01 ceph]# kubectl get po -n rook-ceph -l app=rook-ceph-tools

NAME READY STATUS RESTARTS AGE

rook-ceph-tools-6f7467bb4d-r9vqx 1/1 Running 0 31s

# 3. Run ceph status

[root@k8s-master01 ceph]# kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- bash

[root@rook-ceph-tools-6f7467bb4d-r9vqx /]# ceph status

  cluster:
    id:     83c11641-ca98-4054-b2e7-422e942befe6
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum a (age 43m)
    mgr: a(active, since 13m)
    osd: 3 osds: 3 up (since 18m), 3 in (since 44m)

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   3.0 GiB used, 27 GiB / 30 GiB avail
    pgs:     1 active+clean

# 4. Run ceph osd status

[root@rook-ceph-tools-6f7467bb4d-r9vqx /]# ceph osd status

ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE

0 k8s-master03 1028M 9207M 0 0 0 0 exists,up

1 k8s-node01 1028M 9207M 0 0 0 0 exists,up

2 k8s-node02 1028M 9207M 0 0 0 0 exists,up

# 5. Run ceph df to check capacity usage

[root@rook-ceph-tools-6f7467bb4d-r9vqx /]# ceph df

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 30 GiB 27 GiB 14 MiB 3.0 GiB 10.05

TOTAL 30 GiB 27 GiB 14 MiB 3.0 GiB 10.05

--- POOLS ---

POOL ID STORED OBJECTS USED %USED MAX AVAIL

device_health_metrics 1 0 B 0 0 B 0 8.5 GiB
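
The commands above were run from an interactive shell inside the toolbox pod. For scripts or quick checks they can also be run in one shot from the master node. A minimal sketch, where `ceph_tool` is a hypothetical wrapper name introduced only for this example:

```shell
#!/bin/sh
# Hypothetical wrapper: run a ceph subcommand inside the rook-ceph-tools
# deployment without opening an interactive shell first.
ceph_tool() {
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph "$@"
}

# Usage:
#   ceph_tool status
#   ceph_tool osd status
#   ceph_tool df
```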

5. Ceph dashboard

5.1 Expose the service

# 1. The Ceph dashboard is enabled by default; its service can be viewed with the following command

[root@k8s-master01 ceph]# kubectl -n rook-ceph get service rook-ceph-mgr-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rook-ceph-mgr-dashboard ClusterIP 10.97.5.123 <none> 8443/TCP 47m

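# There are two ways to access it:

1. Change the service type to NodePort

2. Proxy it through an ingress

# This document demonstrates NodePort; for ingress, see the ingress chapter of the course.

[root@k8s-master01 ceph]# kubectl -n rook-ceph edit service rook-ceph-mgr-dashboard

# Only the type needs to change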

type: NodePort

# 2. Access: open https://<any node IP>:<NodePort>; the NodePort is the second number in the PORT(S) column

[root@k8s-master01 ceph]# kubectl -n rook-ceph get service rook-ceph-mgr-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rook-ceph-mgr-dashboard NodePort 10.97.5.123 <none> 8443:32202/TCP 49m
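
As a non-interactive alternative to `kubectl edit`, the same change can be made with `kubectl patch`. This is a sketch only; the two function names are hypothetical and exist just to keep the commands reusable.

```shell
#!/bin/sh
# Sketch: switch the dashboard service to NodePort without opening an editor.
expose_dashboard_nodeport() {
  kubectl -n rook-ceph patch service rook-ceph-mgr-dashboard \
    -p '{"spec":{"type":"NodePort"}}'
}

# Print the node port that Kubernetes assigned to the first service port.
dashboard_nodeport() {
  kubectl -n rook-ceph get service rook-ceph-mgr-dashboard \
    -o jsonpath='{.spec.ports[0].nodePort}'
}

# Usage:
#   expose_dashboard_nodeport && echo "NodePort: $(dashboard_nodeport)"
```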

# 3. Log in: the username is admin; retrieve the password as follows

[root@k8s-master01 ~]# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo

@}g"P{-FVe9yb]-AV/>3

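6. Using Ceph block storage

Block storage is typically mounted by a single Pod. It is like attaching a new disk to a server for the exclusive use of one application.

6.1 Create the StorageClass and the Ceph pool

# 1. Create the file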

[root@k8s-master01 ~]# cd /app/rook/cluster/examples/kubernetes/ceph/

[root@k8s-master01 ceph]# vim storageclass.yaml

apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  failureDomain: host
  replicated:
    size: 3
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
# Change "rook-ceph" provisioner prefix to match the operator namespace if needed
provisioner: rook-ceph.rbd.csi.ceph.com
parameters:
  # clusterID is the namespace where the rook cluster is running
  clusterID: rook-ceph
  # Ceph pool into which the RBD image shall be created
  pool: replicapool
  imageFormat: "2"
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
  csi.storage.k8s.io/fstype: ext4
# Delete the rbd volume when a PVC is deleted
reclaimPolicy: Delete

# 2. Create the block pool and StorageClass

[root@k8s-master01 ceph]# kubectl create -f storageclass.yaml

cephblockpool.ceph.rook.io/replicapool created

storageclass.storage.k8s.io/rook-ceph-block created

# 3. Check the status

[root@k8s-master01 ceph]# kubectl get CephBlockPool -n rook-ceph

NAME AGE

replicapool 2m14s

[root@k8s-master01 ceph]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate false 2m47s

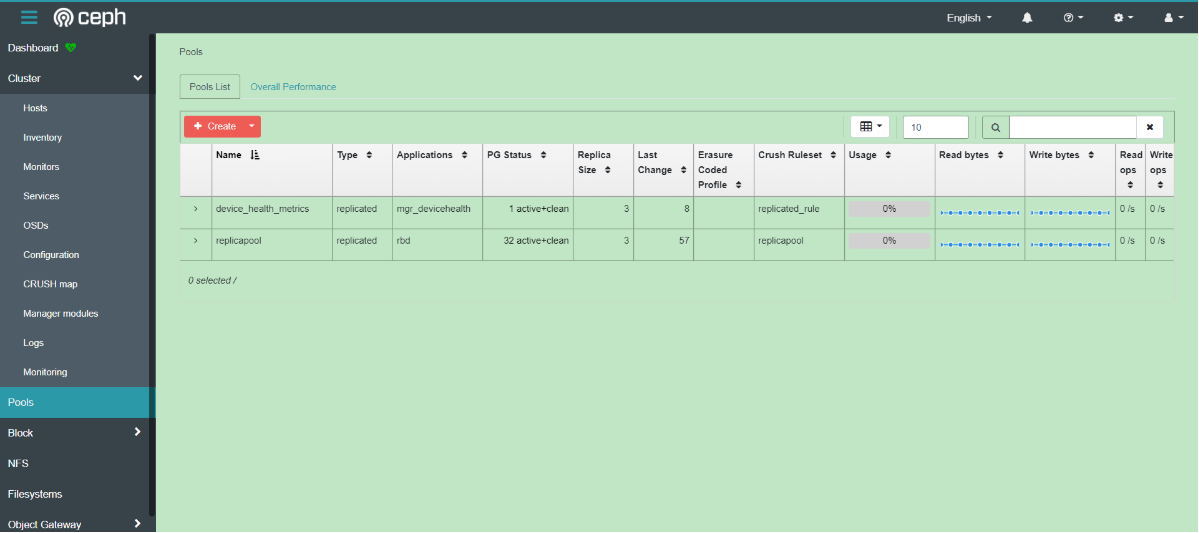

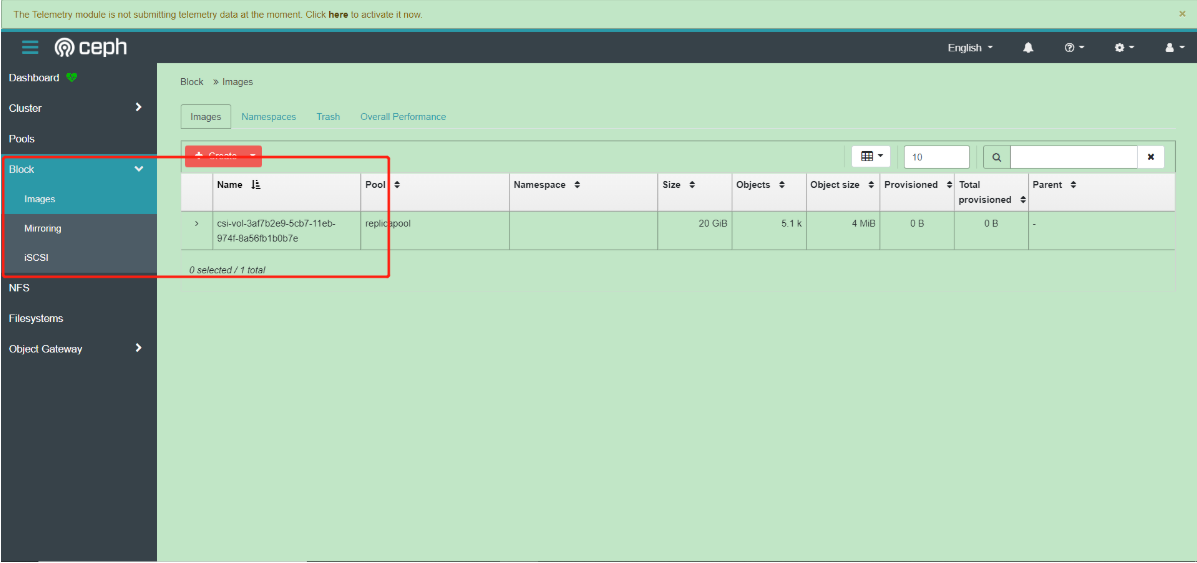

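The pool should now be visible in the Ceph dashboard; if it is not shown, it was not created successfully.

6.2 Mount test

Create a MySQL service and a WordPress service that uses it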

[root@k8s-master01 kubernetes]# pwd

/app/rook/cluster/examples/kubernetes

[root@k8s-master01 kubernetes]# kubectl create -f mysql.yaml

[root@k8s-master01 kubernetes]# kubectl create -f wordpress.yaml

# Check the svc

[root@k8s-master01 kubernetes]# kubectl get svc wordpress

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

wordpress LoadBalancer 10.109.161.119 <pending> 80:32301/TCP 3m57s

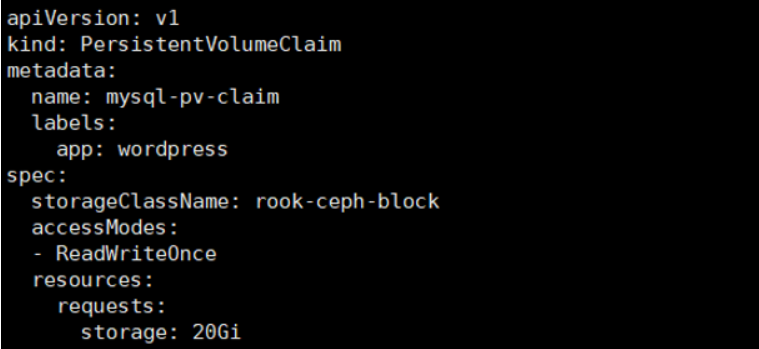

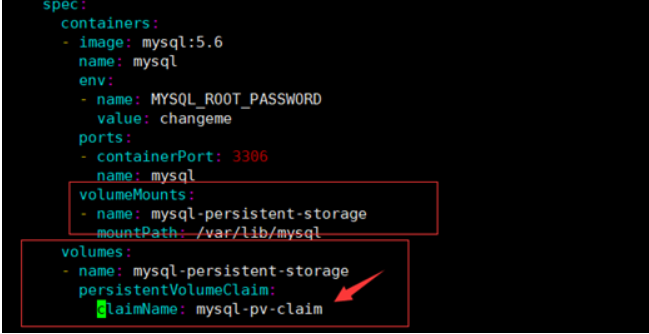

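The mysql.yaml file contains a PVC definition.

The PVC references the StorageClass created earlier, which dynamically provisions a PV and in turn creates the backing storage in Ceph.

From now on, a PVC only needs storageClassName set to the name of that StorageClass to get storage from the Rook-managed Ceph cluster. For a StatefulSet, set storageClassName inside volumeClaimTemplates to the StorageClass name, and a volume is provisioned dynamically for each Pod; see the video for details.

The volumes section of the MySQL Deployment mounts this PVC:

claimName is the name of the PVC.

Multiple MySQL instances cannot share the same data storage, so MySQL generally has to use block storage. It is like adding a new disk for MySQL alone.

Once created, the PVC and PV can be listed: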

[root@k8s-master01 kubernetes]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-1843c13e-09cb-46c6-9dd8-5f54a834681b 20Gi RWO Delete Bound default/mysql-pv-claim rook-ceph-block 65m

[root@k8s-master01 kubernetes]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-1843c13e-09cb-46c6-9dd8-5f54a834681b 20Gi RWO rook-ceph-block 66m

The corresponding image is now also visible in the Ceph dashboard.
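
To illustrate the point from section 6.2 that a claim only needs the StorageClass name, the sketch below writes two manifests to the current directory: a standalone PVC and a StatefulSet that uses volumeClaimTemplates. The names demo-block-pvc and demo-web, the nginx image, the mount path, and the 1Gi size are all hypothetical values chosen for the example; only rook-ceph-block comes from this document.

```shell
#!/bin/sh
# Standalone PVC bound to the rook-ceph-block StorageClass (hypothetical name and size).
cat > demo-block-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-block-pvc
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF

# StatefulSet: each replica gets its own RBD-backed volume via volumeClaimTemplates.
cat > demo-statefulset.yaml <<'EOF'
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: demo-web
spec:
  serviceName: demo-web
  replicas: 2
  selector:
    matchLabels:
      app: demo-web
  template:
    metadata:
      labels:
        app: demo-web
    spec:
      containers:
        - name: web
          image: nginx
          volumeMounts:
            - name: data
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        storageClassName: rook-ceph-block
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 1Gi
EOF

# Apply them when ready:
#   kubectl create -f demo-block-pvc.yaml -f demo-statefulset.yaml
```

Both claims use ReadWriteOnce because an RBD image formatted with ext4 is meant to be mounted by one node at a time, which matches the single-consumer use case described for block storage.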

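7. Using the shared filesystem

A shared filesystem is typically used when multiple Pods share one storage volume.

By default, Rook can create only one shared filesystem. Multiple-filesystem support in Ceph is still considered experimental; it can be enabled with the ROOK_ALLOW_MULTIPLE_FILESYSTEMS environment variable defined in operator.yaml.

7.1 Create the shared filesystem

Create the filesystem by specifying the desired settings for the metadata pool, the data pools, and the metadata server in the CephFilesystem CRD.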

[root@k8s-master01 kubernetes]# pwd

/app/rook/cluster/examples/kubernetes

[root@k8s-master01 kubernetes]# vim filesystem.yaml

apiVersion: ceph.rook.io/v1
kind: CephFilesystem
metadata:
  name: myfs
  namespace: rook-ceph
spec:
  metadataPool: # metadata pool replica count
    replicated:
      size: 3
  dataPools: # data pool replica count
    - replicated:
        size: 3
  preserveFilesystemOnDelete: true
  metadataServer: # metadata server (MDS) settings
    activeCount: 1
    activeStandby: true # also run a standby MDS

# Create

[root@k8s-master01 kubernetes]# kubectl create -f filesystem.yaml

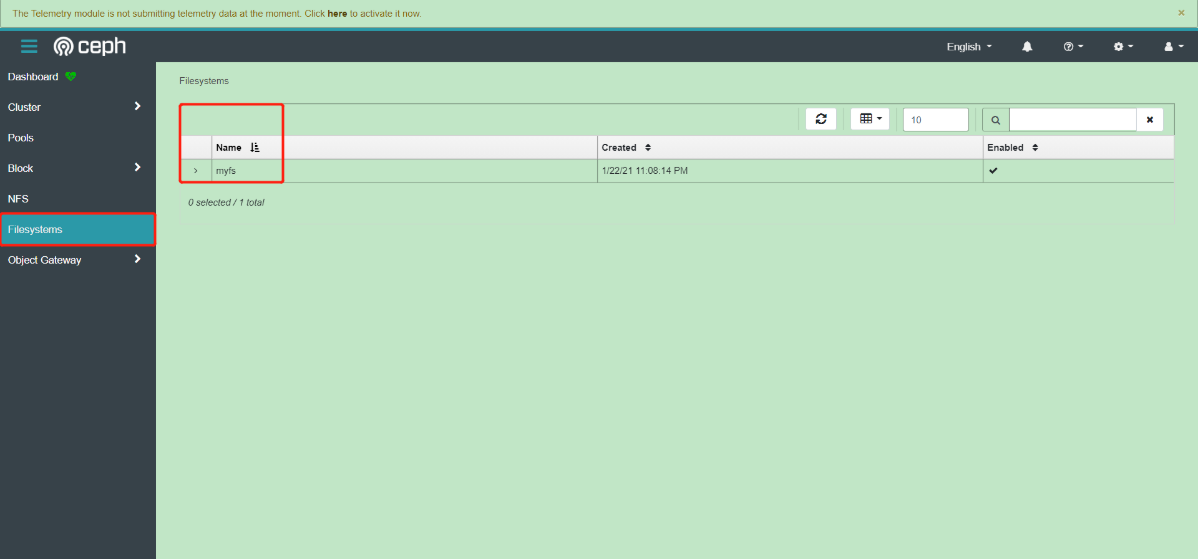

cephfilesystem.ceph.rook.io/myfs created

# Check: one active MDS and one standby

[root@k8s-master01 kubernetes]# kubectl -n rook-ceph get pod -l app=rook-ceph-mds

NAME READY STATUS RESTARTS AGE

rook-ceph-mds-myfs-a-5d8547c74d-vfvx2 1/1 Running 0 90s

rook-ceph-mds-myfs-b-766d84d7cb-wj7nd 1/1 Running 0 87s

The status can also be checked in the Ceph dashboard.

7.2 Create the StorageClass for the shared filesystem

Official documentation: https://rook.io/docs/rook/v1.5/ceph-filesystem.html

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-cephfs
# Change "rook-ceph" provisioner prefix to match the operator namespace if needed
provisioner: rook-ceph.cephfs.csi.ceph.com
parameters:
  # clusterID is the namespace where operator is deployed.
  clusterID: rook-ceph
  # CephFS filesystem name into which the volume shall be created
  fsName: myfs
  # Ceph pool into which the volume shall be created
  # Required for provisionVolume: "true"
  pool: myfs-data0
  # Root path of an existing CephFS volume
  # Required for provisionVolume: "false"
  # rootPath: /absolute/path
  # The secrets contain Ceph admin credentials. These are generated automatically by the operator
  # in the same namespace as the cluster.
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-cephfs-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-cephfs-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-cephfs-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
reclaimPolicy: Delete
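
Once the StorageClass above has been saved to a file and created with kubectl create -f, a claim that several Pods can mount at the same time might look like the sketch below. The name demo-cephfs-pvc and the 1Gi size are hypothetical; the key difference from the block-storage claim is the ReadWriteMany access mode.

```shell
#!/bin/sh
# Shared CephFS-backed PVC (hypothetical name and size); ReadWriteMany lets
# Pods on different nodes mount the same volume simultaneously.
cat > demo-cephfs-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-cephfs-pvc
spec:
  storageClassName: rook-cephfs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
EOF

# Apply it when ready:
#   kubectl create -f demo-cephfs-pvc.yaml
```

Any Deployment that references demo-cephfs-pvc through claimName can then be scaled to multiple replicas that all see the same files.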

8. PVC expansion, snapshots, and rollback

Official documentation: https://rook.io/docs/rook/v1.5/ceph-csi-snapshot.html

8.1 Snapshots

Note: the PVC snapshot feature requires Kubernetes 1.17+
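
As a rough sketch of what a block-storage snapshot involves, the script below writes a VolumeSnapshotClass and a VolumeSnapshot of the mysql-pv-claim PVC from section 6.2. Several things here are assumptions rather than facts from this document: the v1beta1 API version (check `kubectl api-resources | grep -i snapshot` for what your snapshot controller serves), the class name csi-rbdplugin-snapclass, the snapshot name mysql-pv-snapshot, and the snapshotter secret parameters, which follow the pattern of the StorageClass parameters used earlier. Compare against the official documentation linked above before applying.

```shell
#!/bin/sh
# Snapshot class for the RBD CSI driver (API version and parameters are assumptions).
cat > demo-snapshotclass.yaml <<'EOF'
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshotClass
metadata:
  name: csi-rbdplugin-snapclass
driver: rook-ceph.rbd.csi.ceph.com
parameters:
  clusterID: rook-ceph
  csi.storage.k8s.io/snapshotter-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/snapshotter-secret-namespace: rook-ceph
deletionPolicy: Delete
EOF

# Snapshot of the MySQL PVC created in section 6.2 (snapshot name is hypothetical).
cat > demo-snapshot.yaml <<'EOF'
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshot
metadata:
  name: mysql-pv-snapshot
spec:
  volumeSnapshotClassName: csi-rbdplugin-snapclass
  source:
    persistentVolumeClaimName: mysql-pv-claim
EOF

# Apply them when ready, then watch for READYTOUSE to become true:
#   kubectl create -f demo-snapshotclass.yaml -f demo-snapshot.yaml
#   kubectl get volumesnapshot
```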