- OS: Ubuntu 18.04.4 LTS

- Kernel: Linux 4.15.0-91-generic

- Kubernetes: v1.18.0

- Docker: 18.06.3-ce

| k8s-vip | k8s-m1 | k8s-m2 | k8s-m3 |
| --- | --- | --- | --- |
| 192.168.88.200 | 192.168.88.10 | 192.168.88.11 | 192.168.88.12 |

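Configure hostnames

Set the hostname on each node, then add all three masters to /etc/hosts on every node:

    hostnamectl set-hostname k8s-m1    # use k8s-m2 / k8s-m3 on the other nodes

    # /etc/hosts
    127.0.0.1 localhost
    192.168.88.10 k8s-m1
    192.168.88.11 k8s-m2
    192.168.88.12 k8s-m3

Passwordless SSH login

    ssh-keygen
    for ip in 192.168.88.10 192.168.88.11 192.168.88.12; do ssh-copy-id root@$ip; done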

Disable the firewall and swap

    swapoff -a
    sed -i 's/.*swap.*/#&/g' /etc/fstab
    systemctl disable ufw
    systemctl stop ufw
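To see what the `sed` expression does before touching `/etc/fstab`, the sketch below applies it to sample text. `comment_swap` mirrors the original expression; `comment_swap_once` is a hypothetical variant (not part of the original steps) that skips lines already commented out, so rerunning it does not stack `#` characters. The fstab lines are made up for illustration.

```shell
# Same expression as the fstab command, used as a stdin/stdout filter.
comment_swap() {
  sed 's/.*swap.*/#&/g'
}

# Hypothetical variant: leave lines that already start with '#' untouched.
comment_swap_once() {
  sed '/^[[:space:]]*#/!s/.*swap.*/#&/'
}

# Sample fstab content (illustrative only): only the swap line gets a '#'.
printf '%s\n' 'UUID=abcd / ext4 defaults 0 1' '/swapfile none swap sw 0 0' | comment_swap
```

Running the filter on a copy first is a cheap way to confirm that only the swap entry is affected.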

Configure the apt sources (Aliyun mirrors) for Docker and Kubernetes

    vim /etc/apt/sources.list

    deb http://mirrors.aliyun.com/ubuntu/ bionic main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ bionic main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ bionic-security main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ bionic-security main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ bionic-updates main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ bionic-updates main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ bionic-proposed main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ bionic-proposed main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ bionic-backports main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ bionic-backports main restricted universe multiverse
    deb [arch=amd64] https://download.docker.com/linux/ubuntu bionic stable
    deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main

    curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
    sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
    sudo apt-get update

    # Hourly time-sync cron job on all nodes
    0 * * * * ntpdate 202.112.10.36
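The cron entry can also be installed non-interactively. The sketch below is one possible way, not the original author's method: `add_cron_entry` is a hypothetical helper that reads an existing crontab on stdin and prints it with exactly one copy of the entry, so running it twice does not duplicate the job.

```shell
# Read a crontab on stdin; print it with exactly one copy of the entry in $1 appended.
add_cron_entry() {
  # Drop existing identical lines; grep exits 1 when nothing remains, hence "|| true".
  grep -vxF -- "$1" || true
  printf '%s\n' "$1"
}

# Demo on an empty crontab (prints just the new entry).
printf '' | add_cron_entry '0 * * * * ntpdate 202.112.10.36'

# Intended use on each node (not executed here):
#   crontab -l 2>/dev/null | add_cron_entry '0 * * * * ntpdate 202.112.10.36' | crontab -
```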

Install Docker

    sudo apt-get install docker-ce=18*

    sudo systemctl start docker
    sudo systemctl enable docker

Install the kube components

    for node in k8s-m{1,2,3}; do ssh root@$node sudo apt-get install -y kubelet=1.18* kubeadm=1.18* kubectl=1.18* ipvsadm; done
    for node in k8s-m{1,2,3}; do ssh root@$node sudo systemctl enable kubelet.service; done

Set environment variables

    export ETCD_version=v3.3.12
    export ETCD_SSL_DIR=/etc/etcd/ssl
    export APISERVER_IP=192.168.88.200
    export SYSTEM_SERVICE_DIR=/usr/lib/systemd/system

    for node in k8s-m{1,2,3}; do ssh root@$node sudo mkdir -p /usr/lib/systemd/system; done
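The same `for node in ...; do ssh ...; done` pattern recurs throughout these notes. As a rough sketch, it could be wrapped in a helper; `on_masters`, `MASTERS` and `DRY_RUN` are names invented here for illustration. With `DRY_RUN=1` the helper only prints what it would run, which makes it easy to check a command before it touches three machines.

```shell
# Hypothetical node list; matches the hostnames used in this guide.
MASTERS="k8s-m1 k8s-m2 k8s-m3"

# Run a command on every master over SSH, or just print it when DRY_RUN=1.
on_masters() {
  for node in $MASTERS; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh root@${node} $*"
    else
      # Unlike the plain loops, this stops at the first node that fails.
      ssh "root@${node}" "$@" || return 1
    fi
  done
}

# Preview only; nothing is executed on the nodes.
DRY_RUN=1 on_masters sudo mkdir -p /usr/lib/systemd/system
```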

Install etcd

Download cfssl

    wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/local/bin/cfssl
    wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/local/bin/cfssljson
    chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

Download etcd

    wget https://github.com/etcd-io/etcd/releases/download/${ETCD_version}/etcd-${ETCD_version}-linux-amd64.tar.gz

    tar -zxvf etcd-${ETCD_version}-linux-amd64.tar.gz && cd etcd-${ETCD_version}-linux-amd64

    # Copy the executables to all master nodes
    for node in k8s-m{1,2,3}; do scp etcd* root@$node:/usr/local/bin/; done

Generate the etcd CA and the etcd certificate

    mkdir -p ${ETCD_SSL_DIR} && cd ${ETCD_SSL_DIR}

    cat > ca-config.json <<EOF
    {"signing":{"default":{"expiry":"87600h"},"profiles":{"kubernetes":{"usages":["signing","key encipherment","server auth","client auth"],"expiry":"87600h"}}}}
    EOF

    cat > etcd-ca-csr.json <<EOF
    {"CN":"etcd","key":{"algo":"rsa","size":2048},"names":[{"C":"CN","ST":"BeiJing","L":"BeiJing","O":"etcd","OU":"etcd"}]}
    EOF

    cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare etcd-ca

    cfssl gencert -ca=etcd-ca.pem -ca-key=etcd-ca-key.pem -config=ca-config.json \
      -hostname=127.0.0.1,192.168.88.10,192.168.88.11,192.168.88.12 \
      -profile=kubernetes etcd-ca-csr.json | cfssljson -bare etcd

    rm -rf *.json *.csr

    # Copy the certificates to the other master nodes
    for node in k8s-m{2,3}; do ssh root@$node mkdir -p ${ETCD_SSL_DIR} /var/lib/etcd; scp * root@$node:${ETCD_SSL_DIR}; done

etcd configuration script

    cat etcd_config.sh

    #!/bin/bash
    ETCD_URL="k8s-m1=https://192.168.88.10:2380,k8s-m2=https://192.168.88.11:2380,k8s-m3=https://192.168.88.12:2380"
    # First 192.* address in the netplan file; tr strips the space left after the "- " list marker
    IP=$(cat /etc/netplan/50-cloud-init.yaml | grep 192 | head -n 1 | cut -d "-" -f 2 | cut -d "/" -f 1 | tr -d ' ')
    NODE=$(hostname)

    cat > /etc/etcd/config <<EOF
    #[Member]
    ETCD_NAME="$NODE"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://$IP:2380"
    ETCD_LISTEN_CLIENT_URLS="https://$IP:2379"
    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$IP:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://$IP:2379"
    ETCD_INITIAL_CLUSTER="$ETCD_URL"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
    EOF

    # Quoted delimiter: the ${ETCD_*} references must reach the unit file unexpanded,
    # so that systemd resolves them from EnvironmentFile at start time
    cat > /usr/lib/systemd/system/etcd.service <<'EOF'
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target

    [Service]
    Type=notify
    EnvironmentFile=/etc/etcd/config
    ExecStart=/usr/local/bin/etcd \
      --name=${ETCD_NAME} \
      --data-dir=${ETCD_DATA_DIR} \
      --listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
      --listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
      --advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
      --initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
      --initial-cluster=${ETCD_INITIAL_CLUSTER} \
      --initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
      --initial-cluster-state=new \
      --cert-file=/etc/etcd/ssl/etcd.pem \
      --key-file=/etc/etcd/ssl/etcd-key.pem \
      --peer-cert-file=/etc/etcd/ssl/etcd.pem \
      --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
      --trusted-ca-file=/etc/etcd/ssl/etcd-ca.pem \
      --peer-trusted-ca-file=/etc/etcd/ssl/etcd-ca.pem
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl start etcd.service; systemctl enable etcd.service

Distribute the files and run the script on every master. The script regenerates /etc/etcd/config on each node with that node's own name and IP:

    for node in k8s-m{2,3}; do scp /etc/etcd/config root@$node:/etc/etcd/; scp ${SYSTEM_SERVICE_DIR}/etcd.service root@$node:${SYSTEM_SERVICE_DIR}; done
    for node in k8s-m{1,2,3}; do scp etcd_config.sh root@$node:/tmp/; done
    for node in k8s-m{1,2,3}; do ssh root@$node bash /tmp/etcd_config.sh; done
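Two pieces of that script are easy to get subtly wrong: the `--initial-cluster` string and the IP extraction. The sketch below isolates both so they can be tried without a cluster. `initial_cluster` and `first_ip` are hypothetical helper names, and the netplan snippet is a made-up sample; `first_ip` uses the script's grep/cut pipeline with a final `tr -d ' '` so that no space from the `- ` list marker ends up inside the URL.

```shell
# Build the --initial-cluster value from name=ip pairs (peer port 2380, as in this guide).
# The body runs in a subshell so the loop variables stay local.
initial_cluster() (
  out=""
  for pair in "$@"; do
    name=${pair%%=*}
    ip=${pair#*=}
    out="${out:+$out,}${name}=https://${ip}:2380"
  done
  printf '%s\n' "$out"
)

# Extract the first 192.* address from netplan YAML read on stdin.
first_ip() {
  grep 192 | head -n 1 | cut -d "-" -f 2 | cut -d "/" -f 1 | tr -d ' '
}

initial_cluster k8s-m1=192.168.88.10 k8s-m2=192.168.88.11 k8s-m3=192.168.88.12

# Sample netplan fragment (illustrative); prints the bare address.
printf '            addresses:\n            - 192.168.88.10/24\n' | first_ip
```

On a host with several 192.* entries in the netplan file, the `head -n 1` choice may pick the wrong one, so checking the output per node is worthwhile.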

Check the etcd cluster status

    etcdctl --ca-file=/etc/etcd/ssl/etcd-ca.pem --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem \
      --endpoints="https://192.168.88.10:2379,https://192.168.88.11:2379,https://192.168.88.12:2379" cluster-health

Highly available apiserver with HAProxy + Keepalived

    for node in k8s-m{1,2,3}; do ssh root@$node apt-get install -y haproxy keepalived; done

    # Check the NIC name on your hosts and adjust "interface" below
    cat > /etc/keepalived/keepalived.conf << EOF
    vrrp_script check_haproxy {
        script "/etc/keepalived/check_haproxy.sh"
        interval 3
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens192
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            ${APISERVER_IP}
        }
        track_script {
            check_haproxy
        }
    }
    EOF

    # Quoted delimiter so that $? is written literally into the script
    cat > /etc/keepalived/check_haproxy.sh <<'EOF'
    #!/bin/bash
    systemctl status haproxy > /dev/null
    if [[ $? != 0 ]];then
        echo "haproxy is down,close the keepalived"
        systemctl stop keepalived
    fi
    EOF
    chmod +x /etc/keepalived/check_haproxy.sh

    cat > /etc/haproxy/haproxy.cfg << EOF
    global
        log         127.0.0.1 local2
        chroot      /var/lib/haproxy
        pidfile     /var/run/haproxy.pid
        maxconn     4000
        user        haproxy
        group       haproxy
        daemon
        stats socket /var/lib/haproxy/stats
    #---------------------------------------------------------------------
    defaults
        mode                    http
        log                     global
        option                  httplog
        option                  dontlognull
        option http-server-close
        option forwardfor       except 127.0.0.0/8
        option                  redispatch
        retries                 3
        timeout http-request    10s
        timeout queue           1m
        timeout connect         10s
        timeout client          1m
        timeout server          1m
        timeout http-keep-alive 10s
        timeout check           10s
        maxconn                 3000
    #---------------------------------------------------------------------
    frontend k8s-api
        bind *:8443
        mode tcp
        default_backend apiserver
    #---------------------------------------------------------------------
    backend apiserver
        balance roundrobin
        mode tcp
        server k8s-m1 192.168.88.10:6443 weight 1 maxconn 2000 check inter 2000 rise 2 fall 3
        server k8s-m2 192.168.88.11:6443 weight 1 maxconn 2000 check inter 2000 rise 2 fall 3
    EOF
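When config files and scripts are generated with heredocs, whether the delimiter is quoted decides when variables are evaluated. The sketch below shows the difference on two lines taken from the files in this section; the function names are made up for the demo. `keepalived.conf` needs `${APISERVER_IP}` expanded while writing, whereas the health-check script needs `$?` to survive literally until it runs.

```shell
APISERVER_IP=192.168.88.200

# Unquoted delimiter: ${APISERVER_IP} is substituted while the text is written.
render_vip_line() {
  cat <<EOF
virtual_ipaddress { ${APISERVER_IP} }
EOF
}

# Quoted delimiter: $? is written as-is and evaluated only when the script runs.
render_check_line() {
  cat <<'EOF'
if [[ $? != 0 ]]; then systemctl stop keepalived; fi
EOF
}

render_vip_line
render_check_line
```

A quick `cat` of each generated file is a simple way to confirm that the variables ended up in the intended form.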

Copy the configuration files to the other master nodes

    for node in k8s-m{2,3}; do scp /etc/keepalived/* root@$node:/etc/keepalived; scp /etc/haproxy/haproxy.cfg root@$node:/etc/haproxy; done

    for node in k8s-m{1,2,3}; do ssh root@$node systemctl enable --now keepalived haproxy; done

    # Check that the VIP responds
    ping ${APISERVER_IP} -c 3

kubeadm initialization

    # List the container images that need to be pulled
    kubeadm config images list

Run the following on every node: it pulls each image from the Aliyun mirror, retags it as k8s.gcr.io, and removes the mirror tag.

    images=(kube-apiserver:v1.18.0 kube-controller-manager:v1.18.0 kube-scheduler:v1.18.0 kube-proxy:v1.18.0 pause:3.2 coredns:1.6.7)

    for image in "${images[@]}"; do
      docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/${image}
      docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/${image} k8s.gcr.io/${image}
      docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/${image}
    done
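Since the images are needed on every node, one option is to generate the pull/tag/rmi commands as text and feed them to a shell on each machine. This is only a sketch: `retag_commands` and `MIRROR` are names introduced here, and the remote invocation in the comment assumes the passwordless root SSH set up earlier.

```shell
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

# Print the pull/tag/rmi commands for each image instead of running them.
retag_commands() {
  for image in "$@"; do
    echo "docker pull ${MIRROR}/${image}"
    echo "docker tag ${MIRROR}/${image} k8s.gcr.io/${image}"
    echo "docker rmi ${MIRROR}/${image}"
  done
}

# Preview for two of the images.
retag_commands pause:3.2 coredns:1.6.7

# To execute on a remote node (not run here):
#   retag_commands pause:3.2 coredns:1.6.7 | ssh root@k8s-m2 sh
```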

    cat > kubeadm-config.yaml << EOF
    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    kubernetesVersion: v1.18.0
    controlPlaneEndpoint: "192.168.88.200:8443"
    etcd:
      external:
        endpoints:
        - https://192.168.88.10:2379
        - https://192.168.88.11:2379
        - https://192.168.88.12:2379
        caFile: /etc/etcd/ssl/etcd-ca.pem
        certFile: /etc/etcd/ssl/etcd.pem
        keyFile: /etc/etcd/ssl/etcd-key.pem
    networking:
      podSubnet: 10.244.0.0/16
    ---
    # With kubeadm v1beta2, kubelet settings go in a separate KubeletConfiguration document
    apiVersion: kubelet.config.k8s.io/v1beta1
    kind: KubeletConfiguration
    evictionHard:
      imagefs.available: 2Gi
      memory.available: 512Mi
      nodefs.available: 2Gi
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: ipvs
    EOF

For comparison, an older kubeadm v1alpha1 template (Kubernetes v1.11.5, Ansible/Jinja2):

    apiVersion: kubeadm.k8s.io/v1alpha1
    kind: MasterConfiguration
    networking:
      podSubnet: 192.169.0.0/16
      serviceSubnet: 11.96.0.0/12
    etcd:
      endpoints:
    {% for host in groups['etcd'] %}
      - https://{{ host }}:2379
    {% endfor %}
      caFile: /etc/k8s-etcd/ca.pem
      certFile: /etc/k8s-etcd/etcd.pem
      keyFile: /etc/k8s-etcd/etcd-key.pem
    kubernetesVersion: v1.11.5
    tokenTTL: "0"
    api:
      advertiseAddress: {{mastervip}}
    kubeletConfiguration:
      baseConfig:
        evictionHard:
          imagefs.available: 2Gi
          memory.available: 512Mi
          nodefs.available: 2Gi
    #kubeProxy:
    #  config:
    #    mode: "ipvs"

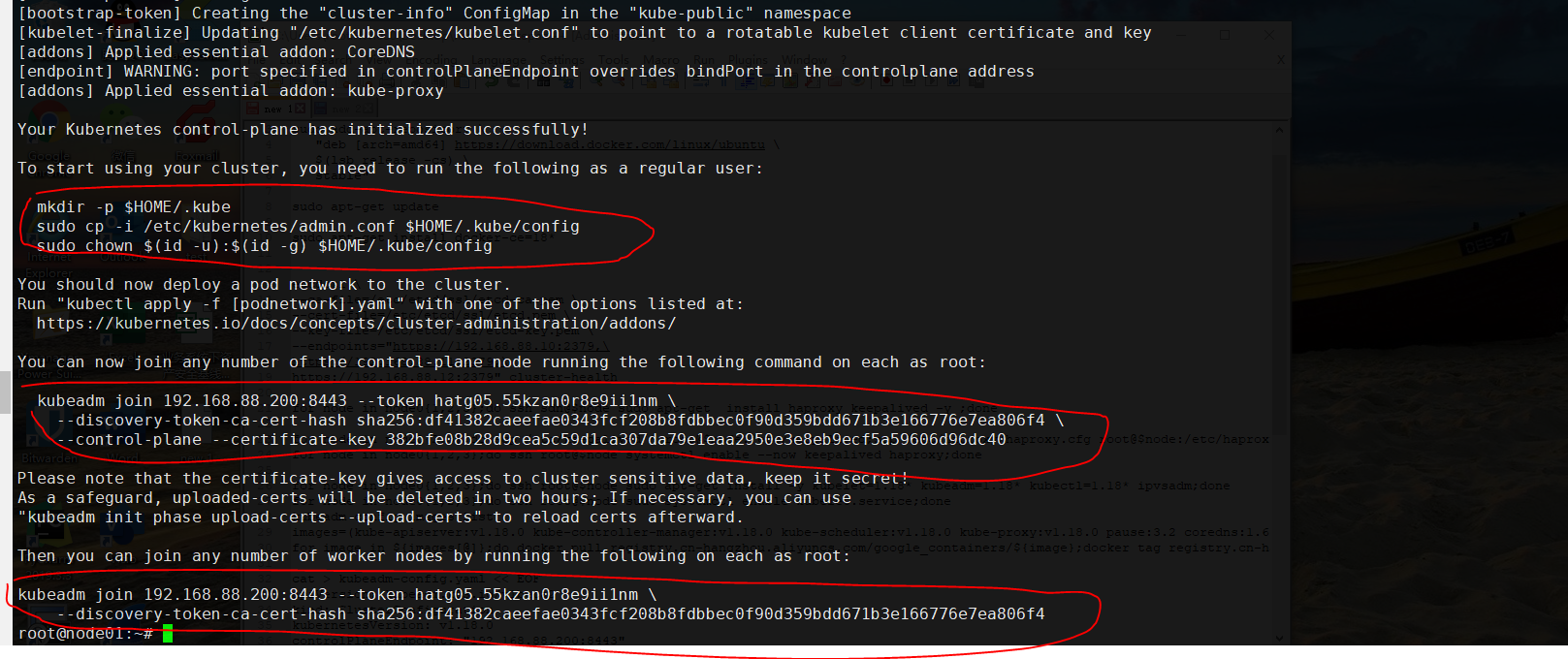

    kubeadm init --config=kubeadm-config.yaml --upload-certs

    root@node02:~/release-v3.11.2/k8s-manifests# kubectl get node
    NAME     STATUS   ROLES    AGE   VERSION
    node01   Ready    master   19h   v1.18.0
    node02   Ready    master   19h   v1.18.0
    node03   Ready    <none>   19h   v1.18.0

    root@node02:~/release-v3.11.2/k8s-manifests# kubectl get pod -o wide -n kube-system
    NAME                                       READY   STATUS    RESTARTS   AGE    IP              NODE     NOMINATED NODE   READINESS GATES
    calico-kube-controllers-69d565f6ff-cf9gf   1/1     Running   0          4h1m   192.168.88.12   node03   <none>           <none>
    calico-node-9hq7l                          1/1     Running   0          4h1m   192.168.88.10   node01   <none>           <none>
    calico-node-hxcfv                          1/1     Running   0          4h1m   192.168.88.11   node02   <none>           <none>
    calico-node-m7d6c                          1/1     Running   0          4h1m   192.168.88.12   node03   <none>           <none>
    coredns-66bff467f8-g262t                   1/1     Running   0          19h    10.10.186.193   node03   <none>           <none>
    coredns-66bff467f8-jf72n                   1/1     Running   0          19h    10.10.186.192   node03   <none>           <none>
    kube-apiserver-node01                      1/1     Running   1          19h    192.168.88.10   node01   <none>           <none>
    kube-apiserver-node02                      1/1     Running   1          19h    192.168.88.11   node02   <none>           <none>
    kube-controller-manager-node01             1/1     Running   1          19h    192.168.88.10   node01   <none>           <none>
    kube-controller-manager-node02             1/1     Running   1          19h    192.168.88.11   node02   <none>           <none>
    kube-proxy-dl9w8                           1/1     Running   3          19h    192.168.88.12   node03   <none>           <none>
    kube-proxy-rmwrp                           1/1     Running   3          19h    192.168.88.10   node01   <none>           <none>
    kube-proxy-tcm74                           1/1     Running   3          19h    192.168.88.11   node02   <none>           <none>
    kube-scheduler-node01                      1/1     Running   2          19h    192.168.88.10   node01   <none>           <none>
    kube-scheduler-node02                      1/1     Running   2          19h    192.168.88.11   node02   <none>           <none>

Add the additional k8s masters

    kubeadm join 192.168.88.200:8443 --token hatg05.55kzan0r8e9ii1nm \
      --discovery-token-ca-cert-hash sha256:df41382caeefae0343fcf208b8fdbbec0f90d359bdd671b3e166776e7ea806f4 \
      --control-plane --certificate-key 382bfe08b28d9cea5c59d1ca307da79e1eaa2950e3e8eb9ecf5a59606d96dc40

Add worker nodes

    kubeadm join 192.168.88.200:8443 --token hatg05.55kzan0r8e9ii1nm \
      --discovery-token-ca-cert-hash sha256:df41382caeefae0343fcf208b8fdbbec0f90d359bdd671b3e166776e7ea806f4
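The two join commands differ only in the `--control-plane --certificate-key` suffix. The sketch below assembles either form from its parts; `join_command` is a hypothetical helper and the token, hash and key values in the demo are placeholders, not real credentials. On a live cluster, `kubeadm token create --print-join-command` prints a fresh worker join command, and `kubeadm init phase upload-certs --upload-certs` prints a new certificate key.

```shell
# Assemble a kubeadm join command.
# Args: endpoint token ca-cert-hash [certificate-key]
# With a certificate key, the node joins as a control-plane member.
join_command() (
  endpoint=$1
  token=$2
  hash=$3
  cert_key=${4:-}
  cmd="kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash sha256:${hash}"
  if [ -n "$cert_key" ]; then
    cmd="${cmd} --control-plane --certificate-key ${cert_key}"
  fi
  printf '%s\n' "$cmd"
)

# Placeholder values for illustration only.
join_command 192.168.88.200:8443 TOKEN HASH
join_command 192.168.88.200:8443 TOKEN HASH CERTKEY
```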

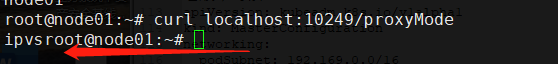

Check whether IPVS is enabled

    curl localhost:10249/proxyMode
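The endpoint returns the active proxy mode as plain text. A small wrapper can turn that into a pass/fail check for scripts; `check_proxy_mode` is a name made up for this sketch, and the `curl` call is left as a comment so the block can be tried without a running kube-proxy.

```shell
# Succeed only if the reported kube-proxy mode is "ipvs".
check_proxy_mode() {
  case "$1" in
    ipvs)
      echo "kube-proxy is using IPVS"
      ;;
    *)
      echo "kube-proxy mode is '$1', expected ipvs" >&2
      return 1
      ;;
  esac
}

# Demo with a literal value.
check_proxy_mode ipvs

# On a node (not run here):
#   check_proxy_mode "$(curl -s localhost:10249/proxyMode)"
```

`ipvsadm -Ln` on a node lists the virtual servers kube-proxy has programmed, which is a useful second check.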

Install Calico

Download Calico

    wget https://github.com/projectcalico/calico/releases/download/v3.11.2/release-v3.11.2.tgz
    tar -xf release-v3.11.2.tgz && cd release-v3.11.2/k8s-manifests/

Fill in the etcd certificate information (my etcd certificates are in /etc/etcd/ssl)

    ETCD_ENDPOINTS="https://192.168.88.10:2379,https://192.168.88.11:2379,https://192.168.88.12:2379"
    sed -i "s#.*etcd_endpoints:.*#  etcd_endpoints: \"${ETCD_ENDPOINTS}\"#g" calico-etcd.yaml

    # Base64-encode the certificates on a single line
    ETCD_CERT=`cat /etc/etcd/ssl/etcd.pem | base64 | tr -d '\n'`
    ETCD_KEY=`cat /etc/etcd/ssl/etcd-key.pem | base64 | tr -d '\n'`
    ETCD_CA=`cat /etc/etcd/ssl/etcd-ca.pem | base64 | tr -d '\n'`

    sed -i "s#.*etcd-cert:.*#  etcd-cert: ${ETCD_CERT}#g" calico-etcd.yaml
    sed -i "s#.*etcd-key:.*#  etcd-key: ${ETCD_KEY}#g" calico-etcd.yaml
    sed -i "s#.*etcd-ca:.*#  etcd-ca: ${ETCD_CA}#g" calico-etcd.yaml

    sed -i 's#.*etcd_ca:.*#  etcd_ca: "/calico-secrets/etcd-ca"#g' calico-etcd.yaml
    sed -i 's#.*etcd_cert:.*#  etcd_cert: "/calico-secrets/etcd-cert"#g' calico-etcd.yaml
    sed -i 's#.*etcd_key:.*#  etcd_key: "/calico-secrets/etcd-key"#g' calico-etcd.yaml

    sed -i '/CALICO_IPV4POOL_CIDR/{n;s/192.168.0.0/10.10.0.0/g}' calico-etcd.yaml
    kubectl apply -f calico-etcd.yaml

Note that the last sed sets the Calico IP pool to 10.10.0.0, which differs from the podSubnet 10.244.0.0/16 in kubeadm-config.yaml; the pod IPs shown in the outputs (10.10.186.x) come from the Calico pool.
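The manifest edits boil down to two operations: base64-encoding a file onto a single line, and replacing a whole YAML line by key. The sketch below shows both as stdin/stdout filters so they can be tried on sample text before running `sed -i` on the real manifest. `b64_oneline` and `set_field` are hypothetical names, and the two-space indent is an assumption about how the keys are nested in `calico-etcd.yaml`.

```shell
# Base64-encode stdin onto a single line (portable alternative to GNU `base64 -w 0`).
b64_oneline() {
  base64 | tr -d '\n'
}

# Filter: rewrite any line containing "<key>:" as "  <key>: <value>".
# '#' is the sed delimiter because base64 output can contain '/'.
set_field() {
  sed "s#.*$1:.*#  $1: $2#"
}

# Sample input line, as it might appear commented out in the Secret.
printf '  # etcd-key: null\n' | set_field etcd-key "$(printf 'hello' | b64_oneline)"
```

After patching the real file, `grep -n 'etcd' calico-etcd.yaml` gives a quick view of which lines were actually rewritten.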

For comparison, the equivalent Ansible task from an older Kubernetes 1.11 setup:

    shell: "F='/tmp/calico.yaml'; wget -O $F http://{{groups.repo[0]}}/k8s-1.11/calico.yaml; sed -i "s?http://127.0.0.1:2379?{{ETCD_NODES}}?g" $F; cat /etc/k8s-etcd/etcd-key.pem|base64 -w 0 > /tmp/ETCD-KEY; cat /etc/k8s-etcd/ca.pem|base64 -w 0 > /tmp/ETCD-CA; cat /etc/k8s-etcd/etcd.pem|base64 -w 0 > /tmp/ETCD-CERT; sed -i "s?# etcd-key: null?etcd-key: $(cat /tmp/ETCD-KEY)?g" $F; sed -i "s?# etcd-ca: null?etcd-ca: $(cat /tmp/ETCD-CA)?g" $F; sed -i "s?# etcd-cert: null?etcd-cert: $(cat /tmp/ETCD-CERT)?g" $F; sed -i 's?etcd_ca: ""?etcd_ca: "/calico-secrets/etcd-ca"?g' $F; sed -i 's?etcd_cert: ""?etcd_cert: "/calico-secrets/etcd-cert"?g' $F; sed -i 's?etcd_key: ""?etcd_key: "/calico-secrets/etcd-key"?g' $F; sed -i 's?192.168.0.0?192.169.0.0?g' $F ;sed -i "s?quay.io?{{groups.registry[0]}}:5000?g" $F; sed -i "s?192.168.0.179?{{mastervip}}?g" $F; su {{ansible_ssh_user}} -c "kubectl apply -f $F";"

Calico BGP mode

    # Edit calico-etcd.yaml
    # Change:
    - name: CALICO_IPV4POOL_IPIP     # turn IPIP mode off
      value: "off"
    # Add:
    - name: FELIX_IPINIPENABLED      # disable IPIP in Felix
      value: "false"

    kubectl apply -f calico-etcd.yaml

The existing tunl0 interface will disappear:

    tunl0     Link encap:IPIP Tunnel  HWaddr
              inet addr:10.244.0.1  Mask:255.255.255.255
              UP RUNNING NOARP  MTU:1440  Metric:1
              RX packets:6025 errors:0 dropped:0 overruns:0 frame:0
              TX packets:5633 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1
              RX bytes:5916925 (5.9 MB)  TX bytes:1600038 (1.6 MB)

Check the Calico network

    root@ub1604-k8s231:~# ip route |grep bird
    10.244.0.0/24 via 10.96.141.233 dev ens160 proto bird    # network assigned to another node
    blackhole 10.244.1.0/24 proto bird                       # network assigned to this node
    10.244.2.0/24 via 10.96.141.232 dev ens160 proto bird
    10.244.3.0/24 via 10.96.141.234 dev ens160 proto bird
    10.244.4.0/24 via 10.96.141.235 dev ens160 proto bird
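In BGP mode each remote node's pod block appears as a `proto bird` route via that node's address, while the local block is a `blackhole` entry. The sketch below condenses `ip route` output into "pod subnet, next hop" pairs, which makes it easy to compare against the node list; `bird_routes` is a hypothetical helper and the input is sample text taken from the output shown in this section.

```shell
# Print "<pod subnet> <next hop>" for every remote block learned via BIRD,
# skipping the local blackhole route.
bird_routes() {
  awk '/proto bird/ && $1 != "blackhole" { print $1, $3 }'
}

# Demo on sample `ip route` lines.
printf '%s\n' \
  '10.244.0.0/24 via 10.96.141.233 dev ens160 proto bird' \
  'blackhole 10.244.1.0/24 proto bird' \
  '10.244.2.0/24 via 10.96.141.232 dev ens160 proto bird' | bird_routes

# On a node (not run here):
#   ip route | bird_routes
```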

Test with a pod

    kubectl create namespace test
    cat << EOF | kubectl create -f -
    apiVersion: v1
    kind: Pod
    metadata:
      name: network-test
      namespace: test
    spec:
      containers:
      - image: busybox:latest
        command:
        - sleep
        - "3600"
        name: network-test
    EOF

    root@node02:~/release-v3.11.2/k8s-manifests# kubectl get pod -o wide -n test
    NAME           READY   STATUS    RESTARTS   AGE     IP              NODE     NOMINATED NODE   READINESS GATES
    network-test   1/1     Running   3          3h57m   10.10.186.194   node03   <none>           <none>

At this point, the k8s installation is complete.

References

- kubernetes: https://blog.51cto.com/13740724/2412370
- calico: https://www.cnblogs.com/MrVolleyball/p/9920964.html
- calico: https://www.cnblogs.com/jinxj/p/9414830.html