课程引用自伯禹平台:https://www.boyuai.com/elites/course/cZu18YmweLv10OeV

《动手学深度学习》官方网址:http://zh.gluon.ai/ ——面向中文读者的能运行、可讨论的深度学习教科书。

Task 1: 线性回归、Softmax与分类

课程详细内容在https://www.boyuai.com/elites/course/cZu18YmweLv10OeV/jupyter/FUT2TsxGNn4g4JY1ayb1W

(Task2 RNN模型: https://www.cnblogs.com/haiyanli/p/12309289.html)

这里记录个别内容;

#以前未注意矢量计算

1. 矢量计算

在模型训练或预测时,我们常常会同时处理多个数据样本并用到矢量计算。在介绍线性回归的矢量计算表达式之前,让我们先考虑对两个向量相加的两种方法。

- 向量相加的一种方法是,将这两个向量按元素逐一做标量加法。

- 向量相加的另一种方法是,将这两个向量直接做矢量加法。

代码示例:import torch

import time

# init variable a, b as 1000 dimension vector

n = 1000

a = torch.ones(n)

b = torch.ones(n)

# define a timer class to record time

class Timer(object):

"""Record multiple running times."""

def __init__(self):

self.times = []

self.start()

def start(self):

# start the timer

self.start_time = time.time()

def stop(self):

# stop the timer and record time into a list

self.times.append(time.time() - self.start_time)

return self.times[-1]

def avg(self):

# calculate the average and return

return sum(self.times)/len(self.times)

def sum(self):

# return the sum of recorded time

return sum(self.times)

timer = Timer()

c = torch.zeros(n)

for i in range(n):

c[i] = a[i] + b[i]

'%.5f sec' % timer.stop()

timer.start()

d = a + b

'%.5f sec' % timer.stop()

运行后结果很明显,后者比前者运算速度更快。因此,我们应该尽可能采用矢量计算,以提升计算效率。

2、借鉴平台的Pytorch代码学习,多看,多练

import torch

from torch import nn

import numpy as np

torch.manual_seed(1)

#generate data

num_inputs = 2

num_examples = 1000

true_w = [2, -3.4]

true_b = 4.2

features = torch.tensor(np.random.normal(0, 1, (num_examples, num_inputs)), dtype=torch.float)

labels = true_w[0] * features[:, 0] + true_w[1] * features[:, 1] + true_b

labels += torch.tensor(np.random.normal(0, 0.01, size=labels.size()), dtype=torch.float)

#load data

import torch.utils.data as Data

batch_size = 10

# combine featues and labels of dataset

dataset = Data.TensorDataset(features, labels)

# put dataset into DataLoader

data_iter = Data.DataLoader(

dataset=dataset, # torch TensorDataset format

batch_size=batch_size, # mini batch size

shuffle=True, # whether shuffle the data or not

num_workers=0, # read data in multithreading

)

#define module

class LinearNet(nn.Module):

def __init__(self, n_feature):

super(LinearNet, self).__init__() # call father function to init

self.linear = nn.Linear(n_feature, 1) # function prototype: `torch.nn.Linear(in_features, out_features, bias=True)`

def forward(self, x):

y = self.linear(x)

return y

#load net

net = LinearNet(num_inputs)

print(net)

#define loss

loss = nn.MSELoss()

#define optimizer

import torch.optim as optim

optimizer = optim.SGD(net.parameters(), lr=0.03) # built-in random gradient descent function

#train

num_epochs = 3

for epoch in range(1, num_epochs + 1):

for X, y in data_iter:

output = net(X)

l = loss(output, y.view(-1, 1))

optimizer.zero_grad() # reset gradient, equal to net.zero_grad()

l.backward()

optimizer.step()

print('epoch %d, loss: %f' % (epoch, l.item()))

#view para

for param in net.parameters():

print(param)

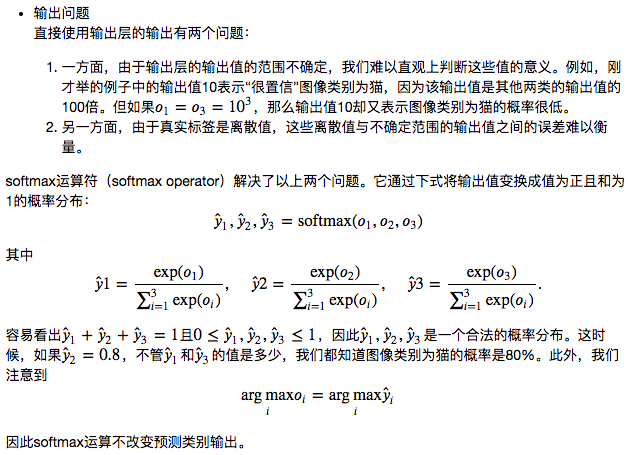

3、Softmax解决的神经网络输出带来的问题(来自课程内容)