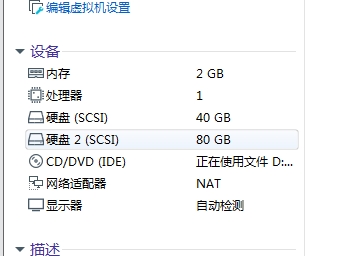

1. Add an 80G SCSI hard disk to the host

2. Create three primary partitions of 20G each

Device Boot Start End Blocks Id System

/dev/sdb1 2048 41945087 20971520 83 Linux

/dev/sdb2 41945088 83888127 20971520 83 Linux

/dev/sdb3 83888128 125831167 20971520 83 Linux
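fdisk prints Start and End as sector numbers and Blocks in 1 KiB units. As a quick cross-check, the sketch below recomputes each partition's size from its sector range. The 512-byte sector size and the helper name `part_kib` are assumptions, not part of the original walkthrough.

```shell
#!/bin/sh
# Hypothetical helper: size of a partition in KiB, computed from the
# Start/End sector numbers printed by fdisk (assumes 512-byte sectors).
part_kib() {
    start=$1
    end=$2
    sectors=$((end - start + 1))   # End is inclusive
    echo $((sectors * 512 / 1024))
}

# Values copied from the fdisk listing above
part_kib 2048 41945087        # /dev/sdb1
part_kib 41945088 83888127    # /dev/sdb2
part_kib 83888128 125831167   # /dev/sdb3
```

Each call prints 20971520, matching the Blocks column: 20971520 KiB / 1024 / 1024 = 20 GiB per partition.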

3. Convert the three primary partitions into physical volumes (pvcreate), then scan the system for physical volumes (pvscan)

[root@localhost ~]# pvcreate /dev/sdb[123]

Physical volume "/dev/sdb1" successfully created.

Physical volume "/dev/sdb2" successfully created.

Physical volume "/dev/sdb3" successfully created.
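`/dev/sdb[123]` relies on shell pathname expansion: `[123]` matches exactly one character from that set, and only names that actually exist are substituted. A small demonstration, with ordinary files in a temporary directory standing in for the device nodes (the directory and file names are purely illustrative):

```shell
#!/bin/sh
# Demonstrates how the shell expands the pattern used with pvcreate.
# Ordinary empty files in a temporary directory stand in for /dev/sdb1..4.
demo_dir=$(mktemp -d)
touch "$demo_dir/sdb1" "$demo_dir/sdb2" "$demo_dir/sdb3" "$demo_dir/sdb4"

# [123] matches one character out of 1, 2 or 3, so sdb4 is excluded
expanded=$(cd "$demo_dir" && echo sdb[123])
echo "$expanded"

# With no matching files, the pattern is passed on unexpanded
unmatched=$(cd "$demo_dir" && echo sdc[123])
echo "$unmatched"

rm -r "$demo_dir"
```

If the partition device nodes did not exist yet (for example, before the kernel re-read the partition table), pvcreate would receive the literal string `/dev/sdb[123]` as a device name.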

4. Create a volume group named myvg from two of the physical volumes and check its size

[root@localhost ~]# vgcreate myvg /dev/sdb[12]

Volume group "myvg" successfully created
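The size check itself (for example with `vgs myvg` or `vgdisplay myvg`) is not shown. As a rough estimate, the sketch below assumes LVM's default 4 MiB extent size and 1 MiB at the start of each PV reserved for metadata and alignment; both are assumptions, so treat the result as approximate and rely on vgdisplay for the real figure.

```shell
#!/bin/sh
# Rough estimate of the size of a volume group built from equal PVs.
# Assumptions (not taken from the tutorial): default 4 MiB physical
# extent size, and 1 MiB at the start of each PV used for LVM metadata.
PE_MIB=4
META_MIB=1

# Hypothetical helper: whole extents available on one PV of the given size
pv_extents() {
    echo $(( ($1 - META_MIB) / PE_MIB ))
}

per_pv=$(pv_extents 20480)       # one 20 GiB partition = 20480 MiB
vg_extents=$((per_pv * 2))       # myvg uses /dev/sdb1 and /dev/sdb2
vg_mib=$((vg_extents * PE_MIB))
echo "extents per PV: $per_pv"
echo "myvg: $vg_extents extents, $vg_mib MiB"
```

Under these assumptions myvg comes out at 10238 extents (40952 MiB), just under 40 GiB, so the 30G logical volume in the next step fits comfortably.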

5. Create a 30G logical volume named mylv

[root@localhost ~]# lvcreate -L 30G -n mylv myvg

Logical volume "mylv" created.
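`-L 30G` is converted into a whole number of physical extents. A sketch of that conversion, assuming the default 4 MiB extent size; `extents_for_mib` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: number of extents needed for a size in MiB.
# lvcreate rounds a requested size up to a whole number of extents.
extents_for_mib() {
    size_mib=$1
    pe_mib=${2:-4}   # assumption: default 4 MiB extent size
    echo $(( (size_mib + pe_mib - 1) / pe_mib ))
}

mylv_extents=$(extents_for_mib $((30 * 1024)))
echo "mylv (30G) needs $mylv_extents extents"
# Equivalent request by extent count instead of size (printed, not run):
echo "lvcreate -l $mylv_extents -n mylv myvg"
```

With 4 MiB extents, 30G is exactly 7680 extents. A size that does not divide evenly, such as 10 MiB, is rounded up (to 3 extents, i.e. 12 MiB).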

6. Format the logical volume with the XFS filesystem, mount it on /data, and create a file to test it

[root@localhost ~]# mkfs.xfs /dev/myvg/mylv

[root@localhost ~]# mkdir /data

[root@localhost ~]# mount /dev/myvg/mylv /data

[root@localhost ~]# mount

/dev/mapper/myvg-mylv on /data type xfs (rw,relatime,attr2,inode64,noquota)
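The mount output lists the volume as /dev/mapper/myvg-mylv rather than /dev/myvg/mylv. Both paths point to the same device-mapper device, whose name is the VG name and the LV name joined by a hyphen, with any hyphen inside either name doubled. A sketch of that naming rule (`dm_path` is a hypothetical helper):

```shell
#!/bin/sh
# Hypothetical helper: device-mapper path LVM creates for a logical volume.
# Hyphens inside the VG or LV name are doubled so the single hyphen
# between them stays unambiguous.
dm_path() {
    vg=$(printf '%s' "$1" | sed 's/-/--/g')
    lv=$(printf '%s' "$2" | sed 's/-/--/g')
    echo "/dev/mapper/${vg}-${lv}"
}

dm_path myvg mylv        # the volume mounted on /data
dm_path my-vg data-lv    # names that contain hyphens
```

The first call prints /dev/mapper/myvg-mylv, as in the mount output; the second prints /dev/mapper/my--vg-data--lv.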

7. Grow the logical volume to 35G, then grow the XFS filesystem to match

[root@localhost ~]# lvextend -L +5G /dev/myvg/mylv && xfs_growfs /dev/myvg/mylv

[root@localhost ~]# df -Th
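Before extending, it is worth confirming that myvg has enough free extents. The sketch assumes 4 MiB extents and a VG of about 10238 extents (two 20G PVs minus roughly 1 MiB of metadata each); neither figure appears in the original output, and `vgs myvg` reports the real free space.

```shell
#!/bin/sh
# Checks whether "lvextend -L +5G" fits in the free space of myvg.
# Assumptions: 4 MiB extents and a 10238-extent VG; read the real
# numbers from vgs/vgdisplay.
PE_MIB=4
vg_total=10238
lv_now=$((30 * 1024 / PE_MIB))     # mylv after step 5: 7680 extents
grow=$((5 * 1024 / PE_MIB))        # +5G = 1280 extents

vg_free=$((vg_total - lv_now))
if [ "$grow" -le "$vg_free" ]; then
    lv_new=$((lv_now + grow))
    echo "fits: mylv becomes $lv_new extents ($((lv_new * PE_MIB / 1024)) GiB), $((vg_free - grow)) extents left"
else
    echo "not enough free extents: need $grow, have $vg_free"
fi
```

`lvextend` also accepts `-r` (`--resizefs`), which grows the filesystem in the same command instead of running xfs_growfs separately. An XFS filesystem can be grown this way but cannot be shrunk.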

8. Edit /etc/fstab so the logical volume is mounted at boot with disk quota options enabled

[root@localhost ~]# vim /etc/fstab

Append this line at the end: /dev/myvg/mylv /data xfs defaults,usrquota,grpquota 0 0
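On XFS, quota accounting is switched on when the filesystem is mounted with these options; as far as I know it cannot be enabled through `mount -o remount`, so the entry takes effect after /data is unmounted and mounted again (or after a reboot). Since a broken fstab line can stop the system from booting cleanly, a quick sanity check may help. `check_fstab_line` is a hypothetical helper, not a standard tool:

```shell
#!/bin/sh
# Hypothetical helper: sanity-check one /etc/fstab entry before rebooting.
# It verifies there are six fields and that both quota options are present.
check_fstab_line() {
    printf '%s\n' "$1" | awk '
        NF != 6 { print "expected 6 fields, got " NF; exit 1 }
        {
            n = split($4, opts, ",")
            u = g = 0
            for (i = 1; i <= n; i++) {
                if (opts[i] == "usrquota") u = 1
                if (opts[i] == "grpquota") g = 1
            }
            if (!u || !g) { print "missing usrquota/grpquota"; exit 1 }
            print "ok: " $1 " on " $2 " (" $3 ")"
        }'
}

check_fstab_line "/dev/myvg/mylv /data xfs defaults,usrquota,grpquota 0 0"
```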

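9. Set up disk quotas: the user crushlinux gets a soft limit of 80M and a hard limit of 100M on file space, and a soft limit of 80 and a hard limit of 100 on the number of files.

The commands below apply these limits on /data1 (/dev/sdb3 formatted as ext4) rather than on the XFS volume mounted on /data.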

[root@localhost ~]# useradd crushlinux

[root@localhost ~]# tail -1 /etc/passwd

crushlinux:x:1001:1001::/home/crushlinux:/bin/bash

[root@localhost ~]# mkfs.ext4 /dev/sdb3

[root@localhost ~]# mkdir /data1

[root@localhost ~]# mount /dev/sdb3 /data1

[root@localhost ~]# mount -o remount,usrquota,grpquota /data1

[root@localhost ~]# mount |grep /data1

[root@localhost ~]# grep /dev/sdb3 /etc/mtab

/dev/sdb3 /data1 ext4 rw,seclabel,relatime,quota,usrquota,grpquota,data=ordered 0 0

[root@localhost ~]# vim /etc/fstab

Append this line at the end: /dev/sdb3 /data1 ext4 defaults,usrquota,grpquota 0 0

[root@localhost ~]# quotacheck -avug

[root@localhost ~]# ll /data1/a*

-rw-------. 1 root root 6144 Aug 2 08:19 /data1/aquota.group

-rw-------. 1 root root 6144 Aug 2 08:19 /data1/aquota.user

[root@localhost ~]# quotaon -auvg

/dev/sdb3 [/data1]: group quotas turned on

/dev/sdb3 [/data1]: user quotas turned on

[root@localhost ~]# edquota -u crushlinux
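The edquota editor session is not shown. Judging from the repquota report further down, the values entered were 81920 and 102400 in the block soft/hard columns and 80 and 100 in the inode soft/hard columns, because block limits are given in 1 KiB units. The sketch below does that conversion and prints the equivalent non-interactive `setquota` command from the same quota tools package (printed rather than executed):

```shell
#!/bin/sh
# edquota/setquota take block limits in 1 KiB units, so the MB limits from
# step 9 are converted, treating 1M as 1024 KiB (as repquota does).
mb_to_kib() {
    echo $(($1 * 1024))
}

block_soft=$(mb_to_kib 80)    # 81920
block_hard=$(mb_to_kib 100)   # 102400
inode_soft=80
inode_hard=100

# Non-interactive equivalent of the edquota session (printed, not run):
echo "setquota -u crushlinux $block_soft $block_hard $inode_soft $inode_hard /data1"
```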

10. Test the limits with touch and dd in /data1

[root@localhost ~]# chmod 777 /data1

[root@localhost ~]# su - crushlinux

[crushlinux@localhost ~]$ dd if=/dev/zero of=/data1/ceshi bs=1M count=90

sdb3: warning, user block quota exceeded.

90+0 records in

90+0 records out

94371840 bytes (94 MB) copied, 0.0962798 s, 980 MB/s

[crushlinux@localhost ~]$ touch /data1/{1..90}.txt

sdb3: warning, user file quota exceeded.
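Both commands print warnings rather than errors because the usage lands between the soft and hard limits. A simplified sketch of that decision; `quota_state` is a hypothetical helper and only approximates what the kernel's quota code checks:

```shell
#!/bin/sh
# Hypothetical helper: simplified view of how a quota check treats a usage
# figure (KiB or file count) against its soft and hard limits.
quota_state() {
    used=$1 soft=$2 hard=$3
    if [ "$used" -gt "$hard" ]; then
        echo "over hard limit: refused"
    elif [ "$used" -gt "$soft" ]; then
        echo "over soft limit: warning, grace period starts"
    else
        echo "within limits"
    fi
}

dd_kib=$((90 * 1024))   # dd bs=1M count=90 writes 92160 KiB
files=$((1 + 90))       # ceshi plus 1.txt .. 90.txt
echo "blocks: $(quota_state "$dd_kib" 81920 102400)"
echo "files:  $(quota_state "$files" 80 100)"
```

92160 KiB is above the 81920 KiB soft limit but below the 102400 KiB hard limit, and 91 files is above 80 but below 100, so the writes succeed and the grace period starts. Writing past 102400 KiB or creating more than 100 files would instead fail with "Disk quota exceeded".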

[root@localhost ~]# repquota -auvs

*** Report for user quotas on device /dev/sdb3

Block grace time: 7days; Inode grace time: 7days

Space limits File limits

User used soft hard grace used soft hard grace

----------------------------------------------------------------------

root -- 20K 0K 0K 2 0 0

crushlinux ++ 92160K 81920K 100M 7days 91 80 100 7days

Statistics:

Total blocks: 7

Data blocks: 1

Entries: 2

Used average: 2.000000
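In the repquota report, the two-character column after the user name shows limit status: the first character refers to blocks (space), the second to files (inodes), and `+` means usage is over the soft limit. The `-s` option prints sizes in human-readable units, which is why this report shows 92160K and 100M. A small decoder; `decode_flags` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: explain the two-character flag column of repquota.
# First character: block (space) limit; second: file (inode) limit.
# '+' means usage is over the soft limit, '-' means it is not.
decode_flags() {
    b=$(printf '%s' "$1" | cut -c1)
    f=$(printf '%s' "$1" | cut -c2)
    [ "$b" = "+" ] && bmsg="blocks over soft limit" || bmsg="blocks ok"
    [ "$f" = "+" ] && fmsg="files over soft limit" || fmsg="files ok"
    echo "$bmsg; $fmsg"
}

decode_flags "++"   # crushlinux
decode_flags "--"   # root
```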

11. Check quota usage from the user's point of view

[root@localhost ~]# su - crushlinux

Last login: Fri Aug 2 08:24:52 CST 2019 on pts/1

[crushlinux@localhost ~]$ quota

Disk quotas for user crushlinux (uid 1001):

Filesystem blocks quota limit grace files quota limit grace

/dev/sdb3 92160* 81920 102400 6days 91* 80 100 6days
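`quota` marks a usage figure with `*` when it is over its soft limit, and the grace column (6days here) shows how long remains before the soft limit is enforced like a hard one. The sketch extracts those figures from one line of output. It assumes the filesystem name fits on the same line (true for /dev/sdb3) and that an empty grace column simply drops out of the whitespace-separated fields; `quota_summary` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: summarise one line of `quota` output.
# A trailing '*' on a usage value means it is over its soft limit.
quota_summary() {
    printf '%s\n' "$1" | awk '{
        blocks = $2
        i = 5                          # files column when no block grace is shown
        if (blocks ~ /\*$/) i = 6      # block grace column present: shift by one
        files = $i
        bover = sub(/\*$/, "", blocks) # sub() returns 1 if a trailing * was removed
        fover = sub(/\*$/, "", files)
        printf "blocks %d KiB (soft %d)%s, files %d (soft %d)%s\n",
            blocks, $3, (bover ? " OVER" : ""), files, $(i + 1), (fover ? " OVER" : "")
    }'
}

quota_summary "/dev/sdb3 92160* 81920 102400 6days 91* 80 100 6days"
```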

12. Check quota usage from the filesystem's point of view

[crushlinux@localhost ~]$ su - root

Password:

Last login: Fri Aug 2 08:27:01 CST 2019 on pts/1

[root@localhost ~]# repquota /data1

*** Report for user quotas on device /dev/sdb3

Block grace time: 7days; Inode grace time: 7days

Block limits File limits

User used soft hard grace used soft hard grace

----------------------------------------------------------------------

root -- 20 0 0 2 0 0

crushlinux ++ 92160 81920 102400 6days 91 80 100 6days
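Plain `repquota` (without `-s`) reports block usage in 1 KiB units. A sketch computing the headroom crushlinux has left before the hard limits, using the row above. It assumes both grace columns are filled in, as they are here, since a blank grace column would shift the field positions.

```shell
#!/bin/sh
# Headroom left before the hard limits, from the crushlinux row of
# `repquota /data1` (block values are in 1 KiB units).
row="crushlinux ++ 92160 81920 102400 6days 91 80 100 6days"

set -- $row                       # split the row into positional parameters
block_left_kib=$(($5 - $3))       # block hard limit - blocks used
file_left=$(($9 - $7))            # file hard limit - files used (valid only with both grace columns present)
echo "space left: $block_left_kib KiB ($((block_left_kib / 1024)) MiB)"
echo "files left: $file_left"
```

For this row that is 10240 KiB (10 MiB) of space and 9 more files before writes are refused.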