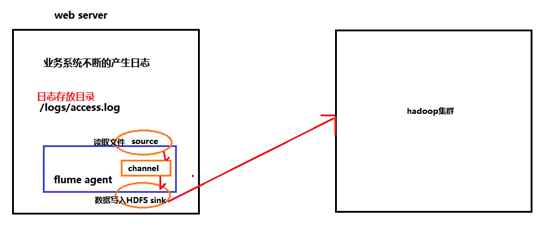

采集需求:比如业务系统使用log4j生成的日志,日志内容不断增加,需要把追加到日志文件中的数据实时采集到hdfs

根据需求,首先定义以下3大要素

l 采集源,即source——监控文件内容更新 : exec ‘tail -F file’

l 下沉目标,即sink——HDFS文件系统 : hdfs sink

l Source和sink之间的传递通道——channel,可用file channel 也可以用 内存channel

vi exec-hdfs-sink.conf

agent1.sources = source1

agent1.sinks = sink1

agent1.channels = channel1

# Describe/configure tail -F source1

agent1.sources.source1.type = exec

agent1.sources.source1.command = tail -F /root/logs/access_log

agent1.sources.source1.channels = channel1

#configure host for source

agent1.sources.source1.interceptors = i1 i2

agent1.sources.source1.interceptors.i1.type = host

agent1.sources.source1.interceptors.i1.hostHeader = hostname

#agent1.sources.source1.interceptors.i1.useIP=true 表示使用ip地址或者主机名

agent1.sources.source1.interceptors.i1.useIP=false

agent1.sources.source1.interceptors.i2.type = timestamp

# Describe sink1

agent1.sinks.sink1.type = hdfs

#a1.sinks.k1.channel = c1

agent1.sinks.sink1.hdfs.path=hdfs://mini1:9000/file/%{hostname}/%y-%m-%d/%H-%M

agent1.sinks.sink1.hdfs.filePrefix = access_log

agent1.sinks.sink1.hdfs.batchSize= 100

agent1.sinks.sink1.hdfs.fileType = DataStream

agent1.sinks.sink1.hdfs.writeFormat =Text

agent1.sinks.sink1.hdfs.rollSize = 10240

agent1.sinks.sink1.hdfs.rollCount = 1000

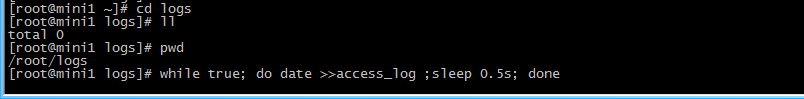

模拟数据

mkdir logs

cd logs

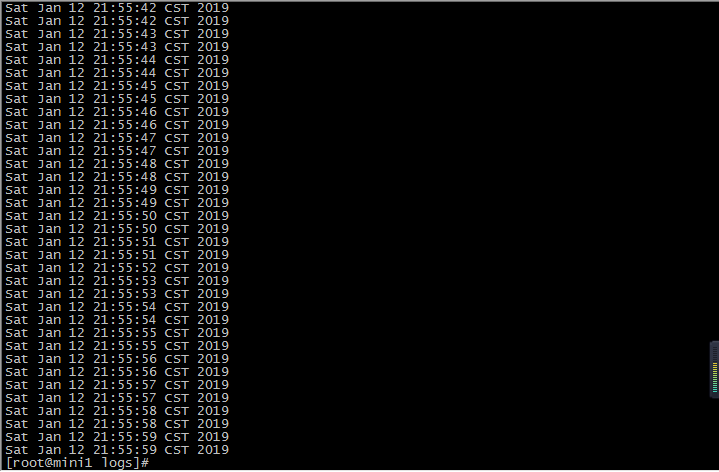

while true; do date >>access_log ;sleep 0.5s; done

启动

bin/flume-ng agent -c conf -f conf/exec-hdfs-sink.conf -n agent1 -Dflume.root.logger=INFO,console

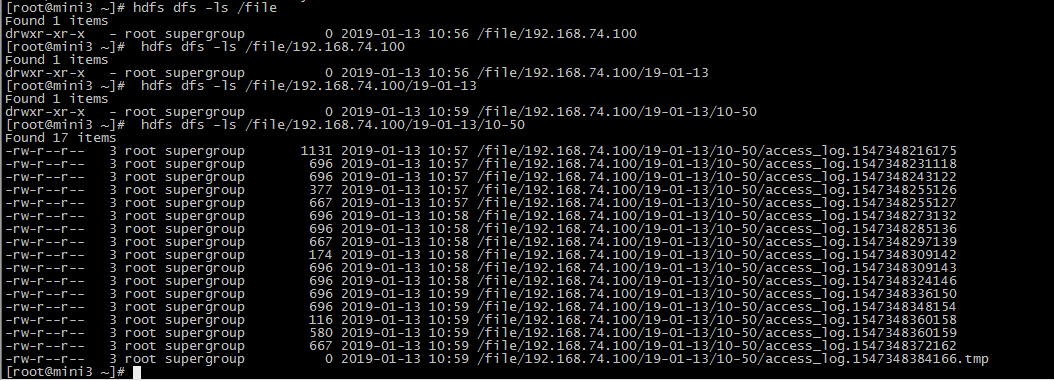

查看结果