Elasticsearch is a search engine used to search, analyze, and store logs.

Logstash collects logs, parses them into JSON, and hands them to Elasticsearch.

Kibana is a data visualization component that presents the processed results through a web interface.

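Beats serves here as a lightweight log shipper. Early ELK architectures used Logstash to both collect and parse logs, but Logstash consumes a lot of memory, CPU, and I/O. Compared with Logstash, the CPU and memory footprint of Beats is almost negligible.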

1. Prepare the environment and installation packages

hostname:linux-elk1 ip:10.0.0.22

hostname:linux-elk2 ip:10.0.0.33

# Keep /etc/hosts identical on both machines, and disable the firewall and SELinux

cat /etc/hosts

10.0.0.22 linux-elk1

10.0.0.33 linux-elk2
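The two host entries can be added idempotently, so that re-running the setup on either machine does not duplicate lines. This is a minimal sketch rather than part of the original procedure; `ensure_hosts_entry` is a hypothetical helper name, not an existing tool.

```shell
# ensure_hosts_entry FILE IP NAME
# Hypothetical helper: appends "IP NAME" to FILE unless NAME already appears in it.
ensure_hosts_entry() {
    local hosts_file="$1" ip="$2" name="$3"
    # -F: literal match, -w: whole word only, -q: only set the exit status
    if ! grep -qwF -- "$name" "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Usage on both machines (needs root):
#   ensure_hosts_entry /etc/hosts 10.0.0.22 linux-elk1
#   ensure_hosts_entry /etc/hosts 10.0.0.33 linux-elk2
```

The check is deliberately simple: it only looks for the hostname, so an existing entry that maps the name to a different IP is left untouched.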

systemctl disable firewalld.service

systemctl disable NetworkManager

echo "*/5 * * * * /usr/sbin/ntpdate time1.aliyun.com &>/dev/null" >>/var/spool/cron/root

systemctl restart crond.service

cd /usr/local/src

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.4.0.rpm

wget https://artifacts.elastic.co/downloads/kibana/kibana-5.4.0-x86_64.rpm

wget https://artifacts.elastic.co/downloads/logstash/logstash-5.4.0.rpm

ll

total 342448

-rw-r--r-- 1 root root 33211227 May 15 2018 elasticsearch-5.4.0.rpm

-rw-r--r-- 1 root root 167733100 Feb 3 12:13 jdk-8u121-linux-x64.rpm

-rw-r--r-- 1 root root 56266315 May 15 2018 kibana-5.4.0-x86_64.rpm

-rw-r--r-- 1 root root 93448667 May 15 2018 logstash-5.4.0.rpm

# Install and configure Elasticsearch

yum -y install jdk-8u121-linux-x64.rpm elasticsearch-5.4.0.rpm

grep "^[a-zA-Z]" /etc/elasticsearch/elasticsearch.yml

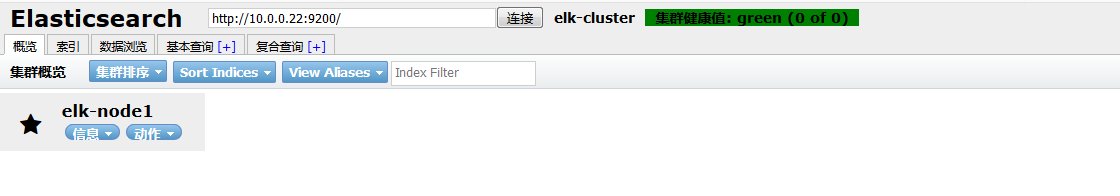

cluster.name: elk-cluster

node.name: elk-node1

path.data: /data/elkdata

path.logs: /data/logs

bootstrap.memory_lock: true

network.host: 10.0.0.22

http.port: 9200

discovery.zen.ping.unicast.hosts: ["10.0.0.22", "10.0.0.33"]

mkdir -p /data/{elkdata,logs}

chown -R elasticsearch:elasticsearch /data

# Uncomment the following line in the unit file /usr/lib/systemd/system/elasticsearch.service, then run systemctl daemon-reload

LimitMEMLOCK=infinity
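Files under /usr/lib/systemd/system may be replaced when the package is upgraded, so the same limit can also be set from a systemd drop-in instead. This is a sketch and not the original author's method. It assumes the standard drop-in directory /etc/systemd/system/elasticsearch.service.d, and `write_memlock_override` is a hypothetical helper name.

```shell
# write_memlock_override DIR
# Hypothetical helper: writes a drop-in file that sets LimitMEMLOCK=infinity.
write_memlock_override() {
    local dropin_dir="$1"
    mkdir -p "$dropin_dir"
    printf '[Service]\nLimitMEMLOCK=infinity\n' > "$dropin_dir/override.conf"
}

# Usage (needs root):
#   write_memlock_override /etc/systemd/system/elasticsearch.service.d
#   systemctl daemon-reload   # make systemd re-read the unit definition
```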

# Set both the minimum and maximum heap size to 3g

grep -vE "^$|#" /etc/elasticsearch/jvm.options

-Xms3g

-Xmx3g

...
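Instead of editing jvm.options by hand, the two heap lines can be rewritten with sed. A minimal sketch, assuming GNU sed (for `-i`) and that the file already contains uncommented `-Xms`/`-Xmx` lines; `set_heap_size` is a hypothetical helper name.

```shell
# set_heap_size FILE SIZE
# Hypothetical helper: sets both -Xms and -Xmx in FILE to SIZE (e.g. 3g).
set_heap_size() {
    local jvm_options="$1" size="$2"
    # Only lines that start with -Xms / -Xmx are rewritten; commented lines stay as-is
    sed -i -E "s/^-Xms.*/-Xms${size}/; s/^-Xmx.*/-Xmx${size}/" "$jvm_options"
}

# Usage (needs root):
#   set_heap_size /etc/elasticsearch/jvm.options 3g
```

Keeping the minimum and maximum equal, as the original configuration does, avoids heap resizing at runtime.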

systemctl restart elasticsearch.service

tail /data/logs/elk-cluster.log # the service is up once "started" appears in the last few lines

Open http://10.0.0.22:9200/ in a browser; if the Elasticsearch node information is returned, the service is working.

To verify the service from the Linux command line: curl 'http://10.0.0.22:9200/_cluster/health?pretty=true'
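The health response is JSON whose `status` field is `green`, `yellow`, or `red`. For scripted checks it can help to pull out just that field. A minimal sketch using sed; `cluster_status` is a hypothetical helper name, and a real JSON parser such as jq would be more robust if it is available.

```shell
# cluster_status: reads a _cluster/health JSON response on stdin
# and prints the value of its "status" field.
cluster_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Usage:
#   curl -s 'http://10.0.0.22:9200/_cluster/health?pretty=true' | cluster_status
```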

2. Install the Elasticsearch head plugin

wget https://nodejs.org/dist/v8.10.0/node-v8.10.0-linux-x64.tar.xz

tar xf node-v8.10.0-linux-x64.tar.xz

mv node-v8.10.0-linux-x64 /usr/local/node

vim /etc/profile

export NODE_HOME=/usr/local/node

export PATH=$PATH:$NODE_HOME/bin

source /etc/profile

which node

/usr/local/node/bin/node

which npm

/usr/local/node/bin/npm

npm install -g cnpm --registry=https://registry.npm.taobao.org # creates the cnpm command

/usr/local/node/bin/cnpm -> /usr/local/node/lib/node_modules/cnpm/bin/cnpm

npm install -g grunt-cli --registry=https://registry.npm.taobao.org # creates the grunt command

/usr/local/node/bin/grunt -> /usr/local/node/lib/node_modules/grunt-cli/bin/grunt

wget https://github.com/mobz/elasticsearch-head/archive/master.zip

unzip master.zip

cd elasticsearch-head-master/

vim Gruntfile.js

connect: {
    server: {
        options: {
            hostname: '10.0.0.22',
            port: 9100,
            base: '.',
            keepalive: true
        }
    }
}

vim _site/app.js

On line 4360, change "http://localhost:9200" to "http://10.0.0.22:9200".

cnpm install

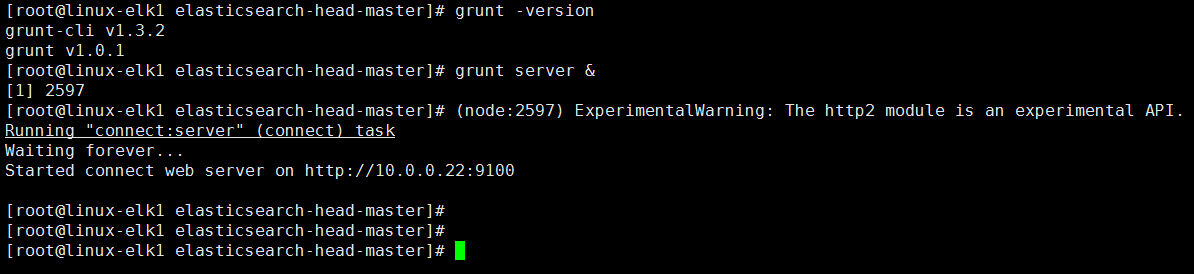

grunt --version

vim /etc/elasticsearch/elasticsearch.yml # add the following two lines

http.cors.enabled: true

http.cors.allow-origin: "*"

systemctl restart elasticsearch

systemctl enable elasticsearch
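After the restart, one way to confirm that CORS is active is to send a request with an `Origin` header and look for `Access-Control-Allow-Origin` in the response headers. This sketch assumes Elasticsearch returns that header for such requests once `http.cors.enabled` is true; `has_cors_header` is a hypothetical helper name.

```shell
# has_cors_header: reads HTTP response headers on stdin and succeeds
# if an Access-Control-Allow-Origin header is present (case-insensitive).
has_cors_header() {
    grep -qi '^access-control-allow-origin:'
}

# Usage (-D - prints the response headers, -o /dev/null discards the body):
#   curl -s -o /dev/null -D - -H 'Origin: http://10.0.0.22:9100' http://10.0.0.22:9200/ \
#       | has_cors_header && echo "CORS enabled"
```

Note that `http.cors.allow-origin: "*"` accepts requests from any origin; on anything other than a test machine it is safer to restrict it to the address that serves head.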

grunt server &

In Elasticsearch 2.x and earlier, the head plugin could be installed with:

/usr/share/elasticsearch/bin/plugin install mobz/elasticsearch-head

In Elasticsearch 5.x and later, it has to be installed through npm.

Starting the plugin with Docker (assuming Elasticsearch is already installed)

yum -y install docker

systemctl start docker && systemctl enable docker

docker run -p 9100:9100 mobz/elasticsearch-head:5

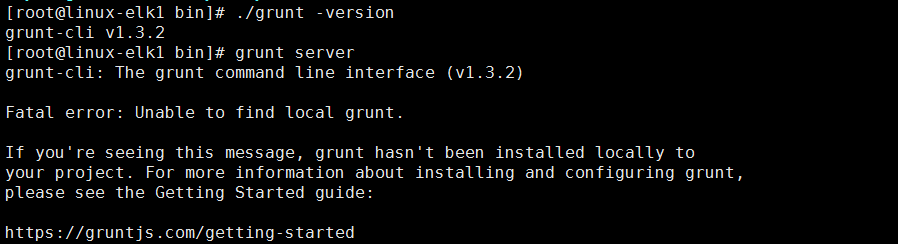

Error: Fatal error: Unable to find local grunt.

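Most answers online suggest npm install grunt --save-dev, which is the fix when grunt has not been installed at all. In the steps above grunt is already installed; the error appears only because the command was not started from the project directory. Change into the project directory (elasticsearch-head-master/) and run grunt server & there.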

3. Deploying Logstash and basic syntax

cd /usr/local/src

yum -y install logstash-5.4.0.rpm

# Run in the foreground with the rubydebug codec to display the output and test

/usr/share/logstash/bin/logstash -e 'input { stdin {} } output { stdout { codec => rubydebug} }'

The stdin plugin is now waiting for input:

00:37:04.711 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

hello

{

"@timestamp" => 2019-02-03T16:43:48.149Z,

"@version" => "1",

"host" => "linux-elk1",

"message" => "hello"

}

# Test output to a file

/usr/share/logstash/bin/logstash -e 'input { stdin {} } output { file { path => "/tmp/test-%{+YYYY.MM.dd}.log"} }'

# Enable gzip-compressed output (the result is a gzip stream, not a tar archive, so name it .log.gz)

/usr/share/logstash/bin/logstash -e 'input { stdin {} } output{ file { path => "/tmp/test-%{+YYYY.MM.dd}.log.gz" gzip => true } }'

# Test output to Elasticsearch

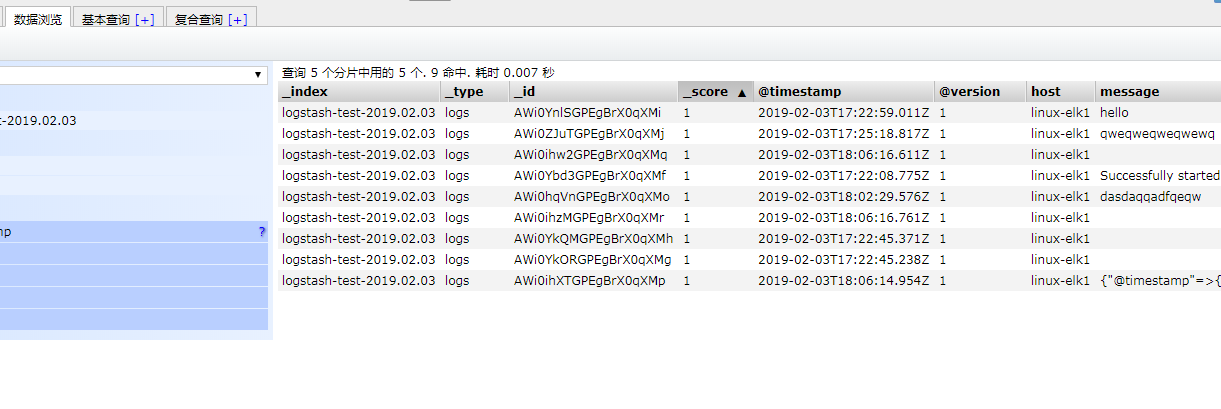

/usr/share/logstash/bin/logstash -e 'input { stdin {} } output { elasticsearch { hosts => ["10.0.0.22:9200"] index => "logstash-test-%{+YYYY.MM.dd}" } }'
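For anything longer than a one-liner, the same pipeline is easier to keep in a file and load with `-f`. The sketch below writes the stdin-to-Elasticsearch pipeline from the command above to a file. The helper name `write_test_pipeline` and the path /tmp/stdin-to-es.conf are hypothetical choices for illustration.

```shell
# write_test_pipeline PATH
# Hypothetical helper: writes the stdin -> elasticsearch pipeline to PATH.
write_test_pipeline() {
    local conf_path="$1"
    # The quoted 'EOF' keeps %{+YYYY.MM.dd} literal (no shell expansion)
    cat > "$conf_path" <<'EOF'
input {
  stdin {}
}
output {
  elasticsearch {
    hosts => ["10.0.0.22:9200"]
    index => "logstash-test-%{+YYYY.MM.dd}"
  }
}
EOF
}

# Usage:
#   write_test_pipeline /tmp/stdin-to-es.conf
#   /usr/share/logstash/bin/logstash -f /tmp/stdin-to-es.conf -t   # check the syntax and exit
#   /usr/share/logstash/bin/logstash -f /tmp/stdin-to-es.conf      # run in the foreground
```

Pipelines meant for the logstash service normally live under /etc/logstash/conf.d/ and would use an input other than stdin, since a service has no terminal to read from.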

systemctl enable logstash

systemctl restart logstash

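When deleting data, delete it through this web interface. Never delete it from the data directory on the server: every node in the cluster holds a copy of this data, and removing it on one node may prevent Elasticsearch from starting.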
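Besides the web interface, an index can also be removed through Elasticsearch's delete index REST endpoint (`DELETE /<index>`), which likewise lets the cluster remove the data consistently on all nodes. A minimal sketch: `delete_index` is a hypothetical helper name, and the guard against empty names, `_all`, and wildcards is an added safety measure, not something Elasticsearch requires.

```shell
# delete_index HOST:PORT INDEX
# Hypothetical helper: deletes exactly one named index through the REST API.
delete_index() {
    local es_host="$1" index="$2"
    case "$index" in
        ""|_all|*\**)
            # Refuse anything that could match more than one index
            echo "refusing to delete index pattern: '$index'" >&2
            return 1
            ;;
    esac
    curl -s -XDELETE "http://${es_host}/${index}"
}

# Usage (the index name is only an example):
#   delete_index 10.0.0.22:9200 logstash-test-2019.02.03
```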

Elasticsearch environment preparation, reference blog: http://blog.51cto.com/jinlong/2054787

Logstash deployment and basic syntax, reference blog: http://blog.51cto.com/jinlong/2055024