1. Batch-scraping a whole site's novels with Python

The target is a novel on this site: http://www.shuquge.com/txt/89644/index.html

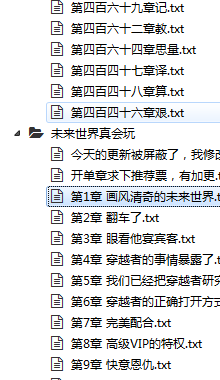

2. Scraping one book

```python
# -*- coding: utf-8 -*-
"""
Created on Sat Feb  8 20:31:43 2020

@author: douzi
"""
import os
import re
import time

import requests
from parsel import Selector


def main():
    index_url = 'http://www.shuquge.com/txt/89644/index.html'  # the novel to scrape
    tpl = 'http://www.shuquge.com/txt/89644/'
    headers = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36"}
    out_dir = './jiuxin/'
    os.makedirs(out_dir, exist_ok=True)  # open() does not create missing directories

    # Fetch the novel's table-of-contents page
    urllist = requests.get(index_url, headers=headers, timeout=30)
    urllist.encoding = urllist.apparent_encoding
    index_sel = Selector(urllist.text)
    # <div class="listmain"> <dl><dt>《九星毒奶》最新章节</dt><dd><a href="29287710.html">1040 养龙皮?</a></dd>
    index = index_sel.css('.listmain a::attr(href)').getall()

    # Save the chapters (the loop covers every link found in the index)
    for n in index:
        url = tpl + n
        # Chapter n
        response = requests.get(url, headers=headers, timeout=30)
        response.encoding = response.apparent_encoding
        print(response.request.url)
        # Extract data from the HTML with XPath / CSS selectors
        # (candidate libraries: lxml, pyquery, parsel)
        sel = Selector(response.text)
        title = sel.css('h1::text').get()
        print(title)
        # Name the file after the leading chapter number. Use '+' rather than
        # '*' so that a title without a number is skipped instead of producing
        # a file called ".txt".
        match = re.match(r'[0-9]+', title.strip()) if title else None
        if match:
            with open(out_dir + match.group(0) + '.txt', 'w', encoding='utf-8') as f:
                f.write(title)
                # <div id="content" class="showtxt">
                for line in sel.css('#content::text').getall():
                    f.write(line)
        time.sleep(0.5)  # pause between requests


if __name__ == '__main__':
    main()
```

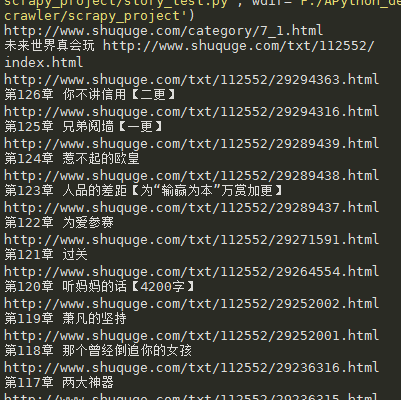

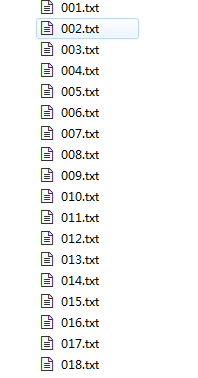
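The script in this section does two small things per chapter: it turns the relative `href` from the index page (for example `29287710.html`) into a full URL, and it names the output file after the number at the start of the chapter title. The sketch below isolates those two steps using only the standard library. The helper names `chapter_url` and `chapter_filename` are hypothetical and do not appear in the original script, and `urljoin` is swapped in here for the original string concatenation.

```python
import re
from urllib.parse import urljoin


def chapter_url(index_url, href):
    """Resolve a relative chapter link against the index page URL."""
    return urljoin(index_url, href)


def chapter_filename(title):
    """Return '<number>.txt' from a title such as '1040 养龙皮?'.

    Returns None when the title is missing or does not start with digits,
    so the caller can skip the chapter instead of writing '.txt'.
    """
    if not title:
        return None
    match = re.match(r'[0-9]+', title.strip())
    return match.group(0) + '.txt' if match else None


if __name__ == '__main__':
    index_url = 'http://www.shuquge.com/txt/89644/index.html'
    print(chapter_url(index_url, '29287710.html'))
    # http://www.shuquge.com/txt/89644/29287710.html
    print(chapter_filename('1040 养龙皮?'))
    # 1040.txt
```

Resolving links with `urljoin` also keeps working if the site ever emits absolute links, which plain concatenation would not.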

3. Scraping one category

```python
# -*- coding: utf-8 -*-
"""
Created on Sat Feb  8 20:31:43 2020

@author: douzi
"""
import os
import re
import time

import requests
from parsel import Selector

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36"}


# Download one chapter
def download_one_chapter(url, book_name):
    # Chapter n
    response = requests.get(url, headers=headers, timeout=30)
    response.encoding = response.apparent_encoding
    print(response.request.url)
    # Extract data from the HTML with XPath / CSS selectors
    # (candidate libraries: lxml, pyquery, parsel)
    sel = Selector(response.text)
    title = sel.css('h1::text').get()
    print(title)
    if not title:
        return  # no <h1> found, nothing to name the file after
    with open('./' + book_name + '/' + title + '.txt', 'a+', encoding='utf-8') as f:
        f.write(title)
        # <div id="content" class="showtxt">
        for line in sel.css('#content::text').getall():
            f.write(line)
        f.write('\n')
    time.sleep(0.5)  # pause between requests


# Download one book
def download_one_book(index_url, bname):
    # index_url = 'http://www.shuquge.com/txt/89644/index.html'  # the novel to scrape, e.g. 九星毒奶
    book_id = re.split('/', index_url)[-2]  # e.g. 89644
    tpl = 'http://www.shuquge.com/txt/' + book_id + '/'
    # Fetch the novel's table-of-contents page
    urllist = requests.get(index_url, headers=headers, timeout=30)
    urllist.encoding = urllist.apparent_encoding
    index_sel = Selector(urllist.text)
    # <div class="listmain"> <dl><dt>《九星毒奶》最新章节</dt><dd><a href="29287710.html">1040 养龙皮?</a></dd>
    index = index_sel.css('.listmain a::attr(href)').getall()
    for n in index:
        url = tpl + n
        download_one_chapter(url, bname)


# Download one category
def download_one_category():
    tpl = 'http://www.shuquge.com/category/7_{}.html'  # the category to scrape
    # Three pages
    for page in range(1, 4):
        category_url = tpl.format(page)
        print(category_url)
        # Fetch the category listing page
        cate_list = requests.get(category_url, headers=headers, timeout=30)
        cate_list.encoding = cate_list.apparent_encoding
        index_sel = Selector(cate_list.text)
        books_url = index_sel.css('span.s2 a::attr(href)').getall()
        books_name = index_sel.css('span.s2 a::text').getall()
        # Pair each URL with its title by position; list.index() would return
        # the wrong title if the same URL appeared twice.
        for book_url, book_name in zip(books_url, books_name):
            # e.g. 变成随身老奶奶 http://www.shuquge.com/txt/109203/index.html
            print(book_name, book_url)
            # Create the book's directory if it is missing. The original code
            # removed an existing directory without recreating it, so the
            # chapter writes that followed failed.
            os.makedirs('./' + book_name, exist_ok=True)
            # Download one book
            download_one_book(book_url, book_name)


if __name__ == '__main__':
    # download_one_book('asd')
    download_one_category()
```
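The category script names each directory after the book title and each file after the full chapter title. Titles such as `1040 养龙皮?` contain characters (`?` here) that Windows does not allow in file names, so `open()` can fail there. A minimal sketch of one way to guard against that is shown below; `safe_filename` and its `fallback` parameter are hypothetical names introduced for illustration, not part of the original script.

```python
import re

# Characters Windows rejects in file names, plus control whitespace.
_UNSAFE = re.compile(r'[\\/:*?"<>|\r\n\t]')


def safe_filename(title, fallback='untitled'):
    """Replace characters that are unsafe in file names with '_'.

    Leading and trailing spaces and dots are stripped as well; if nothing
    usable remains (or title is None), the fallback name is returned.
    """
    cleaned = _UNSAFE.sub('_', title or '').strip(' .')
    return cleaned or fallback


if __name__ == '__main__':
    print(safe_filename('1040 养龙皮?'))
    # 1040 养龙皮_
    print(safe_filename('a/b:c'))
    # a_b_c
```

In the script, the result of `safe_filename(title)` and `safe_filename(book_name)` could be used wherever a title is concatenated into a path.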