Assignment ①

1) Experiment: crawling all images from the China Weather website (weather.com.cn)

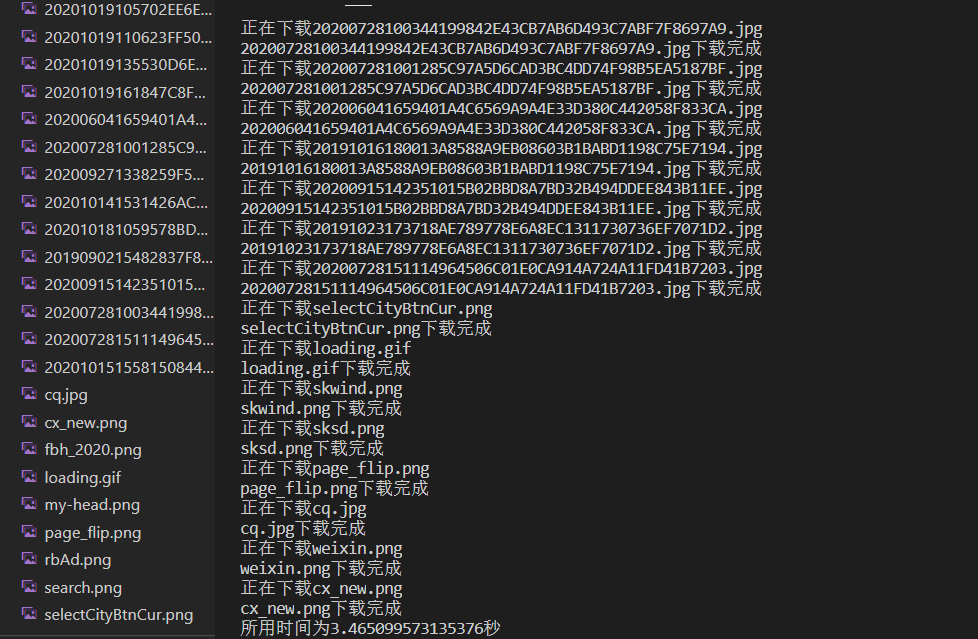

(1) Single-threaded

Code

    import os
    import time
    from urllib.parse import urljoin

    import requests
    from bs4 import BeautifulSoup

    # Create the output directory if it does not exist yet
    if not os.path.exists('./image1'):
        os.mkdir('./image1')

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"
    }
    url = "http://www.weather.com.cn/"
    data_url_list = []

    start_time = time.time()
    response = requests.get(url=url, headers=headers)
    page_text = response.text
    soup = BeautifulSoup(page_text, "lxml")
    img_list = soup.select("img")
    for img in img_list:
        # Skip <img> tags without a src; resolve relative URLs against the page URL
        detail_url = img.get("src")
        if detail_url:
            data_url_list.append(urljoin(url, detail_url))
    # print(data_url_list)

    # Download the images one after another
    for data_url in data_url_list:
        data = requests.get(url=data_url, headers=headers).content
        name = data_url.split('/')[-1]
        path_name = './image1/' + name
        print("Downloading " + name)
        with open(path_name, "wb") as fp:
            fp.write(data)
        print(name + " downloaded")
    end_time = time.time()
    print("Elapsed time: " + str(end_time - start_time) + " seconds")

Results:

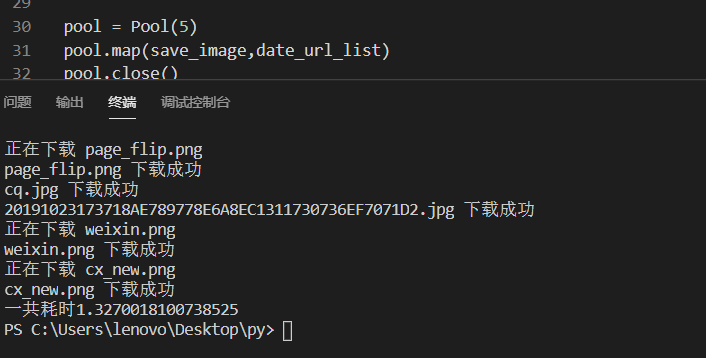

(2) Multi-threaded

Code

    import os
    import time
    from multiprocessing.dummy import Pool  # thread-based pool
    from urllib.parse import urljoin

    import requests
    from bs4 import BeautifulSoup

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"
    }
    if not os.path.exists('./image2'):
        os.mkdir('./image2')

    url = "http://www.weather.com.cn/"
    start_time = time.time()
    page_text = requests.get(url=url, headers=headers).text
    soup = BeautifulSoup(page_text, "lxml")
    img_list = soup.select("img")
    data_url_list = []
    for img in img_list:
        # Skip <img> tags without a src; resolve relative URLs against the page URL
        data_url = img.get("src")
        if data_url:
            data_url_list.append(urljoin(url, data_url))


    def save_image(image_url):
        # Request the image itself (image_url), not the home page (url)
        data = requests.get(url=image_url, headers=headers).content
        name = image_url.split('/')[-1]
        print("Downloading", name)
        path_name = './image2/' + name
        with open(path_name, "wb") as fp:
            fp.write(data)
        print(name, "downloaded")


    pool = Pool(5)  # thread pool with 5 workers
    pool.map(save_image, data_url_list)
    pool.close()
    pool.join()
    end_time = time.time()
    print("Total time: " + str(end_time - start_time))

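Results:

2) Reflections

The textbook code is fairly verbose and tiring to read, so I wrote my own version. The experiment shows that multi-threaded crawling takes less time: each blocking operation (here, an HTTP download) can run in its own thread, so the blocking calls overlap instead of running one after another. I used a thread pool. Compared with some classmates' results, my run still seemed to take noticeably longer, but a pool reuses its threads, which reduces how often the system has to create and destroy them and so lowers the overhead. The drawback is that the number of threads in the pool is capped (I set the pool size to 5).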
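To make the timing argument concrete, here is a minimal sketch that needs no network access. `fake_download` is a hypothetical stand-in for a blocking request (it only sleeps), and the file names are made up; the pool is the same thread-based `multiprocessing.dummy.Pool` used in the script.

```python
import time
from multiprocessing.dummy import Pool  # thread-based Pool


def fake_download(name):
    """Hypothetical stand-in for a blocking HTTP request (no network)."""
    time.sleep(0.05)
    return name.upper()


names = ["a.png", "b.png", "c.png", "d.png", "e.png"]

# Sequential: the five waits add up
start = time.time()
sequential = [fake_download(n) for n in names]
sequential_time = time.time() - start

# Pooled: up to 5 waits overlap; map() still returns results in input order
start = time.time()
with Pool(5) as pool:
    pooled = pool.map(fake_download, names)
pooled_time = time.time() - start

print("sequential: %.2fs, pooled: %.2fs" % (sequential_time, pooled_time))
```

With more tasks than workers, the extra tasks simply wait for a free thread, which is the pool-size limit mentioned above.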

Assignment ②

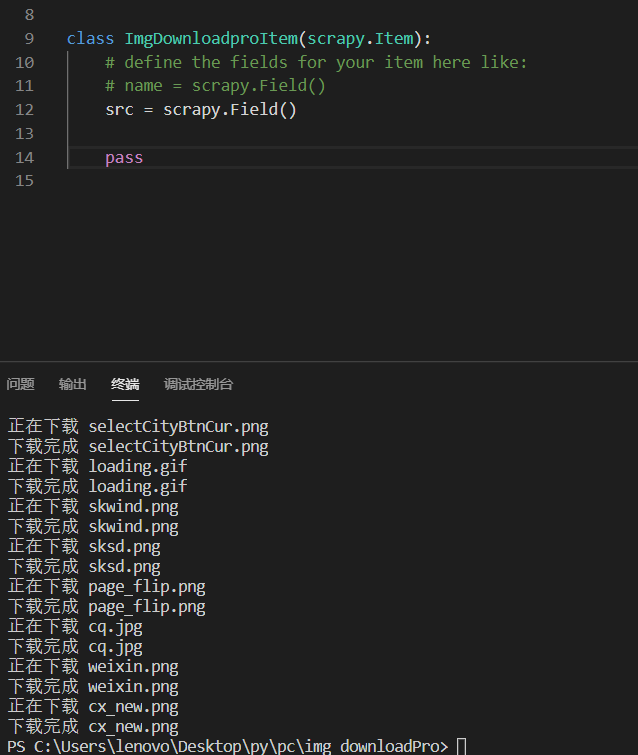

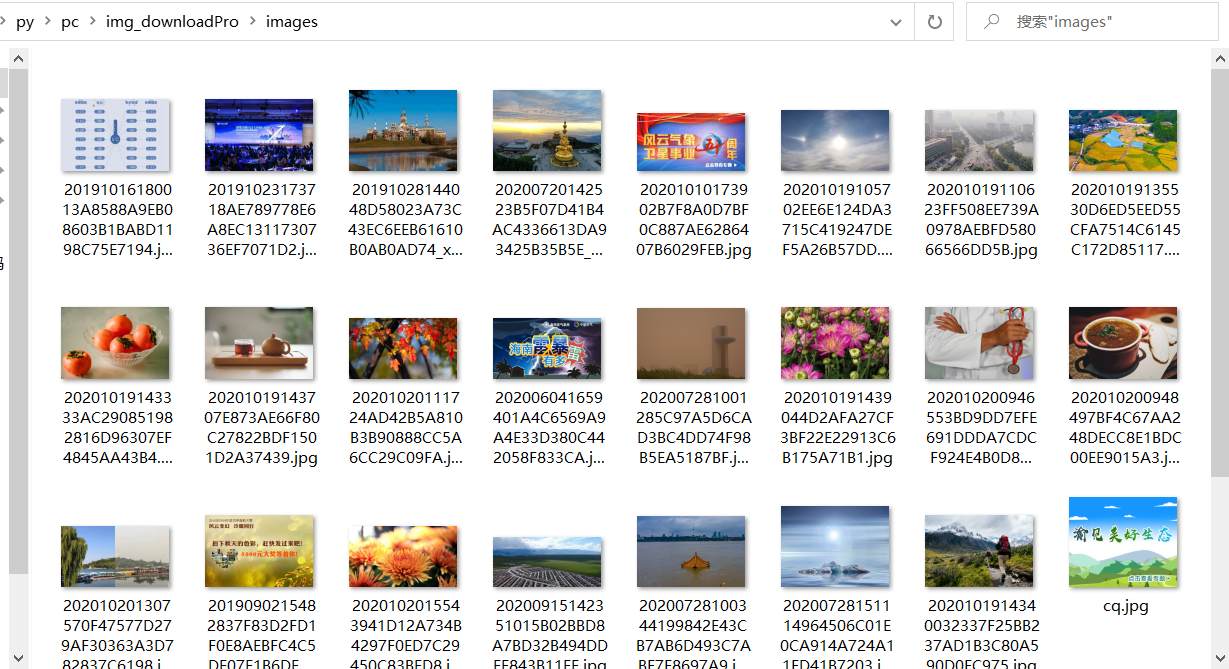

1) Reproducing the Assignment ① experiment with the Scrapy framework

Code

img_download.py

    import os

    import scrapy

    from ..items import ImgDownloadproItem


    class ImgDownloadSpider(scrapy.Spider):
        name = 'img_download'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['http://www.weather.com.cn/']

        # Runs once when the class is defined: make sure the output directory exists
        if not os.path.exists('./images'):
            os.mkdir('./images')

        def parse(self, response):
            # Calling extract() on a selector list also returns a list,
            # which gives us the image URLs we want
            src_list = response.xpath('//img/@src').extract()
            for src in src_list:
                item = ImgDownloadproItem()  # instantiate the item
                item['src'] = response.urljoin(src)  # resolve relative URLs
                yield item  # hand the item to the pipeline for persistent storage

items.py

    import scrapy


    class ImgDownloadproItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        src = scrapy.Field()

settings.py

    BOT_NAME = 'img_downloadPro'

    SPIDER_MODULES = ['img_downloadPro.spiders']
    NEWSPIDER_MODULE = 'img_downloadPro.spiders'

    LOG_LEVEL = 'ERROR'  # only error-level log messages are shown

    # Spoof the User-Agent
    USER_AGENT = 'Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False  # robots.txt compliance is switched off for this crawl

    ITEM_PIPELINES = {
        'img_downloadPro.pipelines.ImgDownloadproPipeline': 300,
    }

pipelines.py

    import requests


    class ImgDownloadproPipeline:
        def process_item(self, item, spider):
            src = item["src"]
            name = src.split('/')[-1]
            # Blocking download of the image bytes
            data = requests.get(url=src).content
            path_name = './images/' + name
            print("Downloading", name)
            # The with-block closes the file, so no explicit fp.close() is needed
            with open(path_name, "wb") as fp:
                fp.write(data)
            print("Downloaded", name)
            return item

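Results

2) Reflections

This experiment took me quite a while; with the deadline approaching I panicked and could not get the code working at first. Working through it made me much more familiar with the Scrapy framework. I also learned that in Scrapy the xpath() method returns selectors, so to get the actual values you have to call extract() on the result. After this experiment I have a better understanding of the roles that the five core components (engine, scheduler, downloader, spiders, and item pipelines) play in the framework, and the hands-on coding made their respective functions more familiar. I have heard that ImagesPipeline is designed specifically for downloading images; time was short this time, so I will try it next time.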
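For reference, a minimal sketch of what enabling Scrapy's built-in ImagesPipeline might look like in settings.py. I have not run this for the assignment, and the storage path is an assumption. By default that pipeline expects each item to carry an `image_urls` field (a list of absolute URLs) and an `images` field for the results, so the `src` field used above would need to be adapted, and Pillow has to be installed.

```python
# settings.py fragment (sketch): enable Scrapy's built-in image pipeline
ITEM_PIPELINES = {
    'scrapy.pipelines.images.ImagesPipeline': 1,
}

# Directory where downloaded images are stored (assumed path)
IMAGES_STORE = './images'
```

With this in place the hand-written requests-based pipeline would no longer be needed, since Scrapy schedules the image downloads itself.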

Assignment ③

1) Crawling stock information with the Scrapy framework

Code

Gupiao.py

    import json

    import scrapy

    from ..items import GupiaoproItem


    class GupiaoSpider(scrapy.Spider):
        name = 'Gupiao'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['http://75.push2.eastmoney.com/api/qt/clist/get?&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1602901412583']

        def parse(self, response):
            data1 = json.loads(response.text)
            data = data1["data"]
            # Borrowed from a classmate's blog post: json.loads is much more
            # convenient than the regex matching I used last time
            diff = data["diff"]
            # diff is one big list whose elements are small dicts, one per stock
            for i, stock in enumerate(diff):
                item = GupiaoproItem()
                item["count"] = str(i)
                item["code"] = str(stock["f12"])
                item["name"] = str(stock["f14"])
                item["new_price"] = str(stock["f2"])
                item["zhangdiefu"] = str(stock["f3"])
                item["zhangdieer"] = str(stock["f4"])
                item["com_num"] = str(stock["f5"])
                item["com_price"] = str(stock["f6"])
                item["zhengfu"] = str(stock["f7"])
                item["top"] = str(stock["f15"])
                item["bottom"] = str(stock["f16"])
                item["today"] = str(stock["f17"])
                item["yesterday"] = str(stock["f18"])
                yield item

items.py

    import scrapy


    class GupiaoproItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        count = scrapy.Field()
        code = scrapy.Field()
        name = scrapy.Field()
        new_price = scrapy.Field()
        zhangdiefu = scrapy.Field()
        zhangdieer = scrapy.Field()
        com_num = scrapy.Field()
        com_price = scrapy.Field()
        zhengfu = scrapy.Field()
        top = scrapy.Field()
        bottom = scrapy.Field()
        today = scrapy.Field()
        yesterday = scrapy.Field()

settings.py

    LOG_LEVEL = 'ERROR'  # only error-level log messages are shown

    # Spoof the User-Agent
    USER_AGENT = 'Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    ITEM_PIPELINES = {
        'GupiaoPro.pipelines.GupiaoproPipeline': 300,
    }

pipelines.py

    class GupiaoproPipeline:
        fp = None

        # Scrapy calls this hook once, when the spider starts,
        # so the file is not reopened for every item
        def open_spider(self, spider):
            print('Spider started...')
            self.fp = open('./gupiao.txt', 'w', encoding='utf-8')
            header = ["No.", "Code", "Name", "Latest price", "Change %", "Change",
                      "Volume", "Turnover", "Amplitude", "High", "Low", "Open", "Prev close"]
            self.fp.write("  ".join(header) + "\n")

        def process_item(self, item, spider):
            # Alignment template; argument 13 (a full-width space) pads the stock name
            tplt = "{0:^2} {1:^1} {2:{13}^4} {3:^5} {4:^6} {5:^6} {6:^6} {7:^10} {8:^10} {9:^10} {10:^10} {11:^10} {12:^10}"
            self.fp.write(
                tplt.format(item["count"], item["code"], item["name"], item['new_price'], item['zhangdiefu'],
                            item['zhangdieer'], item['com_num'], item['com_price'], item['zhengfu'],
                            item['top'], item['bottom'], item['today'], item['yesterday'], chr(12288)))
            self.fp.write('\n')
            return item

        # Likewise called only once, when the spider closes
        def close_spider(self, spider):
            print('Spider finished!')
            self.fp.close()
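The call order that the pipeline relies on can be sketched without Scrapy. In the toy below, `ToyPipeline` and the hand-written driver loop are hypothetical stand-ins for the real pipeline and for what Scrapy does during a crawl; the stock codes are made-up sample values, and an in-memory buffer replaces the file.

```python
import io


class ToyPipeline:
    """Toy class with the same three hooks as the pipeline above."""

    def open_spider(self, spider):
        # Called once: acquire the resource (a buffer stands in for the file)
        self.fp = io.StringIO()

    def process_item(self, item, spider):
        # Called once per item: only write, never reopen
        self.fp.write(item["code"] + "\n")
        return item

    def close_spider(self, spider):
        # Called once: keep the written text, then release the resource
        self.output = self.fp.getvalue()
        self.fp.close()


# Hand-driven stand-in for the crawl: open once, process many, close once
pipeline = ToyPipeline()
pipeline.open_spider(spider=None)
for item in [{"code": "000001"}, {"code": "000002"}, {"code": "000003"}]:
    pipeline.process_item(item, spider=None)
pipeline.close_spider(spider=None)
print(pipeline.output)
```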

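Results

2) Reflections

This experiment was similar to the previous one. After looking at some classmates' code, though, I found that json.loads() is much more convenient than regular expressions, which are dizzying to read. Another point is that, when writing pipelines.py, opening and closing the file can be moved out of process_item into open_spider and close_spider. This avoids wasting resources, because process_item(self, item, spider) runs once for every item it receives. Finally, setting LOG_LEVEL = 'ERROR' in settings.py limits the log output to error messages, which makes debugging easier. After this experiment I can use the Scrapy framework more fluently.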
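As a small illustration of the json.loads() point, the sketch below parses a made-up string shaped like the response the spider handles (a "data" object holding a "diff" list). The field values are invented for illustration only and are not real quotes.

```python
import json

# Made-up sample with the same nesting as the real response
sample_text = (
    '{"data": {"diff": ['
    '{"f12": "000001", "f14": "Sample A", "f2": 10.5}, '
    '{"f12": "000002", "f14": "Sample B", "f2": 20.0}'
    ']}}'
)

payload = json.loads(sample_text)

# One plain dict per stock, using the same keys as the item fields
rows = [
    {
        "count": str(i),
        "code": str(stock["f12"]),
        "name": str(stock["f14"]),
        "new_price": str(stock["f2"]),
    }
    for i, stock in enumerate(payload["data"]["diff"])
]

for row in rows:
    print(row)
```

Once the text is parsed, every field is reached by key, so no pattern has to be written or maintained for each value.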