1. Download the Hadoop release archive (tar.gz) from https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/stable/

2. Link the JAR files

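One option is to create a new project in Eclipse, add a lib folder, and copy the JARs you need into it. The JARs are under hadoop-2.9.1/share/hadoop in the extracted archive.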

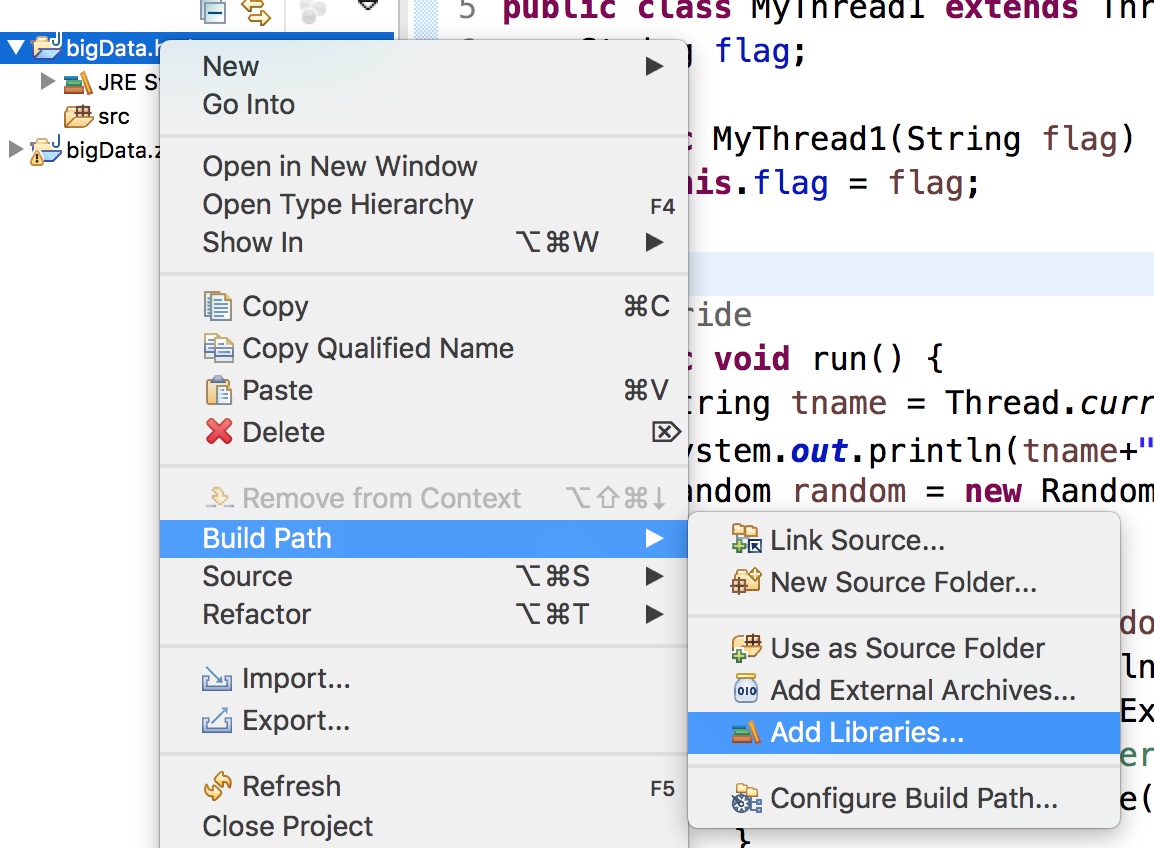

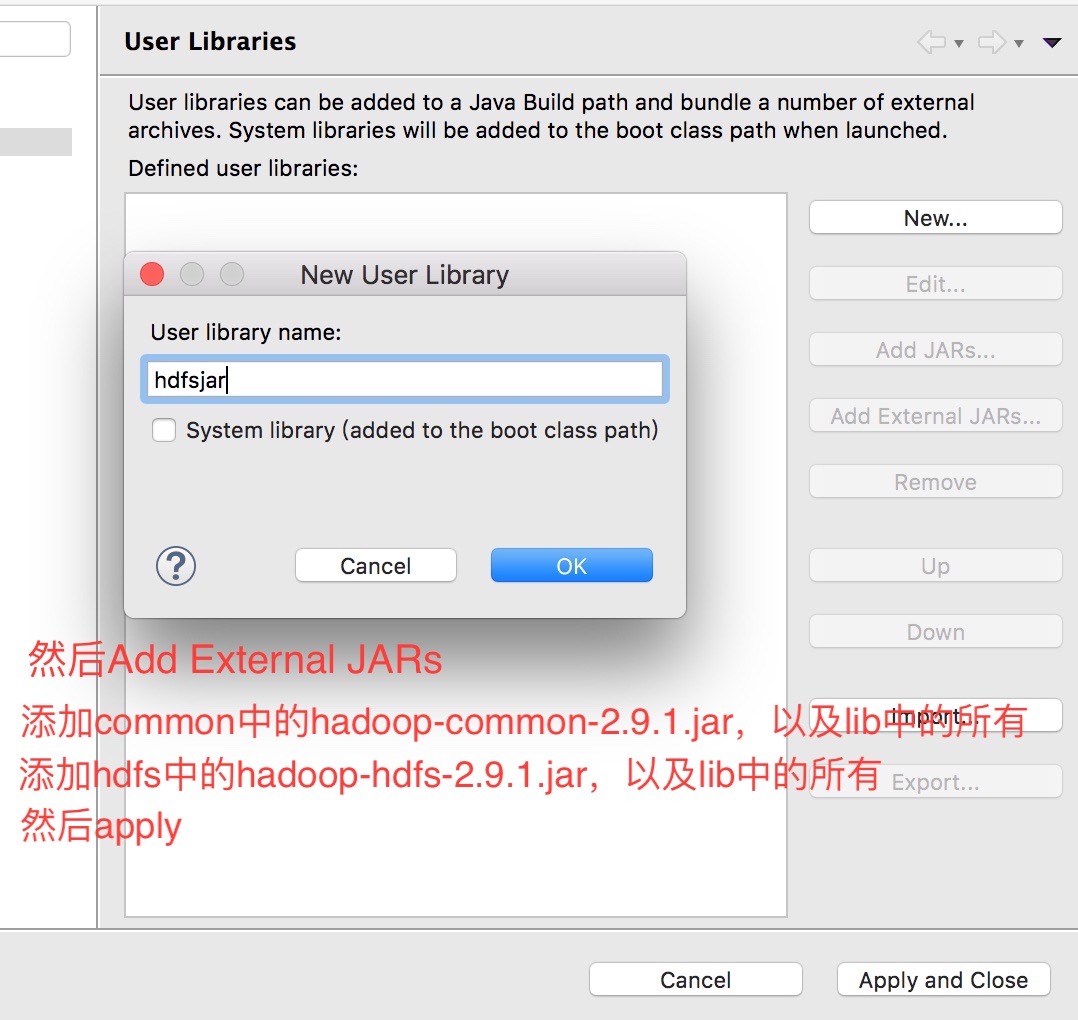

Here we do not copy them. Instead, we link the project to the location where the JARs are stored on your machine.

Right-click the project and choose Build Path > Add Libraries > User Library > OK > New > enter a name > OK > Add External JARs > select the JAR files.

The new library then appears as an additional entry under the project.
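A quick way to confirm that the linked library is actually visible to the project is to try loading a couple of Hadoop classes by name. The following is only a minimal sketch: the class `ClasspathCheck` and its method `isOnClasspath` are hypothetical helpers introduced here for illustration, and the two class names checked are simply ones imported by the code in step 3.

```java
// Hypothetical helper, not part of the original tutorial.
class ClasspathCheck {

    /** Returns true if the named class can be located on the current classpath. */
    static boolean isOnClasspath(String className) {
        try {
            // initialize=false: only locate the class, do not run its static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Classes imported by the HDFS client code in step 3
        String[] required = {
            "org.apache.hadoop.conf.Configuration",
            "org.apache.hadoop.fs.FileSystem"
        };
        for (String name : required) {
            System.out.println(name + " -> " + (isOnClasspath(name) ? "found" : "MISSING"));
        }
    }
}
```

If a class is reported as MISSING, the user library most likely does not include the JAR that contains it.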

3. Write the code

package bigdata.hdfs;

import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.net.URI;
import java.util.Iterator;
import java.util.Map.Entry;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;
import org.junit.Before;
import org.junit.Test;

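/*
 * A client needs a user identity to operate on HDFS.
 * By default, the HDFS client takes its user identity from a JVM parameter: -DHADOOP_USER_NAME=hadoop
 * There are two ways to set it:
 * 1. pass it as an external parameter;
 * 2. set it in code: call the three-argument overload of get and pass in the user name.
 */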

public class HdfsClientDemo {

    FileSystem fs = null;

    @Before
    public void init() throws Exception {
        Configuration conf = new Configuration();
        // For the hostname "master" to resolve, add it to the local hosts file
        conf.set("fs.defaultFS", "hdfs://master:9000");
        // Get a client instance of the file system, acting as user "wang"
        fs = FileSystem.get(new URI("hdfs://master:9000"), conf, "wang");
    }

    // Upload
    @Test
    public void testUpload() throws Exception {
        fs.copyFromLocalFile(new Path("/Users/wang/Desktop/upload.jpg"), new Path("/upload_copy.jpg"));
        fs.close();
    }

    // Download
    @Test
    public void testDownload() throws Exception {
        fs.copyToLocalFile(new Path("/upload_copy.jpg"), new Path("/Users/wang/Desktop/download.jpg"));
        fs.close();
    }

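    @Test
    public void testUpload2() throws Exception {
        // Upload using streams
        FSDataOutputStream outputStream = fs.create(new Path("/liu.txt"));
        // NOTE: this must be the path of a regular file; FileInputStream throws
        // FileNotFoundException when given a directory such as the path below
        FileInputStream inputStream = new FileInputStream("/Users/wang/Desktop/");
        org.apache.commons.io.IOUtils.copy(inputStream, outputStream);
        // IOUtils.copy does not close the streams; close them so the data is flushed to HDFS
        inputStream.close();
        outputStream.close();
    }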

    @Test
    public void testDownload2() throws Exception {
        // Download using streams
        FSDataInputStream in = fs.open(new Path("/a.txt"));
        // Set the position in the file at which reading starts
        in.seek(12);
        FileOutputStream out = new FileOutputStream("/Users/wang/Desktop/a.txt");
        org.apache.commons.io.IOUtils.copy(in, out);
        // IOUtils.copy does not close the streams; close them explicitly
        in.close();
        out.close();
    }

    // Print the configuration parameters
    @Test
    public void testConf() {
        Configuration conf = new Configuration();
        Iterator<Entry<String, String>> it = conf.iterator();
        while (it.hasNext()) {
            Entry<String, String> en = it.next();
            System.out.println(en.getKey() + ':' + en.getValue());
        }
    }

    @Test
    public void testLs() throws Exception {
        // Recursively list all files; returns an iterator
        RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);
        while (listFiles.hasNext()) {
            LocatedFileStatus fileStatus = listFiles.next();
            System.out.println("blocksize:" + fileStatus.getBlockSize());
            System.out.println("owner:" + fileStatus.getOwner());
            System.out.println("Replication:" + fileStatus.getReplication());
            System.out.println("Permission:" + fileStatus.getPermission());
            System.out.println("Name:" + fileStatus.getPath().getName());
            BlockLocation[] blockLocations = fileStatus.getBlockLocations();
            for (BlockLocation b : blockLocations) {
                System.out.println("block offset:" + b.getOffset());
                System.out.println("block length:" + b.getLength());
                String[] hosts = b.getHosts();
                // DataNodes that hold this block
                for (String host : hosts) {
                    System.out.println("datanode:" + host);
                }
            }
            System.out.println("--------------------");
        }
    }

    @Test
    public void testLs2() throws Exception {
        // List a single level only (non-recursive)
        FileStatus[] listStatus = fs.listStatus(new Path("/"));
        for (FileStatus file : listStatus) {
            System.out.print("name:" + file.getPath().getName() + ",");
            System.out.println(file.isFile() ? "file" : "directory");
        }
    }

}
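
The stream-based download above starts reading at byte 12 and copies the rest of the file. The same idea can be tried without a cluster using plain `java.io` streams. The following is a minimal sketch, not Hadoop code: `OffsetCopySketch` and `copyFrom` are hypothetical names, and a generic `InputStream` has no `seek`, so the sketch skips bytes instead. It also uses try-with-resources so the streams are closed even if the copy fails.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical local analogue of the "seek, then copy" download; no Hadoop classes involved.
class OffsetCopySketch {

    /** Skips the first offset bytes of in, copies the remainder to out, and returns the bytes copied. */
    static long copyFrom(InputStream in, long offset, OutputStream out) throws IOException {
        long remaining = offset;
        while (remaining > 0) {
            long skipped = in.skip(remaining);
            if (skipped <= 0) {
                // skip made no progress: read one byte to detect end of stream
                if (in.read() < 0) {
                    break;
                }
                skipped = 1;
            }
            remaining -= skipped;
        }
        byte[] buffer = new byte[4096];
        long total = 0;
        int n;
        while ((n = in.read(buffer)) != -1) {
            out.write(buffer, 0, n);
            total += n;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello, hdfs client demo".getBytes(StandardCharsets.UTF_8);
        // try-with-resources closes both streams automatically
        try (InputStream in = new ByteArrayInputStream(data);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            long copied = copyFrom(in, 12, out);
            System.out.println(copied + " bytes: " + new String(out.toByteArray(), StandardCharsets.UTF_8));
        }
    }
}
```

In the HDFS tests, the same try-with-resources pattern could wrap the streams returned by `fs.open` and `fs.create`, since both are closeable.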