Reposted from: "HiveServer2 would not start: Hadoop was stuck in safe mode" by -小鱼- on cnblogs (cnblogs.com)

Getting Hadoop out of safe mode

Check the safe mode status: hdfs dfsadmin -safemode get

Enter safe mode: hdfs dfsadmin -safemode enter

Leave safe mode: hdfs dfsadmin -safemode leave
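Scripts often need to read the result of the `get` command. The sketch below makes two assumptions. First, `hdfs dfsadmin -safemode get` prints a line such as `Safe mode is ON` or `Safe mode is OFF`; in an HA setup the NameNode address may be appended. Second, the helper name `safemode_state` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads the output of `hdfs dfsadmin -safemode get`
# on stdin and prints ON if any NameNode reports safe mode, OFF if the
# output only reports it off, and UNKNOWN for anything else.
safemode_state() {
  local out
  out=$(cat)
  if grep -q 'Safe mode is ON' <<<"$out"; then
    echo ON
  elif grep -q 'Safe mode is OFF' <<<"$out"; then
    echo OFF
  else
    echo UNKNOWN
  fi
}

# Usage on a real cluster (assumes the hdfs client is on PATH):
#   hdfs dfsadmin -safemode get | safemode_state
```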

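Step 1: Check the HDFS file system with fsck

Command: hadoop fsck (in Hadoop 2 and later the preferred form is hdfs fsck; hadoop fsck still works but prints a deprecation warning). Run without arguments, it prints its usage: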

    hadoop fsck

    Usage: DFSck <path> [-move | -delete | -openforwrite] [-files [-blocks [-locations | -racks]]]
            <path>          check whether the files under this path are complete
            -move           move corrupted files to /lost+found
            -delete         delete corrupted files
            -openforwrite   print files that are open for writing
            -files          print each file being checked
            -blocks         print the block report (requires -files)
            -locations      print the location of every block (requires -files -blocks)
            -racks          print the network topology of the block locations (requires -files -blocks)

Read the cluster status from the summary. The key lines are CORRUPT FILES (the number of damaged files) and MISSING BLOCKS (the number of lost blocks):

    Status: CORRUPT
     Number of data-nodes:  1
     Number of racks:       1
     Total dirs:            72
     Total symlinks:        0

    Replicated Blocks:
     Total size:    574348338 B
     Total files:   76
     Total blocks (validated):      74 (avg. block size 7761464 B)
      ********************************
      UNDER MIN REPL'D BLOCKS:      35 (47.2973 %)
      MINIMAL BLOCK REPLICATION:    1
      CORRUPT FILES:        35
      MISSING BLOCKS:       35
      MISSING SIZE:         327840971 B
      ********************************
     Minimally replicated blocks:   39 (52.7027 %)
     Over-replicated blocks:        0 (0.0 %)
     Under-replicated blocks:       38 (51.351353 %)
     Mis-replicated blocks:         0 (0.0 %)
     Default replication factor:    3
     Average block replication:     0.527027
     Missing blocks:                35
     Corrupt blocks:                0
     Missing replicas:              160 (38.46154 %)

    Erasure Coded Block Groups:
     Total size:    0 B
     Total files:   0
     Total block groups (validated):        0
     Minimally erasure-coded block groups:  0
     Over-erasure-coded block groups:       0
     Under-erasure-coded block groups:      0
     Unsatisfactory placement block groups: 0
     Average block group size:      0.0
     Missing block groups:          0
     Corrupt block groups:          0
     Missing internal blocks:       0
    FSCK ended at Wed Jan 12 20:13:16 CST 2022 in 28 milliseconds
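You can also watch these counters from a script by pulling single fields out of the fsck summary with awk. This is a minimal sketch with these assumptions:

- Summary lines follow the `Name: value` layout shown in the output above.
- Leading dots are fsck progress markers and can be stripped.
- `fsck_field` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first token after "<field>:" in fsck
# summary output read from stdin (first matching line only).
fsck_field() {
  local field=$1
  awk -v f="$field" '
    {
      line = $0
      sub(/^[ \t.]+/, "", line)          # drop indentation and progress dots
      if (index(line, f ":") == 1) {     # line starts with "<field>:"
        rest = substr(line, length(f) + 2)
        sub(/^[ \t]+/, "", rest)         # drop whitespace after the colon
        split(rest, parts, "[ \t]+")     # keep only the first token
        print parts[1]
        exit
      }
    }'
}

# Usage (assumes the hdfs client is on PATH):
#   report=$(hdfs fsck /)
#   echo "status:         $(fsck_field Status <<<"$report")"
#   echo "corrupt files:  $(fsck_field 'CORRUPT FILES' <<<"$report")"
#   echo "missing blocks: $(fsck_field 'MISSING BLOCKS' <<<"$report")"
```

Matching is case-sensitive, so `CORRUPT FILES` and `Corrupt blocks` are treated as different fields, just as fsck prints them.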

Step 2: Print the status of every file in HDFS

Command: hadoop fsck / -files -blocks -locations -racks

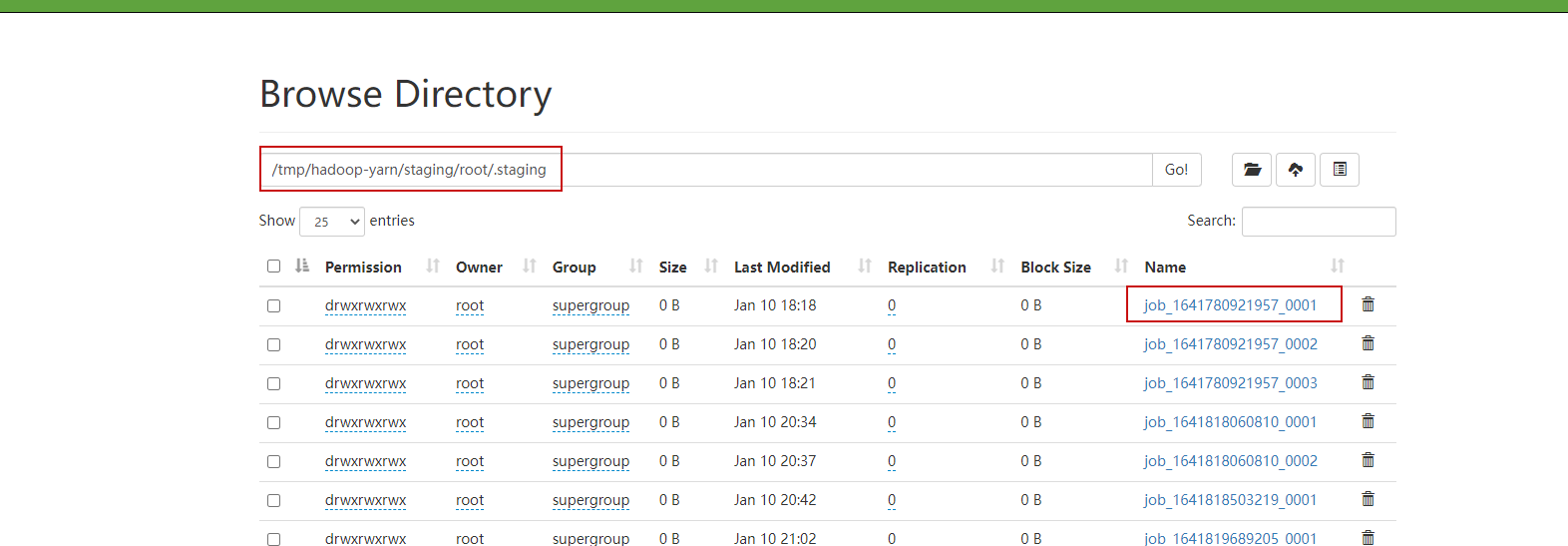

Healthy files end with OK; entries marked MISSING are files that have lost blocks. For example:

/tmp/hadoop-yarn/staging/history/done_intermediate/root/job_1641978125772_0001_conf.xml_tmp 0 bytes, replicated: replication=3, 0 block(s): OK

/tmp/hadoop-yarn/staging/root/.staging/job_1641780921957_0001/job.split 315 bytes, replicated: replication=10, 1 block(s): MISSING 1 blocks of total size 315 B
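Scanning this output by eye gets tedious on a large namespace. The sketch below rests on three assumptions:

- Each file line starts with the absolute path followed by a space, as in the two example lines above.
- A damaged file carries the word MISSING on that same line.
- The name `fsck_missing_files` is hypothetical.

fsck can also list the affected files directly with `hdfs fsck / -list-corruptfileblocks`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `hdfs fsck <path> -files -blocks` output on
# stdin and print the path of every file whose line reports MISSING blocks.
fsck_missing_files() {
  # File lines begin with "/"; per-block lines ("0. BP-...") do not.
  # The path is the first whitespace-separated field, so this assumes
  # HDFS paths without spaces.
  awk '/^\// && /MISSING/ { print $1 }'
}

# Usage (assumes the hdfs client is on PATH):
#   hdfs fsck / -files -blocks | fsck_missing_files
```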

With the path from such a line you can locate the file in the Hadoop web UI, as shown in the figure.

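The author also opened up the permissions on /tmp, presumably so the web UI could browse it (777 lets every user read and write the directory):

hadoop fs -chmod -R 777 /tmp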

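Step 3: Repair the two missing/corrupt files

hdfs debug recoverLease asks the NameNode to recover the lease on a file that was left open for writing, which closes the file. It cannot bring back blocks that are truly lost. In the author's case the two affected files were Flink checkpoint files: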

[root@node03 conf]# hdfs debug recoverLease -path /flink-checkpoint/626ea65de810a2ec3b1799b605a6a995/chk-175/19195239-a205-4462-921d-09e0483a4080 -retries 10

[root@node03 conf]# hdfs debug recoverLease -path /flink-checkpoint/626ea65de810a2ec3b1799b605a6a995/chk-175/_metadata -retries 10
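When more files are affected, you can loop the same command over a list of paths. The sketch assumes the following:

- Paths arrive one per line on stdin.
- `-retries 10` is copied from the commands above.
- The function name and the `HDFS_CMD` variable are hypothetical. `HDFS_CMD` defaults to `hdfs` and exists only so the loop can be dry-run with `HDFS_CMD=echo`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run `hdfs debug recoverLease` for every path read
# from stdin (one per line). Returns non-zero if any invocation failed.
# Set HDFS_CMD=echo to print the commands instead of running them.
recover_leases() {
  local cmd=${HDFS_CMD:-hdfs}
  local path failed=0
  while IFS= read -r path; do
    [ -z "$path" ] && continue                  # skip blank lines
    # </dev/null keeps the command from consuming the remaining paths
    "$cmd" debug recoverLease -path "$path" -retries 10 </dev/null || failed=1
  done
  return "$failed"
}

# Usage (assumes the hdfs client is on PATH; file1/file2 are placeholders):
#   printf '%s\n' "$file1" "$file2" | recover_leases
```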

Running fsck again now reports:

..Status: HEALTHY (the cluster is healthy)

After restarting Hadoop, it no longer stays stuck in safe mode, and HiveServer2 starts normally.

Step 4: Unexpected situations

    .............Status: CORRUPT          # HDFS status: unhealthy
     Total size:    273821489 B
     Total dirs:    403
     Total files:   213
     Total symlinks:        0
     Total blocks (validated):      201 (avg. block size 1362295 B)
      ********************************
      UNDER MIN REPL'D BLOCKS:      2 (0.99502486 %)
      dfs.namenode.replication.min: 1
      CORRUPT FILES:        2           # two files are corrupt
      MISSING BLOCKS:       2           # two blocks are missing
      MISSING SIZE:         6174 B
      CORRUPT BLOCKS:       2
      ********************************
     Minimally replicated blocks:   199 (99.004974 %)
     Over-replicated blocks:        0 (0.0 %)
     Under-replicated blocks:       0 (0.0 %)
     Mis-replicated blocks:         0 (0.0 %)
     Default replication factor:    3
     Average block replication:     2.8208954
     Corrupt blocks:                2
     Missing replicas:              0 (0.0 %)
     Number of data-nodes:          3
     Number of racks:               1
    FSCK ended at Fri Aug 23 10:43:11 CST 2019 in 12 milliseconds

1. If the corrupt files are not important

First, back up the corrupt files you found.

Then delete them:

[root@node03 export]# hadoop fsck / -delete

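If the command does not succeed the first time, run it a few more times. Only do this when the missing or corrupt files really are expendable!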
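The "run it a few more times" advice can be scripted as a bounded retry loop. This is a minimal sketch with these assumptions:

- A clean namespace makes a plain fsck print `Status: HEALTHY`, as in Step 3.
- The function name, the attempt limit, the pause between attempts and the `HDFS_CMD` override are all hypothetical choices.

The loop runs `-delete`, so it removes data exactly like the manual command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: up to $1 times (default 3), check with a plain
# fsck and, if the namespace is not HEALTHY, run `fsck / -delete` and
# wait $2 seconds (default 5). Returns 0 once fsck reports HEALTHY.
# WARNING: -delete permanently removes the corrupt files.
fsck_delete_until_healthy() {
  local max=${1:-3} pause=${2:-5} cmd=${HDFS_CMD:-hdfs} i
  for ((i = 1; i <= max; i++)); do
    if "$cmd" fsck / 2>/dev/null | grep -q 'Status: HEALTHY'; then
      return 0                                  # nothing (left) to delete
    fi
    echo "attempt $i: deleting corrupt files" >&2
    "$cmd" fsck / -delete >/dev/null 2>&1
    sleep "$pause"
  done
  # Final check after the last attempt.
  "$cmd" fsck / 2>/dev/null | grep -q 'Status: HEALTHY'
}

# Usage (assumes the hdfs client is on PATH):
#   fsck_delete_until_healthy 5 10 || echo "still corrupt, investigate manually"
```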

2. If the corrupt files are important and must not be lost

First force the cluster out of safe mode:

[root@node03 export]# hdfs dfsadmin -safemode leave

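This does not really fix the problem; it only keeps the cluster usable for the time being!

You will also have to run this command every time you start the Hadoop cluster, until the problem is fully resolved.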
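Until the root cause is fixed, you can fold this manual step into the start-up routine. The sketch assumes the following:

- It runs after the cluster has been started.
- The NameNode gets a grace period to leave safe mode on its own. The function name and its `wait_secs`/`step` arguments are hypothetical; `wait_secs` defaults to 60.
- Only after that period is safe mode forced off.
- `HDFS_CMD` is a hypothetical override for dry runs.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll `hdfs dfsadmin -safemode get` every $2 seconds
# (default 5) for up to $1 seconds (default 60); if safe mode is still ON
# after that, force it off with `-safemode leave`.
leave_safemode_after_start() {
  local wait_secs=${1:-60} step=${2:-5} cmd=${HDFS_CMD:-hdfs} waited=0
  while "$cmd" dfsadmin -safemode get | grep -q 'Safe mode is ON'; do
    if (( waited >= wait_secs )); then
      echo "still in safe mode after ${waited}s, forcing it off" >&2
      "$cmd" dfsadmin -safemode leave
      return
    fi
    sleep "$step"
    (( waited += step ))
  done
}

# Usage, right after starting HDFS (assumes the hdfs client is on PATH):
#   leave_safemode_after_start 120 10
```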

If your problem is none of the above, see this post:

https://www.cnblogs.com/-xiaoyu-/p/12158984.html