Configuring the Hazelcast clustering plugin for Openfire

I. Base environment:

1. Ubuntu 16.04

2. JDK 1.8.0_181

3. Openfire 4.1.3

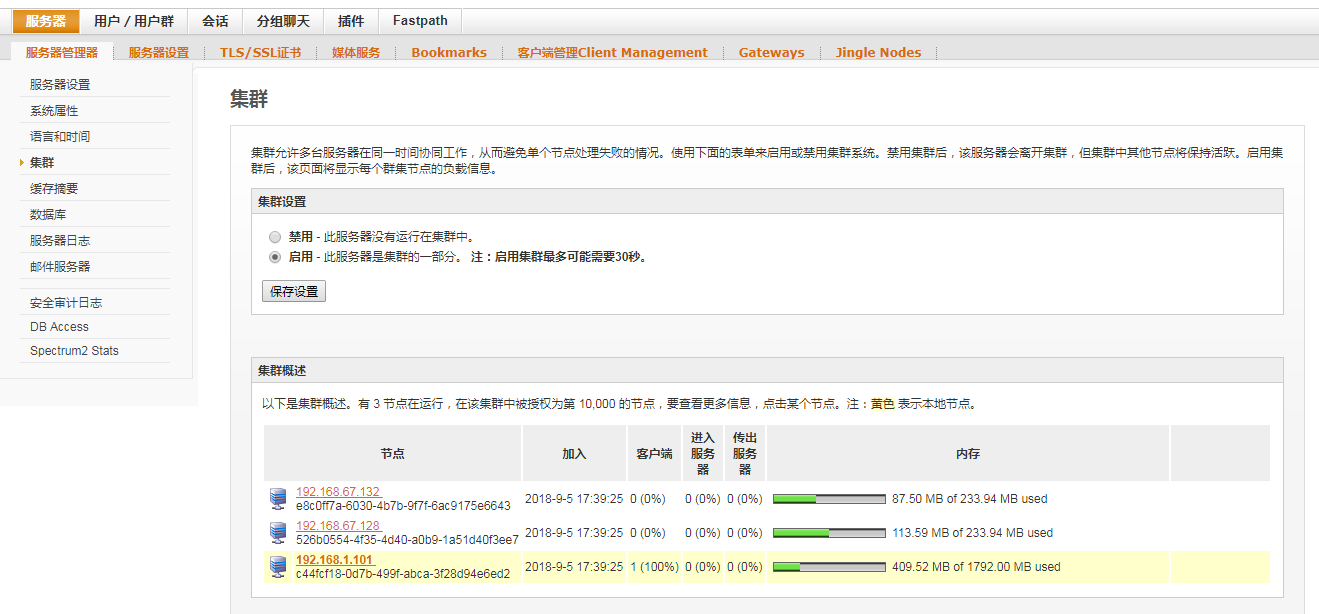

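II. Openfire cluster configuration

Node 1: 192.168.67.128

Node 2: 192.168.67.132

Database: 192.168.1.101:3306

The previous post covered setting up Openfire. This post adds Openfire to a cluster. Make sure every node is set to the same domain: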

| xmpp.domain | 192.168.1.101 |

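1. Log in to the web admin console of each node: 192.168.67.128:9090 and 192.168.67.132:9090

Download the hazelcast and Broadcast plugins.

2. Edit each node's database configuration (every node must connect to the database at 192.168.1.101). My Openfire is installed in /opt/openfire.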

sudo gedit /opt/openfire/conf/openfire.xml

Change the database connection URL so that it points at 192.168.1.101:3306.
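For reference, the database section of openfire.xml (inside the root `<jive>` element) might look roughly like the sketch below. It assumes a MySQL database, as port 3306 suggests, named `openfire`; the driver class, database name, and credential placeholders are illustrative assumptions rather than values from my setup, so keep whatever your file already contains and change only the host in `serverURL`.

```xml
<!-- Sketch of the <database> section in /opt/openfire/conf/openfire.xml.
     Assumptions: MySQL driver and a database named "openfire";
     YOUR_DB_USER / YOUR_DB_PASSWORD are placeholders. -->
<database>
  <defaultProvider>
    <driver>com.mysql.jdbc.Driver</driver>
    <!-- Every node points at the same shared database host -->
    <serverURL>jdbc:mysql://192.168.1.101:3306/openfire</serverURL>
    <username>YOUR_DB_USER</username>
    <password>YOUR_DB_PASSWORD</password>
  </defaultProvider>
</database>
```

All nodes should end up with the same `serverURL`, since the cluster members share one database.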

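3. Edit the cluster configuration file. Every node gets the same configuration, except that interfaces is set to that node's own IP. I set up three nodes and listed all of them in the member elements.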

sudo gedit /opt/openfire/conf/hazelcast-local-config.xml
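As a rough illustration of the part that differs per node, here is what the network section might look like on node 192.168.67.128. It is only a fragment cut down from the full file in the appendix, with multicast shown in shortened form, and it should be read as a sketch rather than a complete configuration.

```xml
<!-- Fragment of the <network> section for node 192.168.67.128.
     The <tcp-ip> member list is identical on every node;
     only the <interface> value changes from node to node. -->
<network>
  <join>
    <multicast enabled="false"/>
    <tcp-ip enabled="true">
      <member>192.168.67.128:5701</member>
      <member>192.168.67.132:5701</member>
      <member>192.168.1.101:5701</member>
    </tcp-ip>
  </join>
  <interfaces enabled="true">
    <!-- This node's own IP; use 192.168.67.132 or 192.168.1.101 on the other nodes -->
    <interface>192.168.67.128</interface>
  </interfaces>
</network>
```

Listing the members explicitly over TCP/IP instead of relying on multicast keeps discovery predictable when the nodes sit on different subnets, as they do here.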

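4. Restart Openfire.

5. Enable clustering. Openfire will then join the cluster on its own, based on the node configuration. I also set up one node on Windows (192.168.1.101), on the same machine as the database.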

Appendix: hazelcast-cache-config.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <!--
      ~ Copyright (c) 2008-2015, Hazelcast, Inc. All Rights Reserved.
      ~
      ~ Licensed under the Apache License, Version 2.0 (the "License");
      ~ you may not use this file except in compliance with the License.
      ~ You may obtain a copy of the License at
      ~
      ~ http://www.apache.org/licenses/LICENSE-2.0
      ~
      ~ Unless required by applicable law or agreed to in writing, software
      ~ distributed under the License is distributed on an "AS IS" BASIS,
      ~ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      ~ See the License for the specific language governing permissions and
      ~ limitations under the License.
      -->
    <hazelcast xmlns="http://www.hazelcast.com/schema/config"
               xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
               xsi:schemaLocation="http://www.hazelcast.com/schema/config http://www.hazelcast.com/schema/config/hazelcast-config-3.9.xsd">
        <group>
            <name>openfire</name>
            <password>openfire</password>
        </group>
        <properties>
            <property name="hazelcast.logging.type">slf4j</property>
            <property name="hazelcast.operation.call.timeout.millis">30000</property>
            <property name="hazelcast.memcache.enabled">false</property>
            <property name="hazelcast.rest.enabled">false</property>
        </properties>
        <management-center enabled="false"/>
        <network>
            <port auto-increment="true" port-count="100">5701</port>
            <outbound-ports>
                <ports>0</ports>
            </outbound-ports>
            <join>
                <multicast enabled="false">
                    <multicast-group>224.2.2.3</multicast-group>
                    <multicast-port>54327</multicast-port>
                </multicast>
                <tcp-ip enabled="true">
                    <member>192.168.67.128:5701</member>
                    <member>192.168.67.132:5701</member>
                    <member>192.168.1.101:5701</member>
                </tcp-ip>
                <aws enabled="false"/>
            </join>
            <interfaces enabled="true">
                <interface>192.168.1.101</interface>
            </interfaces>
            <ssl enabled="false"/>
            <socket-interceptor enabled="false"/>
            <symmetric-encryption enabled="false">
                <!--
                   encryption algorithm such as
                   DES/ECB/PKCS5Padding,
                   PBEWithMD5AndDES,
                   AES/CBC/PKCS5Padding,
                   Blowfish,
                   DESede
                -->
                <algorithm>PBEWithMD5AndDES</algorithm>
                <!-- salt value to use when generating the secret key -->
                <salt>thesalt</salt>
                <!-- pass phrase to use when generating the secret key -->
                <password>thepass</password>
                <!-- iteration count to use when generating the secret key -->
                <iteration-count>19</iteration-count>
            </symmetric-encryption>
        </network>
        <partition-group enabled="false"/>
        <executor-service name="default">
            <pool-size>16</pool-size>
            <!--Queue capacity. 0 means Integer.MAX_VALUE.-->
            <queue-capacity>0</queue-capacity>
        </executor-service>
        <queue name="default">
            <!--
                Maximum size of the queue. When a JVM's local queue size reaches the maximum,
                all put/offer operations will get blocked until the queue size
                of the JVM goes down below the maximum.
                Any integer between 0 and Integer.MAX_VALUE. 0 means
                Integer.MAX_VALUE. Default is 0.
            -->
            <max-size>0</max-size>
            <!--
                Number of backups. If 1 is set as the backup-count for example,
                then all entries of the map will be copied to another JVM for
                fail-safety. 0 means no backup.
            -->
            <backup-count>1</backup-count>
            <!--
                Number of async backups. 0 means no backup.
            -->
            <async-backup-count>0</async-backup-count>
            <empty-queue-ttl>-1</empty-queue-ttl>
        </queue>

        <!-- Default Hazelcast cache configuration for Openfire. -->
        <map name="default">
            <!--
               Data type that will be used for storing recordMap.
               Possible values:
               BINARY (default): keys and values will be stored as binary data
               OBJECT : values will be stored in their object forms
               NATIVE : values will be stored in non-heap region of JVM
            -->
            <in-memory-format>BINARY</in-memory-format>
            <!--
                Number of backups. If 1 is set as the backup-count for example,
                then all entries of the map will be copied to another JVM for
                fail-safety. 0 means no backup.
            -->
            <backup-count>1</backup-count>
            <!--
                Number of async backups. 0 means no backup.
            -->
            <async-backup-count>0</async-backup-count>
            <!--
                Can we read the local backup entries? Default value is false for
                strong consistency. Being able to read backup data will give you
                greater performance.
            -->
            <read-backup-data>true</read-backup-data>
            <!--
                Maximum number of seconds for each entry to stay in the map. Entries that are
                older than <time-to-live-seconds> and not updated for <time-to-live-seconds>
                will get automatically evicted from the map.
                Any integer between 0 and Integer.MAX_VALUE. 0 means infinite. Default is 0.
            -->
            <time-to-live-seconds>0</time-to-live-seconds>
            <!--
                Maximum number of seconds for each entry to stay idle in the map. Entries that are
                idle(not touched) for more than <max-idle-seconds> will get
                automatically evicted from the map. Entry is touched if get, put or containsKey is called.
                Any integer between 0 and Integer.MAX_VALUE. 0 means infinite. Default is 0.
            -->
            <max-idle-seconds>0</max-idle-seconds>
            <!--
                Valid values are:
                NONE (no eviction),
                LRU (Least Recently Used),
                LFU (Least Frequently Used).
                NONE is the default.
            -->
            <eviction-policy>LRU</eviction-policy>
            <!--
                Maximum size of the map. When max size is reached,
                map is evicted based on the policy defined.
                Any integer between 0 and Integer.MAX_VALUE. 0 means
                Integer.MAX_VALUE. Default is 0.
            -->
            <max-size policy="PER_NODE">100000</max-size>
            <!--
                When max. size is reached, specified percentage of
                the map will be evicted. Any integer between 0 and 100.
                If 25 is set for example, 25% of the entries will
                get evicted.
            -->
            <eviction-percentage>25</eviction-percentage>
            <!--
                Minimum time in milliseconds which should pass before checking
                if a partition of this map is evictable or not.
                Default value is 100 millis.
            -->
            <min-eviction-check-millis>100</min-eviction-check-millis>
            <!--
                While recovering from split-brain (network partitioning),
                map entries in the small cluster will merge into the bigger cluster
                based on the policy set here. When an entry merge into the
                cluster, there might an existing entry with the same key already.
                Values of these entries might be different for that same key.
                Which value should be set for the key? Conflict is resolved by
                the policy set here. Default policy is PutIfAbsentMapMergePolicy

                There are built-in merge policies such as
                com.hazelcast.map.merge.PassThroughMergePolicy; entry will be added if there is no existing entry for the key.
                com.hazelcast.map.merge.PutIfAbsentMapMergePolicy ; entry will be added if the merging entry doesn't exist in the cluster.
                com.hazelcast.map.merge.HigherHitsMapMergePolicy ; entry with the higher hits wins.
                com.hazelcast.map.merge.LatestUpdateMapMergePolicy ; entry with the latest update wins.
            -->
            <merge-policy>com.hazelcast.map.merge.PassThroughMergePolicy</merge-policy>
            <!--
                Near cache provides a local view of the clustered map, which is ideal
                for high-read caches. Each cluster member retains a local copy of
                entries retrieved from the distributed map. This reduces network load
                for caches that require frequent reads. However, if the entries are
                updated frequently, there can be a performance penalty due to
                invalidations on the other cluster members.
            -->
            <near-cache>
                <eviction eviction-policy="LRU" max-size-policy="ENTRY_COUNT" size="1000"/>
                <time-to-live-seconds>0</time-to-live-seconds>
                <max-idle-seconds>600</max-idle-seconds>
                <invalidate-on-change>true</invalidate-on-change>
            </near-cache>
        </map>

        <multimap name="default">
            <backup-count>1</backup-count>
            <value-collection-type>SET</value-collection-type>
        </multimap>

        <list name="default">
            <backup-count>1</backup-count>
        </list>

        <set name="default">
            <backup-count>1</backup-count>
        </set>

        <jobtracker name="default">
            <max-thread-size>0</max-thread-size>
            <!-- Queue size 0 means number of partitions * 2 -->
            <queue-size>0</queue-size>
            <retry-count>0</retry-count>
            <chunk-size>1000</chunk-size>
            <communicate-stats>true</communicate-stats>
            <topology-changed-strategy>CANCEL_RUNNING_OPERATION</topology-changed-strategy>
        </jobtracker>

        <semaphore name="default">
            <initial-permits>0</initial-permits>
            <backup-count>1</backup-count>
            <async-backup-count>0</async-backup-count>
        </semaphore>

        <reliable-topic name="default">
            <read-batch-size>10</read-batch-size>
            <topic-overload-policy>BLOCK</topic-overload-policy>
            <statistics-enabled>true</statistics-enabled>
        </reliable-topic>

        <ringbuffer name="default">
            <capacity>10000</capacity>
            <backup-count>1</backup-count>
            <async-backup-count>0</async-backup-count>
            <time-to-live-seconds>30</time-to-live-seconds>
            <in-memory-format>BINARY</in-memory-format>
        </ringbuffer>

        <serialization>
            <portable-version>0</portable-version>
        </serialization>

        <services enable-defaults="true"/>

        <!-- Partitioned Openfire caches without size/time limits (no eviction). -->
        <map name="opt-$cacheStats">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Client Session Info Cache">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Components Sessions">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Connection Managers Sessions">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Secret Keys Cache">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Validated Domains">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Disco Server Features">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Disco Server Items">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Incoming Server Sessions">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Sessions by Hostname">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Routing User Sessions">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Routing Components Cache">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Routing Users Cache">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Routing AnonymousUsers Cache">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Routing Servers Cache">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>
        <map name="Directed Presences">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
        </map>

        <!-- Caches with size and/or time limits (TTL) -->
        <map name="POP3 Authentication">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">10000</max-size>
            <time-to-live-seconds>3600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="LDAP Authentication">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">10000</max-size>
            <time-to-live-seconds>7200</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="File Transfer">
            <backup-count>1</backup-count>
            <time-to-live-seconds>600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="File Transfer Cache">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">10000</max-size>
            <time-to-live-seconds>600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Javascript Cache">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">10000</max-size>
            <time-to-live-seconds>864000</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Entity Capabilities">
            <backup-count>1</backup-count>
            <time-to-live-seconds>172800</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Entity Capabilities Users">
            <backup-count>1</backup-count>
            <time-to-live-seconds>172800</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Favicon Hits">
            <backup-count>1</backup-count>
            <time-to-live-seconds>21600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Favicon Misses">
            <backup-count>1</backup-count>
            <time-to-live-seconds>21600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Last Activity Cache">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">10000</max-size>
            <time-to-live-seconds>21600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Locked Out Accounts">
            <backup-count>1</backup-count>
            <time-to-live-seconds>900</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Multicast Service">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">10000</max-size>
            <time-to-live-seconds>86400</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Offline Message Size">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">100000</max-size>
            <time-to-live-seconds>43200</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Offline Presence Cache">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">100000</max-size>
            <time-to-live-seconds>21600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Privacy Lists">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">100000</max-size>
            <time-to-live-seconds>21600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Remote Users Existence">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">100000</max-size>
            <time-to-live-seconds>600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Remote Server Configurations">
            <backup-count>1</backup-count>
            <max-size policy="PER_NODE">100000</max-size>
            <time-to-live-seconds>1800</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>

        <!-- These caches have size and/or time limits (idle) -->
        <map name="Group Metadata Cache">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
            <max-size policy="PER_NODE">100000</max-size>
            <max-idle-seconds>3600</max-idle-seconds>
            <time-to-live-seconds>900</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Group">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
            <max-size policy="PER_NODE">100000</max-size>
            <max-idle-seconds>3600</max-idle-seconds>
            <time-to-live-seconds>900</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="Roster">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
            <max-size policy="PER_NODE">100000</max-size>
            <max-idle-seconds>3600</max-idle-seconds>
            <time-to-live-seconds>21600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>
        <map name="User">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
            <max-size policy="PER_NODE">100000</max-size>
            <max-idle-seconds>3600</max-idle-seconds>
            <time-to-live-seconds>1800</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
        </map>

        <!-- These caches use a near-cache to improve read performance -->
        <map name="VCard">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
            <max-size policy="PER_NODE">100000</max-size>
            <time-to-live-seconds>21600</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
            <near-cache>
                <eviction eviction-policy="LRU" max-size-policy="ENTRY_COUNT" size="10000"/>
                <max-idle-seconds>1800</max-idle-seconds>
                <invalidate-on-change>true</invalidate-on-change>
            </near-cache>
        </map>
        <map name="Published Items">
            <backup-count>1</backup-count>
            <read-backup-data>true</read-backup-data>
            <max-size policy="PER_NODE">100000</max-size>
            <time-to-live-seconds>900</time-to-live-seconds>
            <eviction-policy>LRU</eviction-policy>
            <near-cache>
                <eviction eviction-policy="LRU" max-size-policy="ENTRY_COUNT" size="10000"/>
                <max-idle-seconds>60</max-idle-seconds>
                <invalidate-on-change>true</invalidate-on-change>
            </near-cache>
        </map>
    </hazelcast>