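Author: 北京小远

Source: http://www.cnblogs.com/bj-xy/

Reproduction of this document is prohibited. Any reproduction must credit the source; otherwise the author reserves the right to pursue legal liability.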


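I. Cluster availability verification

After installing k8s, verify that the cluster is usable. Kubernetes depends on pod-to-pod, Service, and node-to-node communication, and all three must work correctly.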

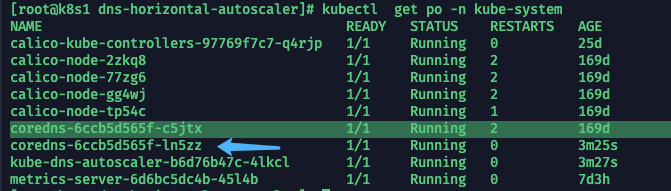

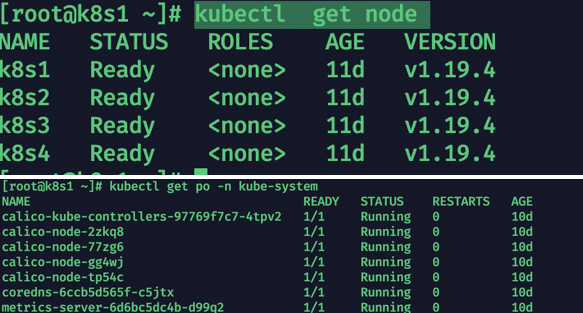

1.1 Check the status of the basic components

kubectl get node

kubectl get po -n kube-system
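The two checks above rely on reading the output by eye. The sketch below turns them into pass/fail shell functions. It assumes the default `--no-headers` column layout of `kubectl get node` (NAME STATUS ...) and `kubectl get po` (NAME READY STATUS ...). Both function names are hypothetical.

```bash
# Hypothetical helpers: both read `kubectl get ... --no-headers` output on stdin.

# Print every node whose STATUS column is not exactly "Ready"
# (a cordoned node shows "Ready,SchedulingDisabled" and is reported too).
# Returns non-zero if any node was printed.
nodes_not_ready() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}

# Print every pod that is not Running with all of its containers ready
# (READY column "n/n"). Completed Job pods would also be reported.
# Returns non-zero if any pod was printed.
pods_not_ready() {
  awk '{
    split($2, r, "/")
    if ($3 != "Running" || r[1] != r[2]) { print $1; bad = 1 }
  } END { exit bad }'
}
```

Usage: `kubectl get node --no-headers | nodes_not_ready && echo "all nodes Ready"` and `kubectl get po -n kube-system --no-headers | pods_not_ready && echo "kube-system pods OK"`.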

1.2 Create a test pod

cat<<EOF | kubectl apply -f -

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always

EOF
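The test pod must be Running before the connectivity tests can use it. This minimal sketch waits with `kubectl wait` instead of polling `kubectl get po` by hand. The function name and the 120s default timeout are assumptions.

```bash
# Hypothetical helper: wait_for_pod [POD] [NAMESPACE] [TIMEOUT]
# Blocks until the pod's Ready condition is true, or fails after TIMEOUT.
# The defaults match the busybox manifest above.
wait_for_pod() {
  local pod="${1:-busybox}" ns="${2:-default}" limit="${3:-120s}"
  kubectl wait --for=condition=Ready "pod/${pod}" -n "${ns}" --timeout="${limit}"
}
```

Usage: run `wait_for_pod` right after `kubectl apply`; a non-zero exit means the pod did not become Ready in time.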

1.3 Connectivity tests

1. Test whether a pod can resolve the kubernetes Service in its own namespace

kubectl get svc   # view the kubernetes Service

kubectl exec busybox -n default -- nslookup kubernetes   # resolve it from inside the pod

Result:

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

2. Test whether a pod can resolve a Service in another namespace

kubectl get svc -n kube-system

kubectl exec busybox -n default -- nslookup kube-dns.kube-system

Result:

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kube-dns.kube-system

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local
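Both lookups above can be scripted. This sketch assumes the busybox 1.28 `nslookup` output format shown above, where an `Address 1: <ip> <name>` line follows the `Name:` line. `nslookup_answers` and `check_svc_dns` are hypothetical names.

```bash
# Hypothetical helper: print the addresses busybox nslookup returned for the
# queried name, i.e. the third field of each "Address N:" line after "Name:".
nslookup_answers() {
  awk '/^Name:/ { seen = 1; next } seen && /^Address/ { print $3 }'
}

# Hypothetical helper: check_svc_dns NAME EXPECTED_IP
# Resolves NAME from inside the busybox test pod and succeeds only if
# EXPECTED_IP is one of the answers.
check_svc_dns() {
  local name="$1" want="$2"
  kubectl exec busybox -n default -- nslookup "$name" 2>/dev/null \
    | nslookup_answers | grep -qxF "$want"
}
```

Usage: `check_svc_dns kubernetes "$(kubectl get svc kubernetes -o jsonpath='{.spec.clusterIP}')"` covers the same-namespace case. `check_svc_dns kube-dns.kube-system 10.96.0.10` covers the cross-namespace case.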

3. From every node, test that port 443 of the kubernetes Service and port 53 of the kube-dns Service are reachable

kubectl get svc   # get the ClusterIP

NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   24d

Run on every node:

telnet 10.96.0.1 443

Test connectivity to kube-dns:

kubectl get svc -n kube-system

NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                  AGE
kube-dns         ClusterIP   10.96.0.10      <none>        53/UDP,53/TCP,9153/TCP   21d
metrics-server   ClusterIP   10.111.181.43   <none>        443/TCP                  21d

Run on every node:

telnet 10.96.0.10 53

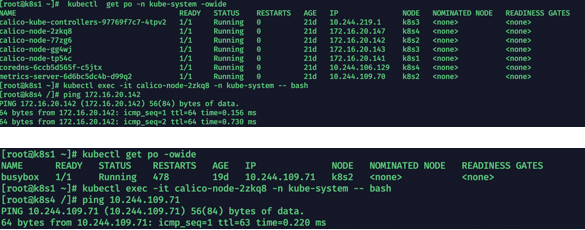
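telnet is interactive and is often missing on minimal nodes. As an alternative sketch, bash's built-in `/dev/tcp` redirection performs the same TCP check non-interactively. Like telnet, it tests TCP only, so 53/UDP is not covered. The function names are hypothetical, and `timeout` from coreutils is assumed to be installed.

```bash
# Hypothetical helper: check_tcp_port HOST PORT [SECONDS]
# Succeeds if a TCP connection to HOST:PORT opens within SECONDS (default 3).
check_tcp_port() {
  local host="$1" port="$2" secs="${3:-3}"
  # The connection is opened by a child bash and closed when that child exits.
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Hypothetical helper: check_targets HOST:PORT...
# Prints one OK/FAIL line per target; returns non-zero if any target failed.
check_targets() {
  local t rc=0
  for t in "$@"; do
    if check_tcp_port "${t%:*}" "${t##*:}"; then
      echo "OK   $t"
    else
      echo "FAIL $t"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage, on every node: `check_targets 10.96.0.1:443 10.96.0.10:53`.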

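4. Test pod-to-pod communication

kubectl get po -n kube-system -owide   # list the pods with their IPs and nodes

Exec into one of the calico-node containers:

kubectl exec -it calico-node-2zkq8 -n kube-system -- bash

Then pick the IP of a calico-node pod running on a different node and ping it to test connectivity.

Also test connectivity to the busybox test pod. Get its IP with the command below, then ping it: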

kubectl get po -owide
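Instead of pinging one IP at a time, you can list the pod IPs with a jsonpath query and ping them in a loop. This sketch assumes two things: the calico-node pods carry the `k8s-app=calico-node` label used by the standard Calico manifest, and `ping` exists in the source container (busybox includes it). calico-node normally runs with `hostNetwork: true`, so its IPs are node IPs. Pinging the busybox pod IP is what actually exercises the pod network. `ping_from` is a hypothetical name.

```bash
# Hypothetical helper: ping_from POD NAMESPACE
# Reads "IP NODE" pairs on stdin and pings each IP twice from inside POD.
# Prints one OK/FAIL line per target; returns non-zero if any target failed.
ping_from() {
  local src="$1" ns="$2" rc=0 ip node
  while read -r ip node; do
    [ -n "$ip" ] || continue
    # </dev/null stops kubectl from consuming the rest of the IP list on stdin.
    if kubectl exec "$src" -n "$ns" -- ping -c 2 -W 2 "$ip" </dev/null >/dev/null 2>&1; then
      echo "OK   $ip ($node)"
    else
      echo "FAIL $ip ($node)"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `kubectl get po -n kube-system -l k8s-app=calico-node -o jsonpath='{range .items[*]}{.status.podIP}{" "}{.spec.nodeName}{"\n"}{end}' | ping_from busybox default`.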

5. Delete the test pod

kubectl delete po busybox

Verify that it has been deleted:

kubectl get po

II. Parameter tuning

2.1 Container runtime (Docker) configuration tuning

Run on every machine:

cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "50m",
    "max-file": "3"
  },
  "registry-mirrors": ["https://ufkb7xyg.mirror.aliyuncs.com"],
  "max-concurrent-downloads": 10,
  "max-concurrent-uploads": 5,
  "live-restore": true
}
EOF

systemctl daemon-reload

systemctl restart docker

# Remove containers in the exited state (xargs -r skips docker rm when there are none)

docker ps -q -f status=exited | xargs -r docker rm

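Parameter explanation:

exec-opts: sets Docker's cgroup driver to systemd (it should match the kubelet's cgroup driver)

log-driver: the logging driver (json-file)

log-opts: maximum size of each log file and the number of log files kept

registry-mirrors: registry mirror (pull accelerator) address

max-concurrent-downloads: maximum number of concurrent downloads for each image pull

max-concurrent-uploads: maximum number of concurrent uploads for each image push

live-restore: keeps containers running while the Docker daemon restarts, so restarting the docker service does not restart the containers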

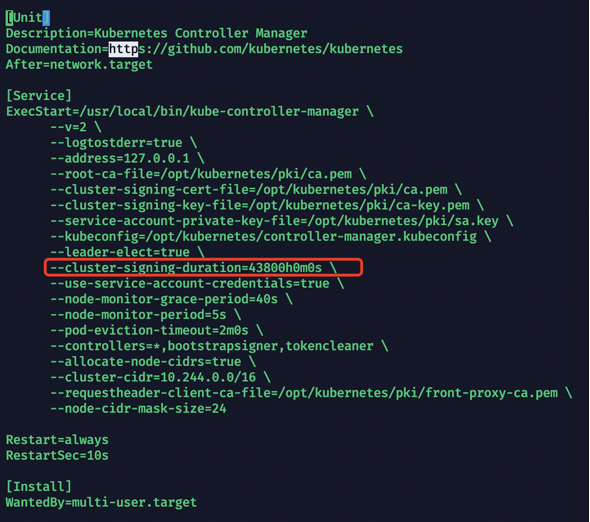
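A syntax error in daemon.json stops dockerd from starting, so validate the file before running `systemctl restart docker`. This minimal sketch assumes python3 is available on the node; its standard `json.tool` module serves only as a JSON parser. `validate_daemon_json` and `safe_restart_docker` are hypothetical names.

```bash
# Hypothetical helper: validate_daemon_json [FILE]
# Fails (non-zero) if FILE is not valid JSON. Defaults to Docker's config path.
validate_daemon_json() {
  local f="${1:-/etc/docker/daemon.json}"
  python3 -m json.tool "$f" >/dev/null
}

# Hypothetical helper: restart Docker only if its config parses.
safe_restart_docker() {
  validate_daemon_json || { echo "daemon.json is invalid, not restarting" >&2; return 1; }
  systemctl daemon-reload && systemctl restart docker
}
```

After the restart, `docker info --format '{{.CgroupDriver}}'` should print `systemd`, matching the exec-opts setting.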

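2.2 kube-controller-manager configuration tuning

On the master nodes:

vim /usr/lib/systemd/system/kube-controller-manager.service

Add the following flag to the ExecStart command line:

--cluster-signing-duration=43800h0m0s

systemctl daemon-reload

systemctl restart kube-controller-manager

Explanation: --cluster-signing-duration sets the validity period of the certificates that the controller manager signs automatically. The maximum supported is 5 years (43800h).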

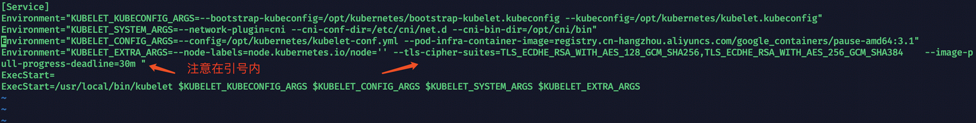
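The flag value is a number of years written out in hours: 5 × 365 × 24 = 43800. This small sketch computes the string for other periods, assuming 365-day years. `years_to_signing_duration` is a hypothetical name.

```bash
# Hypothetical helper: years_to_signing_duration YEARS
# Prints YEARS (365-day years) as the "<hours>h0m0s" string
# that --cluster-signing-duration accepts.
years_to_signing_duration() {
  echo "$(( $1 * 365 * 24 ))h0m0s"
}
```

The new duration applies only to certificates signed after the restart. To check a freshly issued certificate, run `openssl x509 -noout -enddate -in /var/lib/kubelet/pki/kubelet-client-current.pem`. That path is the kubelet's default `--cert-dir`, so adjust it if your install uses a different directory.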

2.3 kubelet configuration tuning

Run on every machine:

vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384 --image-pull-progress-deadline=30m "

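Explanation:

--tls-cipher-suites: restricts the TLS cipher suites the kubelet accepts, because the default list includes insecure ones

--image-pull-progress-deadline: if an image pull makes no progress within this deadline, the pull is cancelled. Raising it to 30m prevents large images on slow networks from being cancelled and retried repeatedly. This flag applies only to the Docker runtime and was removed in Kubernetes 1.24.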

vim /opt/kubernetes/kubelet-conf.yml

rotateServerCertificates: true
allowedUnsafeSysctls:
  - "net.core.*"
  - "net.ipv4.*"
kubeReserved:
  cpu: "100m"
  memory: 300Mi
  ephemeral-storage: 10Gi
systemReserved:
  cpu: "100m"
  memory: 300Mi
  ephemeral-storage: 10Gi

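Explanation:

rotateServerCertificates: the kubelet requests its serving certificate from the API server and rotates it automatically. These kubelet-serving CSRs are not auto-approved by kube-controller-manager; approve them with kubectl certificate approve.

allowedUnsafeSysctls: allows pods to set these kernel parameters (sysctls), which are treated as unsafe by default

kubeReserved: resources reserved for Kubernetes components such as the kubelet and the container runtime; size it according to the machine's resources

systemReserved: resources reserved for operating-system daemons (sshd, systemd, and so on); size it according to the machine's resources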

systemctl daemon-reload

systemctl restart kubelet

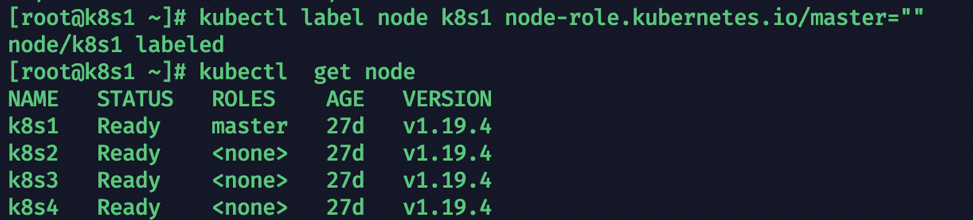
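kubeReserved and systemReserved are subtracted from the node's capacity to produce the Allocatable amount the scheduler works with: Allocatable = Capacity - kubeReserved - systemReserved - hard eviction threshold. This sketch does the calculation for memory in MiB. It assumes the kubelet's default hard eviction threshold of `memory.available<100Mi` unless you pass your own evictionHard value. `allocatable_mi` is a hypothetical name.

```bash
# Hypothetical helper: allocatable_mi CAPACITY KUBE_RESERVED SYSTEM_RESERVED [EVICTION]
# All values in MiB. EVICTION defaults to 100, the assumed default
# memory.available<100Mi hard eviction threshold.
allocatable_mi() {
  local cap="$1" kube="$2" sys="$3" evict="${4:-100}"
  echo $(( cap - kube - sys - evict ))
}
```

For example, an 8 GiB node with the 300Mi + 300Mi reservations above gives `allocatable_mi 8192 300 300`, which is 7492 MiB. Compare this with the Allocatable section of `kubectl describe node k8s1`, which reports memory in Ki.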

2.4 Change the ROLES of the master nodes

Set the role label on every master node (run once per master):

kubectl label node k8s1 node-role.kubernetes.io/master=""
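With several masters, the same label has to be applied to each one. This sketch loops over the nodes; `--overwrite` makes re-running it harmless. `label_masters` is a hypothetical name, and k8s2/k8s3 in the usage line are placeholder node names.

```bash
# Hypothetical helper: label_masters NODE...
# Adds the master role label to each node so `kubectl get node`
# shows "master" in the ROLES column. Stops at the first failure.
label_masters() {
  local n
  for n in "$@"; do
    kubectl label node "$n" node-role.kubernetes.io/master="" --overwrite || return 1
  done
}
```

Usage: `label_masters k8s1 k8s2 k8s3`.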

III. Enable CoreDNS autoscaling (optional)

1. Resource manifest

cat > dns-horizontal-autoscaler_v1.9.yaml << EOF
kind: ServiceAccount
apiVersion: v1
metadata:
  name: kube-dns-autoscaler
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: system:kube-dns-autoscaler
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["list", "watch"]
  - apiGroups: [""]
    resources: ["replicationcontrollers/scale"]
    verbs: ["get", "update"]
  - apiGroups: ["apps"]
    resources: ["deployments/scale", "replicasets/scale"]
    verbs: ["get", "update"]
  # Remove the configmaps rule once below issue is fixed:
  # kubernetes-incubator/cluster-proportional-autoscaler#16
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get", "create"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: system:kube-dns-autoscaler
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
  - kind: ServiceAccount
    name: kube-dns-autoscaler
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: system:kube-dns-autoscaler
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-dns-autoscaler
  namespace: kube-system
  labels:
    k8s-app: kube-dns-autoscaler
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kube-dns-autoscaler
  template:
    metadata:
      labels:
        k8s-app: kube-dns-autoscaler
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      securityContext:
        supplementalGroups: [ 65534 ]
        fsGroup: 65534
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: autoscaler
          image: registry.cn-beijing.aliyuncs.com/yuan-k8s/cluster-proportional-autoscaler-amd64:1.8.1
          resources:
            requests:
              cpu: "20m"
              memory: "10Mi"
          command:
            - /cluster-proportional-autoscaler
            - --namespace=kube-system
            - --configmap=kube-dns-autoscaler
            - --target=deployment/coredns
            - --default-params={"linear":{"coresPerReplica":16,"nodesPerReplica":4,"min":2,"max":4,"preventSinglePointFailure":true,"includeUnschedulableNodes":true}}
            - --logtostderr=true
            - --v=2
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      serviceAccountName: kube-dns-autoscaler
EOF

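2. Explanation

Parameters in --default-params:

min: minimum number of CoreDNS replicas

max: maximum number of CoreDNS replicas

coresPerReplica: one replica for every this many CPU cores in the cluster

nodesPerReplica: one replica for every this many nodes in the cluster

preventSinglePointFailure: when set to true and the cluster has more than one node, the controller ensures there are at least 2 replicas

includeUnschedulableNodes: when set to true, replicas are scaled on the total number of nodes; otherwise they are scaled only on the number of schedulable nodes

Note:

The value of --target= must match the name of the CoreDNS Deployment defined in kube-dns.yaml (here coredns).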
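To show how these parameters interact, here is a sketch of the linear-mode calculation as described in the cluster-proportional-autoscaler README. The replica count is max(ceil(cores / coresPerReplica), ceil(nodes / nodesPerReplica)), clamped to [min, max]. It is then raised to 2 when preventSinglePointFailure is true and there is more than one node. The function name is hypothetical, and it assumes positive integer coresPerReplica/nodesPerReplica values. Pass the total node count when includeUnschedulableNodes is true; otherwise pass only the schedulable nodes.

```bash
# Hypothetical helper:
# linear_replicas CORES NODES [CORES_PER_REPLICA] [NODES_PER_REPLICA] [MIN] [MAX] [PREVENT_SPOF]
# Defaults mirror the --default-params above: 16, 4, 2, 4, true.
# MAX=0 means "no upper bound".
linear_replicas() {
  local cores="$1" nodes="$2" cpr="${3:-16}" npr="${4:-4}"
  local min="${5:-2}" max="${6:-4}" spof="${7:-true}"
  # Integer ceiling division: ceil(a / b) = (a + b - 1) / b
  local by_cores=$(( (cores + cpr - 1) / cpr ))
  local by_nodes=$(( (nodes + npr - 1) / npr ))
  local r=$(( by_cores > by_nodes ? by_cores : by_nodes ))
  if [ "$max" -gt 0 ] && [ "$r" -gt "$max" ]; then r="$max"; fi
  if [ "$r" -lt "$min" ]; then r="$min"; fi
  if [ "$spof" = "true" ] && [ "$nodes" -gt 1 ] && [ "$r" -lt 2 ]; then r=2; fi
  echo "$r"
}
```

With the defaults above, a 3-node cluster with 8 cores gets `linear_replicas 8 3`, which is 2 replicas. A 40-node, 200-core cluster is capped at 4.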

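If the autoscaler reports that the ConfigMap does not exist, create the following resource. Its name must match the --configmap=kube-dns-autoscaler flag of the Deployment.

cat > dns-autoscaler-ConfigMap.yaml << EOF
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-dns-autoscaler
  namespace: kube-system
data:
  linear: |-
    {
      "coresPerReplica": 16,
      "nodesPerReplica": 4,
      "min": 2,
      "preventSinglePointFailure": true
    }
EOF

kubectl apply -f dns-autoscaler-ConfigMap.yaml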

3. Create

kubectl apply -f dns-horizontal-autoscaler_v1.9.yaml

4. Check

kubectl get po -n kube-system
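A Running kube-dns-autoscaler pod does not prove it is doing anything. This sketch prints the linear parameters from its ConfigMap and the replica count it has set on the coredns Deployment, using the names from the manifest above. `show_dns_autoscaling` is a hypothetical name.

```bash
# Hypothetical helper: show the autoscaler's parameters and the
# replica count it has applied to the coredns Deployment.
show_dns_autoscaling() {
  echo "params:   $(kubectl get configmap kube-dns-autoscaler -n kube-system -o jsonpath='{.data.linear}')"
  echo "replicas: $(kubectl get deployment coredns -n kube-system -o jsonpath='{.spec.replicas}')"
}
```

If the replica count does not match the expected value, check the autoscaler logs next with `kubectl logs -n kube-system deployment/kube-dns-autoscaler`.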