1. Download the EFK installation files

1.1 Download the EFK YAML manifests

    [root@k8s-master01 k8s]# cd efk-7.10.2/
    [root@k8s-master01 efk-7.10.2]# ls
    create-logging-namespace.yaml  es-service.yaml  es-statefulset.yaml  filebeat  fluentd-es-configmap.yaml  fluentd-es-ds.yaml  kafka  kibana-deployment.yaml  kibana-service.yaml

1.2 Create the Namespace required by EFK

    [root@k8s-master01 efk-7.10.2]# kubectl create -f create-logging-namespace.yaml
    namespace/logging created
    [root@k8s-master01 efk-7.10.2]# kubectl get ns
    logging   Active   3s
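The contents of create-logging-namespace.yaml are not shown in this post. As a rough sketch only, a minimal manifest that would produce the same `namespace/logging created` result could look like the following; the file name `logging-namespace-sketch.yaml` is made up for illustration and the real file may carry extra labels.

```shell
# Hypothetical stand-in for create-logging-namespace.yaml (the real file is not shown).
cat > logging-namespace-sketch.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: logging
EOF
```

It can be applied with `kubectl apply -f logging-namespace-sketch.yaml`, which, unlike `kubectl create`, does not fail if the namespace already exists.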

1.3 Create the Elasticsearch Service

    [root@k8s-master01 efk-7.10.2]# kubectl create -f es-service.yaml
    service/elasticsearch-logging created
    [root@k8s-master01 efk-7.10.2]# kubectl get svc -n logging
    NAME                    TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)             AGE
    elasticsearch-logging   ClusterIP   None         <none>        9200/TCP,9300/TCP   37s

2. Create the Elasticsearch cluster

This step mainly creates the ServiceAccount, ClusterRole, ClusterRoleBinding, and the StatefulSet together with its containers.

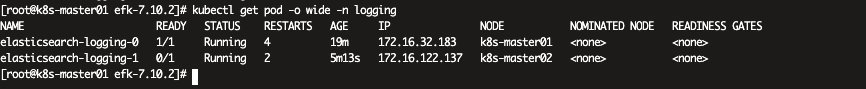

    [root@k8s-master01 efk-7.10.2]# kubectl create -f es-statefulset.yaml
    serviceaccount/elasticsearch-logging created
    clusterrole.rbac.authorization.k8s.io/elasticsearch-logging created
    clusterrolebinding.rbac.authorization.k8s.io/elasticsearch-logging created
    statefulset.apps/elasticsearch-logging created
    [root@k8s-master01 efk-7.10.2]# kubectl get statefulset -n logging
    NAME                    READY   AGE
    elasticsearch-logging   0/1     75s
    [root@k8s-master01 efk-7.10.2]# kubectl get pod -n logging
    NAME                      READY   STATUS            RESTARTS   AGE
    elasticsearch-logging-0   0/1     PodInitializing   0          83s
    [root@k8s-master01 efk-7.10.2]# kubectl get ClusterRoleBinding -n logging | grep elas
    elasticsearch-logging   ClusterRole/elasticsearch-logging   4m54s
    [root@k8s-master01 efk-7.10.2]# kubectl get ClusterRole-n logging | grep elas
    error: the server doesn't have a resource type "ClusterRole-n"
    [root@k8s-master01 efk-7.10.2]# kubectl get ClusterRole -n logging | grep elas
    elasticsearch-logging   2022-05-23T03:35:19Z
    [root@k8s-master01 efk-7.10.2]# kubectl get sa -n logging
    NAME                    SECRETS   AGE
    default                 1         13m
    elasticsearch-logging   1         5m32s
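The pod above is still in `PodInitializing`, so it can help to wait for the rollout and then ask Elasticsearch for its health. The helpers below are a sketch under several assumptions: Elasticsearch serves plain HTTP on port 9200 without authentication, local port 9200 is free, and `curl` is installed on the master. The function names `es_status` and `check_es_health` are made up for this example.

```shell
# Print the "status" value (green, yellow or red) from _cluster/health JSON on stdin.
es_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Wait for the StatefulSet, then query cluster health through a temporary port-forward.
check_es_health() {
  kubectl rollout status statefulset/elasticsearch-logging -n logging --timeout=300s || return 1
  kubectl port-forward -n logging pod/elasticsearch-logging-0 9200:9200 >/dev/null 2>&1 &
  pf_pid=$!
  sleep 3   # give the port-forward a moment to start listening
  status=$(curl -s http://127.0.0.1:9200/_cluster/health | es_status)
  kill "$pf_pid" 2>/dev/null
  echo "$status"
  [ -n "$status" ]   # non-zero exit if no status could be read
}
```

Running `check_es_health` should print one of the three status values once the pod is ready. A single-node cluster often reports `yellow` when indices have replicas configured.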

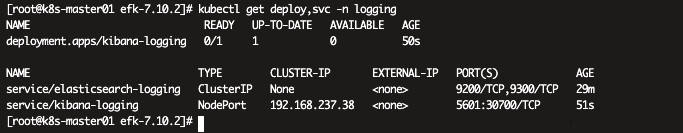

3. Create Kibana

    [root@k8s-master01 efk-7.10.2]# kubectl create -f kibana-deployment.yaml -f kibana-service.yaml
    deployment.apps/kibana-logging created
    service/kibana-logging created

4. Deploy the Fluentd DaemonSet

4.1 Deploy the ServiceAccount, ClusterRole, and ClusterRoleBinding

    [root@k8s-master01 efk-7.10.2]# kubectl create -f fluentd-es-ds.yaml
    serviceaccount/fluentd-es created
    clusterrole.rbac.authorization.k8s.io/fluentd-es created
    clusterrolebinding.rbac.authorization.k8s.io/fluentd-es created
    daemonset.apps/fluentd-es-v3.1.1 created

4.2 Deploy the ConfigMap that Fluentd depends on

    [root@k8s-master01 efk-7.10.2]# kubectl create -f fluentd-es-configmap.yaml

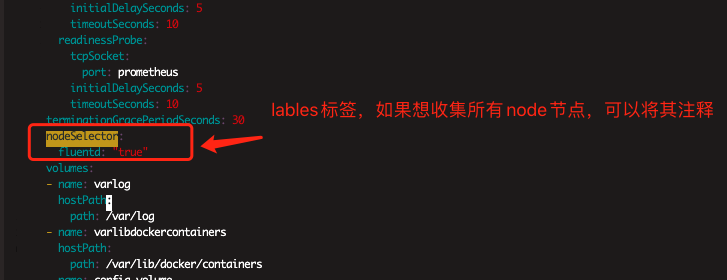

Note: decide, based on your own environment, whether logs should be collected from every node. If so, comment out the nodeSelector in fluentd-es-ds.yaml.

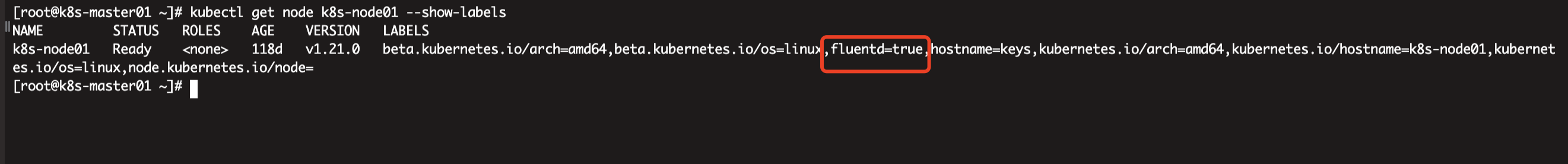

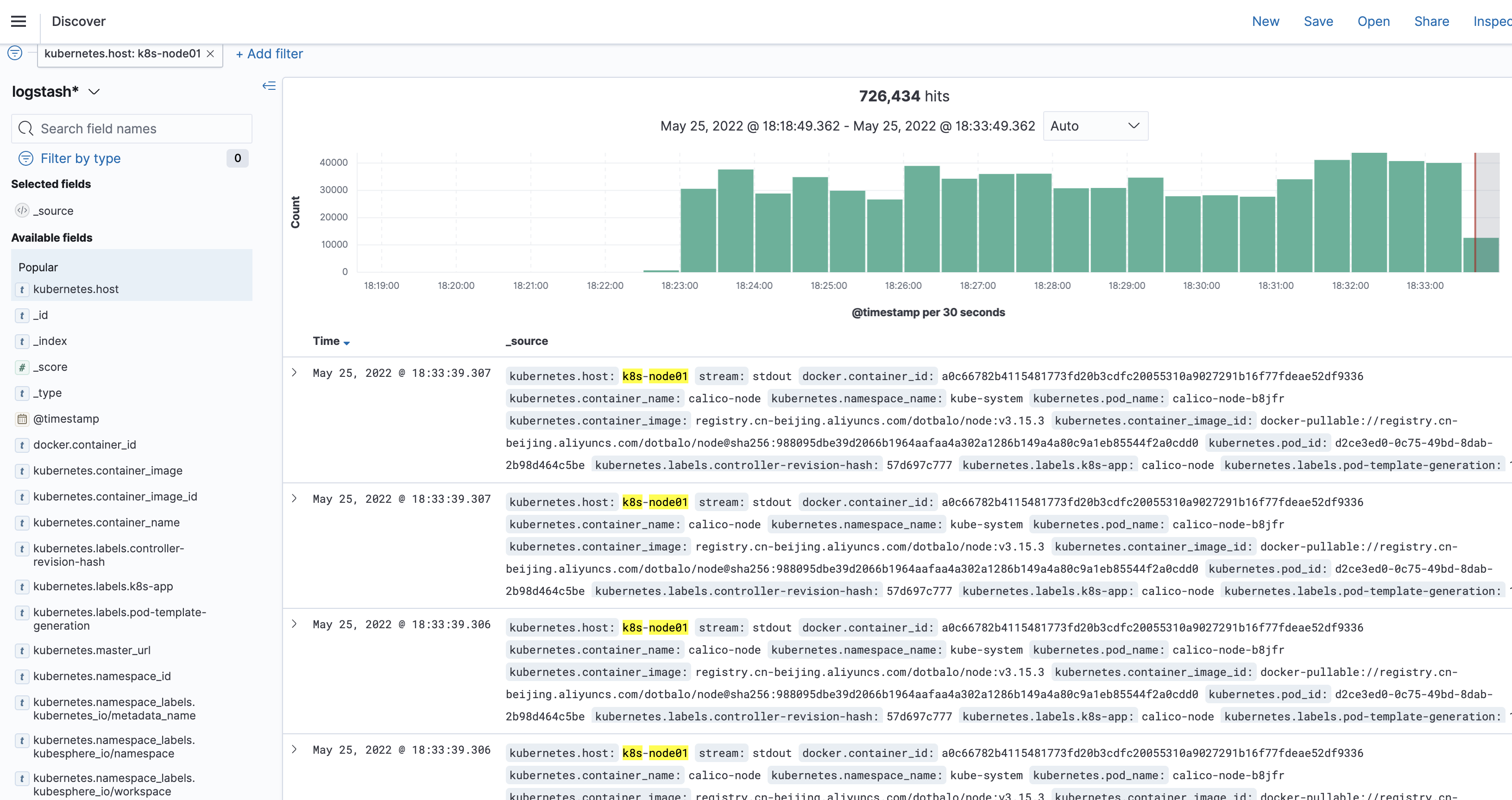

For this walkthrough, only k8s-node01 is labeled, so only that node's logs are collected.

    # kubectl label node k8s-node01 fluentd=true
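The labeling step can be wrapped in small helpers that also show where the DaemonSet pods landed. This is a sketch: it assumes the nodeSelector in fluentd-es-ds.yaml matches `fluentd=true`, as the label command above suggests, and the function names are invented for the example.

```shell
# Label a node so the Fluentd DaemonSet schedules onto it, then show the result.
enable_fluentd_on_node() {
  node="$1"
  kubectl label node "$node" fluentd=true --overwrite
  kubectl get nodes -l fluentd=true      # nodes currently selected for log collection
  kubectl get pod -n logging -o wide     # the NODE column shows where each pod runs
}

# Remove the label again; the trailing "-" deletes the key.
disable_fluentd_on_node() {
  kubectl label node "$1" fluentd-
}
```

For example, `enable_fluentd_on_node k8s-node01` repeats the step above, and `disable_fluentd_on_node k8s-node01` stops collection on that node.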

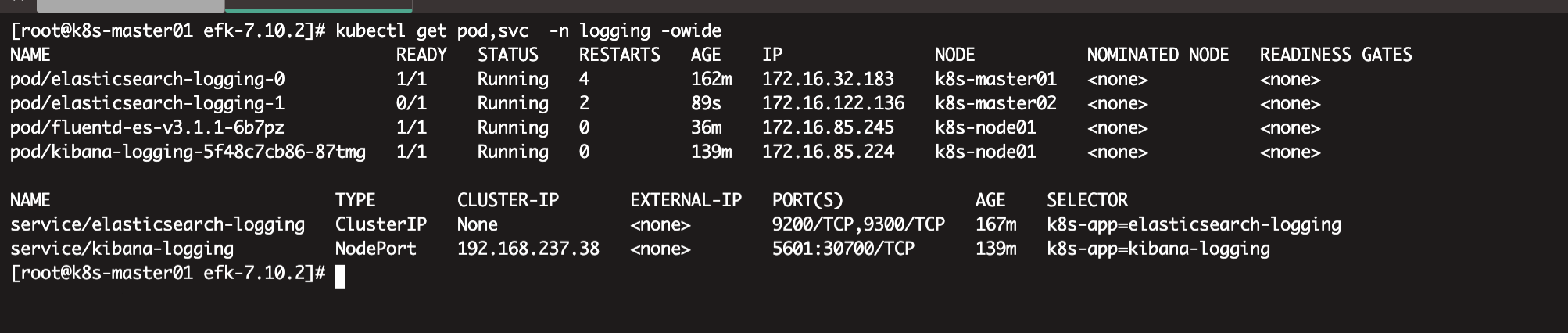

At this point, all EFK components are running normally.

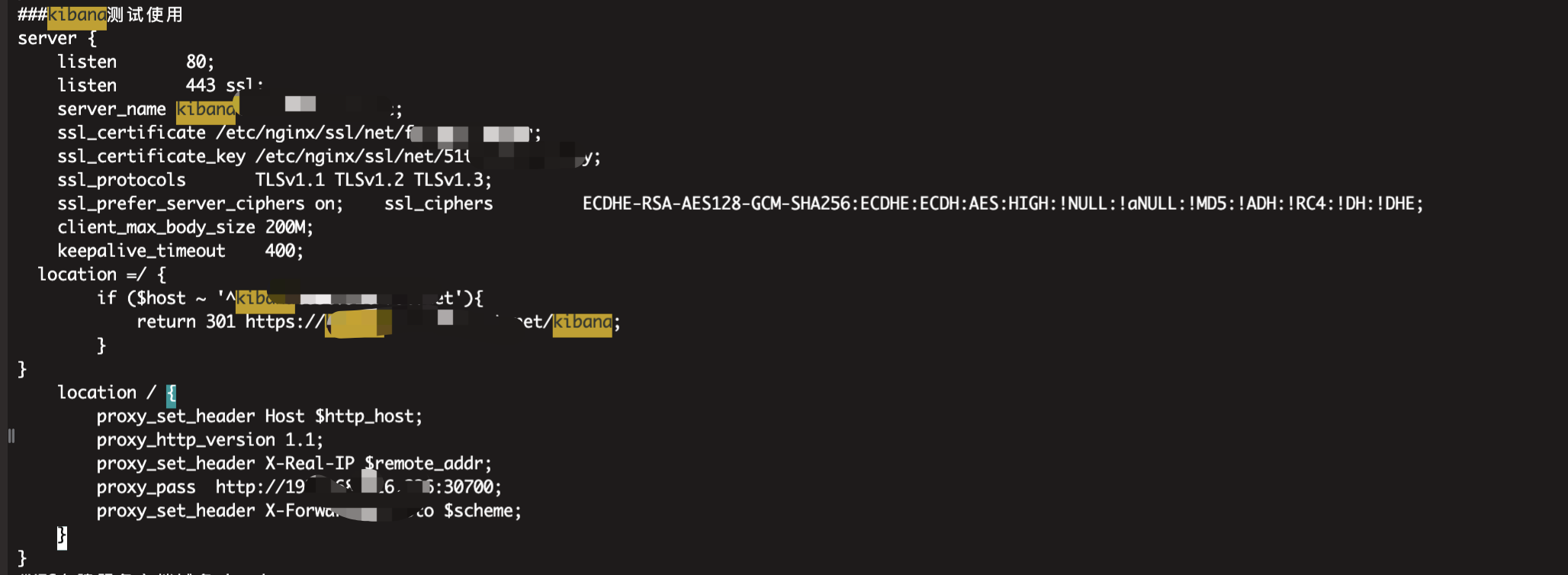

5. Nginx proxy

This step is optional. If you do not want to set up a domain name, you can access Kibana directly at a node IP on port 30700.
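To confirm the port before opening the browser, the NodePort can be read from the Service. The helpers below are a sketch that assumes kibana-service.yaml defines a NodePort Service whose first port is the Kibana HTTP port. The function names and the `192.0.2.10` address in the usage line are placeholders.

```shell
# Print the NodePort assigned to the Kibana Service (expected to be 30700 in this setup).
kibana_nodeport() {
  kubectl get svc kibana-logging -n logging -o jsonpath='{.spec.ports[0].nodePort}'
}

# Build the access URL for a given node IP.
kibana_url() {
  echo "http://$1:$(kibana_nodeport)"
}
```

Usage: `kibana_url 192.0.2.10`, with the placeholder replaced by the IP of any node in the cluster.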

An nginx proxy configuration is provided here for reference.

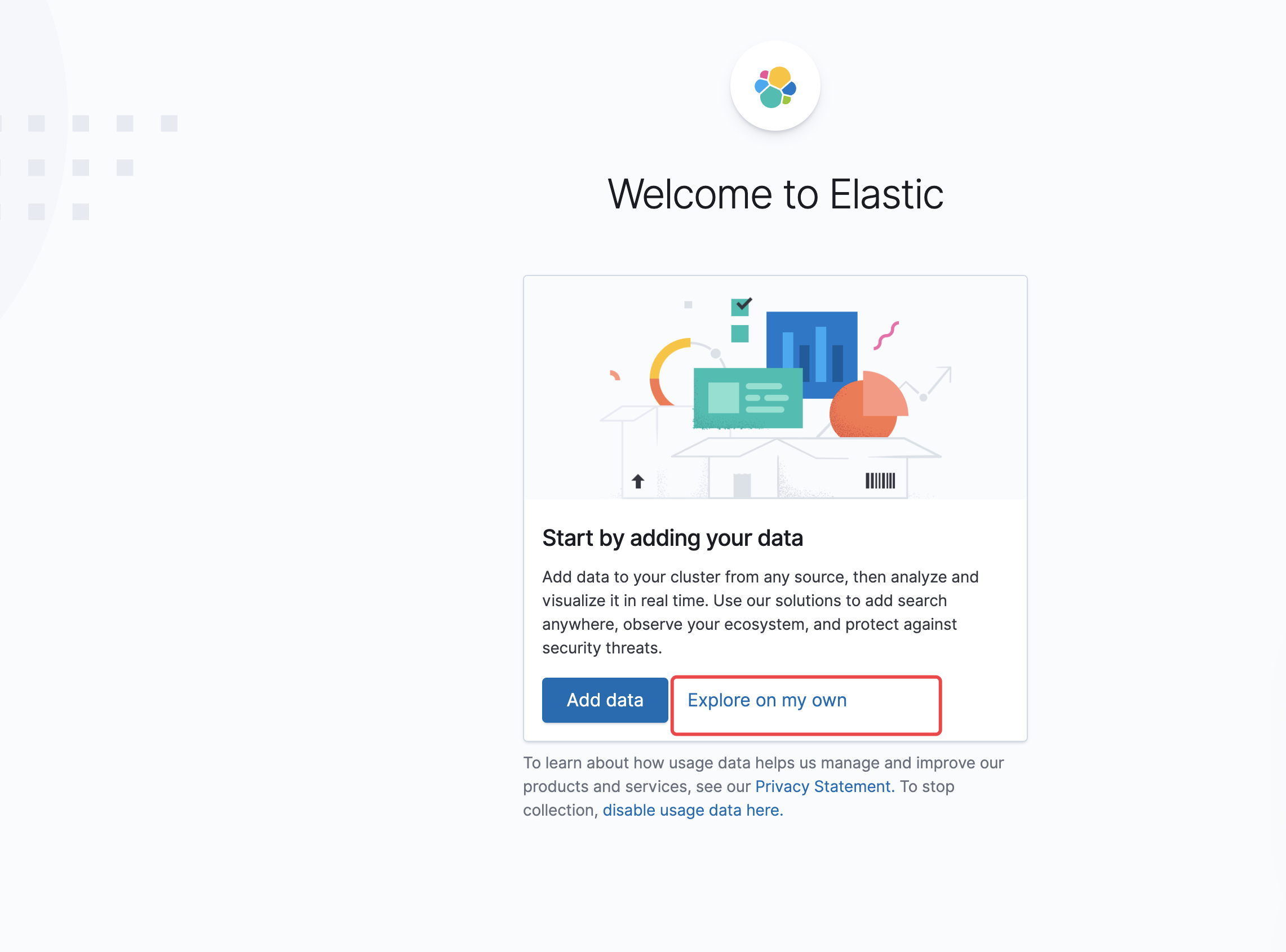
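The configuration in the screenshot is not reproduced as text, so the following is only a minimal sketch of what such a reverse proxy might look like, not the author's actual file. The domain `kibana.example.com`, the node address `192.0.2.10`, and the file name are all placeholders.

```shell
# Write a hypothetical nginx server block that forwards to Kibana's NodePort.
cat > kibana-proxy-sketch.conf <<'EOF'
server {
    listen 80;
    server_name kibana.example.com;           # assumed domain

    location / {
        proxy_pass http://192.0.2.10:30700;   # any node IP plus the Kibana NodePort
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
EOF
```

After copying the file into nginx's configuration directory, `nginx -t` checks the syntax and `nginx -s reload` applies it.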

Verify access through the proxied domain name

Add data

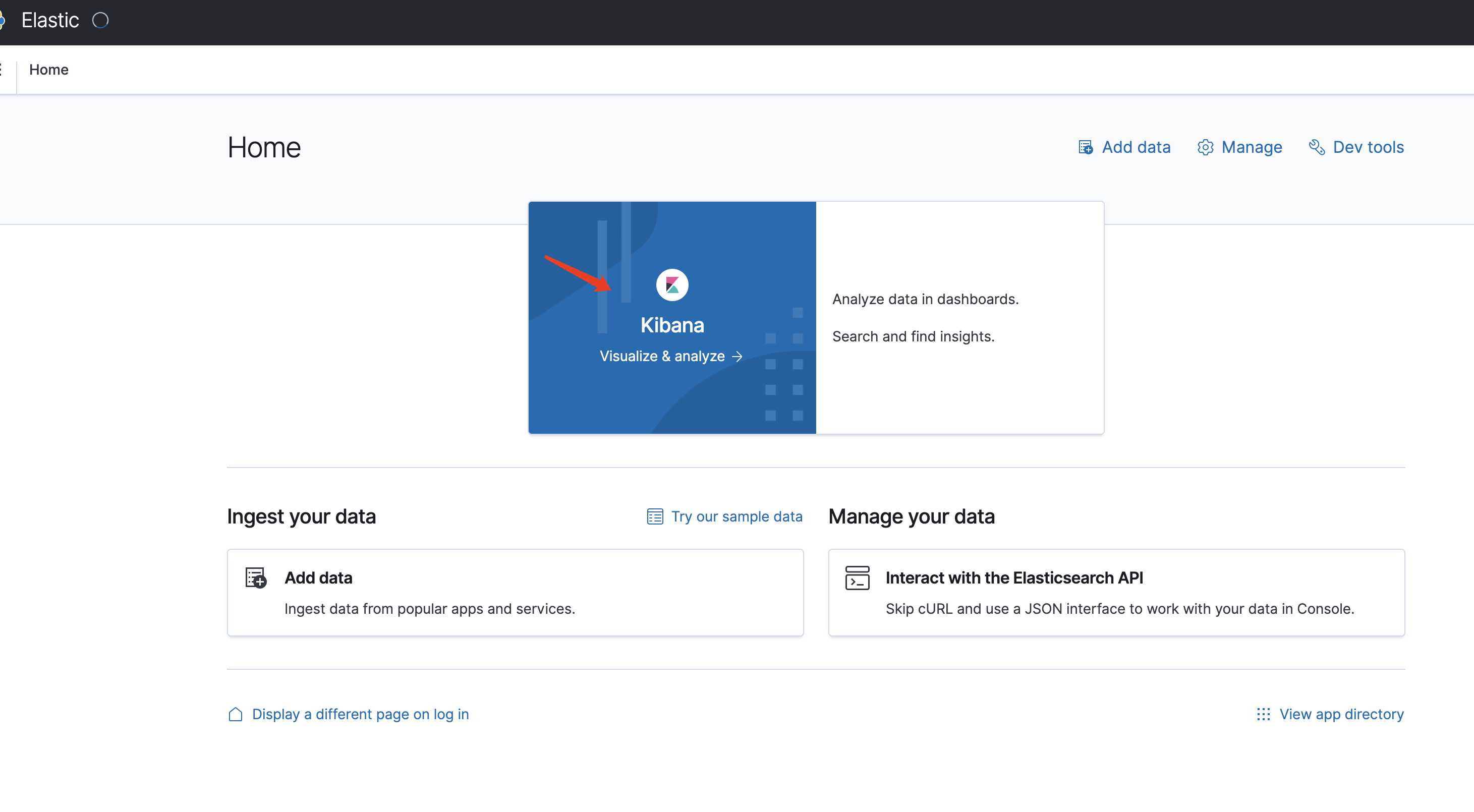

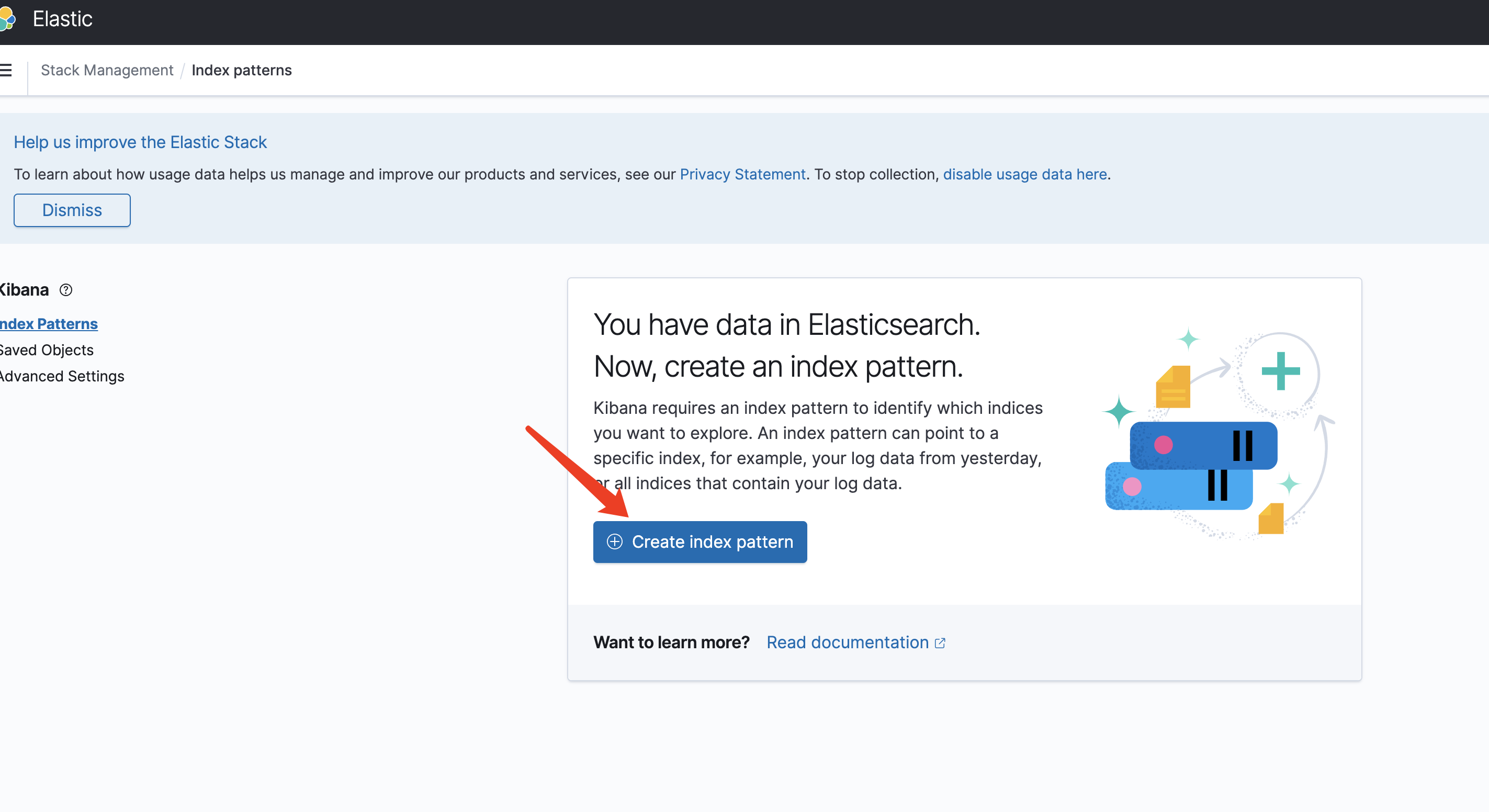

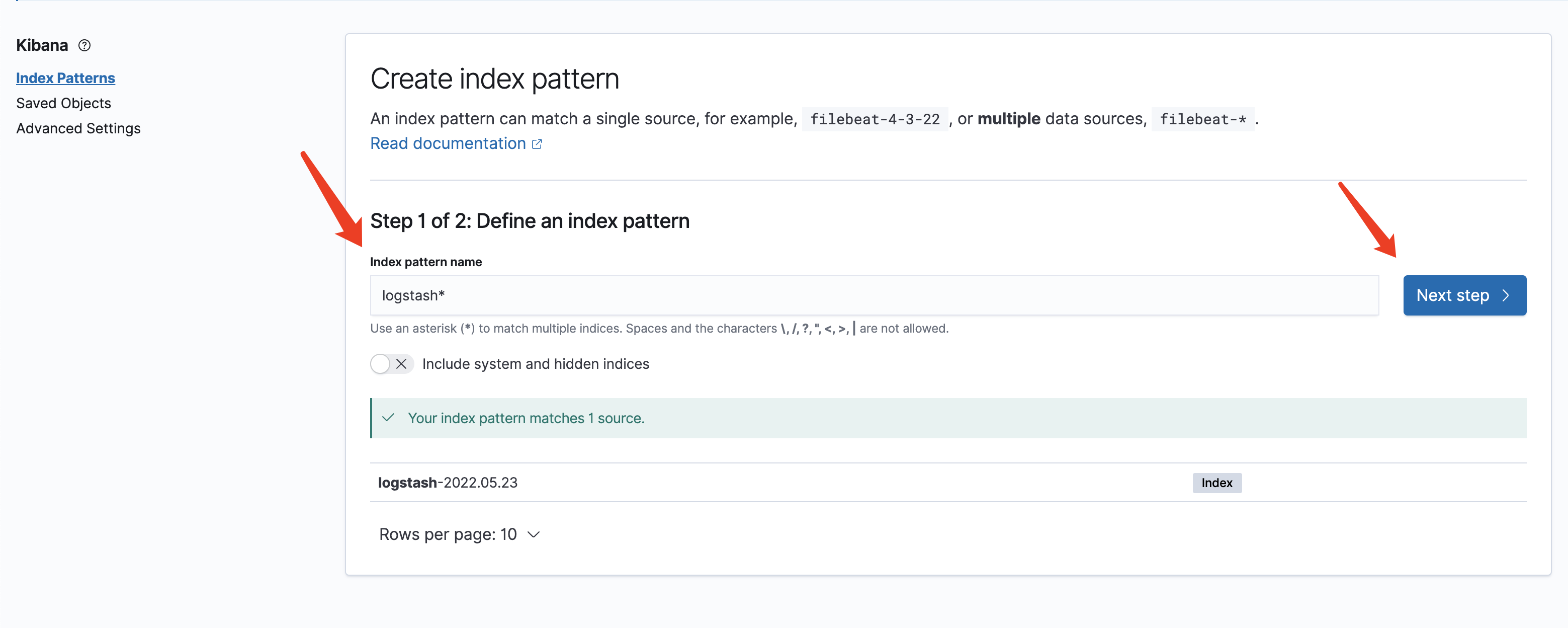

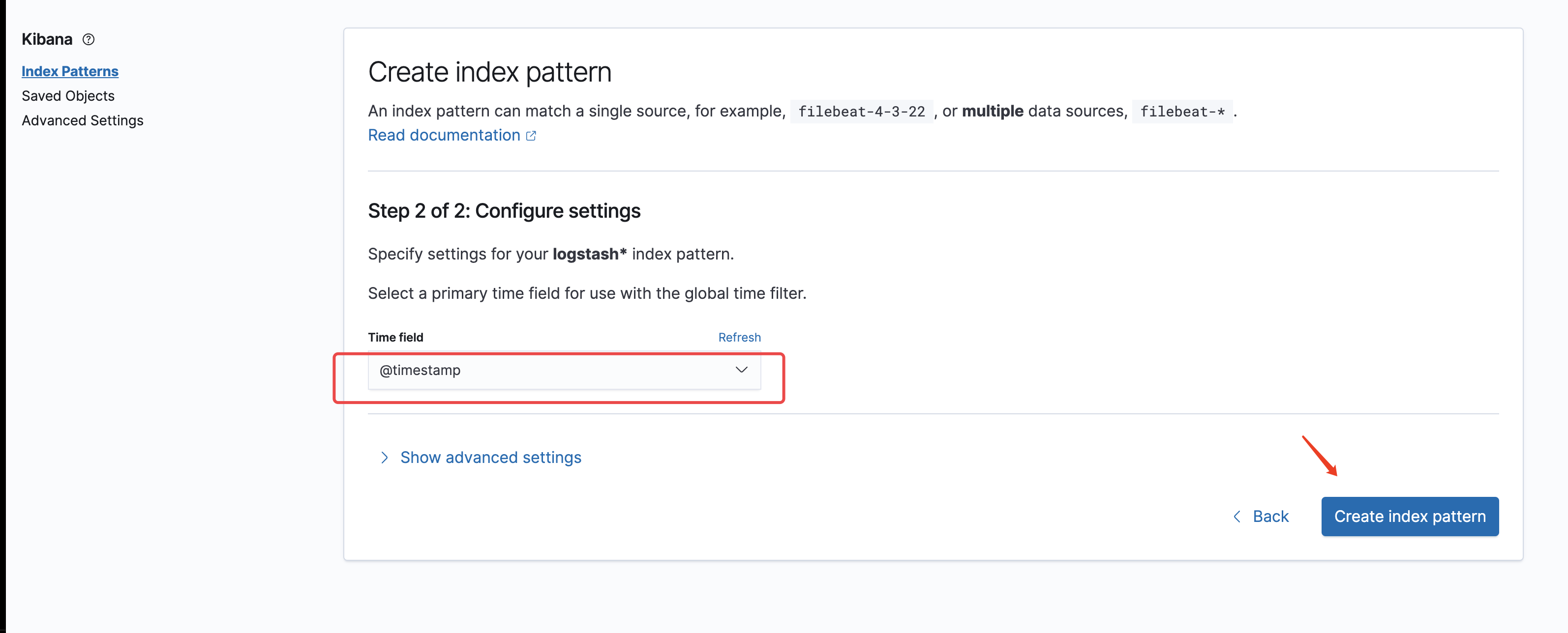

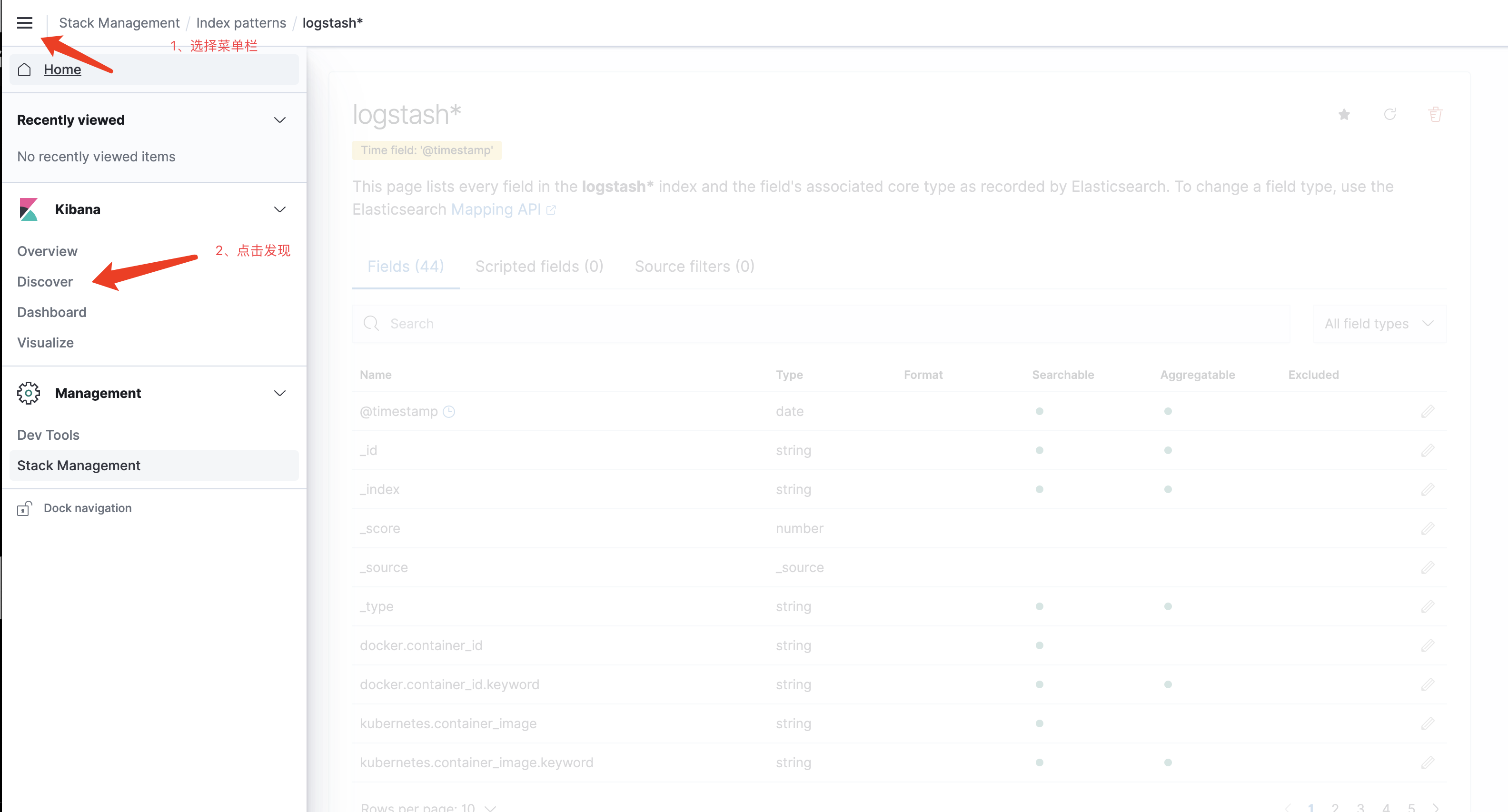

Create the index pattern

The logs are then displayed.
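If no logs appear, it can be useful to check which indices Fluentd has actually written, since the index pattern in Kibana has to match them. The helper below is a sketch. It assumes Elasticsearch is reachable at the given URL (for example after running `kubectl port-forward -n logging pod/elasticsearch-logging-0 9200:9200` in another terminal), and the default pattern `logstash-*` is an assumption about the Fluentd output settings in fluentd-es-configmap.yaml.

```shell
# List indices matching a pattern; both arguments are optional.
#   $1: Elasticsearch base URL (assumed reachable, e.g. through a port-forward)
#   $2: index pattern (logstash-* is an assumption about the Fluentd output config)
list_log_indices() {
  es_url="${1:-http://127.0.0.1:9200}"
  pattern="${2:-logstash-*}"
  curl -s "$es_url/_cat/indices/$pattern?v"
}
```

Calling `list_log_indices` with no arguments uses the defaults. A different pattern can be passed as the second argument if the indices use another prefix.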

END!