1. Extract the archive

    tar -zxvf hbase-2.0.5-bin.tar.gz -C /opt/bigdata/

2. Delete the docs directory (so that copying the installation to other nodes later takes less time)

3. Configure environment variables

Add the following to /etc/profile, then reload it:

    export HBASE_HOME=/opt/bigdata/hbase-2.0.5
    export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin

    source /etc/profile
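Because /etc/profile may be sourced more than once in a session, a plain PATH append adds the same directories again each time. A minimal sketch of an idempotent variant follows; the helper name path_append is hypothetical and not part of HBase or Hadoop.

```shell
# path_append DIR: add DIR to PATH only if it is not already present.
# (path_append is a hypothetical helper name, not part of HBase or Hadoop.)
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;                 # already on PATH, do nothing
    *) PATH="$PATH:$1" ;;
  esac
}

export HBASE_HOME=/opt/bigdata/hbase-2.0.5
path_append "$HBASE_HOME/bin"
export PATH
```

Calling path_append a second time with the same directory leaves PATH unchanged.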

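Edit hbase-env.sh and set JAVA_HOME. From inside vi, the :! ls command lists the Java installation directory without leaving the file:

    vi hbase-env.sh

    :! ls /usr/java
    export JAVA_HOME=/usr/java/jdk1.8.0_181-amd64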

Edit hbase-site.xml. The configuration below can be used as-is:

    vi hbase-site.xml

    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>file:///home/testuser/hbase</value>
      </property>
      <property>
        <name>hbase.zookeeper.property.dataDir</name>
        <value>/home/testuser/zookeeper</value>
      </property>
      <property>
        <name>hbase.unsafe.stream.capability.enforce</name>
        <value>false</value>
        <description>
          Controls whether HBase will check for stream capabilities (hflush/hsync).
          Disable this if you intend to run on LocalFileSystem, denoted by a rootdir
          with the 'file://' scheme, but be mindful of the NOTE below.
          WARNING: Setting this to false blinds you to potential data loss and
          inconsistent system state in the event of process and/or node failures.
          If HBase is complaining of an inability to use hsync or hflush it's most
          likely not a false positive.
        </description>
      </property>
    </configuration>

Start HBase:

    start-hbase.sh

If startup reports an error, delete hadoop/share/hadoop/yarn/jline-2.12.jar.

Enter the HBase shell:

    hbase shell

List all tables:

    list

List namespaces with list_namespace:

    hbase(main):001:0> list_namespace
    NAMESPACE
    default
    hbase
    2 row(s)
    Took 0.6773 seconds

List the tables in a given namespace:

    hbase(main):003:0> list_namespace_tables 'hbase'
    TABLE
    meta
    namespace
    2 row(s)
    Took 0.0359 seconds
    => ["meta", "namespace"]

View the contents of a table:

    scan 'hbase:meta'

For a table you created yourself:

    scan 'table name'

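Create a table

Format: create 'table', 'column family'

Tables are stored under /home/testuser/hbase/data/default. To see newly written data in the files there, flush it to disk first: flush 'table'

    hbase(main):008:0> create 'ke','cf1'
    Created table ke
    Took 0.8761 seconds
    => Hbase::Table - ke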

Use describe 'table' to show the column families of a table:

    hbase(main):014:0> describe 'ke'
    Table ke is ENABLED
    ke
    COLUMN FAMILIES DESCRIPTION
    {NAME => 'cf1', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false',
     NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE',
     CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE',
     TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0',
     BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false',
     CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false',
     COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}
    1 row(s)
    Took 0.1171 seconds

Insert data:

    hbase(main):015:0> put 'ke', '1', 'cf1:name', '小柯'
    Took 0.0622 seconds
    hbase(main):016:0> put 'ke', '1', 'cf1:age', '23'
    Took 0.0042 seconds
    hbase(main):017:0> scan 'ke'
    ROW    COLUMN+CELL
     1     column=cf1:age, timestamp=1603214083258, value=23
     1     column=cf1:name, timestamp=1603214064282, value=\xE5\xB0\x8F\xE6\x9F\xAF
    1 row(s)
    Took 0.0062 seconds
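The shell prints non-ASCII values as escaped bytes. As a quick check outside HBase, the six bytes E5 B0 8F E6 9F AF shown for cf1:name can be written back out as UTF-8 text. The sketch below uses octal escapes because they are the portable form for printf; the variable names are only for illustration.

```shell
# Bytes E5 B0 8F E6 9F AF from the scan output, written as octal escapes.
value=$(printf '\345\260\217\346\237\257')
printf '%s\n' "$value"

# Byte length of the value; the arithmetic expansion strips any padding wc adds.
nbytes=$(( $(printf '%s' "$value" | wc -c) ))
printf '%s bytes\n' "$nbytes"
```

On a UTF-8 terminal the first line prints the original two-character string, and the byte count is what HBase reports as vlen for that cell.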

Drop a table: disable it first, then drop it:

    hbase(main):019:0> disable 'ke1'
    Took 0.4648 seconds
    hbase(main):020:0> drop 'ke1'
    Took 0.2616 seconds
    hbase(main):021:0>

View the stored data directly from the command line:

    [root@node01 cf1]# hbase hfile -p -f file:///home/testuser/hbase/data/default/ke/0f0002c39a10819fd2b84c92c6a6ec0a/cf1/00fba8e5471c419da40a0a6fd977070f
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/bigdata/hbase-2.0.5/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/bigdata/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    2020-10-21 02:14:56,737 INFO [main] metrics.MetricRegistries: Loaded MetricRegistries class org.apache.hadoop.hbase.metrics.impl.MetricRegistriesImpl
    K: 1/cf1:age/1603214083258/Put/vlen=2/seqid=5 V: 23
    K: 1/cf1:name/1603214064282/Put/vlen=6/seqid=4 V: \xE5\xB0\x8F\xE6\x9F\xAF
    Scanned kv count -> 2

Reading the key K: 1/cf1:age/1603214083258/Put/vlen=2/seqid=5 V: 23 field by field:

1 is the row key
cf1:age is the column
1603214083258 is the timestamp
Put is the operation type
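The key layout described above can be split mechanically. The following is a rough sketch, assuming the row key itself contains no '/' character; parse_hfile_key is a hypothetical helper name, not an HBase tool.

```shell
# parse_hfile_key KEY: split a key printed by "hbase hfile -p" into its fields.
# Hypothetical helper; assumes the row key contains no '/'.
parse_hfile_key() {
  (
    IFS='/'     # split on slashes, inside a subshell so IFS does not leak
    set -f      # disable globbing while the key is split
    set -- $1
    printf 'row=%s column=%s timestamp=%s type=%s\n' "$1" "$2" "$3" "$4"
  )
}

parse_hfile_key '1/cf1:age/1603214083258/Put/vlen=2/seqid=5'
```

The trailing vlen and seqid fields are ignored here; they could be read from the fifth and sixth positions in the same way.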

Rows are sorted on insert, in lexicographic (dictionary) order.
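Lexicographic ordering means numeric-looking row keys do not sort numerically. A rough illustration outside HBase, using sort in the C locale as a stand-in for byte-wise comparison (the sample keys are made up):

```shell
# Made-up row keys; LC_ALL=C makes sort compare raw bytes.
sorted=$(printf '%s\n' 2 10 1 row-9 row-10 | LC_ALL=C sort)
printf '%s\n' "$sorted"

# Zero-padding keeps numeric order and byte order in agreement.
padded=$(printf '%03d\n' 2 10 1 | LC_ALL=C sort)
printf '%s\n' "$padded"
```

In the first list, 10 lands before 2 and row-10 before row-9; the padded keys come out in numeric order, which is why fixed-width keys are a common choice when scan order matters.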

Setting up an HBase cluster

First stop the standalone instance started above:

    stop-hbase.sh

Edit hbase-env.sh so that HBase does not manage its own ZooKeeper:

    vi hbase-env.sh

    export HBASE_MANAGES_ZK=false

Edit hbase-site.xml:

    vi hbase-site.xml

    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>hdfs://mycluster/hbase</value>
      </property>
      <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
      </property>
      <property>
        <name>hbase.zookeeper.quorum</name>
        <value>node02,node03,node04</value>
      </property>
    </configuration>

Edit regionservers and list one RegionServer host per line:

    vi regionservers

    node02
    node03
    node04

To use node04 as a backup master, create a backup-masters file containing node04:

    vi backup-masters

    node04
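Both node list files are plain text with one host name per line, so they can also be generated rather than typed in vi. A small sketch, written to a scratch directory so it is safe to try; write_node_lists is a hypothetical helper name.

```shell
# write_node_lists CONF_DIR: (hypothetical helper) write the two node list files.
write_node_lists() {
  conf_dir=$1
  mkdir -p "$conf_dir"
  printf '%s\n' node02 node03 node04 > "$conf_dir/regionservers"
  printf '%s\n' node04 > "$conf_dir/backup-masters"
}

# Scratch directory for the demo; pass the real conf directory when ready.
scratch=$(mktemp -d)
write_node_lists "$scratch"
cat "$scratch/regionservers"
```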

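Make the Hadoop configuration readable by HBase

In the HBase conf directory, copy in hdfs-site.xml:

    cp /opt/bigdata/hadoop-2.6.5/etc/hadoop/hdfs-site.xml ./

Then, from the /opt/bigdata directory, copy the installation to the other nodes:

    scp -r hbase-2.0.5/ node02:`pwd`
    scp -r hbase-2.0.5/ node03:`pwd`
    scp -r hbase-2.0.5/ node04:`pwd`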
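The three scp commands differ only in the host name, so they can be written as a loop. The sketch below prints the commands by default instead of running them; DRY_RUN and copy_to_nodes are names introduced here for illustration.

```shell
# Run from /opt/bigdata. With DRY_RUN=1 (the default here) commands are only printed.
DRY_RUN=${DRY_RUN:-1}

# copy_to_nodes HOST...: copy the HBase directory to the same path on each host.
copy_to_nodes() {
  for node in "$@"; do
    if [ "$DRY_RUN" = 1 ]; then
      echo "scp -r hbase-2.0.5/ $node:$PWD"
    else
      scp -r hbase-2.0.5/ "$node:$PWD"
    fi
  done
}

copy_to_nodes node02 node03 node04
```

Setting DRY_RUN=0 before the call performs the actual copy.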

Configure the HBase environment variables on node02, node03, and node04 as well.

Start HBase from node01:

    start-hbase.sh

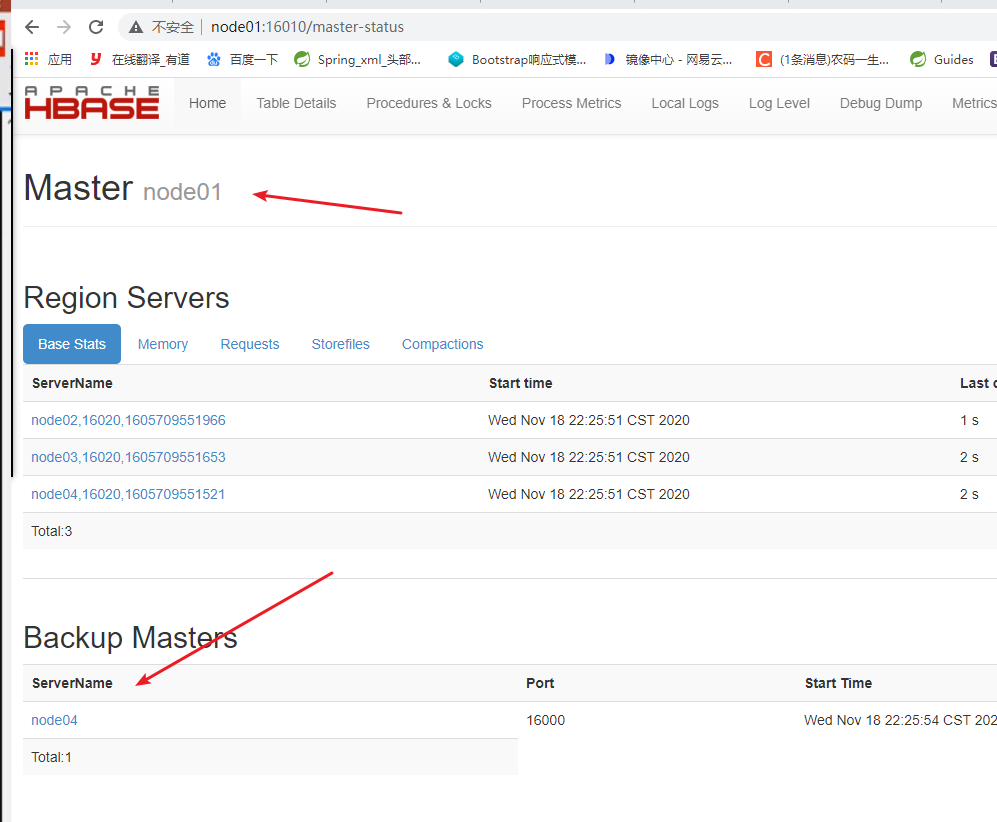

Open node01:16010 in a browser.

The master has one backup, node04.

Running the following on node03 adds node03 as another backup master:

    hbase-daemon.sh start master

HDFS now also contains a new directory: hbase

HBase compaction

Configure conf/hbase-site.xml as shown in the figure below.

After the third insert, two files are generated; one of them is the compacted (merged) file.

To view a file (the argument is an HDFS file path):

    hbase hfile -p -f /hbase/data/default/psn/8edxxx/cf/874dxxx

Clear all data in a table:

    truncate 'table name'

delete removes only a single entry.

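Notes

If any of the following occurs:

1. Tables appear empty and data cannot be created, with no error reported.
2. Connecting from IDEA also fails to create anything, with no error reported.
3. The error org.apache.hadoop.hbase.PleaseHoldException: Master is initializing appears.
4. start-hbase.sh works normally but stop-hbase.sh does not (check /etc/profile first).

The fix for all of the above:

1. Stop HBase.
2. Delete the /hbase directory on HDFS.
3. Delete the /hbase node in ZooKeeper.
4. Restart HBase.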
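The reset procedure can be sketched as a script. Note that it discards all HBase data. Several details below are assumptions rather than part of these notes: the ZooKeeper address node02:2181, a ZooKeeper 3.4.x client (where the recursive delete command is rmr; newer clients use deleteall), and the DRY_RUN switch, which defaults to printing the commands instead of running them.

```shell
# run CMD...: print the command when DRY_RUN=1 (the default), otherwise execute it.
DRY_RUN=${DRY_RUN:-1}
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# reset_hbase_state: hypothetical helper following the four steps in the notes.
reset_hbase_state() {
  run stop-hbase.sh
  run hdfs dfs -rm -r /hbase
  # Assumption: ZooKeeper 3.4.x reachable at node02:2181.
  run zkCli.sh -server node02:2181 rmr /hbase
  run start-hbase.sh
}

reset_hbase_state
```

Review the printed commands first, then rerun with DRY_RUN=0 once the host, port, and ZooKeeper command match the cluster.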