1. Launching Spark on YARN with the command `spark-shell --master yarn-client` fails with the following error:

18/04/22 09:28:22 ERROR SparkContext: Error initializing SparkContext.
org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.
    at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:123)
    at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:63)
    at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:144)
    at org.apache.spark.SparkContext.<init>(SparkContext.scala:523)
    at org.apache.spark.repl.SparkILoop.createSparkContext(SparkILoop.scala:1017)
    at $line3.$read$$iwC$$iwC.<init>(<console>:9)
    at $line3.$read$$iwC.<init>(<console>:18)
    at $line3.$read.<init>(<console>:20)
    at $line3.$read$.<init>(<console>:24)
    at $line3.$read$.<clinit>(<console>)
    at $line3.$eval$.<init>(<console>:7)
    at $line3.$eval$.<clinit>(<console>)
    at $line3.$eval.$print(<console>)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.spark.repl.SparkIMain$ReadEvalPrint.call(SparkIMain.scala:1065)
    at org.apache.spark.repl.SparkIMain$Request.loadAndRun(SparkIMain.scala:1340)
    at org.apache.spark.repl.SparkIMain.loadAndRunReq$1(SparkIMain.scala:840)
    at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:871)
    at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:819)
    at org.apache.spark.repl.SparkILoop.reallyInterpret$1(SparkILoop.scala:857)
    at org.apache.spark.repl.SparkILoop.interpretStartingWith(SparkILoop.scala:902)
    at org.apache.spark.repl.SparkILoop.command(SparkILoop.scala:814)
    at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:125)
    at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:124)
    at org.apache.spark.repl.SparkIMain.beQuietDuring(SparkIMain.scala:324)
    at org.apache.spark.repl.SparkILoopInit$class.initializeSpark(SparkILoopInit.scala:124)
    at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1$$anonfun$apply$mcZ$sp$5.apply$mcV$sp(SparkILoop.scala:974)
    at org.apache.spark.repl.SparkILoopInit$class.runThunks(SparkILoopInit.scala:159)
    at org.apache.spark.repl.SparkILoop.runThunks(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoopInit$class.postInitialization(SparkILoopInit.scala:108)
    at org.apache.spark.repl.SparkILoop.postInitialization(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply$mcZ$sp(SparkILoop.scala:991)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)
    at scala.tools.nsc.util.ScalaClassLoader$.savingContextLoader(ScalaClassLoader.scala:135)
    at org.apache.spark.repl.SparkILoop.org$apache$spark$repl$SparkILoop$$process(SparkILoop.scala:945)
    at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:1059)
    at org.apache.spark.repl.Main$.main(Main.scala:31)
    at org.apache.spark.repl.Main.main(Main.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:672)
    at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:205)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:120)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
18/04/22 09:28:22 INFO SparkUI: Stopped Spark web UI at http://192.168.19.131:4040
18/04/22 09:28:22 INFO DAGScheduler: Stopping DAGScheduler
18/04/22 09:28:22 INFO YarnClientSchedulerBackend: Shutting down all executors
18/04/22 09:28:22 INFO YarnClientSchedulerBackend: Asking each executor to shut down
18/04/22 09:28:22 INFO YarnClientSchedulerBackend: Stopped
18/04/22 09:28:22 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
18/04/22 09:28:22 ERROR Utils: Uncaught exception in thread main
java.lang.NullPointerException
    at org.apache.spark.network.netty.NettyBlockTransferService.close(NettyBlockTransferService.scala:152)
    at org.apache.spark.storage.BlockManager.stop(BlockManager.scala:1228)
    at org.apache.spark.SparkEnv.stop(SparkEnv.scala:100)
    at org.apache.spark.SparkContext$$anonfun$stop$12.apply$mcV$sp(SparkContext.scala:1749)
    at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1185)
    at org.apache.spark.SparkContext.stop(SparkContext.scala:1748)
    at org.apache.spark.SparkContext.<init>(SparkContext.scala:593)
    at org.apache.spark.repl.SparkILoop.createSparkContext(SparkILoop.scala:1017)
    at $line3.$read$$iwC$$iwC.<init>(<console>:9)
    at $line3.$read$$iwC.<init>(<console>:18)
    at $line3.$read.<init>(<console>:20)
    at $line3.$read$.<init>(<console>:24)
    at $line3.$read$.<clinit>(<console>)
    at $line3.$eval$.<init>(<console>:7)
    at $line3.$eval$.<clinit>(<console>)
    at $line3.$eval.$print(<console>)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.spark.repl.SparkIMain$ReadEvalPrint.call(SparkIMain.scala:1065)
    at org.apache.spark.repl.SparkIMain$Request.loadAndRun(SparkIMain.scala:1340)
    at org.apache.spark.repl.SparkIMain.loadAndRunReq$1(SparkIMain.scala:840)
    at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:871)
    at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:819)
    at org.apache.spark.repl.SparkILoop.reallyInterpret$1(SparkILoop.scala:857)
    at org.apache.spark.repl.SparkILoop.interpretStartingWith(SparkILoop.scala:902)
    at org.apache.spark.repl.SparkILoop.command(SparkILoop.scala:814)
    at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:125)
    at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:124)
    at org.apache.spark.repl.SparkIMain.beQuietDuring(SparkIMain.scala:324)
    at org.apache.spark.repl.SparkILoopInit$class.initializeSpark(SparkILoopInit.scala:124)
    at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1$$anonfun$apply$mcZ$sp$5.apply$mcV$sp(SparkILoop.scala:974)
    at org.apache.spark.repl.SparkILoopInit$class.runThunks(SparkILoopInit.scala:159)
    at org.apache.spark.repl.SparkILoop.runThunks(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoopInit$class.postInitialization(SparkILoopInit.scala:108)
    at org.apache.spark.repl.SparkILoop.postInitialization(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply$mcZ$sp(SparkILoop.scala:991)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)
    at scala.tools.nsc.util.ScalaClassLoader$.savingContextLoader(ScalaClassLoader.scala:135)
    at org.apache.spark.repl.SparkILoop.org$apache$spark$repl$SparkILoop$$process(SparkILoop.scala:945)
    at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:1059)
    at org.apache.spark.repl.Main$.main(Main.scala:31)
    at org.apache.spark.repl.Main.main(Main.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:672)
    at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:205)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:120)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
18/04/22 09:28:22 INFO SparkContext: Successfully stopped SparkContext
org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.
    at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:123)
    at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:63)
    at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:144)
    at org.apache.spark.SparkContext.<init>(SparkContext.scala:523)
    at org.apache.spark.repl.SparkILoop.createSparkContext(SparkILoop.scala:1017)
    at $iwC$$iwC.<init>(<console>:9)
    at $iwC.<init>(<console>:18)
    at <init>(<console>:20)
    at .<init>(<console>:24)
    at .<clinit>(<console>)
    at .<init>(<console>:7)
    at .<clinit>(<console>)
    at $print(<console>)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.spark.repl.SparkIMain$ReadEvalPrint.call(SparkIMain.scala:1065)
    at org.apache.spark.repl.SparkIMain$Request.loadAndRun(SparkIMain.scala:1340)
    at org.apache.spark.repl.SparkIMain.loadAndRunReq$1(SparkIMain.scala:840)
    at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:871)
    at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:819)
    at org.apache.spark.repl.SparkILoop.reallyInterpret$1(SparkILoop.scala:857)
    at org.apache.spark.repl.SparkILoop.interpretStartingWith(SparkILoop.scala:902)
    at org.apache.spark.repl.SparkILoop.command(SparkILoop.scala:814)
    at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:125)
    at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:124)
    at org.apache.spark.repl.SparkIMain.beQuietDuring(SparkIMain.scala:324)
    at org.apache.spark.repl.SparkILoopInit$class.initializeSpark(SparkILoopInit.scala:124)
    at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1$$anonfun$apply$mcZ$sp$5.apply$mcV$sp(SparkILoop.scala:974)
    at org.apache.spark.repl.SparkILoopInit$class.runThunks(SparkILoopInit.scala:159)
    at org.apache.spark.repl.SparkILoop.runThunks(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoopInit$class.postInitialization(SparkILoopInit.scala:108)
    at org.apache.spark.repl.SparkILoop.postInitialization(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply$mcZ$sp(SparkILoop.scala:991)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)
    at scala.tools.nsc.util.ScalaClassLoader$.savingContextLoader(ScalaClassLoader.scala:135)
    at org.apache.spark.repl.SparkILoop.org$apache$spark$repl$SparkILoop$$process(SparkILoop.scala:945)
    at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:1059)
    at org.apache.spark.repl.Main$.main(Main.scala:31)
    at org.apache.spark.repl.Main.main(Main.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:672)
    at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:205)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:120)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
java.lang.NullPointerException
    at org.apache.spark.sql.execution.ui.SQLListener.<init>(SQLListener.scala:34)
    at org.apache.spark.sql.SQLContext.<init>(SQLContext.scala:77)
    at org.apache.spark.sql.hive.HiveContext.<init>(HiveContext.scala:72)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:526)
    at org.apache.spark.repl.SparkILoop.createSQLContext(SparkILoop.scala:1028)
    at $iwC$$iwC.<init>(<console>:9)
    at $iwC.<init>(<console>:18)
    at <init>(<console>:20)
    at .<init>(<console>:24)
    at .<clinit>(<console>)
    at .<init>(<console>:7)
    at .<clinit>(<console>)
    at $print(<console>)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.spark.repl.SparkIMain$ReadEvalPrint.call(SparkIMain.scala:1065)
    at org.apache.spark.repl.SparkIMain$Request.loadAndRun(SparkIMain.scala:1340)
    at org.apache.spark.repl.SparkIMain.loadAndRunReq$1(SparkIMain.scala:840)
    at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:871)
    at org.apache.spark.repl.SparkIMain.interpret(SparkIMain.scala:819)
    at org.apache.spark.repl.SparkILoop.reallyInterpret$1(SparkILoop.scala:857)
    at org.apache.spark.repl.SparkILoop.interpretStartingWith(SparkILoop.scala:902)
    at org.apache.spark.repl.SparkILoop.command(SparkILoop.scala:814)
    at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:132)
    at org.apache.spark.repl.SparkILoopInit$$anonfun$initializeSpark$1.apply(SparkILoopInit.scala:124)
    at org.apache.spark.repl.SparkIMain.beQuietDuring(SparkIMain.scala:324)
    at org.apache.spark.repl.SparkILoopInit$class.initializeSpark(SparkILoopInit.scala:124)
    at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1$$anonfun$apply$mcZ$sp$5.apply$mcV$sp(SparkILoop.scala:974)
    at org.apache.spark.repl.SparkILoopInit$class.runThunks(SparkILoopInit.scala:159)
    at org.apache.spark.repl.SparkILoop.runThunks(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoopInit$class.postInitialization(SparkILoopInit.scala:108)
    at org.apache.spark.repl.SparkILoop.postInitialization(SparkILoop.scala:64)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply$mcZ$sp(SparkILoop.scala:991)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)
    at org.apache.spark.repl.SparkILoop$$anonfun$org$apache$spark$repl$SparkILoop$$process$1.apply(SparkILoop.scala:945)
    at scala.tools.nsc.util.ScalaClassLoader$.savingContextLoader(ScalaClassLoader.scala:135)
    at org.apache.spark.repl.SparkILoop.org$apache$spark$repl$SparkILoop$$process(SparkILoop.scala:945)
    at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:1059)
    at org.apache.spark.repl.Main$.main(Main.scala:31)
    at org.apache.spark.repl.Main.main(Main.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:672)
    at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:205)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:120)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
<console>:10: error: not found: value sqlContext
       import sqlContext.implicits._
              ^
<console>:10: error: not found: value sqlContext
       import sqlContext.sql

The fix is as follows:

Reference article: https://blog.csdn.net/chengyuqiang/article/details/69934382

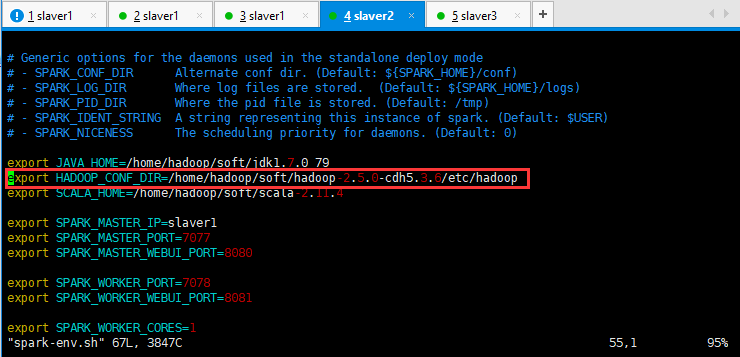

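HADOOP_CONF_DIR should be set to the path shown below. I had initially set it to /home/hadoop/soft/hadoop-2.5.0-cdh5.3.6, which appears to be the Hadoop installation root rather than the directory that holds the configuration files.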

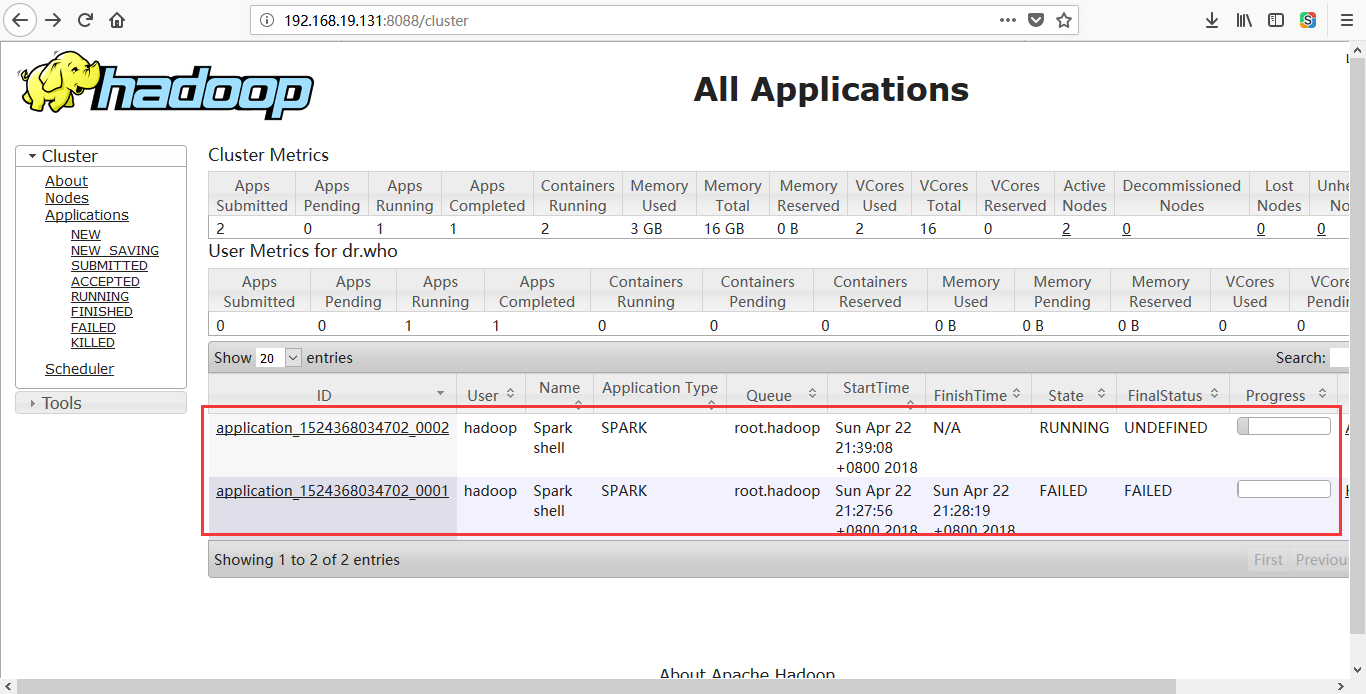
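As a rough sketch of the fix: Spark on YARN reads the cluster settings from the directory that HADOOP_CONF_DIR (or YARN_CONF_DIR) points to, so that directory needs to contain the client-side files such as core-site.xml and yarn-site.xml. The helper `check_hadoop_conf_dir` below is hypothetical, and the `etc/hadoop` subdirectory is an assumption based on the usual Hadoop 2.x layout (the actual value is the one in the screenshot above), so adjust it to your installation.

```shell
# Hypothetical helper: succeeds only if the given directory looks like a
# Hadoop client configuration directory (contains the two site files).
check_hadoop_conf_dir() {
    conf_dir="$1"
    [ -d "$conf_dir" ] || { echo "not a directory: $conf_dir" >&2; return 1; }
    for conf_file in core-site.xml yarn-site.xml; do
        [ -f "$conf_dir/$conf_file" ] || { echo "missing $conf_dir/$conf_file" >&2; return 1; }
    done
    return 0
}

# Assumed location, following the usual Hadoop 2.x layout; not taken from the post.
export HADOOP_CONF_DIR=/home/hadoop/soft/hadoop-2.5.0-cdh5.3.6/etc/hadoop

if check_hadoop_conf_dir "$HADOOP_CONF_DIR"; then
    echo "HADOOP_CONF_DIR looks usable: $HADOOP_CONF_DIR"
else
    echo "fix HADOOP_CONF_DIR before running spark-shell --master yarn-client" >&2
fi
```

Running a check like this before launching the shell would catch the mistake described above, since the installation root normally does not contain yarn-site.xml directly.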

For comparison with the failed run above, the output of a successful run after the fix is shown below:

The command now runs as follows:

[hadoop@slaver1 spark-1.5.1-bin-hadoop2.4]$ spark-shell --master yarn-client
18/04/22 09:37:29 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
18/04/22 09:37:30 INFO SecurityManager: Changing view acls to: hadoop
18/04/22 09:37:30 INFO SecurityManager: Changing modify acls to: hadoop
18/04/22 09:37:30 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)
18/04/22 09:37:30 INFO HttpServer: Starting HTTP Server
18/04/22 09:37:30 INFO Utils: Successfully started service 'HTTP class server' on port 52507.
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 1.5.1
      /_/
Using Scala version 2.10.4 (Java HotSpot(TM) 64-Bit Server VM, Java 1.7.0_79)
Type in expressions to have them evaluated.
Type :help for more information.
18/04/22 09:37:47 INFO SparkContext: Running Spark version 1.5.1
18/04/22 09:37:47 WARN SparkConf: SPARK_WORKER_INSTANCES was detected (set to '1').
This is deprecated in Spark 1.0+.
Please instead use:
 - ./spark-submit with --num-executors to specify the number of executors
 - Or set SPARK_EXECUTOR_INSTANCES
 - spark.executor.instances to configure the number of instances in the spark config.
18/04/22 09:37:48 INFO SecurityManager: Changing view acls to: hadoop
18/04/22 09:37:48 INFO SecurityManager: Changing modify acls to: hadoop
18/04/22 09:37:48 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)
18/04/22 09:37:50 INFO Slf4jLogger: Slf4jLogger started
18/04/22 09:37:50 INFO Remoting: Starting remoting
18/04/22 09:37:51 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@192.168.19.131:33571]
18/04/22 09:37:51 INFO Utils: Successfully started service 'sparkDriver' on port 33571.
18/04/22 09:37:51 INFO SparkEnv: Registering MapOutputTracker
18/04/22 09:37:52 INFO SparkEnv: Registering BlockManagerMaster
18/04/22 09:37:52 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-309a3ff2-fb4f-4f01-a5d9-2ab7db4d765c
18/04/22 09:37:52 INFO MemoryStore: MemoryStore started with capacity 534.5 MB
18/04/22 09:37:53 INFO HttpFileServer: HTTP File server directory is /tmp/spark-049ba1b9-9305-4745-b3dc-34f44c846003/httpd-d1991045-b8e1-419f-9335-a4c7762e1e2c
18/04/22 09:37:53 INFO HttpServer: Starting HTTP Server
18/04/22 09:37:54 INFO Utils: Successfully started service 'HTTP file server' on port 47488.
18/04/22 09:37:54 INFO SparkEnv: Registering OutputCommitCoordinator
18/04/22 09:37:55 INFO Utils: Successfully started service 'SparkUI' on port 4040.
18/04/22 09:37:55 INFO SparkUI: Started SparkUI at http://192.168.19.131:4040
18/04/22 09:37:55 WARN MetricsSystem: Using default name DAGScheduler for source because spark.app.id is not set.
18/04/22 09:37:55 WARN YarnClientSchedulerBackend: NOTE: SPARK_WORKER_MEMORY is deprecated. Use SPARK_EXECUTOR_MEMORY or --executor-memory through spark-submit instead.
18/04/22 09:37:55 WARN YarnClientSchedulerBackend: NOTE: SPARK_WORKER_CORES is deprecated. Use SPARK_EXECUTOR_CORES or --executor-cores through spark-submit instead.
18/04/22 09:37:56 INFO RMProxy: Connecting to ResourceManager at slaver1/192.168.19.131:8032
18/04/22 09:37:57 INFO Client: Requesting a new application from cluster with 2 NodeManagers
18/04/22 09:37:57 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)
18/04/22 09:37:57 INFO Client: Will allocate AM container, with 896 MB memory including 384 MB overhead
18/04/22 09:37:57 INFO Client: Setting up container launch context for our AM
18/04/22 09:37:57 INFO Client: Setting up the launch environment for our AM container
18/04/22 09:37:57 INFO Client: Preparing resources for our AM container
18/04/22 09:37:59 INFO Client: Uploading resource file:/home/hadoop/soft/spark-1.5.1-bin-hadoop2.4/lib/spark-assembly-1.5.1-hadoop2.4.0.jar -> hdfs://slaver1:9000/user/hadoop/.sparkStaging/application_1524368034702_0002/spark-assembly-1.5.1-hadoop2.4.0.jar
18/04/22 09:39:00 INFO Client: Uploading resource file:/tmp/spark-049ba1b9-9305-4745-b3dc-34f44c846003/__spark_conf__1110039413441655708.zip -> hdfs://slaver1:9000/user/hadoop/.sparkStaging/application_1524368034702_0002/__spark_conf__1110039413441655708.zip
18/04/22 09:39:02 INFO SecurityManager: Changing view acls to: hadoop
18/04/22 09:39:02 INFO SecurityManager: Changing modify acls to: hadoop
18/04/22 09:39:02 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)
18/04/22 09:39:02 INFO Client: Submitting application 2 to ResourceManager
18/04/22 09:39:11 INFO YarnClientImpl: Submitted application application_1524368034702_0002
18/04/22 09:39:12 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:12 INFO Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: N/A
     ApplicationMaster RPC port: -1
     queue: root.hadoop
     start time: 1524404348688
     final status: UNDEFINED
     tracking URL: http://slaver1:8088/proxy/application_1524368034702_0002/
     user: hadoop
18/04/22 09:39:14 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:15 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:16 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:17 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:18 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:19 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:20 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:21 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:22 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:23 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:24 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:25 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:26 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:27 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:28 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:29 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:30 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:31 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:32 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:33 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:34 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:35 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:36 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:37 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:38 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:39 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:41 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:42 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:43 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:44 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:45 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:46 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:47 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:48 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:49 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:50 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:51 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:52 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:52 INFO YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as AkkaRpcEndpointRef(Actor[akka.tcp://sparkYarnAM@192.168.19.132:39065/user/YarnAM#-650752241])
18/04/22 09:39:52 INFO YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> slaver1, PROXY_URI_BASES -> http://slaver1:8088/proxy/application_1524368034702_0002), /proxy/application_1524368034702_0002
18/04/22 09:39:52 INFO JettyUtils: Adding filter: org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter
18/04/22 09:39:53 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:54 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:55 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:56 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:57 INFO Client: Application report for application_1524368034702_0002 (state: ACCEPTED)
18/04/22 09:39:58 INFO Client: Application report for application_1524368034702_0002 (state: RUNNING)
18/04/22 09:39:58 INFO Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: 192.168.19.132
     ApplicationMaster RPC port: 0
     queue: root.hadoop
     start time: 1524404348688
     final status: UNDEFINED
     tracking URL: http://slaver1:8088/proxy/application_1524368034702_0002/
     user: hadoop
18/04/22 09:39:58 INFO YarnClientSchedulerBackend: Application application_1524368034702_0002 has started running.
18/04/22 09:40:40 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 49581.
18/04/22 09:40:40 INFO NettyBlockTransferService: Server created on 49581
18/04/22 09:40:40 INFO BlockManagerMaster: Trying to register BlockManager
18/04/22 09:40:40 INFO BlockManagerMasterEndpoint: Registering block manager 192.168.19.131:49581 with 534.5 MB RAM, BlockManagerId(driver, 192.168.19.131, 49581)
18/04/22 09:40:40 INFO BlockManagerMaster: Registered BlockManager
18/04/22 09:40:44 INFO EventLoggingListener: Logging events to hdfs://slaver1:9000/spark/history/application_1524368034702_0002.snappy
18/04/22 09:40:46 INFO YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after waiting maxRegisteredResourcesWaitingTime: 30000(ms)
18/04/22 09:40:46 INFO SparkILoop: Created spark context..
Spark context available as sc.
18/04/22 09:41:03 INFO YarnClientSchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://sparkExecutor@slaver2:44020/user/Executor#-1604999953]) with ID 1
18/04/22 09:41:05 INFO BlockManagerMasterEndpoint: Registering block manager slaver2:34012 with 417.6 MB RAM, BlockManagerId(1, slaver2, 34012)
18/04/22 09:41:06 INFO HiveContext: Initializing execution hive, version 1.2.1
18/04/22 09:41:07 INFO ClientWrapper: Inspected Hadoop version: 2.4.0
18/04/22 09:41:07 INFO ClientWrapper: Loaded org.apache.hadoop.hive.shims.Hadoop23Shims for Hadoop version 2.4.0
18/04/22 09:41:12 INFO HiveMetaStore: 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore
18/04/22 09:41:12 INFO ObjectStore: ObjectStore, initialize called
18/04/22 09:41:13 INFO Persistence: Property datanucleus.cache.level2 unknown - will be ignored
18/04/22 09:41:13 INFO Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored
18/04/22 09:41:13 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
18/04/22 09:41:16 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
18/04/22 09:41:21 INFO ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"
18/04/22 09:41:26 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.
18/04/22 09:41:26 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.
18/04/22 09:41:32 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.
18/04/22 09:41:32 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.
18/04/22 09:41:34 INFO MetaStoreDirectSql: Using direct SQL, underlying DB is DERBY
18/04/22 09:41:34 INFO ObjectStore: Initialized ObjectStore
18/04/22 09:41:35 WARN ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0
18/04/22 09:41:36 WARN ObjectStore: Failed to get database default, returning NoSuchObjectException
18/04/22 09:41:37 INFO HiveMetaStore: Added admin role in metastore
18/04/22 09:41:37 INFO HiveMetaStore: Added public role in metastore
18/04/22 09:41:38 INFO HiveMetaStore: No user is added in admin role, since config is empty
18/04/22 09:41:39 INFO HiveMetaStore: 0: get_all_databases
18/04/22 09:41:39 INFO audit: ugi=hadoop ip=unknown-ip-addr cmd=get_all_databases
18/04/22 09:41:39 INFO HiveMetaStore: 0: get_functions: db=default pat=*
18/04/22 09:41:39 INFO audit: ugi=hadoop ip=unknown-ip-addr cmd=get_functions: db=default pat=*
18/04/22 09:41:39 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MResourceUri" is tagged as "embedded-only" so does not have its own datastore table.
18/04/22 09:41:43 INFO SessionState: Created HDFS directory: /tmp/hive/hadoop
18/04/22 09:41:43 INFO SessionState: Created local directory: /tmp/a864abec-e802-46d6-9440-168ef2747988_resources
18/04/22 09:41:43 INFO SessionState: Created HDFS directory: /tmp/hive/hadoop/a864abec-e802-46d6-9440-168ef2747988
18/04/22 09:41:43 INFO SessionState: Created local directory: /tmp/hadoop/a864abec-e802-46d6-9440-168ef2747988
18/04/22 09:41:43 INFO SessionState: Created HDFS directory: /tmp/hive/hadoop/a864abec-e802-46d6-9440-168ef2747988/_tmp_space.db
18/04/22 09:41:46 INFO HiveContext: default warehouse location is /user/hive/warehouse
18/04/22 09:41:46 INFO HiveContext: Initializing HiveMetastoreConnection version 1.2.1 using Spark classes.
18/04/22 09:41:46 INFO ClientWrapper: Inspected Hadoop version: 2.4.0
18/04/22 09:41:47 INFO ClientWrapper: Loaded org.apache.hadoop.hive.shims.Hadoop23Shims for Hadoop version 2.4.0
18/04/22 09:41:49 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
18/04/22 09:41:49 INFO HiveMetaStore: 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore
18/04/22 09:41:50 INFO ObjectStore: ObjectStore, initialize called
18/04/22 09:41:50 INFO Persistence: Property datanucleus.cache.level2 unknown - will be ignored
18/04/22 09:41:50 INFO Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored
18/04/22 09:41:50 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
18/04/22 09:41:52 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
18/04/22 09:41:53 INFO ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"
18/04/22 09:41:56 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.
18/04/22 09:41:56 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.
18/04/22 09:41:56 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.
18/04/22 09:41:56 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.
18/04/22 09:41:57 INFO Query: Reading in results for query "org.datanucleus.store.rdbms.query.SQLQuery@0" since the connection used is closing 18/04/22 09:41:57 INFO MetaStoreDirectSql: Using direct SQL, underlying DB is DERBY 18/04/22 09:41:57 INFO ObjectStore: Initialized ObjectStore 18/04/22 09:41:57 INFO HiveMetaStore: Added admin role in metastore 18/04/22 09:41:57 INFO HiveMetaStore: Added public role in metastore 18/04/22 09:41:58 INFO HiveMetaStore: No user is added in admin role, since config is empty 18/04/22 09:41:58 INFO HiveMetaStore: 0: get_all_databases 18/04/22 09:41:58 INFO audit: ugi=hadoop ip=unknown-ip-addr cmd=get_all_databases 18/04/22 09:41:58 INFO HiveMetaStore: 0: get_functions: db=default pat=* 18/04/22 09:41:58 INFO audit: ugi=hadoop ip=unknown-ip-addr cmd=get_functions: db=default pat=* 18/04/22 09:41:58 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MResourceUri" is tagged as "embedded-only" so does not have its own datastore table. 18/04/22 09:42:06 INFO SessionState: Created local directory: /tmp/5990c858-3991-40f6-80a5-cb10039ec99a_resources 18/04/22 09:42:06 INFO SessionState: Created HDFS directory: /tmp/hive/hadoop/5990c858-3991-40f6-80a5-cb10039ec99a 18/04/22 09:42:06 INFO SessionState: Created local directory: /tmp/hadoop/5990c858-3991-40f6-80a5-cb10039ec99a 18/04/22 09:42:06 INFO SessionState: Created HDFS directory: /tmp/hive/hadoop/5990c858-3991-40f6-80a5-cb10039ec99a/_tmp_space.db 18/04/22 09:42:06 INFO SparkILoop: Created sql context (with Hive support).. SQL context available as sqlContext. scala>