EBLK

Chapter 1 Traditional Log Analysis Requirements

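1. Find the top 10 IPs and URLs by number of visits
2. Find the top 10 IPs and URLs between 10:00 and 12:00
3. Compare the traffic in this time window with the same window yesterday
4. Compare the traffic with the same time window on the same day last week
5. Count the visits from search engines, and how many visits each search engine made
6. Visit count and response time of the key links of a given domain
7. Distribution of the site's HTTP status codes
8. Find attacker IP addresses: which pages each IP accessed, when it arrived and when it left, and how many requests it made in total
9. Deliver the results within 5 minutes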
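Before any of the tooling below, requirements 1, 2 and 7 are typically answered by hand with text tools. The following is a minimal sketch of that traditional approach with awk, sort and head. It assumes nginx's default `main` log format, where (split on spaces) `$1` is the client IP, `$4` starts with `[dd/Mon/yyyy:HH`, `$7` is the URL and `$9` is the status code; the sample lines and the helper names `top10` and `hours` are made up for illustration.

```shell
# top10 FIELD_NO [FILE]: print "count value" for the 10 most frequent values of a field
top10() {
  awk -v f="$1" '{ c[$f]++ } END { for (k in c) print c[k], k }' "${2:--}" |
    sort -k1,1nr -k2,2 | head -n 10
}

# hours FROM TO [FILE]: keep only the lines whose hour is in [FROM, TO)
hours() {
  awk -v from="$1" -v to="$2" '
    { split($4, t, ":"); h = t[2] + 0; if (h >= from + 0 && h < to + 0) print }
  ' "${3:--}"
}

# Hypothetical sample data so the sketch runs without a real nginx
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
10.0.0.1 - - [29/Dec/2020:09:59:58 +0800] "GET /a HTTP/1.1" 200 5 "-" "curl/7.29.0" "-"
10.0.0.1 - - [29/Dec/2020:10:00:01 +0800] "GET /a HTTP/1.1" 200 5 "-" "curl/7.29.0" "-"
10.0.0.2 - - [29/Dec/2020:10:30:00 +0800] "GET /b HTTP/1.1" 404 0 "-" "curl/7.29.0" "-"
10.0.0.1 - - [29/Dec/2020:11:59:59 +0800] "GET /b HTTP/1.1" 200 5 "-" "curl/7.29.0" "-"
10.0.0.3 - - [29/Dec/2020:12:00:00 +0800] "GET /c HTTP/1.1" 500 0 "-" "curl/7.29.0" "-"
EOF

echo "Top IPs:";             top10 1 "$log"
echo "Top URLs:";            top10 7 "$log"
echo "Top IPs 10:00-12:00:"; hours 10 12 "$log" | top10 1
echo "Status codes:";        top10 9 "$log"
```

This works for one file on one machine, but comparisons across days or weeks (requirements 3 and 4) and attacker tracking across servers quickly become impractical this way, which is what motivates a central log platform.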

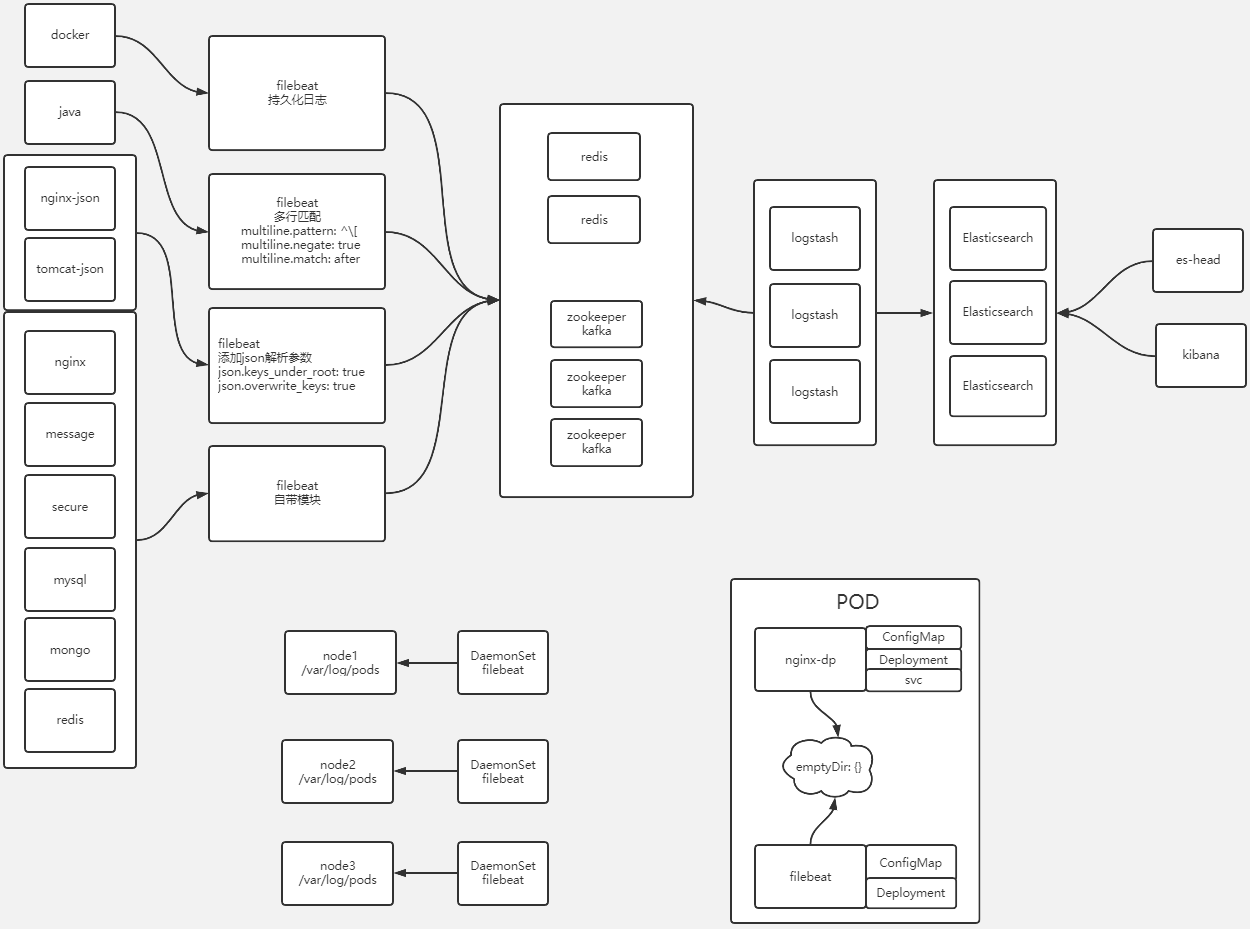

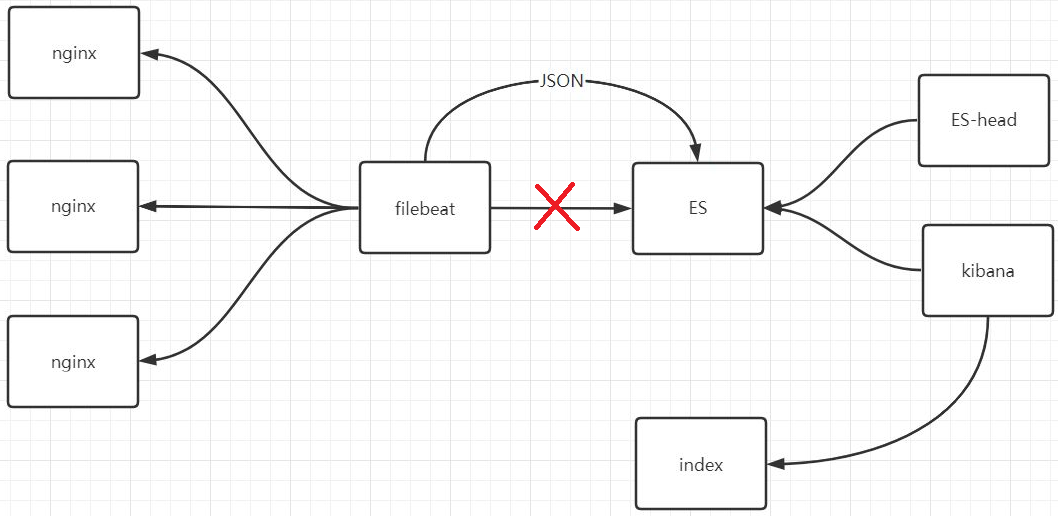

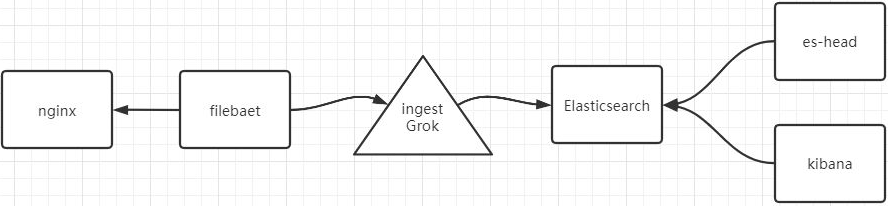

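Chapter 2 Introduction to EBLK

EBLK components

| Letter | Component | Written in |
|---|---|---|
| E | Elasticsearch | Java |
| B | Filebeat | Go |
| L | Logstash | Java (JRuby) |
| K | Kibana | JavaScript (Node.js) |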

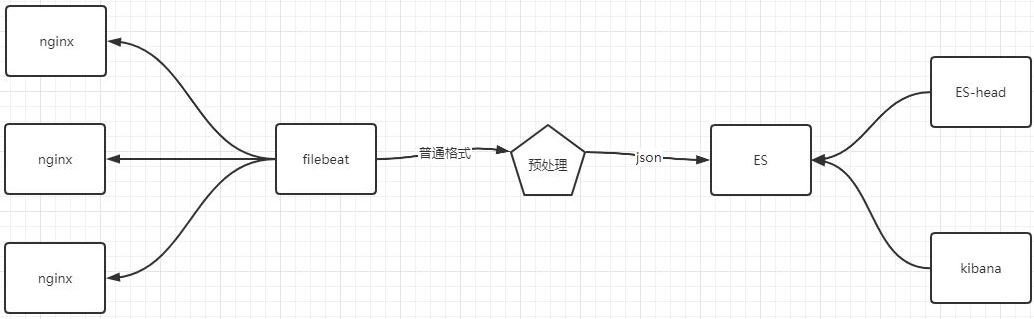

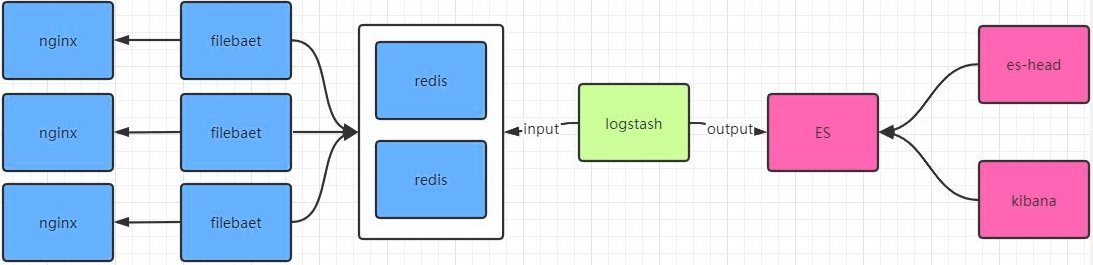

EBLK log collection flow

Chapter 3 EBL Deployment

Elasticsearch single-node deployment

```shell
rpm -ivh elasticsearch-7.9.1-x86_64.rpm
cat > /etc/elasticsearch/elasticsearch.yml << 'EOF'
node.name: node-1
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
network.host: 127.0.0.1,10.0.0.51
http.port: 9200
discovery.seed_hosts: ["10.0.0.51"]
cluster.initial_master_nodes: ["10.0.0.51"]
EOF
systemctl daemon-reload
systemctl start elasticsearch.service
netstat -lntup | grep 9200
curl 127.0.0.1:9200
```

If something goes wrong, stop the service, wipe the data directory, and start it again:

```shell
systemctl stop elasticsearch.service
rm -rf /var/lib/elasticsearch/*
systemctl start elasticsearch.service
```

Kibana deployment

```shell
rpm -ivh kibana-7.9.1-x86_64.rpm
cat > /etc/kibana/kibana.yml << 'EOF'
server.port: 5601
server.host: "10.0.0.51"
elasticsearch.hosts: ["http://10.0.0.51:9200"]
kibana.index: ".kibana"
EOF
systemctl start kibana
```

If something goes wrong, stop, clean up, and start again:

```shell
systemctl stop kibana.service
rm -rf /var/lib/kibana/*
# also delete the Kibana indices (.kibana*) in Elasticsearch
systemctl start kibana
```

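Elasticsearch-head deployment

Install it as a Chrome extension: Google Chrome --> More tools --> Extensions --> turn on Developer mode --> Load unpacked --> select the unzipped plugin directory.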

Chapter 4 Collecting Plain-Format Nginx Logs

Deploying nginx

```shell
cat > /etc/yum.repos.d/nginx.repo <<'EOF'
[nginx-stable]
name=nginx stable repo
baseurl=http://nginx.org/packages/centos/$releasever/$basearch/
gpgcheck=0
enabled=1
gpgkey=https://nginx.org/keys/nginx_signing.key

[nginx-mainline]
name=nginx mainline repo
baseurl=http://nginx.org/packages/mainline/centos/$releasever/$basearch/
gpgcheck=0
enabled=0
gpgkey=https://nginx.org/keys/nginx_signing.key
EOF
yum install nginx -y
systemctl start nginx
```

Generate some nginx access log entries:

```shell
for i in $(seq 10); do curl -I 127.0.0.1 &>/dev/null; done
```

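Deploying Filebeat

```shell
rpm -ivh filebeat-7.9.1-x86_64.rpm
cp /etc/filebeat/filebeat.yml /opt/    # keep a copy of the default config
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
EOF
```

If something goes wrong, stop Filebeat, clear its registry (Filebeat will then read the log files again from the beginning), and start it again:

```shell
systemctl stop filebeat.service
rm -rf /var/lib/filebeat/*
systemctl start filebeat.service
```

Start Filebeat and check its log to verify:

```shell
systemctl start filebeat
tail -f /var/log/filebeat/filebeat
```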

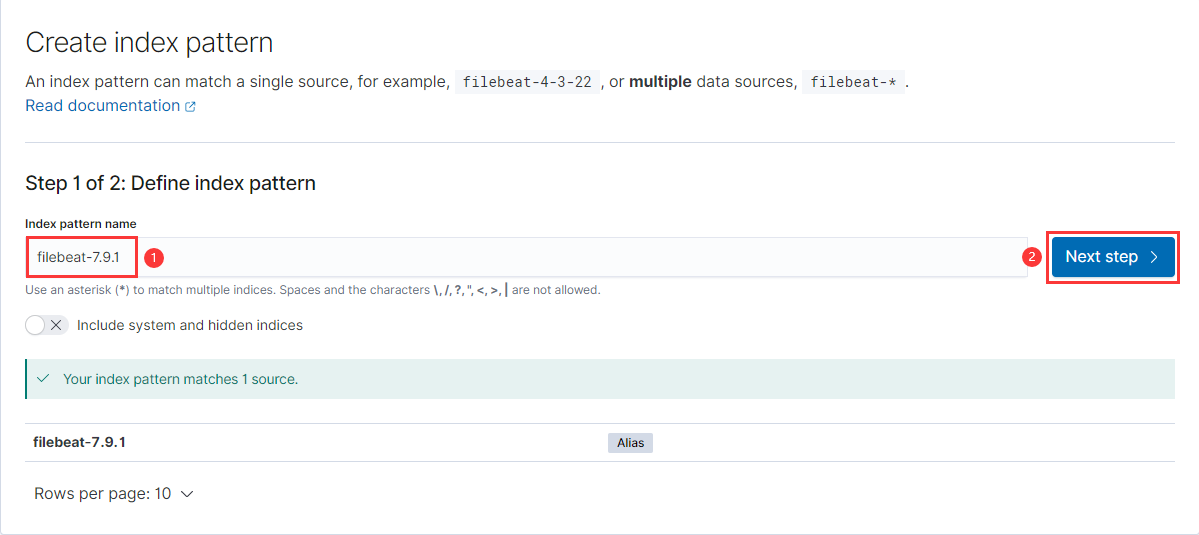

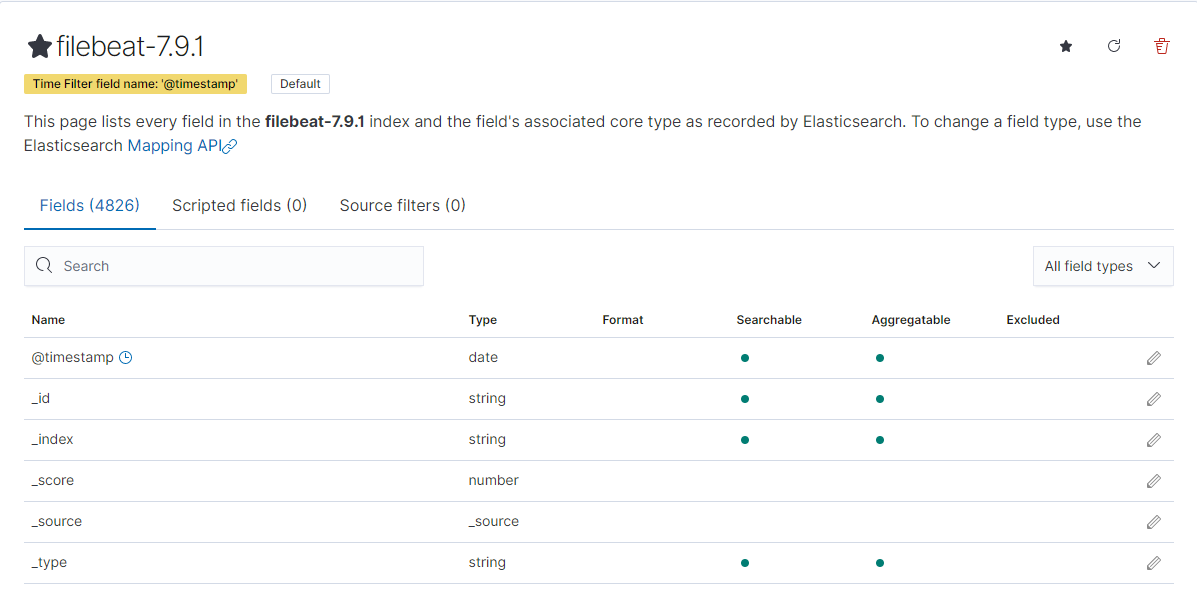

- Index imported by Filebeat: filebeat-7.9.1-2020.12.29-000001
- Index created by index lifecycle management (ILM): ilm-history-2-000001

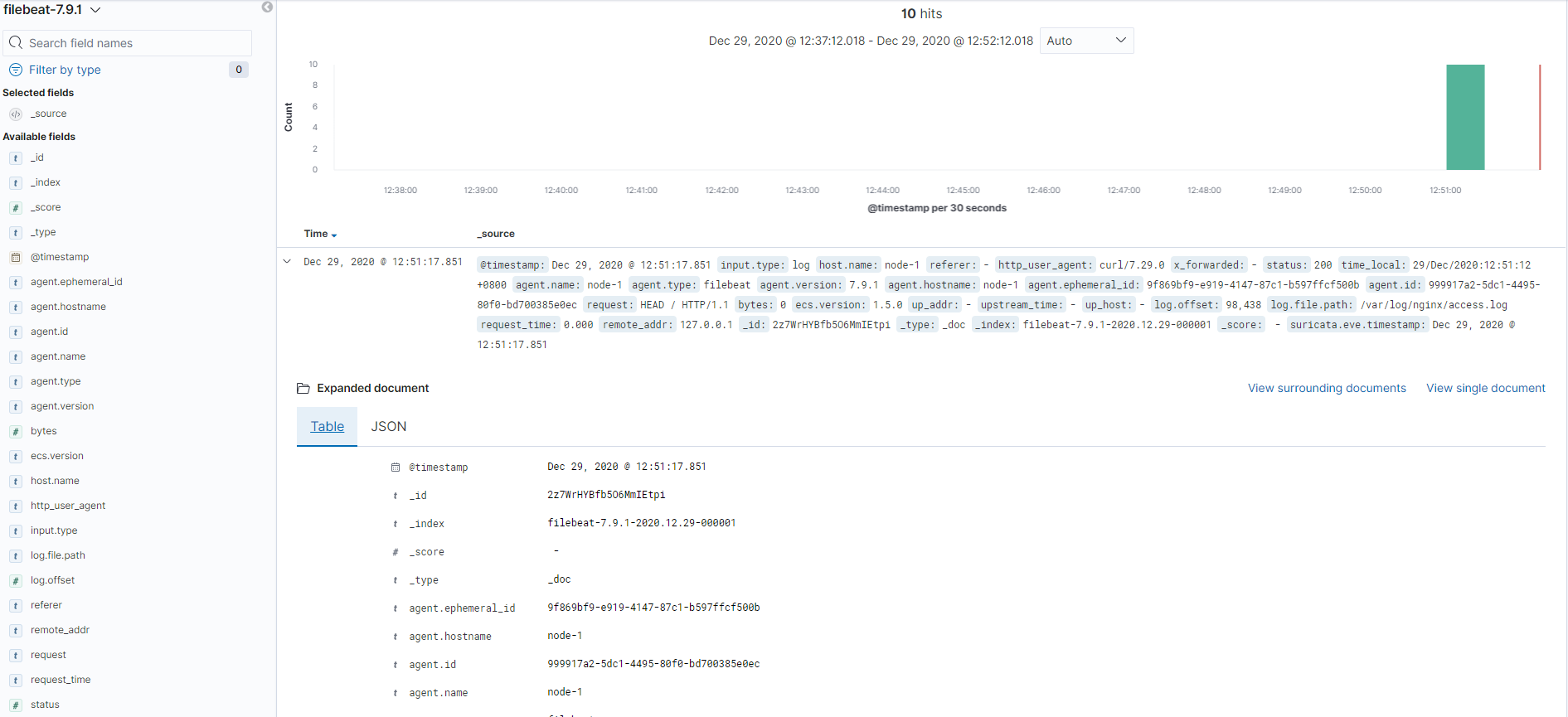

Check the collected data

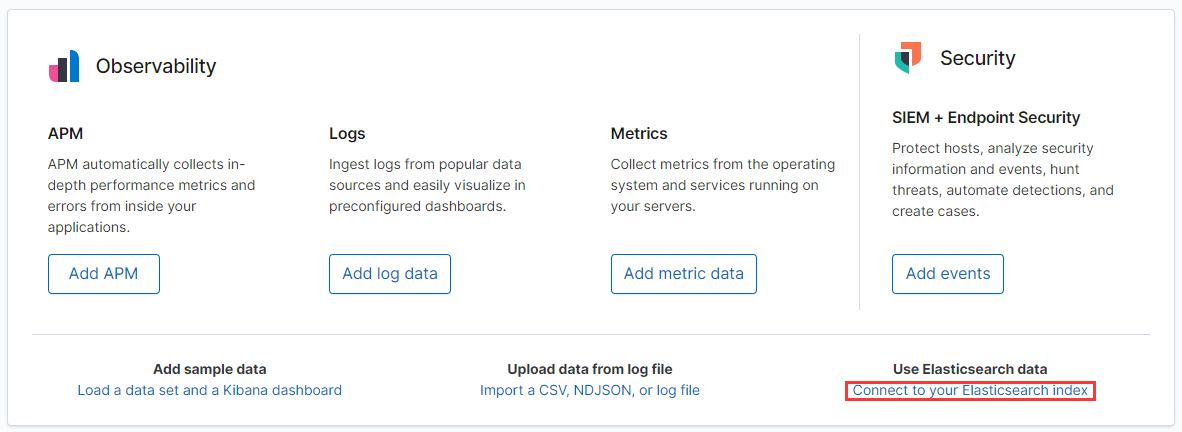

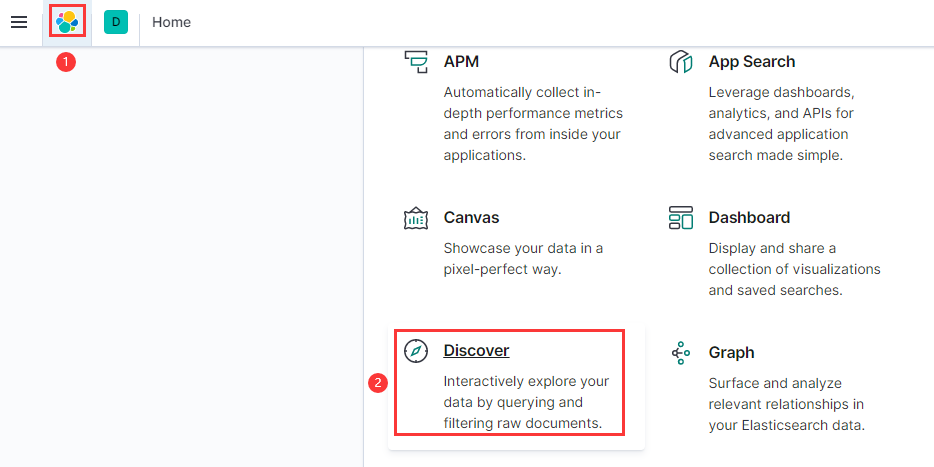

- 访问http://10.0.0.51:5601/

- Connect to your Elasticsearch index

- Index pattern name

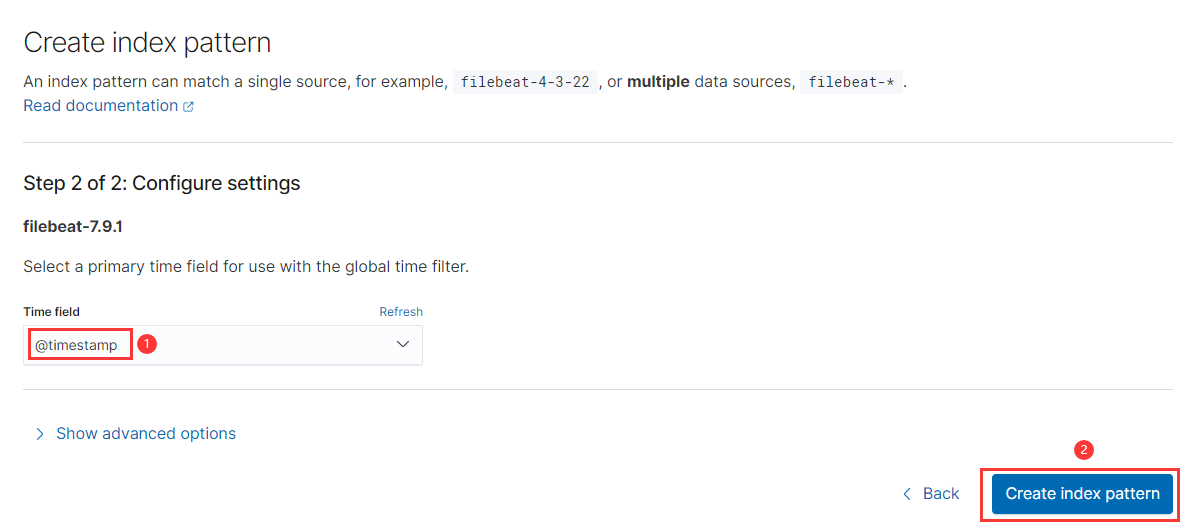

- 选择时间顺序

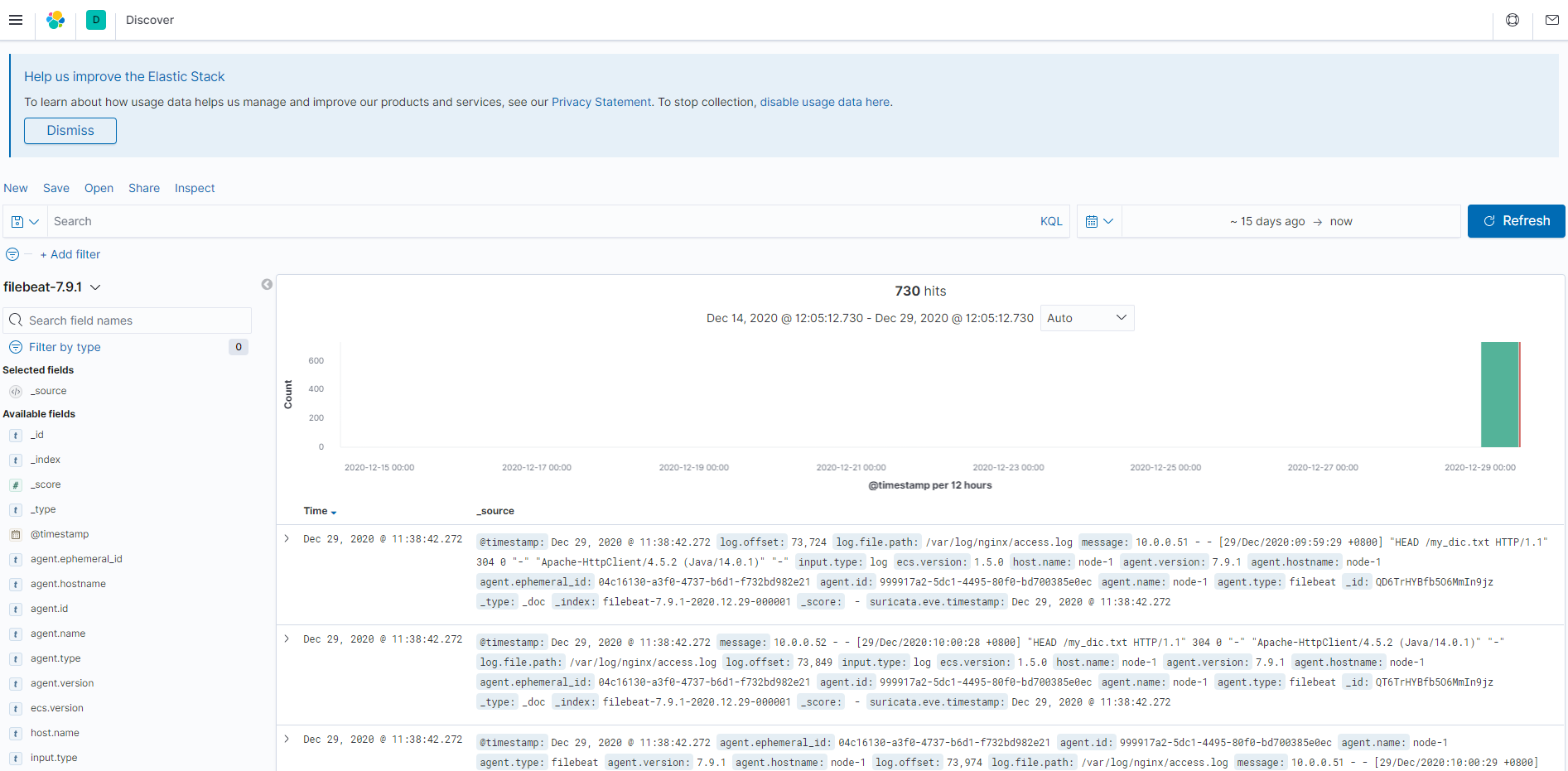

- 查看日志:主页 --> Discover

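Chapter 5 Collecting JSON-Format Nginx Logs

Shortcomings and goals

Shortcomings of the current setup:

- The whole log line is stored as the value of the message field and cannot be split into separately displayed fields
- To display the fields separately, the log line has to be split into fields, i.e. written in JSON format

The result we want: every field of the log line is split out

```json
{
  "time_local": "24/Dec/2020:09:43:45 +0800",
  "remote_addr": "127.0.0.1",
  "referer": "-",
  "request": "HEAD / HTTP/1.1",
  "status": 200,
  "bytes": 0,
  "http_user_agent": "curl/7.29.0",
  "x_forwarded": "-",
  "up_addr": "-",
  "up_host": "-",
  "upstream_time": "-",
  "request_time": "0.000"
}
```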

Improving nginx

- Edit the configuration file (`vi /etc/nginx/nginx.conf`); the `log_format` directive belongs in the `http {}` block:

```nginx
log_format json '{ "time_local": "$time_local", '
                '"remote_addr": "$remote_addr", '
                '"referer": "$http_referer", '
                '"request": "$request", '
                '"status": $status, '
                '"bytes": $body_bytes_sent, '
                '"http_user_agent": "$http_user_agent", '
                '"x_forwarded": "$http_x_forwarded_for", '
                '"up_addr": "$upstream_addr",'
                '"up_host": "$upstream_http_host",'
                '"upstream_time": "$upstream_response_time",'
                '"request_time": "$request_time"'
                ' }';

access_log /var/log/nginx/access.log json;
```

- Check the config and restart nginx, empty the old log, generate new entries, and check that they are in JSON format:

```shell
nginx -t
systemctl restart nginx
> /var/log/nginx/access.log
for i in $(seq 10); do curl -I 127.0.0.1 &>/dev/null; done
cat /var/log/nginx/access.log
```

Improving Filebeat

- Edit the configuration file:

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
EOF
```

- Delete the data Filebeat previously imported into ES
- Restart Filebeat to apply the change and re-import the JSON logs into ES:

```shell
systemctl restart filebeat
```

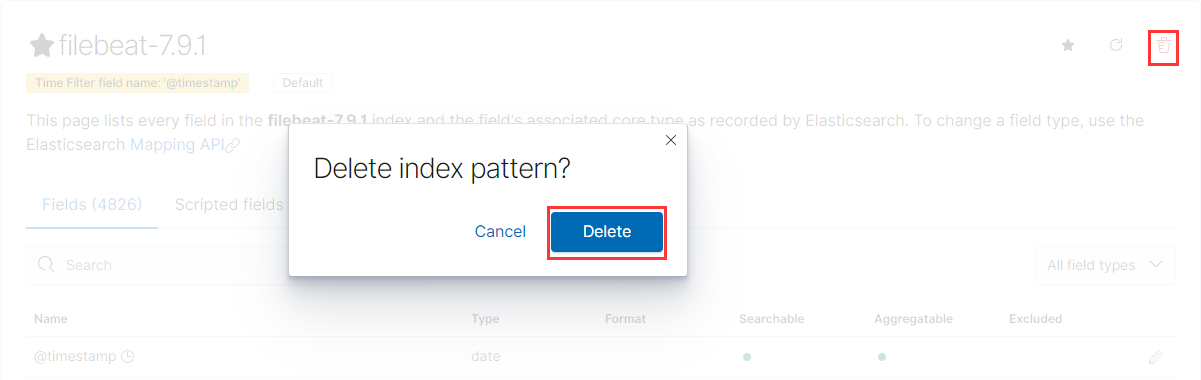

检查收集结果

-

移除之前创建的

filebeat-7.9.1

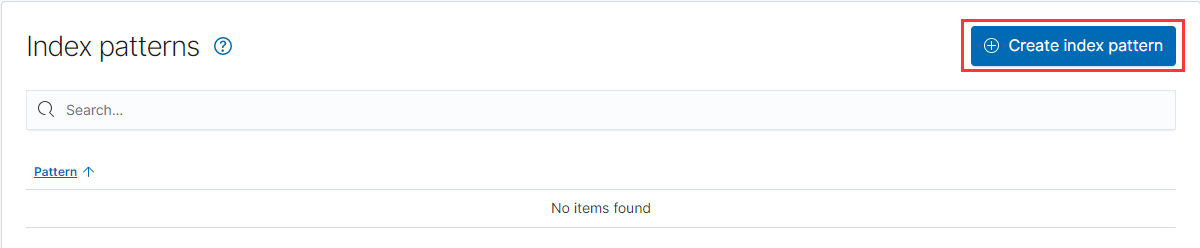

- 创建新的索引模式(Index patterns),流程同上

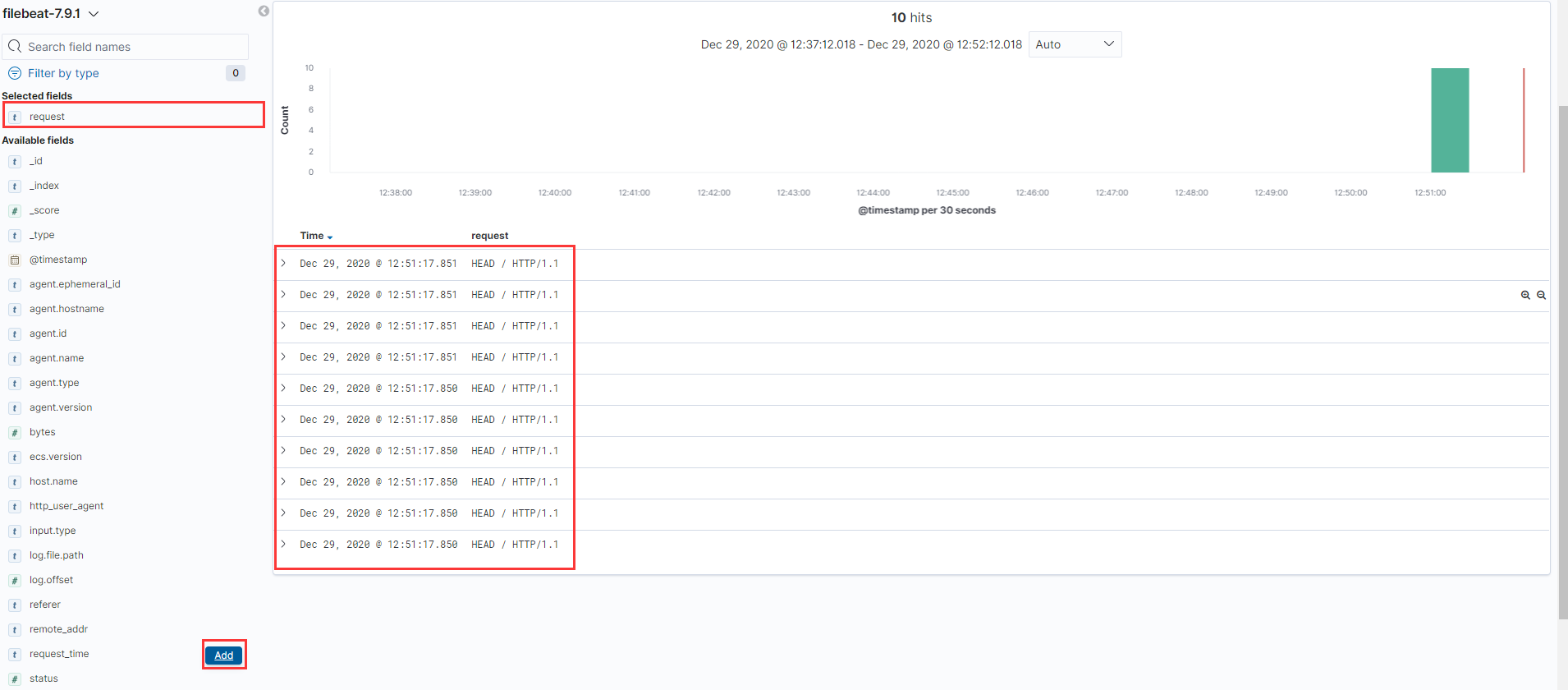

- 可以筛选日志所有字段

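Chapter 6 Custom Elasticsearch Index Names

Shortcomings and goals

Shortcomings of the current setup (index name: filebeat-7.9.1-2020.12.29-000001):

- The log is now split into fields, but the index name is still the default one, so the name does not tell you what kind of log the index holds
- A new index is created every day

The result we want (index name: nginx-7.9.1-2020.12):

- A custom index name
- One index per month of logs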

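Improving Filebeat

- Edit the configuration file. ILM is disabled because, while it is enabled, Filebeat writes through the ILM rollover alias and ignores `output.elasticsearch.index`; the template is disabled because a custom index name would otherwise also require `setup.template.name` and `setup.template.pattern`:

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  index: "nginx-%{[agent.version]}-%{+yyyy.MM}"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

- Restart Filebeat to apply the change:

```shell
systemctl restart filebeat
```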

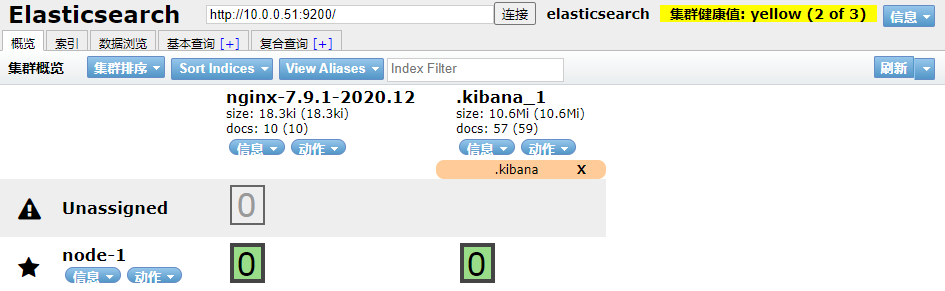

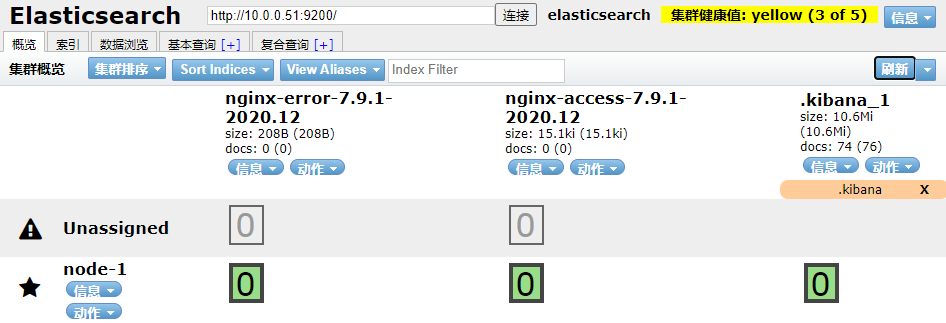

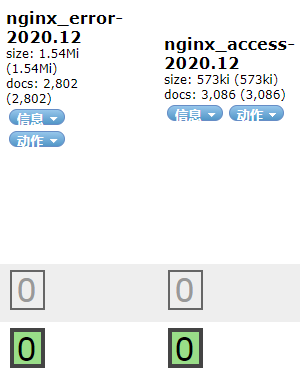

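Check the collected data

- Delete the data Filebeat previously imported into ES
- Generate new nginx access log entries and wait a moment:

```shell
for i in $(seq 10); do curl -I 127.0.0.1 &>/dev/null; done
```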
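To see which index a given event lands in, the sketch below resolves `nginx-%{[agent.version]}-%{+yyyy.MM}` by hand. It rests on an assumption worth checking against the Filebeat docs: `%{+yyyy.MM}` is formatted from the event's `@timestamp` in UTC, not local time. The helper name `index_name` is hypothetical, and GNU `date -d` (as on CentOS) is required.

```shell
# index_name PREFIX AGENT_VERSION TIMESTAMP
# Mimics "PREFIX-%{[agent.version]}-%{+yyyy.MM}", formatting the month in UTC (assumption).
index_name() {
  printf '%s-%s-%s\n' "$1" "$2" "$(date -u -d "$3" +%Y.%m)"
}

index_name nginx 7.9.1 "2020-12-29 16:30:48 +0800"   # nginx-7.9.1-2020.12
index_name nginx 7.9.1 "2021-01-01 07:00:00 +0800"   # still 2020.12: that is 23:00 UTC on Dec 31
index_name nginx 7.9.1 "2021-01-01 09:00:00 +0800"   # nginx-7.9.1-2021.01
```

If the UTC assumption holds, a UTC+8 server keeps writing to the previous month's index until 08:00 local time on the 1st.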

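Chapter 7 Index Names per Log Type

Shortcomings and goals

Shortcoming of the current setup: only the access log is collected, not the error log.

The result we want:

- nginx-access-7.9.1-2020.12
- nginx-error-7.9.1-2020.12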

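Improving Filebeat

- Edit the configuration file
- Option 1: use a specific field as the condition that selects the index
- Option 2: use a custom tag as the condition that selects the index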

Option 1, condition on the log.file.path field:

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "nginx-access-%{[agent.version]}-%{+yyyy.MM}"
      when.contains:
        log.file.path: "/var/log/nginx/access.log"
    - index: "nginx-error-%{[agent.version]}-%{+yyyy.MM}"
      when.contains:
        log.file.path: "/var/log/nginx/error.log"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

Option 2, condition on custom tags:

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]
output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "nginx-access-%{[agent.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "access"
    - index: "nginx-error-%{[agent.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "error"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

- Restart Filebeat to apply the change:

```shell
systemctl restart filebeat
```

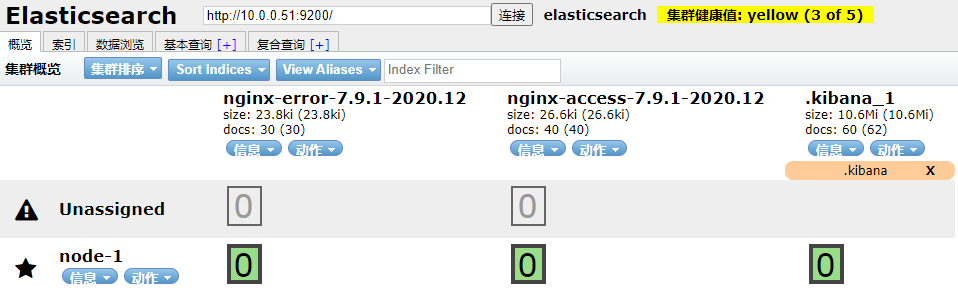

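Check the collected data

- Generate new nginx error log entries (requests for a page that does not exist) and wait a moment:

```shell
for i in $(seq 10); do curl -I 127.0.0.1/1 &>/dev/null; done
```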
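The per-event decision made by the `indices` rules can be pictured as the small function below. This is a hypothetical sketch, not Filebeat code, and it encodes three assumptions about Filebeat's behavior: the first matching rule wins, `when.contains` is a substring test against each element of `tags`, and an event that matches no rule falls back to the output's default `index` (printed here as `default`).

```shell
# route_by_tags TAG...: print the index family an event with these tags would go to
route_by_tags() {
  local tag
  # rule 1: when.contains tags "access"
  for tag in "$@"; do
    [[ $tag == *access* ]] && { echo "nginx-access"; return 0; }
  done
  # rule 2: when.contains tags "error"
  for tag in "$@"; do
    [[ $tag == *error* ]] && { echo "nginx-error"; return 0; }
  done
  echo "default"   # no rule matched
}

route_by_tags access          # nginx-access
route_by_tags error           # nginx-error
route_by_tags error access    # nginx-access (rule order, not tag order, decides)
route_by_tags debug           # default
```

Because `contains` is a substring match, a tag such as `access_old` would also satisfy the first rule, so distinct tag names are the safer choice.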

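Chapter 8 Converting Plain Nginx Logs with an Elasticsearch Ingest Node

Ingest node overview

An ingest node can be thought of as a pre-processing stage for data entering ES. It supports pipelines, which filter and transform documents before they are indexed, much like the filter section in Logstash, and it is quite powerful.

The ingest node is a node type (and feature) introduced in Elasticsearch 5.0.

How it works: when the node receives a document, it looks up the registered pipeline whose id is given in the request parameters, runs the document through that pipeline, and then continues with the standard Elasticsearch indexing flow using the processed document.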

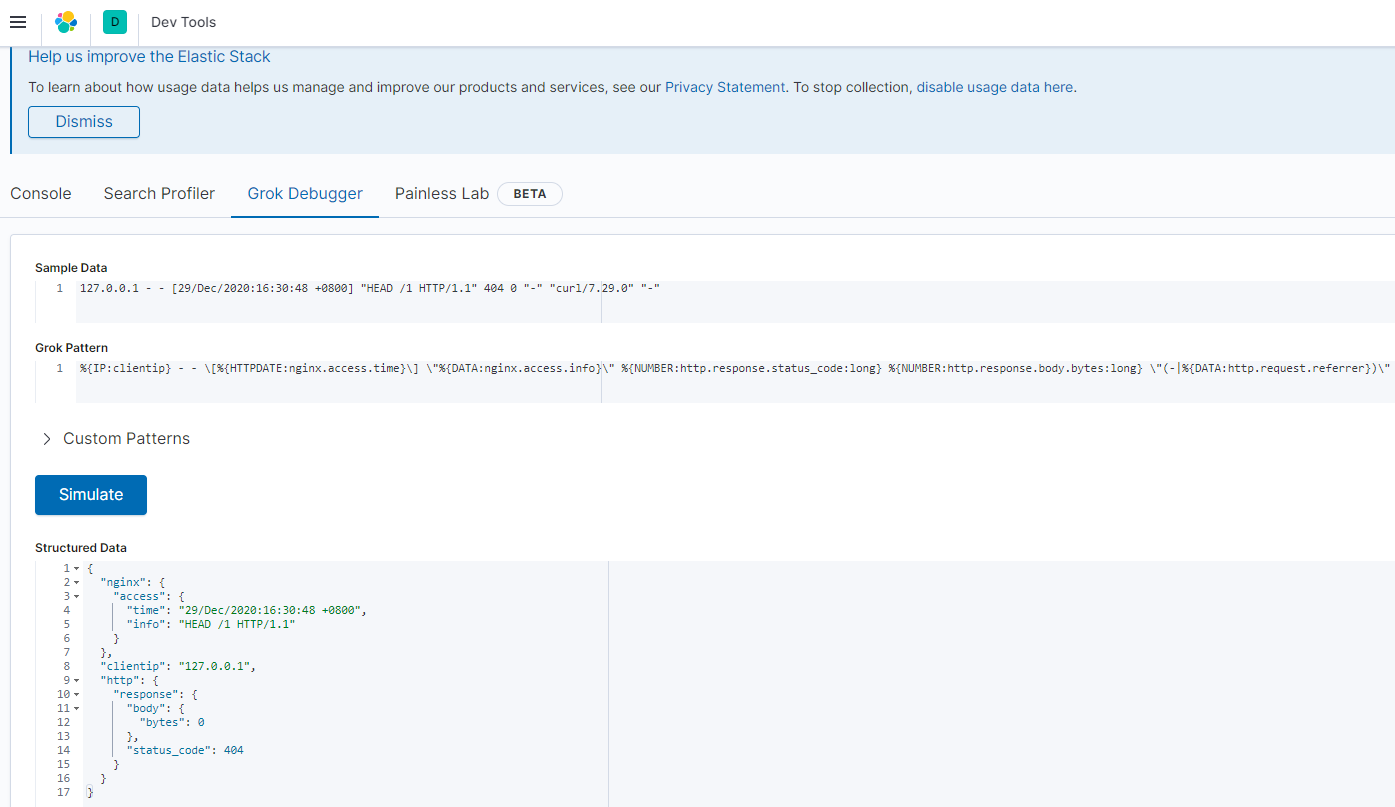

Grok overview

Grok conversion syntax (each part of a log line and the grok expression that matches it):

```text
127.0.0.1                     ==> %{IP:clientip}
-                             ==> -
-                             ==> -
[08/Oct/2020:16:34:40 +0800]  ==> \[%{HTTPDATE:nginx.access.time}\]
"GET / HTTP/1.1"              ==> "%{DATA:nginx.access.info}"
200                           ==> %{NUMBER:http.response.status_code:long}
5                             ==> %{NUMBER:http.response.body.bytes:long}
"-"                           ==> "(-|%{DATA:http.request.referrer})"
"curl/7.29.0"                 ==> "(-|%{DATA:user_agent.original})"
"-"                           ==> "(-|%{IP:clientip})"
```

Converting the sample data to JSON with a grok pattern

- Sample data

```text
127.0.0.1 - - [29/Dec/2020:16:30:48 +0800] "HEAD /1 HTTP/1.1" 404 0 "-" "curl/7.29.0" "-"
```

- Grok pattern (the square brackets around the time must be escaped, because an unescaped `[` starts a character class in the regex):

```text
%{IP:clientip} - - \[%{HTTPDATE:nginx.access.time}\] "%{DATA:nginx.access.info}" %{NUMBER:http.response.status_code:long} %{NUMBER:http.response.body.bytes:long} "(-|%{DATA:http.request.referrer})" "(-|%{DATA:user_agent.original})"
```
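To preview which fields the grok pattern above should extract before registering it, the sketch below approximates it with a bash regular expression. It is a rough stand-in, not grok itself: `%{IP}` is narrowed to IPv4 digits and dots, `%{HTTPDATE}` to "anything up to `]`", and each quoted `%{DATA}` to "anything except a double quote". The function name `grok_preview` is hypothetical.

```shell
# Bash ERE approximating the grok pattern (simplifications listed above)
re='^([0-9.]+) - - \[([^]]+)] "([^"]*)" ([0-9]+) ([0-9]+) "([^"]*)" "([^"]*)"'

# grok_preview LOG_LINE: print field=value lines, or fail if the line does not match
grok_preview() {
  [[ $1 =~ $re ]] || { echo "no match" >&2; return 1; }
  echo "clientip=${BASH_REMATCH[1]}"
  echo "nginx.access.time=${BASH_REMATCH[2]}"
  echo "nginx.access.info=${BASH_REMATCH[3]}"
  echo "http.response.status_code=${BASH_REMATCH[4]}"
  echo "http.response.body.bytes=${BASH_REMATCH[5]}"
  # "(-|%{DATA:...})": a bare "-" takes the first alternative, so the field is not set
  if [[ ${BASH_REMATCH[6]} != - ]]; then echo "http.request.referrer=${BASH_REMATCH[6]}"; fi
  if [[ ${BASH_REMATCH[7]} != - ]]; then echo "user_agent.original=${BASH_REMATCH[7]}"; fi
}

grok_preview '127.0.0.1 - - [29/Dec/2020:16:30:48 +0800] "HEAD /1 HTTP/1.1" 404 0 "-" "curl/7.29.0" "-"'
```

For the sample line this prints the client IP, time, request, status 404, body size 0 and the curl user agent, and no referrer field. The authoritative check remains Elasticsearch's own pipeline simulation.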

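Pre-processing flow

Filebeat first sends the log lines to the ingest node; the pipeline matches each line against the grok pattern, turns the plain text into JSON fields, and the result is then stored in ES.

Register the pipeline in ES (Kibana Dev Tools). Inside the JSON string, the backslashes and double quotes of the grok pattern must be escaped:

```text
GET _ingest/pipeline

PUT _ingest/pipeline/pipeline-nginx-access
{
  "description": "nginx access log",
  "processors": [
    {
      "grok": {
        "field": "message",
        "patterns": ["%{IP:clientip} - - \\[%{HTTPDATE:nginx.access.time}\\] \"%{DATA:nginx.access.info}\" %{NUMBER:http.response.status_code:long} %{NUMBER:http.response.body.bytes:long} \"(-|%{DATA:http.request.referrer})\" \"(-|%{DATA:user_agent.original})\""]
      }
    },
    {
      "remove": {
        "field": "message"
      }
    }
  ]
}
```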

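Nginx configuration

- Edit the configuration file (`vi /etc/nginx/nginx.conf`) and switch the access log back to the plain `main` format:

```nginx
access_log /var/log/nginx/access.log main;
```

- Restart nginx, empty the old logs, generate new entries, and check that they are in plain format:

```shell
systemctl restart nginx
> /var/log/nginx/access.log
> /var/log/nginx/error.log
for i in $(seq 10); do curl -I 127.0.0.1/1 &>/dev/null; done
cat /var/log/nginx/access.log
cat /var/log/nginx/error.log
```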

Filebeat configuration

- Edit the configuration file:

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]
processors:
  - drop_fields:
      fields: ["ecs","log"]
output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  pipelines:
    - pipeline: "pipeline-nginx-access"
      when.contains:
        tags: "access"
  indices:
    - index: "nginx-access-%{[agent.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "access"
    - index: "nginx-error-%{[agent.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "error"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

- Restart Filebeat to apply the change:

```shell
systemctl restart filebeat
```

Check the collected data

- Delete the data Filebeat previously imported into ES
- Generate new nginx access log entries and wait a moment

Chapter 9 Collecting Plain Nginx Logs with the Filebeat Module

Filebeat modules overview

```text
[root@node-1 soft]# ls /etc/filebeat/modules.d/
activemq.yml.disabled haproxy.yml.disabled nginx.yml.disabled
apache.yml.disabled ibmmq.yml.disabled o365.yml.disabled
auditd.yml.disabled icinga.yml.disabled okta.yml.disabled
aws.yml.disabled iis.yml.disabled osquery.yml.disabled
azure.yml.disabled imperva.yml.disabled panw.yml.disabled
barracuda.yml.disabled infoblox.yml.disabled postgresql.yml.disabled
bluecoat.yml.disabled iptables.yml.disabled rabbitmq.yml.disabled
cef.yml.disabled juniper.yml.disabled radware.yml.disabled
checkpoint.yml.disabled kafka.yml.disabled redis.yml.disabled
cisco.yml.disabled kibana.yml.disabled santa.yml.disabled
coredns.yml.disabled logstash.yml.disabled sonicwall.yml.disabled
crowdstrike.yml.disabled microsoft.yml.disabled sophos.yml.disabled
cylance.yml.disabled misp.yml.disabled squid.yml.disabled
elasticsearch.yml.disabled mongodb.yml.disabled suricata.yml.disabled
envoyproxy.yml.disabled mssql.yml.disabled system.yml.disabled
f5.yml.disabled mysql.yml.disabled tomcat.yml.disabled
fortinet.yml.disabled nats.yml.disabled traefik.yml.disabled
googlecloud.yml.disabled netflow.yml.disabled zeek.yml.disabled
gsuite.yml.disabled netscout.yml.disabled zscaler.yml.disabled
```

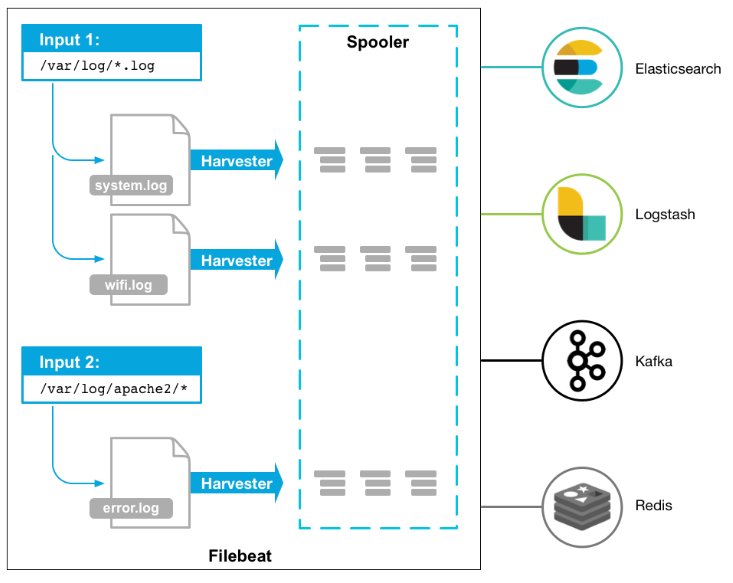

Filebeat workflow

Reference: Filebeat documentation

Filebeat configuration

- Edit the configuration file. The heredoc delimiter is quoted (`'EOF'`) so that the shell does not try to expand `${path.config}`, which would fail with "bad substitution"; `reload.enabled` takes a boolean:

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: true
filebeat.modules:
- module: nginx
output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "nginx-access-%{[agent.version]}-%{+yyyy.MM}"
      when.contains:
        log.file.path: "/var/log/nginx/access.log"
    - index: "nginx-error-%{[agent.version]}-%{+yyyy.MM}"
      when.contains:
        log.file.path: "/var/log/nginx/error.log"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

- Enable the Filebeat nginx module:

```shell
filebeat modules list
filebeat modules enable nginx
filebeat modules list
```

Enabling a module is really just a rename:

```shell
mv /etc/filebeat/modules.d/nginx.yml.disabled /etc/filebeat/modules.d/nginx.yml
```

- Configure the nginx module (log paths):

```shell
cat > /etc/filebeat/modules.d/nginx.yml << 'EOF'
- module: nginx
  access:
    enabled: true
    var.paths: ["/var/log/nginx/access.log"]
  error:
    enabled: true
    var.paths: ["/var/log/nginx/error.log"]
EOF
```

- Work around a bug by deleting the extra rule set:

```shell
rm -rf /usr/share/filebeat/module/nginx/ingress_controller
```

Before the removal, the Filebeat nginx module contains three sets of pipeline.yml rules (access, error and ingress_controller):

```text
[root@node-1 ~]# tree /usr/share/filebeat/module/nginx
/usr/share/filebeat/module/nginx
|-- access
|   |-- config
|   |   `-- nginx-access.yml
|   |-- ingest
|   |   `-- pipeline.yml
|   `-- manifest.yml
|-- error
|   |-- config
|   |   `-- nginx-error.yml
|   |-- ingest
|   |   `-- pipeline.yml
|   `-- manifest.yml
|-- ingress_controller
|   |-- config
|   |   `-- ingress_controller.yml
|   |-- ingest
|   |   `-- pipeline.yml
|   `-- manifest.yml
`-- module.yml

9 directories, 10 files
```

- Restart Filebeat to apply the changes:

```shell
systemctl restart filebeat
```
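Since enabling a module amounts to renaming `NAME.yml.disabled` to `NAME.yml`, the on-disk effect can be sketched as below. This is a hypothetical model that works in a temporary directory instead of `/etc/filebeat/modules.d`; the helper names `enable_module` and `list_enabled` are made up, and the real `filebeat modules enable` command may do more than the rename (for example, validate the module name).

```shell
# Work in a throwaway copy of a modules.d-like directory
modules_dir=$(mktemp -d)
trap 'rm -rf "$modules_dir"' EXIT
touch "$modules_dir/nginx.yml.disabled" "$modules_dir/mysql.yml.disabled"

# enable_module DIR NAME: the rename that "enabling" boils down to
enable_module() {
  mv "$1/$2.yml.disabled" "$1/$2.yml"
}

# list_enabled DIR: print one enabled module name per line (files ending in .yml)
list_enabled() {
  local f
  for f in "$1"/*.yml; do
    [ -e "$f" ] || continue   # the glob matched nothing
    basename "$f" .yml
  done
}

enable_module "$modules_dir" nginx
list_enabled "$modules_dir"    # nginx
```

Because only files ending in `.yml` are picked up by the `modules.d/*.yml` path in `filebeat.yml`, the `.disabled` suffix is enough to switch a module off.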

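Nginx configuration

- Edit the configuration file (`vi /etc/nginx/nginx.conf`) so that the access log uses the plain `main` format:

```nginx
access_log /var/log/nginx/access.log main;
```

- Restart nginx, empty the old logs, generate new entries, and check that they are in plain format:

```shell
systemctl restart nginx
> /var/log/nginx/access.log
> /var/log/nginx/error.log
for i in $(seq 10); do curl -I 127.0.0.1/1 &>/dev/null; done
cat /var/log/nginx/access.log
cat /var/log/nginx/error.log
```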

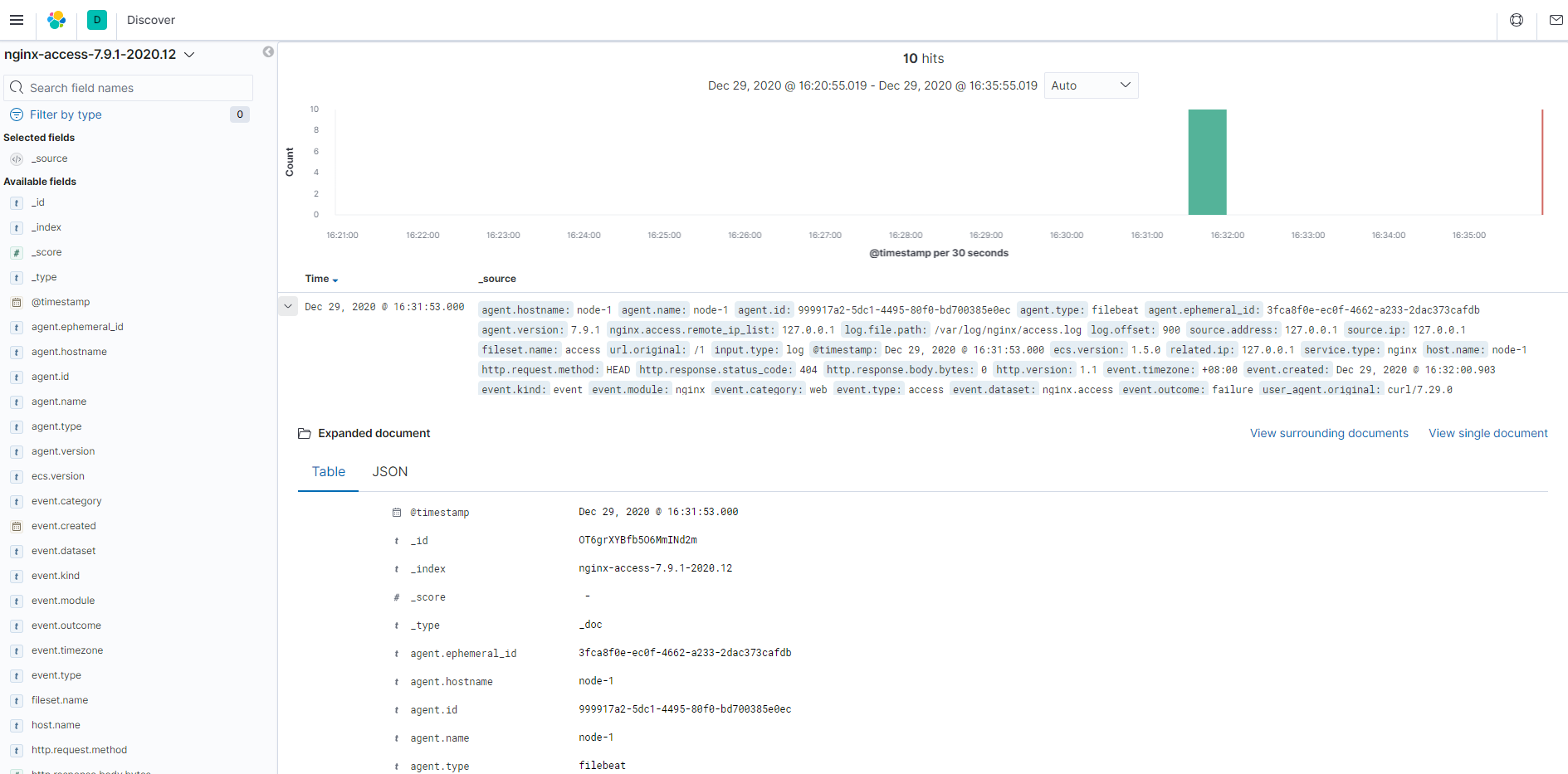

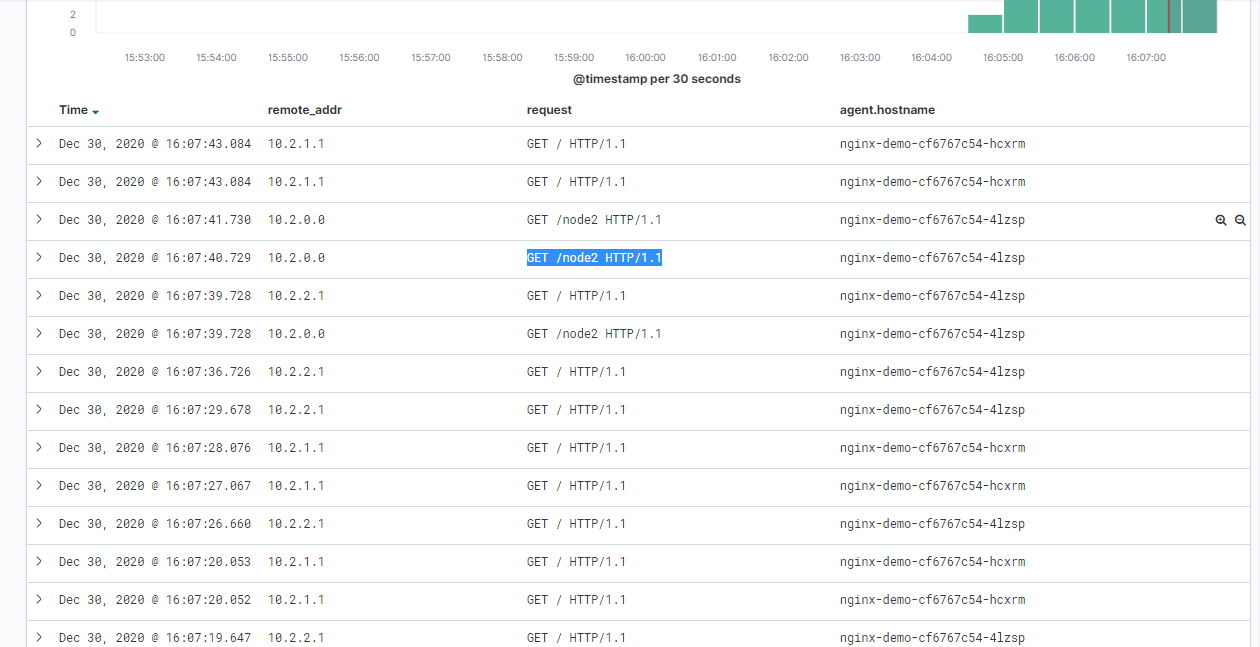

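Check the collected data

- Look at the data in ES
- Remove the previously created index pattern filebeat-7.9.1 and create a new index pattern (Index patterns) the same way as before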

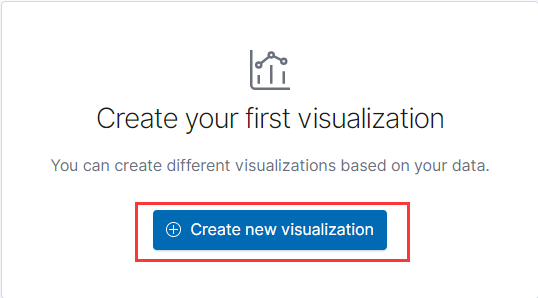

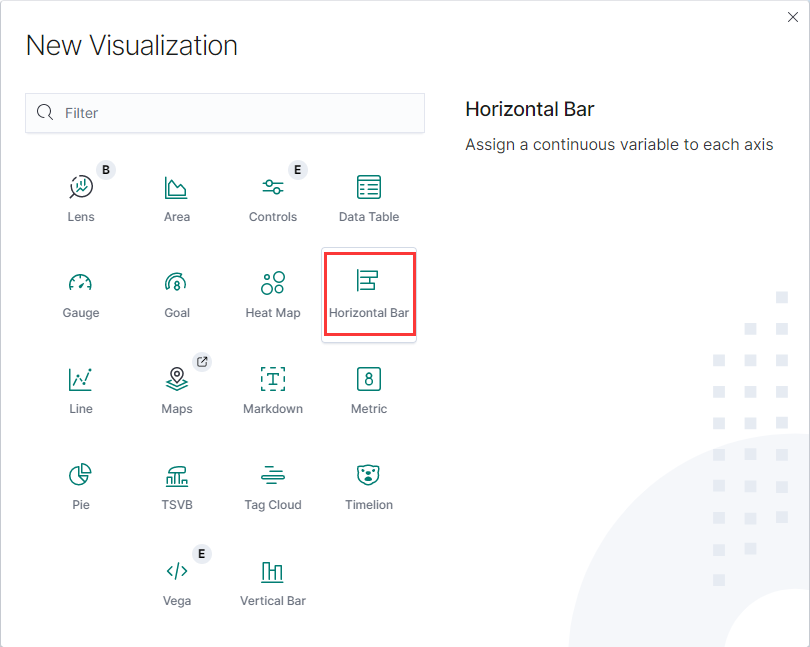

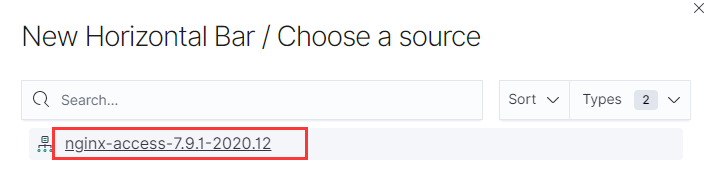

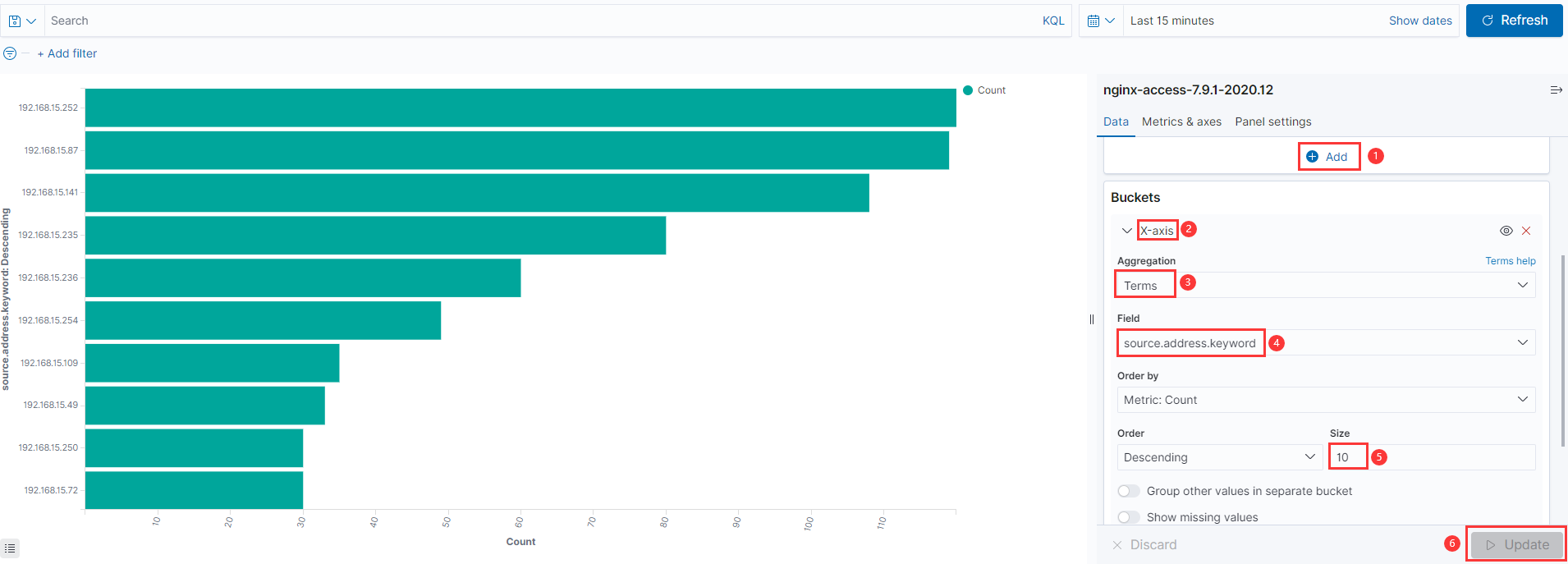

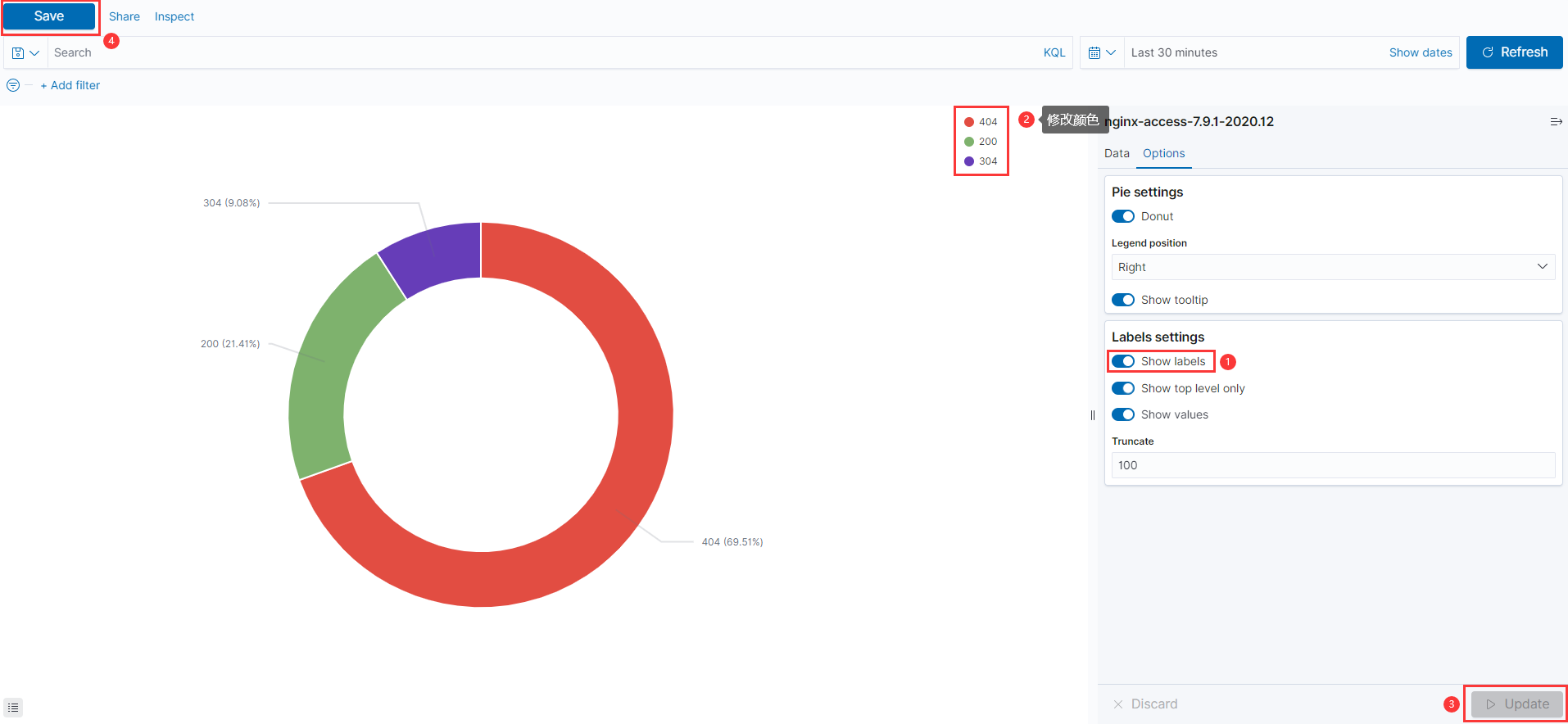

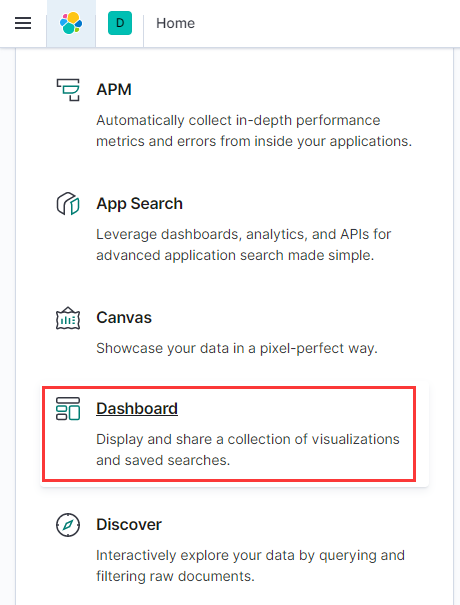

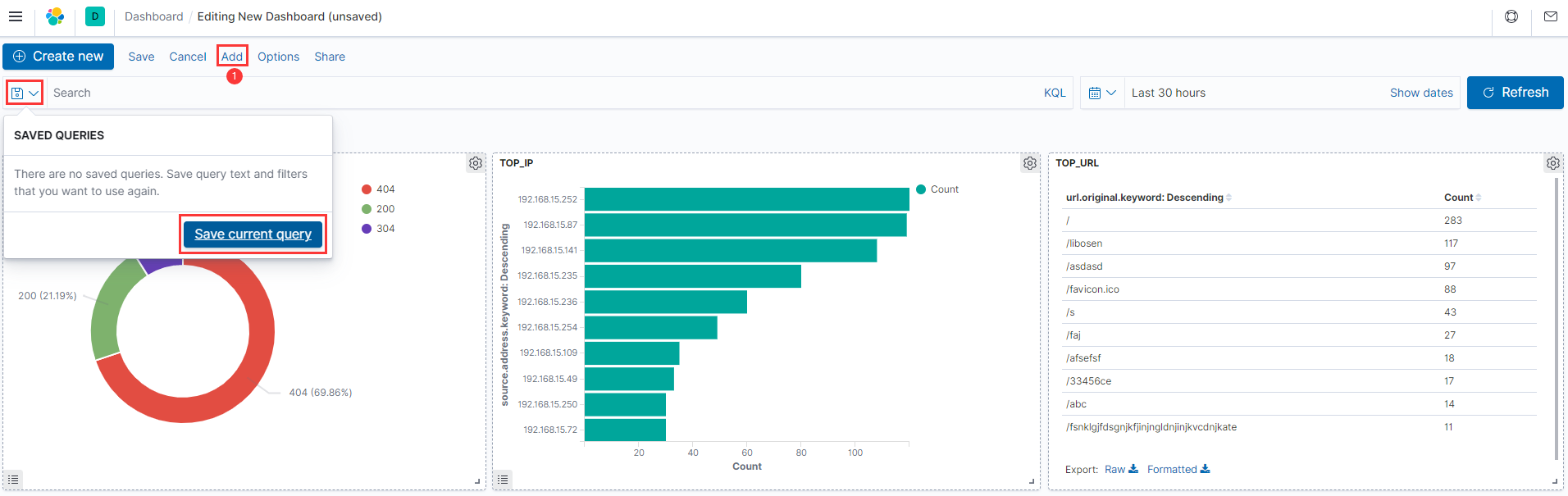

创建监控视图面板

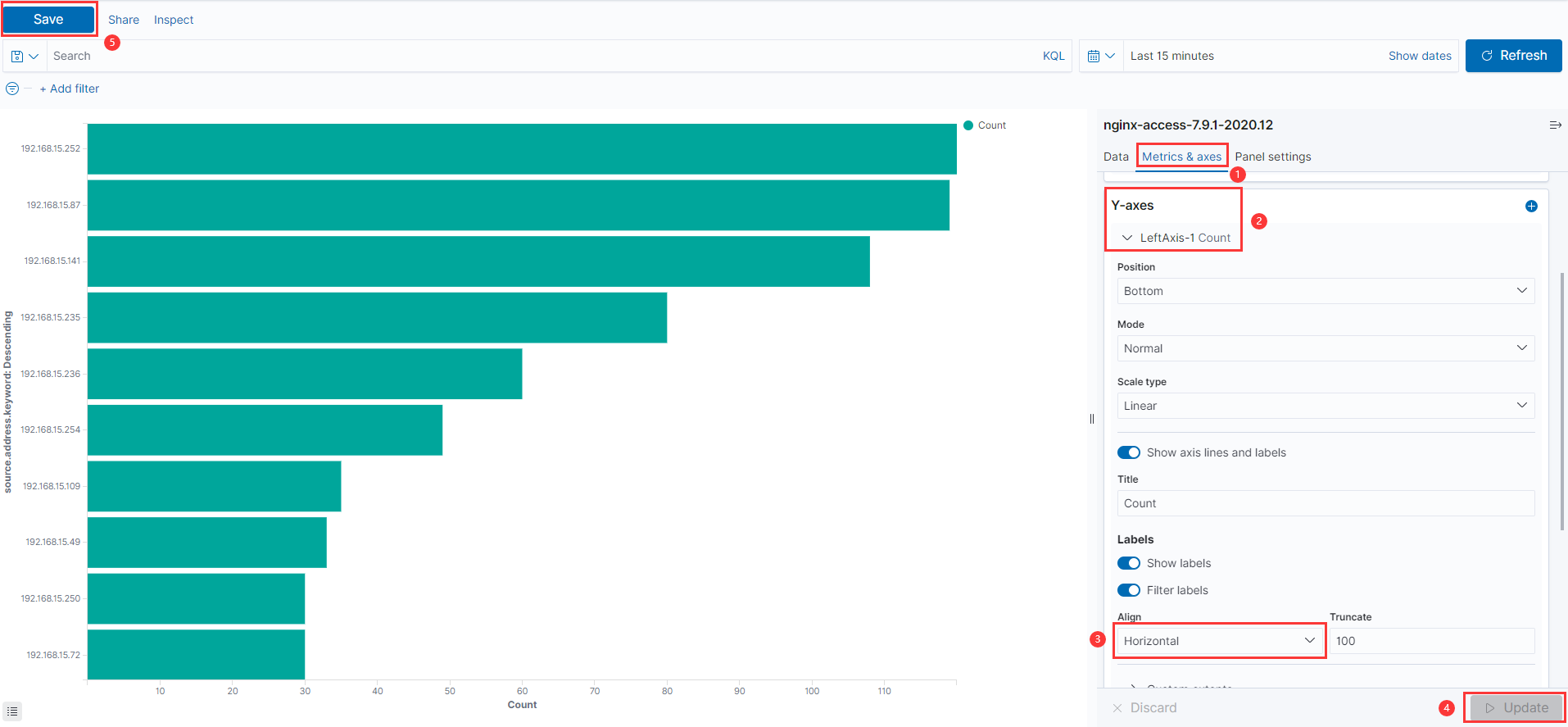

创建一个柱状图

访问排名前十的IP

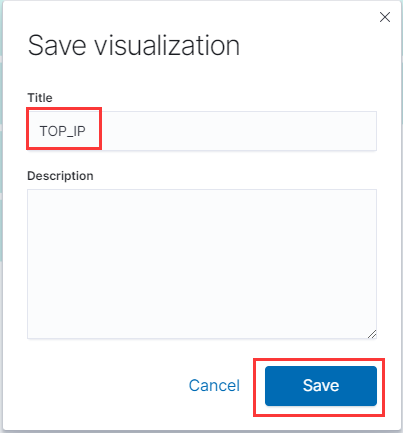

创建一个URL图

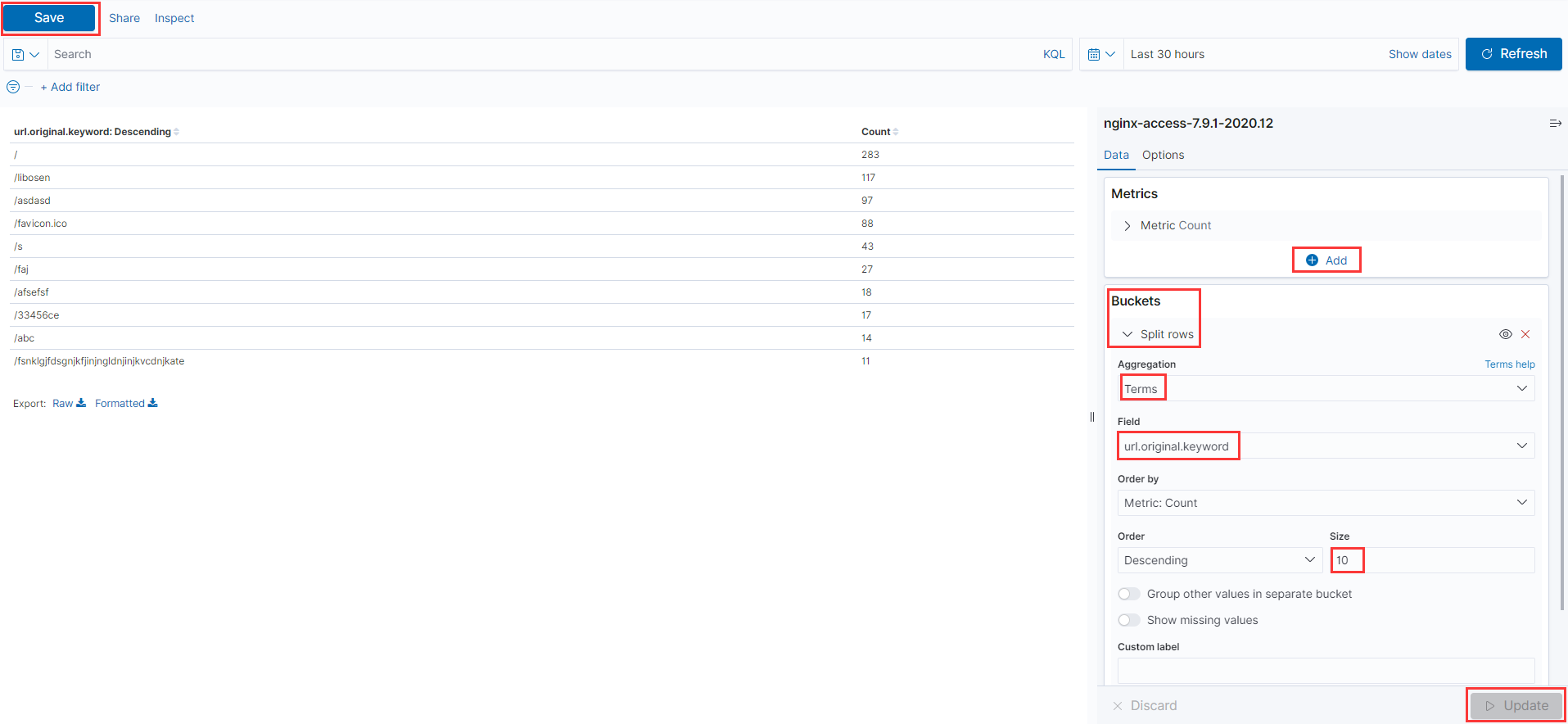

创建一个饼状图

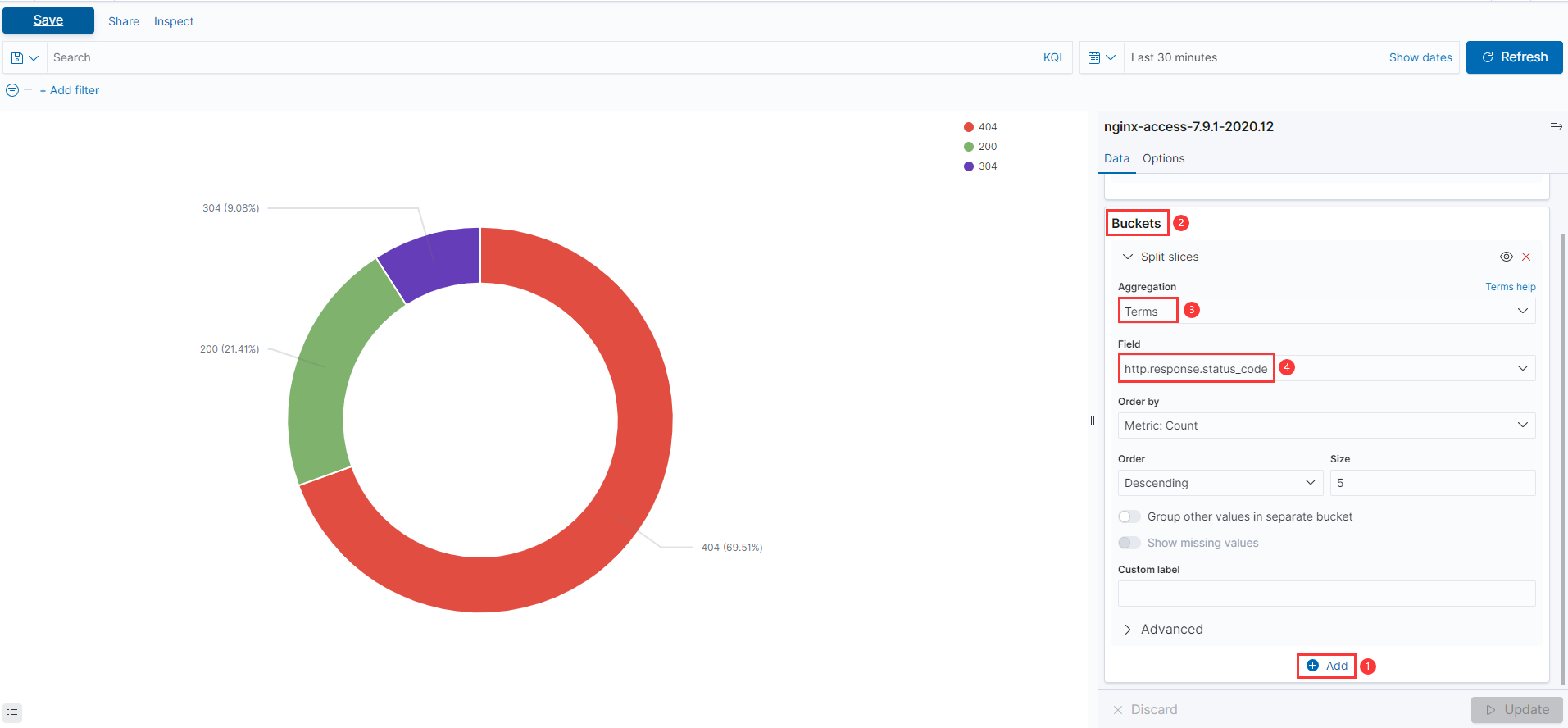

创建一个仪表盘

监控仪表盘:数据实时更新,点击过滤,+ Add filter 排除

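Chapter 10 Collecting MySQL Slow Logs with the Module

MySQL log types

| Type | Setting |
|---|---|
| General query log | general_log |
| Slow query log | slow_query_log (log_slow_queries before MySQL 5.6) |
| Error log | log_error, log_warnings |
| Binary log | log_bin |
| Relay log | relay_log |

Slow query: a query whose execution time exceeds the configured threshold (long_query_time).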

MySQL 5.7 binary deployment

- Download and install:

```shell
cd /data/soft
wget https://downloads.mysql.com/archives/get/p/23/file/mysql-5.7.30-linux-glibc2.12-x86_64.tar.gz
tar xf mysql-5.7.30-linux-glibc2.12-x86_64.tar.gz -C /opt
ln -s /opt/mysql-5.7.30-linux-glibc2.12-x86_64 /usr/local/mysql
export PATH=/usr/local/mysql/bin:$PATH   # so mysqld/mysql are found (add to /etc/profile to keep it)
```

- Initialize the data directory and edit the configuration file to enable the slow log:

```shell
useradd -M -s /sbin/nologin mysql        # the user referenced by --user=mysql
mkdir -p /data/3306/data
chown -R mysql:mysql /data/3306
mysqld --initialize-insecure --user=mysql --basedir=/usr/local/mysql --datadir=/data/3306/data
cat > /etc/my.cnf <<EOF
[mysqld]
user=mysql
basedir=/usr/local/mysql
datadir=/data/3306/data
slow_query_log=1
slow_query_log_file=/data/3306/data/slow.log
long_query_time=0.1
log_queries_not_using_indexes
port=3306
socket=/tmp/mysql.sock
[client]
socket=/tmp/mysql.sock
EOF
```

- Register the service with systemctl, start it, and enable it at boot:

```shell
cp /usr/local/mysql/support-files/mysql.server /etc/init.d/mysqld
systemctl enable mysqld
systemctl start mysqld
```

- Produce a slow query and view the slow log:

```shell
mysql -e "select sleep(2);"
tail -f /data/3306/data/slow.log
```
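Before wiring up Filebeat, it can help to check from the shell that the slow log really contains entries above `long_query_time`. The awk sketch below lists them; it is an illustration only, since the Filebeat mysql module does the real parsing in its ingest pipeline. The sample entries imitate the MySQL 5.7 slow-log layout (`# Time`, `# User@Host`, `# Query_time` header lines, then `SET timestamp=` and the statement) and are made up; only the first statement line of each entry is printed, and the helper name `slow_queries` is hypothetical.

```shell
# slow_queries MIN_SECONDS FILE: print "query_time statement" for entries >= MIN_SECONDS
slow_queries() {
  awk -v min="$1" '
    /^# Query_time:/ { qt = $3; want = (qt + 0 >= min + 0); next }
    /^#/ || /^SET timestamp=/ || /^use / { next }
    want && NF { print qt, $0; want = 0 }
  ' "$2"
}

# Hypothetical sample in the 5.7 slow-log layout
slow=$(mktemp)
trap 'rm -f "$slow"' EXIT
cat > "$slow" <<'EOF'
# Time: 2020-12-30T02:00:00.123456Z
# User@Host: root[root] @ localhost []  Id:     3
# Query_time: 2.000213  Lock_time: 0.000000 Rows_sent: 1  Rows_examined: 0
SET timestamp=1609293600;
select sleep(2);
# Time: 2020-12-30T02:00:05.000000Z
# User@Host: root[root] @ localhost []  Id:     3
# Query_time: 0.150000  Lock_time: 0.000000 Rows_sent: 10  Rows_examined: 5000
SET timestamp=1609293605;
select * from t where c = 1;
EOF

slow_queries 0.1 "$slow"   # both entries
slow_queries 1 "$slow"     # only the sleep(2) query
```

On a real server the same call would be `slow_queries 0.1 /data/3306/data/slow.log`.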

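Filebeat configuration

- Edit the configuration file (quoted heredoc delimiter, so that `${path.config}` reaches Filebeat unexpanded):

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: true
filebeat.modules:
- module: mysql
output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "mysql-slow-%{[agent.version]}-%{+yyyy.MM}"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

Note:

- The Filebeat mysql module matches the slow-log format of the official MySQL binary distribution
- MariaDB's slow log has a different format from that of the binary MySQL installation

- Enable the Filebeat mysql module:

```shell
filebeat modules enable mysql
```

- Configure the mysql module (log path). In this module the slow-log fileset is called `slowlog`:

```shell
cat > /etc/filebeat/modules.d/mysql.yml << 'EOF'
- module: mysql
  slowlog:
    enabled: true
    var.paths: ["/data/3306/data/slow.log"]
EOF
```

- Restart Filebeat to apply the changes:

```shell
systemctl restart filebeat
```

Check the collected data

- Verify: the mysql-slow index appears in ES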

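Chapter 11 Collecting Tomcat JSON Logs

Tomcat log types

| Type | File name |
|---|---|
| Console output log | catalina.out |
| Catalina engine log | catalina.<date>.log |
| Application initialization log | localhost.<date>.log |
| Tomcat access log | localhost_access_log.<date>.txt |
| Log of Tomcat's default manager application | manager.<date>.log |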

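Tomcat deployment

- Install Tomcat and the JDK:

```shell
cd /data/soft/
rpm -ivh /data/soft/jdk-*.rpm
wget https://mirror.bit.edu.cn/apache/tomcat/tomcat-9/v9.0.41/bin/apache-tomcat-9.0.41.tar.gz
tar xf apache-tomcat-9.0.41.tar.gz -C /opt/
ln -s /opt/apache-tomcat-9.0.41 /opt/tomcat
```

- Edit the Tomcat configuration so that the access log is written as JSON: change the `pattern` attribute of the access log Valve near the end of server.xml (`vim /opt/tomcat/conf/server.xml`). Inside an XML attribute, the double quotes of the JSON must be written as `&quot;`:

```xml
... ...
        pattern="{&quot;clientip&quot;:&quot;%h&quot;,&quot;ClientUser&quot;:&quot;%l&quot;,&quot;authenticated&quot;:&quot;%u&quot;,&quot;AccessTime&quot;:&quot;%t&quot;,&quot;method&quot;:&quot;%r&quot;,&quot;status&quot;:&quot;%s&quot;,&quot;SendBytes&quot;:&quot;%b&quot;,&quot;QueryString&quot;:&quot;%q&quot;,&quot;partner&quot;:&quot;%{Referer}i&quot;,&quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}"/>
      </Host>
    </Engine>
  </Service>
</Server>
```

- Start Tomcat and view the access log:

```shell
/opt/tomcat/bin/startup.sh
tail -f /opt/tomcat/logs/localhost_access_log.*.txt
```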

Filebeat configuration

- Edit the configuration file:

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /opt/tomcat/logs/localhost_access_log.*.txt
  json.keys_under_root: true
  json.overwrite_keys: true
output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  index: "tomcat-%{[agent.version]}-%{+yyyy.MM}"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

The wildcard `*` can be used in paths.

- Restart Filebeat to apply the change:

```shell
systemctl restart filebeat
```

Check the collected data

- Verify: the tomcat index appears in ES

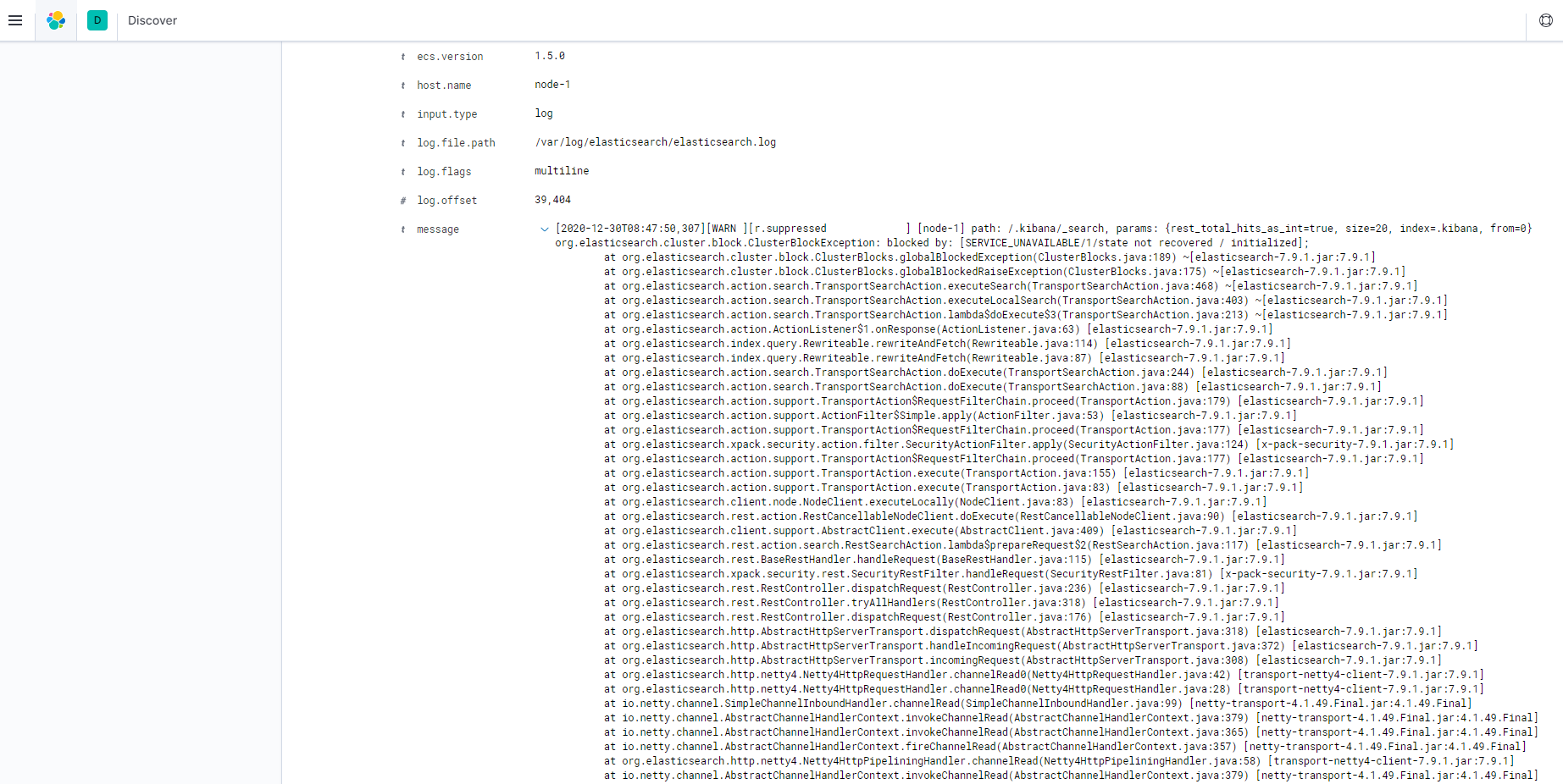

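Chapter 12 Collecting Multi-line Java Logs

Introduction

A characteristic of Java logs: a single error produces many log lines (a stack trace).

Filebeat multiline options:

- `multiline` is the option group: when a line read by Filebeat satisfies the `pattern` condition, multiline merging starts
- `negate`: with false, the lines that match `pattern` are joined to the previous line
- `match`: where the joined lines go (after: at the end)

```yaml
multiline.pattern: '^<|^[[:space:]]|^[[:space:]]+(at|.{3})|^Caused by:'  # user-defined regex; separate alternatives with "|"
multiline.negate: false   # default false: lines that match the pattern are merged into the previous line; true: lines that do NOT match are merged
multiline.match: after    # after: append to the end of the previous line; before: prepend to the next line
# tuning options
multiline.max_lines: 500  # merge at most 500 lines into one event; further lines are discarded
multiline.timeout: 5s     # if no new line arrives within 5s, the pending event is sent as it is
```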
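The combination used for the Elasticsearch log in this chapter, a pattern matching lines that start with `[` plus `negate: true` and `match: after`, can be simulated with awk to see how lines are grouped into events. This is a sketch of the semantics only: `max_lines` and `timeout` are not modeled, the helper name `join_multiline` is hypothetical, and a literal `\n` is printed between joined lines so that each event fits on one output line.

```shell
# join_multiline [FILE]: a line starting with "[" opens a new event,
# every other line is appended to the current event (reads stdin without FILE)
join_multiline() {
  awk '
    /^\[/ { if (started) print event; event = $0; started = 1; next }
    { event = (started ? event "\\n" $0 : $0); started = 1 }
    END { if (started) print event }
  ' "${1:--}"
}

# Hypothetical excerpt of an Elasticsearch log with a stack trace
sample=$(mktemp)
trap 'rm -f "$sample"' EXIT
cat > "$sample" <<'EOF'
[2020-12-30T10:00:00,000][INFO ][o.e.n.Node] [node-1] starting ...
[2020-12-30T10:00:01,000][ERROR][o.e.b.Bootstrap] [node-1] Exception
java.lang.IllegalArgumentException: unknown setting [foo.bar]
    at org.elasticsearch.common.settings.AbstractScopedSettings.validate(AbstractScopedSettings.java:544)
[2020-12-30T10:00:02,000][INFO ][o.e.n.Node] [node-1] stopped
EOF

join_multiline "$sample"   # 3 events; the second one carries the whole stack trace
```

With `negate: false` and this pattern, the stack-trace lines would each become their own event, which is why the chapter flips `negate` to true for a "new event starts with `[`" log format.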

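Filebeat configuration

- Edit the configuration file. The pattern `^\[` (a line that starts with `[`) must escape the bracket and be quoted; an unescaped `^[` is an invalid regex:

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/elasticsearch/elasticsearch.log
  multiline.pattern: '^\['
  multiline.negate: true
  multiline.match: after
output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  index: "es-%{[agent.version]}-%{+yyyy.MM}"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

- Restart Filebeat to apply the change:

```shell
systemctl restart filebeat
```

Check the collected data

- Produce multi-line Java logs: deliberately break the Elasticsearch configuration file and restart ES
- Check the index in ES
- Create a new index pattern (Index patterns) the same way as before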

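Chapter 13 Using Redis as a Buffer

Log collection flow with a Redis buffer

Notes:

- Filebeat modules do not work with Redis: module parsing runs in Elasticsearch ingest pipelines, which events sent to Redis bypass.
- Filebeat supports only standalone Redis; it cannot ship to Redis Sentinel, Redis Cluster, or a master/replica setup.
- Filebeat accepts a list of Redis hosts: several Redis instances then act as primary and backup, without load balancing, and a recovered instance automatically rejoins as a backup.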

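Switch the nginx log back to JSON format

- Edit the configuration file (`vi /etc/nginx/nginx.conf`):

```nginx
access_log /var/log/nginx/access.log json;
```

- Restart nginx, empty the old logs, generate new entries, and check that they are in JSON format:

```shell
systemctl restart nginx
> /var/log/nginx/access.log
> /var/log/nginx/error.log
for i in $(seq 10); do curl -I 127.0.0.1/1 &>/dev/null; done
cat /var/log/nginx/access.log
cat /var/log/nginx/error.log
```

Redis deployment

```shell
yum install redis -y    # on CentOS 7 the redis package comes from the EPEL repository
systemctl start redis
```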

Filebeat configuration

- Edit the configuration file:

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]
processors:
  - drop_fields:
      fields: ["ecs","log"]
output.redis:
  hosts: ["localhost"]
  keys:
    - key: "nginx_access"
      when.contains:
        tags: "access"
    - key: "nginx_error"
      when.contains:
        tags: "error"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

Improvement: put both logs into a single Redis key (the tags still tell them apart):

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]
processors:
  - drop_fields:
      fields: ["ecs","log"]
output.redis:
  hosts: ["localhost"]
  key: "nginx"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

- Restart Filebeat to apply the change:

```shell
systemctl restart filebeat
```

- Check the data in Redis:

```text
[root@node-1 ~]# redis-cli
127.0.0.1:6379> keys *
1) "nginx_error"
2) "nginx_access"
127.0.0.1:6379> type nginx_access
list
127.0.0.1:6379> LLEN nginx_access
(integer) 10
127.0.0.1:6379> LRANGE nginx_access 0 0
1) "{\"@timestamp\":\"2020-12-30T01:21:57.664Z\",\"@metadata\":{\"beat\":\"filebeat\",\"type\":\"_doc\",\"version\":\"7.9.1\"},\"bytes\":0,\"upstream_time\":\"-\",\"request_time\":\"0.000\",\"referer\":\"-\",\"up_host\":\"-\",\"time_local\":\"30/Dec/2020:09:19:08 +0800\",\"tags\":[\"access\"],\"input\":{\"type\":\"log\"},\"up_addr\":\"-\",\"status\":404,\"remote_addr\":\"127.0.0.1\",\"http_user_agent\":\"curl/7.29.0\",\"x_forwarded\":\"-\",\"host\":{\"name\":\"node-1\"},\"agent\":{\"hostname\":\"node-1\",\"ephemeral_id\":\"ee22f8d8-db49-4a93-a2a2-76e971707a39\",\"id\":\"999917a2-5dc1-4495-80f0-bd700385e0ec\",\"name\":\"node-1\",\"type\":\"filebeat\",\"version\":\"7.9.1\"},\"request\":\"HEAD /1 HTTP/1.1\"}"
127.0.0.1:6379> LRANGE nginx_access 0 -1
```

Logstash deployment

- Install Logstash (requires a JDK):

```shell
cd /data/soft/
rpm -ivh logstash-7.9.1.rpm
```

- Create a pipeline configuration file:

```shell
cat <<'EOF' >/etc/logstash/conf.d/redis.conf
input {
  redis {
    host => "localhost"
    port => "6379"
    db => "0"
    key => "nginx_access"
    data_type => "list"
  }
  redis {
    host => "localhost"
    port => "6379"
    db => "0"
    key => "nginx_error"
    data_type => "list"
  }
}
output {
  stdout {}
  if "access" in [tags] {
    elasticsearch {
      hosts => "http://10.0.0.51:9200"
      manage_template => false
      index => "nginx_access-%{+yyyy.MM}"
    }
  }
  if "error" in [tags] {
    elasticsearch {
      hosts => "http://10.0.0.51:9200"
      manage_template => false
      index => "nginx_error-%{+yyyy.MM}"
    }
  }
}
EOF
```

Improvement: read the single `nginx` key and route the output by tags; the filter also converts `upstream_time` and `request_time` from strings to floats:

```shell
cat <<'EOF' >/etc/logstash/conf.d/redis.conf
input {
  redis {
    host => "localhost"
    port => "6379"
    db => "0"
    key => "nginx"
    data_type => "list"
  }
}
filter {
  mutate {
    convert => {
      "upstream_time" => "float"
      "request_time" => "float"
    }
  }
}
output {
  stdout {}
  if "access" in [tags] {
    elasticsearch {
      hosts => "http://10.0.0.51:9200"
      manage_template => false
      index => "nginx_access-%{+yyyy.MM}"
    }
  }
  if "error" in [tags] {
    elasticsearch {
      hosts => "http://10.0.0.51:9200"
      manage_template => false
      index => "nginx_error-%{+yyyy.MM}"
    }
  }
}
EOF
```

- Start Logstash in the foreground for testing:

```shell
# startup is slow; it may look as if it has hung
/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis.conf
```

- Verify the Redis data flow: Filebeat pushes log entries into Redis (the list grows) and Logstash takes them out (the list shrinks):

```shell
> /var/log/nginx/access.log
> /var/log/nginx/error.log
for i in $(seq 10000); do curl -I 127.0.0.1/1 &>/dev/null; done
redis-cli LLEN nginx    # with the two-key configuration: redis-cli LLEN nginx_access
```

Configuration with multiple Redis instances (primary/backup)

- Edit the Filebeat configuration file:

```shell
cat > /etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]
processors:
  - drop_fields:
      fields: ["ecs","log"]
output.redis:
  hosts: ["localhost","10.0.0.52"]
  key: "nginx"
setup.ilm.enabled: false
setup.template.enabled: false
# logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
EOF
```

- Edit the Logstash configuration file (read the same key from both Redis instances):

```shell
cat <<'EOF' >/etc/logstash/conf.d/redis.conf
input {
  redis {
    host => "localhost"
    port => "6379"
    db => "0"
    key => "nginx"
    data_type => "list"
  }
  redis {
    host => "10.0.0.52"
    port => "6379"
    db => "0"
    key => "nginx"
    data_type => "list"
  }
}
filter {
  mutate {
    convert => {
      "upstream_time" => "float"
      "request_time" => "float"
    }
  }
}
output {
  stdout {}
  if "access" in [tags] {
    elasticsearch {
      hosts => "http://10.0.0.51:9200"
      manage_template => false
      index => "nginx_access-%{+yyyy.MM}"
    }
  }
  if "error" in [tags] {
    elasticsearch {
      hosts => "http://10.0.0.51:9200"
      manage_template => false
      index => "nginx_error-%{+yyyy.MM}"
    }
  }
}
EOF
```

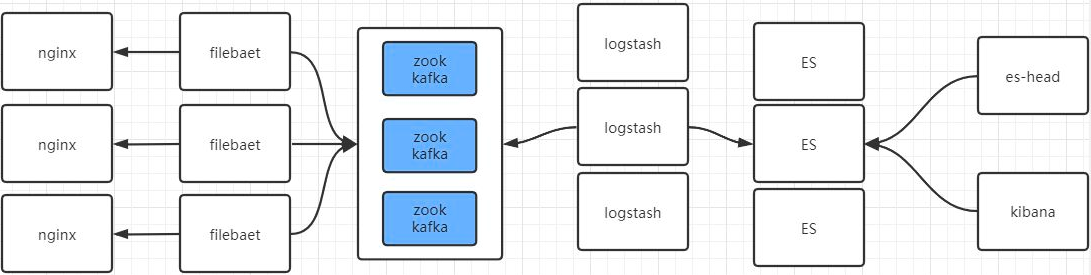

Chapter 14 Using Kafka as a Buffer

Log collection flow with a Kafka buffer

Configure the hosts file and SSH keys on all nodes:

```shell
cat <<EOF >>/etc/hosts
10.0.0.51 node-51
10.0.0.52 node-52
10.0.0.53 node-53
EOF
ssh-keygen
ssh-copy-id 10.0.0.51
ssh-copy-id 10.0.0.52
ssh-copy-id 10.0.0.53
```

ZooKeeper cluster deployment

- On node-1, install ZooKeeper and push it (and the JDK package) to the other nodes:

```shell
cd /data/soft
tar zxf zookeeper-3.4.11.tar.gz -C /opt/
ln -s /opt/zookeeper-3.4.11/ /opt/zookeeper
mkdir -p /data/zookeeper
cp /opt/zookeeper/conf/zoo_sample.cfg /opt/zookeeper/conf/zoo.cfg
cat >/opt/zookeeper/conf/zoo.cfg<<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/zookeeper
clientPort=2181
server.1=10.0.0.51:2888:3888
server.2=10.0.0.52:2888:3888
server.3=10.0.0.53:2888:3888
EOF
echo "1" > /data/zookeeper/myid
cat /data/zookeeper/myid
rsync -avz /opt/zookeeper* 10.0.0.52:/opt/
rsync -avz /opt/zookeeper* 10.0.0.53:/opt/
rsync -avz jdk-*.rpm 10.0.0.52:/data/soft/
rsync -avz jdk-*.rpm 10.0.0.53:/data/soft/
```

- On node-2, install the JDK and set myid:

```shell
rpm -ivh /data/soft/jdk-*.rpm
mkdir -p /data/zookeeper
echo "2" > /data/zookeeper/myid
cat /data/zookeeper/myid
```

- On node-3, install the JDK and set myid:

```shell
rpm -ivh /data/soft/jdk-*.rpm
mkdir -p /data/zookeeper
echo "3" > /data/zookeeper/myid
cat /data/zookeeper/myid
```

- Start ZooKeeper on all nodes and check the status (one leader, two followers):

```shell
/opt/zookeeper/bin/zkServer.sh start
/opt/zookeeper/bin/zkServer.sh status
```

- Test ZooKeeper:

```shell
# on one node, create a znode (create is typed inside the zkCli shell)
/opt/zookeeper/bin/zkCli.sh -server 10.0.0.51:2181
create /test "hello"
# on another node, check that the znode is visible
/opt/zookeeper/bin/zkCli.sh -server 10.0.0.52:2181
get /test
```
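Each node's `myid` has to equal the `N` of the `server.N` line carrying that node's IP in zoo.cfg. Instead of typing the number by hand on every node, it can be looked up from zoo.cfg itself. The helper `myid_for` below is a hypothetical sketch, tested here against a temporary copy of the zoo.cfg written above.

```shell
# myid_for ZOO_CFG IP: print N from the "server.N=IP:2888:3888" line for that IP
myid_for() {
  awk -F'[=:]' -v ip="$2" '
    $1 ~ /^server\.[0-9]+$/ && $2 == ip { sub(/^server\./, "", $1); print $1 }
  ' "$1"
}

cfg=$(mktemp)
trap 'rm -f "$cfg"' EXIT
cat > "$cfg" <<'EOF'
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/zookeeper
clientPort=2181
server.1=10.0.0.51:2888:3888
server.2=10.0.0.52:2888:3888
server.3=10.0.0.53:2888:3888
EOF

myid_for "$cfg" 10.0.0.52   # prints 2
```

On node-2 this would be used as `myid_for /opt/zookeeper/conf/zoo.cfg 10.0.0.52 > /data/zookeeper/myid`, which keeps myid and zoo.cfg consistent even if the server numbering changes.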

Kafka cluster deployment

- On node-1, install Kafka and push it to the other nodes:

```shell
cd /data/soft/
tar zxf kafka_2.11-1.0.0.tgz -C /opt/
ln -s /opt/kafka_2.11-1.0.0/ /opt/kafka
mkdir /opt/kafka/logs
cat >/opt/kafka/config/server.properties<<EOF
broker.id=1
listeners=PLAINTEXT://10.0.0.51:9092
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/opt/kafka/logs
num.partitions=1
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=24
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181
zookeeper.connection.timeout.ms=6000
group.initial.rebalance.delay.ms=0
EOF
rsync -avz /opt/kafka* 10.0.0.52:/opt/
rsync -avz /opt/kafka* 10.0.0.53:/opt/
```

- On node-2, change the broker id and IP:

```shell
sed -i "s#10.0.0.51:9092#10.0.0.52:9092#g" /opt/kafka/config/server.properties
sed -i "s#broker.id=1#broker.id=2#g" /opt/kafka/config/server.properties
```

- On node-3, change the broker id and IP:

```shell
sed -i "s#10.0.0.51:9092#10.0.0.53:9092#g" /opt/kafka/config/server.properties
sed -i "s#broker.id=1#broker.id=3#g" /opt/kafka/config/server.properties
```

- Start Kafka in the foreground on all nodes and check the process:

```shell
/opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties
jps
```

- Test Kafka:

```shell
# create a topic
/opt/kafka/bin/kafka-topics.sh --create --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181 --partitions 3 --replication-factor 3 --topic kafkatest
# describe the topic
/opt/kafka/bin/kafka-topics.sh --describe --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181 --topic kafkatest
# delete the topic
/opt/kafka/bin/kafka-topics.sh --delete --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181 --topic kafkatest
```

- Test Kafka messaging:

```shell
# 1. create a topic
/opt/kafka/bin/kafka-topics.sh --create --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181 --partitions 3 --replication-factor 3 --topic messagetest
# 2. send messages
/opt/kafka/bin/kafka-console-producer.sh --broker-list 10.0.0.51:9092,10.0.0.52:9092,10.0.0.53:9092 --topic messagetest
# 3. receive them on another node
/opt/kafka/bin/kafka-console-consumer.sh --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181 --topic messagetest --from-beginning
# 4. list all topics
/opt/kafka/bin/kafka-topics.sh --list --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181
```

- Once the tests pass, start Kafka in the background:

```shell
/opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties
```
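The two `sed` edits per node can also be done once on node-1 by rendering each broker's file from node-1's copy. The sketch below is hypothetical (the helper name `render_broker_config` is made up) and anchors the `broker.id` substitution with `^...$`, so that a value such as `broker.id=10` would not be rewritten by accident, which the unanchored `s#broker.id=1#...#` above would do.

```shell
# render_broker_config TEMPLATE BROKER_ID IP: print server.properties for one broker
render_broker_config() {
  sed -e "s#^broker\.id=1\$#broker.id=$2#" -e "s#10\.0\.0\.51:9092#$3:9092#g" "$1"
}

# Minimal stand-in for node-1's /opt/kafka/config/server.properties
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
cat > "$work/server.properties" <<'EOF'
broker.id=1
listeners=PLAINTEXT://10.0.0.51:9092
zookeeper.connect=10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181
EOF

for n in 2 3; do
  render_broker_config "$work/server.properties" "$n" "10.0.0.5$n" > "$work/server-$n.properties"
done
cat "$work/server-2.properties"
```

With the SSH keys configured earlier, a rendered file could then be pushed directly, e.g. `render_broker_config /opt/kafka/config/server.properties 2 10.0.0.52 | ssh 10.0.0.52 'cat > /opt/kafka/config/server.properties'`. Only the listener line changes IP; `zookeeper.connect` uses port 2181 and is left alone.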

Filebeat configuration

- Edit the configuration file:

```shell
cat >/etc/filebeat/filebeat.yml << 'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]
output.kafka:
  hosts: ["10.0.0.51:9092", "10.0.0.52:9092", "10.0.0.53:9092"]
  topic: 'filebeat'
setup.ilm.enabled: false
setup.template.enabled: false
EOF
```

- Restart Filebeat to apply the change:

```shell
systemctl restart filebeat
```

Logstash configuration

- Edit the configuration file (`bootstrap_servers` is a single comma-separated string):

```shell
cat >/etc/logstash/conf.d/kafka.conf << 'EOF'
input {
  kafka {
    bootstrap_servers => "10.0.0.51:9092,10.0.0.52:9092,10.0.0.53:9092"
    topics => ["filebeat"]
    #group_id => "logstash"
    codec => "json"
  }
}
output {
  stdout {}
  if "access" in [tags] {
    elasticsearch {
      hosts => "http://10.0.0.51:9200"
      manage_template => false
      index => "nginx_access-%{+yyyy.MM}"
    }
  }
  if "error" in [tags] {
    elasticsearch {
      hosts => "http://10.0.0.51:9200"
      manage_template => false
      index => "nginx_error-%{+yyyy.MM}"
    }
  }
}
EOF
```

- Start Logstash in the foreground for testing:

```shell
/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/kafka.conf
```

Check the collected data

- Delete the ES indices
- Empty the logs and generate new entries:

```shell
> /var/log/nginx/access.log
> /var/log/nginx/error.log
for i in $(seq 100); do curl -I 127.0.0.1/1 &>/dev/null; done
```

- The indices nginx_access-2020.12 and nginx_error-2020.12 are created automatically
- Shut down node-2 (a ZooKeeper follower) and generate more log entries: the ES index size keeps growing, so logs are still being collected

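Chapter 15 EBLK Security Authentication Configuration

1. Introduction to EBLK authentication
2. Configure usernames and passwords in Elasticsearch
3. Update the Filebeat configuration file
4. Update the Logstash configuration file
5. Update the Kibana configuration file
6. Set permissions for different users in Kibana
7. Check that the results match the expected behavior

Chapter 16 Collecting k8s Pod Logs with EBK

1. Overview of log collection in k8s

Option 1: put Filebeat and the application container in the same Pod, with the logs on a shared empty directory (emptyDir) volume.

Option 2: use a DaemonSet to collect the logs of specified containers on every k8s node.

2. Quickly setting up a k8s environment
3. Deploying EBK on k8s
4. Deploying an nginx Pod in sidecar mode on k8s
5. Generate access logs and check the collected data

Chapter 17 Full-Scale EBK Architecture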