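mydumper features

(1) Multi-threaded backup. Unlike mysqlpump, which parallelizes per table, mydumper can parallelize down to the row level, which is especially useful for large tables.

(2) Because it is a multi-threaded logical backup, it produces multiple backup files.

(3) While MyISAM tables are being backed up it holds FTWRL (FLUSH TABLES WITH READ LOCK), which blocks DML statements.

(4) Guarantees the consistency of the backed-up data.

(5) Supports file compression.

(6) Supports exporting the binlog.

(7) Supports multi-threaded restore.

(8) Can run as a daemon, taking scheduled snapshots and continuous binary logs.

(9) Supports splitting backup files into chunks.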

Download the package

mydumper-0.9.1.tar.gz

Install the dependencies:

yum install glib2-devel mysql-devel zlib-devel pcre-devel openssl-devel cmake

tar zxvf mydumper-0.9.1.tar.gz

cd mydumper-0.9.1

cmake .

make

make install

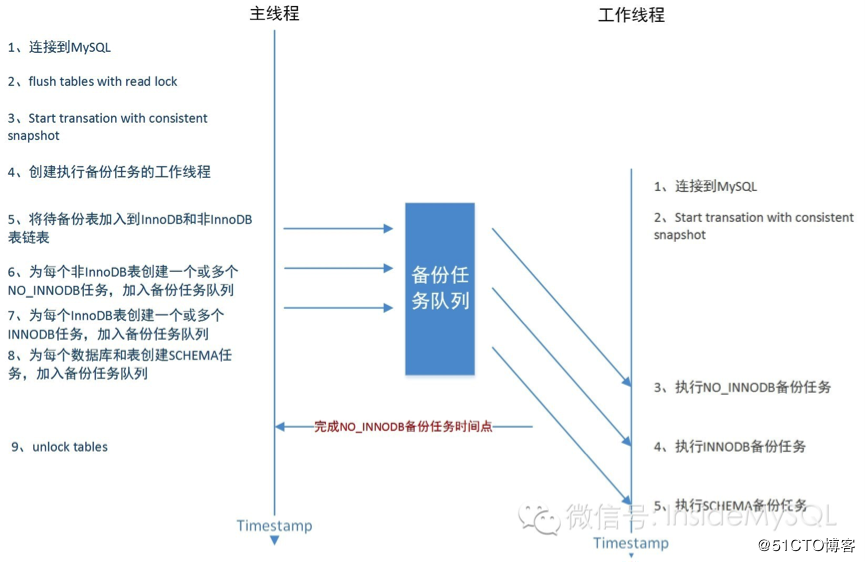

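Summary of mydumper's main workflow:

0. Connect to the target database.

1. Run SHOW PROCESSLIST to check for long-running queries; depending on the long-query-guard and kill-long-queries options, either abort or kill those queries.

2. Main thread: for MyISAM tables, issue FLUSH TABLES WITH READ LOCK to take a global read lock, which blocks DML writes and keeps the data consistent.

3. Main thread: for InnoDB tables, open a transaction (START TRANSACTION), read the current binary log file name and write position, and record them in the metadata file for point-in-time recovery.

4. Create N worker (dump) threads (the count is configurable, default 4); each one runs START TRANSACTION WITH CONSISTENT SNAPSHOT to open a consistent-read transaction.

5. Determine the candidate tables and insert them, by category, into the innodb_table, non_innodb_table and table_schemas (table structure) lists.

6. Push the candidate tables onto the task queue with g_async_queue_push (the last element of the queue is a thread shutdown marker); the worker threads read table information from the queue and export the data.

7. Dump non-InnoDB tables: tables using non-transactional engines are exported first.

8. Main thread: UNLOCK TABLES. Once the non-transactional tables are backed up, the global read lock is released.

9. Dump InnoDB tables: InnoDB tables are exported inside the transaction.

10. End the transaction.

11. Wait for the workers to exit.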
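
The queue-and-workers part of this flow (steps 4, 6, 7, 9 and 11) can be sketched in a few lines of Python. This is only an illustration of the pattern, not mydumper's C code: `dump_tables`, `SHUTDOWN` and the table names are hypothetical, and the "dump" is reduced to recording which worker picked up which table. Locking and transactions are left out.

```python
import queue
import threading

# Sentinel playing the role of the "thread shutdown" element at the end of the queue.
SHUTDOWN = object()


def dump_tables(non_innodb, innodb, num_threads=4):
    """Hand table names to worker threads; return the (worker_id, table) pairs processed."""
    tasks = queue.Queue()
    dumped = []
    dumped_lock = threading.Lock()

    def worker(worker_id):
        while True:
            table = tasks.get()
            if table is SHUTDOWN:
                break
            # A real worker would read the table's rows here, inside its own
            # consistent-snapshot transaction, and write them to a file.
            with dumped_lock:
                dumped.append((worker_id, table))

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()

    # Non-transactional tables are queued ahead of InnoDB tables (steps 7 and 9).
    for table in list(non_innodb) + list(innodb):
        tasks.put(table)
    # One shutdown marker per worker, placed after all real work.
    for _ in threads:
        tasks.put(SHUTDOWN)
    # Step 11: wait for the workers to exit.
    for t in threads:
        t.join()
    return dumped
```

Because every worker stops at the first shutdown marker it sees, pushing exactly one marker per thread after the last table is enough to drain the queue and end all workers.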

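With --less-locking, mydumper reduces the time spent waiting on locks. Its execution then proceeds roughly as follows:

1. Main thread: FLUSH TABLES WITH READ LOCK (global lock)

2. Dump threads: START TRANSACTION WITH CONSISTENT SNAPSHOT;

3. LL dump threads: LOCK TABLES non-InnoDB (lock held by the thread itself)

4. Main thread: UNLOCK TABLES

5. LL dump threads: dump non-InnoDB tables

6. LL dump threads: UNLOCK non-InnoDB

7. Dump threads: dump InnoDB tables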

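About the backup files:

1. All backup files go into one directory. If none is specified, the current directory is used and a timestamped backup folder is created automatically, for example export-20150703-145806.

2. If the backup is taken on a replica, the master binary log file and position that the replica had synchronized to at backup time are recorded as well.

3. Each table has two backup files: database.table-schema.sql for the table structure and database.table.sql for the table data.

4. If a table is split into chunks, several data files are produced; the split can be specified by row count or by file size.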
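
As a small illustration of the naming scheme, the sketch below sorts file names from a dump directory into structure and data files. It rests on assumptions beyond the list above: chunked data files are taken to look like database.table.00001.sql, compressed files to end in .gz, and database and table names to contain no dots. `classify` is a hypothetical helper, not part of mydumper.

```python
import re

# database.table-schema.sql, database.table.sql, and (assumed) chunked or
# compressed variants such as database.table.00001.sql.gz
_DUMP_FILE = re.compile(
    r"^(?P<db>[^.]+)\.(?P<table>[^.]+?)"
    r"(?P<schema>-schema)?"
    r"(?:\.(?P<chunk>\d+))?"
    r"\.sql(?:\.gz)?$"
)


def classify(filename):
    """Return (database, table, kind, chunk) or None for files that do not match."""
    m = _DUMP_FILE.match(filename)
    if m is None:
        return None
    kind = "schema" if m.group("schema") else "data"
    chunk = int(m.group("chunk")) if m.group("chunk") else None
    return (m.group("db"), m.group("table"), kind, chunk)
```

Files that do not follow the database.table pattern, such as the metadata file, fall through to `None`, so a restore script could treat them separately.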

# mydumper --help

Usage:

mydumper [OPTION...] multi-threaded MySQL dumping

Help Options:

-?, --help Show help options

Application Options:

-B, --database Database to dump

-T, --tables-list Comma delimited table list to dump (does not exclude regex option)

-o, --outputdir Directory to output files to

-s, --statement-size Attempted size of INSERT statement in bytes, default 1000000

-r, --rows Try to split tables into chunks of this many rows. This option turns off --chunk-filesize

-F, --chunk-filesize Split tables into chunks of this output file size. This value is in MB

-c, --compress Compress output files

-e, --build-empty-files Build dump files even if no data available from table

-x, --regex Regular expression for 'db.table' matching

-i, --ignore-engines Comma delimited list of storage engines to ignore

-m, --no-schemas Do not dump table schemas with the data

-d, --no-data Do not dump table data

-G, --triggers Dump triggers

-E, --events Dump events

-R, --routines Dump stored procedures and functions

-k, --no-locks Do not execute the temporary shared read lock. WARNING: This will cause inconsistent backups

--less-locking Minimize locking time on InnoDB tables.

-l, --long-query-guard Set long query timer in seconds, default 60

-K, --kill-long-queries Kill long running queries (instead of aborting)

-D, --daemon Enable daemon mode

-I, --snapshot-interval Interval between each dump snapshot (in minutes), requires --daemon, default 60

-L, --logfile Log file name to use, by default stdout is used

--tz-utc SET TIME_ZONE='+00:00' at top of dump to allow dumping of TIMESTAMP data when a server has data in different time zones or data is being moved between servers with different time zones, defaults to on use --skip-tz-utc to disable.

--skip-tz-utc

--use-savepoints Use savepoints to reduce metadata locking issues, needs SUPER privilege

--success-on-1146 Not increment error count and Warning instead of Critical in case of table doesn't exist

--lock-all-tables Use LOCK TABLE for all, instead of FTWRL

-U, --updated-since Use Update_time to dump only tables updated in the last U days

--trx-consistency-only Transactional consistency only

-h, --host The host to connect to

-u, --user Username with privileges to run the dump

-p, --password User password

-P, --port TCP/IP port to connect to

-S, --socket UNIX domain socket file to use for connection

-t, --threads Number of threads to use, default 4

-C, --compress-protocol Use compression on the MySQL connection

-V, --version Show the program version and exit

-v, --verbose Verbosity of output, 0 = silent, 1 = errors, 2 = warnings, 3 = info, default 2

# myloader --help

Usage:

myloader [OPTION...] multi-threaded MySQL loader

Help Options:

-?, --help Show help options

Application Options:

-d, --directory Directory of the dump to import

-q, --queries-per-transaction Number of queries per transaction, default 1000

-o, --overwrite-tables Drop tables if they already exist

-B, --database An alternative database to restore into

-s, --source-db Database to restore

-e, --enable-binlog Enable binary logging of the restore data

-h, --host The host to connect to

-u, --user Username with privileges to run the dump

-p, --password User password

-P, --port TCP/IP port to connect to

-S, --socket UNIX domain socket file to use for connection

-t, --threads Number of threads to use, default 4

-C, --compress-protocol Use compression on the MySQL connection

-V, --version Show the program version and exit

-v, --verbose Verbosity of output, 0 = silent, 1 = errors, 2 = warnings, 3 = info, default 2

Back up the mytest database to the /tmp/backup/01 directory, compressing the backup files

mydumper -u dba_user -p msds007 -h 192.168.1.101 -P 3306 -B mytest -c -o /tmp/backup/01

Back up all databases to the /tmp/backup/02 directory

mydumper -u root -p msds007 -h 192.168.1.101 -P 3306 -o /tmp/backup/02

Back up the test.wx_edu_homework table, without its table structure, to the /tmp/backup/03 directory

mydumper -u root -p msds007 -h 127.0.0.1 -P 3306 -B test -T wx_edu_homework -m -o /tmp/backup/03
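
When these dumps are launched from a script, it can help to assemble the argument list in one place. The helper below is a hypothetical sketch (`mydumper_argv` is not part of mydumper); it only uses options that appear in the --help output above and returns a list suitable for `subprocess.run`.

```python
def mydumper_argv(user, password, host, port, outputdir,
                  database=None, tables=None, no_schemas=False,
                  compress=False, rows=None, threads=None):
    """Build the mydumper command line as a list of arguments."""
    argv = ["mydumper", "-u", user, "-p", password, "-h", host, "-P", str(port)]
    if database:
        argv += ["-B", database]           # single database to dump
    if tables:
        argv += ["-T", ",".join(tables)]   # comma delimited table list
    if no_schemas:
        argv.append("-m")                  # skip table structure files
    if compress:
        argv.append("-c")                  # compress output files
    if rows:
        argv += ["-r", str(rows)]          # split tables into chunks of this many rows
    if threads:
        argv += ["-t", str(threads)]       # number of dump threads
    argv += ["-o", outputdir]
    return argv
```

For example, `subprocess.run(mydumper_argv("root", "msds007", "127.0.0.1", 3306, "/tmp/backup/03", database="test", tables=["wx_edu_homework"], no_schemas=True), check=True)` would run the same command as the last example above, assuming mydumper is on the PATH.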

Restore the database from /tmp/backup/01, dropping any table that already exists before restoring it

myloader -u dba_user -p msds007 -h 192.168.1.101 -P 3306 -e -o -d /tmp/backup/01

The -e option writes the SQL executed during the restore to the binlog.

Enable the general log, then run:

mydumper -u root -p msds007 -h 192.168.1.101 -P 3306 -o /tmp/backup/02

myloader -u dba_user -p msds007 -h 192.168.1.101 -P 3306 -e -o -d /tmp/backup/01

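Then read the general log to see the statements each tool issued.

How it works

mydumper works on the same principle as mysqldump; the biggest difference is that it copies the data with multiple threads. Concurrency can go down to the row level, and the consistent point is still obtained through FTWRL, after which each thread reads from its own consistent snapshot.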
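
To make "row-level" concurrency concrete, here is a simplified sketch of how one table could be cut into independent range queries that different threads execute in parallel. It assumes a dense integer key with known minimum and maximum; `chunk_ranges` and `chunk_queries` are hypothetical names, and mydumper's own splitting logic for --rows may differ from this.

```python
def chunk_ranges(min_id, max_id, rows_per_chunk):
    """Split the closed key range [min_id, max_id] into consecutive (low, high) pairs."""
    if rows_per_chunk <= 0:
        raise ValueError("rows_per_chunk must be positive")
    ranges = []
    low = min_id
    while low <= max_id:
        high = min(low + rows_per_chunk - 1, max_id)
        ranges.append((low, high))
        low = high + 1
    return ranges


def chunk_queries(table, key, min_id, max_id, rows_per_chunk):
    """One SELECT per chunk; each can be run by a different dump thread."""
    return [
        f"SELECT * FROM {table} WHERE {key} BETWEEN {low} AND {high}"
        for low, high in chunk_ranges(min_id, max_id, rows_per_chunk)
    ]
```

Since every thread reads from a snapshot taken at the same consistent point, the chunks together still describe one consistent version of the table even though they are fetched at different times.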