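Computing patch similarity with torchnet + VGG16

I originally planned to implement a siamese CNN on top of VGG, but I could not work out how to fine-tune the model with torchnet. So I ended up using the pretrained VGG16 layers as a standalone preprocessing module (in the code, everything up to the 4096-d fc7 output), followed by a small network: the two VGG outputs of a patch pair are fed into this network, and only this shallow network is trained.

Data: the pedestrians in the MOT16-02 sequence of the MOTChallenge dataset.

Code: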

```lua
-- ---------------------------------------------------------------------------------------
-- Read the MOT16-02 ground truth and split it into training and test sets
-- ---------------------------------------------------------------------------------------
require 'torch'
require 'cutorch'
torch.setdefaulttensortype('torch.FloatTensor')
data_type = 'torch.CudaTensor' -- tensor type; set to 'torch.FloatTensor' when not using a GPU

require 'image'
local datapath = '/home/zwzhou/programFiles/2DMOT2015/MOT16/train/MOT16-02/'
local tmp = image.load(datapath .. 'img1/000001.jpg', 3, 'byte')
local width = tmp:size(3)
local height = tmp:size(2)
local num = 600
local imgs = torch.Tensor(num, 3, height, width)

-- Cache the decoded frames in imgs.t7 so that later runs can skip image decoding
local file, _ = io.open('imgs.t7')
if not file then
  for i = 1, num do -- read the video frames
    imgs[i] = image.load(datapath .. 'img1/' .. string.format('%06d.jpg', i))
  end
  torch.save('imgs.t7', imgs)
else
  file:close()
  imgs = torch.load('imgs.t7')
end
```

```lua
require 'sys'
local gt_path = datapath .. 'gt/gt.txt'
local gt_info = {}
for line in io.lines(gt_path) do -- patch information for the pedestrians
  local v = sys.split(line, ',')
  -- keep valid patches only: flagged as a pedestrian (column 7) with visibility (column 9) > 0.8
  if tonumber(v[7]) == 1 and tonumber(v[9]) > 0.8 then
    -- fields: frame index, track index, x, y, w, h
    table.insert(gt_info, {tonumber(v[1]), tonumber(v[2]), tonumber(v[3]),
                           tonumber(v[4]), tonumber(v[5]), tonumber(v[6])})
  end
end
```
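The filter above depends on the column layout of `gt.txt`. As a standalone illustration, here is a minimal sketch in plain Lua (no `sys` package) that parses one line and applies the same test. The helper name `parseGtLine` and the two sample lines are made up for illustration; the column meanings are the ones stated in the comments of the listing.

```lua
-- Hypothetical helper: parse one comma-separated gt.txt line and apply the
-- same filter as the listing (column 7 == 1 and column 9 > minVisibility).
local function parseGtLine(line, minVisibility)
  local v = {}
  for field in line:gmatch('[^,]+') do
    v[#v + 1] = tonumber(field)
  end
  if v[7] == 1 and v[9] > minVisibility then
    return {frame = v[1], track = v[2], x = v[3], y = v[4], w = v[5], h = v[6]}
  end
  return nil -- rejected by the filter
end

-- Made-up sample lines, not taken from the dataset
local kept = parseGtLine('1,2,912,484,97,109,1,1,0.86', 0.8)
local dropped = parseGtLine('1,3,100,200,30,60,1,1,0.25', 0.8)
print(kept.frame, kept.track, kept.w, kept.h)
print(dropped)
```

Returning a table with named fields instead of positional entries makes later code such as the cropping step easier to read, at the cost of differing from the `gt_info[i][2]` indexing used in the listing.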

```lua
-- Build the sample pairs. To keep positive and negative samples balanced, each pedestrian
-- patch is paired with up to 25 patches of the same track id and with equally many
-- patches of a different id.
local pairwise = {}
for i = 1, #gt_info do
  local count = 0
  local iter = 0
  while count < 25 and iter < 100 do -- positive pairs; give up after 100 draws
    local j = torch.random(1, #gt_info) -- uniform integer in [1, #gt_info]
    if gt_info[i][2] == gt_info[j][2] then
      count = count + 1
      table.insert(pairwise, {i, j})
    end
    iter = iter + 1
  end
  while count > 0 do -- one negative pair for every positive pair
    local j = torch.random(1, #gt_info)
    if gt_info[i][2] ~= gt_info[j][2] then
      count = count - 1
      table.insert(pairwise, {i, j})
    end
  end
end
```
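To make the balancing idea easier to check in isolation, here is a small sketch in plain Lua that uses `math.random` instead of Torch. The helper name `samplePairs`, the toy track ids, and the third entry of each pair (a label of 1 or -1, the target values `nn.MarginCriterion` works with) are assumptions for illustration; the listing itself stores only the two indices.

```lua
math.randomseed(1)

-- Hypothetical helper: for every patch draw up to `k` same-id partners
-- (at most `maxDraws` attempts) and then exactly as many different-id partners.
local function samplePairs(trackIds, k, maxDraws)
  local n = #trackIds
  local out = {}
  for i = 1, n do
    -- make sure a different id exists, otherwise the negative loop could not end
    local hasOther = false
    for j = 1, n do
      if trackIds[j] ~= trackIds[i] then hasOther = true; break end
    end
    local positives, draws = 0, 0
    while hasOther and positives < k and draws < maxDraws do
      local j = math.random(n)
      if trackIds[j] == trackIds[i] then
        positives = positives + 1
        out[#out + 1] = {i, j, 1}
      end
      draws = draws + 1
    end
    local negatives = 0
    while negatives < positives do
      local j = math.random(n)
      if trackIds[j] ~= trackIds[i] then
        negatives = negatives + 1
        out[#out + 1] = {i, j, -1}
      end
    end
  end
  return out
end

local trackIds = {1, 1, 1, 2, 2, 3} -- toy data
local pairList = samplePairs(trackIds, 2, 50)
local labelSum = 0
for _, p in ipairs(pairList) do labelSum = labelSum + p[3] end
print(#pairList, labelSum)
```

Because every positive pair is matched by exactly one negative pair, the labels always sum to zero. Note that a patch may be paired with itself, as in the listing.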

```lua
local function cast(x) return x:type(data_type) end -- convert a tensor or module to data_type

-- Load the pretrained VGG16 model
require 'nn'
require 'loadcaffe'
local function getPretrainedModel()
  local proto = '/home/zwzhou/modelZoo/VGG_ILSVRC_16_layers_deploy.prototxt'
  local caffemodel = '/home/zwzhou/modelZoo/VGG_ILSVRC_16_layers.caffemodel'
  local VGG16 = loadcaffe.load(proto, caffemodel, 'nn')
  for i = 1, 3 do -- drop the last three modules so that the network outputs 4096-d features
    VGG16.modules[#VGG16.modules] = nil
  end
  return VGG16
end
```

```lua
-- Preprocessing steps needed before an image can be fed to VGG
local loadSize = {3, 256, 256}
local sampleSize = {3, 224, 224}

-- VGG expects the shorter side of the input to be scaled to 256, keeping the aspect ratio
local function adjustScale(input)
  if input:size(3) < input:size(2) then -- width < height
    input = image.scale(input, loadSize[2], loadSize[3] * input:size(2) / input:size(3))
  else
    input = image.scale(input, loadSize[2] * input:size(3) / input:size(2), loadSize[3])
  end
  return input
end

-- Mean pixel values used by VGG. They are in BGR order, whereas image.load() returns RGB.
local bgr_means = {103.939, 116.779, 123.68}
local function vggProcessing(img)
  local img2 = img:clone() -- deep copy
  img2[{{1}}] = img[{{3}}]
  img2[{{3}}] = img[{{1}}] -- RGB -> BGR
  img2 = img2:mul(255) -- [0, 1] -> [0, 255]
  for i = 1, 3 do
    img2[i]:add(-bgr_means[i])
  end
  return img2
end

local function centerCrop(input) -- crop the central 224x224 region
  local oH = sampleSize[2]
  local oW = sampleSize[3]
  local iW = input:size(3)
  local iH = input:size(2)
  local w1 = math.ceil((iW - oW) / 2)
  local h1 = math.ceil((iH - oH) / 2)
  local out = image.crop(input, w1, h1, w1 + oW, h1 + oH)
  return out
end
```
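The arithmetic behind `adjustScale` and `centerCrop` can be followed without any image at all. The sketch below reproduces only the size and offset calculations in plain Lua. The helper names `resizedSize` and `cropOffsets` are hypothetical, and rounding the scaled side down with `math.floor` is an assumption (the listing passes the fractional value straight to `image.scale`).

```lua
-- Hypothetical helper: target size after scaling the shorter side to `shortSide`
local function resizedSize(w, h, shortSide)
  if w < h then
    return shortSide, math.floor(shortSide * h / w)
  else
    return math.floor(shortSide * w / h), shortSide
  end
end

-- Hypothetical helper: top-left corner of a centered cropW x cropH window
local function cropOffsets(w, h, cropW, cropH)
  return math.ceil((w - cropW) / 2), math.ceil((h - cropH) / 2)
end

-- Example: a 97x109 pedestrian patch (width x height)
local w, h = resizedSize(97, 109, 256)
local x1, y1 = cropOffsets(w, h, 224, 224)
print(w, h, x1, y1)
```

For this patch the width is the shorter side, so it becomes 256 and the height becomes 287; the 224x224 window then starts at offset (16, 32). For tall pedestrian boxes the crop therefore discards part of the top and bottom of the patch.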

```lua
-- Extract the VGG features of every patch once and cache them in vgg_info.t7
local file, _ = io.open('vgg_info.t7')
local vgg_info = {}
if not file then
  local VGG16_model = getPretrainedModel()
  if data_type:match'torch.Cuda.*Tensor' then
    require 'cudnn'
    require 'cunn'
    cudnn.convert(VGG16_model, cudnn):cuda()
    cudnn.benchmark = true
  end
  cast(VGG16_model)
  VGG16_model:evaluate() -- inference mode, so the remaining dropout layer is disabled
  for i = 1, #gt_info do
    local idx = gt_info[i]
    local img = imgs[idx[1]]
    -- clamp the bounding box to the frame
    local x1 = math.max(idx[3], 1)
    local y1 = math.max(idx[4], 1)
    local x2 = math.min(idx[3] + idx[5], width)
    local y2 = math.min(idx[4] + idx[6], height)
    local patch = image.crop(img, x1, y1, x2, y2)
    patch = adjustScale(patch)
    patch = vggProcessing(patch)
    patch = centerCrop(patch)
    patch = cast(patch)
    -- clone so that every entry owns its data instead of sharing the network's output buffer
    table.insert(vgg_info, VGG16_model:forward(patch):float():clone())
  end
  torch.save('vgg_info.t7', vgg_info)
else
  file:close()
  vgg_info = torch.load('vgg_info.t7')
end

local function getPatchPair(tmp) -- concatenated features of a patch pair
  local pp = {}
  pp[1] = vgg_info[tmp[1]]
  pp[2] = vgg_info[tmp[2]]
  local t = torch.cat(pp[1], pp[2], 1)
  return t
end
```

```lua
-- Define the dataset iterator
local tnt = require 'torchnet'
local function getIterator(mode)
  -- ... (the body of this function is not included in the listing)
end

-- Build the model: a small fully connected network on the two concatenated 4096-d features
local fc = nn.Sequential()
fc:add(nn.View(-1, 4096 * 2))
fc:add(nn.Linear(4096 * 2, 500))
fc:add(nn.ReLU(true))
fc:add(nn.Normalize(2))
fc:add(nn.Linear(500, 500))
fc:add(nn.ReLU(true))
fc:add(nn.Linear(500, 1))

-- print(fc:forward(torch.randn(2, 4096 * 2)))
if data_type:match'torch.Cuda.*Tensor' then
  require 'cudnn'
  require 'cunn'
  cudnn.convert(fc, cudnn):cuda()
  cudnn.benchmark = true
end
cast(fc)
```
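The body of `getIterator` does not appear in the listing, so the following is only a guess at what such a function could look like, not the author's code. It is a minimal sketch assuming that each sample is the concatenation of two cached feature vectors with a target of 1 (same track id) or -1 (different id), and that the train and test pairs are kept in two separate lists. The toy data, the `pairLists` table, and the batch size of 2 are made up; `tnt.ListDataset`, `tnt.BatchDataset` and `tnt.DatasetIterator` are torchnet classes.

```lua
require 'torch'
local tnt = require 'torchnet'

-- Toy stand-ins for vgg_info, the track ids and the pair lists (all made up)
local features, trackIds = {}, {1, 1, 2, 2}
for i = 1, #trackIds do features[i] = torch.randn(4096) end
local pairLists = {
  train = {{1, 2}, {1, 3}, {3, 4}, {4, 2}},
  test = {{2, 1}, {2, 4}},
}

local function getIterator(mode)
  local list = pairLists[mode]
  local dataset = tnt.ListDataset{
    list = torch.range(1, #list):long(),
    load = function(k) -- k is the index of a pair in `list`
      local i, j = list[k][1], list[k][2]
      return {
        input = torch.cat(features[i], features[j], 1), -- 8192-d vector
        target = torch.FloatTensor{trackIds[i] == trackIds[j] and 1 or -1},
      }
    end,
  }
  return tnt.DatasetIterator{
    dataset = tnt.BatchDataset{batchsize = 2, dataset = dataset},
  }
end

local numBatches, inputWidth = 0, 0
for sample in getIterator('train')() do
  numBatches = numBatches + 1
  inputWidth = sample.input:size(2)
end
print(numBatches, inputWidth)
```

With this layout each batch carries an `input` of size batch x 8192 and a `target` of size batch x 1, which matches the `nn.View(-1, 4096*2)` at the front of the network and its single output unit.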

```lua
-- Build the training engine; OptimEngine is used here
require 'optim'
local engine = tnt.OptimEngine()
local criterion = cast(nn.MarginCriterion())

-- Timers and meters for monitoring training
local train_timer = torch.Timer()
local test_timer = torch.Timer()
local data_timer = torch.Timer()

local meter = tnt.AverageValueMeter() -- running average of the criterion output
local confusion = optim.ConfusionMatrix(2) -- two-class confusion matrix
local data_time_meter = tnt.AverageValueMeter()
-- log
local logtext = require 'torchnet.log.view.text'
local log = tnt.Log{
  keys = {'train_loss', 'train_acc', 'data_loading_time', 'epoch', 'test_acc', 'train_time', 'test_time'},
  onFlush = {
    logtext{keys = {'train_loss', 'train_acc', 'data_loading_time', 'epoch', 'test_acc', 'train_time', 'test_time'}}
  }
}

local inputs = cast(torch.Tensor())
local targets = cast(torch.Tensor())

-- Hook functions for observing the training process
engine.hooks.onSample = function(state)
  if state.training then
    data_time_meter:add(data_timer:time().real)
  end
  -- copy the batch into preallocated tensors of data_type
  inputs:resize(state.sample.input:size()):copy(state.sample.input)
  targets:resize(state.sample.target:size()):copy(state.sample.target)
  state.sample.input = inputs
  state.sample.target = targets
end

engine.hooks.onForwardCriterion = function(state)
  meter:add(state.criterion.output)
  -- map scores and targets to class indices: values > 0 become class 2, all others class 1
  confusion:batchAdd(state.network.output:gt(0):add(1), state.sample.target:gt(0):add(1))
end
```
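To see what the criterion and the confusion-matrix mapping compute, here is a small plain-Lua sketch on three made-up scores. `nn.MarginCriterion` is a hinge loss, max(0, margin - y*x) averaged over the elements, with a default margin of 1. The helper names `marginLoss` and `toClass` and the sample values are for illustration only.

```lua
-- Hinge loss averaged over all elements, as nn.MarginCriterion computes it by default
local function marginLoss(scores, targets, margin)
  margin = margin or 1
  local total = 0
  for i = 1, #scores do
    total = total + math.max(0, margin - targets[i] * scores[i])
  end
  return total / #scores
end

-- Same mapping as gt(0):add(1) in the hook: positive -> class 2, otherwise class 1
local function toClass(value)
  return value > 0 and 2 or 1
end

local scores = {0.5, -2, 1.5}  -- made-up network outputs
local targets = {1, -1, -1}    -- 1 = same person, -1 = different person
local loss = marginLoss(scores, targets)
local correct = 0
for i = 1, #scores do
  if toClass(scores[i]) == toClass(targets[i]) then correct = correct + 1 end
end
print(loss, correct)
```

The first sample is classified correctly but still contributes 0.5 to the loss because its score lies inside the margin; the third sample is wrong and contributes 2.5. The loss and the accuracy reported by the confusion matrix can therefore move somewhat independently.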

```lua
local function test() -- evaluate on the test set
  engine:test{
    network = fc,
    iterator = getIterator('test'),
    criterion = criterion,
  }
  confusion:updateValids()
end

engine.hooks.onStartEpoch = function(state)
  local epoch = state.epoch + 1
  print('===>' .. ' online epoch # ' .. epoch .. '[batchsize = 256]')
  meter:reset()
  confusion:zero()
  train_timer:reset()
  data_time_meter:reset()
end

engine.hooks.onEndEpoch = function(state)
  local train_loss = meter:value()
  confusion:updateValids()
  local train_acc = confusion.totalValid * 100
  local train_time = train_timer:time().real
  meter:reset()
  print(confusion)
  confusion:zero()
  test_timer:reset()

  local cache = state.params:clone() -- save the current parameters
  --state.params:copy(state.optim.ax)
  test()
  --state.params:copy(cache) -- restore the parameters

  log:set{
    train_loss = train_loss,
    train_acc = train_acc,
    data_loading_time = data_time_meter:value(),
    epoch = state.epoch,
    test_acc = confusion.totalValid * 100,
    train_time = train_time,
    test_time = test_timer:time().real,
  }
  log:flush()
end

engine.hooks.onUpdate = function(state)
  data_timer:reset() -- restart the data-loading timer after each parameter update
end
```

```lua
engine:train{
  network = fc,
  criterion = criterion,
  iterator = getIterator('train'),
  optimMethod = optim.sgd,
  config = {
    learningRate = 0.05,
    --weightDecay = 0.05,
    momentum = 0.9,
    --t0 = 1e+4,
    --eta0 = 0.1
  },
  maxepoch = 30,
}

-- Save the model
local modelpath = 'SiaVGG16_model.t7'
print('Saving to ' .. modelpath)
torch.save(modelpath, fc:float():clearState())
```

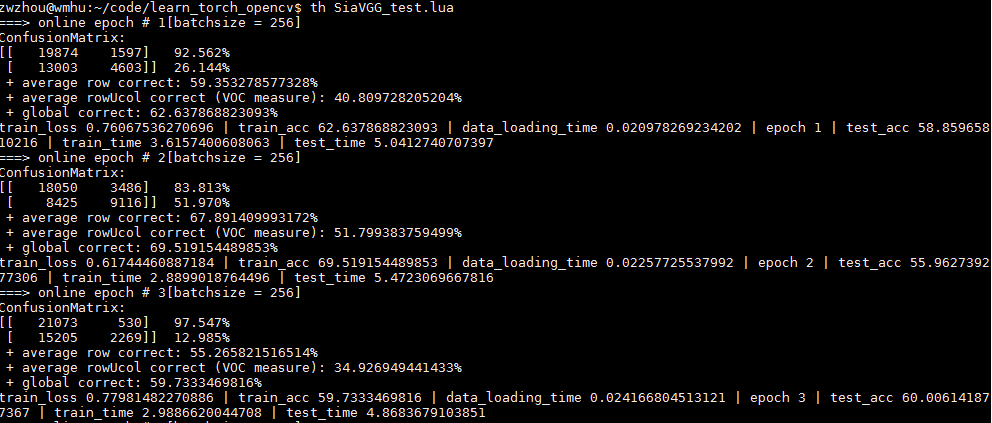

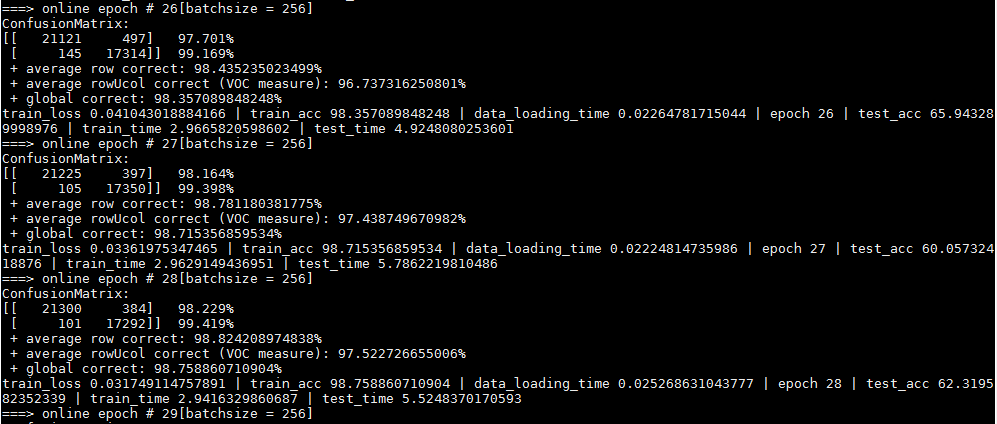

Output:

1493386765674.jpg

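The network turned out to overfit far too easily. One reason is that there is too little data. The other is that the video contains only a handful of people, so the patches are highly correlated and give the network little new information. Results should improve considerably when testing with more data.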
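One way to check how much this correlation inflates the test accuracy is to keep whole tracks out of the training set, so that the people seen at test time are never seen during training. This is not what the code above does; it is a minimal plain-Lua sketch of the idea, with a hypothetical helper `splitByTrack` and made-up records.

```lua
-- Hypothetical helper: put the last `testFraction` of the distinct track ids
-- (in order of first appearance) into the test set, the rest into the training set.
local function splitByTrack(records, testFraction)
  local ids, seen = {}, {}
  for _, r in ipairs(records) do
    if not seen[r.track] then
      seen[r.track] = true
      ids[#ids + 1] = r.track
    end
  end
  local numTest = math.max(1, math.floor(#ids * testFraction + 0.5))
  local isTest = {}
  for k = #ids - numTest + 1, #ids do isTest[ids[k]] = true end
  local train, test = {}, {}
  for _, r in ipairs(records) do
    local bucket = isTest[r.track] and test or train
    bucket[#bucket + 1] = r
  end
  return train, test
end

-- Made-up records: only the track id matters here
local records = {
  {track = 1}, {track = 1}, {track = 2}, {track = 3}, {track = 3}, {track = 4},
}
local train, test = splitByTrack(records, 0.25)
print(#train, #test)
```

Pairs would then be sampled separately inside each subset, so that no track id is shared between training and test pairs.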

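This code was mainly an exercise to get familiar with the torchnet package. My impressions:

Data preprocessing really is much more convenient.

With the provided Engine, training becomes simpler, but it is also too modular: things like fine-tuning only certain layers, or setting a different learning rate for each layer, are awkward to express.

Be especially careful when using an Iterator.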
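For reference, the per-layer learning rate mentioned above boils down to the following update, shown here as a plain-Lua sketch on made-up numbers with a hypothetical `sgdStep` helper. In Torch, `optim.sgd` reads an optional `learningRates` vector from its config for this purpose; whether simply passing it through the `config` table of `OptimEngine` is enough has not been verified here.

```lua
-- One plain SGD step in which every parameter group uses its own learning rate
local function sgdStep(groups)
  for _, g in ipairs(groups) do
    for i = 1, #g.params do
      g.params[i] = g.params[i] - g.lr * g.grads[i]
    end
  end
end

-- Made-up example: a small rate for pretrained layers, a larger one for the new head
local groups = {
  {name = 'pretrained', lr = 0.001, params = {1.0, 2.0}, grads = {0.5, -0.5}},
  {name = 'new_head', lr = 0.05, params = {1.0, 2.0}, grads = {0.5, -0.5}},
}
sgdStep(groups)
for _, g in ipairs(groups) do
  print(g.name, g.params[1], g.params[2])
end
```

With identical gradients, the pretrained group moves 50 times less than the new head, which is the usual motivation for fine-tuning with different rates per layer.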