Goal: record the time spent in each step of request handling so that the requests with long response times can be identified.

I. Timing points in nginx request processing

1: $time_local

The $time_local variable contains the time when the log entry is written. When the HTTP request header is read, nginx does a lookup of the associated virtual server configuration. If the virtual server is found, the request goes through six phases: server rewrite phase, location phase, location rewrite phase (which can bring the request back to the previous phase), access control phase, try_files phase, and log phase. Since the log phase is the last one, the $time_local variable is much closer to the end of the request than to its start.

In other words, $time_local is very close to the time at which request processing finishes.

2: $request_time

request processing time in seconds with a milliseconds resolution; time elapsed between the first bytes were read from the client and the log write after the last bytes were sent to the client

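In other words, $request_time runs from receiving the first byte of the client's request until the response data has been sent in full. It covers the time to receive the request data, the time the application takes to respond, and the time to send the response: everything from request to output.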

3: $upstream_response_time

keeps time spent on receiving the response from the upstream server; the time is kept in seconds with millisecond resolution. Times of several responses are separated by commas and colons like addresses in the $upstream_addr variable.

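This is the time from when nginx starts establishing a connection to the backend (Python) until it has received all the data and closed the connection. In other words, $upstream_response_time is the backend processing time.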

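Summary

From the descriptions above, $request_time is normally at least as large as $upstream_response_time. The gap is most noticeable when parameters are sent with POST, because by default nginx buffers the request body and only forwards it to the backend once it has been received in full. So when the client's network is poor or the payload is large, $request_time can be much larger than $upstream_response_time.

So, to use the nginx access log to find which endpoints are slow overall, use $request_time.

To use the nginx access log to find which backend logic is slow, use $upstream_response_time.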
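The difference between the two values can be computed directly from the log. The following is a minimal sketch, not a definitive tool: `time_gap` is a hypothetical helper name, and it assumes each log line has the URL in the fifth whitespace-separated field and ends with `[request_time] [upstream_response_time]`. Lines that do not carry exactly one numeric value for each timing (for example `-` when no upstream was used) are skipped.

```shell
#!/bin/sh
# Sketch: show how much of the total request time was spent outside the backend.
# Assumed line layout (hypothetical sample, fields split on whitespace):
#   ... METHOD URL PROTO ... [request_time] [upstream_response_time]
# so the URL is field 5 and the two timings are the last two fields.
time_gap() {
    awk '{
        rt = $(NF-1); ut = $NF
        gsub(/\[|\]/, "", rt)          # strip the surrounding brackets
        gsub(/\[|\]/, "", ut)
        # skip lines without a single numeric value (e.g. "-" for no upstream)
        if (rt !~ /^[0-9.]+$/ || ut !~ /^[0-9.]+$/) next
        # columns: request_time, upstream_response_time, gap, URL
        printf "%.3f %.3f %.3f %s\n", rt, ut, rt - ut, $5
    }' "$1"
}

# Usage (largest gaps first):
# time_gap /var/app_log/nginx/access.log | sort -k3,3nr | head -n 10
```

A large third column points at slow clients or large request bodies rather than slow backend code.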

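II. Configuring the log format

Now that we understand what each field means, we need to configure logging so that the information we need is written to the log.

1: Log format variables

The official descriptions of the log_format variables are as follows: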

$request_time – Full request time, starting when NGINX reads the first byte from the client and ending when NGINX sends the last byte of the response body

$upstream_connect_time – Time spent establishing a connection with an upstream server

$upstream_header_time – Time between establishing a connection to an upstream server and receiving the first byte of the response header

$upstream_response_time – Time between establishing a connection to an upstream server and receiving the last byte of the response body

$msec - time in seconds with a milliseconds resolution at the time of the log write

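Descriptions of other commonly used variables:

$remote_addr, $http_x_forwarded_for: the client IP address.

$remote_user: the client user name.

$request: the request line (method, URL, and HTTP protocol).

$status: the response status code.

$body_bytes_sent: the number of bytes sent to the client, not counting the response header. This variable is compatible with the "%B" parameter of the Apache module mod_log_config.

$bytes_sent: the total number of bytes sent to the client.

$connection: the connection serial number.

$connection_requests: the current number of requests made through a connection.

$msec: the time of the log write, in seconds with millisecond resolution.

$pipe: "p" if the request was sent via HTTP pipelining, "." otherwise.

$http_referer: the page from which the request was linked.

$http_user_agent: information about the client browser.

$request_length: the request length (including the request line, header, and body).

$request_time: the request processing time, in seconds with millisecond resolution, measured from reading the first bytes from the client until the log write after the last bytes have been sent to the client.

$time_iso8601: the local time in ISO 8601 format.

$time_local: the local time in Common Log Format.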
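Because $msec is taken at the log write and $request_time measures the span that ends at that same point, subtracting one from the other gives an approximate start time for each request. The sketch below illustrates this under an assumption: it expects a hypothetical log format whose lines begin with `$msec $request_time $request`, which is not the format used elsewhere in this article, and `request_start` is an illustrative name.

```shell
#!/bin/sh
# Sketch: estimate when each request started.
# Assumes a hypothetical log format whose lines begin with
#   $msec $request_time $request
# e.g. "1700000000.123 0.250 GET /api/orders HTTP/1.1".
request_start() {
    awk '{
        # $1 = log-write time (epoch seconds), $2 = request duration in seconds
        # columns printed: estimated start, log-write time, URL
        printf "%.3f %.3f %s\n", $1 - $2, $1, $4
    }' "$1"
}

# Usage:
# request_start /path/to/access.log | head
```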

2:服务器配置

配置路径 /etc/nginx/nginx.conf

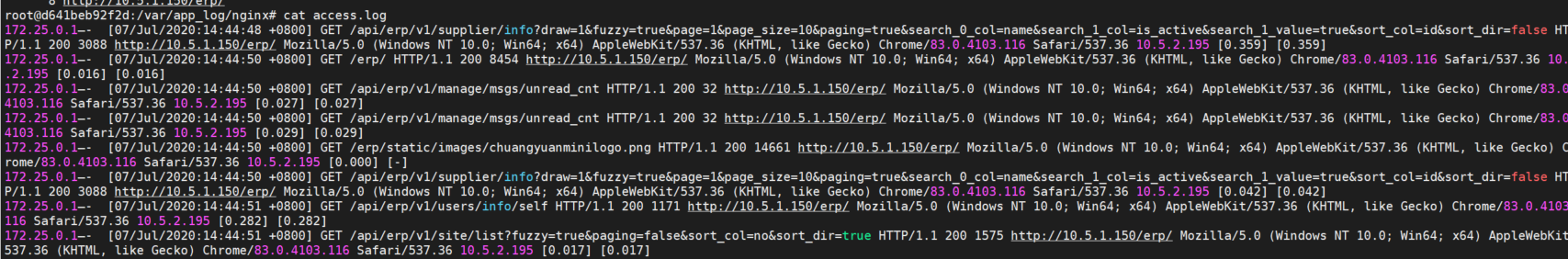

日志存储路径 /var/app_log/nginx/access.log

2.1修改当前nginx日志配置,以及查看日志记录结果

主要是添加 log_format 并配置access_log

    user www-data;
    worker_processes auto;
    pid /run/nginx.pid;
    include /etc/nginx/modules-enabled/*.conf;

    events {
        worker_connections 768;
        # multi_accept on;
    }

    http {
        ##
        # Basic Settings
        ##
        sendfile on;
        tcp_nopush on;
        tcp_nodelay on;
        keepalive_timeout 65;
        types_hash_max_size 2048;
        # server_tokens off;
        # server_names_hash_bucket_size 64;
        # server_name_in_redirect off;
        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        # custom format
        log_format myFormat '$remote_addr-$remote_user [$time_local] $request $status $body_bytes_sent $http_referer $http_user_agent $http_x_forwarded_for [$request_time] [$upstream_response_time]';
        # "combined" is the default log format
        access_log log/access.log myFormat;

        ##
        # SSL Settings
        ##
        ssl_protocols TLSv1 TLSv1.1 TLSv1.2; # Dropping SSLv3, ref: POODLE
        ssl_prefer_server_ciphers on;

        ##
        # Logging Settings
        ##
        access_log /var/app_log/nginx/access.log myFormat;
        error_log /var/app_log/nginx/error.log;

        ##
        # Gzip Settings
        ##
        gzip on;
        gzip_disable "msie6";
        # gzip_vary on;
        # gzip_proxied any;
        # gzip_comp_level 6;
        # gzip_buffers 16 8k;
        # gzip_http_version 1.1;
        # gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;

        ##
        # Virtual Host Configs
        ##
        include /etc/nginx/conf.d/*.conf;
        include /etc/nginx/sites-enabled/*;
    }

    #mail {
    #    # See sample authentication script at:
    #    # http://wiki.nginx.org/ImapAuthenticateWithApachePhpScript
    #
    #    # auth_http localhost/auth.php;
    #    # pop3_capabilities "TOP" "USER";
    #    # imap_capabilities "IMAP4rev1" "UIDPLUS";
    #
    #    server {
    #        listen localhost:110;
    #        protocol pop3;
    #        proxy on;
    #    }
    #
    #    server {
    #        listen localhost:143;
    #        protocol imap;
    #        proxy on;
    #    }
    #}

2.2 Reload nginx: locate the nginx executable and run it with -s reload. This reloads the configuration without shutting the nginx server down.

/usr/sbin/nginx -s reload

tail -n 10 /var/app_log/nginx/access.log
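A syntax error in the edited configuration is easier to catch before the reload than after it. As a sketch, the reload can be wrapped so that it only happens when `nginx -t` (the built-in configuration test) succeeds. The function name `safe_reload` is made up for this example, and the default binary path is the one used above.

```shell
#!/bin/sh
# Sketch: test the configuration first, reload only if the test passes.
# $1 (optional) = path to the nginx binary; defaults to /usr/sbin/nginx.
safe_reload() {
    nginx_bin=${1:-/usr/sbin/nginx}
    # "nginx -t" checks the configuration files without applying them
    "$nginx_bin" -t && "$nginx_bin" -s reload
}

# Usage (usually needs root privileges):
# safe_reload && tail -n 10 /var/app_log/nginx/access.log
```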

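3: Data analysis

Because the log format (the number of columns) has changed, back up the existing access.log and then empty it, so that later statistics only see lines in the new format.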
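One way to do the backup and reset is sketched below. The helper name `backup_and_clear` and the timestamped `.bak` suffix are choices made for this example. The file is truncated in place rather than deleted, so nginx can keep writing to the file it already has open.

```shell
#!/bin/sh
# Sketch: copy the log to a timestamped backup, then empty the original.
# $1 = path to the log file. Prints the backup path on success.
backup_and_clear() {
    log_file=$1
    backup="${log_file}.$(date +%Y%m%d%H%M%S).bak"
    # cp -p keeps the timestamps and mode; ": >" truncates without removing the file
    cp -p "$log_file" "$backup" && : > "$log_file" && printf '%s\n' "$backup"
}

# Usage:
# backup_and_clear /var/app_log/nginx/access.log
```

Lines written between the copy and the truncation are lost with this approach. If that matters, an alternative is to rename the file and then run nginx -s reopen so that nginx creates a fresh log file.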

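Shell commands used below:

command1 | command2: the pipe passes the output of command1 to command2 as its input

wc -l: count lines

uniq -c: prefix each output line with the number of times it occurred in the input (uniq only compares adjacent lines, so sort the input first)

uniq -u: show only lines that are not repeated

sort -nr: -n sorts by numeric value, -r reverses the order; -k selects which column to sort by

head -3: take the first three lines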
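The commands above are usually chained into one pipeline. A small sketch of the pattern follows, wrapped in a hypothetical `top_values` function and meant to be tried on any file with one value per line. The final awk step only normalizes the leading spaces that uniq -c adds.

```shell
#!/bin/sh
# Sketch: the "count and rank" pipeline built from sort, uniq -c, sort -nr and head.
# $1 = file with one value per line, $2 = how many top entries to show.
top_values() {
    sort "$1" |            # group identical lines next to each other
        uniq -c |          # collapse each group and prefix it with its count
        sort -nr |         # highest count first
        head -n "$2" |     # keep the top N
        awk '{print $1, $2}'
}

# Usage:
# printf '%s\n' /a /b /a /c /a /b > /tmp/urls.txt
# top_values /tmp/urls.txt 2
```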

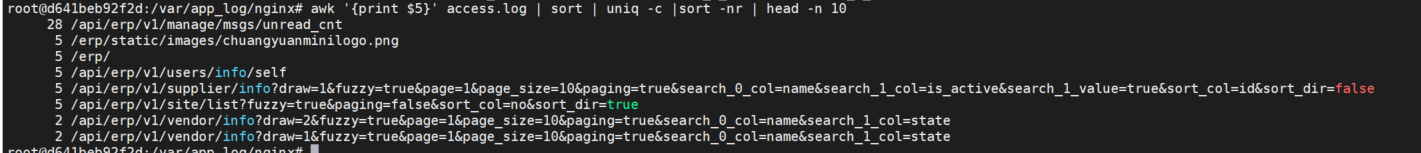

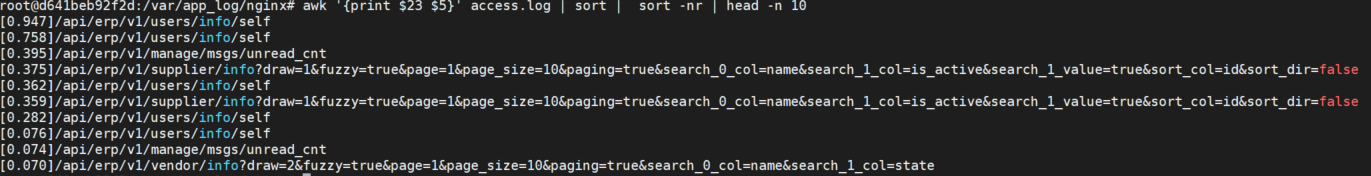

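Top ten slowest URLs. With the format above, [$request_time] is the second-to-last field; its position counted from the left varies with the number of words in the user agent, so it is addressed as $(NF-1). The brackets are stripped so that the values sort numerically.

awk '{t=$(NF-1); gsub(/\[|\]/, "", t); print t, $5}' access.log | sort -nr | head -n 10

Top ten most requested URLs

awk '{print $5}' access.log | sort | uniq -c | sort -nr | head -n 10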
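A single slow request can be an outlier, so it can also be useful to aggregate per URL. The following sketch computes the average and maximum request time and the request count for each URL. It assumes the same layout as the commands above (URL in field 5, `[request_time]` as the second-to-last field), and `url_stats` is an illustrative name.

```shell
#!/bin/sh
# Sketch: per-URL average and maximum request time, slowest average first.
# Assumes the URL is field 5 and "[request_time]" is the second-to-last field.
url_stats() {
    awk '{
        t = $(NF-1)
        gsub(/\[|\]/, "", t)               # strip the surrounding brackets
        if (t !~ /^[0-9.]+$/) next         # ignore lines without a numeric time
        n[$5]++
        sum[$5] += t
        if (t + 0 > max[$5]) max[$5] = t + 0
    }
    END {
        # columns: average, maximum, request count, URL
        for (u in n) printf "%.3f %.3f %d %s\n", sum[u] / n[u], max[u], n[u], u
    }' "$1" | sort -nr
}

# Usage:
# url_stats /var/app_log/nginx/access.log | head -n 10
```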