Hbase 作为 Hadoop 全家桶中,非常重要的存储组件,适用于海量数据的随机查询,使用是非常广泛的。

实时数仓项目使用 Kafka 作为数仓的基础表,我们也会把 Kafka 的数据往 Hbase 写一份,方便其他场景使用,比如:做维表

Flink Hbase 表默认使用 TableScan 一次性加载全量维表数据来关联,维表数据不能更新,适用场景比较少(由于 TableScan 完成后,算子状态为 Finish,导致任务不能完成 Checkpoint)

Flink Hbase 时态表 Lookup 功能(仅限于关联 Hbase 表主键,不支持非主键),支持缓存和透查外部系统,完美解决默认表维表数据不能更新和不能 完成 Checkpoint 的问题

关联 sql 如下

CREATE TEMPORARY TABLE hbase_behavior_conf ( rowkey STRING ,cf ROW(item_id STRING ,category_id STRING ,behavior STRING ,ts TIMESTAMP(3)) ) WITH ( 'connector' = 'hbase-2.2' ,'zookeeper.quorum' = 'thinkpad:12181' ,'table-name' = 'user_log' ,'lookup.cache.max-rows' = '10000' ,'lookup.cache.ttl' = '1 minute' -- ttl time 超过这么长时间无数据才行 ,'lookup.async' = 'true' ); INSERT INTO kakfa_join_mysql_demo(user_id, item_id, category_id, behavior, behavior_map, ts) SELECT a.user_id, a.item_id, a.category_id, a.behavior, c.cf.item_id, a.ts FROM user_log a left join hbase_behavior_conf FOR SYSTEM_TIME AS OF a.process_time AS c ON a.user_id = rowkey -- 主键 -- left join hbase_behavior_conf FOR SYSTEM_TIME AS OF a.process_time AS c ON a.user_id = cf.item_id --非主键 where a.behavior is not null;

关联非主键报错如下:

Caused by: java.lang.IllegalArgumentException: Currently, HBase table can only be lookup by single rowkey. at org.apache.flink.util.Preconditions.checkArgument(Preconditions.java:138) at org.apache.flink.connector.hbase2.source.HBaseDynamicTableSource.getLookupRuntimeProvider(HBaseDynamicTableSource.java:52) at org.apache.flink.table.planner.plan.utils.LookupJoinUtil.getLookupFunction(LookupJoinUtil.java:172) at org.apache.flink.table.planner.plan.nodes.physical.common.CommonPhysicalLookupJoin.explainTerms(CommonPhysicalLookupJoin.scala:168) at org.apache.calcite.rel.AbstractRelNode.getDigestItems(AbstractRelNode.java:409) at org.apache.calcite.rel.AbstractRelNode.deepHashCode(AbstractRelNode.java:391) at org.apache.calcite.rel.AbstractRelNode$InnerRelDigest.hashCode(AbstractRelNode.java:443) at java.util.HashMap.hash(HashMap.java:339) at java.util.HashMap.get(HashMap.java:557) at org.apache.calcite.plan.volcano.VolcanoPlanner.registerImpl(VolcanoPlanner.java:1150) at org.apache.calcite.plan.volcano.VolcanoPlanner.register(VolcanoPlanner.java:589) at org.apache.calcite.plan.volcano.VolcanoPlanner.ensureRegistered(VolcanoPlanner.java:604) at org.apache.calcite.plan.volcano.VolcanoRuleCall.transformTo(VolcanoRuleCall.java:148) ... 45 more

Flink 关联 Hbase 场景也有关联非主键的场景,刚开始用的时候,为了方便就直接实现一个 UDF,启动的时候加载全量的 Hbase 表数据到内存中(维表数据并不多),根据策略定期去Hbase 重新加载最新的数据。

受 Lookup Source 的启发,想实现一个关联 Hbase 非主键的 UDF,支持缓存和缓存失效透查 Hbase

需求如下:

1、UDF 关联 Hbase 表非主键

2、支持缓存时间和缓存数量的控制

3、关联键缓存有值就从缓存出

4、关联键缓存没有值就从 Hbase 查,结果放到缓存中

基于这些需求,实现了这样的一个 UDTF(由于 Hbase 表非主键可能有重复值,所以使用 Table Function,如果有多条数据,返回多条数据到 SQL 端处理)

## UDF 代码

@FunctionHint(output = new DataTypeHint("ROW<arr ARRAY<STRING>>")) def eval(key: String): Unit = { // if key is empty if (key == null || key.length == 0) { return } // insert val rowKind = RowKind.fromByteValue(0.toByte) val row = new Row(rowKind, 1) // get result from cache var list: ListBuffer[Array[String]] = cache.getIfPresent(key) if (list != null) { list.foreach(arr => { row.setField(0, arr) collect(row) }) return } // cache get nothing, query hbase list = queryHbase(key) if (list.length == 0) { // if get nothing return } // get result, add to cache cache.put(key, list) list.foreach(arr => { row.setField(0, arr) collect(row) }) // LOG.info("finish join key : " + key) } /** * query hbase * * @param key join key * @return query result row */ private def queryHbase(key: String): ListBuffer[Array[String]] = { val scan: Scan = new Scan(); qualifier.foreach(item => scan.addColumn(family, item)) val filter = new SingleColumnValueFilter(family, qualifier.head, CompareOperator.EQUAL, key.getBytes("UTF8")) scan.setFilter(filter) val resultScanner = table.getScanner(scan) val it = resultScanner.iterator() val list = new ListBuffer[Array[String]] // loop result while (it.hasNext) { val result = it.next() val arr = new Array[String](qualifier.length + 1) // add rowkey to result array var index = 0 val rowkey = new String(result.getRow) arr(index) = rowkey // add special qualify to result array qualifier.foreach(item => { val value = result.getValue(family, item) if (value != null) { index += 1 arr(index) = new String(value, "UTF8") } }) // add array to result list.+=(arr) } list }

## 测试 SQL

-- kafka source CREATE TABLE user_log ( user_id STRING ,item_id STRING ,category_id STRING ,behavior STRING ,ts TIMESTAMP(3) ,process_time as proctime() -- , WATERMARK FOR ts AS ts - INTERVAL '5' SECOND ) WITH ( 'connector' = 'kafka' ,'topic' = 'user_behavior' ,'properties.bootstrap.servers' = 'localhost:9092' ,'properties.group.id' = 'user_log' ,'scan.startup.mode' = 'group-offsets' ,'format' = 'json' ); ---sinkTable CREATE TABLE join_hbbase_sink ( user_id STRING ,item_id STRING ,category_id STRING ,behavior STRING ,ts TIMESTAMP(3) ,rowkey STRING ,c1 STRING ,c2 STRING ,c3 STRING ,c4 STRING ,primary key (user_id) not enforced ) WITH ( 'connector' = 'upsert-kafka' ,'topic' = 'user_behavior_1' ,'properties.bootstrap.servers' = 'localhost:9092' ,'properties.group.id' = 'user_log' ,'key.format' = 'csv' ,'value.format' = 'csv' ); INSERT INTO join_hbbase_sink SELECT a.user_id, a.item_id, a.category_id, a.behavior, a.ts, t2.col[1], t2.col[2], t2.col[3], t2.col[4], t2.col[5] FROM user_log a -- left join lateral table(udf_join_hbase_non_rowkey_no_cache(item_id)) as t2(col) on true left join lateral table(udf_join_hbase_non_rowkey_cache(item_id)) as t2(col) on true where a.item_id is not null -- and t2.col[1] = a.item_id -- 返回多条数据可以在 where 条件后面过滤 ;

## 测试Hbase 表

hbase(main):014:0> count 'user_info' Current count: 1000, row: 0999 Current count: 2000, row: 2 Current count: 3000, row: 3 Current count: 4000, row: 4 Current count: 5000, row: 5 Current count: 6000, row: 6 Current count: 7000, row: 7 Current count: 8000, row: 8 Current count: 9000, row: 9 9999 row(s) Took 0.5151 seconds => 9999 hbase(main):015:0> get 'user_info','0001' COLUMN CELL cf:c1 timestamp=1632311933302, value=0001 # 关联键 cf:c10 timestamp=1632311933302, value=xEDx9Ex99xDEx88xE6xB4x92xE6x90x9DxECx84x88xECxA0x89xE3x97xA6xEBxB3xAFxE6x9Bx91xE6xBCx9BxE8xB0x 81xE7x89xB2xE6xBEx94xECx8CxB8xEBx86xA6 cf:c2 timestamp=1632311933302, value=xE7x8FxADxE7x99x83xEDx80x98xE0xABx86xEBxB0x9AxE7xB8x8BxE7xA2xA8xE8x9FxBFxE8xBFx82xEBx9Fx86xEBx 8BxB8xE4x96x82xE7xBCxA0xEDx99xB8xE9xAEx89 cf:c3 timestamp=1632311933302, value=xE3x8AxA6xE8xABxA5xE7x80xA2xE3x83xAFxE3x9DxA5xECxBAx86xE4xA0xB7xEAxB4x83xE5xACx8DxE3x9FxB0xE6x B1xABxD6x8BxD7xB8xE2xBBxB4xE1xB5xA6 cf:c4 timestamp=1632311933302, value=xE3xA5x95xE9x80xA1xE8x9BxB5xE4xBBxB1xD8x90xE2x82xA7xECx98xA6xE6xA5xA0xEBxB1xBExEBxADx8AxE7xABx B3xE6xB7xB6xEAx89xA3xEDx92xBExE4xBCx9B cf:c5 timestamp=1632311933302, value=xE9xA0x91xE2x93x86xE6x86xA0xE1x85xA7xE5xB9x84xE7x9FxB7xE3x99x88xE2xAFx8FxE3x80x84xECxB9xADxE6x 92xB7xE3x91xB7xE9x94xB8xEAx85x9CxE2x90xAB cf:c6 timestamp=1632311933302, value=xE9xBFxBAxE2x9Ax80xEAx81x99xECx99xA1xE3xB1x83xE0xBFxA4xE7x8Cx8ExE2x9BxB5xE6xABxBDxE9xBBx9ExE8x 96xA2xEAx8ExAFxE5x98xBAxE3x83x8CxE1x91x8F cf:c7 timestamp=1632311933302, value=xEAx8Dx89xE9x87xACxEAxB9xBBxE3x93xBCxE4xABxAAxEBxA2x9BxE3x85x90xECx93xB9xE8x9Ax84xE2xBFx82xE7x A4xA7xEAxB5xB6xE2x8FxBCxE0xACx80xE6xB1xA9 cf:c8 timestamp=1632311933302, value=xE1x82x8FxE3xABxBExE7x92x93xE5x97xA0xE1xA0x93xC6xBExE0xB6xA5xE3xB4x93xECx9DxB3xC5x89xE3x98xB2x E8x91x8ExC2xA3xE4xB3x90xE5x87xA8 cf:c9 timestamp=1632311933302, value=xEAx80xB8xECxADx8FxEBx95x91xD8xB6xE8x90xAEx5CxE5x85x95xECx89x83xCCxAFxE5xA4x8BxE4x88x99xEBxBAx 88xE1xA4xB8xEAx9Ex99xE6x9Bx8F 1 row(s) Took 0.0446 seconds

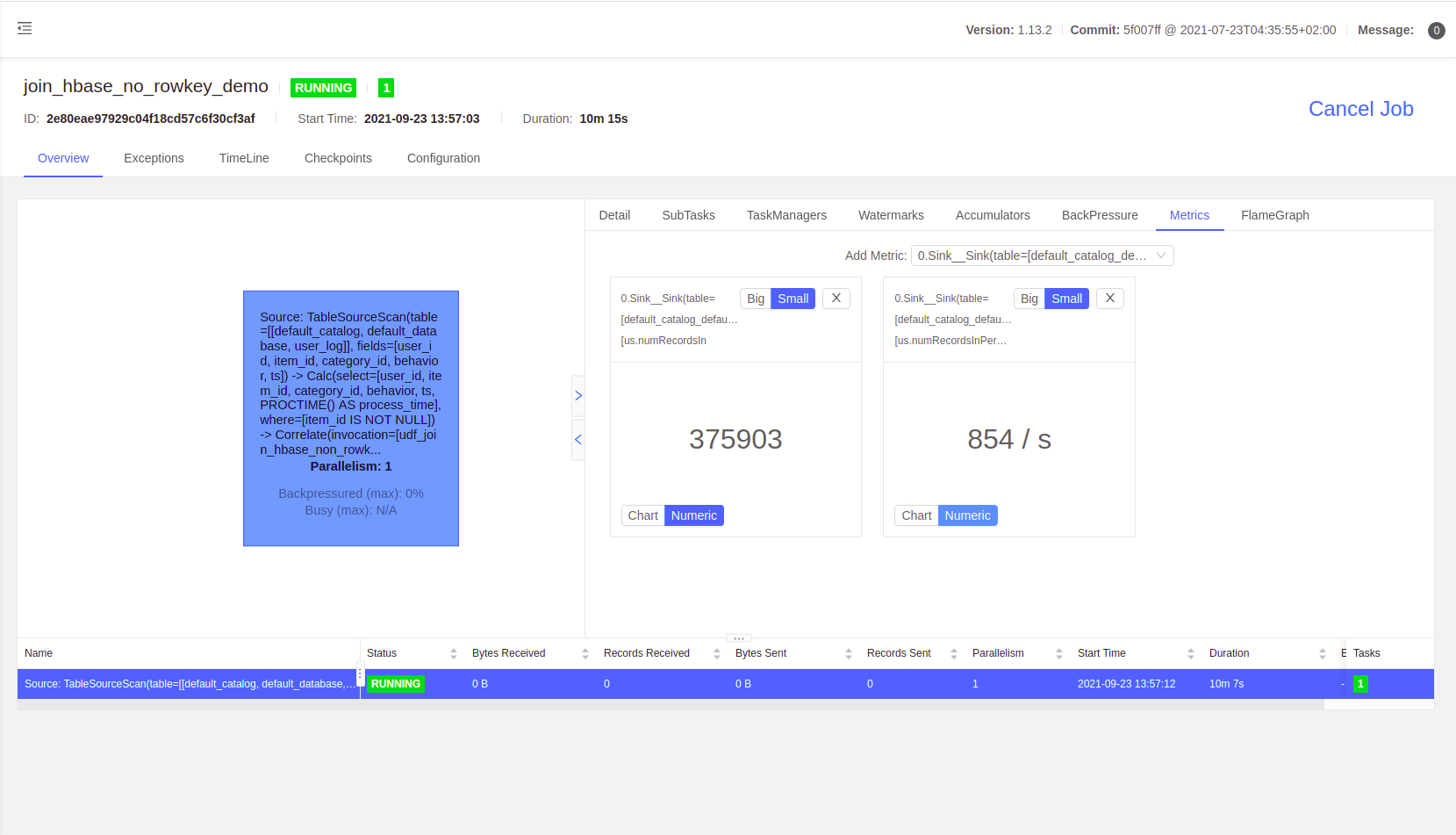

Flink 任务:

hbase 表: 10000 条数据,非主键关联测试,关联键值10000条不重复,缓存时间 10 min

笔记本 on yarn 测试 TPS: 850+ (基本可用,服务器环境应该会好很多,不够还可以加并行度)

改进点:

1、将 UDF 改为基于配置的

2、将关联字段改成支持多个字段

3、修改 Hbase connector 源码,支持关联非主键的 Lookup join

完整案例参考 GitHub: https://github.com/springMoon/sqlSubmit

欢迎关注Flink菜鸟公众号,会不定期更新Flink(开发技术)相关的推文