Practical Assignment 4

(1) Tomcat + Nginx load balancing with Docker Compose

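How nginx reverse proxying works

With a reverse proxy, the client is unaware of the proxy and needs no special configuration. The client simply sends its request to the reverse proxy server, which selects a target server, fetches the data, and returns it to the client. From the outside, the proxy and the target servers look like a single server: only the proxy's address is exposed, and the real servers' IP addresses stay hidden.

A forward proxy acts on behalf of the client (a VPN, for example); a reverse proxy acts on behalf of the server.

Use nginx to proxy a Tomcat cluster of two or more Tomcat instances.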

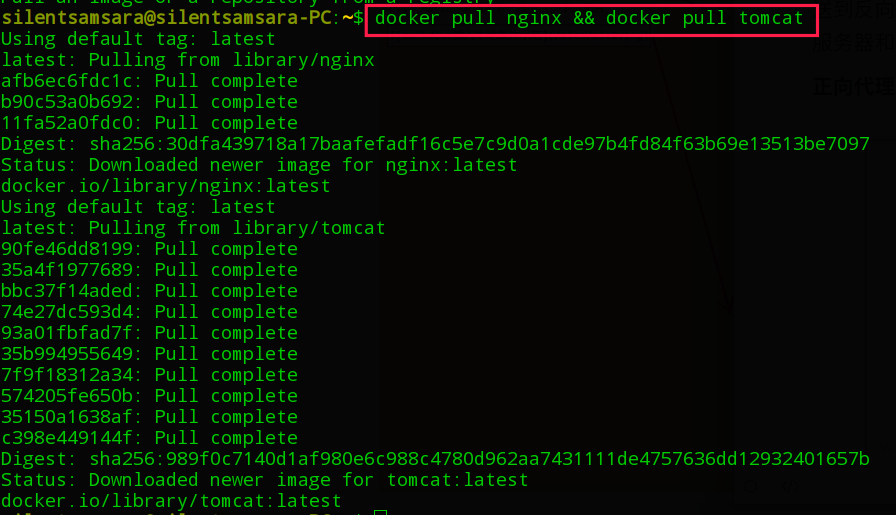

Pull the tomcat and nginx images:

    docker pull nginx && docker pull tomcat

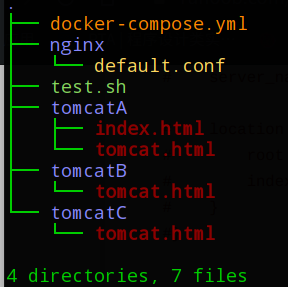

Create the project structure as shown.

Edit each file:

docker-compose.yml

    version: "3.8"
    services:
      nginx:
        image: nginx
        container_name: tm_niginx
        ports:
          - 8080:8081
        volumes:
          - ./nginx/default.conf:/etc/nginx/conf.d/default.conf
      tomcatA:
        hostname: tomcatA
        image: tomcat
        container_name: tomcatA
        volumes:
          - ./tomcatA:/usr/local/tomcat/webapps/ROOT
      tomcatB:
        hostname: tomcatB
        image: tomcat
        container_name: tomcatB
        volumes:
          - ./tomcatB:/usr/local/tomcat/webapps/ROOT
      tomcatC:
        hostname: tomcatC
        image: tomcat
        container_name: tomcatC
        volumes:
          - ./tomcatC:/usr/local/tomcat/webapps/ROOT

default.conf

    upstream tomcats {
        server tomcatA:8080 max_fails=3 fail_timeout=30s;
        server tomcatB:8080 max_fails=3 fail_timeout=30s;
        server tomcatC:8080 max_fails=3 fail_timeout=30s;
    }

    server {
        listen 8081;
        server_name localhost;

        location / {
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header Host $http_host;
            proxy_pass http://tomcats;
            proxy_redirect off;
        }
    }

tomcat.html (one file per Tomcat directory; X stands for A, B, or C)

    <title> Tomcat(X) </title><h1>This is Tomcat(X)</h1>

test.sh

    #!/bin/bash
    # Send 20 requests through the nginx proxy to see which Tomcat answers each one
    for ((i = 1; i <= 20; i++)); do
        curl http://127.0.0.1:8080/tomcat.html
    done

Run and verify

Start the services:

    docker-compose up -d

Verify in a browser:

    127.0.0.1:8080/tomcat.html

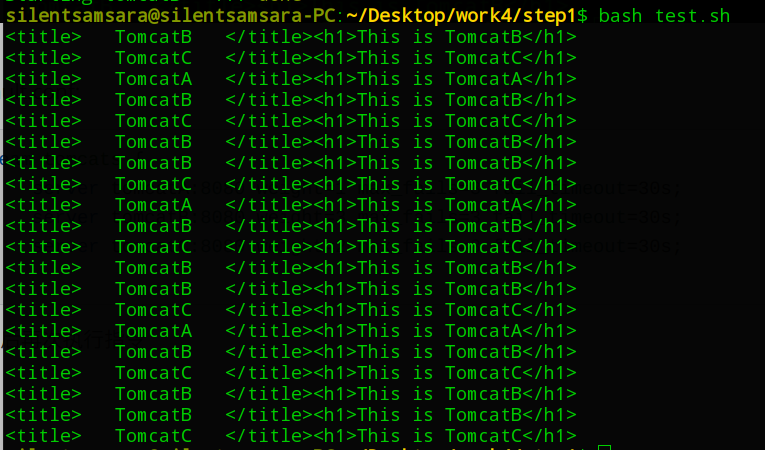

Learn about nginx's load-balancing strategies and implement at least two of them

Test command:

    bash test.sh

Default method: round robin

Weighted method

Modify the upstream block in default.conf:

    upstream tomcats {
        server tomcatA:8080 weight=1 max_fails=3 fail_timeout=30s;
        server tomcatB:8080 weight=3 max_fails=3 fail_timeout=30s;
        server tomcatC:8080 weight=2 max_fails=3 fail_timeout=30s;
    }

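Rebuild and restart the services, then run the test command again.

The responses now come most often from tomcatB (weight 3), then tomcatC (weight 2), then tomcatA (weight 1).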
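To check the distribution without reading 20 responses by eye, the output of test.sh can be tallied. The following is a minimal sketch and not part of the original assignment: the function name count_backends is hypothetical, and it assumes every response contains the text "This is Tomcat(X)" from tomcat.html.

```shell
#!/bin/bash
# Tally which backend answered each request.
# Reads concatenated curl output on stdin and prints "<backend> <count>" lines.
# Assumption: each response body contains "This is Tomcat(X)" as in tomcat.html.
count_backends() {
    grep -o 'This is Tomcat([A-Za-z0-9]*)' |
        sed 's/^This is //' |
        sort | uniq -c |
        awk '{ print $2, $1 }'
}

# Example usage (requires the services to be running):
#   bash test.sh | count_backends
```

With the weights 1, 3, and 2 the counts should be roughly in that ratio; with the default round-robin configuration they should be roughly equal.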


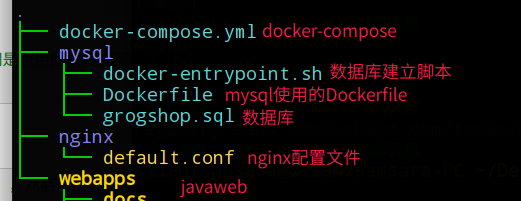

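(2) Deploying a Java web runtime environment with Docker Compose

Build the Tomcat, database, and other service images separately

Create the following directory structure:

Edit the main files: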

docker-compose.yml:

    version: "3.8"
    services:
      tomcat01:
        image: tomcat
        hostname: tomcat01
        container_name: tomcat01
        ports:
          - "5050:8080"
        volumes:  # mounted volumes
          - "./webapps:/usr/local/tomcat/webapps"
          - ./wait-for-it.sh:/wait-for-it.sh
        networks:
          webnet:
            ipv4_address: 15.22.0.15
      tomcat02:
        image: tomcat
        container_name: tomcat02
        ports:
          - "5051:8080"
        volumes:  # mounted volumes
          - "./webapps1:/usr/local/tomcat/webapps"
        networks:  # static IP on the network
          webnet:
            ipv4_address: 15.22.0.16
      my_mysql:
        build: ./mysql
        image: my_mysql:test
        container_name: my_mysql
        ports:
          - "3309:3306"
        command: ['--character-set-server=utf8mb4', '--collation-server=utf8mb4_unicode_ci']
        environment:
          MYSQL_ROOT_PASSWORD: "123456"
        networks:
          webnet:
            ipv4_address: 15.22.0.6
      nginx:
        image: nginx
        container_name: nginx-tomcat
        ports:
          - 8080:8080
        volumes:  # mounted volumes
          - ./nginx/default.conf:/etc/nginx/conf.d/default.conf
        tty: true
        stdin_open: true
        networks:
          webnet:
            ipv4_address: 15.22.0.7
    networks:
      webnet:
        driver: bridge  # bridge mode
        ipam:
          config:
            - subnet: 15.22.0.0/24  # subnet

default.conf:

    upstream tomcats {
        server tomcat01:8080 max_fails=3 fail_timeout=30s;
        server tomcat02:8080 max_fails=3 fail_timeout=30s;
    }

    server {
        listen 8080;
        server_name localhost;

        location / {
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header Host $http_host;
            proxy_pass http://tomcats;
            proxy_redirect off;
        }
    }

其他文件与作者的差不多,作者博客,只需要根据作者博客的要求改一下配置如下:

-

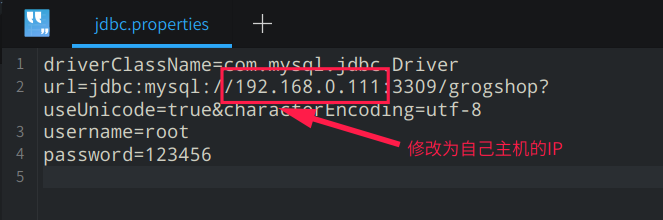

进入目录:./webapps/ssmgrogshop_war/WEB-INF/classes,修改其中jdbc.properties,如下

-

-

-

-

Successfully deploy the Java web application, including simple database operations

Start the services:

    docker-compose up -d

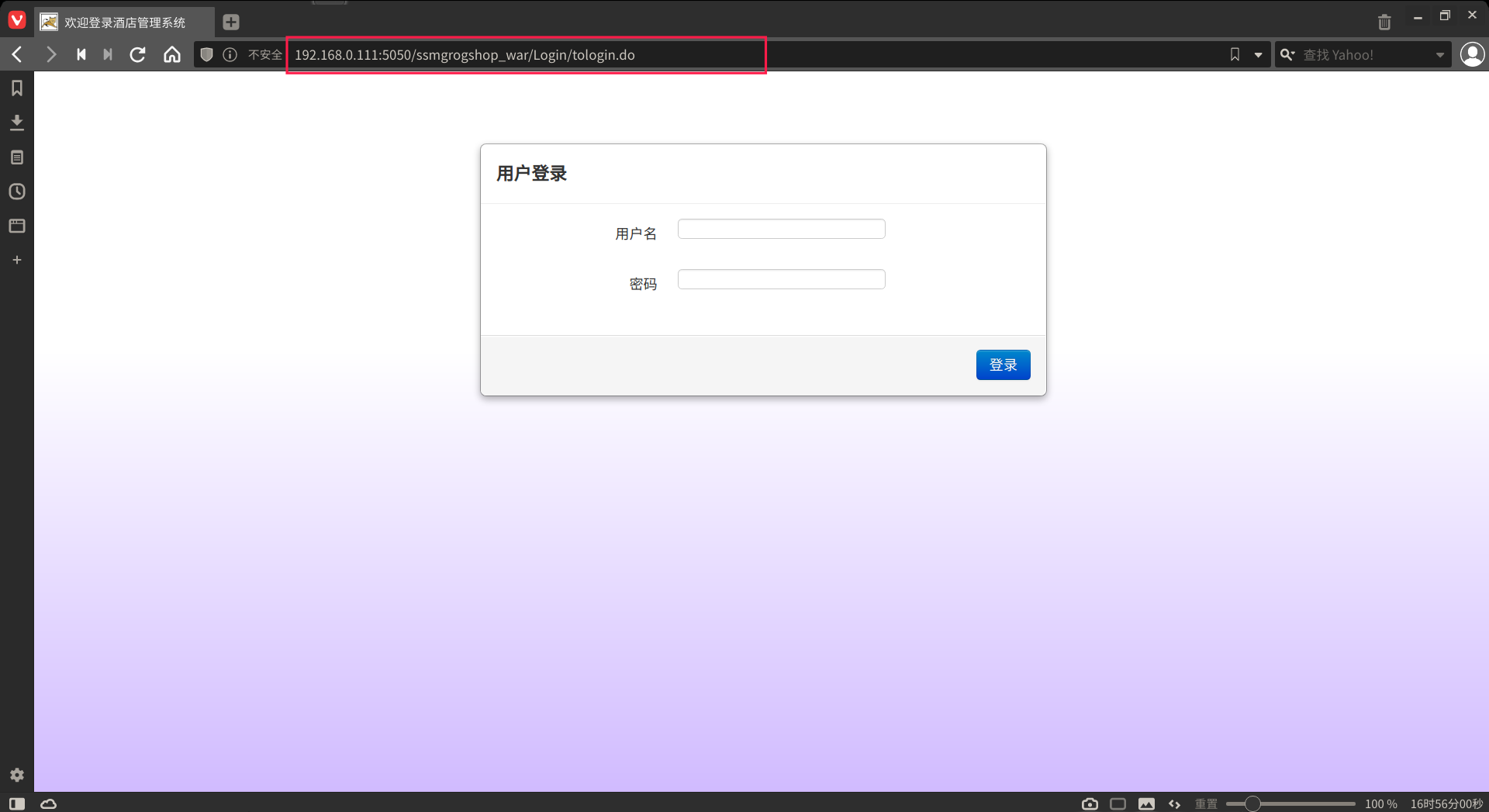

Open the following address in a browser:

    http://192.168.0.111:5050/ssmgrogshop_war/Login/tologin.do

Log in with username sa and password 123.

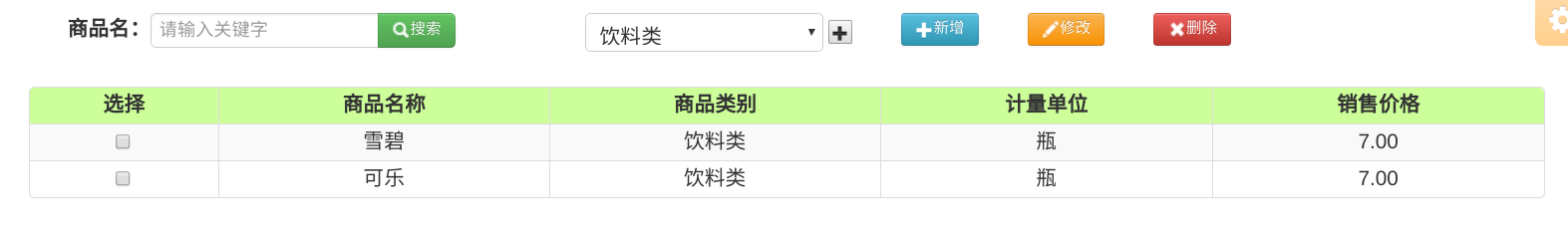

Simple data operations

Query:

Delete:

Add:

The operations succeed.

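Add an nginx reverse proxy service to the environment above to achieve load balancing.

Modify the nginx configuration file default.conf, changing the upstream block to:

    upstream tomcats {
        server tomcat01:8080 weight=3 max_fails=3 fail_timeout=30s;
        server tomcat02:8080 weight=1 max_fails=3 fail_timeout=30s;
    }

This uses the weighted method.

Rebuild and restart the services to apply the change.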
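As a quick worked example of what the weights mean, each server's long-run share of requests is its weight divided by the sum of all weights, provided every server stays healthy. The sketch below only does that arithmetic; the function name expected_share and its name=weight argument format are hypothetical and not part of nginx or of the original assignment.

```shell
#!/bin/bash
# Print each server's expected share of requests in percent (rounded down).
# Arguments are "name=weight" pairs, e.g. tomcat01=3 tomcat02=1.
expected_share() {
    local total=0 pair
    # First pass: sum all weights.
    for pair in "$@"; do
        total=$(( total + ${pair#*=} ))
    done
    # Second pass: weight / total, expressed as a percentage.
    for pair in "$@"; do
        echo "${pair%%=*} $(( ${pair#*=} * 100 / total ))"
    done
}

expected_share tomcat01=3 tomcat02=1
```

For the weights 3 and 1 this prints 75 for tomcat01 and 25 for tomcat02, so about three of every four requests should reach tomcat01.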

(3) Building a big data cluster environment with Docker

Ubuntu with JDK 8

Pull the ubuntu image:

    docker pull ubuntu

Run the image, sharing the local folder /home/admin/build with the container:

    cd /home/admin/
    mkdir build
    docker run -it -v /home/admin/build:/root/build --name ubuntu ubuntu

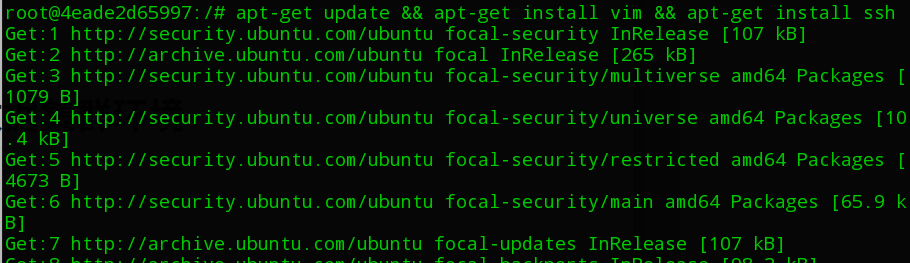

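Inside the ubuntu container, install the required software:

    apt-get update && apt-get install vim && apt-get install ssh

Make ssh start automatically by editing ~/.bashrc:

    vim ~/.bashrc

Append the following line at the end:

    /etc/init.d/ssh start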

配置sshd

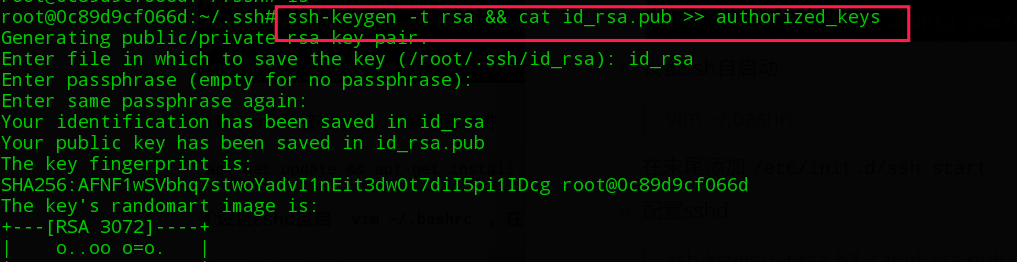

ssh-keygen -t rsa && cat id_rsa.pub >> authorized_keys

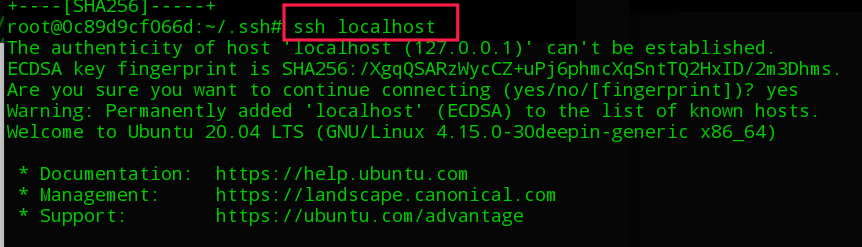

使用

ssh localhost测试:

-

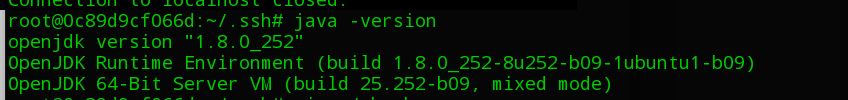

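Install JDK 8:

    apt-get install openjdk-8-jdk

Test the installation:

    java -version

Configure the environment variables by editing ~/.bashrc:

    vim ~/.bashrc

Append the following at the end:

    export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/
    export PATH=$PATH:$JAVA_HOME/bin

Save the image (0c8 is the leading part of this container's ID; use your own):

    docker commit 0c8 ubuntu/openjdk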

带Hadoop的Ubuntu

-

下载Hadoop-3.1.3.tar.gz至宿主主机的/home/admin/build

-

进入容器执行

cd /root/build/

tar -zxvf hadoop-3.1.3.tar.gz -C /usr/local

cd /usr/local/

mv hadoop-3.1.3 hadoop

vim ~/.bashrc

- 在末尾加入:

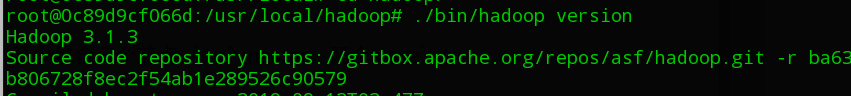

export HADOOP_HOME=/usr/local/hadoop export PATH=$PATH:$HADOOP_HOME/bin export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop- 验证Hadoop指令

./bin/hadoop version

-

Edit hadoop-env.sh:

    vim /usr/local/hadoop/etc/hadoop/hadoop-env.sh

Add:

    export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/

Edit core-site.xml:

    vim /usr/local/hadoop/etc/hadoop/core-site.xml

    <configuration>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>file:/usr/local/hadoop/tmp</value>
            <description>A base for other temporary directories.</description>
        </property>
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://master:9000</value>
        </property>
    </configuration>

Edit hdfs-site.xml:

    vim /usr/local/hadoop/etc/hadoop/hdfs-site.xml

    <configuration>
        <property>
            <name>dfs.replication</name>
            <value>3</value>
        </property>
        <property>
            <name>dfs.name.dir</name>
            <value>/usr/local/hadoop/hdfs/name</value>
        </property>
        <property>
            <name>dfs.data.dir</name>
            <value>/usr/local/hadoop/hdfs/data</value>
        </property>
    </configuration>

Edit mapred-site.xml:

    vim /usr/local/hadoop/etc/hadoop/mapred-site.xml

    <configuration>
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
        </property>
        <property>
            <name>mapreduce.application.classpath</name>
            <value>$HADOOP_HOME/share/hadoop/mapreduce/*:$HADOOP_HOME/share/hadoop/mapreduce/lib/*</value>
        </property>
    </configuration>

Edit yarn-site.xml:

    vim /usr/local/hadoop/etc/hadoop/yarn-site.xml

    <configuration>
        <!-- Site specific YARN configuration properties -->
        <property>
            <name>yarn.nodemanager.aux-services</name>
            <value>mapreduce_shuffle</value>
        </property>
        <property>
            <name>yarn.resourcemanager.hostname</name>
            <value>master</value>
        </property>
        <property>
            <name>yarn.nodemanager.env-whitelist</name>
            <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_HOME</value>
        </property>
    </configuration>

Save the image:

    docker commit 0c8 ubuntu/hadoop_installed

Complete the distributed Hadoop cluster configuration with at least three nodes (one master and two slaves)

Start three containers from the ubuntu/hadoop_installed image:

    # Terminal 1
    docker run -it -h master --name master ubuntu/hadoop_installed bash
    # Terminal 2
    docker run -it -h slave01 --name slave01 ubuntu/hadoop_installed bash
    # Terminal 3
    docker run -it -h slave02 --name slave02 ubuntu/hadoop_installed bash

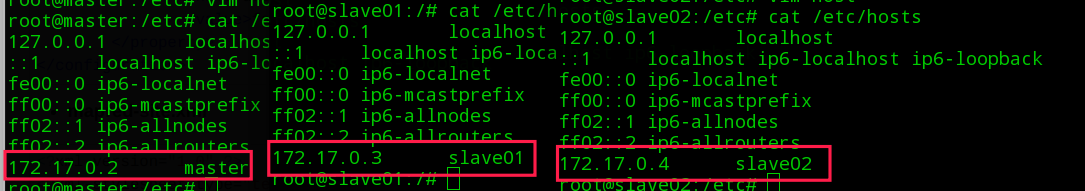

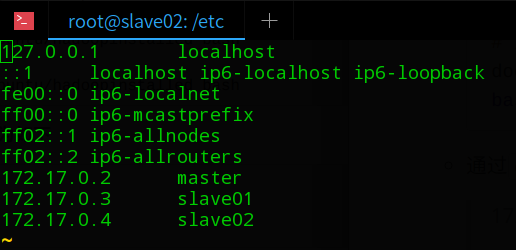

Run cat /etc/hosts in each container to obtain the three IP addresses:

    172.17.0.2 master
    172.17.0.3 slave01
    172.17.0.4 slave02

Write these address mappings into /etc/hosts in each of the three containers.
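Editing /etc/hosts by hand in three containers is easy to get wrong, so the step can be scripted. This is a minimal sketch under stated assumptions: the helper name add_hosts is hypothetical, and the addresses are the ones listed above, which may differ on another machine.

```shell
#!/bin/bash
# Append "IP hostname" pairs to a hosts file, skipping hostnames already present.
# Usage: add_hosts FILE IP NAME [IP NAME ...]
add_hosts() {
    local file=$1
    shift
    while [ "$#" -ge 2 ]; do
        # Only append when no existing line already mentions this hostname.
        if ! grep -qwF -- "$2" "$file" 2>/dev/null; then
            printf '%s %s\n' "$1" "$2" >> "$file"
        fi
        shift 2
    done
}

# Example usage inside each container (addresses taken from the listing above):
#   add_hosts /etc/hosts 172.17.0.2 master 172.17.0.3 slave01 172.17.0.4 slave02
```

Each container already has an entry for its own hostname, which is why existing names are skipped. The entries may need to be added again if a container is restarted.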

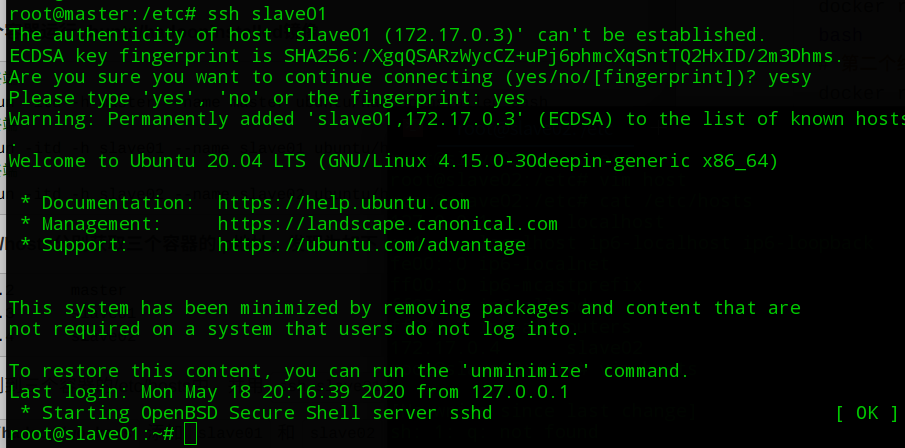

On master, verify with ssh slave01 and ssh slave02.

Successfully run the example bundled with Hadoop.

Add the slave machines to the workers file:

vim /usr/local/hadoop/etc/hadoop/workers
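The source does not show the contents of the workers file. Hadoop 3 expects one worker hostname per line, so a plausible version lists the two slaves. The sketch below writes such a file; the helper name write_workers is hypothetical, and the hostnames slave01 and slave02 are an assumption based on the containers started earlier.

```shell
#!/bin/bash
# Write one worker hostname per line to the given file, replacing its contents.
# Usage: write_workers FILE HOST [HOST ...]
write_workers() {
    local file=$1
    shift
    printf '%s\n' "$@" > "$file"
}

# Example usage on master (hostnames are an assumption, see above):
#   write_workers /usr/local/hadoop/etc/hadoop/workers slave01 slave02
```

Listing only the slaves means master runs no DataNode or NodeManager; add master to the list if it should also store data and run tasks.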

A few small changes to hadoop-env.sh, so that the daemons can be started as root:

    vim /usr/local/hadoop/etc/hadoop/hadoop-env.sh

Add:

    export HDFS_NAMENODE_USER=root
    export HDFS_DATANODE_USER=root
    export HDFS_SECONDARYNAMENODE_USER=root
    export YARN_RESOURCEMANAGER_USER=root
    export YARN_NODEMANAGER_USER=root

On the master terminal, go to /usr/local/hadoop and run:

    bin/hdfs namenode -format
    sbin/start-all.sh

Run the Hadoop example program

Place the input files in HDFS:

    ./bin/hdfs dfs -mkdir -p /user/root/input
    ./bin/hdfs dfs -put ./etc/hadoop/*.xml input
    ./bin/hdfs dfs -ls /user/root/input

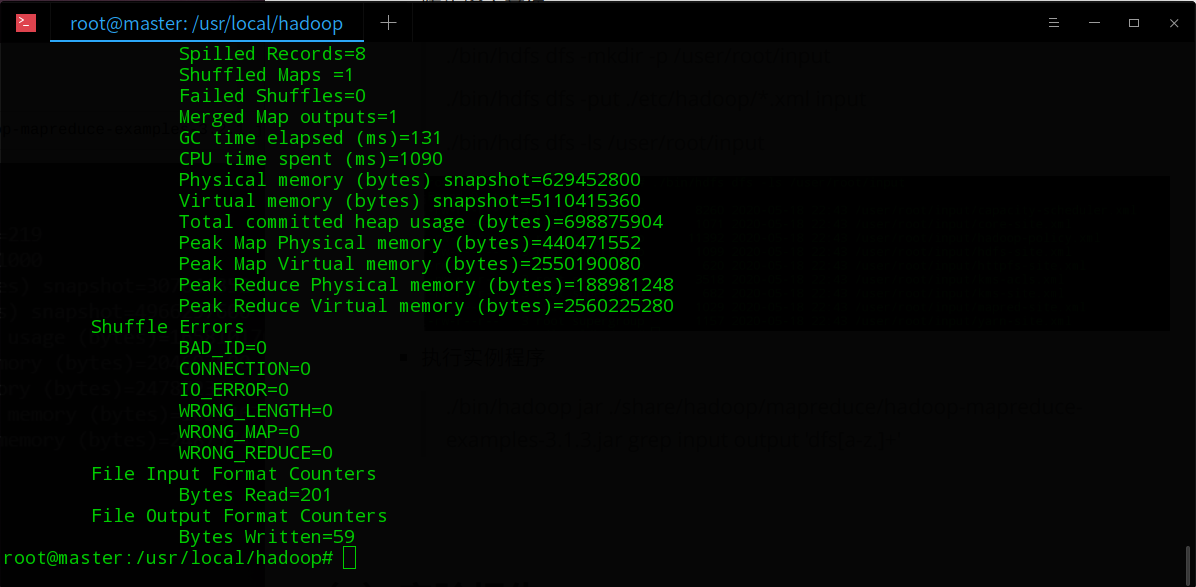

Run the example program:

    ./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar grep input output 'dfs[a-z.]+'