在上一篇的博客讲述了SSD的原理,这一篇主要是讲解keras的实现。

keras代码的github地址为:点击打开链接

model 的框架实现(ssd.py):

先给出了改变后的VGG16的实现:

- def SSD300(input_shape, num_classes=21):

- #Input_shape 为输入的形状(300,300,3)

- #num_class 为需要检测的种类。

- # Block 1

- input_tensor = input_tensor = Input(shape=input_shape)

- img_size = (input_shape[1], input_shape[0])

- net['input'] = input_tensor

- net['conv1_1'] = Convolution2D(64, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv1_1')(net['input'])

- net['conv1_2'] = Convolution2D(64, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv1_2')(net['conv1_1'])

- net['pool1'] = MaxPooling2D((2, 2), strides=(2, 2), border_mode='same',

- name='pool1')(net['conv1_2'])

- # Block 2

- net['conv2_1'] = Convolution2D(128, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv2_1')(net['pool1'])

- net['conv2_2'] = Convolution2D(128, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv2_2')(net['conv2_1'])

- net['pool2'] = MaxPooling2D((2, 2), strides=(2, 2), border_mode='same',

- name='pool2')(net['conv2_2'])

- # Block 3

- net['conv3_1'] = Convolution2D(256, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv3_1')(net['pool2'])

- net['conv3_2'] = Convolution2D(256, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv3_2')(net['conv3_1'])

- net['conv3_3'] = Convolution2D(256, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv3_3')(net['conv3_2'])

- net['pool3'] = MaxPooling2D((2, 2), strides=(2, 2), border_mode='same',

- name='pool3')(net['conv3_3'])

- # Block 4

- net['conv4_1'] = Convolution2D(512, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv4_1')(net['pool3'])

- net['conv4_2'] = Convolution2D(512, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv4_2')(net['conv4_1'])

- net['conv4_3'] = Convolution2D(512, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv4_3')(net['conv4_2'])

- net['pool4'] = MaxPooling2D((2, 2), strides=(2, 2), border_mode='same',

- name='pool4')(net['conv4_3'])

- # Block 5

- net['conv5_1'] = Convolution2D(512, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv5_1')(net['pool4'])

- net['conv5_2'] = Convolution2D(512, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv5_2')(net['conv5_1'])

- net['conv5_3'] = Convolution2D(512, 3, 3,

- activation='relu',

- border_mode='same',

- name='conv5_3')(net['conv5_2'])

- net['pool5'] = MaxPooling2D((3, 3), strides=(1, 1), border_mode='same',

- name='pool5')(net['conv5_3'])

- # FC6

- net['fc6'] = AtrousConvolution2D(1024, 3, 3, atrous_rate=(6, 6),

- activation='relu', border_mode='same',

- name='fc6')(net['pool5'])

- # FC7

- net['fc7'] = Convolution2D(1024, 1, 1, activation='relu',

- border_mode='same', name='fc7')(net['fc6'])

- # Block 6

- net['conv6_1'] = Convolution2D(256, 1, 1, activation='relu',

- border_mode='same',

- name='conv6_1')(net['fc7'])

- net['conv6_2'] = Convolution2D(512, 3, 3, subsample=(2, 2),

- activation='relu', border_mode='same',

- name='conv6_2')(net['conv6_1'])

- # Block 7

- net['conv7_1'] = Convolution2D(128, 1, 1, activation='relu',

- border_mode='same',

- name='conv7_1')(net['conv6_2'])

- net['conv7_2'] = ZeroPadding2D()(net['conv7_1'])

- net['conv7_2'] = Convolution2D(256, 3, 3, subsample=(2, 2),

- activation='relu', border_mode='valid',

- name='conv7_2')(net['conv7_2'])

- # Block 8

- net['conv8_1'] = Convolution2D(128, 1, 1, activation='relu',

- border_mode='same',

- name='conv8_1')(net['conv7_2'])

- net['conv8_2'] = Convolution2D(256, 3, 3, subsample=(2, 2),

- activation='relu', border_mode='same',

- name='conv8_2')(net['conv8_1'])

- # Last Pool

- net['pool6'] = GlobalAveragePooling2D(name='pool6')(net['conv8_2'])

标红部分就是进行改变的部分,可以看出把FC6换成了空洞卷积,和普通卷积差不多,就是把一次卷积的感受域扩大了。FC7换成了普通卷积,之后再添加了几个卷积块。

接下来就是通过改变后的VGG16得到的多层feature map来预测location 和 confidence。使用到的feature map 有:conv4_3、fc7、conv6_2、conv7_2、conv8_2、pool6。总共6层的feature map。因为对于每层的处理步骤差不多,所以就贴出conv4_3处理的代码:

- # Prediction from conv4_3

- net['conv4_3_norm'] = Normalize(20, name='conv4_3_norm')(net['conv4_3'])

- num_priors = 3

- x = Convolution2D(num_priors * 4, 3, 3, border_mode='same',

- name='conv4_3_norm_mbox_loc')(net['conv4_3_norm'])

- net['conv4_3_norm_mbox_loc'] = x

- flatten = Flatten(name='conv4_3_norm_mbox_loc_flat')

- net['conv4_3_norm_mbox_loc_flat'] = flatten(net['conv4_3_norm_mbox_loc'])

- name = 'conv4_3_norm_mbox_conf'

- if num_classes != 21:

- name += '_{}'.format(num_classes)

- x = Convolution2D(num_priors * num_classes, 3, 3, border_mode='same',

- name=name)(net['conv4_3_norm'])

- net['conv4_3_norm_mbox_conf'] = x

- flatten = Flatten(name='conv4_3_norm_mbox_conf_flat')

- net['conv4_3_norm_mbox_conf_flat'] = flatten(net['conv4_3_norm_mbox_conf'])

- priorbox = PriorBox(img_size, 30.0, aspect_ratios=[2],

- variances=[0.1, 0.1, 0.2, 0.2],

- name='conv4_3_norm_mbox_priorbox')

- net['conv4_3_norm_mbox_priorbox'] = priorbox(net['conv4_3_norm'])

可以看出对于conv4_3这层的feature map,采用的default box 的个数为3。所以location预测这个卷积层使用的卷积核个数为:3*4=12个。卷积完之后进行flatten,因为最后的输出是多层feature map预测的concatenate。同理,对于confidence预测采用的卷积核个数为:21*3=36(对于voc数据集而言)。对于PriorBox这一层,目前只需要知道它是对feature map 进行相应的操作,来得到default box的,而且对于特定的一层feature map而言,它是固定不变的,不随train或者predict的过程改变的。

对于pool6产生的feature map处理有一些不一样,这里单独的拿出来说一下,因为pool6层使用的是globa laverage pool,所以它输出的大小为1*1*256,比较小,不太适合用卷积处理了,就直接用Dense层来处理了:

- # Prediction from pool6

- num_priors = 6

- x = Dense(num_priors * 4, name='pool6_mbox_loc_flat')(net['pool6'])

- net['pool6_mbox_loc_flat'] = x

- name = 'pool6_mbox_conf_flat'

- if num_classes != 21:

- name += '_{}'.format(num_classes)

- x = Dense(num_priors * num_classes, name=name)(net['pool6'])

- net['pool6_mbox_conf_flat'] = x

- priorbox = PriorBox(img_size, 276.0, max_size=330.0, aspect_ratios=[2, 3],

- variances=[0.1, 0.1, 0.2, 0.2],

- name='pool6_mbox_priorbox')

- if K.image_dim_ordering() == 'tf':

- target_shape = (1, 1, 256)

- else:

- target_shape = (256, 1, 1)

- net['pool6_reshaped'] = Reshape(target_shape,

- name='pool6_reshaped')(net['pool6'])

- net['pool6_mbox_priorbox'] = priorbox(net['pool6_reshaped'])

每层预测完事之后呢,当然是把他们都给concatenate起来,就贴location的实现,其他两个类似:

- net['mbox_loc'] = merge([net['conv4_3_norm_mbox_loc_flat'],

- net['fc7_mbox_loc_flat'],

- net['conv6_2_mbox_loc_flat'],

- net['conv7_2_mbox_loc_flat'],

- net['conv8_2_mbox_loc_flat'],

- net['pool6_mbox_loc_flat']],

- mode='concat', concat_axis=1, name='mbox_loc')

因为之前进行了flatten,所以concatenate得到的是一个batch中每个sample所有的location位置,并且是一个一维的形式存在,需要把它给重新reshape成[batch, number of default box, 4 ]的形式;预测的class分类也是类似的:[batch, number of default box, 21 ]。最后再将location、class、default box三者进行merge得到最终的预测结果。

- #计算default box 的个数

- if hasattr(net['mbox_loc'], '_keras_shape'):

- num_boxes = net['mbox_loc']._keras_shape[-1] // 4

- elif hasattr(net['mbox_loc'], 'int_shape'):

- num_boxes = K.int_shape(net['mbox_loc'])[-1] // 4

- net['mbox_loc'] = Reshape((num_boxes, 4),

- name='mbox_loc_final')(net['mbox_loc'])

- net['mbox_conf'] = Reshape((num_boxes, num_classes),

- name='mbox_conf_logits')(net['mbox_conf'])

- net['mbox_conf'] = Activation('softmax',

- name='mbox_conf_final')(net['mbox_conf'])

- net['predictions'] = merge([net['mbox_loc'],

- net['mbox_conf'],

- net['mbox_priorbox']],

- mode='concat', concat_axis=2,

- name='predictions')

我们来计算一下这六层feature map总共拥有的default box的数量:38*38*3+19*19*6+10*10*6+5*5*6+3*3*6+1*1*6=7308。和论文中还是存在一定的差别的。

接一下就是介绍一下model中使用到的PriorBox层的作用。它是作用在每一层的feature map上的,根据输入的不同aspect ratio 和 scale 以及 num_prior来返回特定的default box,default box 的数目是feature map的height*width*num_prior。具体看代码:

- class PriorBox(Layer):

- '''

- img_size: 输入图片的大小(w, h).

- min_size: 每个feature cell中最小的scale,不是归一化后的值,而是实际的大小

- max_size: 每个feature cell中最大的scale,不是归一化的值,而是实际的大小

- aspect_ratios: 长宽比

- flip:是否需要对长宽比进行反转。

- variances: 添加的方差x,y,w,h

- clip: 让输出保持在[0,1之间

- 输入的shape:

- `4D的tensor:(samples, rows, cols, channels)

- 输出的shape:

- 3D的tensor:(samples, num_boxes, 8)

- 其中的8具体为:(xmin, ymin, xmax, ymax, variance[0], variance[1], variance[2], variance[3])

- """

- def __init__(self, img_size, min_size, max_size=None, aspect_ratios=None,

- flip=True, variances=[0.1], clip=True, **kwargs):

- self.waxis = 2

- self.haxis = 1

- self.img_size = img_size

- if min_size <= 0:

- raise Exception('min_size must be positive.')

- self.min_size = min_size

- self.max_size = max_size

- self.aspect_ratios = [1.0]

- if max_size:

- if max_size < min_size:

- raise Exception('max_size must be greater than min_size.')

- self.aspect_ratios.append(1.0)

- #根据给定的aspect_ratio来计算全部的aspect ratio

- if aspect_ratios:

- for ar in aspect_ratios:

- if ar in self.aspect_ratios:

- continue

- self.aspect_ratios.append(ar)

- if flip:

- self.aspect_ratios.append(1.0 / ar)

- self.variances = np.array(variances)

- self.clip = True

- super(PriorBox, self).__init__(**kwargs)

- #用于返回自定义层的输出shape

- def compute_output_shape(self, input_shape):

- num_priors_ = len(self.aspect_ratios)

- layer_width = input_shape[self.waxis]

- layer_height = input_shape[self.haxis]

- num_boxes = num_priors_ * layer_width * layer_height

- return (input_shape[0], num_boxes, 8)

- def call(self, x, mask=None):

- if hasattr(x, '_keras_shape'):

- input_shape = x._keras_shape

- elif hasattr(K, 'int_shape'):

- input_shape = K.int_shape(x)

- layer_width = input_shape[self.waxis]

- layer_height = input_shape[self.haxis]

- img_width = self.img_size[0]

- img_height = self.img_size[1]

- # define prior boxes shapes

- box_widths = []

- box_heights = []

- for ar in self.aspect_ratios:

- if ar == 1 and len(box_widths) == 0:

- box_widths.append(self.min_size)

- box_heights.append(self.min_size)

- elif ar == 1 and len(box_widths) > 0:

- box_widths.append(np.sqrt(self.min_size * self.max_size))

- box_heights.append(np.sqrt(self.min_size * self.max_size))

- elif ar != 1:

- box_widths.append(self.min_size * np.sqrt(ar))

- box_heights.append(self.min_size / np.sqrt(ar))

- box_widths = 0.5 * np.array(box_widths)

- box_heights = 0.5 * np.array(box_heights)

- # define centers of prior boxes

- step_x = img_width / layer_width

- step_y = img_height / layer_height

- #用于产生default box的中心坐标

- linx = np.linspace(0.5 * step_x, img_width - 0.5 * step_x,

- layer_width)

- liny = np.linspace(0.5 * step_y, img_height - 0.5 * step_y,

- layer_height)

- centers_x, centers_y = np.meshgrid(linx, liny)

- centers_x = centers_x.reshape(-1, 1)

- centers_y = centers_y.reshape(-1, 1)

- # define xmin, ymin, xmax, ymax of prior boxes

- num_priors_ = len(self.aspect_ratios)

- #concatenate之后得到了一连串的(centers_x,centers_y)形式的坐标

- prior_boxes = np.concatenate((centers_x, centers_y), axis=1)

- #扩充得到(centers_x, centers_y, centers_x, centers_y)形式的坐标

- prior_boxes = np.tile(prior_boxes, (1, 2 * num_priors_))

- prior_boxes[:, ::4] -= box_widths

- prior_boxes[:, 1::4] -= box_heights

- prior_boxes[:, 2::4] += box_widths

- prior_boxes[:, 3::4] += box_heights

- prior_boxes[:, ::2] /= img_width

- prior_boxes[:, 1::2] /= img_height

- #最终得到各个default box的归一化后的(Xmin,Ymin, Xmax, Ymax)

- #reshape成[num_box, 4]的形式

- prior_boxes = prior_boxes.reshape(-1, 4)

- if self.clip:

- prior_boxes = np.minimum(np.maximum(prior_boxes, 0.0), 1.0)

- # define variances

- num_boxes = len(prior_boxes)

- if len(self.variances) == 1:

- variances = np.ones((num_boxes, 4)) * self.variances[0]

- elif len(self.variances) == 4:

- variances = np.tile(self.variances, (num_boxes, 1))

- else:

- raise Exception('Must provide one or four variances.')

- ##把variance加入到输出之中。

- prior_boxes = np.concatenate((prior_boxes, variances), axis=1)

- prior_boxes_tensor = K.expand_dims(K.variable(prior_boxes), 0)

- if K.backend() == 'tensorflow':

- pattern = [tf.shape(x)[0], 1, 1]

- prior_boxes_tensor = tf.tile(prior_boxes_tensor, pattern)

- return prior_boxes_tensor

综合上面对model的分析,最后预测输出的shape为:[batch_size, num_box, location+num_class+8]

整体的架构完事之后,就需要准备好数据和loss function了,先看看如何预处理数据吧。

model的数据准备:

代码中编写了一个处理VOC数据集的py文件:

- import numpy as np

- import os

- from xml.etree import ElementTree

-

- class XML_preprocessor(object):

- #输出为:{image_name: [num_image, num_object_per_image, location+num_class]}

- def __init__(self, data_path):

- self.path_prefix = data_path

- self.num_classes = 20

- self.data = dict()

- self._preprocess_XML()

-

- def _preprocess_XML(self):

- filenames = os.listdir(self.path_prefix)

- for filename in filenames:

- tree = ElementTree.parse(self.path_prefix + filename)

- root = tree.getroot()

- bounding_boxes = []

- one_hot_classes = []

- size_tree = root.find('size')

- width = float(size_tree.find('width').text)

- height = float(size_tree.find('height').text)

- for object_tree in root.findall('object'):

- for bounding_box in object_tree.iter('bndbox'):

- xmin = float(bounding_box.find('xmin').text)/width

- ymin = float(bounding_box.find('ymin').text)/height

- xmax = float(bounding_box.find('xmax').text)/width

- ymax = float(bounding_box.find('ymax').text)/height

- bounding_box = [xmin,ymin,xmax,ymax]

- bounding_boxes.append(bounding_box)

- class_name = object_tree.find('name').text

- one_hot_class = self._to_one_hot(class_name)

- one_hot_classes.append(one_hot_class)

- image_name = root.find('filename').text

- bounding_boxes = np.asarray(bounding_boxes)

- one_hot_classes = np.asarray(one_hot_classes)

- image_data = np.hstack((bounding_boxes, one_hot_classes))

- self.data[image_name] = image_data

-

- def _to_one_hot(self,name):

- one_hot_vector = [0] * self.num_classes

- if name == 'aeroplane':

- one_hot_vector[0] = 1

- elif name == 'bicycle':

- one_hot_vector[1] = 1

- elif name == 'bird':

- one_hot_vector[2] = 1

- elif name == 'boat':

- one_hot_vector[3] = 1

- elif name == 'bottle':

- one_hot_vector[4] = 1

- elif name == 'bus':

- one_hot_vector[5] = 1

- elif name == 'car':

- one_hot_vector[6] = 1

- elif name == 'cat':

- one_hot_vector[7] = 1

- elif name == 'chair':

- one_hot_vector[8] = 1

- elif name == 'cow':

- one_hot_vector[9] = 1

- elif name == 'diningtable':

- one_hot_vector[10] = 1

- elif name == 'dog':

- one_hot_vector[11] = 1

- elif name == 'horse':

- one_hot_vector[12] = 1

- elif name == 'motorbike':

- one_hot_vector[13] = 1

- elif name == 'person':

- one_hot_vector[14] = 1

- elif name == 'pottedplant':

- one_hot_vector[15] = 1

- elif name == 'sheep':

- one_hot_vector[16] = 1

- elif name == 'sofa':

- one_hot_vector[17] = 1

- elif name == 'train':

- one_hot_vector[18] = 1

- elif name == 'tvmonitor':

- one_hot_vector[19] = 1

- else:

- print('unknown label: %s' %name)

- return one_hot_vector

- ## 写入到pkl文件中。

- import pickle

- data = XML_preprocessor('VOC2007/Annotations/').data

- pickle.dump(data,open('VOC2007.p','wb'))

把标注写入到pkl文件中后,再利用定义一个Generator类来产生x_batch和 y_batch用于训练,直接看重点,类中的generate函数:

- def generate(self, train=True):

- while True:

- if train:

- shuffle(self.train_keys)

- keys = self.train_keys

- else:

- shuffle(self.val_keys)

- keys = self.val_keys

- inputs = []

- targets = []

- for key in keys:

- img_path = self.path_prefix + key

- img = imread(img_path).astype('float32')

- y = self.gt[key].copy()#从pkl文件读取而来的groud truth

- ##y的shape是一张图片中box的数目和位置+类别。(num_box, coordinate+num_class)

- if train and self.do_crop:

- img, y = self.random_sized_crop(img, y)

- img = imresize(img, self.image_size).astype('float32')

- if train:#进行数据扩充

- shuffle(self.color_jitter)

- for jitter in self.color_jitter:

- img = jitter(img)

- if self.lighting_std:

- img = self.lighting(img)

- if self.hflip_prob > 0:

- img, y = self.horizontal_flip(img, y)

- if self.vflip_prob > 0:

- img, y = self.vertical_flip(img, y)

- y = self.bbox_util.assign_boxes(y) #给groud truth 分配 default box

- inputs.append(img)

- targets.append(y)

- if len(targets) == self.batch_size:

- tmp_inp = np.array(inputs)

- tmp_targets = np.array(targets)

- inputs = []

- targets = []

- yield preprocess_input(tmp_inp), tmp_targets#产生一个batch的输入数据,及其标准的输出label。

在给groud truth 分配 default box 时用到了BBoxUtility类中的assign_boxes函数,这个类是写在ssd_utils.py文件中的,其中的assign_boxes函数的代码如下:

- #用于给label分配高分的default box

- def assign_boxes(self, boxes):

- #变量: boxes: Box,它的shape为:(num_boxes, 4 + num_classes),其中num_classes没有包括背景

- #返回值: assignment:它的shape为: (num_boxes, 4 + num_classes + 8),

- #第二维上的8其实很多都是0,只有在assignment[:, -8]存在1,代表给default box分配了哪个groud truth

- assignment = np.zeros((self.num_priors, 4 + self.num_classes + 8))

- assignment[:, 4] = 1.0

- if len(boxes) == 0:

- return assignment

- encoded_boxes = np.apply_along_axis(self.encode_box, 1, boxes[:, :4])

- encoded_boxes = encoded_boxes.reshape(-1, self.num_priors, 5)

- #找出一张图中的所有的object与所有的prior box的最大IOU,即每个prior box对应一个object

- best_iou = encoded_boxes[:, :, -1].max(axis=0)

- ##找出每个prior box对应的那个object的索引。len(best_iou_idx)=num_priors

- best_iou_idx = encoded_boxes[:, :, -1].argmax(axis=0)

- ##找出与groud truth 存在IOU的prior box

- best_iou_mask = best_iou > 0

- best_iou_idx = best_iou_idx[best_iou_mask]

- assign_num = len(best_iou_idx)

- ##筛选出与groud truth 有IOU的prior box

- encoded_boxes = encoded_boxes[:, best_iou_mask, :]

- #确定给assignment分配中的prior box分配 具体哪一个groud truth。best_iou_idx中元素的范围为:range(num_object)。

- assignment[:, :4][best_iou_mask] = encoded_boxes[best_iou_idx, np.arange(assign_num),:4]

- assignment[:, 4][best_iou_mask] = 0

- assignment[:, 5:-8][best_iou_mask] = boxes[best_iou_idx, 4:]

- assignment[:, -8][best_iou_mask] = 1

- return assignment

-

返回了最终的assignment,用于作为训练时候的标准输出。

值得注意的是,在这个类里面用到self.prior,即default box都是作者先写入到了pkl文件中的,方便于使用,而且对于特定大小的feature map而言,default box是保持不变的,所以提前给出是不会影响训练的。

输入的数据和标准的输出都知道了,接下来就是定义loss function 了

model 的 loss function:

model 的loss function定义在了ssd_training.py文件中了,里面定义了一些有用的功能函数,来帮助最终loss计算的,我们就直接看最终计算那个loss的函数:

- def compute_loss(self, y_true, y_pred):

- # 在keras中自定义loss函数,它的两个输入必须为预测的输出和标准的输出

- # 变量:

- # y_pred: 它的shape为: (?, num_boxes, 4 + num_classes + 8). 就是在model框架部分介绍的输出。

- # y_truth:它的shape和y_pred的shape是一样的,就是上一节我们介绍assignment那一块的输出,具体参考上一节。

- # 返回最终的所有loss总和

- batch_size = tf.shape(y_true)[0]

- num_boxes = tf.to_float(tf.shape(y_true)[1])

- # 计算出所有default box的loss

- conf_loss = self._softmax_loss(y_true[:, :, 4:-8],

- y_pred[:, :, 4:-8])

- loc_loss = self._l1_smooth_loss(y_true[:, :, :4],

- y_pred[:, :, :4])

- #计算positive 样本的loss

- #num_pos 为一个一维的array:len(num_pos)=batch

- num_pos = tf.reduce_sum(y_true[:, :, -8], axis=-1)

- ##只需计算存在gt_box与其对应的loss

- pos_loc_loss = tf.reduce_sum(loc_loss * y_true[:, :, -8],

- axis=1)

- pos_conf_loss = tf.reduce_sum(conf_loss * y_true[:, :, -8],

- axis=1)

- #计算negative sample的loss,只计算了confidence loss

- num_neg = tf.minimum(self.neg_pos_ratio * num_pos,

- num_boxes - num_pos)

- pos_num_neg_mask = tf.greater(num_neg, 0)

- has_min = tf.to_float(tf.reduce_any(pos_num_neg_mask))

- num_neg = tf.concat(axis=0, values=[num_neg,

- [(1 - has_min) * self.negatives_for_hard]])

- #tf.boolen_mask(a,b),例如b=[true, false],a=[[[2,2],[2,3]]],则输出为[2,2]。

- #实际上就是取num_neg为正数的那些元素,然后再在其中取num_neg中的最小的元素作为num_neg_batch。

- num_neg_batch = tf.reduce_min(tf.boolean_mask(num_neg,

- tf.greater(num_neg, 0)))

- num_neg_batch = tf.to_int32(num_neg_batch)

- confs_start = 4 + self.background_label_id + 1

- confs_end = confs_start + self.num_classes - 1

- #max_confs的shape为:(batch, num_prior)

- max_confs = tf.reduce_max(y_pred[:, :, confs_start:confs_end],

- axis=2)

- #返回负样本的top-K个元素,最终返回的indices的shape为(batch, K=num_neg_batch)

- _, indices = tf.nn.top_k(max_confs * (1 - y_true[:, :, -8]),

- k=num_neg_batch)

- #创建一个shape也为(batch,num_neg_batch)的indices

- batch_idx = tf.expand_dims(tf.range(0, batch_size), 1)

- batch_idx = tf.tile(batch_idx, (1, num_neg_batch))

- #乘以num_boxes后得到batch中每一个sample的index的起始值,再加上top_k得到的index就得到了一个一维的full_indices。

- full_indices = (tf.reshape(batch_idx, [-1]) * tf.to_int32(num_boxes) +

- tf.reshape(indices, [-1]))

- #把得到的conf_loss也reshape成一维,然后用full_indices对其进行取值

- neg_conf_loss = tf.gather(tf.reshape(conf_loss, [-1]),

- full_indices)

- #最终把负样本的confidence loss reshape 成(batch, num_neg_batch),再对每个sample上的loss求和。

- neg_conf_loss = tf.reshape(neg_conf_loss,

- [batch_size, num_neg_batch])

- neg_conf_loss = tf.reduce_sum(neg_conf_loss, axis=1)

- #整合所有的loss:positive loss 和 negative loss

- total_loss = pos_conf_loss + neg_conf_loss

- total_loss /= (num_pos + tf.to_float(num_neg_batch))

- num_pos = tf.where(tf.not_equal(num_pos, 0), num_pos,

- tf.ones_like(num_pos))

- total_loss += (self.alpha * pos_loc_loss) / num_pos

- return total_loss

这时候function loss 也准备好了,属于一切都准备就绪了。当然就是进行训练了。其实在写这篇blog之前我还是对loss function 这块没有太细看明白,写完之后顿时就恍然大悟的,写blog确实是一个自我学习的一个很好过程。

model 进行 training

training这一块是写在SSD_training.ipynb的jupyter notebook文件中的,上面那些model 的部件准备好了之后,training就按照keras的流程照搬就好了。

不过需要注意一下,作者给的这个训练并不是voc数据集的训练,而是对3种瓶子的检测。

1.必要的库和自己编写的模块的导入:

- import cv2

- import keras

- from keras.applications.imagenet_utils import preprocess_input

- from keras.backend.tensorflow_backend import set_session

- from keras.models import Model

- from keras.preprocessing import image

- import matplotlib.pyplot as plt

- import numpy as np

- import pickle

- from random import shuffle

- from scipy.misc import imread

- from scipy.misc import imresize

- import tensorflow as tf

- from ssd import SSD300

- from ssd_training import MultiboxLoss

- from ssd_utils import BBoxUtility

-

- %matplotlib inline

- plt.rcParams['figure.figsize'] = (8, 8)

- plt.rcParams['image.interpolation'] = 'nearest'

-

- np.set_printoptions(suppress=True)

2.必要的初始化参数和prior box 的读取,以及输入数据的读取:

- NUM_CLASSES = 4

- input_shape = (300, 300, 3)

- #prior_boxes_ssd300.pkl 存放了所有的prior:[xmin, ymin, xmax, ymax,var[0],var[1],var[2],var[3]]

- priors = pickle.load(open('prior_boxes_ssd300.pkl', 'rb'))

- bbox_util = BBoxUtility(NUM_CLASSES, priors)

- #获得输入数据的file_name、bounding box 和 label

- gt = pickle.load(open('gt_pascal.pkl', 'rb'))

- keys = sorted(gt.keys())

- num_train = int(round(0.8 * len(keys)))

- train_keys = keys[:num_train]

- val_keys = keys[num_train:]

- num_val = len(val_keys)

3.输入数据和label的generator类定义,有点长,就把generate 那个函数贴出来:

- class Generator(object):

- def generate(self, train=True):

- while True:

- if train:

- shuffle(self.train_keys)

- keys = self.train_keys

- else:

- shuffle(self.val_keys)

- keys = self.val_keys

- inputs = []

- targets = []

- for key in keys:

- img_path = self.path_prefix + key

- img = imread(img_path).astype('float32')

- y = self.gt[key].copy()

- ##y的shape是一张图片中box的数目和位置+类别。(num_box, coordinate+num_class)

- if train and self.do_crop:

- img, y = self.random_sized_crop(img, y)

- img = imresize(img, self.image_size).astype('float32')

- if train:

- shuffle(self.color_jitter)

- for jitter in self.color_jitter:

- img = jitter(img)

- if self.lighting_std:

- img = self.lighting(img)

- if self.hflip_prob > 0:

- img, y = self.horizontal_flip(img, y)

- if self.vflip_prob > 0:

- img, y = self.vertical_flip(img, y)

- y = self.bbox_util.assign_boxes(y)

- inputs.append(img)

- targets.append(y)

- if len(targets) == self.batch_size:

- tmp_inp = np.array(inputs)

- tmp_targets = np.array(targets)

- inputs = []

- targets = []

- yield preprocess_input(tmp_inp), tmp_targets #batch 生成器

4.必要的初始化

- #输入数据(图片)的root directory

- path_prefix = '../../frames/'

- gen = Generator(gt, bbox_util, 16, '../../frames/',

- train_keys, val_keys,

- (input_shape[0], input_shape[1]), do_crop=False)

- #构建SSD300的model

- model = SSD300(input_shape, num_classes=NUM_CLASSES)

- model.load_weights('weights_SSD300.hdf5', by_name=True)

- #也没太弄懂,为什么需要把他们给freeze,为啥也对他们train

- freeze = ['input_1', 'conv1_1', 'conv1_2', 'pool1',

- 'conv2_1', 'conv2_2', 'pool2',

- 'conv3_1', 'conv3_2', 'conv3_3', 'pool3']

- for L in model.layers:

- if L.name in freeze:

- L.trainable = False

5.keras的一些callback function的定义以及model的compile and training:

- def schedule(epoch, decay=0.9):

- return base_lr * decay**(epoch)

-

- callbacks = [keras.callbacks.ModelCheckpoint('./checkpoints/weights.{epoch:02d}-{val_loss:.2f}.hdf5',

- verbose=1,

- save_weights_only=True),

- keras.callbacks.LearningRateScheduler(schedule)]

- base_lr = 3e-4

- optim = keras.optimizers.Adam(lr=base_lr)

- # optim = keras.optimizers.RMSprop(lr=base_lr)

- # optim = keras.optimizers.SGD(lr=base_lr, momentum=0.9, decay=decay, nesterov=True)

- model.compile(optimizer=optim,

- loss=MultiboxLoss(NUM_CLASSES, neg_pos_ratio=2.0).compute_loss)

- nb_epoch = 30

- history = model.fit_generator(gen.generate(True), gen.train_batches,

- nb_epoch, verbose=1,

- callbacks=callbacks,

- validation_data=gen.generate(False),

- nb_val_samples=gen.val_batches,

- nb_worker=1)

6.train完了之后,当然是检测了:

- #数据的读取

- inputs = []

- images = []

- img_path = path_prefix + sorted(val_keys)[0]

- img = image.load_img(img_path, target_size=(300, 300))

- img = image.img_to_array(img)

- images.append(imread(img_path))

- inputs.append(img.copy())

- inputs = preprocess_input(np.array(inputs))

- #进行预测和预测后对预测结果的解码

- preds = model.predict(inputs, batch_size=1, verbose=1)

- results = bbox_util.detection_out(preds)

- #可视化预测结果

- for i, img in enumerate(images):

- # Parse the outputs.

- det_label = results[i][:, 0]

- det_conf = results[i][:, 1]

- det_xmin = results[i][:, 2]

- det_ymin = results[i][:, 3]

- det_xmax = results[i][:, 4]

- det_ymax = results[i][:, 5]

- # Get detections with confidence higher than 0.6.

- top_indices = [i for i, conf in enumerate(det_conf) if conf >= 0.6]

- top_conf = det_conf[top_indices]

- top_label_indices = det_label[top_indices].tolist()

- top_xmin = det_xmin[top_indices]

- top_ymin = det_ymin[top_indices]

- top_xmax = det_xmax[top_indices]

- top_ymax = det_ymax[top_indices]

-

- colors = plt.cm.hsv(np.linspace(0, 1, 4)).tolist()

-

- plt.imshow(img / 255.)

- currentAxis = plt.gca()

-

- for i in range(top_conf.shape[0]):

- xmin = int(round(top_xmin[i] * img.shape[1]))

- ymin = int(round(top_ymin[i] * img.shape[0]))

- xmax = int(round(top_xmax[i] * img.shape[1]))

- ymax = int(round(top_ymax[i] * img.shape[0]))

- score = top_conf[i]

- label = int(top_label_indices[i])

- #注意这里的label直接使用的数字,因为它train的数据集不是voc,而是几种瓶子的种类。

- display_txt = '{:0.2f}, {}'.format(score, label)

- coords = (xmin, ymin), xmax-xmin+1, ymax-ymin+1

- color = colors[label]

- currentAxis.add_patch(plt.Rectangle(*coords, fill=False, edgecolor=color, linewidth=2))

- currentAxis.text(xmin, ymin, display_txt, bbox={'facecolor':color, 'alpha':0.5})

- plt.show()

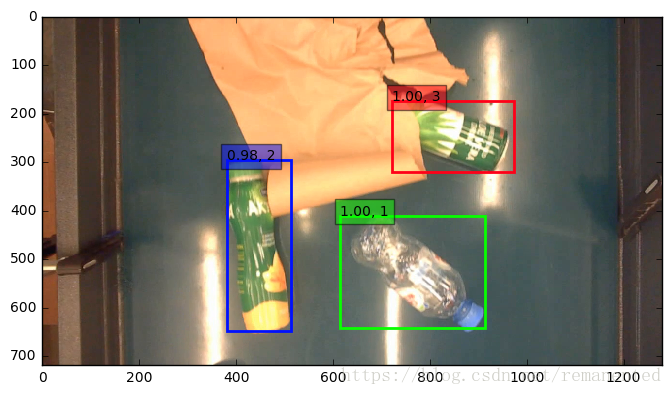

7.predict 的结果:

整个过程也就基本上的结束了。SSD的keras实现还是比较简单的,没有mask r-cnn那么费劲。不知道为啥我先看的yolo的原理和实现,但是不太想写yolo的实现和原理(手动白眼),直接跳到了SSD,大概是觉得SSD比较好理解把,yolo等有时间再写吧。

之后我再把生成prior box pkl文件的代码贴上来,自己写的代码有点乱。希望看到了最后你对SDD的模型架构和具体实现都有了一个很好的认识。因为也是一个新手,所以其中有什么理解不到位,或者写错的,欢迎指出。

添加:prior box 的 pkl文件生成代码:其实也很简单,就是稍微修改了一下PriorBox这个自定义的keras layer,把输出用来产生对于特定feature map 大小的 default box:

- import numpy as np

- class PriorBox():

- def __init__(self, img_size, min_size, max_size=None, aspect_ratios=None,

- flip=True, variances=[0.1,0.1,0.2,0.2], clip=True, layer_shape=[8,8],**kwargs):

- self.input_shape = layer_shape

- self.img_size = img_size

- if min_size <= 0:

- raise Exception('min_size must be positive.')

- self.min_size = min_size

- self.max_size = max_size

- self.aspect_ratios = [1.0]

- if max_size:

- if max_size < min_size:

- raise Exception('max_size must be greater than min_size.')

- self.aspect_ratios.append(1.0)

- if aspect_ratios:

- for ar in aspect_ratios:

- if ar in self.aspect_ratios:

- continue

- self.aspect_ratios.append(ar)

- if flip:

- self.aspect_ratios.append(1.0 / ar)

- self.variances = np.array(variances)

- self.clip = True

- super(PriorBox, self).__init__(**kwargs)

-

- def compute_default_box(self):

- layer_height = self.input_shape[0]

- layer_width = self.input_shape[1]

- img_width = self.img_size[0]

- img_height = self.img_size[1]

- # define prior boxes shapes

- box_widths = []

- box_heights = []

- for ar in self.aspect_ratios:

- if ar == 1 and len(box_widths) == 0:

- box_widths.append(self.min_size)

- box_heights.append(self.min_size)

- elif ar == 1 and len(box_widths) > 0:

- box_widths.append(np.sqrt(self.min_size * self.max_size))

- box_heights.append(np.sqrt(self.min_size * self.max_size))

- elif ar != 1:

- box_widths.append(self.min_size * np.sqrt(ar))

- box_heights.append(self.min_size / np.sqrt(ar))

- box_widths = 0.5 * np.array(box_widths)

- box_heights = 0.5 * np.array(box_heights)

- # define centers of prior boxes

- step_x = img_width / layer_width

- step_y = img_height / layer_height

- #generate a list data

- linx = np.linspace(0.5 * step_x, img_width - 0.5 * step_x,

- layer_width)

- liny = np.linspace(0.5 * step_y, img_height - 0.5 * step_y,

- layer_height)

- ##ulitize meshgrid function to generate default box's coordinates

- centers_x, centers_y = np.meshgrid(linx, liny)

- centers_x = centers_x.reshape(-1, 1)

- centers_y = centers_y.reshape(-1, 1)

- # define xmin, ymin, xmax, ymax of prior boxes

- num_priors_ = len(self.aspect_ratios)

- prior_boxes = np.concatenate((centers_x, centers_y), axis=1)

- prior_boxes = np.tile(prior_boxes, (1, 2 * num_priors_))

- prior_boxes[:, ::4] -= box_widths

- prior_boxes[:, 1::4] -= box_heights

- prior_boxes[:, 2::4] += box_widths

- prior_boxes[:, 3::4] += box_heights

- prior_boxes[:, ::2] /= img_width

- prior_boxes[:, 1::2] /= img_height

- prior_boxes = prior_boxes.reshape(-1, 4)

- if self.clip:

- prior_boxes = np.minimum(np.maximum(prior_boxes, 0.0), 1.0)

- # define variances

- num_boxes = len(prior_boxes)

- if len(self.variances) == 1:

- variances = np.ones((num_boxes, 4)) * self.variances[0]

- elif len(self.variances) == 4:

- variances = np.tile(self.variances, (num_boxes, 1))

- else:

- raise Exception('Must provide one or four variances.')

- prior_boxes = np.concatenate((prior_boxes, variances), axis=1)

- return prior_boxes

-

- #调用修改后的PriorBox类

- img_size = (300, 300)

- default_box_layer1 = PriorBox(img_size, 30, [], aspect_ratios=[2], layer_shape=(38,38)).compute_default_box()

- default_box_layer2 = PriorBox(img_size, 60, 114, aspect_ratios=[2,3], layer_shape=(19,19)).compute_default_box()

- default_box_layer3 = PriorBox(img_size, 114, 168, aspect_ratios=[2,3], layer_shape=(10,10)).compute_default_box()

- default_box_layer4 = PriorBox(img_size, 168, 222, aspect_ratios=[2,3], layer_shape=(5,5)).compute_default_box()

- default_box_layer5 = PriorBox(img_size, 222, 276, aspect_ratios=[2,3], layer_shape=(3,3)).compute_default_box()

- default_box_layer6 = PriorBox(img_size, 276, 330, aspect_ratios=[2,3], layer_shape=(1,1)).compute_default_box()

- #把各层的输出concatenate起来

- default_box = np.concatenate((default_box_layer1, default_box_layer2, default_box_layer3,

- default_box_layer4, default_box_layer5, default_box_layer6), axis=0)

- #写入到pkl文件中

- import pickle

- pickle.dump(default_box,open("default_box_information","wb"))