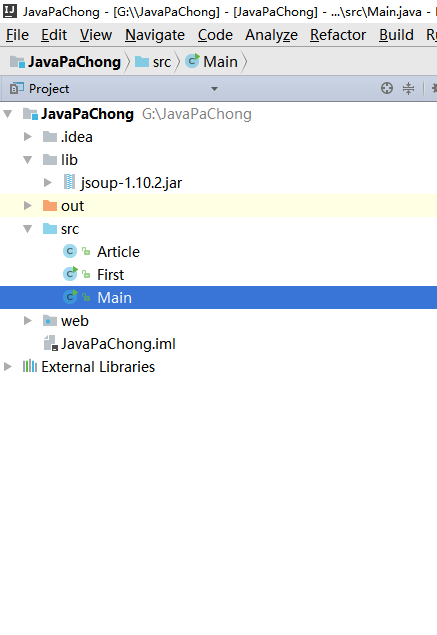

Project directory:

Required jar: jsoup-1.10.2.jar

/**
 * Created by wangzheng on 2017/2/19.
 */
public class Article {

    /**
     * Relative URL of the article link
     */
    private String address;

    /**
     * Article title
     */
    private String title;

    /**
     * Article summary
     */
    private String desption;

    /**
     * Article publication time
     */
    private String time;

    public String getAddress() {
        return address;
    }

    public void setAddress(String address) {
        this.address = address;
    }

    public String getTitle() {
        return title;
    }

    public void setTitle(String title) {
        this.title = title;
    }

    public String getDesption() {
        return desption;
    }

    public void setDesption(String desption) {
        this.desption = desption;
    }

    public String getTime() {
        return time;
    }

    public void setTime(String time) {
        this.time = time;
    }
}

/**
 * Created by wangzheng on 2017/2/19.
 */
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.jsoup.Connection;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

public class First {

    // Blog page to crawl
    // private static final String URL = "http://blog.csdn.net/guolin_blog";
    private static final String URL = "http://blog.csdn.net/qq_33599520/article/list/1";

    public static void main(String[] args) throws IOException {
        // Build the HTTP Connection for the URL
        Connection conn = Jsoup.connect(URL) // URL of the blog page
                .userAgent("Mozilla/5.0 (Windows NT 6.1; rv:47.0) Gecko/20100101 Firefox/47.0") // browser User-Agent sent with the request
                .timeout(5000) // request timeout in milliseconds
                .method(Connection.Method.GET); // request method: GET here; HTTP also has POST, DELETE, etc.

        // Fetch the page's HTML document
        Document doc = conn.get();
        Element body = doc.body();

        // Wrap each crawled article in an Article and add it to the ArrayList
        List<Article> resultList = new ArrayList<Article>();
        Element articleListDiv = body.getElementById("article_list");
        Elements articleList = articleListDiv.getElementsByClass("list_item");
        for (Element article : articleList) {
            Article articleEntity = new Article();
            Element linkNode = (article.select("div h1 a")).get(0);
            Element desptionNode = (article.getElementsByClass("article_description")).get(0);
            Element articleManageNode = (article.getElementsByClass("article_manage")).get(0);
            articleEntity.setAddress(linkNode.attr("href"));
            articleEntity.setTitle(linkNode.text());
            articleEntity.setDesption(desptionNode.text());
            // Fixed: the selector was missing its closing parenthesis ("span:eq(0")
            articleEntity.setTime(articleManageNode.select("span:eq(0)").text());
            resultList.add(articleEntity);
        }

        // Print the crawled articles stored in the ArrayList
        System.out.println("Total articles: " + resultList.size());
        for (Article article : resultList) {
            System.out.println("Absolute article URL: http://blog.csdn.net" + article.getAddress());
        }
    }
}
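The output loop above builds each absolute URL by concatenating a fixed host with the relative `href`. Below is a minimal sketch of the same step using the standard library's `java.net.URI.resolve`. It assumes the `href` values are site-relative paths; the class name `ArticleUrls`, the method `toAbsolute`, and the sample path are hypothetical and not part of the original project.

```java
import java.net.URI;

// Hypothetical helper, not part of the original project.
final class ArticleUrls {

    // Assumed site base, taken from the host used in the crawler's URL constant.
    private static final URI BASE = URI.create("http://blog.csdn.net/");

    // Resolves a relative href against the site base.
    // An href that is already absolute is returned unchanged.
    static String toAbsolute(String relativeAddress) {
        return BASE.resolve(relativeAddress).toString();
    }

    public static void main(String[] args) {
        // The path below is an invented example, not a real article.
        System.out.println(toAbsolute("/qq_33599520/article/details/1"));
    }
}
```

Unlike plain string concatenation, `resolve` also copes with an `href` that is already a full URL. jsoup itself offers `absUrl("href")` on an element for the same purpose, which may be the simpler choice inside the crawler.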

/**
 * Created by wangzheng on 2017/2/19.
 */
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.jsoup.*;
import org.jsoup.nodes.*;
import org.jsoup.select.*;

public class Main {

    private static final String URL = "http://blog.csdn.net/qq_33599520";

    public static void main(String[] args) throws IOException {
        Connection conn = Jsoup.connect(URL)
                .userAgent("Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:50.0) Gecko/20100101 Firefox/50.0")
                .timeout(5000)
                .method(Connection.Method.GET);
        Document doc = conn.get();
        Element body = doc.body();

        // Get the total page count; the pager text ends with "共N页" ("N pages in total")
        String totalPageStr = body.getElementById("papelist").select("span:eq(0)").text();
        // Fixed: the backslash must be escaped in a Java string literal ("\\d", not "\d")
        String regex = ".+共(\\d+)页";
        totalPageStr = totalPageStr.replaceAll(regex, "$1");
        int totalPage = Integer.parseInt(totalPageStr);

        int pageNow = 1;
        List<Article> articleList = new ArrayList<Article>();
        for (pageNow = 1; pageNow <= totalPage; pageNow++) {
            articleList.addAll(getArtitcleByPage(pageNow));
        }

        // Print every article by the blog's author
        for (Article article : articleList) {
            System.out.println("Title: " + article.getTitle());
            System.out.println("Absolute article URL: http://blog.csdn.net" + article.getAddress());
            System.out.println("Summary: " + article.getDesption());
            System.out.println("Published: " + article.getTime());
        }
    }

    // Crawl one page of the article list and return its articles
    public static List<Article> getArtitcleByPage(int pageNow) throws IOException {
        Connection conn = Jsoup.connect(URL + "/article/list/" + pageNow)
                .userAgent("Mozilla/5.0 (Windows NT 6.1; rv:47.0) Gecko/20100101 Firefox/47.")
                .timeout(5000)
                .method(Connection.Method.GET);
        Document doc = conn.get();
        Element body = doc.body();

        List<Article> resultList = new ArrayList<Article>();
        Element articleListDiv = body.getElementById("article_list");
        Elements articleList = articleListDiv.getElementsByClass("list_item");
        for (Element article : articleList) {
            Article articleEntity = new Article();
            Element linkNode = (article.select("div h1 a")).get(0);
            Element desptionNode = (article.getElementsByClass("article_description")).get(0);
            Element articleManageNode = (article.getElementsByClass("article_manage")).get(0);
            articleEntity.setAddress(linkNode.attr("href"));
            articleEntity.setTitle(linkNode.text());
            articleEntity.setDesption(desptionNode.text());
            // Fixed: the selector was missing its closing parenthesis ("span:eq(0")
            articleEntity.setTime(articleManageNode.select("span:eq(0)").text());
            resultList.add(articleEntity);
        }
        return resultList;
    }
}
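The page-count step in `Main` relies on `replaceAll`, which returns the input unchanged when the pattern does not match, so `Integer.parseInt` would then throw. Below is a minimal sketch of a more defensive variant using `Pattern` and `Matcher` from the standard library. The class name `PageCount`, the fallback of 1 page, and the sample pager text are assumptions made for illustration, not taken from the original project or from the site.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of the original project.
final class PageCount {

    // Matches the "共N页" fragment ("N pages in total") and captures N.
    private static final Pattern TOTAL_PAGES = Pattern.compile("共(\\d+)页");

    // Returns the captured page count.
    // Assumption: text without a "共N页" fragment means a single page, so 1 is returned.
    static int parse(String pagerText) {
        Matcher m = TOTAL_PAGES.matcher(pagerText);
        return m.find() ? Integer.parseInt(m.group(1)) : 1;
    }

    public static void main(String[] args) {
        // The sample pager text is an assumed format, not copied from the site.
        System.out.println(parse("25条 共2页"));
    }
}
```

In the crawler, the text passed to `parse` would be the string read from the `papelist` element. Whether a missing pager should mean one page or an error is a design choice; failing loudly may be preferable if the page layout is expected to change.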