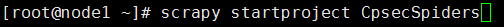

1. Create the project CpsecSpiders

Use the scrapy command-line tool: scrapy startproject CpsecSpiders

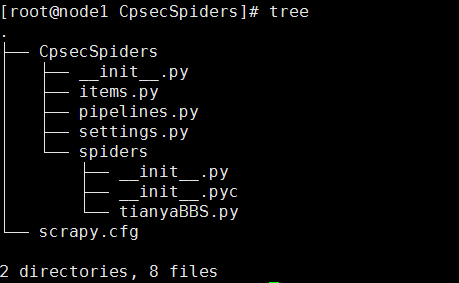

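2. Directory structure of the CpsecSpiders project: cd CpsecSpiders

Directory and file descriptions

- scrapy.cfg: the project's configuration file.
- CpsecSpiders/: the project's Python module. You will add your code here.
- CpsecSpiders/items.py: the project's items file. It declares the fields to be scraped.
- CpsecSpiders/pipelines.py: the project's pipelines file. It handles saving to the database, deduplication, and keyword matching.
- CpsecSpiders/settings.py: the project's settings file. It holds the global settings.
- CpsecSpiders/spiders/: the directory for spider code. Every spider goes in this directory.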

3. Spider code: tianyaBBS.py

    #!/usr/bin/python
    # -*- coding:utf-8 -*-
    # Written for Python 2 and an old Scrapy release (scrapy.contrib import paths).
    import sys
    from urlparse import urljoin

    import scrapy
    from scrapy.contrib.loader import ItemLoader
    from scrapy.selector import Selector
    from scrapy.spider import Spider

    from CpsecSpiders.CpsecSpiderUtil import spiderutil as sp
    from CpsecSpiders.items import CpsecspidersItem

    # Python 2 workaround: make utf8 the default codec for implicit conversions
    reload(sys)
    sys.setdefaultencoding('utf8')


    # A plain Spider is enough here: no crawl rules are defined, and
    # CrawlSpider reserves parse() for its own logic.
    class tianyaBBSspider(Spider):
        # Spider name: critical, it is the unique identifier
        name = "tianya"
        # Restrict crawling to this domain
        allowed_domains = ["bbs.tianya.cn"]
        # URLs the crawl starts from
        start_urls = [
            # Tianya forum hot-posts list; several URLs can be given, separated by commas
            "http://bbs.tianya.cn/hotArticle.jsp",
        ]
        baseurl = 'http://bbs.tianya.cn'

        def parse(self, response):
            # Selector
            sel = Selector(response)
            item = CpsecspidersItem()
            # List of article URLs
            article_url = sel.xpath('//div[@class="mt5"]/table[@class="tab-bbs-list tab-bbs-list-2"]//tr[@class="bg"]/td[1]/a/@href').extract()
            # Address of the next page
            next_page_url = sel.xpath('//div[@class="long-pages"]/a[last()]/@href').extract()

            for url in article_url:
                # Build the absolute URL
                urll = urljoin(self.baseurl, url)
                # Let parse_item extract the article content
                request = scrapy.Request(urll, callback=self.parse_item)
                request.meta['item'] = item
                yield request

            # Guard against an empty result before indexing
            if next_page_url:
                # Call parse again to walk through the following pages
                request = scrapy.Request(urljoin(self.baseurl, next_page_url[0]), callback=self.parse)
                yield request

        def parse_item(self, response):
            content = ''
            sel = Selector(response)
            item = response.meta['item']
            loader = ItemLoader(item=CpsecspidersItem(), response=response)
            article_url = str(response.url)
            today_timestamp = sp.get_tody_timestamp()
            article_id = sp.hashForUrl(article_url)
            article_name = sel.xpath('//div[@id="post_head"]/h1/span/span/text()').extract()
            article_time = sel.xpath('//div[@id="post_head"]/div[1]/div[@class="atl-info"]/span[2]/text()').extract()
            article_content = sel.xpath('//div[@class="atl-main"]//div/div[@class="atl-content"]/div[2]/div[1]/text()').extract()
            article_author = sel.xpath('//div[@id="post_head"]/div[1]/div[@class="atl-info"]/span[1]/a/text()').extract()
            article_click_num = sel.xpath('//div[@id="post_head"]/div[1]/div[@class="atl-info"]/span[3]/text()').extract()
            article_reply_num = sel.xpath('//div[@id="post_head"]/div[1]/div[@class="atl-info"]/span[4]/text()').extract()

            # Join the pieces of the article body
            for i in article_content:
                content = content + i

            article_id = article_id.encode('utf-8')
            article_name = article_name[0].encode('utf-8')
            content = content.encode('utf-8')
            # [9:] drops the first 9 bytes of the encoded text, presumably a
            # 3-character Chinese label (3 bytes per character in UTF-8)
            article_time = article_time[0].encode('utf-8')[9:]
            crawl_time = today_timestamp.encode('utf-8')
            article_url = article_url.encode('utf-8')
            article_author = article_author[0].encode('utf-8')
            click_num = article_click_num[0].encode('utf-8')[9:]
            reply_num = article_reply_num[0].encode('utf-8')[9:]

            loader.add_value('article_name', article_name)
            loader.add_value('article_id', article_id)
            loader.add_value('article_content', content)
            loader.add_value('crawl_time', crawl_time)
            loader.add_value('article_time', article_time)
            loader.add_value('article_url', article_url)
            loader.add_value('reply_num', reply_num)
            loader.add_value('click_num', click_num)
            loader.add_value('article_author', article_author)
            yield loader.load_item()
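
The spider relies on two things the post does not show: the spiderutil helpers (sp.hashForUrl and sp.get_tody_timestamp) and the reason for the 9-byte slices on the encoded strings. The sketch below is only a guess at both. The function names, the SHA-1 hash, the timestamp format, and the sample label text are all assumptions made for illustration, not the author's code, and the block is written for Python 3 so it can be run on its own.

```python
# -*- coding: utf-8 -*-
# Hypothetical stand-ins; the real spiderutil module is not shown in the post.
import hashlib
import time


def hash_for_url(url):
    """Return a stable hex id for a URL (assumed role of sp.hashForUrl)."""
    return hashlib.sha1(url.encode("utf-8")).hexdigest()


def get_today_timestamp():
    """Return the current local time as text (assumed role of sp.get_tody_timestamp)."""
    return time.strftime("%Y-%m-%d %H:%M:%S")


def strip_label_bytes(text, label_bytes=9):
    """Encode to UTF-8 and drop a leading label of label_bytes bytes."""
    return text.encode("utf-8")[label_bytes:]


# Assumed sample: a three-character Chinese label followed by a date.
# Each of these characters takes 3 bytes in UTF-8, so the label is 9 bytes.
LABEL = u"\u65f6\u95f4\uff1a"
SAMPLE = LABEL + u"2014-10-08"

if __name__ == "__main__":
    print(hash_for_url("http://bbs.tianya.cn/hotArticle.jsp"))
    print(get_today_timestamp())
    print(len(LABEL.encode("utf-8")))
    print(strip_label_bytes(SAMPLE))
```

Slicing bytes rather than characters only works while the label has a fixed byte length; if the page layout changes, the slice would cut into the value.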

3. items.py code

    # -*- coding: utf-8 -*-
    ########################################################################
    # author:
    #     chaosju
    #
    # description:
    #     Define here the models for your scraped items
    #
    # help documentation:
    #     http://doc.scrapy.org/en/latest/topics/items.html
    #######################################################################
    from scrapy.item import Item, Field


    class CpsecspidersItem(Item):
        article_name = Field()
        article_url = Field()
        article_content = Field()
        article_time = Field()
        article_id = Field()
        crawl_time = Field()
        article_from = Field()
        click_num = Field()
        reply_num = Field()
        article_author = Field()

4. settings.py code

    # -*- coding: utf-8 -*-

    # Scrapy settings for CpsecSpiders project
    #
    # For simplicity, this file contains only the most important settings by
    # default. All the other settings are documented here:
    #
    #     http://doc.scrapy.org/en/latest/topics/settings.html
    #

    BOT_NAME = 'CpsecSpiders'

    SPIDER_MODULES = ['CpsecSpiders.spiders']
    NEWSPIDER_MODULE = 'CpsecSpiders.spiders'

    # Slow the crawl down: wait 1 s between downloads
    # (setting names must be uppercase for Scrapy to read them)
    DOWNLOAD_DELAY = 1
    # Maximum crawl depth
    DEPTH_LIMIT = 20
    # Disable cookies to reduce the risk of being banned
    COOKIES_ENABLED = False

    # Declare the pipeline; every pipeline you define must be declared here
    ITEM_PIPELINES = {
        'CpsecSpiders.pipelines.CpsecspidersPipeline': 300,
    }
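
Scrapy reads only all-uppercase names from settings.py, so the download delay has to be spelled DOWNLOAD_DELAY; a lowercase name is skipped without any warning. The sketch below imitates that filtering rule on a throwaway module object. The load_settings function and the fake_settings module are illustrative names, not part of Scrapy.

```python
import types


def load_settings(module):
    """Collect only the all-uppercase attributes, the way a settings loader would."""
    return {
        name: getattr(module, name)
        for name in dir(module)
        if name.isupper()
    }


# A stand-in for settings.py with one lowercase (ignored) name
fake_settings = types.ModuleType("fake_settings")
fake_settings.download_delay = 1      # lowercase: not picked up
fake_settings.DEPTH_LIMIT = 20
fake_settings.COOKIES_ENABLED = False

loaded = load_settings(fake_settings)

if __name__ == "__main__":
    print(sorted(loaded))
```

With the lowercase spelling the crawl would run at the default speed, which is easy to miss because nothing fails.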

5. Contents of scrapy.cfg

    # Automatically created by: scrapy startproject
    #
    # For more information about the [deploy] section see:
    # http://doc.scrapy.org/en/latest/topics/scrapyd.html

    [settings]
    default = CpsecSpiders.settings

    [deploy]
    url = http://localhost:6800/
    project = CpsecSpiders
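
scrapy.cfg is an INI-style file: the [settings] section names the settings module to load, and [deploy] holds the deployment target. As a rough illustration, the file can be read with the standard library. The snippet below uses Python 3's configparser and embeds the file contents as a string so it runs on its own; read_scrapy_cfg is an illustrative helper name, not a Scrapy function.

```python
import configparser

CFG_TEXT = """\
[settings]
default = CpsecSpiders.settings

[deploy]
url = http://localhost:6800/
project = CpsecSpiders
"""


def read_scrapy_cfg(text):
    """Parse INI text into a plain {section: {option: value}} dict."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return {section: dict(parser[section]) for section in parser.sections()}


cfg = read_scrapy_cfg(CFG_TEXT)

if __name__ == "__main__":
    print(cfg["settings"]["default"])
    print(cfg["deploy"]["url"])
```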

5. Definition of pipelines.py; the project uses MySQL

    # -*- coding: utf-8 -*-
    ##########################################################################
    # author
    #     chaosju
    # description:
    #     Define your item pipelines here
    #     save or process your spider's data
    # attention:
    #     Don't forget to add your pipeline to the ITEM_PIPELINES setting in setting file
    # help document:
    #     See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html
    ########################################################################
    from twisted.enterprise import adbapi
    import MySQLdb
    import MySQLdb.cursors

    from CpsecSpiders.CpsecSpiderUtil import spiderutil as sp


    class CpsecspidersPipeline(object):
        def __init__(self):
            # Connect to the MySQL database
            self.dbpool = adbapi.ConnectionPool(
                'MySQLdb',
                host='192.168.2.2',
                db='dbname',
                user='root',
                passwd='root',
                cursorclass=MySQLdb.cursors.DictCursor,
                charset='utf8',
                use_unicode=True)

        def process_item(self, item, spider):
            # Per-spider dispatch, currently disabled:
            # if spider.name in ('360search', 'baidu', 'sogou'):
            #     query = self.dbpool.runInteraction(self._conditional_insert, item)
            #     return item
            # elif spider.name == 'ifengSpider':
            #     query = self.dbpool.runInteraction(self._conditional_insert_ifeng, item)
            #     return item
            # elif spider.name == 'chinanews':
            #     query = self.dbpool.runInteraction(self._conditional_insert_chinanews, item)
            #     return item
            # else:
            query = self.dbpool.runInteraction(self._conditional_insert, item)
            return item

        def _conditional_insert(self, tx, item):
            # ItemLoader's default output leaves every field as a list, hence the index i
            count = len(item['article_name'])
            # The spider shown above never fills article_from, so default it to None
            sources = item.get('article_from') or [None] * count
            for i in range(count):
                tx.execute("select * from article_info as t where t.id = %s", (item['article_id'][i],))
                result = tx.fetchone()
                # lens = sp.isContains(item['article_name'][i])
                # if not result and lens != -1:
                # Insert only if the record does not exist yet
                if not result:
                    tx.execute(
                        'insert into article_info(id,article_name,article_time,article_url,crawl_time,praise_num,comment_num,article_from,article_author) values (%s, %s, %s, %s, %s, %s, %s, %s, %s)',
                        (item['article_id'][i], item['article_name'][i], item['article_time'][i], item['article_url'][i], item['crawl_time'][i], item['click_num'][i], item['reply_num'][i], sources[i], item['article_author'][i]))
                    tx.execute(
                        'insert into article_content(id,article_content) values (%s, %s)',
                        (item['article_id'][i], item['article_content'][i]))
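
The deduplication in _conditional_insert is a select-then-insert check keyed on the article id. To try that logic without a MySQL server, the sketch below swaps in the standard-library sqlite3 module with an in-memory database. That is a substitution made for illustration: the table definitions are trimmed to a few assumed columns, and sqlite3 uses ? placeholders where MySQLdb uses %s.

```python
import sqlite3


def conditional_insert(conn, article):
    """Insert the article only if its id is not stored yet; return True on insert."""
    cur = conn.cursor()
    cur.execute("SELECT 1 FROM article_info WHERE id = ?", (article["article_id"],))
    if cur.fetchone():
        # Already stored: skip it so repeated crawls do not duplicate rows
        return False
    cur.execute(
        "INSERT INTO article_info (id, article_name, article_url) VALUES (?, ?, ?)",
        (article["article_id"], article["article_name"], article["article_url"]),
    )
    cur.execute(
        "INSERT INTO article_content (id, article_content) VALUES (?, ?)",
        (article["article_id"], article["article_content"]),
    )
    conn.commit()
    return True


# In-memory stand-in for the two tables used by the pipeline (columns trimmed)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE article_info (id TEXT PRIMARY KEY, article_name TEXT, article_url TEXT)")
conn.execute("CREATE TABLE article_content (id TEXT PRIMARY KEY, article_content TEXT)")

sample = {
    "article_id": "abc123",  # made-up id for the demo
    "article_name": "Sample post",
    "article_url": "http://bbs.tianya.cn/sample",
    "article_content": "Body text",
}

first = conditional_insert(conn, sample)
second = conditional_insert(conn, sample)
row_count = conn.execute("SELECT COUNT(*) FROM article_info").fetchone()[0]

if __name__ == "__main__":
    print(first, second, row_count)
```

A unique key on id would also let the database reject duplicates by itself; the explicit check mirrors what the pipeline above does.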