一,安装模式介绍

Hive官网上介绍了Hive的3种安装方式,分别对应不同的应用场景。

a、内嵌模式(元数据保村在内嵌的derby种,允许一个会话链接,尝试多个会话链接时会报错)

b、本地模式(本地安装mysql 替代derby存储元数据)

c、远程模式(远程安装mysql 替代derby存储元数据)

二,安装准备

前提:已经安装java JDK1.7以上,hadoop可用,mysql可用。

三,下载解压

下载链接:https://mirrors.tuna.tsinghua.edu.cn/apache/hive/

cd /home tar zxvf apache-hive-2.3.6-bin.tar.gz mv apache-hive-2.3.6-bin hive

四,配置环境变量

[root@192-168-22-220 hive]# vi /etc/profile export JAVA_HOME=/usr/java/jdk1.8.0_231-amd64 export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar export HADOOP_HOME=/home/hadoop-2.10.0

export HADOOP_CONF_DIR=/home/hadoop-2.10.0/etc/hadoop export HIVE_HOME=/home/hive

export HIVE_CONF_DIR=/home/hive/conf

export PATH=$JAVA_HOME/bin:$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin

[root@192-168-22-220 hive]# source /etc/profile

五,修改Hive的配置

[root@192-168-22-220 conf]# cp hive-default.xml.template hive-site.xml [root@192-168-22-220 conf]# mkdir /home/hive/warehouse [root@192-168-22-220 conf]# vi hive-site.xml #删除里面内容,只留<configuration></configuration> 节点 #删除方法 #将光标放在<configuration>的下一行在:模式下输入.,$-1d 按回车。从当前行删除到倒数第二行 <property> <name>hive.security.authorization.enabled</name> <value>true</value> </property> <property> <name>hive.server2.enable.doAs</name> <value>false</value> </property>

<property>

<name>hive.security.authorization.createtable.owner.grants</name>

<value>ALL</value>

</property>

<property>

<name>hive.users.in.admin.role</name>

<value>root</value>

</property>

<property>

<name>hive.security.authorization.manager</name>

<value>org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAuthorizerFactory</value>

</property>

<property>

<name>hive.security.authenticator.manager</name>

<value>org.apache.hadoop.hive.ql.security.SessionStateUserAuthenticator</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.22.220:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>数据库账号</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>数据库密码</value>

</property>

六,mysql 驱动

下载:https://blog.csdn.net/weixin_41804049/article/details/87719574

把mysql-connector-java-5.1.46.jar放到hive的lib目录下

七,初始化数据库

schematool -dbType mysql -initSchema #成功的话,mysql里面会多出来hive这个数据库

报错可看。http://www.lzhpo.com/article/98

八,启动

运行hive之前要确保hadoop集群启动和MySQL服务启动正常开启的情况下

hive启动常见的有三种:

本地cli启动

#由于配置过环境变量,可以直接在命令行中输入hive 本地启动命令 hive [root@192-168-22-220 ~]# hive which: no hbase in (/usr/java/jdk1.8.0_231-amd64/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/home/hadoop-2.10.0/bin:/home/hadoop-2.10.0/sbin:/home/hive/bin:/root/bin) SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/home/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/home/hadoop-2.10.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory] Logging initialized using configuration in jar:file:/home/hive/lib/hive-common-2.3.6.jar!/hive-log4j2.properties Async: true Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases. hive>

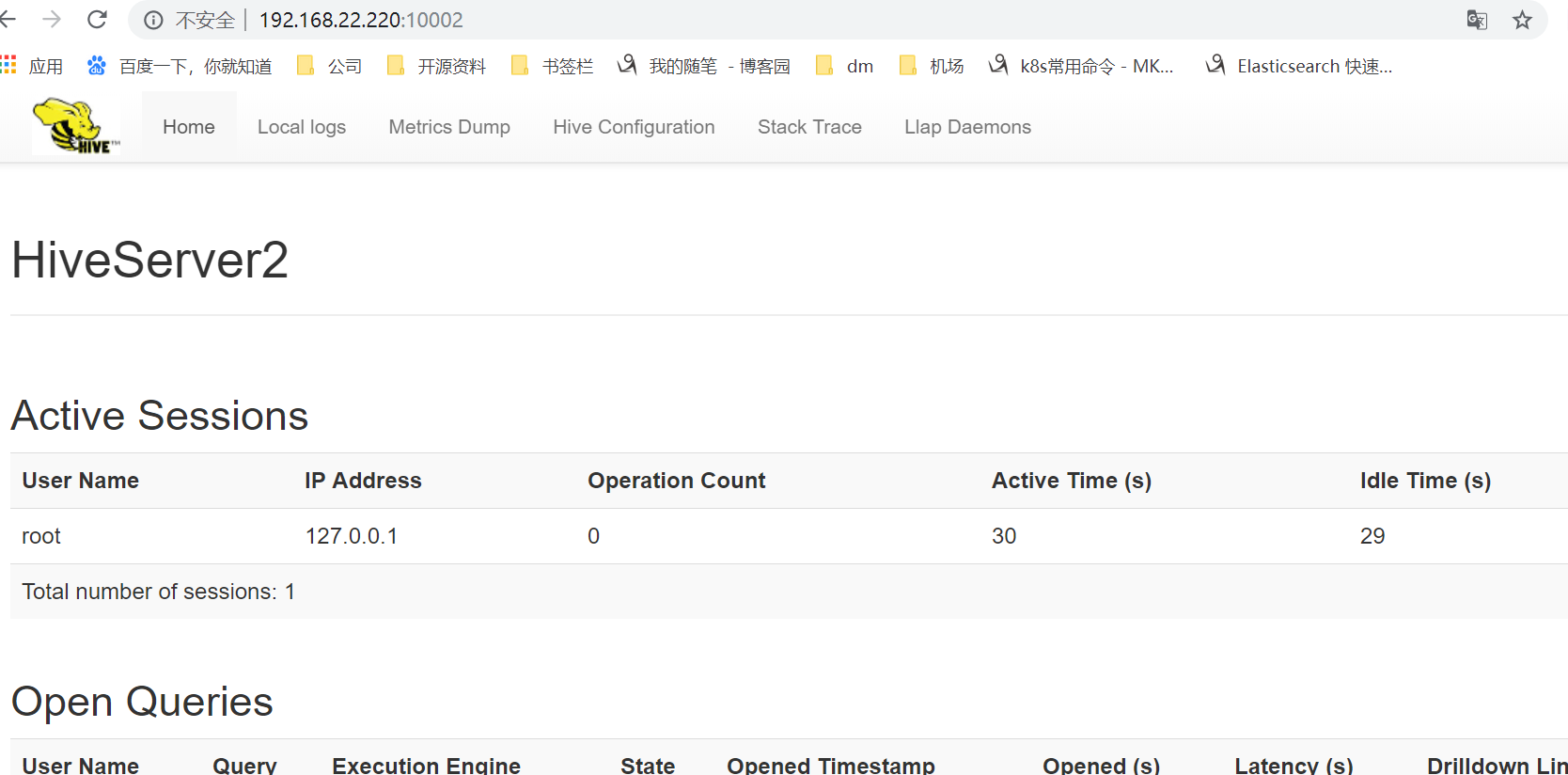

HiveServer2/beeline 客户端方式启动

由于hive启动,hadoop集群中其他节点是无法访问的,只能在hive安装的当前节点使用,若需要在其他节点操作hive,则可以用HiveServer2/beeline方式

第一:修改 hadoop 集群的 hdfs-site.xml 配置文件: #加入一条配置信息,表示启用 webhdfs (可选) <property> <name>dfs.webhdfs.enabled</name> <value>true</value> </property> #是否需要验证的配置,此处设为NONE,暂时不需要验证 (可选) <property> <name>hive.server2.authentication</name> <value>NONE</value> </property>

第二:修改 hadoop 集群的 core-site.xml 配置文件: #加入两条配置信息:表示设置 hadoop 的代理用户 <property> <name>hadoop.proxyuser.root.hosts</name> <value>*</value> </property> <property> <name>hadoop.proxyuser.root.groups</name> <value>*</value> </property>

重启 stop-all.sh start-all.sh #配置解析: hadoop.proxyuser.hadoop.hosts 配置成*的意义,表示任意节点使用 hadoop 集群的代理用户 hadoop 都能访问 hdfs 集群,

hadoop.proxyuser.hadoop.groups 表示代理用户的组所属

第一步:先启动 hiveserver2 服务 前台启动方式:hiveserver2 后台启动方式:nohup hiveserver2 1>/dev/null 2>/dev/null & 第二步:然后启动 beeline 客户端去连接: beeline -u jdbc:hive2://127.0.0.1:10000 -n hadoop -u : 指定元数据库的链接信息 -n : 指定用户名和密码 也可以先执行 beeline 然后按图所示输入:!connect jdbc:hive2://127.0.0.1:10000 按回车,然后输入用户名,这个 用户名就是安装 hadoop 集群的用户名 hiveserver端口号默认是10000 使用beeline通过jdbc连接上之后就可以像client一样操作。 hiveserver2会同时启动一个webui,端口号默认为10002,可以通过http://localhost:10002/访问 界面中可以看到Session/Query/Software等信息。(此网页只可查看,不可以操作hive数据仓库)

启动hiveWebInterface,通过网页访问hive

hive提供网页GUI来访问Hive数据仓库 可以通过以下命令启动hwi,默认端口号9999 $HIVE_HOME/bin/hive --service hwi 从Hive 2.2.0开始不再支持hwi,故此处不再赘述。

九,查看

[root@192-168-22-220 ~]# hadoop dfs -lsr /

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

lsr: DEPRECATED: Please use 'ls -R' instead.

drwx-wx-wx - root supergroup 0 2019-12-12 18:33 /tmp

drwx-wx-wx - root supergroup 0 2019-12-12 18:33 /tmp/hive

drwx------ - root supergroup 0 2019-12-13 09:54 /tmp/hive/root

十,测试hive创建库

0: jdbc:hive2://127.0.0.1:10000> create database hive1; #创建库 No rows affected (0.582 seconds) 显示库: 0: jdbc:hive2://127.0.0.1:10000> show databases; +----------------+ | database_name | +----------------+ | default | | hive1 | +----------------+ 2 rows selected (0.232 seconds) 创建库成功!

十一,再次查看

[root@192-168-22-220 ~]# hadoop dfs -lsr / DEPRECATED: Use of this script to execute hdfs command is deprecated. Instead use the hdfs command for it. lsr: DEPRECATED: Please use 'ls -R' instead. drwxr-xr-x - root supergroup 0 2019-12-13 13:17 /home drwxr-xr-x - root supergroup 0 2019-12-13 13:17 /home/hive drwxr-xr-x - root supergroup 0 2019-12-13 13:17 /home/hive/warehouse drwxr-xr-x - root supergroup 0 2019-12-13 13:17 /home/hive/warehouse/hive1.db drwx-wx-wx - root supergroup 0 2019-12-12 18:33 /tmp drwx-wx-wx - root supergroup 0 2019-12-12 18:33 /tmp/hive drwx------ - root supergroup 0 2019-12-13 11:19 /tmp/hive/root

[root@192-168-22-220 ~]# mysql -uroot -p Enter password: Welcome to the MySQL monitor. Commands end with ; or g. Your MySQL connection id is 33 Server version: 5.7.28 MySQL Community Server (GPL) Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help;' or 'h' for help. Type 'c' to clear the current input statement. mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | hive | | mysql | | performance_schema | | sys | +--------------------+ 5 rows in set (0.00 sec) mysql> select * from hive.DBS; +-------+-----------------------+---------------------------------------------------------+---------+------------+------------+ | DB_ID | DESC | DB_LOCATION_URI | NAME | OWNER_NAME | OWNER_TYPE | +-------+-----------------------+---------------------------------------------------------+---------+------------+------------+ | 1 | Default Hive database | hdfs://192.168.22.220:9000/home/hive/warehouse | default | public | ROLE | | 2 | NULL | hdfs://192.168.22.220:9000/home/hive/warehouse/hive1.db | hive1 | root | USER | +-------+-----------------------+---------------------------------------------------------+---------+------------+------------+ 2 rows in set (0.00 sec)

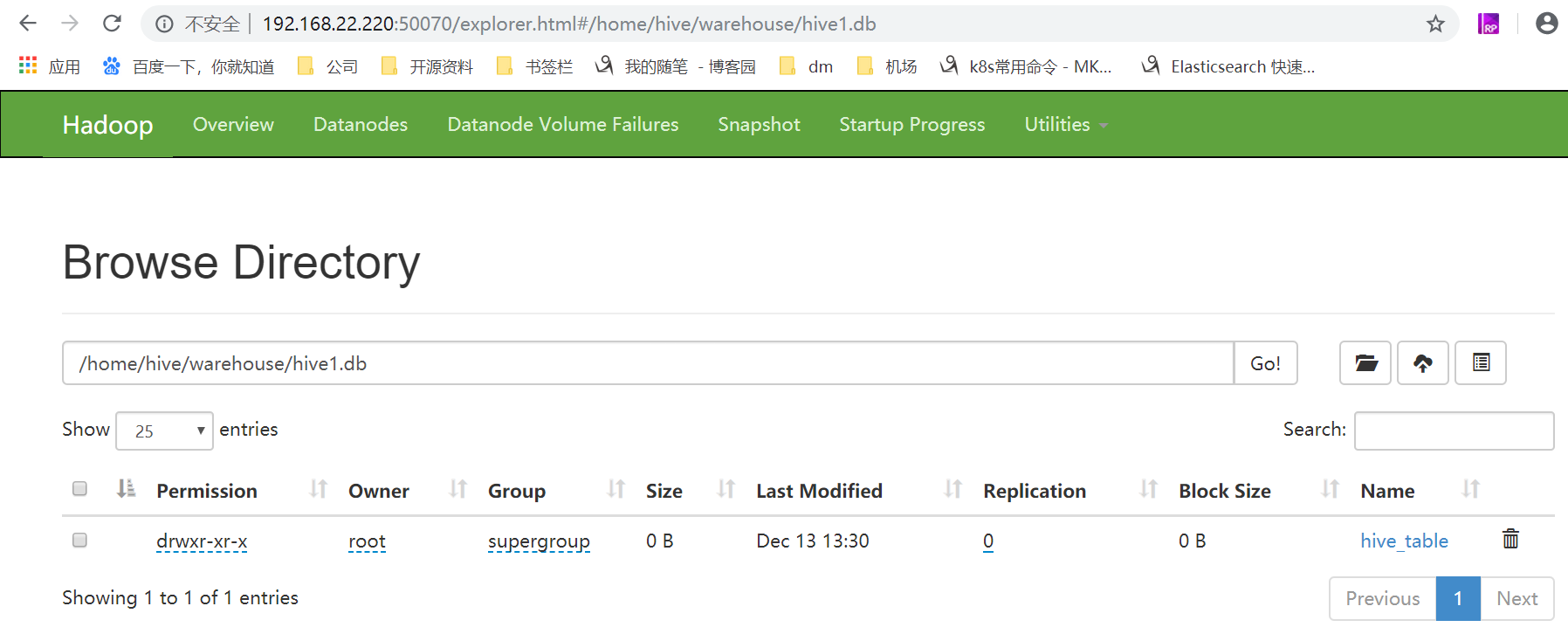

十二,测试hive创建表

0: jdbc:hive2://127.0.0.1:10000> use hive1; No rows affected (0.277 seconds) 0: jdbc:hive2://127.0.0.1:10000> create table hive_table (id int,name string); No rows affected (1.409 seconds) 0: jdbc:hive2://127.0.0.1:10000> show tables; +-------------+ | tab_name | +-------------+ | hive_table | +-------------+ 1 row selected (0.263 seconds)

0: jdbc:hive2://127.0.0.1:10000> insert into hive_table (id,name) values (1,'cgc'); WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases. No rows affected (38.199 seconds) 0: jdbc:hive2://127.0.0.1:10000> select * from hive_table; +----------------+------------------+ | hive_table.id | hive_table.name | +----------------+------------------+ | 1 | cgc | +----------------+------------------+ 1 row selected (0.575 seconds)

十三,成功展示

mysql> select * from hive.TBLS; +--------+-------------+-------+------------------+-------+-----------+-------+------------+---------------+--------------------+--------------------+--------------------+ | TBL_ID | CREATE_TIME | DB_ID | LAST_ACCESS_TIME | OWNER | RETENTION | SD_ID | TBL_NAME | TBL_TYPE | VIEW_EXPANDED_TEXT | VIEW_ORIGINAL_TEXT | IS_REWRITE_ENABLED | +--------+-------------+-------+------------------+-------+-----------+-------+------------+---------------+--------------------+--------------------+--------------------+ | 1 | 1576215017 | 2 | 0 | root | 0 | 1 | hive_table | MANAGED_TABLE | NULL | NULL | | +--------+-------------+-------+------------------+-------+-----------+-------+------------+---------------+--------------------+--------------------+--------------------+ 1 row in set (0.00 sec)

[root@192-168-22-220 ~]# hadoop dfs -lsr / DEPRECATED: Use of this script to execute hdfs command is deprecated. Instead use the hdfs command for it. lsr: DEPRECATED: Please use 'ls -R' instead. drwxr-xr-x - root supergroup 0 2019-12-13 13:17 /home drwxr-xr-x - root supergroup 0 2019-12-13 13:17 /home/hive drwxr-xr-x - root supergroup 0 2019-12-13 13:17 /home/hive/warehouse drwxr-xr-x - root supergroup 0 2019-12-13 13:30 /home/hive/warehouse/hive1.db drwxr-xr-x - root supergroup 0 2019-12-13 13:30 /home/hive/warehouse/hive1.db/hive_table drwx-wx-wx - root supergroup 0 2019-12-12 18:33 /tmp drwx-wx-wx - root supergroup 0 2019-12-12 18:33 /tmp/hive drwx------ - root supergroup 0 2019-12-13 11:19 /tmp/hive/root