1. Download the installation package: http://archive.apache.org/dist/flume/

2. Extract the archive (`<archive>` and `<target-dir>` are placeholders):

```shell
tar -zxvf <archive> -C <target-dir>
```

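3. Configure the environment variables by adding these lines to /etc/profile:

```shell
export FLUME_HOME=/opt/programs/apache-flume-1.6.0-bin
export PATH=$PATH:$FLUME_HOME/bin
```

Then reload the profile:

```shell
source /etc/profile
```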
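If you script this step, a minimal sketch like the one below appends the two lines only when they are missing, so running it twice does not duplicate them. `add_flume_env` is a hypothetical helper; it takes the profile file as its argument (default /etc/profile) and hard-codes this guide's install path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: idempotently add the Flume variables to a profile file.
# The install path is the one used in this guide; change it to match yours.
add_flume_env() {
  local profile="${1:-/etc/profile}"
  local home_line='export FLUME_HOME=/opt/programs/apache-flume-1.6.0-bin'
  # Single quotes keep $PATH and $FLUME_HOME literal in the written line
  local path_line='export PATH=$PATH:$FLUME_HOME/bin'
  local line
  for line in "$home_line" "$path_line"; do
    # -q quiet, -x whole line, -F fixed string: append only if absent
    grep -qxF -- "$line" "$profile" 2>/dev/null || printf '%s\n' "$line" >> "$profile"
  done
}
```

Usage: `add_flume_env /etc/profile && source /etc/profile && flume-ng version`, where `flume-ng version` confirms the command is now on the PATH.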

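4. In the conf/ directory, create flume-env.sh from the shell template (flume-env.ps1.template is the PowerShell variant for Windows) and open it:

```shell
cp flume-env.sh.template flume-env.sh
vi flume-env.sh
```

Adding JAVA_HOME at the bottom is all that is needed:

```shell
export JAVA_HOME=/usr/java/jdk1.8.0_25
```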
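The same step as a sketch: create flume-env.sh from the shell template flume-env.sh.template (not the .ps1 PowerShell one) only if it does not exist yet, then append the JAVA_HOME line once. `setup_flume_env` is a hypothetical helper; the JDK path in the usage line is the one from this guide.

```shell
# Hypothetical helper: prepare conf/flume-env.sh and set JAVA_HOME once.
setup_flume_env() {
  local conf_dir="$1" java_home="$2"
  local env_file="$conf_dir/flume-env.sh"
  # Copy the shell template only when flume-env.sh is not there yet
  [ -f "$env_file" ] || cp "$conf_dir/flume-env.sh.template" "$env_file" || return 1
  # Append the export line only if the exact line is not present
  grep -qxF "export JAVA_HOME=$java_home" "$env_file" \
    || echo "export JAVA_HOME=$java_home" >> "$env_file"
  # Warn (without failing) when the JDK path does not contain a java binary
  [ -x "$java_home/bin/java" ] || echo "warning: $java_home/bin/java not found" >&2
}
```

Usage: `setup_flume_env "$FLUME_HOME/conf" /usr/java/jdk1.8.0_25`.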

Note: if your Hadoop cluster runs in HA mode, copy core-site.xml and hdfs-site.xml into Flume's conf/ directory.
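A minimal sketch of that copy, assuming a Hadoop 2.x layout where the client configs live in $HADOOP_HOME/etc/hadoop (or in HADOOP_CONF_DIR). flume-ng puts the --conf directory on the agent's classpath, which is how the HDFS sink finds these files. With HA, hdfs.path should also name the nameservice (the value of dfs.nameservices, for example a hypothetical hdfs://mycluster/test/...) instead of one NameNode's host:port. `copy_hadoop_conf` is a hypothetical helper.

```shell
# Hypothetical helper: copy the HDFS client configs into Flume's conf/ dir.
copy_hadoop_conf() {
  local hadoop_conf="$1" flume_conf="$2" f
  for f in core-site.xml hdfs-site.xml; do
    # Stop at the first missing file instead of silently skipping it
    cp -- "$hadoop_conf/$f" "$flume_conf/" || return 1
  done
}
```

Usage: `copy_hadoop_conf "${HADOOP_CONF_DIR:-$HADOOP_HOME/etc/hadoop}" "$FLUME_HOME/conf"`.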

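5. In the conf/ directory, create a test configuration file, example.conf, with a netcat source, a logger sink and a memory channel (reference: http://flume.apache.org/FlumeUserGuide.html#avro-sink):

```shell
vi example.conf
```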

```properties
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 44444

# Describe the sink
a1.sinks.k1.type = logger

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

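6. Start the agent. --name must match the prefix used in the file (a1), and -Dflume.root.logger=INFO,console sends the log, including the events printed by the logger sink, to the terminal:

```shell
flume-ng agent --conf $FLUME_HOME/conf --conf-file $FLUME_HOME/conf/example.conf --name a1 -Dflume.root.logger=INFO,console
```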
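A common mistake in these files is mixing up `channels` (plural, on sources) and `channel` (singular, on sinks). The hypothetical helper below is a rough lint for that: for every source and sink named on the `<agent>.sources` and `<agent>.sinks` lines, it checks that the matching binding line exists. It is a plain grep/sed text check, not Flume's own validation, and assumes one `key = value` per line as in this guide.

```shell
# Hypothetical lint: for every source/sink an agent declares, check that the
# binding line exists. Sources use ".channels" (plural), sinks ".channel".
check_flume_wiring() {
  local file="$1" agent="$2" status=0 name
  # "a1.sources = r1" -> r1 (word splitting handles several names)
  for name in $(sed -n "s/^${agent}\.sources *= *//p" "$file"); do
    if ! grep -q "^${agent}\.sources\.${name}\.channels *=" "$file"; then
      echo "source $name: missing ${agent}.sources.${name}.channels"
      status=1
    fi
  done
  for name in $(sed -n "s/^${agent}\.sinks *= *//p" "$file"); do
    if ! grep -q "^${agent}\.sinks\.${name}\.channel *=" "$file"; then
      echo "sink $name: missing ${agent}.sinks.${name}.channel"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `check_flume_wiring $FLUME_HOME/conf/example.conf a1 && echo "wiring ok"`. The same call works for any other agent file in this guide.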

7. Install telnet:

```shell
yum -y install telnet
```

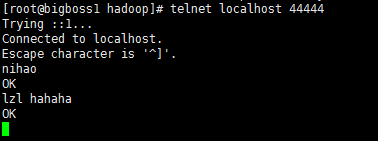

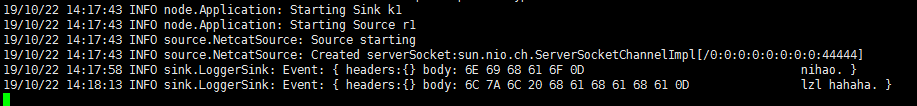

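8. Test the connection:

```shell
telnet localhost 44444
```

Each line typed in telnet becomes one event: the netcat source replies OK, and the agent console shows the event logged by the logger sink.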

9. Once the single node works, copy the installation to the other nodes.
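A sketch of that copy, assuming passwordless SSH as root and the same /opt/programs layout on every node; the host names in the usage line are hypothetical. Each node still needs the environment variables from step 3 and a JDK at the JAVA_HOME set in flume-env.sh. Setting DRY_RUN=1 only prints the scp commands.

```shell
# Hypothetical helper: copy the Flume install directory to the same parent
# path on each host. Assumes passwordless SSH as root. DRY_RUN=1 only prints.
FLUME_HOME=${FLUME_HOME:-/opt/programs/apache-flume-1.6.0-bin}

distribute_flume() {
  local host parent
  parent=$(dirname "$FLUME_HOME")
  for host in "$@"; do
    # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is set and non-empty
    ${DRY_RUN:+echo} scp -r "$FLUME_HOME" "root@${host}:${parent}/"
  done
}
```

Usage: run `DRY_RUN=1 distribute_flume bigboss2 bigboss3` first to review the commands, then the same call without DRY_RUN.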

Test: collecting the files in a directory with Flume

1. Create the directory to be “watched”:

```
[root@bigboss1 opt]# mkdir flume-dir
[root@bigboss1 opt]# ll
total 16
-rw-r--r--. 1 root root 23 Oct 15 15:56 exam.csv
drwxr-xr-x. 2 root root 6 Oct 22 18:49 flume-dir
drwxr-xr-x. 8 root root 4096 Oct 22 11:34 programs
drwxr-xr-x. 2 root root 4096 Oct 22 11:33 targz
drwxr-xr-x. 4 root root 32 Sep 27 09:43 tasks
drwxr-xr-x. 2 root root 4096 Sep 28 08:40 txts
[root@bigboss1 opt]# cd flume-dir/
[root@bigboss1 flume-dir]# pwd
/opt/flume-dir
```

2. In Flume's conf/ directory, create the file example-dir.conf:

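```properties
a1.channels = ch1
a1.sources = src1
a1.sinks = k1

# Spooling directory source: ingests each new file placed in /opt/flume-dir
a1.sources.src1.type = spooldir
a1.sources.src1.channels = ch1
a1.sources.src1.spoolDir = /opt/flume-dir
# Add the absolute path of the source file to each event's headers
a1.sources.src1.fileHeader = true
# Skip files whose names match this regex (the .tmp files)
a1.sources.src1.ignorePattern = ([^ ]*.tmp)

# HDFS sink: one directory per day and hour
a1.sinks.k1.type = hdfs
a1.sinks.k1.channel = ch1
a1.sinks.k1.hdfs.path = hdfs://bigboss1:9000/test/flume-events1/%y-%m-%d/%H
a1.sinks.k1.hdfs.filePrefix = events1-
# Round the timestamp down to a multiple of 60 minutes
a1.sinks.k1.hdfs.round = true
a1.sinks.k1.hdfs.roundValue = 60
a1.sinks.k1.hdfs.roundUnit = minute
# Use the agent's local time instead of a timestamp header on the event
a1.sinks.k1.hdfs.useLocalTimeStamp = true
# Write plain text instead of the default SequenceFile
a1.sinks.k1.hdfs.fileType = DataStream
# Roll (close) a file after 600 s or about 128 MiB; rollCount = 0 disables count-based rolling
a1.sinks.k1.hdfs.rollInterval = 600
a1.sinks.k1.hdfs.rollSize = 134217700
a1.sinks.k1.hdfs.rollCount = 0
# Minimum replicas per HDFS block (taken from the Hadoop config when unset)
a1.sinks.k1.hdfs.minBlockReplicas = 1

# Memory channel
a1.channels.ch1.type = memory
a1.channels.ch1.capacity = 1000
a1.channels.ch1.transactionCapacity = 100
```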

3. Run the Flume agent (the trailing & runs it in the background):

```shell
flume-ng agent --conf $FLUME_HOME/conf --name a1 --conf-file $FLUME_HOME/conf/example-dir.conf &
```
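Per the Flume User Guide, the spooling directory source expects every file to be complete and unchanged once it appears, and file names must not be reused; otherwise the agent logs an error and stops processing. Editing files in place with vi works for a quick test, but a safer pattern is to write the file elsewhere and rename it in. The hypothetical helper `drop_into_spool` below stages the copy under a .tmp name, which this guide's ignorePattern makes the source skip, then renames it within the same directory. It assumes the file name has no spaces, since `[^ ]*` would not match one.

```shell
# Hypothetical helper: move a finished file into the spooling directory.
# Assumes the name has no spaces, so "<name>.tmp" matches ([^ ]*.tmp).
drop_into_spool() {
  local src="$1" spool="$2" name
  name=$(basename "$src")
  # The spooling source must never see the same file name twice
  if [ -e "$spool/$name" ] || [ -e "$spool/$name.COMPLETED" ]; then
    echo "refusing: $name was already dropped into $spool" >&2
    return 1
  fi
  # 1) copy under a .tmp name that ignorePattern makes the source skip
  cp -- "$src" "$spool/$name.tmp" || return 1
  # 2) rename within the same directory (same filesystem), an atomic step
  mv -- "$spool/$name.tmp" "$spool/$name"
}
```

Usage: `printf 'i am ok\nare you ok?\n' > /tmp/mytxt.txt && drop_into_spool /tmp/mytxt.txt /opt/flume-dir`.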

4. Create files in the “watched” directory:

```
[root@bigboss1 flume-dir]# vi mytxt.txt
[root@bigboss1 flume-dir]# vi mytmp.tmp
[root@bigboss1 flume-dir]# ll
total 8
-rw-r--r--. 1 root root 14 Oct 22 19:11 mytmp.tmp
-rw-r--r--. 1 root root 20 Oct 22 19:11 mytxt.txt.COMPLETED
```

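mytxt.txt contains:

```
i am ok
are you ok?
```

and mytmp.tmp contains:

```
hello flume!
```

The .tmp file is ignored because of `a1.sources.src1.ignorePattern = ([^ ]*.tmp)`. mytxt.txt was picked up, and once it was fully ingested the source renamed it to mytxt.txt.COMPLETED, as the listing shows.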
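The dot in `([^ ]*.tmp)` is not escaped, so it matches any character: a file named notes_tmp would be skipped too, while a .tmp file with a space in its name would not. The sketch below tests a pattern against sample names with `grep -Ex`, assuming the spooling source, like `-x`, requires the pattern to match the whole file name; POSIX ERE and Java regex agree for patterns this simple, but not in general. For a stricter pattern in the config, double the backslash, since Flume reads the file as Java properties and a lone backslash is dropped: `a1.sources.src1.ignorePattern = ^.*\\.tmp$`. `ignored_names` is a hypothetical helper.

```shell
# Hypothetical helper: print the names read from stdin that PATTERN matches
# in full (-x), using extended regex (-E).
ignored_names() {
  grep -Ex -- "$1"
}

printf '%s\n' mytxt.txt mytmp.tmp notes_tmp 'my notes.tmp' | ignored_names '([^ ]*.tmp)'
# -> mytmp.tmp, notes_tmp
printf '%s\n' mytxt.txt mytmp.tmp notes_tmp 'my notes.tmp' | ignored_names '.*\.tmp'
# -> mytmp.tmp, my notes.tmp
```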

The agent log then shows the file being ingested and written to HDFS:

```
19/10/22 19:11:21 INFO avro.ReliableSpoolingFileEventReader: Preparing to move file /opt/flume-dir/mytxt.txt to /opt/flume-dir/mytxt.txt.COMPLETED
19/10/22 19:11:22 INFO hdfs.HDFSDataStream: Serializer = TEXT, UseRawLocalFileSystem = false
19/10/22 19:11:22 INFO hdfs.BucketWriter: Creating hdfs://bigboss1:9000/test/flume-events1/19-10-22/19/events1-.1571742682004.tmp
19/10/22 19:21:24 INFO hdfs.BucketWriter: Closing hdfs://bigboss1:9000/test/flume-events1/19-10-22/19/events1-.1571742682004.tmp
```

The HDFS file is closed about 600 seconds after it was created (hdfs.rollInterval = 600), which matches the 19:11:22 and 19:21:24 timestamps.
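The path in the log can be traced back to the sink settings: `%y-%m-%d/%H` is filled from the agent's local time (useLocalTimeStamp = true), then comes filePrefix, then a number that here equals the creation time in epoch milliseconds (1571742682004 is 2019-10-22 19:11:22.004 at UTC+8), then the .tmp suffix of a file that is still open. The sketch below rebuilds the same string; `hdfs_tmp_path` is a hypothetical helper, and it assumes GNU date (for `-d @SECONDS`) and a UTC+8 agent time zone.

```shell
# Hypothetical helper: rebuild the in-progress HDFS file name for a given
# creation time in epoch milliseconds. Requires GNU date (-d @SECONDS).
hdfs_tmp_path() {
  local ms="$1" dir
  # %y-%m-%d/%H from hdfs.path, evaluated in the current TZ (local time).
  # Rounding down to 60 minutes leaves %H unchanged, so it is skipped here.
  dir=$(date -d "@$(( ms / 1000 ))" +%y-%m-%d/%H)
  printf 'hdfs://bigboss1:9000/test/flume-events1/%s/events1-.%s.tmp\n' "$dir" "$ms"
}

# CST-8 is a POSIX TZ string meaning UTC+8
( export TZ=CST-8; hdfs_tmp_path 1571742682004 )
# -> hdfs://bigboss1:9000/test/flume-events1/19-10-22/19/events1-.1571742682004.tmp
```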

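5. View the file on HDFS:

```
[root@bigboss1 flume-dir]# hdfs dfs -cat /test/flume-events1/19-10-22/19/events1-.1571742682004.tmp
i am ok
are you ok?
[root@bigboss1 flume-dir]#
```

Once the file is closed, Flume renames it without the .tmp suffix, so this path only works while the file is still open; afterwards, list the directory with `hdfs dfs -ls /test/flume-events1/19-10-22/19` to find its current name.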

Well, that's it!