1 Container resource limits in a Pod

1.1 What is limited

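Two settings are involved:

QoS Class: BestEffort means the Pod is given resources on a best-effort basis. This is the lowest priority class: when the node runs short of resources, these containers are killed.

If resources: {} is left empty, the container falls under the BestEffort class described above.

A typical definition:

    resources:
      requests:          # minimum required
        cpu: "0.1"
        memory: "32Mi"
      limits:            # maximum allowed
        cpu: "1"
        memory: "128Mi"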
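CPU quantities can also be written in millicores: 0.1 CPU is the same as 100m, which is the form kubectl describe uses when it reports requests. A minimal sketch of the same limits in that notation, assuming a hypothetical Pod named resource-units-demo (the name and the sleep command are illustrative and not from the original setup):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: resource-units-demo   # hypothetical name, for illustration only
spec:
  containers:
  - name: busybox
    image: busybox
    command: ["sh", "-c", "sleep 3600"]
    resources:
      requests:
        cpu: "100m"        # 100 millicores, equivalent to cpu: "0.1"
        memory: "32Mi"     # 32 mebibytes
      limits:
        cpu: "1000m"       # equivalent to cpu: "1"
        memory: "128Mi"
```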

1.2 Configuring resource limits

[root@docker-server1 pods]# vim nginx-pods.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        test: this is a test app
      labels:
        app: nginx
      name: nginx
      namespace: default
    spec:
      containers:
      - env:
        - name: test
          value: aaa
        - name: test1
          value: bbb
        image: nginx
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 80
          hostPort: 8080
          protocol: TCP
        resources:
          requests:
            cpu: "0.2"
            memory: "128Mi"
          limits:
            cpu: "2"
            memory: "2Gi"
      - command:
        - sh
        - -c
        - sleep 3600
        image: busybox
        imagePullPolicy: Always
        name: busybox
        resources:
          requests:
            cpu: "0.1"
            memory: "32Mi"
          limits:
            cpu: "1"
            memory: "128Mi"
      restartPolicy: Always

[root@docker-server1 pods]# kubectl delete pod nginx

1.3 Creating the Pod

[root@docker-server1 pods]# kubectl apply -f nginx-pods.yaml

[root@docker-server1 pods]# kubectl get pods

    NAME    READY   STATUS    RESTARTS   AGE
    nginx   2/2     Running   0          26s

[root@docker-server1 pods]# kubectl get pods -o wide

    NAME    READY   STATUS    RESTARTS   AGE   IP           NODE              NOMINATED NODE   READINESS GATES
    nginx   2/2     Running   0          32s   10.244.2.9   192.168.132.133   <none>           <none>

1.4 Viewing the resource limits

[root@docker-server1 pods]# kubectl describe pods nginx

    Name:         nginx
    Namespace:    default
    Priority:     0
    Node:         192.168.132.133/192.168.132.133
    Start Time:   Thu, 09 Jan 2020 19:00:56 -0500
    Labels:       app=nginx
    Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                    {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{"test":"this is a test app"},"labels":{"app":"nginx"},"name":"nginx","namespace...
                  test: this is a test app
    Status:       Running
    IP:           10.244.2.9
    IPs:
      IP:  10.244.2.9
    Containers:
      nginx:
        Container ID:   docker://80287ddae6b23bbb066246a00e1d764182517ae065015fa56017ecc8627f7bd5
        Image:          nginx
        Image ID:       docker-pullable://nginx@sha256:8aa7f6a9585d908a63e5e418dc5d14ae7467d2e36e1ab4f0d8f9d059a3d071ce
        Port:           80/TCP
        Host Port:      8080/TCP
        State:          Running
          Started:      Thu, 09 Jan 2020 19:01:03 -0500
        Ready:          True
        Restart Count:  0
        Limits:
          cpu:     2
          memory:  2Gi
        Requests:
          cpu:     200m
          memory:  128Mi
        Environment:
          test:   aaa
          test1:  bbb
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-bwbrn (ro)
      busybox:
        Container ID:  docker://91ac7538f51d8ffa33328dc0af2e2b7ef094d5439e948b0fc849f444d94ec61b
        Image:         busybox
        Image ID:      docker-pullable://busybox@sha256:6915be4043561d64e0ab0f8f098dc2ac48e077fe23f488ac24b665166898115a
        Port:          <none>
        Host Port:     <none>
        Command:
          sh
          -c
          sleep 3600
        State:          Running
          Started:      Thu, 09 Jan 2020 19:01:08 -0500
        Ready:          True
        Restart Count:  0
        Limits:
          cpu:     1
          memory:  128Mi
        Requests:
          cpu:        100m
          memory:     32Mi
        Environment:  <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-bwbrn (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      default-token-bwbrn:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-bwbrn
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type    Reason     Age    From                      Message
      ----    ------     ----   ----                      -------
      Normal  Pulling    2m37s  kubelet, 192.168.132.133  Pulling image "nginx"
      Normal  Scheduled  2m35s  default-scheduler         Successfully assigned default/nginx to 192.168.132.133
      Normal  Pulled     2m31s  kubelet, 192.168.132.133  Successfully pulled image "nginx"
      Normal  Created    2m31s  kubelet, 192.168.132.133  Created container nginx
      Normal  Started    2m31s  kubelet, 192.168.132.133  Started container nginx
      Normal  Pulling    2m31s  kubelet, 192.168.132.133  Pulling image "busybox"
      Normal  Pulled     2m26s  kubelet, 192.168.132.133  Successfully pulled image "busybox"
      Normal  Created    2m26s  kubelet, 192.168.132.133  Created container busybox
      Normal  Started    2m26s  kubelet, 192.168.132.133  Started container busybox

1.5 Configuring only requests or only limits

[root@docker-server1 pods]# vim nginx-pods.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        test: this is a test app
      labels:
        app: nginx
      name: nginx
      namespace: default
    spec:
      containers:
      - env:
        - name: test
          value: aaa
        - name: test1
          value: bbb
        image: nginx
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 80
          hostPort: 8080
          protocol: TCP
        resources:
          requests:
            cpu: "0.2"
            memory: "128Mi"
      - command:
        - sh
        - -c
        - sleep 3600
        image: busybox
        imagePullPolicy: Always
        name: busybox
        resources:
          limits:
            cpu: "1"
            memory: "128Mi"
      restartPolicy: Always

[root@docker-server1 pods]# kubectl delete pod nginx

[root@docker-server1 pods]# kubectl apply -f nginx-pods.yaml

[root@docker-server1 pods]# kubectl describe pods nginx

    Name:         nginx
    Namespace:    default
    Priority:     0
    Node:         192.168.132.133/192.168.132.133
    Start Time:   Thu, 09 Jan 2020 19:07:55 -0500
    Labels:       app=nginx
    Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                    {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{"test":"this is a test app"},"labels":{"app":"nginx"},"name":"nginx","namespace...
                  test: this is a test app
    Status:       Pending
    IP:
    IPs:          <none>
    Containers:
      nginx:
        Container ID:
        Image:          nginx
        Image ID:
        Port:           80/TCP
        Host Port:      8080/TCP
        State:          Waiting
          Reason:       ContainerCreating
        Ready:          False
        Restart Count:  0
        Requests:
          cpu:     200m
          memory:  128Mi
        Environment:
          test:   aaa
          test1:  bbb
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-bwbrn (ro)
      busybox:
        Container ID:
        Image:         busybox
        Image ID:
        Port:          <none>
        Host Port:     <none>
        Command:
          sh
          -c
          sleep 3600
        State:          Waiting
          Reason:       ContainerCreating
        Ready:          False
        Restart Count:  0
        Limits:
          cpu:     1
          memory:  128Mi
        Requests:             # requests were defined automatically
          cpu:        1
          memory:     128Mi
        Environment:  <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-bwbrn (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      default-token-bwbrn:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-bwbrn
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type    Reason     Age   From                      Message
      ----    ------     ----  ----                      -------
      Normal  Pulling    7s    kubelet, 192.168.132.133  Pulling image "nginx"
      Normal  Scheduled  6s    default-scheduler         Successfully assigned default/nginx to 192.168.132.133

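This shows that when only limits are defined, Kubernetes automatically sets requests to the same values as the limits. The reverse does not hold: when only requests are defined, no limits are set.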
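One consequence of this defaulting is worth spelling out. If every container in a Pod specifies only limits for both CPU and memory, the requests end up equal to the limits, and Kubernetes classifies such a Pod as QoS class Guaranteed rather than Burstable. A minimal sketch, assuming a hypothetical Pod name (qos-guaranteed-demo is not part of the original experiment):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: qos-guaranteed-demo   # hypothetical name, for illustration only
spec:
  containers:
  - name: busybox
    image: busybox
    command: ["sh", "-c", "sleep 3600"]
    resources:
      limits:              # only limits are given; requests default to the same values
        cpu: "1"
        memory: "128Mi"
```

Running kubectl describe pods qos-guaranteed-demo should then report identical Limits and Requests for the container, with the QoS Class line showing Guaranteed.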

2 Container health checks in Pods

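2.1 Types of health checks

A Pod checks the health of its containers with two kinds of probes, LivenessProbe and ReadinessProbe:

1 LivenessProbe determines whether a container is healthy. If the LivenessProbe finds the container unhealthy, the kubelet kills the container and then acts according to the container's restart policy. If a container defines no LivenessProbe, the kubelet treats its LivenessProbe as always returning success.

2 ReadinessProbe determines whether a container has finished starting and is ready to accept requests. If this probe fails, the Endpoint Controller removes the entry for the Pod that contains the container from the Service's Endpoints.

Example configuration using httpGet: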

    livenessProbe:
      httpGet:
        path: /
        port: 80
        httpHeaders:
        - name: X-Custom-Header
          value: Awesome
      initialDelaySeconds: 3   # delay before the first check after the container starts
      periodSeconds: 3         # probe every 3 seconds

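The check is roughly equivalent to running curl -H "X-Custom-Header: Awesome" http://<pod-ip>:80/, that is, using curl to request the root path on port 80 of the Pod with a custom header.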

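Probe parameters:

- initialDelaySeconds: number of seconds to wait after the container starts before liveness and readiness probes are initiated. Default is 0 seconds; minimum is 0.

- periodSeconds: interval between probes, in seconds. Default is 10 seconds; minimum is 1.

- timeoutSeconds: number of seconds after which a probe times out. Default is 1 second; minimum is 1.

- successThreshold: minimum number of consecutive successes for the probe to be considered successful after it has failed. Default is 1; must be 1 for liveness probes; minimum is 1.

- failureThreshold: once the Pod has started and a probe fails, the number of times Kubernetes retries before giving up. Giving up on a liveness probe means restarting the container; giving up on a readiness probe means the Pod is marked as not ready. Default is 3; minimum is 1.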
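To see how these parameters fit together, here is a minimal sketch of a Pod that sets all five on one liveness probe. The Pod name and the specific values are hypothetical and chosen only for illustration; they are not taken from the experiments in this article:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-params-demo     # hypothetical name, for illustration only
spec:
  containers:
  - name: web
    image: nginx
    ports:
    - containerPort: 80
    livenessProbe:
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 10   # wait 10s after the container starts before the first probe
      periodSeconds: 5          # then probe every 5s
      timeoutSeconds: 2         # a probe that gets no answer within 2s counts as a failure
      successThreshold: 1       # must be 1 for liveness probes
      failureThreshold: 3       # restart the container after 3 consecutive failures
```

With these illustrative values, a container that stops answering is restarted only after three consecutive failed probes spaced 5 seconds apart, so a single slow response does not trigger a restart.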

2.2 Defining a liveness HTTP request

Example configuration:

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        test: liveness
      name: liveness-http
    spec:
      containers:
      - name: liveness
        image: k8s.gcr.io/liveness
        args:
        - /server
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            httpHeaders:
            - name: X-Custom-Header
              value: Awesome
          initialDelaySeconds: 3
          periodSeconds: 3

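In this configuration file, the Pod has a single container. The periodSeconds field tells the kubelet to run a liveness probe every 3 seconds. The initialDelaySeconds field tells the kubelet to wait 3 seconds before running the first probe. To run the probe, the kubelet sends an HTTP GET request to the server running in the container, which listens on port 8080. If the handler for the /healthz path returns a success code, the kubelet considers the container alive and healthy. If the handler returns a failure code, the kubelet kills the container and restarts it.

Any return code greater than or equal to 200 and less than 400 indicates success; any other code indicates failure.

HTTP probes accept additional fields on httpGet:

- host: host name to connect to; defaults to the Pod's IP. You can set "Host" in the HTTP headers instead.

- scheme: scheme used to connect to the host (HTTP or HTTPS). Default is HTTP.

- path: path to access on the HTTP server.

- httpHeaders: custom headers to set in the request. HTTP allows repeated headers.

- port: number or name of the port to access on the container. A number must be in the range 1 to 65535.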

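For an HTTP probe, the kubelet sends an HTTP request to the specified path and port to perform the check. The kubelet sends the probe to the Pod's IP address unless the host field in httpGet overrides it. If the scheme field is set to HTTPS, the kubelet sends an HTTPS request and skips certificate verification. In most cases you do not need to set the host field. One scenario where you do: the container listens on 127.0.0.1 and the Pod's hostNetwork field is true. In that case the host field under httpGet should be set to 127.0.0.1. A probably more common case is a Pod that relies on virtual hosts; there you should not set host, but should set the Host header in httpHeaders instead.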
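The virtual-host case can be sketched as follows. The Pod name and the host name www.example.com are hypothetical placeholders; the point is only that the Host header goes under httpHeaders while the host field is left unset, so the kubelet still connects to the Pod's IP:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: vhost-probe-demo      # hypothetical name, for illustration only
spec:
  containers:
  - name: web
    image: nginx
    ports:
    - containerPort: 80
    livenessProbe:
      httpGet:
        path: /
        port: 80
        # no "host" field: the kubelet connects to the Pod IP as usual
        httpHeaders:
        - name: Host
          value: www.example.com   # hypothetical virtual host name
      initialDelaySeconds: 3
      periodSeconds: 3
```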

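2.3 Command-based probes

Many applications that run for a long time eventually transition to a broken state and cannot recover except by being restarted. Kubernetes provides liveness probes to detect and remedy such situations.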

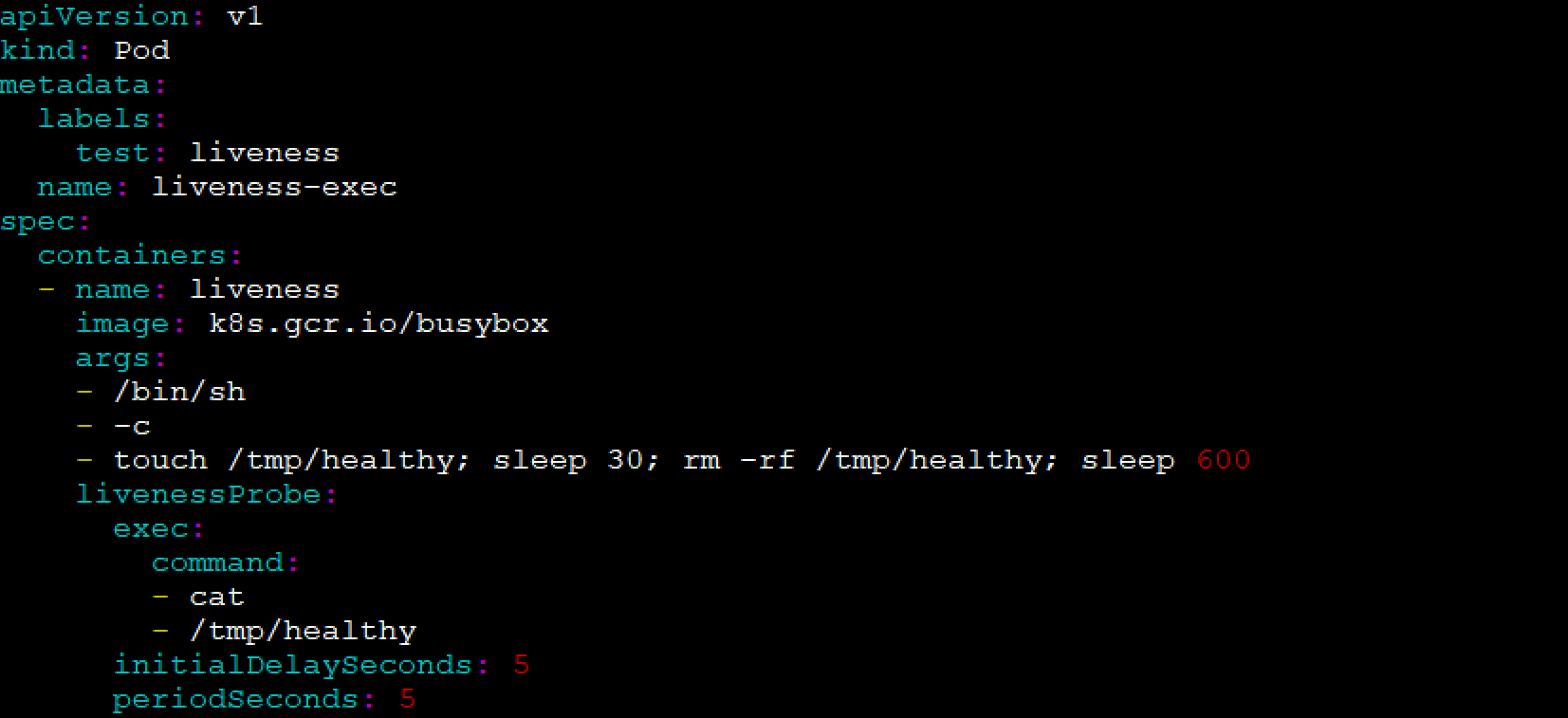

Example configuration:

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        test: liveness
      name: liveness-exec
    spec:
      containers:
      - name: liveness
        image: k8s.gcr.io/busybox
        args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5
          periodSeconds: 5

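In this configuration file, the Pod has a single container. The periodSeconds field tells the kubelet to run a liveness probe every 5 seconds. The initialDelaySeconds field tells the kubelet to wait 5 seconds before running the first probe. To run the probe, the kubelet executes the command cat /tmp/healthy inside the container. If the command succeeds and returns 0, the kubelet considers the container alive and healthy. If the command returns a non-zero value, the kubelet kills the container and restarts it.

When the container starts, it runs the following command: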

/bin/sh -c "touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600"

2.4 Hands-on test

[root@docker-server1 pods]# vim busybox-healthcheck.yaml

[root@docker-server1 pods]# kubectl apply -f busybox-healthcheck.yaml

[root@docker-server1 pods]# kubectl get pods

    NAME            READY   STATUS    RESTARTS   AGE
    liveness-exec   1/1     Running   0          9s
    nginx           2/2     Running   1          97m

[root@docker-server1 pods]# kubectl get pods

    NAME            READY   STATUS    RESTARTS   AGE
    liveness-exec   1/1     Running   1          79s    # one restart has already happened
    nginx           2/2     Running   1          98m

[root@docker-server1 pods]# kubectl describe pods liveness-exec

    Name:         liveness-exec
    Namespace:    default
    Priority:     0
    Node:         192.168.132.132/192.168.132.132
    Start Time:   Thu, 09 Jan 2020 20:45:50 -0500
    Labels:       test=liveness
    Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                    {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"liveness"},"name":"liveness-exec","namespace":"default"},"s...
    Status:       Running
    IP:           10.244.1.6
    IPs:
      IP:  10.244.1.6
    Containers:
      liveness:
        Container ID:  docker://b864e74fd7fc0c16f39d7b8ecaec1d771c5a63139fe4907b5d07389cc88d9f86
        Image:         k8s.gcr.io/busybox
        Image ID:      docker-pullable://k8s.gcr.io/busybox@sha256:d8d3bc2c183ed2f9f10e7258f84971202325ee6011ba137112e01e30f206de67
        Port:          <none>
        Host Port:     <none>
        Args:
          /bin/sh
          -c
          touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
        State:          Running
          Started:      Thu, 09 Jan 2020 20:48:24 -0500
        Last State:     Terminated
          Reason:       Error
          Exit Code:    137
          Started:      Thu, 09 Jan 2020 20:47:08 -0500
          Finished:     Thu, 09 Jan 2020 20:48:22 -0500
        Ready:          True
        Restart Count:  2
        Liveness:       exec [cat /tmp/healthy] delay=5s timeout=1s period=5s #success=1 #failure=3
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-bwbrn (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      default-token-bwbrn:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-bwbrn
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type     Reason     Age                        From                      Message
      ----     ------     ----                       ----                      -------
      Normal   Scheduled  2m36s                      default-scheduler         Successfully assigned default/liveness-exec to 192.168.132.132
      Warning  Unhealthy  3s (x6 over 87s)           kubelet, 192.168.132.132  Liveness probe failed: cat: can't open '/tmp/healthy': No such file or directory   # an unhealthy state has been detected here
      Normal   Killing    3s (x2 over 78s)           kubelet, 192.168.132.132  Container liveness failed liveness probe, will be restarted
      Normal   Pulling    <invalid> (x3 over 2m3s)   kubelet, 192.168.132.132  Pulling image "k8s.gcr.io/busybox"
      Normal   Pulled     <invalid> (x3 over 119s)   kubelet, 192.168.132.132  Successfully pulled image "k8s.gcr.io/busybox"
      Normal   Created    <invalid> (x3 over 119s)   kubelet, 192.168.132.132  Created container liveness
      Normal   Started    <invalid> (x3 over 119s)   kubelet, 192.168.132.132  Started container liveness

Here the container is restarted after being killed. Next, change the configuration so that it is not restarted.

[root@docker-server1 pods]# kubectl delete pods liveness-exec

[root@docker-server1 pods]# vi busybox-healthcheck.yaml
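The edited file is not shown in the original. One way to obtain the behaviour seen in the output that follows (status Error with zero restarts) is to set restartPolicy: Never on the Pod. The manifest below is a sketch under that assumption; everything else is copied from the liveness-exec example in section 2.3:

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-exec
spec:
  restartPolicy: Never   # assumed change; the default is Always
  containers:
  - name: liveness
    image: k8s.gcr.io/busybox
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5
```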

[root@docker-server1 pods]# kubectl apply -f busybox-healthcheck.yaml

[root@docker-server1 pods]# kubectl get pods

    NAME            READY   STATUS    RESTARTS   AGE
    liveness-exec   1/1     Running   0          7s
    nginx           2/2     Running   1          109m

[root@docker-server1 pods]# kubectl get pods

    NAME            READY   STATUS    RESTARTS   AGE
    liveness-exec   0/1     Error     0          2m11s   # has already failed
    nginx           2/2     Running   1          111m

[root@docker-server1 pods]# kubectl describe pods liveness-exec

    Name:         liveness-exec
    Namespace:    default
    Priority:     0
    Node:         192.168.132.132/192.168.132.132
    Start Time:   Thu, 09 Jan 2020 20:57:54 -0500
    Labels:       test=liveness
    Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                    {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"liveness"},"name":"liveness-exec","namespace":"default"},"s...
    Status:       Failed
    IP:           10.244.1.7
    IPs:
      IP:  10.244.1.7
    Containers:
      liveness:
        Container ID:  docker://d1bc23c8d6ef3e773ebbfeeff058eea39f1363a046df58da47e05e247c28b159
        Image:         k8s.gcr.io/busybox
        Image ID:      docker-pullable://k8s.gcr.io/busybox@sha256:d8d3bc2c183ed2f9f10e7258f84971202325ee6011ba137112e01e30f206de67
        Port:          <none>
        Host Port:     <none>
        Args:
          /bin/sh
          -c
          touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
        State:          Terminated
          Reason:       Error
          Exit Code:    137
          Started:      Thu, 09 Jan 2020 20:57:56 -0500
          Finished:     Thu, 09 Jan 2020 20:59:10 -0500
        Ready:          False
        Restart Count:  0
        Liveness:       exec [cat /tmp/healthy] delay=5s timeout=1s period=5s #success=1 #failure=3
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-bwbrn (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      default-token-bwbrn:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-bwbrn
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type     Reason     Age                From                      Message
      ----     ------     ----               ----                      -------
      Normal   Scheduled  2m9s               default-scheduler         Successfully assigned default/liveness-exec to 192.168.132.132
      Normal   Pulling    96s                kubelet, 192.168.132.132  Pulling image "k8s.gcr.io/busybox"
      Normal   Pulled     95s                kubelet, 192.168.132.132  Successfully pulled image "k8s.gcr.io/busybox"
      Normal   Created    95s                kubelet, 192.168.132.132  Created container liveness
      Normal   Started    95s                kubelet, 192.168.132.132  Started container liveness
      Warning  Unhealthy  51s (x3 over 61s)  kubelet, 192.168.132.132  Liveness probe failed: cat: can't open '/tmp/healthy': No such file or directory
      Normal   Killing    51s                kubelet, 192.168.132.132  Stopping container liveness

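2.5 Defining a TCP liveness probe

The third type of liveness probe uses a TCP socket. With this configuration, the kubelet attempts to open a socket to the container on the specified port. If it can establish a connection, the container is considered healthy; if it cannot, the container is considered to have a problem.

Example: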

    apiVersion: v1
    kind: Pod
    metadata:
      name: goproxy
      labels:
        app: goproxy
    spec:
      containers:
      - name: goproxy
        image: k8s.gcr.io/goproxy:0.1
        ports:
        - containerPort: 8080
        readinessProbe:
          tcpSocket:
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 10
        livenessProbe:
          tcpSocket:
            port: 8080
          initialDelaySeconds: 15
          periodSeconds: 20

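The configuration of a TCP check is very similar to that of an HTTP check. This example uses both a readiness probe and a liveness probe. The kubelet sends the first readiness probe 5 seconds after the container starts, attempting to connect to port 8080 of the goproxy container. If the probe succeeds, the Pod is marked as ready, and the kubelet continues to run the check every 10 seconds.

In addition to the readiness probe, this configuration includes a liveness probe. The kubelet runs the first liveness probe 15 seconds after the container starts. Just like the readiness probe, it attempts to connect to port 8080 of the goproxy container. If the liveness probe fails, the container is restarted.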

kubectl apply -f https://k8s.io/examples/pods/probe/tcp-liveness-readiness.yaml

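3 Other Pod operations

Init containers

Init containers run to completion before all the other containers start, and are commonly used to initialize configuration. In other words, before the application container starts, a temporary init container is started to perform whatever initialization the application container needs. Once the init container has finished its task it exits, and the application container then starts.

First, a look at volume mounts.

3.1 Volume mounts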

[root@docker-server1 pods]# vim nginx-pods-volumes.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        test: this is a test app
      labels:
        app: nginx
      name: nginx-volume
      namespace: default
    spec:
      volumes:
      - name: datadir
        hostPath:
          path: /data
      containers:
      - env:
        - name: test
          value: aaa
        - name: test1
          value: bbb
        volumeMounts:
        - name: datadir
          mountPath: /usr/share/nginx/html
        image: nginx
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 80
          hostPort: 8080
          protocol: TCP
        resources:
          requests:
            cpu: "0.2"
            memory: "128Mi"
      restartPolicy: Always

[root@docker-server1 pods]# kubectl apply -f nginx-pods-volumes.yaml

[root@docker-server1 pods]# kubectl get pods

    NAME           READY   STATUS    RESTARTS   AGE
    goproxy        1/1     Running   0          38m
    nginx          2/2     Running   2          166m
    nginx-volume   1/1     Running   0          68s

[root@docker-server1 pods]# kubectl get pods -o wide

    NAME           READY   STATUS    RESTARTS   AGE    IP            NODE              NOMINATED NODE   READINESS GATES
    goproxy        1/1     Running   0          38m    10.244.1.8    192.168.132.132   <none>           <none>
    nginx          2/2     Running   2          166m   10.244.2.10   192.168.132.133   <none>           <none>
    nginx-volume   1/1     Running   0          105s   10.244.1.9    192.168.132.132   <none>           <none>

[root@docker-server1 pods]# curl http://10.244.1.9

<html> <head><title>403 Forbidden</title></head> <body> <center><h1>403 Forbidden</h1></center> <hr><center>nginx/1.17.7</center> </body> </html>

The container is running on docker-server2, so create the index file there:

[root@docker-server2 ~]# echo "index on docker-server2" > /data/index.html

Access it again:

[root@docker-server1 pods]# curl http://10.244.1.9


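A volume can be mounted into a container this way, but it is not recommended: because a local directory on the node is mounted, the data changes if Kubernetes moves the Pod to another node.

3.2 Init containers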

[root@docker-server1 pods]# vim init-container.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: init-demo
    spec:
      initContainers:
      - name: install
        image: busybox
        command:
        - "sh"
        - "-c"
        - >
          echo "nginx in kubernetes" > /work-dir/index.html
        volumeMounts:
        - name: workdir
          mountPath: "/work-dir"
      volumes:
      - name: workdir
        emptyDir: {}
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: workdir
          mountPath: /usr/share/nginx/html

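emptyDir: {} is left without any options, which means a temporary directory is created on the local node and mounted into the containers. Its lifetime is the same as the Pod's, and both containers can see the directory, so once the init container has finished its task the application container can read the data written there.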

[root@docker-server1 pods]# kubectl apply -f init-container.yaml

[root@docker-server1 pods]# kubectl get pods

    NAME           READY   STATUS    RESTARTS   AGE
    goproxy        1/1     Running   0          58m
    init-demo      1/1     Running   0          40s
    nginx          2/2     Running   3          3h6m
    nginx-volume   1/1     Running   0          21m

[root@docker-server1 pods]# kubectl get pods -o wide

    NAME           READY   STATUS    RESTARTS   AGE    IP            NODE              NOMINATED NODE   READINESS GATES
    goproxy        1/1     Running   0          58m    10.244.1.8    192.168.132.132   <none>           <none>
    init-demo      1/1     Running   0          44s    10.244.1.10   192.168.132.132   <none>           <none>
    nginx          2/2     Running   3          3h6m   10.244.2.10   192.168.132.133   <none>           <none>
    nginx-volume   1/1     Running   0          21m    10.244.1.9    192.168.132.132   <none>           <none>

[root@docker-server1 pods]# curl http://10.244.1.10

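Init containers are also commonly used to hold back the application container until something it depends on is available. The following is a sketch of that pattern, not part of the original experiment: the Pod name and the Service name myservice are hypothetical, and the busybox:1.28 tag is chosen on the assumption that its nslookup behaves well with cluster DNS.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-wait-demo        # hypothetical name, for illustration only
spec:
  initContainers:
  - name: wait-for-myservice
    image: busybox:1.28
    command:
    - sh
    - -c
    # loop until the (hypothetical) Service name resolves, then exit 0
    - until nslookup myservice; do echo waiting for myservice; sleep 2; done
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
```

Until a Service named myservice exists in the same namespace, the Pod stays in the Init:0/1 state and the nginx container is not started.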

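3.3 Lifecycle management

Container lifecycle hooks

- Container lifecycle hooks listen for specific events in a container's lifecycle and run a registered callback when the event occurs. Two hooks are supported:

- postStart: runs immediately after the container is created. Because it runs asynchronously, there is no guarantee that it runs before the ENTRYPOINT. If it fails, the container is killed, and the RestartPolicy decides whether it is restarted.

- preStop: runs before the container is terminated, and is commonly used to clean up resources. If it fails, the container is killed all the same.

- A hook callback can be implemented in two ways:

- exec: runs a command inside the container. An exit status of 0 means success; anything else means failure.

- httpGet: sends a GET request to the specified URL. An HTTP status code in the range [200, 400) means success; anything else means failure.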

[root@docker-server1 pods]# vim nginx-pods-lifecycle.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: lifecycle-demo
    spec:
      containers:
      - name: lifecycle-demo-container
        image: nginx
        lifecycle:
          postStart:
            httpGet:
              path: /
              port: 80
          preStop:
            exec:
              command: ["/usr/sbin/nginx","-s","quit"]

[root@docker-server1 pods]# kubectl apply -f nginx-pods-lifecycle.yaml

[root@docker-server1 pods]# kubectl get pods -o wide

    NAME             READY   STATUS              RESTARTS   AGE     IP            NODE              NOMINATED NODE   READINESS GATES
    goproxy          1/1     Running             0          76m     10.244.1.8    192.168.132.132   <none>           <none>
    init-demo        1/1     Running             0          18m     10.244.1.10   192.168.132.132   <none>           <none>
    lifecycle-demo   0/1     ContainerCreating   0          4s      <none>        192.168.132.132   <none>           <none>
    nginx            2/2     Running             3          3h24m   10.244.2.10   192.168.132.133   <none>           <none>
    nginx-volume     1/1     Running             0          39m     10.244.1.9    192.168.132.132   <none>           <none>
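For comparison, a postStart hook can also use the exec callback. The sketch below is a variation on the manifest above: the Pod name, the message text, and the target file /usr/share/message are hypothetical choices for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: lifecycle-exec-demo   # hypothetical name, for illustration only
spec:
  containers:
  - name: nginx
    image: nginx
    lifecycle:
      postStart:
        exec:
          # runs inside the container right after it is created;
          # a non-zero exit status would cause the container to be killed
          command: ["/bin/sh", "-c", "echo started by postStart > /usr/share/message"]
      preStop:
        exec:
          # ask nginx to shut down gracefully before the container is terminated
          command: ["/usr/sbin/nginx", "-s", "quit"]
```

Once the Pod is running, kubectl exec lifecycle-exec-demo -- cat /usr/share/message can be used to check that the hook ran.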

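3.4 Static Pods

The kubelet can run in two ways. In the first, it connects to the Kubernetes master node, receives tasks, and executes them. In the second, it runs as a standalone component and watches a directory of yml files; when it detects a change, it applies the yml file. We can place yml files that define Pods in this directory, and the kubelet will start those Pods on its own without needing the master. Pods started this way cannot be scheduled by the master and can only run on the kubelet's own host node. Such Pods are called static Pods.

kubeadm initializes a cluster by relying on static Pods: the control-plane containers run in static Pods managed by the kubelet.

For example, when a master node is installed, kubeadm, kubectl, and kubelet are installed on the host, and the master node's components run as static Pods.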

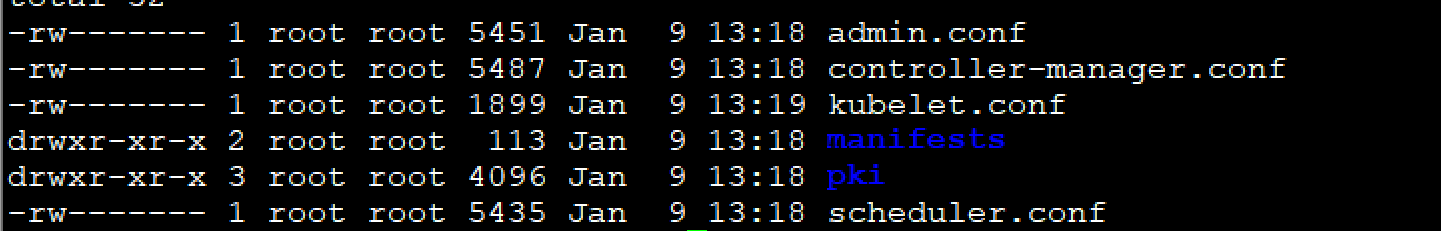

[root@docker-server1 pods]# cd /etc/kubernetes/

[root@docker-server1 kubernetes]# ll

[root@docker-server1 kubernetes]# cd manifests/

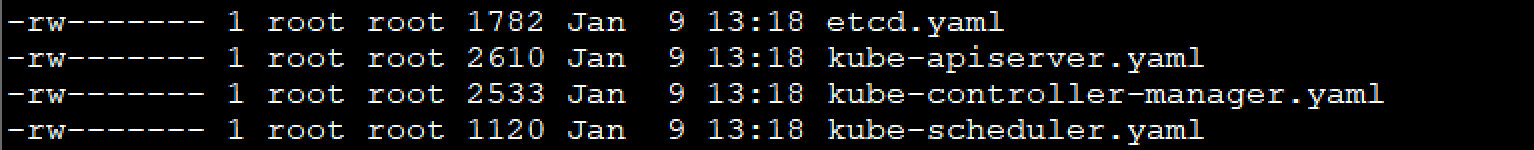

[root@docker-server1 manifests]# ll

[root@docker-server1 manifests]# vim etcd.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: etcd
        tier: control-plane
      name: etcd
      namespace: kube-system
    spec:
      containers:
      - command:
        - etcd
        - --advertise-client-urls=https://192.168.132.131:2379
        - --cert-file=/etc/kubernetes/pki/etcd/server.crt
        - --client-cert-auth=true
        - --data-dir=/var/lib/etcd
        - --initial-advertise-peer-urls=https://192.168.132.131:2380
        - --initial-cluster=192.168.132.131=https://192.168.132.131:2380
        - --key-file=/etc/kubernetes/pki/etcd/server.key
        - --listen-client-urls=https://127.0.0.1:2379,https://192.168.132.131:2379
        - --listen-metrics-urls=http://127.0.0.1:2381
        - --listen-peer-urls=https://192.168.132.131:2380
        - --name=192.168.132.131
        - --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt
        - --peer-client-cert-auth=true
        - --peer-key-file=/etc/kubernetes/pki/etcd/peer.key
        - --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        - --snapshot-count=10000
        - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        image: k8s.gcr.io/etcd:3.4.3-0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /health
            port: 2381
            scheme: HTTP
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: etcd
        resources: {}
        volumeMounts:
        - mountPath: /var/lib/etcd
          name: etcd-data
        - mountPath: /etc/kubernetes/pki/etcd
          name: etcd-certs
      hostNetwork: true
      priorityClassName: system-cluster-critical
      volumes:
      - hostPath:
          path: /etc/kubernetes/pki/etcd
          type: DirectoryOrCreate
        name: etcd-certs
      - hostPath:
          path: /var/lib/etcd
          type: DirectoryOrCreate
        name: etcd-data
    status: {}

Try modifying one of the yml files.

Add a parameter here.

Check again: the container has been restarted.

[root@docker-server1 manifests]# docker ps -a|grep apiserver

    c28921f0415    0cae8d5cc64c           "kube-apiserver --ad…"   37 seconds ago   Up 36 seconds                k8s_kube-apiserver_kube-apiserver-192.168.132.131_kube-system_35be3047d357a34596bdda175ae3edd5_1
    f5e6441e09a0   0cae8d5cc64c           "kube-apiserver --ad…"   10 hours ago     Exited (0) 37 seconds ago    k8s_kube-apiserver_kube-apiserver-192.168.132.131_kube-system_35be3047d357a34596bdda175ae3edd5_0
    f5aff40580f5   k8s.gcr.io/pause:3.1   "/pause"                 10 hours ago     Up 10 hours                  k8s_POD_kube-apiserver-192.168.132.131_kube-system_35be3047d357a34596bdda175ae3edd5_0
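To try a static Pod of your own, a manifest like the one below could be placed in the kubelet's manifest directory, which is /etc/kubernetes/manifests on this kubeadm-built cluster. This is a minimal sketch: the file name static-web.yaml, the Pod name, and the label are hypothetical.

```yaml
# /etc/kubernetes/manifests/static-web.yaml (hypothetical file name)
apiVersion: v1
kind: Pod
metadata:
  name: static-web            # hypothetical name, for illustration only
  labels:
    role: static-demo
spec:
  containers:
  - name: web
    image: nginx
    ports:
    - containerPort: 80
```

No kubectl apply is needed: the kubelet on that node picks the file up and starts the Pod. The Pod then shows up in kubectl get pods with the node name appended to its name, in the same way as kube-apiserver-192.168.132.131 above, and removing the file stops it.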

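That concludes the study of Pods.

Blogger's statement: the content of this article comes mainly from Yan Wei (晏威) of Yutian Education (誉天教育); I carried out and verified the experiments myself. Readers who wish to repost it should contact Yutian Education (http://www.yutianedu.com/) for official approval, or obtain approval from Yan Wei himself (https://www.cnblogs.com/breezey/). Thank you!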