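1 Introduction

- Flannel is an overlay-network-based solution for cross-host container networking. It encapsulates the containers' packets inside another network packet, and that outer packet is what gets routed and forwarded between hosts.

- Flannel was developed by CoreOS as a tool for connecting Docker across multiple hosts. It gives every container created on any node in the cluster a virtual IP address that is unique across the whole cluster.

- Flannel is written in Go.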

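2 How Flannel works

2.1 Principles

- Flannel assigns each host a subnet, and containers get their IPs from that subnet. These IPs are routable between hosts, so containers can communicate across hosts without NAT or port mapping.

- Every subnet is carved out of a larger IP pool. Flannel runs an agent called flanneld on each host, and its job is to lease a subnet from that pool.

- Flannel stores the network configuration, the allocated subnets, the host IPs and similar information in etcd.

- Forwarding of Flannel packets between hosts is done by a backend. Supported backends include UDP, VXLAN, host-gw, AWS VPC and GCE routes.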
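The subnet-leasing idea above can be modelled in a few lines of Python. This is a simplified sketch under stated assumptions, not flanneld's code: a plain dict stands in for the etcd directory /coreos.com/network/subnets, candidate_subnets and lease_subnet are hypothetical helpers, and the sketch always takes the lowest free subnet. The real leases later in this article (10.0.4.0/24 and 10.0.18.0/24) show that flanneld does not simply pick the lowest one, and real leases also expire and are renewed.

```python
import ipaddress


def candidate_subnets(network, subnet_len, subnet_min, subnet_max):
    """Yield each /subnet_len block of `network` whose base lies in [subnet_min, subnet_max]."""
    lo = ipaddress.ip_address(subnet_min)
    hi = ipaddress.ip_address(subnet_max)
    for subnet in ipaddress.ip_network(network).subnets(new_prefix=subnet_len):
        if lo <= subnet.network_address <= hi:
            yield subnet


def lease_subnet(leases, public_ip, config):
    """Hypothetical helper: reuse this host's lease or register the first free subnet.

    `leases` is a plain dict standing in for the etcd directory
    /coreos.com/network/subnets; keys look like "10.0.4.0-24" and
    values are the owning host's PublicIP.
    """
    for key, owner in leases.items():
        if owner == public_ip:
            return key  # this host already holds a lease: reuse it
    for subnet in candidate_subnets(config["Network"], config["SubnetLen"],
                                    config["SubnetMin"], config["SubnetMax"]):
        key = f"{subnet.network_address}-{subnet.prefixlen}"
        if key not in leases:
            leases[key] = public_ip  # "register" the new lease
            return key
    raise RuntimeError("no free subnet left in the pool")


config = {"Network": "10.0.0.0/16", "SubnetLen": 24,
          "SubnetMin": "10.0.1.0", "SubnetMax": "10.0.20.0"}
leases = {}
print(lease_subnet(leases, "192.168.132.131", config))  # 10.0.1.0-24
print(lease_subnet(leases, "192.168.132.132", config))  # 10.0.2.0-24
print(lease_subnet(leases, "192.168.132.131", config))  # 10.0.1.0-24 again
```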

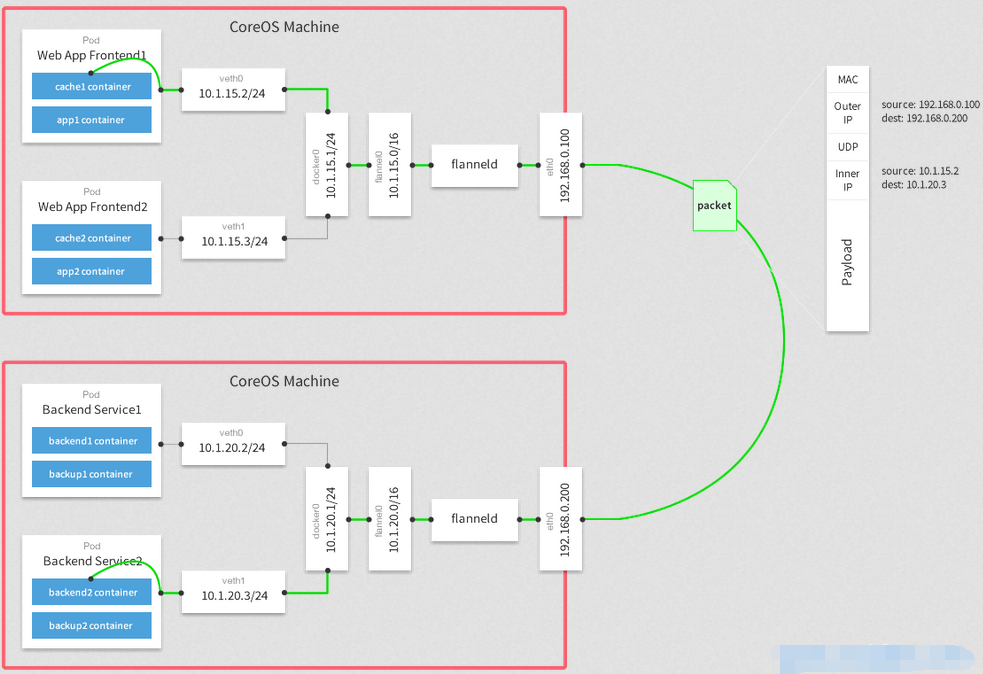

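2.2 Flannel network architecture

Compared with plain Docker networking there is one extra layer of encapsulation: flannel intercepts the traffic bound for containers on other hosts and wraps it before it crosses the host network.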

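2.3 Packet forwarding flow (UDP backend)

- The container addresses the target container directly by its IP; by default the packet leaves through the container's eth0.

- The packet travels through the veth pair to vethXXX on the host.

- vethXXX is attached directly to the virtual bridge docker0, so the packet is sent out through docker0.

- The host's routing table sends packets for containers on other hosts to the flannel0 virtual interface. flannel0 is a point-to-point (TUN) device, so the packet is handed to flanneld, which listens on its other end.

- flanneld maintains the routes between the nodes via etcd. It wraps the original packet in a UDP packet and sends it out through the configured iface.

- The packet crosses the network between the hosts and reaches the destination host.

- There it moves up the stack to the transport layer and is delivered to the flanneld process listening on port 8285.

- flanneld unwraps the data and writes it to the flannel0 virtual interface.

- A routing table lookup sends packets for the target container to docker0.

- docker0 finds the container attached to it and delivers the packet.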
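The encapsulation step of this UDP-backend flow can be sketched in Python. It is a model of the idea, not flannel's Go implementation: LEASES is a hypothetical in-memory copy of the etcd leases (using the two subnets that appear later in this article), and only the IPv4 destination field of the packet read from flannel0 is inspected.

```python
import ipaddress
import socket

UDP_PORT = 8285  # port the UDP backend listens on, as described above

# Hypothetical in-memory copy of the etcd leases: container subnet -> host IP.
LEASES = {
    ipaddress.ip_network("10.0.4.0/24"): "192.168.132.131",
    ipaddress.ip_network("10.0.18.0/24"): "192.168.132.132",
}


def inner_destination(packet: bytes) -> str:
    """Read the destination address of a raw IPv4 packet (header bytes 16-19)."""
    return socket.inet_ntoa(packet[16:20])


def remote_host_for(dst_ip: str) -> str:
    """Find the host whose leased subnet contains dst_ip."""
    addr = ipaddress.ip_address(dst_ip)
    for subnet, host in LEASES.items():
        if addr in subnet:
            return host
    raise LookupError(f"no lease covers {dst_ip}")


def forward(packet: bytes, sock: socket.socket) -> None:
    """Send the unmodified inner packet as the payload of one UDP datagram.

    The kernel adds the outer IP and UDP headers; the receiving flanneld
    writes the payload back into its own flannel0 device.
    """
    host = remote_host_for(inner_destination(packet))
    sock.sendto(packet, (host, UDP_PORT))
```

In a real agent, packet would be read from the flannel0 TUN device and sock would be a UDP socket (socket.socket(socket.AF_INET, socket.SOCK_DGRAM)) bound to port 8285, which is also where the receiving side reads the payload back.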


3 Installing and configuring Flannel

3.1 Environment

| Node | IP address | Software |
|---|---|---|
| docker-server1 | 192.168.132.131 | etcd, flannel, docker |
| docker-server2 | 192.168.132.132 | flannel, docker |

Remove all containers on both nodes:

[root@docker-server1 ~]# docker ps -aq | xargs -r docker rm -f

[root@docker-server2 ~]# docker ps -aq | xargs -r docker rm -f

3.2 Install etcd

etcd download page: https://github.com/coreos/etcd/releases

Download:

[root@docker-server1 ~]# wget https://github.com/coreos/etcd/releases/download/v3.3.9/etcd-v3.3.9-linux-amd64.tar.gz

[root@docker-server2 ~]# wget https://github.com/coreos/etcd/releases/download/v3.3.9/etcd-v3.3.9-linux-amd64.tar.gz

On 192.168.132.131 (docker-server1), install etcd and flannel:

[root@docker-server1 ~]# tar -xf etcd-v3.3.9-linux-amd64.tar.gz

[root@docker-server1 ~]# cd etcd-v3.3.9-linux-amd64

[root@docker-server1 etcd-v3.3.9-linux-amd64]# ll

total 33992
drwxr-xr-x 11 joy joy 4096 Jul 24 2018 Documentation
-rwxr-xr-x 1 joy joy 18934016 Jul 24 2018 etcd
-rwxr-xr-x 1 joy joy 15809280 Jul 24 2018 etcdctl
-rw-r--r-- 1 joy joy 38864 Jul 24 2018 README-etcdctl.md
-rw-r--r-- 1 joy joy 7262 Jul 24 2018 README.md
-rw-r--r-- 1 joy joy 7855 Jul 24 2018 READMEv2-etcdctl.md

[root@docker-server1 etcd-v3.3.9-linux-amd64]# cp etcd* /usr/bin/

Start etcd:

[root@docker-server1 ~]# etcd -name etcd-131 -data-dir /var/lib/etcd --advertise-client-urls http://192.168.132.131:2379,http://127.0.0.1:2379 --listen-client-urls http://192.168.132.131:2379,http://127.0.0.1:2379

-name: the name of this etcd member

-data-dir: the directory where etcd stores its data

--advertise-client-urls: the client URLs this member advertises to clients

--listen-client-urls: the URLs on which etcd listens for client requests

Startup log:

2019-11-09 14:12:36.719599 I | etcdmain: Git SHA: fca8add78
2019-11-09 14:12:36.719603 I | etcdmain: Go Version: go1.10.3
2019-11-09 14:12:36.719606 I | etcdmain: Go OS/Arch: linux/amd64
2019-11-09 14:12:36.719613 I | etcdmain: setting maximum number of CPUs to 4, total number of available CPUs is 4
2019-11-09 14:12:36.720048 I | embed: listening for peers on http://localhost:2380
2019-11-09 14:12:36.720124 I | embed: listening for client requests on 127.0.0.1:2379
2019-11-09 14:12:36.720146 I | embed: listening for client requests on 192.168.132.131:2379
2019-11-09 14:12:36.722745 I | etcdserver: name = etcd-131
2019-11-09 14:12:36.722778 I | etcdserver: data dir = /var/lib/etcd
2019-11-09 14:12:36.722783 I | etcdserver: member dir = /var/lib/etcd/member
2019-11-09 14:12:36.722787 I | etcdserver: heartbeat = 100ms
2019-11-09 14:12:36.722791 I | etcdserver: election = 1000ms
2019-11-09 14:12:36.722794 I | etcdserver: snapshot count = 100000
2019-11-09 14:12:36.722811 I | etcdserver: advertise client URLs = http://127.0.0.1:2379,http://192.168.132.131:2379
2019-11-09 14:12:36.722816 I | etcdserver: initial advertise peer URLs = http://localhost:2380
2019-11-09 14:12:36.722823 I | etcdserver: initial cluster = etcd-131=http://localhost:2380
2019-11-09 14:12:36.725597 I | etcdserver: starting member 8e9e05c52164694d in cluster cdf818194e3a8c32
2019-11-09 14:12:36.725645 I | raft: 8e9e05c52164694d became follower at term 0
2019-11-09 14:12:36.725658 I | raft: newRaft 8e9e05c52164694d [peers: [], term: 0, commit: 0, applied: 0, lastindex: 0, lastterm: 0]
2019-11-09 14:12:36.725663 I | raft: 8e9e05c52164694d became follower at term 1
2019-11-09 14:12:36.731392 W | auth: simple token is not cryptographically signed
2019-11-09 14:12:36.732944 I | etcdserver: starting server... [version: 3.3.9, cluster version: to_be_decided]
2019-11-09 14:12:36.733497 I | etcdserver: 8e9e05c52164694d as single-node; fast-forwarding 9 ticks (election ticks 10)
2019-11-09 14:12:36.734281 I | etcdserver/membership: added member 8e9e05c52164694d [http://localhost:2380] to cluster cdf818194e3a8c32
2019-11-09 14:12:37.635489 I | raft: 8e9e05c52164694d is starting a new election at term 1
2019-11-09 14:12:37.635568 I | raft: 8e9e05c52164694d became candidate at term 2
2019-11-09 14:12:37.635621 I | raft: 8e9e05c52164694d received MsgVoteResp from 8e9e05c52164694d at term 2
2019-11-09 14:12:37.635656 I | raft: 8e9e05c52164694d became leader at term 2
2019-11-09 14:12:37.635676 I | raft: raft.node: 8e9e05c52164694d elected leader 8e9e05c52164694d at term 2
2019-11-09 14:12:37.636216 I | etcdserver: setting up the initial cluster version to 3.3
2019-11-09 14:12:37.637689 N | etcdserver/membership: set the initial cluster version to 3.3
2019-11-09 14:12:37.637846 I | etcdserver/api: enabled capabilities for version 3.3
2019-11-09 14:12:37.637990 I | etcdserver: published {Name:etcd-131 ClientURLs:[http://127.0.0.1:2379 http://192.168.132.131:2379]} to cluster cdf818194e3a8c32
2019-11-09 14:12:37.638099 I | embed: ready to serve client requests
2019-11-09 14:12:37.638861 I | embed: ready to serve client requests
2019-11-09 14:12:37.639056 E | etcdmain: forgot to set Type=notify in systemd service file?
2019-11-09 14:12:37.640375 N | embed: serving insecure client requests on 127.0.0.1:2379, this is strongly discouraged!
2019-11-09 14:12:37.640424 N | embed: serving insecure client requests on 192.168.132.131:2379, this is strongly discouraged!

[root@docker-server1 ~]# ps -ef|grep etcd

root 85130 84636 2 14:12 pts/4 00:00:01 etcd -name etcd-131 -data-dir /var/lib/etcd --advertise-client-urls http://192.168.132.131:2379,http://127.0.0.1:2379 --listen-client-urls http://192.168.132.131:2379,http://127.0.0.1:2379

etcdctl is the command-line client for etcd:

[root@docker-server1 ~]# etcdctl member list

8e9e05c52164694d: name=etcd-131 peerURLs=http://localhost:2380 clientURLs=http://127.0.0.1:2379,http://192.168.132.131:2379 isLeader=true

Use etcdctl to connect to etcd through an explicit endpoint and check connectivity:

[root@docker-server1 ~]# etcdctl --endpoints http://127.0.0.1:2379 member list

8e9e05c52164694d: name=etcd-131 peerURLs=http://localhost:2380 clientURLs=http://127.0.0.1:2379,http://192.168.132.131:2379 isLeader=true

Check the etcdctl version:

[root@docker-server1 ~]# etcdctl --version

etcdctl version: 3.3.9
API version: 2

[root@docker-server1 ~]# etcdctl --help

NAME:
   etcdctl - A simple command line client for etcd.

WARNING:
   Environment variable ETCDCTL_API is not set; defaults to etcdctl v2.
   Set environment variable ETCDCTL_API=3 to use v3 API or ETCDCTL_API=2 to use v2 API.

USAGE:
   etcdctl [global options] command [command options] [arguments...]

VERSION:
   3.3.9

COMMANDS:
     backup          backup an etcd directory
     cluster-health  check the health of the etcd cluster
     mk              make a new key with a given value
     mkdir           make a new directory
     rm              remove a key or a directory
     rmdir           removes the key if it is an empty directory or a key-value pair
     get             retrieve the value of a key
     ls              retrieve a directory
     set             set the value of a key
     setdir          create a new directory or update an existing directory TTL
     update          update an existing key with a given value
     updatedir       update an existing directory
     watch           watch a key for changes
     exec-watch      watch a key for changes and exec an executable
     member          member add, remove and list subcommands
     user            user add, grant and revoke subcommands
     role            role add, grant and revoke subcommands
     auth            overall auth controls
     help, h         Shows a list of commands or help for one command

GLOBAL OPTIONS:
   --debug                          output cURL commands which can be used to reproduce the request
   --no-sync                        don't synchronize cluster information before sending request
   --output simple, -o simple       output response in the given format (simple, `extended` or `json`) (default: "simple")
   --discovery-srv value, -D value  domain name to query for SRV records describing cluster endpoints
   --insecure-discovery             accept insecure SRV records describing cluster endpoints
   --peers value, -C value          DEPRECATED - "--endpoints" should be used instead
   --endpoint value                 DEPRECATED - "--endpoints" should be used instead
   --endpoints value                a comma-delimited list of machine addresses in the cluster (default: "http://127.0.0.1:2379,http://127.0.0.1:4001")
   --cert-file value                identify HTTPS client using this SSL certificate file
   --key-file value                 identify HTTPS client using this SSL key file
   --ca-file value                  verify certificates of HTTPS-enabled servers using this CA bundle
   --username value, -u value       provide username[:password] and prompt if password is not supplied.
   --timeout value                  connection timeout per request (default: 2s)
   --total-timeout value            timeout for the command execution (except watch) (default: 5s)
   --help, -h                       show help
   --version, -v                    print the version

Switch etcdctl to the v3 API:

[root@docker-server1 ~]# export ETCDCTL_API=3

[root@docker-server1 ~]# etcdctl --help

The available commands have changed:

NAME:
   etcdctl - A simple command line client for etcd3.

USAGE:
   etcdctl

VERSION:
   3.3.9

API VERSION:
   3.3

COMMANDS:
   get                     Gets the key or a range of keys
   put                     Puts the given key into the store
   del                     Removes the specified key or range of keys [key, range_end)
   txn                     Txn processes all the requests in one transaction
   compaction              Compacts the event history in etcd
   alarm disarm            Disarms all alarms
   alarm list              Lists all alarms
   defrag                  Defragments the storage of the etcd members with given endpoints
   endpoint health         Checks the healthiness of endpoints specified in `--endpoints` flag
   endpoint status         Prints out the status of endpoints specified in `--endpoints` flag
   endpoint hashkv         Prints the KV history hash for each endpoint in --endpoints
   move-leader             Transfers leadership to another etcd cluster member.
   watch                   Watches events stream on keys or prefixes
   version                 Prints the version of etcdctl
   lease grant             Creates leases
   lease revoke            Revokes leases
   lease timetolive        Get lease information
   lease list              List all active leases
   lease keep-alive        Keeps leases alive (renew)
   member add              Adds a member into the cluster
   member remove           Removes a member from the cluster
   member update           Updates a member in the cluster
   member list             Lists all members in the cluster
   snapshot save           Stores an etcd node backend snapshot to a given file
   snapshot restore        Restores an etcd member snapshot to an etcd directory
   snapshot status         Gets backend snapshot status of a given file
   make-mirror             Makes a mirror at the destination etcd cluster
   migrate                 Migrates keys in a v2 store to a mvcc store
   lock                    Acquires a named lock
   elect                   Observes and participates in leader election
   auth enable             Enables authentication
   auth disable            Disables authentication
   user add                Adds a new user
   user delete             Deletes a user
   user get                Gets detailed information of a user
   user list               Lists all users
   user passwd             Changes password of user
   user grant-role         Grants a role to a user
   user revoke-role        Revokes a role from a user
   role add                Adds a new role
   role delete             Deletes a role
   role get                Gets detailed information of a role
   role list               Lists all roles
   role grant-permission   Grants a key to a role
   role revoke-permission  Revokes a key from a role
   check perf              Check the performance of the etcd cluster
   help                    Help about any command

OPTIONS:
       --cacert=""                          verify certificates of TLS-enabled secure servers using this CA bundle
       --cert=""                            identify secure client using this TLS certificate file
       --command-timeout=5s                 timeout for short running command (excluding dial timeout)
       --debug[=false]                      enable client-side debug logging
       --dial-timeout=2s                    dial timeout for client connections
   -d, --discovery-srv=""                   domain name to query for SRV records describing cluster endpoints
       --endpoints=[127.0.0.1:2379]         gRPC endpoints
       --hex[=false]                        print byte strings as hex encoded strings
       --insecure-discovery[=true]          accept insecure SRV records describing cluster endpoints
       --insecure-skip-tls-verify[=false]   skip server certificate verification
       --insecure-transport[=true]          disable transport security for client connections
       --keepalive-time=2s                  keepalive time for client connections
       --keepalive-timeout=6s               keepalive timeout for client connections
       --key=""                             identify secure client using this TLS key file
       --user=""                            username[:password] for authentication (prompt if password is not supplied)
   -w, --write-out="simple"                 set the output format (fields, json, protobuf, simple, table)

3.3 Install Flannel

flannel download page: https://github.com/coreos/flannel/releases

Download:

[root@docker-server1 ~]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

[root@docker-server2 ~]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

[root@docker-server1 ~]# tar -xf flannel-v0.11.0-linux-amd64.tar.gz

[root@docker-server1 ~]# cp flanneld /usr/bin/

[root@docker-server1 ~]# cp mk-docker-opts.sh /usr/bin/

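Using etcdctl with the v2 API (if ETCDCTL_API=3 is still exported from the step above, run unset ETCDCTL_API first; etcdctl 3.3.9 then defaults to v2)

Add the flannel network configuration to etcd as a key-value pair: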

[root@docker-server1 ~]# etcdctl set /coreos.com/network/config '{"Network": "10.0.0.0/16", "SubnetLen": 24, "SubnetMin": "10.0.1.0","SubnetMax": "10.0.20.0", "Backend": {"Type": "vxlan"}}'

If etcd is not running on the local host, add the option --endpoints http://IP:2379. The command echoes the stored value:

{"Network": "10.0.0.0/16", "SubnetLen": 24, "SubnetMin": "10.0.1.0","SubnetMax": "10.0.20.0", "Backend": {"Type": "vxlan"}}

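Network: the address pool Flannel allocates from.

SubnetLen: the prefix length of the subnet assigned to each host's docker0.

SubnetMin: the lowest subnet that may be allocated.

SubnetMax: the highest subnet that may be allocated. In the example above, each host gets a subnet with a 24-bit prefix, and the allocatable subnets run from 10.0.1.0/24 to 10.0.20.0/24, so this network can hold at most 20 hosts.

Backend: how packets are forwarded between hosts. The default is udp. host-gw has the best performance but cannot span different host networks.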
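The arithmetic behind "at most 20 hosts" can be checked with Python's standard ipaddress module. The sketch parses the same JSON value stored in etcd above; the container-count line is an extra illustration that assumes docker0 takes the .1 address of each host subnet, as it does in the outputs later in this article.

```python
import ipaddress
import json

# The value written to /coreos.com/network/config above.
config = json.loads('{"Network": "10.0.0.0/16", "SubnetLen": 24, "SubnetMin": "10.0.1.0",'
                    '"SubnetMax": "10.0.20.0", "Backend": {"Type": "vxlan"}}')

pool = ipaddress.ip_network(config["Network"])
first = ipaddress.ip_address(config["SubnetMin"])
last = ipaddress.ip_address(config["SubnetMax"])

# Every /SubnetLen block of the pool whose base address lies in [SubnetMin, SubnetMax].
host_subnets = [s for s in pool.subnets(new_prefix=config["SubnetLen"])
                if first <= s.network_address <= last]

print(len(host_subnets))                  # 20 -> at most 20 hosts
print(host_subnets[0], host_subnets[-1])  # 10.0.1.0/24 10.0.20.0/24
# Addresses per /24 minus the network address, broadcast and docker0's .1 gateway.
print(host_subnets[0].num_addresses - 3)  # 253 containers per host
```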

[root@docker-server1 ~]# etcdctl get /coreos.com/network/config

{"Network": "10.0.0.0/16", "SubnetLen": 24, "SubnetMin": "10.0.1.0","SubnetMax": "10.0.20.0", "Backend": {"Type": "vxlan"}}

The commands above work while etcdctl uses the v2 API. With ETCDCTL_API=3 they fail, because the v3 API has no set command:

[root@docker-server1 ~]# etcdctl set /coreos.com/network/config '{"Network": "10.0.0.0/16", "SubnetLen": 24, "SubnetMin": "10.0.1.0","SubnetMax": "10.0.20.0", "Backend": {"Type": "vxlan"}}'

Error: unknown command "set" for "etcdctl"

Did you mean this?
        get
        put
        del
        user

Run 'etcdctl --help' for usage.
Error: unknown command "set" for "etcdctl"

Did you mean this?
        get
        put
        del
        user

With the v3 API:

[root@docker-server1 ~]# etcdctl --endpoints http://127.0.0.1:2379 put /coreos.com/network/config '{"Network": "10.0.0.0/16", "SubnetLen": 24, "SubnetMin": "10.0.1.0","SubnetMax": "10.0.20.0", "Backend": {"Type": "vxlan"}}'

OK

[root@docker-server1 ~]# etcdctl get /coreos.com/network/config

/coreos.com/network/config
{"Network": "10.0.0.0/16", "SubnetLen": 24, "SubnetMin": "10.0.1.0","SubnetMax": "10.0.20.0", "Backend": {"Type": "vxlan"}}

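All following operations use the etcdctl v2 API. This also matters for flanneld: v0.11.0 reads its configuration through the etcd v2 API, and the v2 and v3 keyspaces are separate, so a key written only with the v3 put is not visible to flanneld.

3.4 Start Flannel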

[root@docker-server1 ~]# /usr/bin/flanneld --etcd-endpoints="http://192.168.132.131:2379" --iface=192.168.132.131 --etcd-prefix=/coreos.com/network &

I1109 15:02:01.696813 86107 main.go:450] Searching for interface using 192.168.132.131
I1109 15:02:01.698311 86107 main.go:527] Using interface with name ens33 and address 192.168.132.131
I1109 15:02:01.698335 86107 main.go:544] Defaulting external address to interface address (192.168.132.131)
I1109 15:02:01.698556 86107 main.go:244] Created subnet manager: Etcd Local Manager with Previous Subnet: 10.0.4.0/24
I1109 15:02:01.698571 86107 main.go:247] Installing signal handlers
I1109 15:02:01.700808 86107 main.go:386] Found network config - Backend type: vxlan
I1109 15:02:01.700880 86107 vxlan.go:120] VXLAN config: VNI=1 Port=0 GBP=false DirectRouting=false
I1109 15:02:01.703889 86107 local_manager.go:147] Found lease (10.0.4.0/24) for current IP (192.168.132.131), reusing
I1109 15:02:01.704954 86107 main.go:317] Wrote subnet file to /run/flannel/subnet.env
I1109 15:02:01.704967 86107 main.go:321] Running backend.
I1109 15:02:01.705791 86107 vxlan_network.go:60] watching for new subnet leases
I1109 15:02:01.709710 86107 main.go:429] Waiting for 22h59m59.994619649s to renew lease

[root@docker-server1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:91:dd:19 brd ff:ff:ff:ff:ff:ff
    inet 192.168.132.131/24 brd 192.168.132.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::bcf9:af19:a325:e2c7/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:3e:dd:55:81 brd ff:ff:ff:ff:ff:ff
    inet 192.168.0.1/24 brd 192.168.0.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:3eff:fedd:5581/64 scope link
       valid_lft forever preferred_lft forever
94: br-b1c2d9c1e522: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:cd:4a:25:4f brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global br-b1c2d9c1e522
       valid_lft forever preferred_lft forever
    inet6 fe80::42:cdff:fe4a:254f/64 scope link
       valid_lft forever preferred_lft forever
95: br-ec4a8380b2d3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:c9:ba:23:ae brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.1/16 brd 172.18.255.255 scope global br-ec4a8380b2d3
       valid_lft forever preferred_lft forever
104: br-f42e46889a2a: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:b4:b6:35:7f brd ff:ff:ff:ff:ff:ff
    inet 172.22.16.1/24 brd 172.22.16.255 scope global br-f42e46889a2a
       valid_lft forever preferred_lft forever
    inet6 fe80::42:b4ff:feb6:357f/64 scope link
       valid_lft forever preferred_lft forever
123: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
    link/ether 8e:b1:37:26:b0:59 brd ff:ff:ff:ff:ff:ff
    inet 10.0.4.0/32 scope global flannel.1
       valid_lft forever preferred_lft forever
    inet6 fe80::8cb1:37ff:fe26:b059/64 scope link

[root@docker-server1 ~]# ps -ef |grep flan

root 86107 84636 0 15:02 pts/4 00:00:00 /usr/bin/flanneld --etcd-endpoints=http://192.168.132.131:2379 --iface=192.168.132.131 --etcd-prefix=/coreos.com/network

The mk-docker-opts.sh script shipped with flannel converts subnet.env into Docker daemon options. By default the generated options are written to /run/docker_opts.env:

[root@docker-server1 ~]# mk-docker-opts.sh

[root@docker-server1 ~]# cat /run/flannel/subnet.env

FLANNEL_NETWORK=10.0.0.0/16
FLANNEL_SUBNET=10.0.8.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false

[root@docker-server1 ~]# cat /run/docker_opts.env

DOCKER_OPT_BIP="--bip=10.0.4.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=true"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_OPTS=" --bip=10.0.4.1/24 --ip-masq=true --mtu=1450"
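What mk-docker-opts.sh does here is a small text transformation, which the Python sketch below reproduces for illustration. It is not the script itself: docker_opts is a hypothetical function, and it is fed FLANNEL_SUBNET=10.0.4.1/24, the value that matches docker0 in this walkthrough. Note the inverted masquerade flag: because flannel is not masquerading (FLANNEL_IPMASQ=false), Docker is told to do it (--ip-masq=true).

```python
def docker_opts(subnet_env: str) -> dict:
    """Hypothetical Python model of the variables mk-docker-opts.sh writes.

    Input: the KEY=VALUE text of /run/flannel/subnet.env.
    Output: the variables found in /run/docker_opts.env, in the same order.
    """
    env = dict(line.split("=", 1) for line in subnet_env.splitlines() if "=" in line)
    opts = {"DOCKER_OPT_BIP": f"--bip={env['FLANNEL_SUBNET']}"}
    if "FLANNEL_IPMASQ" in env:
        # Exactly one side should masquerade: if flannel does not, Docker does.
        docker_masq = "false" if env["FLANNEL_IPMASQ"] == "true" else "true"
        opts["DOCKER_OPT_IPMASQ"] = f"--ip-masq={docker_masq}"
    opts["DOCKER_OPT_MTU"] = f"--mtu={env['FLANNEL_MTU']}"
    # The combined variable is the individual options joined, with a leading space.
    opts["DOCKER_OPTS"] = " " + " ".join(opts.values())
    return opts


subnet_env = """FLANNEL_NETWORK=10.0.0.0/16
FLANNEL_SUBNET=10.0.4.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false
"""
for key, value in docker_opts(subnet_env).items():
    print(f'{key}="{value}"')
```

Run as-is, it prints the same four lines as /run/docker_opts.env above.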

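Add this file to Docker's systemd unit (the [Service] section of docker.service) and pass $DOCKER_OPTS to dockerd:

EnvironmentFile=/run/docker_opts.env
ExecStart=/usr/bin/dockerd $DOCKER_OPTS -H fd:// --containerd=/run/containerd/containerd.sock

Restart Docker: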

[root@docker-server1 ~]# systemctl daemon-reload

[root@docker-server1 ~]# systemctl restart docker

This fails with an error:

[root@docker-server1 ~]# journalctl -xe -u docker

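Nov 09 15:13:52 docker-server1 dockerd[86540]: unable to configure the Docker daemon with file /etc/docker/daemon.json: the following
Nov 09 15:13:52 docker-server1 systemd[1]: docker.service: main process exited, code=exited, status=1/FAILURE

The cause is that bip is now set twice, in /etc/docker/daemon.json and by the --bip flag in $DOCKER_OPTS, and dockerd refuses to start when the same option is given both as a flag and in daemon.json. Remove the bip setting from /etc/docker/daemon.json: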

[root@docker-server1 ~]# systemctl restart docker

To force a start use "systemctl reset-failed docker.service" followed by "systemctl start docker.service" again.

[root@docker-server1 ~]# systemctl restart docker

[root@docker-server1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:91:dd:19 brd ff:ff:ff:ff:ff:ff
    inet 192.168.132.131/24 brd 192.168.132.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::bcf9:af19:a325:e2c7/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:3e:dd:55:81 brd ff:ff:ff:ff:ff:ff
    inet 10.0.4.1/24 brd 10.0.4.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:3eff:fedd:5581/64 scope link
       valid_lft forever preferred_lft forever
94: br-b1c2d9c1e522: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:cd:4a:25:4f brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global br-b1c2d9c1e522
       valid_lft forever preferred_lft forever
    inet6 fe80::42:cdff:fe4a:254f/64 scope link
       valid_lft forever preferred_lft forever
95: br-ec4a8380b2d3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:c9:ba:23:ae brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.1/16 brd 172.18.255.255 scope global br-ec4a8380b2d3
       valid_lft forever preferred_lft forever
104: br-f42e46889a2a: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:b4:b6:35:7f brd ff:ff:ff:ff:ff:ff
    inet 172.22.16.1/24 brd 172.22.16.255 scope global br-f42e46889a2a
       valid_lft forever preferred_lft forever
    inet6 fe80::42:b4ff:feb6:357f/64 scope link
       valid_lft forever preferred_lft forever
123: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
    link/ether 8e:b1:37:26:b0:59 brd ff:ff:ff:ff:ff:ff
    inet 10.0.4.0/32 scope global flannel.1
       valid_lft forever preferred_lft forever
    inet6 fe80::8cb1:37ff:fe26:b059/64 scope link
       valid_lft forever preferred_lft forever

docker0 now has the IP 10.0.4.1, the first address of the subnet flannel leased to this host.

[root@docker-server1 ~]# etcdctl ls /coreos.com/network/

/coreos.com/network/subnets

/coreos.com/network/config

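The address of the flannel.1 interface (10.0.4.0) matches the subnet recorded in etcd, so the flannel network is configured.

How Flannel starts up:

- It fetches the network configuration from etcd.

- It allocates a subnet and registers it in etcd.

- It writes the subnet information to /run/flannel/subnet.env.

- Flannel must therefore start before Docker, because Docker's --bip and --mtu options are taken from that file.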
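The third startup step can be illustrated with a Python model that renders subnet.env from a lease. It is a sketch, not flanneld's code: render_subnet_env is a hypothetical helper, and VXLAN_OVERHEAD is the size of the outer Ethernet, IPv4, UDP and VXLAN headers (50 bytes), which reproduces the FLANNEL_MTU=1450 seen on hosts whose ens33 has an MTU of 1500.

```python
import ipaddress

# Outer Ethernet (14) + IPv4 (20) + UDP (8) + VXLAN (8) headers = 50 bytes.
VXLAN_OVERHEAD = 14 + 20 + 8 + 8


def render_subnet_env(network: str, lease_key: str, iface_mtu: int, ipmasq: bool = False) -> str:
    """Hypothetical helper: the subnet.env text for a vxlan lease key such as "10.0.4.0-24"."""
    subnet = ipaddress.ip_network(lease_key.replace("-", "/"))
    gateway = next(subnet.hosts())  # first usable address; Docker later gives it to docker0
    return "\n".join([
        f"FLANNEL_NETWORK={network}",
        f"FLANNEL_SUBNET={gateway}/{subnet.prefixlen}",
        f"FLANNEL_MTU={iface_mtu - VXLAN_OVERHEAD}",
        f"FLANNEL_IPMASQ={str(ipmasq).lower()}",
    ])


print(render_subnet_env("10.0.0.0/16", "10.0.4.0-24", iface_mtu=1500))
```

The four printed lines match the /run/flannel/subnet.env shown in section 3.6, which is exactly the input Docker needs, hence the start-up order.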

3.5 Verify the Flannel network

Check the data in etcd:

[root@docker-server1 ~]# etcdctl ls /coreos.com/network/subnets

/coreos.com/network/subnets/10.0.4.0-24

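3.6 Configure Docker

After Docker is installed, its startup options must be changed so that it uses flannel for IP allocation and network communication.

Once Flannel is running, it generates an environment file containing the parameters this host needs to communicate over flannel: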

[root@docker-server1 ~]# cat /run/flannel/subnet.env

FLANNEL_NETWORK=10.0.0.0/16
FLANNEL_SUBNET=10.0.4.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false

On docker-server2, configure flannel the same way as on docker-server1; etcd does not need to be installed there:

[root@docker-server2 ~]# tar -xf flannel-v0.11.0-linux-amd64.tar.gz

[root@docker-server2 ~]# mv flanneld /usr/bin/

[root@docker-server2 ~]# mv mk-docker-opts.sh /usr/bin/

[root@docker-server2 ~]# /usr/bin/flanneld --etcd-endpoints="http://192.168.132.131:2379" --iface=192.168.132.132 --etcd-prefix=/coreos.com/network &

[root@docker-server2 ~]# mk-docker-opts.sh -c

[root@docker-server2 ~]# cat /run/docker_opts.env

Edit the Docker systemd unit as on docker-server1, and remove the bip setting from /etc/docker/daemon.json:

[root@docker-server2 ~]# systemctl daemon-reload

[root@docker-server2 ~]# systemctl restart docker

[root@docker-server2 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:63:fd:11 brd ff:ff:ff:ff:ff:ff
    inet 192.168.132.132/24 brd 192.168.132.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::6a92:62ba:1b33:c93d/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:50:67:ff:90 brd ff:ff:ff:ff:ff:ff
    inet 10.0.18.1/24 brd 10.0.18.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:50ff:fe67:ff90/64 scope link
       valid_lft forever preferred_lft forever
14: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
    link/ether 62:b9:76:44:bf:32 brd ff:ff:ff:ff:ff:ff
    inet 10.0.18.0/32 scope global flannel.1
       valid_lft forever preferred_lft forever
    inet6 fe80::60b9:76ff:fe44:bf32/64 scope link
       valid_lft forever preferred_lft forever

[root@docker-server1 ~]# etcdctl ls /coreos.com/network/subnets

/coreos.com/network/subnets/10.0.8.0-24
/coreos.com/network/subnets/10.0.18.0-24

These are the subnets leased by the two hosts. Inspect each lease:

[root@docker-server1 ~]# etcdctl get /coreos.com/network/subnets/10.0.18.0-24

{"PublicIP":"192.168.132.132","BackendType":"vxlan","BackendData":{"VtepMAC":"62:b9:76:44:bf:32"}}

[root@docker-server1 ~]# etcdctl get /coreos.com/network/subnets/10.0.8.0-24

{"PublicIP":"192.168.132.131","BackendType":"vxlan","BackendData":{"VtepMAC":"62:93:c1:0f:b9:b0"}}
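Each lease value tells the other hosts how to reach that subnet: PublicIP is the outer destination on the host network, and VtepMAC is the MAC address of the owner's flannel.1 device (62:b9:76:44:bf:32 is indeed the flannel.1 MAC on docker-server2 in the ip addr output above). The Python sketch below only parses the two values shown; vxlan_peers is a hypothetical helper. flanneld itself turns this information into neighbour (ARP) and forwarding-database entries on flannel.1, which the sketch does not attempt.

```python
import json

# Key/value pairs as returned by the two etcdctl get commands above.
raw_leases = {
    "/coreos.com/network/subnets/10.0.18.0-24":
        '{"PublicIP":"192.168.132.132","BackendType":"vxlan",'
        '"BackendData":{"VtepMAC":"62:b9:76:44:bf:32"}}',
    "/coreos.com/network/subnets/10.0.8.0-24":
        '{"PublicIP":"192.168.132.131","BackendType":"vxlan",'
        '"BackendData":{"VtepMAC":"62:93:c1:0f:b9:b0"}}',
}


def vxlan_peers(raw: dict, local_ip: str) -> list:
    """Return (subnet, vtep_mac, public_ip) for every lease owned by another host."""
    peers = []
    for key, value in raw.items():
        lease = json.loads(value)
        if lease["PublicIP"] == local_ip:
            continue  # no tunnel entry is needed for our own subnet
        subnet = key.rsplit("/", 1)[1].replace("-", "/")  # "10.0.18.0-24" -> "10.0.18.0/24"
        peers.append((subnet, lease["BackendData"]["VtepMAC"], lease["PublicIP"]))
    return peers


for subnet, mac, ip in vxlan_peers(raw_leases, local_ip="192.168.132.131"):
    print(f"{subnet}: frames for VTEP {mac} are tunnelled to {ip}")
```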

3.7 Verify cross-host container connectivity

[root@docker-server1 ~]# docker run -it busybox

/ # ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
124: eth0@if125: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue
    link/ether 02:42:0a:00:04:02 brd ff:ff:ff:ff:ff:ff
    inet 10.0.4.2/24 brd 10.0.4.255 scope global eth0
       valid_lft forever preferred_lft forever

[root@docker-server2 ~]# docker run -it busybox

/ # ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
15: eth0@if16: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue
    link/ether 02:42:0a:00:12:02 brd ff:ff:ff:ff:ff:ff
    inet 10.0.18.2/24 brd 10.0.18.255 scope global eth0
       valid_lft forever preferred_lft forever

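Ping in both directions:

Container on 192.168.132.131:
/ # ping 10.0.18.2
PING 10.0.18.2 (10.0.18.2): 56 data bytes
64 bytes from 10.0.18.2: seq=0 ttl=62 time=2.517 ms
64 bytes from 10.0.18.2: seq=1 ttl=62 time=1.058 ms
64 bytes from 10.0.18.2: seq=2 ttl=62 time=1.676 ms

Container on 192.168.132.132:
PING 10.0.4.2 (10.0.4.2): 56 data bytes
64 bytes from 10.0.4.2: seq=0 ttl=62 time=2.606 ms
64 bytes from 10.0.4.2: seq=1 ttl=62 time=1.727 ms

Containers on the two hosts can reach each other. The ttl=62 in the replies fits two routed hops: the reply leaves the remote container with TTL 64 and is decremented once on each host.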

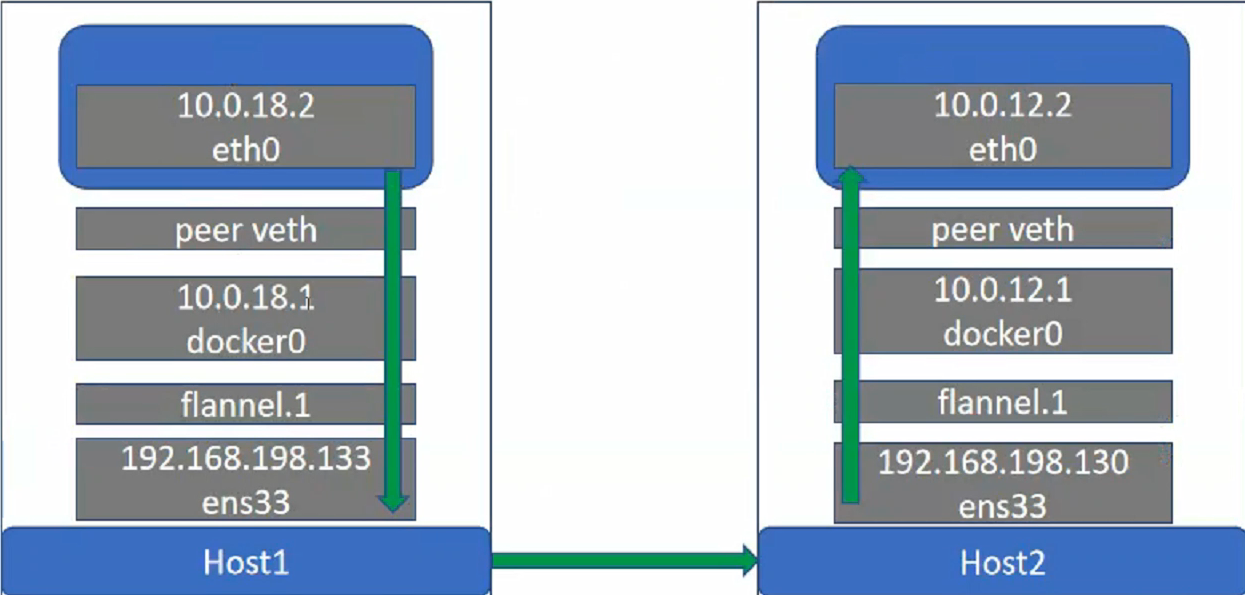

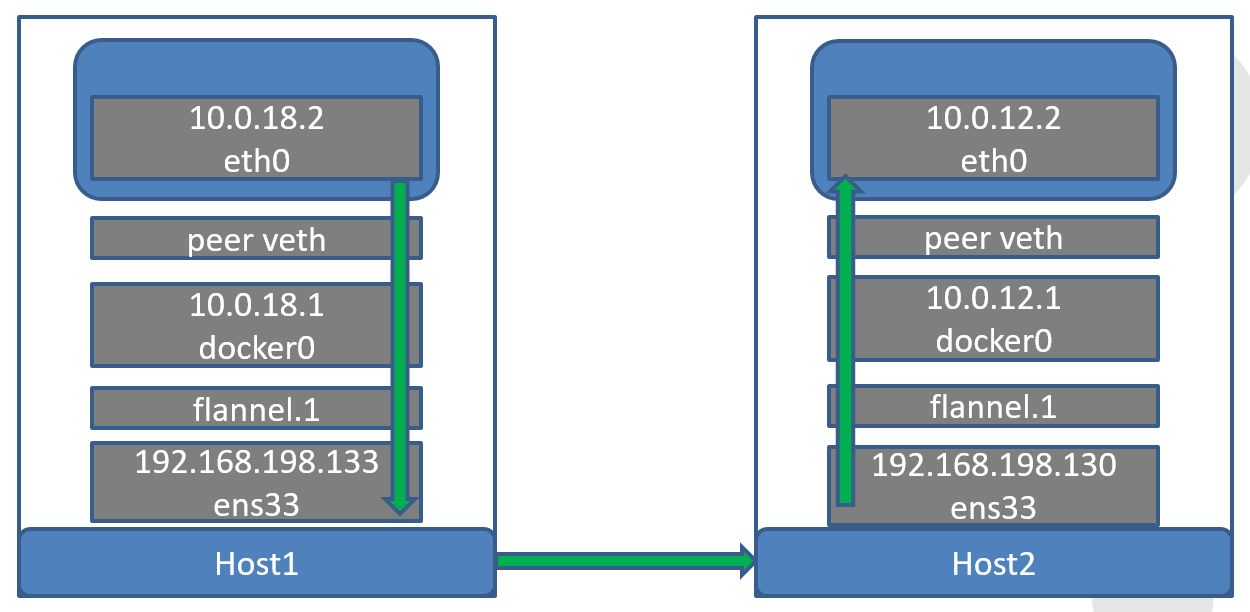

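The network packet flow at this point is shown in the figures above.

3.8 Configure host-gw as the backend

host-gw is another flannel backend. Unlike vxlan, host-gw does not encapsulate packets. Instead, it adds routes for the other hosts' subnets to each host's routing table, with the owning host as the next hop, and that is how containers communicate across hosts. Note that host-gw cannot communicate across host networks; put differently, spanning host networks requires support from the physical routers. Because nothing is encapsulated, it has the best performance.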
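The routes host-gw installs can be derived from the leases alone, which the Python sketch below models. host_gw_routes is a hypothetical helper, the lease table uses the two subnets from this walkthrough, and the same-network check reflects the restriction just described: the peer host is used directly as the gateway, so it must be reachable on the local network.

```python
import ipaddress


def host_gw_routes(leases, local_ip, local_net, dev):
    """Hypothetical helper: `ip route`-style lines host-gw would need on one host.

    leases maps container subnet -> public IP of the host that owns it.
    """
    lan = ipaddress.ip_network(local_net)
    routes = []
    for subnet, host in sorted(leases.items()):
        if host == local_ip:
            continue  # the local subnet is already reachable through docker0
        if ipaddress.ip_address(host) not in lan:
            # host-gw uses the peer itself as next hop, so it must be on-link
            raise ValueError(f"{host} is not on {lan}; host-gw cannot use it as a gateway")
        routes.append(f"{subnet} via {host} dev {dev}")
    return routes


leases = {"10.0.4.0/24": "192.168.132.131", "10.0.18.0/24": "192.168.132.132"}
print("\n".join(host_gw_routes(leases, "192.168.132.131", "192.168.132.0/24", "ens33")))
# 10.0.18.0/24 via 192.168.132.132 dev ens33
```

The printed line is the same as the 10.0.18.0/24 entry in the ip route output further down.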

Update the configuration in etcd:

[root@docker-server1 ~]# etcdctl --endpoints http://127.0.0.1:2379 set /coreos.com/network/config '{"Network": "10.0.0.0/16", "SubnetLen": 24, "SubnetMin": "10.0.1.0","SubnetMax": "10.0.20.0", "Backend": {"Type": "host-gw"}}'

{"Network": "10.0.0.0/16", "SubnetLen": 24, "SubnetMin": "10.0.1.0","SubnetMax": "10.0.20.0", "Backend": {"Type": "host-gw"}}

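Restart flanneld and Docker (86780 on docker-server1 and 74050 on docker-server2 are the PIDs of the running flanneld processes; look them up with ps -ef | grep flanneld):

[root@docker-server1 ~]# kill -9 86780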

[root@docker-server1 ~]# /usr/bin/flanneld --etcd-endpoints="http://192.168.132.131:2379" --iface=192.168.132.131 --etcd-prefix=/coreos.com/network &

[root@docker-server1 ~]# systemctl restart docker

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:3e:dd:55:81 brd ff:ff:ff:ff:ff:ff
    inet 10.0.4.1/24 brd 10.0.4.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:3eff:fedd:5581/64 scope link
       valid_lft forever preferred_lft forever
123: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
    link/ether 8e:b1:37:26:b0:59 brd ff:ff:ff:ff:ff:ff
    inet 10.0.4.0/32 scope global flannel.1
       valid_lft forever preferred_lft forever
    inet6 fe80::8cb1:37ff:fe26:b059/64 scope link
       valid_lft forever preferred_lft forever

[root@docker-server2 ~]# kill -9 74050

[root@docker-server2 ~]# /usr/bin/flanneld --etcd-endpoints="http://192.168.132.131:2379" --iface=192.168.132.132 --etcd-prefix=/coreos.com/network &

[root@docker-server2 ~]# systemctl restart docker

[root@docker-server2 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:63:fd:11 brd ff:ff:ff:ff:ff:ff
    inet 192.168.132.132/24 brd 192.168.132.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::6a92:62ba:1b33:c93d/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:50:67:ff:90 brd ff:ff:ff:ff:ff:ff
    inet 10.0.18.1/24 brd 10.0.18.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:50ff:fe67:ff90/64 scope link
       valid_lft forever preferred_lft forever
14: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
    link/ether 62:b9:76:44:bf:32 brd ff:ff:ff:ff:ff:ff
    inet 10.0.18.0/32 scope global flannel.1
       valid_lft forever preferred_lft forever
    inet6 fe80::60b9:76ff:fe44:bf32/64 scope link
       valid_lft forever preferred_lft forever

The routes can now be seen on the host:

[root@docker-server1 ~]# ip route

default via 192.168.132.2 dev ens33 proto static metric 100
10.0.0.0/16 dev flannel.1
10.0.4.0/24 dev docker0 proto kernel scope link src 10.0.4.1
10.0.18.0/24 via 192.168.132.132 dev ens33
172.17.0.0/16 dev br-b1c2d9c1e522 proto kernel scope link src 172.17.0.1
172.18.0.0/16 dev br-ec4a8380b2d3 proto kernel scope link src 172.18.0.1
172.22.16.0/24 dev br-f42e46889a2a proto kernel scope link src 172.22.16.1
192.168.132.0/24 dev ens33 proto kernel scope link src 192.168.132.131 metric 100
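The table above holds both a 10.0.0.0/16 route on flannel.1 and a 10.0.18.0/24 route via docker-server2. The kernel picks the most specific (longest-prefix) match, so traffic to containers on docker-server2 takes the host-gw route over ens33. Below is a toy Python model of that rule, restricted to the three container routes; it ignores metrics, the default route and everything else the real kernel lookup considers, and lookup is a hypothetical helper.

```python
import ipaddress

# The container-related entries from the ip route output above.
ROUTES = [
    ("10.0.0.0/16", "dev flannel.1"),
    ("10.0.4.0/24", "dev docker0"),
    ("10.0.18.0/24", "via 192.168.132.132 dev ens33"),
]


def lookup(dst: str) -> str:
    """Longest-prefix match: among the routes containing dst, the longest prefix wins."""
    addr = ipaddress.ip_address(dst)
    matches = [(ipaddress.ip_network(net), hop) for net, hop in ROUTES
               if addr in ipaddress.ip_network(net)]
    if not matches:
        raise LookupError(f"no route to {dst}")
    net, hop = max(matches, key=lambda m: m[0].prefixlen)
    return f"{net} {hop}"


print(lookup("10.0.18.2"))  # 10.0.18.0/24 via 192.168.132.132 dev ens33
print(lookup("10.0.4.2"))   # 10.0.4.0/24 dev docker0
print(lookup("10.0.7.9"))   # 10.0.0.0/16 dev flannel.1
```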

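Extension:

Calico networking

- BGP forwarding: comparable to host-gw; it cannot forward across subnets.

- IPIP forwarding: comparable to the vxlan mode.

Advantages of Calico:

- Both forwarding modes can be enabled at the same time, whereas flannel uses one backend at a time (flannel's vxlan backend does have a DirectRouting option, shown as DirectRouting=false in the flanneld log above, which routes directly between hosts on the same subnet).

- Automatic selection (Calico's CrossSubnet IPIP mode): if the hosts are on different subnets, IPIP forwarding is used; if they are on the same subnet, BGP forwarding is used.

- Traffic policy management for controlling traffic.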
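The automatic selection above corresponds to Calico's CrossSubnet IPIP mode. The Python function below is only a model of the decision rule, not Calico code; calico_mode and the 192.168.133.10 peer address are hypothetical. In both cases Calico distributes the routes with BGP; the rule only decides whether packets to a given peer are encapsulated.

```python
import ipaddress


def calico_mode(local_if: str, peer_ip: str) -> str:
    """Model of the CrossSubnet rule for traffic from this host to a peer host.

    local_if is this host's address with prefix, e.g. "192.168.132.131/24".
    """
    local_net = ipaddress.ip_interface(local_if).network
    if ipaddress.ip_address(peer_ip) in local_net:
        return "bgp"   # same subnet: plain routed traffic, no encapsulation
    return "ipip"      # different subnet: wrap the packet in an outer IP header


print(calico_mode("192.168.132.131/24", "192.168.132.132"))  # bgp
print(calico_mode("192.168.132.131/24", "192.168.133.10"))   # ipip
```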

ovs: Open vSwitch, the networking used by OpenShift.

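Author's note: this article is based mainly on material from teacher Yan Wei (晏威) of Yutian Education (誉天教育), and I performed and verified the steps in my own lab. If you wish to repost it, please obtain permission from Yutian Education (http://www.yutianedu.com/) or from Mr. Yan himself (https://www.cnblogs.com/breezey/). Thank you!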