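Introduction: The previous attempt to deploy Ceph with ceph-deploy did produce a result, but that result did not meet the original goal. This time I try deploying with Ansible to see whether any problems come up.

I. Environment preparation

ceph1 acts as the deployment node; ceph2, ceph3, and ceph4 act as the Ceph cluster nodes.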

| IP | Hostname | Role | OS |
|---|---|---|---|
| 172.25.250.10 | ceph1 | ceph-ansible | Red Hat release 7.4 |
| 172.25.250.11 | ceph2 | mon, mgr, osd | Red Hat release 7.4 |
| 172.25.250.12 | ceph3 | mon, mgr, osd | Red Hat release 7.4 |
| 172.25.250.13 | ceph4 | mon, mgr, osd | Red Hat release 7.4 |

1.1 Configure hosts on ceph1

172.25.250.10 ceph1
172.25.250.11 ceph2
172.25.250.12 ceph3
172.25.250.13 ceph4
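The host entries above can also be added idempotently, so that running the step twice does not duplicate lines. This is only a minimal sketch: the function name `add_host_entry` and the `HOSTS_FILE` override are hypothetical helpers introduced here so the logic can be tried on a scratch file before touching /etc/hosts.

```shell
# Append "IP NAME" to a hosts file unless NAME is already mapped there.
# HOSTS_FILE is an assumed override; it defaults to /etc/hosts.
add_host_entry() (
    ip=$1
    name=$2
    file=${HOSTS_FILE:-/etc/hosts}
    # Look for NAME as a whole field (after the IP) on any non-comment line.
    if ! awk -v n="$name" '
            !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
)
```

For example, `add_host_entry 172.25.250.11 ceph2` adds the line once and does nothing on later runs.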

1.2 Configure passwordless SSH login

[root@ceph1 ~]# ssh-keygen

[root@ceph1 ~]# ssh-copy-id -i .ssh/id_rsa.pub ceph1

[root@ceph1 ~]# ssh-copy-id -i .ssh/id_rsa.pub ceph2

[root@ceph1 ~]# ssh-copy-id -i .ssh/id_rsa.pub ceph3

[root@ceph1 ~]# ssh-copy-id -i .ssh/id_rsa.pub ceph4
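The four ssh-copy-id calls above follow the same pattern, so they could be written as a loop. A minimal sketch, assuming the same key path and hostnames as above; `copy_key_to_nodes` and the `SSH_COPY_ID` override are hypothetical names, the latter added only so the loop can be dry-run without contacting any host.

```shell
# Copy one public key to every node given on the command line.
# SSH_COPY_ID is an assumed override (defaults to the real ssh-copy-id),
# so SSH_COPY_ID=echo turns the loop into a dry run.
copy_key_to_nodes() (
    key=$1
    shift
    for node in "$@"; do
        # Stop at the first node that cannot be reached or refuses the key.
        "${SSH_COPY_ID:-ssh-copy-id}" -i "$key" "$node" || exit 1
    done
)
```

Usage would be `copy_key_to_nodes .ssh/id_rsa.pub ceph1 ceph2 ceph3 ceph4`; each node still prompts for its password once.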

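II. Deploying the Ceph cluster

2.1 Install Ansible on the deployment node

Note: before deploying, you normally need to configure the yum repositories and NTP time synchronization first.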

[root@ceph1 ~]# yum -y install ansible

[root@ceph1 ~]# cd /etc/ansible/

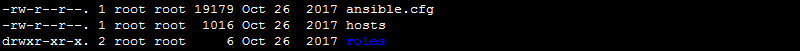

[root@ceph1 ansible]# ll

2.2 Define the hosts

[root@ceph1 ansible]# vim hosts

[mons]

ceph2

ceph3

ceph4

[mgrs]

ceph2

ceph3

ceph4

[osds]

ceph2

ceph3

ceph4

[clients]

ceph1

2.3 Install ceph-ansible

[root@ceph1 ansible]# yum -y install ceph-ansible

[root@ceph1 ansible]# cd /usr/share/ceph-ansible/

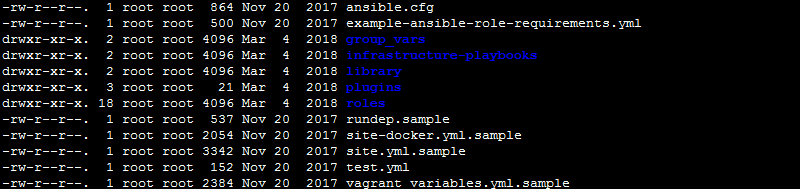

[root@ceph1 ceph-ansible]# ls

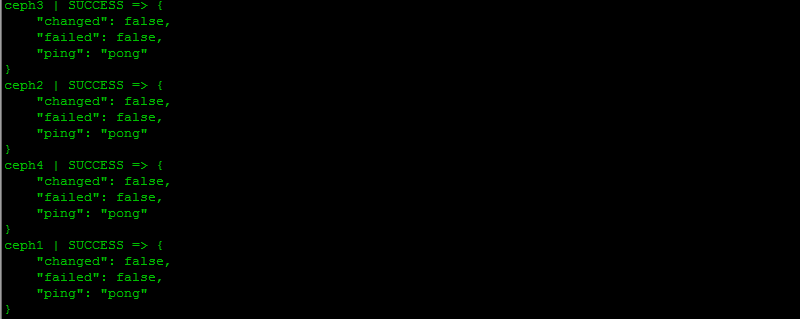

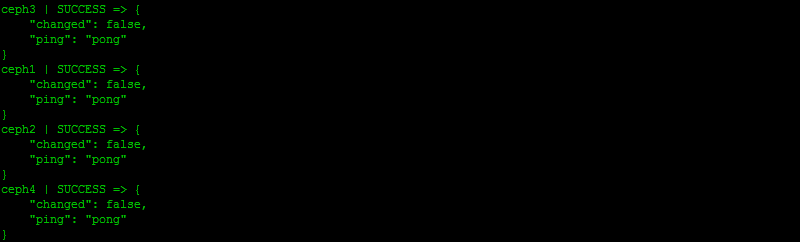

[root@ceph1 ceph-ansible]# ansible all -m ping

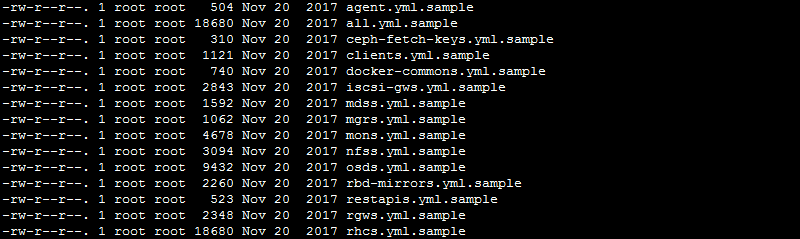

[root@ceph1 ceph-ansible]# cd /usr/share/ceph-ansible/group_vars/

[root@ceph1 group_vars]# cp mons.yml.sample mons.yml

[root@ceph1 group_vars]# cp mgrs.yml.sample mgrs.yml

[root@ceph1 group_vars]# cp osds.yml.sample osds.yml

[root@ceph1 group_vars]# cp clients.yml.sample clients.yml

[root@ceph1 group_vars]# cp all.yml.sample all.yml
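The five copies above can be expressed as one loop that also refuses to overwrite a file that has already been edited. A minimal sketch; the function name `activate_samples` is a hypothetical helper, not part of ceph-ansible.

```shell
# Copy NAME.yml.sample to NAME.yml inside a group_vars directory,
# leaving any NAME.yml that already exists untouched.
activate_samples() (
    dir=$1
    shift
    for name in "$@"; do
        [ -e "$dir/$name.yml" ] || cp "$dir/$name.yml.sample" "$dir/$name.yml" || exit 1
    done
)
```

For the files used here that would be `activate_samples /usr/share/ceph-ansible/group_vars mons mgrs osds clients all`.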

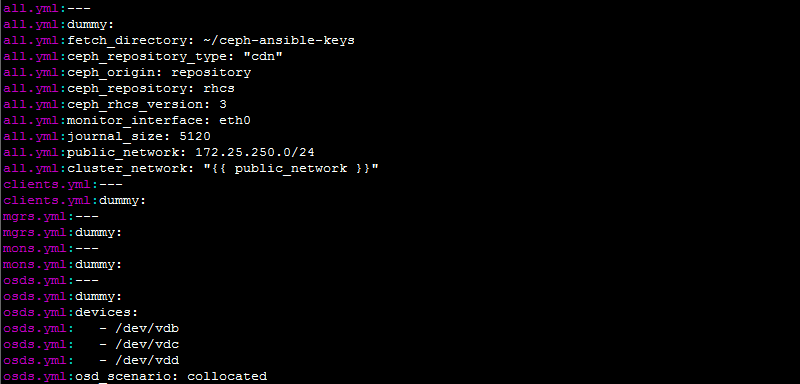

[root@ceph1 group_vars]# vim all.yml

fetch_directory: ~/ceph-ansible-keys
ceph_repository_type: "cdn"

ceph_origin: repository

ceph_repository: rhcs

ceph_rhcs_version: 3

monitor_interface: eth0

journal_size: 5120

public_network: 172.25.250.0/24

cluster_network: "{{ public_network }}"

2.4 Define the OSDs

[root@ceph1 group_vars]# vim osds.yml

devices:
  - /dev/vdb
  - /dev/vdc
  - /dev/vdd
osd_scenario: collocated

[root@ceph1 group_vars]# grep -Ev "^$|^\s*#" *.yml
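The grep filter above shows only the effective settings by hiding empty lines and comment lines. A minimal sketch of the same idea as a reusable function, written with the POSIX class `[[:space:]]`; the name `effective_settings` is hypothetical, and unlike the original pattern this variant also hides lines that contain only whitespace.

```shell
# Print only the effective settings of the given files (or stdin):
# drop blank lines and lines whose first non-blank character is '#'.
effective_settings() {
    grep -Ev '^[[:space:]]*(#|$)' "$@"
}
```

Running `effective_settings all.yml` prints just the keys that were actually set.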

2.5 Define the Ansible entry playbook

[root@ceph1 group_vars]# cd ..

[root@ceph1 ceph-ansible]# cp site.yml.sample site.yml

[root@ceph1 ceph-ansible]# vim site.yml

- hosts:
  - mons
  # - agents
  - osds
  # - mdss
  # - rgws
  # - nfss
  # - restapis
  # - rbdmirrors
  - clients
  - mgrs
  # - iscsi-gws

2.6 Install

[root@ceph1 ceph-ansible]# ansible-playbook site.yml

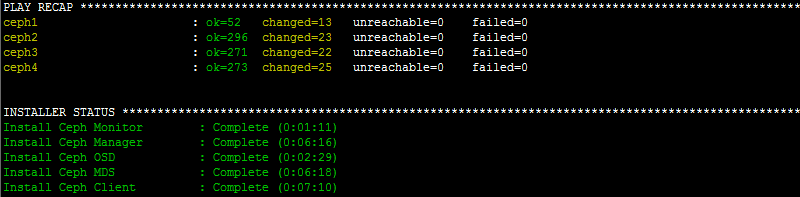

If the installation runs into problems, it reports errors. Adjust the yml files according to the error messages and run it again. Output like the following means the installation has finished:

PLAY RECAP ****************************************************************
ceph1 : ok=42 changed=4 unreachable=0 failed=0
ceph2 : ok=213 changed=9 unreachable=0 failed=0
ceph3 : ok=196 changed=3 unreachable=0 failed=0
ceph4 : ok=190 changed=28 unreachable=0 failed=0
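The success criterion in the recap is that every host reports unreachable=0 and failed=0. A minimal sketch of checking that automatically on saved playbook output; `recap_ok` is a hypothetical helper and it assumes the recap line format shown above.

```shell
# Read ansible-playbook output on stdin and succeed only if at least one
# host recap line is present and all of them show unreachable=0 and failed=0.
recap_ok() {
    awk '
        /unreachable=[0-9]+/ && /failed=[0-9]+/ {
            hosts++
            if ($0 !~ /unreachable=0([^0-9]|$)/ || $0 !~ /failed=0([^0-9]|$)/) bad++
        }
        END { exit (hosts == 0 || bad > 0) }
    '
}
```

One way to use it is `ansible-playbook site.yml | tee deploy.log | recap_ok`, where deploy.log is an arbitrary file name.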

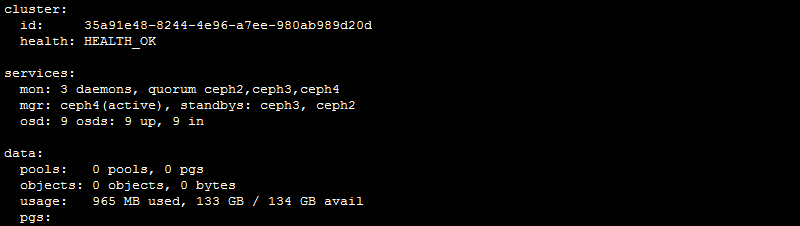

2.7 Verification

[root@ceph2 ~]# ceph -s

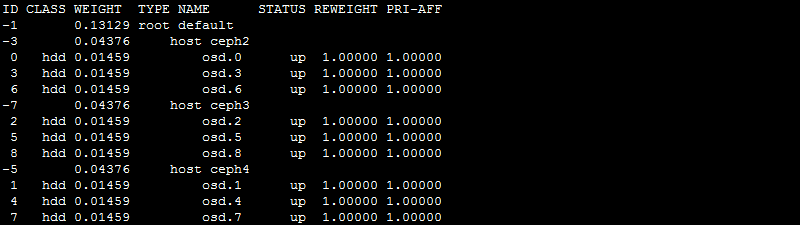

[root@ceph2 ~]# ceph osd tree

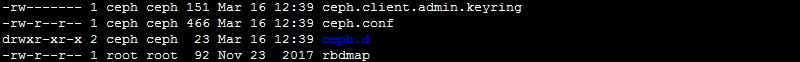

All the files are stored under /etc/ceph.

[root@ceph2 ~]# cd /etc/ceph/

[root@ceph2 ceph]# ll

The configuration file is named ceph.conf because no cluster name was specified, so the name defaults to ceph. In general the configuration file is named cluster_name.conf.

[root@ceph2 ceph]# cat ceph.conf

[global]
fsid = 35a91e48-8244-4e96-a7ee-980ab989d20d
mon initial members = ceph2,ceph3,ceph4
mon host = 172.25.250.11,172.25.250.12,172.25.250.13
public network = 172.25.250.0/24
cluster network = 172.25.250.0/24
[osd]
osd mkfs type = xfs
osd mkfs options xfs = -f -i size=2048
osd mount options xfs = noatime,largeio,inode64,swalloc
osd journal size = 5120
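To confirm that a value from all.yml actually reached the generated ceph.conf, a single key can be pulled out of a section. A minimal sketch using awk; `conf_get` is a hypothetical helper, and it matches the key text literally, which is a simplification (Ceph itself treats spaces and underscores in option names as equivalent).

```shell
# Print the value of KEY inside [SECTION] of an INI-style file.
# Usage: conf_get FILE SECTION KEY
conf_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*\[/ {
            # Section header such as [global]: remember its bare name.
            gsub(/^[ \t]*\[|\][ \t]*$/, "")
            current = $0
            next
        }
        current == section {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) print v
        }
    ' "$1"
}
```

For example, `conf_get /etc/ceph/ceph.conf osd "osd journal size"` should print the journal_size that was set in all.yml.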

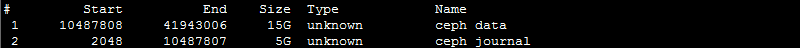

Check the disks: each one has a journal partition and a data partition.

[root@ceph2 ceph]# fdisk -l

Check the Ceph processes:

[root@ceph2 ceph]# ps aux|grep ceph

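2.8 Adding nodes

To add nodes, add their hostnames to the hosts file on the deployment node and run the deployment again; it extends the existing cluster in place.

For example, to add the three disks on ceph1 as OSDs, configure it as follows: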

[root@ceph1 ansible]# vim hosts

[mons]

ceph2

ceph3

ceph4

[mgrs]

ceph2

ceph3

ceph4

[osds]

ceph2

ceph3

ceph4

ceph1

[clients]

ceph1
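The inventory change above amounts to adding one hostname to one group. A minimal sketch of doing that without an editor; `add_to_group` is a hypothetical helper that prints the modified inventory to stdout, appends the host at the end of the group, and leaves the inventory unchanged if the host is already listed or the group does not exist.

```shell
# Print INVENTORY with HOST added to [GROUP] unless it is already there.
# Usage: add_to_group INVENTORY GROUP HOST
add_to_group() {
    awk -v group="[$2]" -v host="$3" '
        # Emit the host when leaving the target group without having seen it.
        function emit_pending() { if (in_group && !seen) print host }
        /^\[/ { emit_pending(); in_group = ($0 == group); seen = 0 }
        in_group && $1 == host { seen = 1 }
        { print }
        END { emit_pending() }
    ' "$1"
}
```

A possible usage is `add_to_group hosts osds ceph1 > hosts.new`, followed by reviewing hosts.new before replacing the original file.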

Then run the deployment again:

[root@ceph1 ceph-ansible]# ansible-playbook site.yml

If the deployment goes wrong, the cluster can be removed with the following playbook:

[root@ceph1 ceph-ansible]# vim infrastructure-playbooks/purge-cluster.yml

Part of its header comment:

# This playbook purges Ceph
# It removes: packages, configuration files and ALL THE DATA
#
# Use it like this:
# ansible-playbook purge-cluster.yml
#     Prompts for confirmation to purge, defaults to no and
#     doesn't purge the cluster. yes purges the cluster.
#
# ansible-playbook -e ireallymeanit=yes|no purge-cluster.yml
#     Overrides the prompt using -e option. Can be used in
#     automation scripts to avoid interactive prompt.

Run the purge:

[root@ceph1 ceph-ansible]# ansible-playbook infrastructure-playbooks/purge-cluster.yml

Installation in another environment

I. Environment preparation

Each virtual machine has spare disks reserved for use as OSDs.

| IP | Hostname | Role | OS | Disks |
|---|---|---|---|---|
| 172.25.254.130 | ceph1 | ceph-ansible | CentOS Linux release 7.4.1708 (Core) | /dev/sdb, /dev/sdc, /dev/sdd |
| 172.25.254.131 | ceph2 | mon, mgr, osd | CentOS Linux release 7.4.1708 (Core) | /dev/sdb, /dev/sdc, /dev/sdd |
| 172.25.254.132 | ceph3 | mon, mgr, osd | CentOS Linux release 7.4.1708 (Core) | /dev/sdb, /dev/sdc, /dev/sdd |
| 172.25.254.133 | ceph4 | mon, mgr, osd | CentOS Linux release 7.4.1708 (Core) | /dev/sdb, /dev/sdc, /dev/sdd |

1.1 Configure hosts

172.25.254.130 ceph1
172.25.254.131 ceph2
172.25.254.132 ceph3
172.25.254.133 ceph4

1.2 Configure passwordless SSH login

[root@ceph1 ~]# ssh-keygen

[root@ceph1 ~]# ssh-copy-id -i .ssh/id_rsa.pub ceph1

[root@ceph1 ~]# ssh-copy-id -i .ssh/id_rsa.pub ceph2

[root@ceph1 ~]# ssh-copy-id -i .ssh/id_rsa.pub ceph3

[root@ceph1 ~]# ssh-copy-id -i .ssh/id_rsa.pub ceph4

1.3 Upgrade the kernel

# Add the ELRepo repository
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
# List and install the mainline kernel from elrepo-kernel
yum --disablerepo='*' --enablerepo=elrepo-kernel repolist
yum --disablerepo='*' --enablerepo=elrepo-kernel list 'kernel*'
yum --disablerepo='*' --enablerepo=elrepo-kernel install -y kernel-ml.x86_64
# Show the GRUB menu entries, boot the first one by default, then reboot
awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg
grub2-set-default 0
reboot
# After the reboot: remove the old 3.10 kernel packages and install the matching tools
rpm -qa|grep kernel|grep 3.10|xargs yum remove -y
yum --disablerepo='*' --enablerepo=elrepo-kernel install -y kernel-ml-tools.x86_64
rpm -qa|grep kernel
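After the reboot it is worth confirming that the node really booted the newer kernel before the 3.10 packages are removed. A minimal sketch, assuming GNU `sort -V` is available; `version_at_least` is a hypothetical helper and the threshold 4.0 in the usage line is only an illustrative value.

```shell
# Succeed if version $1 is at least version $2 (version-aware comparison).
version_at_least() {
    # The smaller of the two versions sorts first; it must be the threshold.
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}
```

Usage: `version_at_least "$(uname -r)" 4.0 || echo "still running the old kernel"`.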

systemctl stop firewalld

systemctl disable firewalld

II. Deployment

2.1 Download the package

[root@ceph1 ~]# wget -c https://github.com/ceph/ceph-ansible/archive/v3.1.7.tar.gz

[root@ceph1 ~]# tar xf v3.1.7.tar.gz

[root@ceph1 ~]# cd ceph-ansible-3.1.7

2.3 Edit the inventory and add the host information

[root@ceph1 ceph-ansible-3.1.7]# vim hosts

[mons]

ceph2

ceph3

ceph4

[osds]

ceph2

ceph3

ceph4

[mgrs]

ceph2

ceph3

ceph4

[mdss]

ceph2

ceph3

ceph4

[clients]

ceph1

ceph2

ceph3

ceph4

[root@ceph1 ceph-ansible-3.1.7]# ansible all -m ping -i hosts

2.4 Edit all.yml and write the following content

[root@ceph1 ceph-ansible-3.1.7]# cp group_vars/all.yml.sample group_vars/all.yml

[root@ceph1 ceph-ansible-3.1.7]# cp group_vars/osds.yml.sample group_vars/osds.yml

[root@ceph1 ceph-ansible-3.1.7]# cp site.yml.sample site.yml

[root@ceph1 ceph-ansible-3.1.7]# vim group_vars/all.yml

ceph_origin: repository
ceph_repository: community
ceph_mirror: http://mirrors.aliyun.com/ceph
ceph_stable_key: http://mirrors.aliyun.com/ceph/keys/release.asc
ceph_stable_release: luminous
ceph_stable_repo: "{{ ceph_mirror }}/rpm-{{ ceph_stable_release }}"
fsid: 54d55c64-d458-4208-9592-36ce881cbcb7  # generated with uuidgen
generate_fsid: false
cephx: true
public_network: 172.25.254.0/20
cluster_network: 172.25.254.0/20
monitor_interface: ens33
ceph_conf_overrides:
  global:
    rbd_default_features: 7
    auth cluster required: cephx
    auth service required: cephx
    auth client required: cephx
    osd journal size: 2048
    osd pool default size: 3
    osd pool default min size: 1
    mon_pg_warn_max_per_osd: 1024
    osd pool default pg num: 128
    osd pool default pgp num: 128
    max open files: 131072
    osd_deep_scrub_randomize_ratio: 0.01
  mgr:
    mgr modules: dashboard
  mon:
    mon_allow_pool_delete: true
  client:
    rbd_cache: true
    rbd_cache_size: 335544320
    rbd_cache_max_dirty: 134217728
    rbd_cache_max_dirty_age: 10
  osd:
    osd mkfs type: xfs
    ms_bind_port_max: 7100
    osd_client_message_size_cap: 2147483648
    osd_crush_update_on_start: true
    osd_deep_scrub_stride: 131072
    osd_disk_threads: 4
    osd_map_cache_bl_size: 128
    osd_max_object_name_len: 256
    osd_max_object_namespace_len: 64
    osd_max_write_size: 1024
    osd_op_threads: 8
    osd_recovery_op_priority: 1
    osd_recovery_max_active: 1
    osd_recovery_max_single_start: 1
    osd_recovery_max_chunk: 1048576
    osd_recovery_threads: 1
    osd_max_backfills: 4
    osd_scrub_begin_hour: 23
    osd_scrub_end_hour: 7
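Because generate_fsid is false, the fsid in all.yml has to be a well-formed UUID supplied by hand, which the comment says came from uuidgen. A minimal sketch of a format check before running the playbook; `is_valid_fsid` is a hypothetical helper and it only checks the 8-4-4-4-12 hexadecimal shape, nothing Ceph-specific.

```shell
# Succeed if the argument has the UUID shape printed by uuidgen
# (8-4-4-4-12 hexadecimal digits, case-insensitive).
is_valid_fsid() {
    printf '%s\n' "$1" | grep -Eqi '^[0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12}$'
}
```

Usage: `is_valid_fsid 54d55c64-d458-4208-9592-36ce881cbcb7 && echo ok`.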

[root@ceph1 ceph-ansible-3.1.7]# vim group_vars/osds.yml

devices:
  - /dev/sdb
  - /dev/sdc
  - /dev/sdd
osd_scenario: collocated
osd_objectstore: bluestore
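Every path under devices has to exist as a block device on each OSD node, otherwise the OSD tasks cannot use it. A minimal sketch of a pre-flight check to run on a node; `missing_devices` is a hypothetical helper that simply prints the arguments that are not block devices.

```shell
# Print every argument that is not an existing block device.
missing_devices() {
    for dev in "$@"; do
        [ -b "$dev" ] || printf '%s\n' "$dev"
    done
}
```

Running `missing_devices /dev/sdb /dev/sdc /dev/sdd` on an OSD node prints nothing when all three disks are present.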

[root@ceph1 ceph-ansible-3.1.7]# vim site.yml

# Defines deployment design and assigns role to server groups
- hosts:
  - mons
  # - agents
  - osds
  - mdss
  # - rgws
  # - nfss
  # - rbdmirrors
  - clients
  - mgrs

2.5 Run the deployment

[root@ceph1 ceph-ansible-3.1.7]# ansible-playbook site.yml -i hosts

-bash: ansible-playbook: command not found

[root@ceph1 ceph-ansible-3.1.7]# yum install ansible -y

[root@ceph1 ceph-ansible-3.1.7]# ansible-playbook site.yml -i hosts

The installation succeeded.
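The "command not found" error in this run could be caught before starting by checking that the required tools are on the PATH. A minimal sketch; `require_cmds` is a hypothetical helper built on the standard `command -v`.

```shell
# Report each named command that is missing from PATH; fail if any is missing.
require_cmds() {
    missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            printf 'missing: %s\n' "$cmd" >&2
            missing=1
        fi
    done
    [ "$missing" -eq 0 ]
}
```

Usage: `require_cmds ansible ansible-playbook || yum install ansible -y`.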

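Note: if the process fails partway through, purge the cluster first and then deploy again.

cp infrastructure-playbooks/purge-cluster.yml purge-cluster.yml  # must be copied to the project root
ansible-playbook -i hosts purge-cluster.yml

The installation also succeeds without upgrading the kernel, but the cluster's other behavior and performance were not tested in that case.

Reference: https://yq.aliyun.com/articles/624202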