Code example:

    package com.atguigu.hdfs;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import org.junit.After;
    import org.junit.Before;
    import org.junit.Test;

    import java.io.IOException;
    import java.net.URI;
    import java.net.URISyntaxException;
    import java.util.Arrays;

    public class HdfsClient {

        private FileSystem fs;

        // Connect to the NameNode as user "root" before each test
        @Before
        public void init() throws URISyntaxException, IOException, InterruptedException {
            URI uri = new URI("hdfs://master:9000");
            Configuration configuration = new Configuration();
            String user = "root";
            fs = FileSystem.get(uri, configuration, user);
        }

        // Release the connection after each test
        @After
        public void close() throws IOException {
            fs.close();
        }

        // Create a directory
        @Test
        public void testmkdir() throws IOException {
            fs.mkdirs(new Path("/homework"));
        }

        // Upload a local file. Arguments: delSrc (delete the local source after copying),
        // overwrite (replace an existing target), source path, destination path.
        @Test
        public void testPut() throws IOException {
            // fs.copyFromLocalFile(true, true, new Path("D:\\sunwukong.txt"), new Path("/xiyou/huaguoshan"));
        }

        // List every file under "/" recursively and print its details
        @Test
        public void testListFiles() throws IOException {
            // Reuse the FileSystem opened in init(); close() releases it afterwards
            RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);

            while (listFiles.hasNext()) {
                LocatedFileStatus fileStatus = listFiles.next();

                System.out.println("========" + fileStatus.getPath() + "=========");
                System.out.println(fileStatus.getPermission());
                System.out.println(fileStatus.getOwner());
                System.out.println(fileStatus.getGroup());
                System.out.println(fileStatus.getLen());
                System.out.println(fileStatus.getModificationTime());
                System.out.println(fileStatus.getReplication());
                System.out.println(fileStatus.getBlockSize());
                System.out.println(fileStatus.getPath().getName());

                // Block locations (hosts that store each block of the file)
                BlockLocation[] blockLocations = fileStatus.getBlockLocations();
                System.out.println(Arrays.toString(blockLocations));
            }
        }
    }
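The listing test prints raw values: `getLen()` and `getBlockSize()` return sizes in bytes, and `getModificationTime()` returns milliseconds since the Unix epoch. The following is a minimal sketch of how those numbers could be made readable using only the JDK. The class `FileStatusFormat` and its methods `formatTime` and `formatSize` are hypothetical helpers introduced here for illustration; they are not part of the Hadoop API, and the choice of UTC and binary units is an assumption.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

// Hypothetical helper for illustration; not part of the Hadoop API.
public class FileStatusFormat {

    private static final DateTimeFormatter FORMATTER =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss").withZone(ZoneOffset.UTC);

    // Converts epoch milliseconds (the unit of getModificationTime()) to a UTC timestamp.
    public static String formatTime(long epochMillis) {
        return FORMATTER.format(Instant.ofEpochMilli(epochMillis));
    }

    // Converts a byte count (the unit of getLen() and getBlockSize()) to binary units.
    public static String formatSize(long bytes) {
        String[] units = {"B", "KiB", "MiB", "GiB", "TiB"};
        double value = bytes;
        int unit = 0;
        while (value >= 1024 && unit < units.length - 1) {
            value /= 1024;
            unit++;
        }
        if (unit == 0) {
            return bytes + " B";
        }
        return String.format(Locale.ROOT, "%.1f %s", value, units[unit]);
    }

    public static void main(String[] args) {
        System.out.println(formatTime(0L));          // prints 1970-01-01 00:00:00
        System.out.println(formatSize(134217728L));  // prints 128.0 MiB
    }
}
```

Inside `testListFiles`, the calls would look like `FileStatusFormat.formatSize(fileStatus.getLen())` and `FileStatusFormat.formatTime(fileStatus.getModificationTime())`.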

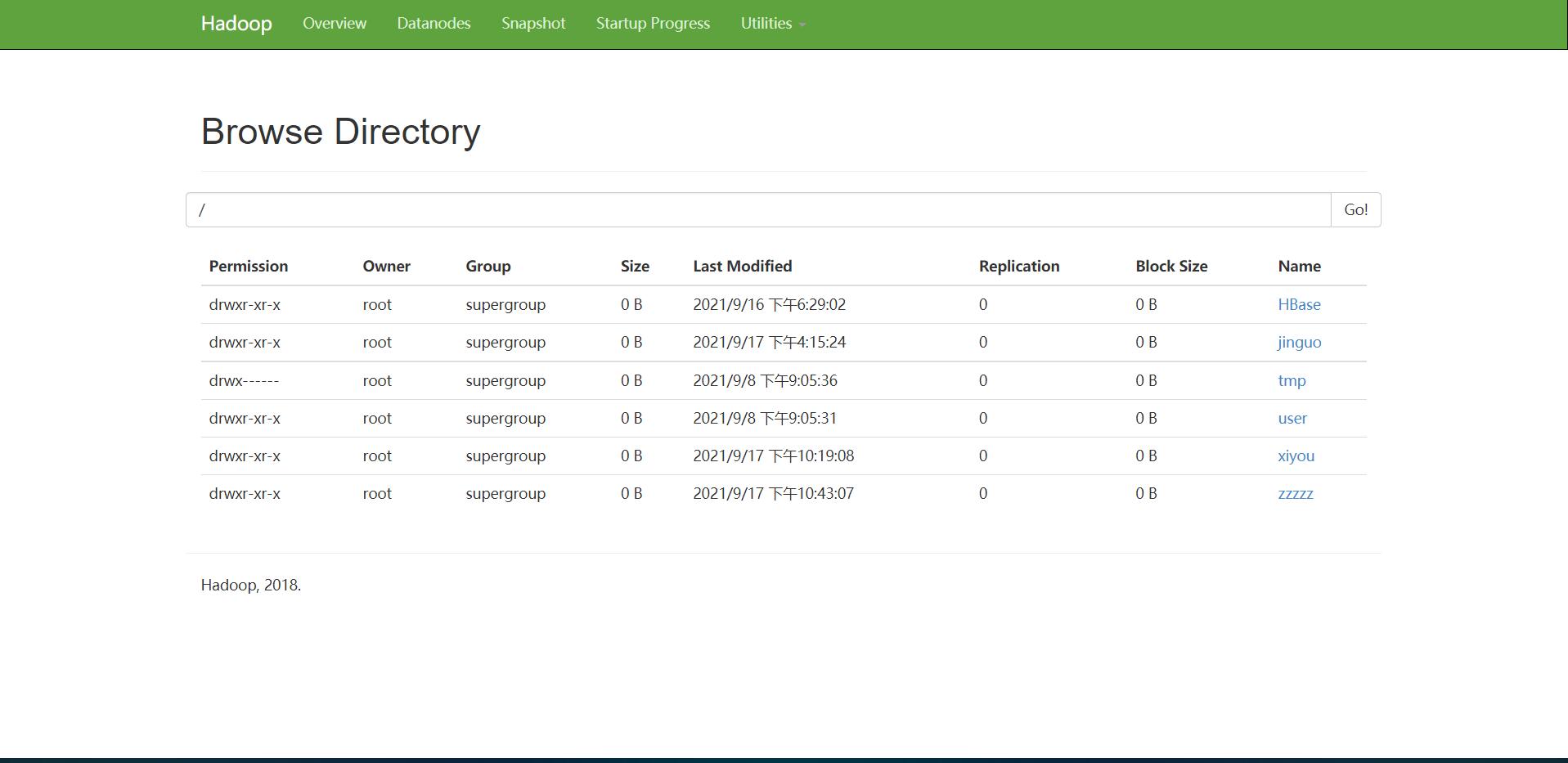

Run screenshot: