Prometheus Query Language

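Prometheus ships with its own functional expression query language, PromQL (Prometheus Query Language). It lets users select and aggregate time series data in real time, which makes it easy to query and retrieve data in Prometheus. The result of an expression can be shown as a graph or as a table in the browser, or consumed by external systems through the HTTP API. Although the name PromQL ends in QL, it is not an SQL-like language, because SQL tends to lack the expressive power needed for computations over time series.

PromQL is highly expressive. Besides the common operators, it provides a large number of built-in functions for advanced data processing, so that monitoring data can speak for itself. Day-to-day querying, visualization, and alert configuration all depend on PromQL.

PromQL is the core of working with Prometheus in practice, the foundation of every Prometheus use case, and required learning for anyone who uses Prometheus.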

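I. A First Look at PromQL

Let's start with a few examples to get a feel for how PromQL helps users understand system performance through metrics.

Example 1: get the amount of free memory on the current host, in MB.

node_memory_free_bytes_total / (1024 * 1024)

Note: node_memory_free_bytes_total is an instant vector expression, so the result is an instant vector. It returns the amount of free memory on the current host. Samples are in bytes by default, so we divide by 1024 * 1024 to convert to MB.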

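Example 2: based on 2 hours of sample data, predict whether the disk will fill up within the next 24 hours.

predict_linear(node_filesystem_free[2h], 24*3600) < 0

Note: the function predict_linear(v range-vector, t scalar) predicts the value of time series v in t seconds. It applies simple linear regression to the samples in the time window to predict the trend of the series. The expression above uses the free disk space of the file system over the past 2 hours to estimate whether the free space will drop below 0 within the next 24 hours. To build an alert on top of this linear prediction, the expression can be extended as follows. This is the rule syntax of Prometheus 1.x; Prometheus 2.x defines alerting rules in YAML files instead.

ALERT DiskWillFillIn24Hours IF predict_linear(node_filesystem_free[2h], 24*3600) < 0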
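To make the idea behind predict_linear more concrete, here is a minimal Python sketch of a least-squares line fitted through (timestamp, value) samples and extrapolated t seconds ahead. It is an illustration only, not Prometheus's implementation: the helper name predict_linear_sketch and the sample data are made up, and the sketch extrapolates from the last sample's timestamp as a simplifying assumption.

```python
def predict_linear_sketch(samples, t):
    """Fit value = slope * time + intercept by least squares, then
    extrapolate t seconds past the last sample's timestamp."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(ts for ts, _ in samples) / n
    mean_y = sum(v for _, v in samples) / n
    cov = sum((ts - mean_x) * (v - mean_y) for ts, v in samples)
    var = sum((ts - mean_x) ** 2 for ts, _ in samples)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    last_ts = samples[-1][0]
    return slope * (last_ts + t) + intercept


# Hypothetical data: free bytes sampled every 10 minutes for 2 hours,
# shrinking by 1 MB per second from an initial 50 GB.
samples = [(ts, 50e9 - 1e6 * ts) for ts in range(0, 7201, 600)]
predicted = predict_linear_sketch(samples, 24 * 3600)
will_fill = predicted < 0  # mirrors the "< 0" comparison in the query
```

With this made-up data the fitted line crosses zero well before the 24-hour mark, so will_fill is true, which is the situation the alert is meant to catch.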

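Example 3: nine common PromQL statements for http_requests_total (the total number of HTTP requests).

# 1. Query the total number of HTTP requests.

http_requests_total

# 2. Return all time series of the http_requests_total metric that carry the given job and handler labels.

http_requests_total{job="apiserver",handler="/api/comments"}

# 3. Conditional query: the total number of requests with status code 200.

http_requests_total{code="200"}

# 4. Range query: the samples recorded during the last 5 minutes.

http_requests_total{}[5m]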

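# 5. Using a built-in function

# Sum of all HTTP requests in the system

sum(http_requests_total)

# 6. Regular expressions: select the time series of jobs whose name matches a pattern (here, jobs ending in server).

http_requests_total{job=~".*server"}

# 7. Exclude 4xx: keep the data for every HTTP status code except 4xx.

http_requests_total{status!~"4.."}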

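# 8. Subquery: evaluate the 5-minute rate at 1-minute resolution

# over the last 30 minutes.

rate(http_requests_total[5m])[30m:1m]

# 9. The rate function: per-second average rate of increase over the last 5 minutes, returned as time series.

rate(http_requests_total[5m])

As shown above, a single metric, http_requests_total, already yields nine different representative monitoring examples, which shows how flexible PromQL statements are.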

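1.1 The four data types of PromQL

The examples above introduced the instant vector and the range vector. They are two of the four data types of the Prometheus expression language.

1. Instant vector: a set of time series, each containing a single sample, all sharing the same timestamp. In other words, the result of the expression contains only the most recent sample of each time series. Such an expression is called an instant vector expression.

2. Range vector: a set of time series, each containing the samples from a range of time.

3. Scalar: a single floating-point value.

4. String: a simple string value.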

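1.2 Time series

Unlike a relational database such as MySQL, a time series database produces data points at regular intervals and stores them in order of timestamp and value. This gives the vector mentioned above. With time on the horizontal axis and series on the vertical axis, these data points together form a matrix.

Each point in the matrix is called a sample. A sample consists of three parts.

- Metric: a metric name and a label set, e.g. request_total{path="/status",method="GET"}.

- Timestamp: millisecond precision by default.

- Value: a float64 by default.

Prometheus periodically collects new data points for all series.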

1.3 指标

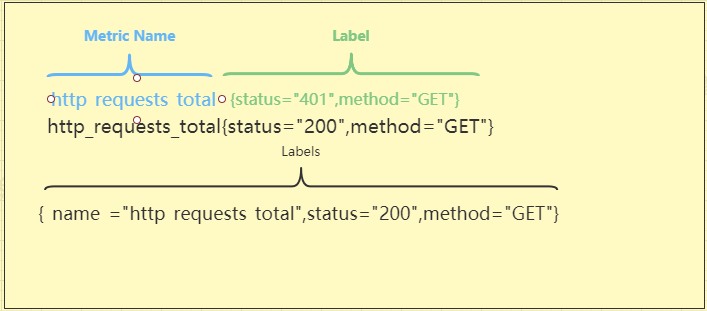

时间序列的指标(Metrics)可以基于 Bigtable(Google 论文)设计为 Key-Value 存储方式,如下图所示

上图中的 http_requests_total{status="401",method="GET"} @1434317560938 94358 为例,在 Key-value中,94358 作为 Value(也就是样本值 Sample Value),前面的 http_requests_total{status="401",method="GET"} @1434317560938 一律为 Key。在 Key 中,又由 Metrics Name(例子中的 http_requests_total)、Label(例子中的{status="401",method="GET"})和 Timestamp(例子中的 @1434317560938)3部分构成。

在 Prometheus 的世界里,所有的数值都是 64 bit的。每条时间序列里面记录的就是 64 bit Timestamp(时间戳)和 64 bit 的 Sample Value(采样值)。
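As a rough illustration of the "64-bit timestamp plus 64-bit value" idea, the Python sketch below packs one sample into 16 raw bytes. The Sample class and its byte layout are assumptions made for this example only; Prometheus's on-disk storage compresses samples and does not use this layout.

```python
import struct
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    timestamp_ms: int  # 64-bit integer, milliseconds since the Unix epoch
    value: float       # 64-bit IEEE 754 float

    def pack(self) -> bytes:
        # ">qd" = big-endian int64 followed by a float64, no padding
        return struct.pack(">qd", self.timestamp_ms, self.value)

    @classmethod
    def unpack(cls, raw: bytes) -> "Sample":
        ts, val = struct.unpack(">qd", raw)
        return cls(ts, val)


# The sample from the key-value example above
sample = Sample(timestamp_ms=1434317560938, value=94358.0)
raw = sample.pack()  # 8 bytes of timestamp + 8 bytes of value
```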

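As the figure shows, a Prometheus metric can be written in two forms. The first is the classic form.

<Metric Name>{<Label name>=<label value>, ...}

Here, Metric Name is the name of the metric and reflects what the monitored sample means. A metric name may contain only ASCII letters, digits, underscores, and colons, and must match the regular expression [a-zA-Z_:][a-zA-Z0-9_:]*.

Labels capture the different dimensions of a sample. Using these dimensions, Prometheus can filter, aggregate, and compute statistics over sample data, producing new, derived time series. A label name may contain only ASCII letters, digits, and underscores, and must match the regular expression [a-zA-Z_][a-zA-Z0-9_]*.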
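The two naming rules can be checked mechanically. The following Python sketch applies the two regular expressions quoted above; the function names are hypothetical helpers for illustration, not part of any Prometheus client library.

```python
import re

# Patterns copied from the naming rules above
METRIC_NAME_RE = re.compile(r"[a-zA-Z_:][a-zA-Z0-9_:]*")
LABEL_NAME_RE = re.compile(r"[a-zA-Z_][a-zA-Z0-9_]*")


def is_valid_metric_name(name: str) -> bool:
    # fullmatch requires the whole name to satisfy the pattern
    return METRIC_NAME_RE.fullmatch(name) is not None


def is_valid_label_name(name: str) -> bool:
    return LABEL_NAME_RE.fullmatch(name) is not None


checks = {
    "http_requests_total": is_valid_metric_name("http_requests_total"),
    "5xx_total": is_valid_metric_name("5xx_total"),  # starts with a digit
    "method": is_valid_label_name("method"),
    "job:name": is_valid_label_name("job:name"),     # colon not allowed in labels
}
```

Note the only difference between the two rules: colons are allowed in metric names but not in label names.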

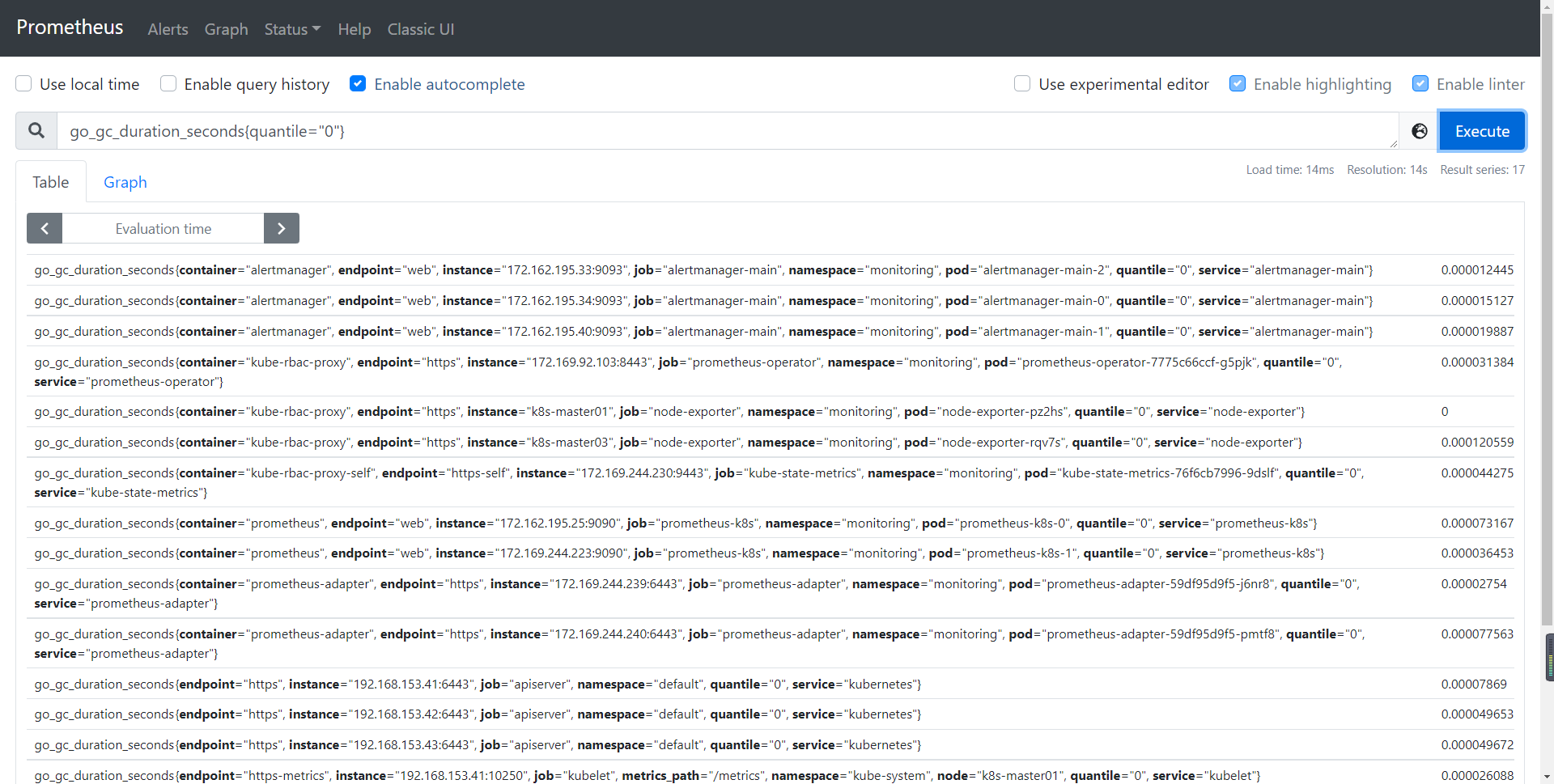

通过命令 go_gc_duration_seconds{quantile="0"} 可以在 Prometheus 的 Graph控制台获得图:

第二种方式来源于 Prometheus 内部。

(__name__=metrics.<label name>=<label value>, ...)

第二种方式和第一种方式是一样的,表示同一条时间序列。这种方式是 Prometheus 内部的表现形式,是系统保留的关键字,官方推荐只能在系统内部使用。在 Prometheus 的底层实现中,指标名称实际上是以 __name__=<metric name> 的形式保存在数据库中的;__name__ 是特定的标签,代表了 Metric Name。标签的值可以包含任何 Unicode 编码的字符。

通过命令 {__name__="go_gc_duration_seconds",quantile="0"} 可以在 Prometheus 的 Graph 控制台获得如下结果:

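II. The Four Selectors in PromQL

If a metric comes from many different kinds of servers or applications, engineers usually need to narrow it down, for example to look only at the series for one instance or one handler label out of a huge number of series. This is what label filtering is for, and label filtering is done with selectors.

http_requests_total{job="HelloWorld",status="200",method="POST",handler="/api/comments"}

This is a selector. It returns the http_requests_total series whose job is HelloWorld, whose status is 200, whose method is POST, and whose handler label is "/api/comments". It is an instant vector selector for the total number of HTTP requests.

In this example, job="HelloWorld" is a matcher. A selector can contain several matchers, which are applied together.

The following sections cover PromQL from four angles: matchers, instant vector selectors, range vector selectors, and the offset modifier.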

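2.1 Matchers

Matchers act on labels and filter time series. Prometheus supports two modes: exact matching and regular expression matching.

2.1.1. Equality matcher (=)

The equality matcher selects labels whose value is exactly equal to the provided string. The example below uses equality matchers to filter on several conditions.

http_requests_total{job="HelloWorld",status="200",method="POST",handler="/api/comments"}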

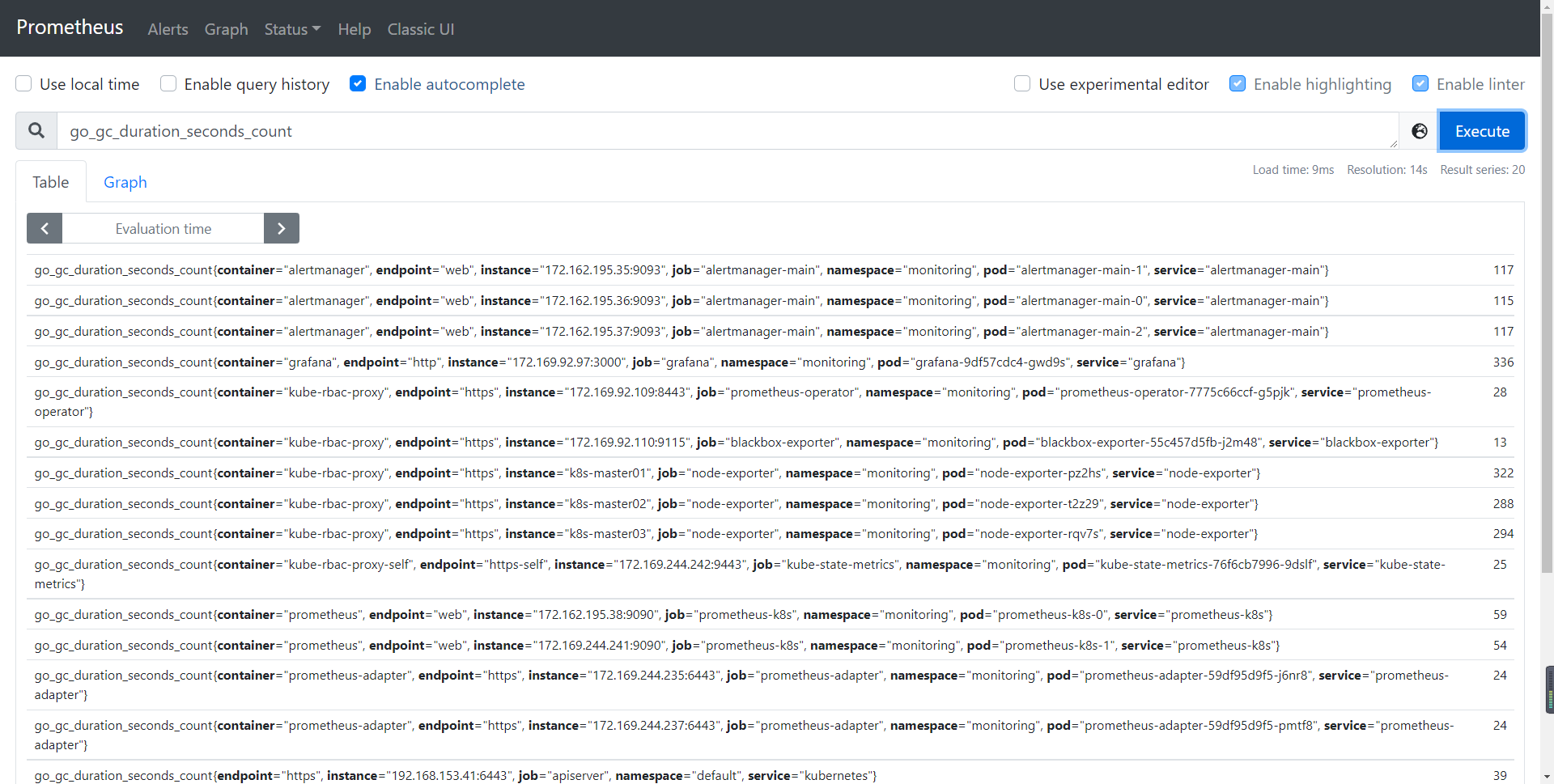

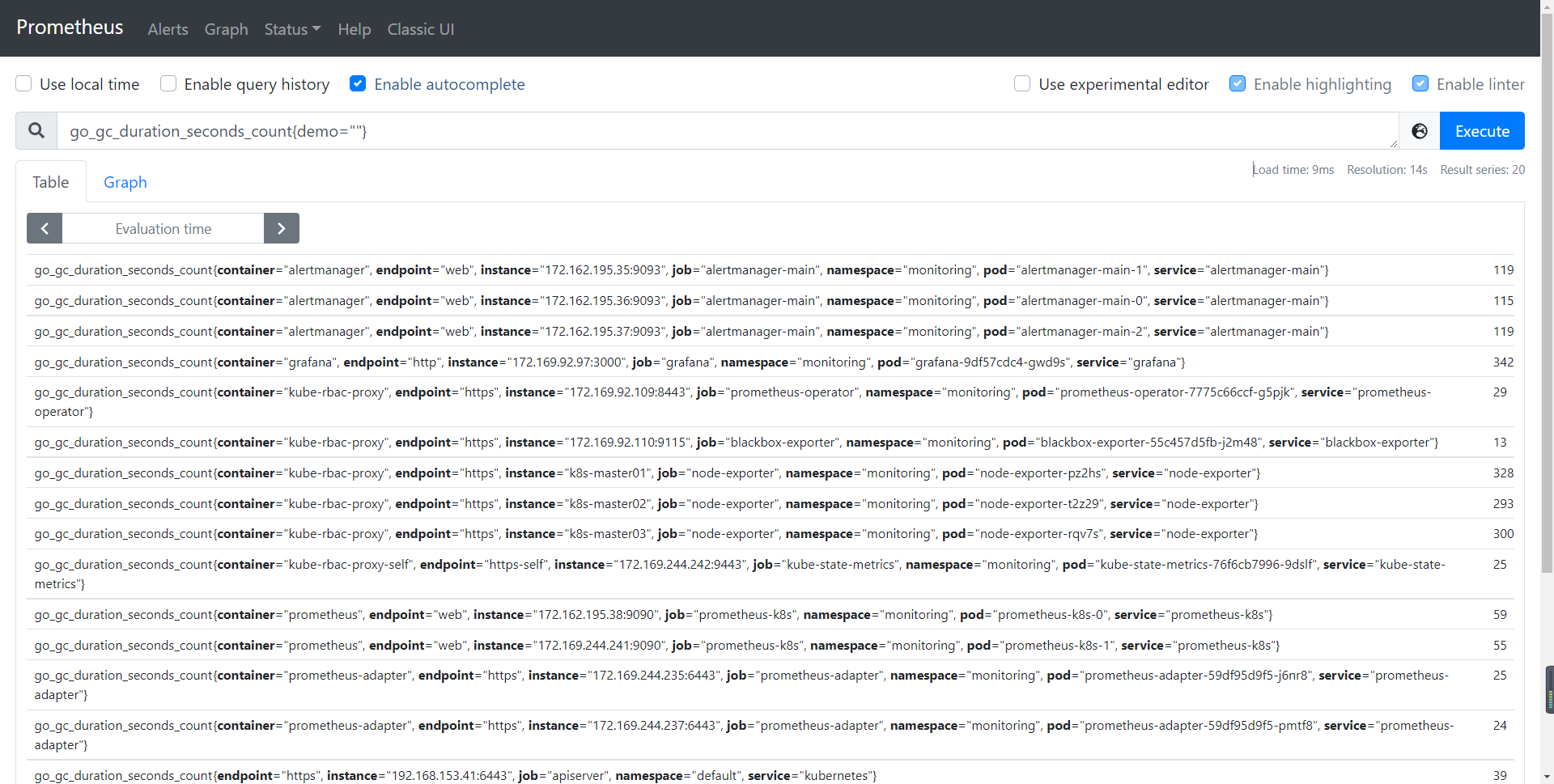

需要注意的是,如果标签为空或者不存在,那么也可以使用 Label="" 的形式。对于不存在的标签,比如 demo 标签,go_gc_duration_seconds_count 和 go_gc_duration_seconds_count{demo=""} 效果是一样的,对比如下:

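2.1.2. Inequality matcher (!=)

The negative equality matcher selects labels whose value is not equal to the provided string. It is the exact opposite of the equality matcher. For example, to see the total number of HTTP requests for every job other than HelloWorld, use the following matcher.

http_requests_total{job!="HelloWorld"}

2.1.3. Regular expression matcher (=~)

The regular expression matcher selects labels whose value matches the provided regular expression. Prometheus regular expressions are fully anchored: the expression a matches only the string a, not ab, ba, or abc. To relax this, add ".*" before or after the expression. The example below selects the total number of HTTP requests for all jobs whose name starts with Hello.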

http_requests_total{job=~"Hello.*"}
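The anchoring behaviour is easy to reproduce outside Prometheus. The Python sketch below mimics it with re.fullmatch; label_matches is a hypothetical helper, and Python's re module is only a stand-in for RE2 (the two agree on simple patterns like these).

```python
import re


def label_matches(pattern: str, value: str) -> bool:
    """Mimic the =~ matcher: the pattern must match the whole label value."""
    return re.fullmatch(pattern, value) is not None


exact = label_matches("a", "a")                    # the whole value is "a"
prefix_only = label_matches("a", "ab")             # rejected: "b" is left over
relaxed = label_matches("a.*", "abc")              # ".*" absorbs the rest
hello = label_matches("Hello.*", "HelloWorld")     # the query above
```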

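http_requests_total is directly equivalent to {__name__="http_requests_total"}, and the __name__ label accepts the same four matchers (=, !=, =~, !~). For example, the following selects all metrics whose name starts with Hello.

{__name__=~"Hello.*"}

To see the total number of HTTP requests for jobs whose name starts with Hello, in the production (prod), test (test), and pre-release (pre) environments, where the response code is not 200, use the following query.

http_requests_total{job=~"Hello.*",env=~"prod|test|pre",code!="200"}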

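Every PromQL selector must contain either a metric name or at least one label matcher that does not match the empty string. Following the official Prometheus documentation, the selectors below are therefore illegal:

{job=~".*"} # illegal: .* also matches the empty string

{job=""} # illegal: matches only the empty string

In contrast, the following expressions are legal:

{job!=""} # legal: does not match the empty string

{job=~".+"} # legal: .+ requires at least one character

{job=~".*",method="get"} # legal: .* matches any string, but method="get" does not match the empty string

{job=~"",method="post"} # legal: method="post" does not match the empty string

{job=~".+",method="post"} # legal: both matchers reject the empty string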
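The rule "at least one matcher must not match the empty string" can be sketched as a small check. The Python code below is a hedged illustration: the function names and the (label, operator, value) tuple format are invented for this example, and Python's re module stands in for RE2.

```python
import re


def matches_empty(op: str, value: str) -> bool:
    """Return True if the matcher would accept an empty label value."""
    if op == "=":
        return value == ""
    if op == "!=":
        return value != ""
    if op == "=~":
        return re.fullmatch(value, "") is not None
    if op == "!~":
        return re.fullmatch(value, "") is None
    raise ValueError(f"unknown matcher operator: {op}")


def is_valid_selector(matchers) -> bool:
    """A selector without a metric name needs at least one matcher
    that rejects the empty string."""
    return any(not matches_empty(op, value) for _, op, value in matchers)


only_wildcard = is_valid_selector([("job", "=~", ".*")])
with_method = is_valid_selector([("job", "=~", ".*"), ("method", "=", "get")])
at_least_one_char = is_valid_selector([("job", "=~", ".+")])
```

A metric name fits the same rule, since it is just the matcher __name__="..." with a non-empty value.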

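2.1.4. Negative regular expression matcher (!~)

The negative regular expression matcher selects labels whose value does not match the provided regular expression. PromQL regular expressions use RE2 syntax, and RE2 does not support negative lookahead, so !~ serves as the alternative for excluding label values by regular expression. A selector may apply several matchers to the same label. The example below finds the size of every file system of the job node that is mounted under /prometheus but not under /prometheus/user.

node_filesystem_size_bytes{job="node",mountpoint=~"/prometheus/.*",mountpoint!~"/prometheus/user/.*"}

PromQL uses the RE2 regular expression syntax, the same syntax used by Go's regexp package. RE2 is designed to match in time linear in the size of the input, which makes it a good fit for PromQL. As noted above, however, RE2 supports neither lookahead assertions nor backreferences, and it lacks a number of other advanced features.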

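Going further:

The four matchers =, !=, =~, and !~ are very useful in practice, but queries become unwieldy when one label needs many alternatives. HTTP status codes, for instance, fall into 1xx, 2xx, 3xx, 4xx, and 5xx. When counting all HTTP requests that returned a 5xx code, it is tempting to write http_requests_total{job="HelloWorld",status=~"500",status=~"501",status=~"502",status=~"503",status=~"504",status=~"505"……}. Besides being verbose, this query matches nothing, because all matchers in a selector must hold at the same time.

5xx codes stand for server errors: the server hit an internal error while trying to process the request. These errors usually originate in the server itself rather than in the request.

500 (Internal Server Error): the server encountered an error and could not complete the request.

501 (Not Implemented): the server lacks the functionality needed to complete the request. For example, the server may return this code when it does not recognize the request method.

502 (Bad Gateway): the server, acting as a gateway or proxy, received an invalid response from the upstream server.

503 (Service Unavailable): the server is currently unavailable (overloaded or down for maintenance). This is usually a temporary state.

504 (Gateway Timeout): the server, acting as a gateway or proxy, did not receive a response from the upstream server in time.

505 (HTTP Version Not Supported): the server does not support the HTTP protocol version used in the request.

……

To avoid this, the query can be optimized as follows:

Optimization 1: separate the alternatives with "|": http_requests_total{job="HelloWorld",status=~"500|501|502|503|504|505"}.

Optimization 2: relabel these status codes as 5xx, so that the query becomes http_requests_total{job="HelloWorld",status=~"5xx"}.

Optimization 3: to select every HTTP status code that does not start with 4, use http_requests_total{status!~"4.."}.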
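To see what these patterns select, the Python sketch below applies them to a made-up list of status codes. The select helper and the code list are assumptions for illustration; the pattern "5.." is not from the queries above but works on the same principle as "4..", with each dot standing for one arbitrary character.

```python
import re

# Hypothetical set of status label values
codes = ["200", "301", "404", "500", "502", "503"]


def select(pattern: str, negate: bool = False):
    """Keep the codes accepted by status=~pattern,
    or by status!~pattern when negate is True."""
    return [c for c in codes
            if (re.fullmatch(pattern, c) is not None) != negate]


by_alternation = select("500|501|502|503|504|505")  # Optimization 1
by_wildcard = select("5..")                          # any three-character code starting with 5
not_4xx = select("4..", negate=True)                 # Optimization 3
```

On this data the alternation and the wildcard pattern pick the same three codes, while the negated pattern keeps everything except 404.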

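2.2 Instant vector selectors

An instant vector selector returns an instant vector made of the most recent sample at or before the given timestamp for each matching series, that is, a list of zero or more time series. In its simplest form, only a metric name is specified, such as http_requests_total, which yields an instant vector with one element for every time series that has this metric name. The series can be filtered further by adding a set of label matchers in curly braces {}, for example:

http_requests_total{job="HelloWorld",group="middleware"}

http_requests_total{} selects the most recent data.

An instant vector should not return stale data, and Prometheus 1.x and 2.x behave differently here.

In Prometheus 1.x, an instant vector selector returned, for each matching time series, the most recent sample no more than 5 minutes before the query time. This met most needs. But if a label was added to a target within that 5-minute window, so that http_requests_total{job="HelloWorld"} became http_requests_total{job="HelloWorld",group="middleware"}, an instant query would return both the old and the new series, and the data would be counted twice. This was a problem.

Prometheus 2.x handles this with stale markers: if a time series disappears from one scrape to the next, or if service discovery no longer returns the target, a stale marker is appended to the time series. When evaluating an instant vector selector, Prometheus first finds the series that satisfy the matchers and then considers the most recent sample in the 5 minutes before the query evaluation time. If that sample is a normal sample, it is returned in the instant vector; if it is a stale marker, the series does not appear in the instant vector. Note that if an exporter exposes explicit timestamps, stale markers and the Prometheus 2.x staleness logic do not apply, and the affected series fall back to the older behaviour of looking back 5 minutes.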
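The lookup described above can be sketched for a single series as follows. This is a simplified Python model under stated assumptions: STALE is an invented stand-in for a stale marker, samples are (timestamp in seconds, value) pairs, and boundary handling in real Prometheus may differ.

```python
STALE = object()          # hypothetical representation of a stale marker
LOOKBACK_SECONDS = 5 * 60


def instant_value(samples, eval_time):
    """Return the value an instant selector would pick for one series,
    or None if the series is left out of the result."""
    candidates = [(ts, v) for ts, v in samples
                  if eval_time - LOOKBACK_SECONDS <= ts <= eval_time]
    if not candidates:
        return None  # nothing within the 5-minute lookback window
    _, latest = max(candidates, key=lambda s: s[0])
    return None if latest is STALE else latest


healthy = [(0, 1.0), (60, 2.0)]
vanished = [(0, 1.0), (60, STALE)]   # target disappeared after the first scrape

fresh = instant_value(healthy, 100)      # latest sample is normal
too_old = instant_value(healthy, 400)    # latest sample is older than 5 minutes
dropped = instant_value(vanished, 100)   # latest entry is a stale marker
```

The stale marker lets the series drop out of results right away, instead of lingering for the full 5-minute window.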

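2.3 Range vector selectors

A range vector selector returns a set of time series, each containing the samples from a range of time. Unlike an instant vector selector, it selects samples reaching back from the current time over a given duration. The duration is written in square brackets [] at the end of the selector and specifies how far back samples are fetched for each series in the result. The example below returns the samples of all HTTP requests from the last 5 minutes; [5m] turns the instant vector selector into a range vector selector.

http_requests_total{}[5m]

A duration is written as an integer followed by one of these units: seconds (s), minutes (m), hours (h), days (d), weeks (w), years (y). The number must be an integer: 38m is valid, but 2h 15m and 1.5h are both invalid. Note that a year ignores leap years and is always 60*60*24*365 seconds.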
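The duration rules stated above (one integer followed by one unit) can be expressed as a short parser. The Python sketch below follows only those rules; parse_duration is a hypothetical helper, and recent Prometheus releases accept additional forms that it does not cover.

```python
import re

UNIT_SECONDS = {
    "s": 1,
    "m": 60,
    "h": 60 * 60,
    "d": 24 * 60 * 60,
    "w": 7 * 24 * 60 * 60,
    "y": 365 * 24 * 60 * 60,  # leap years are ignored
}

DURATION_RE = re.compile(r"([0-9]+)([smhdwy])")


def parse_duration(text: str) -> int:
    """Convert a duration such as '38m' to seconds; reject anything else."""
    m = DURATION_RE.fullmatch(text)
    if m is None:
        raise ValueError(f"invalid duration: {text!r}")
    return int(m.group(1)) * UNIT_SECONDS[m.group(2)]


thirty_eight_minutes = parse_duration("38m")  # 38 * 60 seconds
one_year = parse_duration("1y")               # 365 days in seconds
```

Inputs such as "1.5h" or "2h 15m" fail the full match and raise ValueError, in line with the rule that only a single integer and unit are allowed.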

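One more point about range vector selectors: they return all samples within the range. Even when the scrape interval is the same, the timestamps of different time series usually do not line up, as shown below: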

http_requests_total{code="200",job="HelloWorld",method="get"}=[

1@1518096812.678

1@1518096817.678

1@1518096822.678

1@1518096827.678

1@1518096832.678

1@1518096837.678

]

http_requests_total{code="200",job="HelloWorld",method="get"}=[

4@1518096813.233

4@1518096818.233

4@1518096823.233

4@1518096828.233

4@1518096833.233

4@1518096838.233

]

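This is because a range vector keeps the original timestamps of the samples, and the scrapes of different targets are spread out to balance the load. We can control how often scrapes and rule evaluations happen, for example every 5 seconds (first series: 12, 17, 22, 27, 32, 37; second series: 13, 18, 23, 28, 33, 38), but we cannot make the timestamps align exactly (1@1518096812.678 versus 4@1518096813.233). With hundreds or thousands of targets, each 5-second scrape cycle processes the targets at different offsets, so the time series inevitably have slightly different timestamps. In production this rarely matters (occasional momentary glitches that do not affect the system are not important), because metrics-based monitoring such as Prometheus does not aim for the precision of log-based monitoring; it aims to get the trend right.

Finally, let's apply what this section covered to a few practical PromQL examples about CPU to reinforce the theory.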

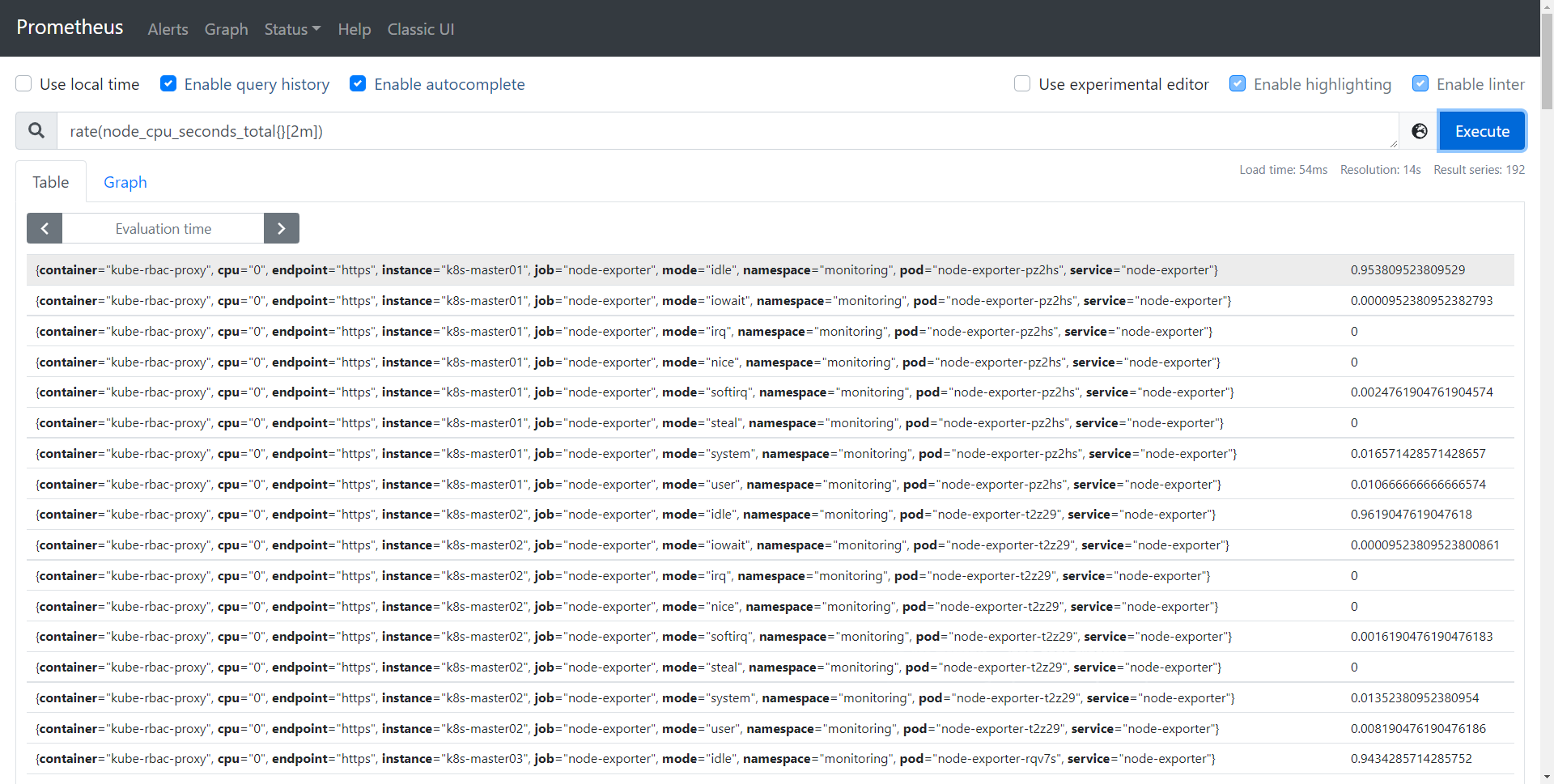

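Example 1: compute the CPU usage of system processes over 2 minutes.

rate is a built-in PromQL function that returns the average per-second rate of increase over a time window: the total increase within 2m divided by 2m.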

rate(node_cpu_seconds_total{}[2m])

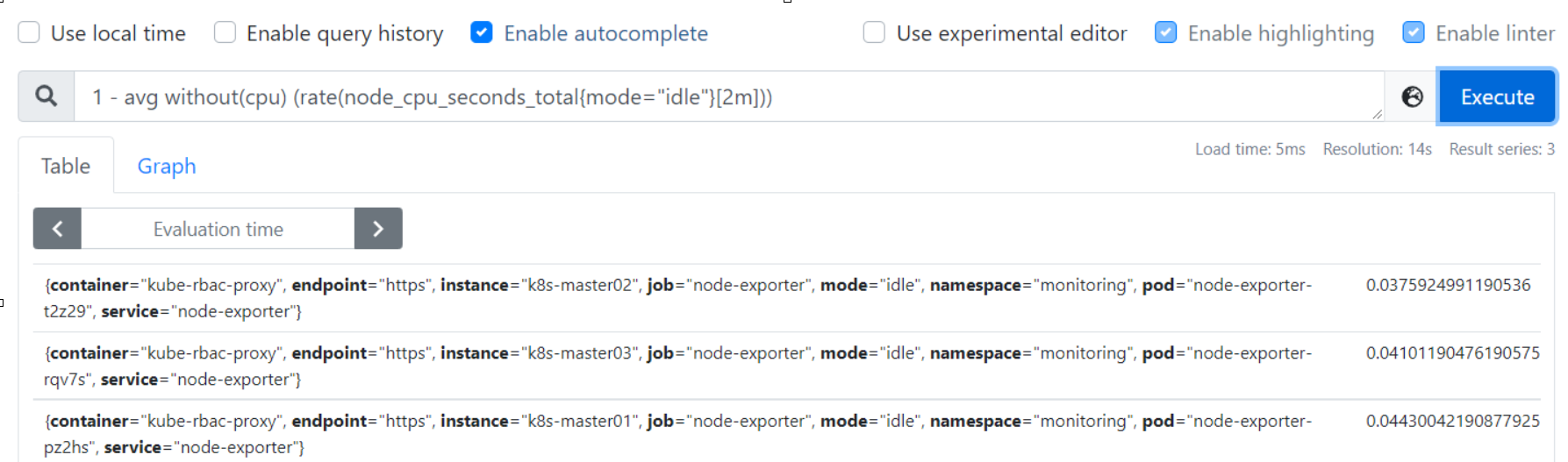

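Example 2: compute the overall CPU usage of the system by subtracting the idle CPU share from 1.

without removes the listed labels from the result and keeps all other labels. by does the opposite: the result vector keeps only the listed labels and drops the rest. With without and by, data can be aggregated along the chosen label dimensions.

avg without(cpu) drops the cpu label, so the average is taken across all CPUs.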

1 - avg without(cpu) (rate(node_cpu_seconds_total{mode="idle"}[2m]))

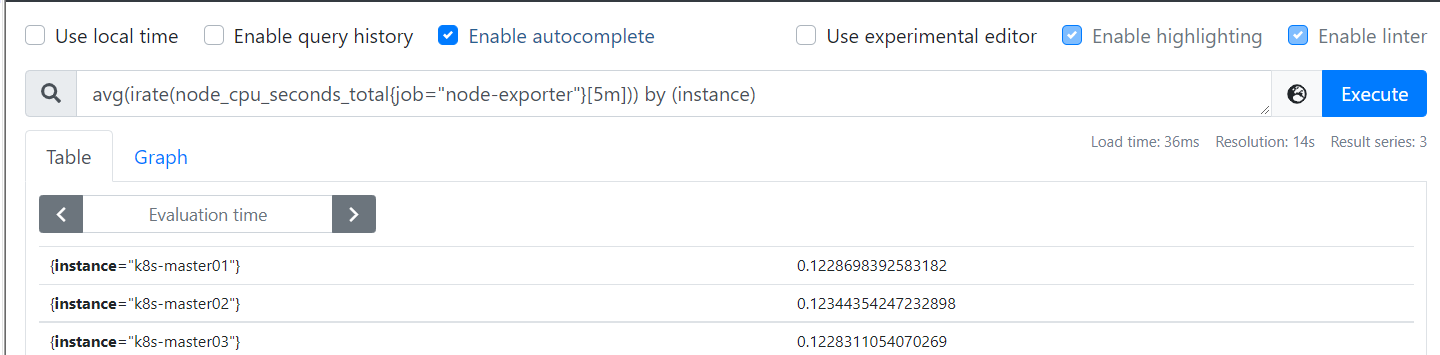

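Example 3: node_cpu_seconds_total exposes all CPU information for the current host. Aggregating the query result with avg and then grouping with by (instance) gives separate figures for each instance.

irate over [5m] computes the rate from the last two data points in the given time range, which suits fast-changing counters.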

avg(irate(node_cpu_seconds_total{job="node-exporter"}[5m])) by (instance)

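Going further:

1) Range vector selectors are usually used together with the rate function. In a subquery, for example, the 5-minute rate of http_requests_total is evaluated at 1-minute resolution over the past 30 minutes:

rate(http_requests_total{}[5m])[30m:1m]

2) A range vector expression cannot be displayed directly in the Graph view, but it can be displayed in the Console view.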

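2.4 Offset modifier

The offset modifier shifts instant vector selectors and range vector selectors in time: for an individual selector, it moves the query evaluation time back into the past.

Both kinds of selector normally fetch samples relative to the current evaluation time. To get the HTTP request data as of 5 minutes before the query evaluation time, write:

http_requests_total{} offset 5m

The offset keyword must immediately follow the selector:

sum(http_requests_total{method="GET"} offset 5m) # correct

sum(http_requests_total{method="GET"}) offset 5m # wrong

The same holds for range vector selectors:

rate(http_requests_total[5m] offset 5m) # correct

rate(http_requests_total[5m]) offset 5m # wrong

The offset modifier is mostly helpful when debugging individual series. It is used less often for trend data.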

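III. The Four Metric Types in Prometheus

Prometheus has four metric types: Counter, Gauge, Histogram, and Summary. These are the four core metric types offered by the Prometheus client libraries (currently available mainly for Go, Java, Python, Ruby, and other languages). The Prometheus server, however, does not distinguish between metric types and simply treats all of them as untyped time series.

3.1 Counter

A counter is a metric whose sample value increases monotonically. As long as no reset occurs (for example a service or application restart), it only goes up. Counters are suited to values such as the number of requests served, tasks completed, or errors that occurred. A counter has two main methods:

1) Inc() // increments the Counter by 1

2) Add(float64) // adds the given value to the Counter; a value below 0 triggers a Go panic, which may crash the program

The running total of a counter, however, is rarely useful to users on its own. Never use a counter for values such as the number of currently running processes or currently logged-in users.

To show how the samples change more intuitively, we usually compute their rate of growth, typically with PromQL functions such as rate, topk, increase, and irate.

rate(http_requests_total[5m]) # rate of growth of HTTP requests, via rate()

topk(10, http_requests_total) # the 10 HTTP endpoints with the most requests in the system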

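Going further:

In Prometheus, apply rate() first and sum() afterwards; never sum() first and rate() afterwards.

The reason lies in how rate() is implemented. By design, rate() assumes its input is a counter, which can change in only two ways: increase or reset to zero. After sum() or any other aggregation, the result is no longer a counter.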
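A small numeric experiment shows why the order matters. The Python sketch below uses a simplified notion of counter increase in which any drop is treated as a reset to zero; counter_increase and the sample values are invented for illustration, and rate is simply this increase divided by the window length.

```python
def counter_increase(values):
    """Increase of a counter over consecutive samples,
    treating any drop as a reset to zero."""
    total = 0.0
    for prev, cur in zip(values, values[1:]):
        # After a reset, everything counted since zero is new increase
        total += (cur - prev) if cur >= prev else cur
    return total


# Hypothetical samples from two instances; b restarts after its second sample
a = [100, 110, 120, 130]
b = [100, 110, 5, 15]

# Correct order: per-series increase first, then sum
increase_then_sum = counter_increase(a) + counter_increase(b)

# Wrong order: sum first, then treat the sum as if it were a counter
summed = [x + y for x, y in zip(a, b)]
sum_then_increase = counter_increase(summed)
```

Handled per series, the reset in b is detected and the total increase is 55. Summed first, the drop from 220 to 125 looks like a reset of the whole sum, and the computed increase jumps to 165.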

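The increase(v range-vector) function takes a range vector, looks at its first and last samples, and returns the increase between them. The example below derives the rate of growth of a Counter: it gives the average per-second increase of http_requests_total over the last 5 minutes, where 300 stands for 300 seconds.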

increase(http_requests_total[5m]) / 300

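Going further:

Growth figures computed with rate and increase are prone to the long-tail effect. For example, if a spike in traffic or some other problem briefly drives CPU usage to 100%, the average rate of growth over the time window will not reveal it.

Why do monitoring and performance testing focus on p95/p99? Because of the long-tail effect. When a few requests take 1 second or longer, the traditional average response time hides those spikes, and spike removal is also an important step in data collection. p95/p99 mark the boundary of the long tail: they state that 99% of requests fall within some range, or equivalently that 1% fall outside it. In other words, 99% of requests complete within a given latency and the remaining 1% exceed it.

irate(v range-vector) is the more sensitive function PromQL provides for the long-tail effect. irate also computes the rate of growth of a range vector, but it reflects the instantaneous rate: it uses only the last two samples of the range vector. This avoids the long-tail problem within the time window and gives better sensitivity, so graphs drawn with irate reflect momentary changes in the samples more faithfully. irate is called as follows: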

irate(http_requests_total[5m])
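The difference in sensitivity can be illustrated with a few made-up samples. The Python sketch below is a simplification: simple_rate and simple_irate are hypothetical helpers that ignore counter resets and the extrapolation Prometheus applies at window boundaries.

```python
def simple_rate(samples):
    """Average per-second increase between the first and last sample."""
    (t0, v0), (t1, v1) = samples[0], samples[-1]
    return (v1 - v0) / (t1 - t0)


def simple_irate(samples):
    """Per-second increase between the last two samples only."""
    (t0, v0), (t1, v1) = samples[-2], samples[-1]
    return (v1 - v0) / (t1 - t0)


# Hypothetical counter scraped every 60 s: steady at 1 request/s,
# then a burst during the last interval
samples = [(0, 0), (60, 60), (120, 120), (180, 180), (240, 240), (300, 900)]

avg = simple_rate(samples)       # 900 / 300 = 3.0 requests/s
instant = simple_irate(samples)  # 660 / 60 = 11.0 requests/s
```

The averaged value smooths the burst down to 3 requests/s, while the last-two-samples value exposes the 11 requests/s seen in the final interval.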

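Going further:

irate is more sensitive than rate, but when analyzing long-term trends or writing alerting rules, that sensitivity tends to cause noise. For long-term trend analysis and alerting, rate is the better choice.

3.2 Gauge

A gauge is a metric whose sample value can change arbitrarily, up or down. Think of it as a snapshot of state. Gauges typically represent values such as temperature or memory usage, as well as "totals" that can rise or fall at any time, such as the current number of concurrent requests, node_memory_MemFree (the host's current free memory), or node_memory_MemAvailable (available memory). With gauges, users typically want sums, averages, minimums, and maximums.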

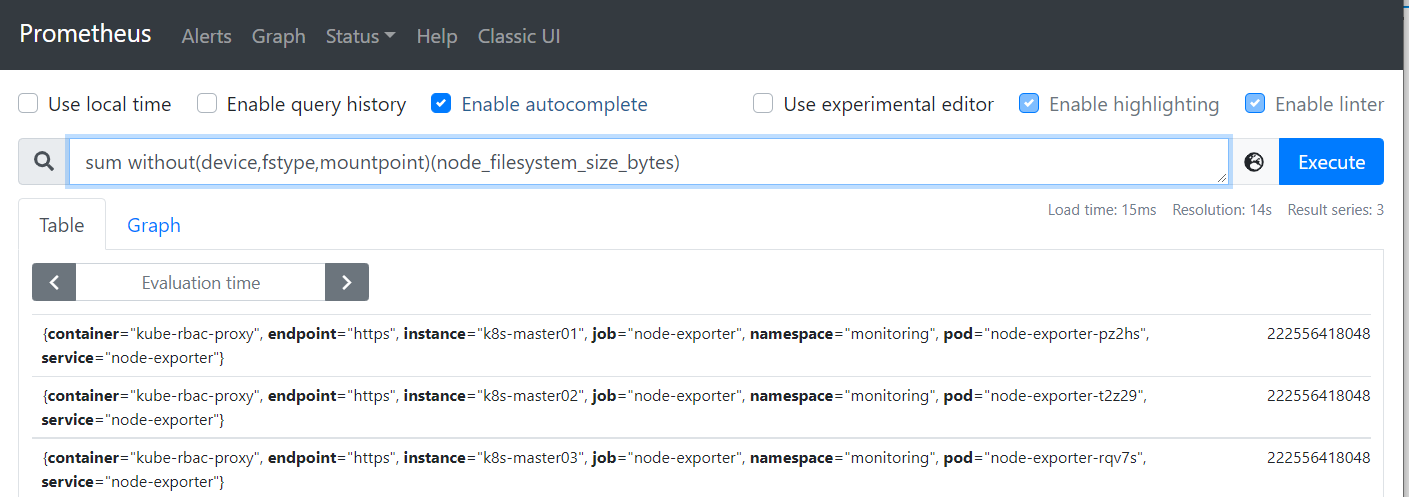

Take node_filesystem_size_bytes, a classic Node Exporter metric, as an example. It reports the size of each file system and carries labels such as device, fstype, and mountpoint. To sum the total file system size on each machine, use the following PromQL statement:

sum without(device,fstype,mountpoint)(node_filesystem_size_bytes)

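Minimum and average work the same way as sum and maximum. Beyond these basic operations, gauges are often combined with the PromQL functions predict_linear and delta.

3.3 Histogram

In most cases people tend to look at the average of a quantity, such as average CPU usage or average page response time. The problem with presenting results this way is obvious. Take the average response time of API calls: if most API requests complete within 100ms but a few take 5s, there is a long-tail problem that the average conceals.

Slow responses may be caused by a high average or by a long tail. The simplest way to tell the two apart is to partition requests by latency range, for example counting how many requests took between 0 and 10ms and how many took between 10 and 20ms. Presenting a metric as a Histogram gives a quick view of how the samples are distributed.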
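The partitioning idea can be sketched in a few lines of Python. The bucket bounds and latencies below are made up, and bucket_counts is a hypothetical helper; it counts observations per range as the paragraph describes, whereas Prometheus histograms expose cumulative bucket counters.

```python
from bisect import bisect_left


def bucket_counts(latencies_ms, upper_bounds):
    """Count observations per bucket. Bucket i holds values in
    (upper_bounds[i-1], upper_bounds[i]]; a final overflow bucket
    holds everything above the last bound."""
    counts = [0] * (len(upper_bounds) + 1)
    for x in latencies_ms:
        counts[bisect_left(upper_bounds, x)] += 1
    return counts


# Hypothetical latencies in ms: most requests are fast, one takes 5 s
latencies = [8, 9, 12, 15, 18, 11, 7, 95, 5000, 14]
bounds = [10, 20, 100]  # buckets: <=10, <=20, <=100, and overflow

counts = bucket_counts(latencies, bounds)
mean = sum(latencies) / len(latencies)
```

Here the mean is pulled up to roughly 519ms by a single outlier, while the bucket counts show that eight of the ten requests finished within 20ms.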