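YOLOv3

YOLOv3 combines several strengths of the networks available at the time, and as the figure below shows, its inference is fast.

Reference: the GitHub repository or the code.

Comparison of YOLOv3's mAP and inference time with other detectors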

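Top-1 Accuracy is the fraction of samples for which the highest-ranked class matches the ground truth.

Top-5 Accuracy is the fraction of samples for which the five highest-ranked classes include the ground truth.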

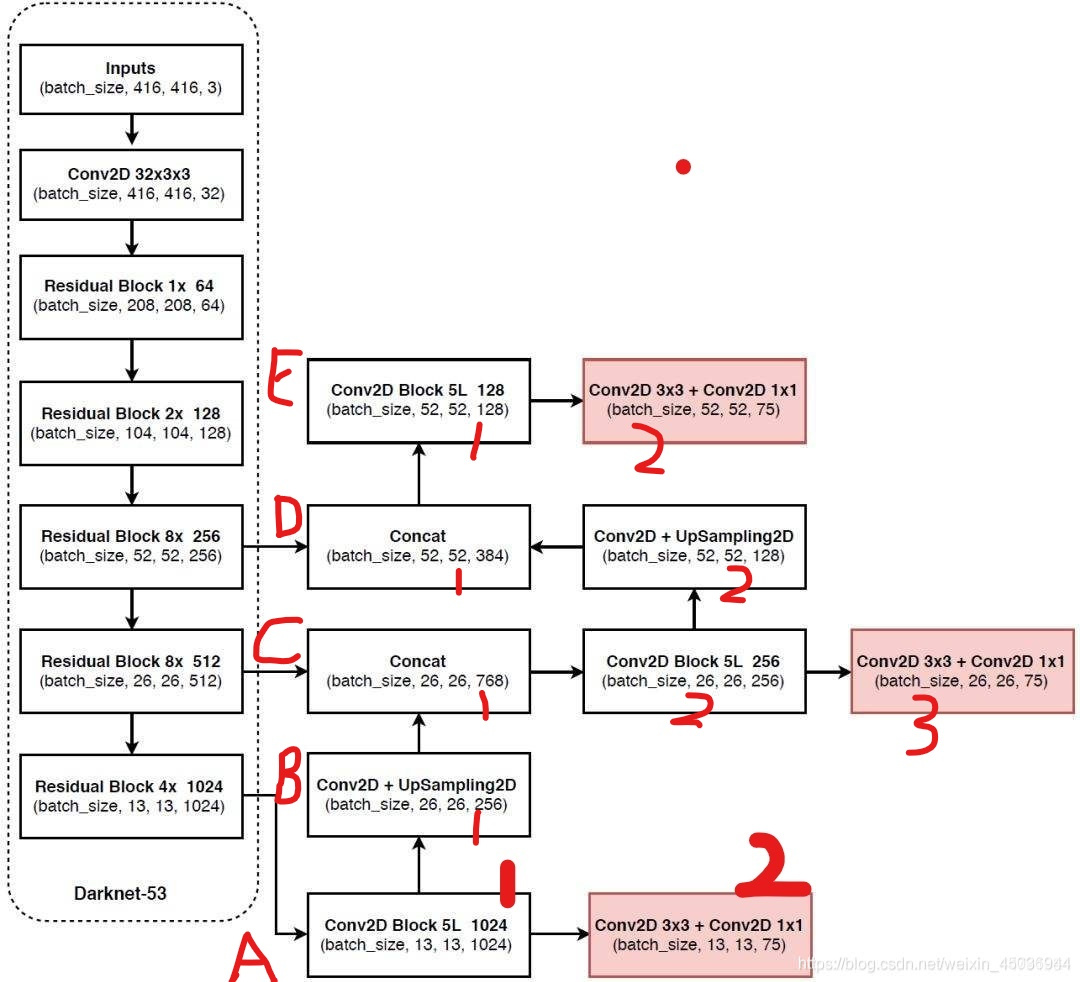
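The two accuracy definitions above can be sketched in a few lines. This is a framework-free illustration; the function name `top_k_accuracy` and the score values are made up for the example and do not come from any repository.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores for this sample.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


# Hypothetical scores for 3 samples over 6 classes.
scores = [
    [0.10, 0.50, 0.20, 0.10, 0.05, 0.05],  # true class 1: ranked first
    [0.30, 0.25, 0.20, 0.15, 0.06, 0.04],  # true class 2: ranked third
    [0.40, 0.30, 0.15, 0.10, 0.04, 0.01],  # true class 5: ranked last
]
labels = [1, 2, 5]

top1 = top_k_accuracy(scores, labels, k=1)  # 1 of 3 samples correct
top5 = top_k_accuracy(scores, labels, k=5)  # 2 of 3 samples correct
```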

YOLOv3 architecture diagram

- Its backbone network is Darknet-53

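1. Why is it called Darknet-53?

Because it has 53 convolutional layers.

2. Why is Darknet-53 better than ResNet-152?

Answer: Darknet-53 has no pooling layers; all downsampling is done by convolutional layers (stride-2 convolutions replace max-pooling downsampling).

3. Why is Darknet-53 faster than ResNet-152?

Answer: possibly because it has fewer convolution kernels than ResNet-152.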
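As a rough check of the points above, the sketch below counts the convolutions in the backbone and follows the feature-map size through the stride-2 convolutions. The block counts are read off the `print(model)` output later in these notes, and the 416×416 input size is an assumption chosen because it yields the 13×13 output. The count comes to 52 for the backbone alone; the 53rd layer is usually taken to be the final fully connected classification layer, which the detection backbone omits.

```python
def conv_out_size(size, kernel, stride, padding):
    """Spatial output size of a convolution (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


# Residual blocks per stage (layer1..layer5 in the printed model).
blocks_per_stage = [1, 2, 8, 8, 4]

# 1 stem conv, then per stage: 1 stride-2 conv + 2 convs per residual block.
backbone_convs = 1 + sum(1 + 2 * n for n in blocks_per_stage)

# Follow an assumed 416x416 input through the five 3x3 stride-2 convolutions.
size = 416
sizes = [size]
for _ in blocks_per_stage:
    size = conv_out_size(size, kernel=3, stride=2, padding=1)
    sizes.append(size)
# sizes is [416, 208, 104, 52, 26, 13]: each stride-2 conv halves the map,
# doing the job a pooling layer would otherwise do.
```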

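Output sizes of YOLOv3's three feature maps

Prediction output 1 is 13×13 and predicts large objects.

Prediction output 2 is 26×26 and predicts medium-sized objects.

Prediction output 3 is 52×52 (the finest granularity) and predicts small objects.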

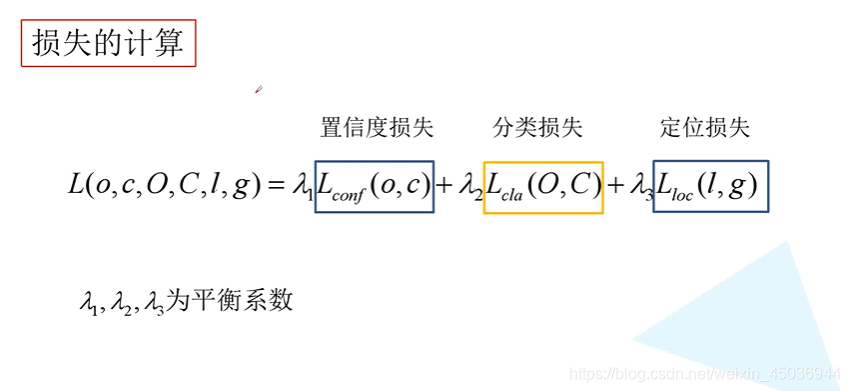
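The shapes of the three prediction outputs follow from the input size and the strides 32, 16 and 8. The sketch below assumes a 416×416 input, 3 anchors per scale and a single class; with one class the channel count is 3 × (5 + 1) = 18, which matches the final `Conv2d(..., 18, ...)` layers in the printed model further down. The helper name `head_output_shapes` is made up for this example.

```python
def head_output_shapes(input_size=416, num_classes=1, anchors_per_scale=3):
    """Return (channels, height, width) for the three prediction heads."""
    # Per anchor: 4 box offsets + 1 objectness score + one score per class.
    channels = anchors_per_scale * (5 + num_classes)
    return [
        (channels, input_size // stride, input_size // stride)
        for stride in (32, 16, 8)
    ]


shapes = head_output_shapes()
# [(18, 13, 13), (18, 26, 26), (18, 52, 52)]
```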

YOLOv3 loss function

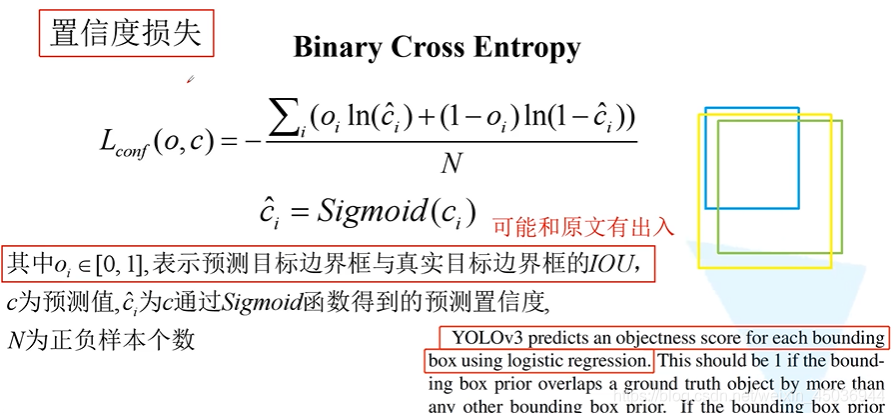

Confidence loss

Using logistic regression here means using the binary cross-entropy loss.
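A minimal sketch of a binary cross-entropy confidence loss, written without any framework. The logits and targets are hypothetical; the targets can be hard 0/1 labels or soft values in [0, 1] such as an IoU, and the formula is the same in both cases.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def binary_cross_entropy(prob, target, eps=1e-7):
    """BCE for one prediction; prob is clamped to avoid log(0)."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


# Hypothetical raw objectness outputs and their targets for three boxes.
logits = [2.0, -1.0, 0.0]
targets = [1.0, 0.0, 1.0]

# Mean BCE over the boxes, applied to sigmoid(logit).
conf_loss = sum(
    binary_cross_entropy(sigmoid(z), t) for z, t in zip(logits, targets)
) / len(logits)
```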

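On the right, the blue box is the anchor and the green box is the GT box. Applying the predicted box offsets to the anchor gives the yellow box, which is the predicted bounding box.

The IoU between the yellow box and the green box is o_i.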

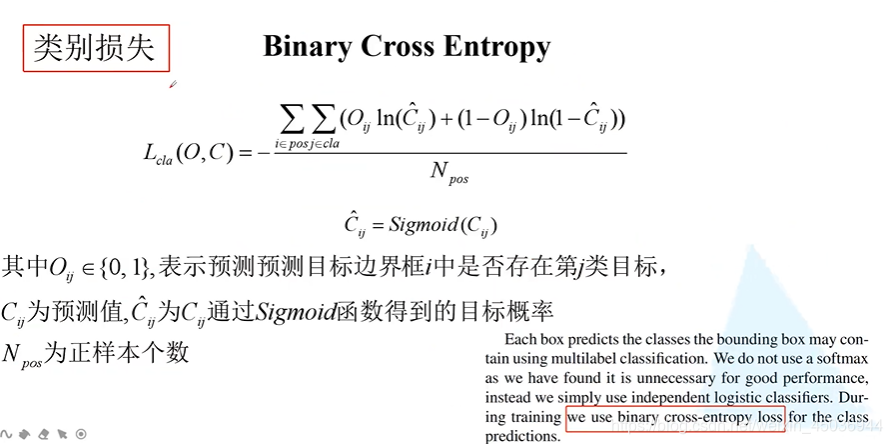
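The IoU used as o_i can be computed as below. This is a generic sketch for boxes in corner format (x1, y1, x2, y2); the two boxes are hypothetical stand-ins for the yellow and green boxes.

```python
def box_iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


predicted = (0.0, 0.0, 2.0, 2.0)  # hypothetical predicted (yellow) box
gt_box = (1.0, 1.0, 3.0, 3.0)     # hypothetical GT (green) box
o_i = box_iou(predicted, gt_box)  # intersection 1, union 7
```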

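Class loss

- Understanding the class loss

- The predicted probabilities here do not sum to 1, because the binary cross-entropy loss is used and each predicted value is independent of the others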

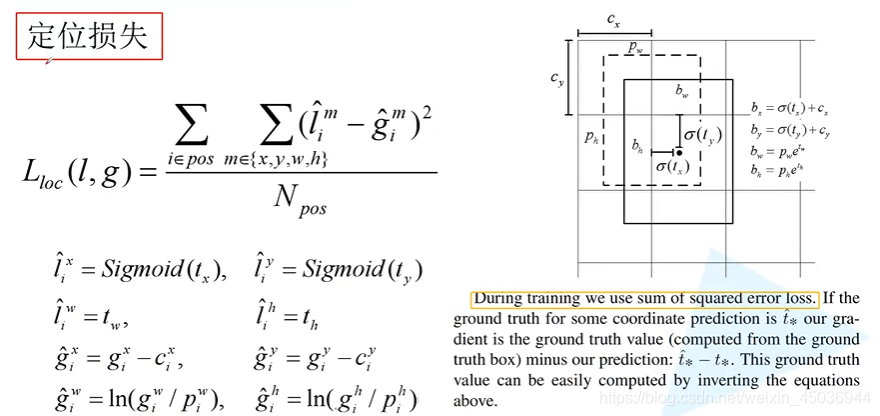
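To see why the class probabilities need not sum to 1, the sketch below compares per-class sigmoid outputs with a softmax over the same hypothetical logits, and computes the class loss as a sum of independent binary cross-entropy terms.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def class_bce(logits, targets):
    """Sum of independent per-class binary cross-entropy terms."""
    loss = 0.0
    for z, t in zip(logits, targets):
        p = sigmoid(z)
        loss -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return loss


logits = [2.0, 1.0, -1.0]  # hypothetical class logits for one box
sigmoid_probs = [sigmoid(z) for z in logits]  # each class scored on its own
softmax_probs = softmax(logits)               # classes compete, sum is 1
loss = class_bce(logits, targets=[1.0, 0.0, 0.0])
```

Because each class is scored independently, one box can carry several labels at once, which a softmax cannot express.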

Localization loss

- The predicted values are tx, ty, tw, th.
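One common way to write the localization loss is a squared error between the predicted offsets and targets derived from the GT box. The sketch below assumes that formulation, with boxes given as (center x, center y, width, height) in grid units; the helper names and numbers are illustrative and are not taken from the repository.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def encode_targets(gt_box, cell, anchor):
    """Turn a GT box (cx, cy, w, h in grid units) into regression targets."""
    gx, gy, gw, gh = gt_box
    cell_x, cell_y = cell
    anchor_w, anchor_h = anchor
    # Center offsets are relative to the cell; sizes are log-ratios to the anchor.
    return (gx - cell_x, gy - cell_y,
            math.log(gw / anchor_w), math.log(gh / anchor_h))


def localization_loss(pred, target):
    """Squared error: sigmoid on tx, ty; raw tw, th."""
    tx, ty, tw, th = pred
    gx_hat, gy_hat, gw_hat, gh_hat = target
    return ((sigmoid(tx) - gx_hat) ** 2 + (sigmoid(ty) - gy_hat) ** 2
            + (tw - gw_hat) ** 2 + (th - gh_hat) ** 2)


# GT box centered in cell (6, 4) with the same size as the anchor.
target = encode_targets(gt_box=(6.5, 4.5, 3.0, 2.0), cell=(6, 4), anchor=(3.0, 2.0))
# All-zero predictions decode to exactly this box, so the loss is 0.
loss = localization_loss(pred=(0.0, 0.0, 0.0, 0.0), target=target)
```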

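Code

The framework diagram of the code is as follows:

Link for reading the code

- While reading the code, I noticed that the GitHub author's implementation seems to differ somewhat from the paper (for example, the paper obtains the anchor boxes by clustering, while the author generates them directly). Remember to use the official code later on.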
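For comparison with directly generated anchors, the clustering approach can be sketched as k-means over the (width, height) pairs of the GT boxes with distance 1 - IoU. This is a simplified illustration, not the paper's or the repository's code: the function names, the toy boxes and the fixed initial centroids are all assumptions, and YOLOv3 would run it with k = 9 on a real dataset.

```python
def wh_iou(box, centroid):
    """IoU of two (w, h) boxes aligned at the same corner."""
    inter = min(box[0], centroid[0]) * min(box[1], centroid[1])
    return inter / (box[0] * box[1] + centroid[0] * centroid[1] - inter)


def kmeans_anchors(boxes, init_centroids, iterations=50):
    """Cluster (w, h) pairs with d = 1 - IoU; returns centroids sorted by area."""
    centroids = [tuple(c) for c in init_centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for box in boxes:
            # Highest IoU is the same as smallest 1 - IoU distance.
            best = max(range(len(centroids)), key=lambda i: wh_iou(box, centroids[i]))
            clusters[best].append(box)
        new_centroids = []
        for members, old in zip(clusters, centroids):
            if members:  # mean width and height of the cluster
                new_centroids.append((sum(b[0] for b in members) / len(members),
                                      sum(b[1] for b in members) / len(members)))
            else:        # keep the centroid of an empty cluster unchanged
                new_centroids.append(old)
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return sorted(centroids, key=lambda c: c[0] * c[1])


# Hypothetical (width, height) pairs of GT boxes, in pixels.
boxes = [(10, 12), (12, 10), (11, 11), (50, 60), (60, 50), (55, 55)]
anchors = kmeans_anchors(boxes, init_centroids=[boxes[0], boxes[3]])
```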

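YOLOv3 code

step1: (in the nets directory)

darknet.py

step2: (in the nets directory)

yolo3.py

step3: (in the utils directory)

utils.py

The process of adjusting the prior boxes (anchors) is called decoding.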
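The decoding step can be sketched as follows, using the standard YOLOv3 box equations. The function name `decode_box` and the example numbers are hypothetical; the actual function in utils.py may be organized differently.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(t, cell, anchor, stride):
    """Apply predicted offsets (tx, ty, tw, th) to a prior box.

    cell is the (column, row) of the grid cell and anchor is (w, h) in grid
    units. Returns (cx, cy, w, h) in input-image pixels.
    """
    tx, ty, tw, th = t
    bx = sigmoid(tx) + cell[0]     # sigmoid keeps the center inside its cell
    by = sigmoid(ty) + cell[1]
    bw = anchor[0] * math.exp(tw)  # prior width scaled by exp(tw)
    bh = anchor[1] * math.exp(th)
    return (bx * stride, by * stride, bw * stride, bh * stride)


# Zero offsets on the 13x13 map (stride 32): center of cell (6, 4), prior size kept.
box = decode_box(t=(0.0, 0.0, 0.0, 0.0), cell=(6, 4), anchor=(3.0, 2.0), stride=32)
```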

step4: (in the root directory)

predict.py

yolo.py

step5: (in the root directory / in the utils directory / in the nets directory)

train.py

dataloader.py

yolo_training.py

Output of print(model)

- Reading the code alongside this output makes it easier to understand

YoloBody(

(backbone): DarkNet(

(conv1): Conv2d(3, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(layer1): Sequential(

(ds_conv): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(ds_bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(ds_relu): LeakyReLU(negative_slope=0.1)

(residual_0): BasicBlock(

(conv1): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

)

(layer2): Sequential(

(ds_conv): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(ds_bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(ds_relu): LeakyReLU(negative_slope=0.1)

(residual_0): BasicBlock(

(conv1): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_1): BasicBlock(

(conv1): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

)

(layer3): Sequential(

(ds_conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(ds_bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(ds_relu): LeakyReLU(negative_slope=0.1)

(residual_0): BasicBlock(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_1): BasicBlock(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_2): BasicBlock(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_3): BasicBlock(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_4): BasicBlock(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_5): BasicBlock(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_6): BasicBlock(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_7): BasicBlock(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

)

(layer4): Sequential(

(ds_conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(ds_bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(ds_relu): LeakyReLU(negative_slope=0.1)

(residual_0): BasicBlock(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_1): BasicBlock(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_2): BasicBlock(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_3): BasicBlock(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_4): BasicBlock(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_5): BasicBlock(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_6): BasicBlock(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_7): BasicBlock(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

)

(layer5): Sequential(

(ds_conv): Conv2d(512, 1024, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(ds_bn): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(ds_relu): LeakyReLU(negative_slope=0.1)

(residual_0): BasicBlock(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(512, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_1): BasicBlock(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(512, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_2): BasicBlock(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(512, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

(residual_3): BasicBlock(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): LeakyReLU(negative_slope=0.1)

(conv2): Conv2d(512, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): LeakyReLU(negative_slope=0.1)

)

)

)

(last_layer0): ModuleList(

(0): Sequential(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(1): Sequential(

(conv): Conv2d(512, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(2): Sequential(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(3): Sequential(

(conv): Conv2d(512, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(4): Sequential(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(5): Sequential(

(conv): Conv2d(512, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(6): Conv2d(1024, 18, kernel_size=(1, 1), stride=(1, 1))

)

(last_layer1_conv): Sequential(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(last_layer1_upsample): Upsample(scale_factor=2.0, mode=nearest)

(last_layer1): ModuleList(

(0): Sequential(

(conv): Conv2d(768, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(1): Sequential(

(conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(2): Sequential(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(3): Sequential(

(conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(4): Sequential(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(5): Sequential(

(conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(6): Conv2d(512, 18, kernel_size=(1, 1), stride=(1, 1))

)

(last_layer2_conv): Sequential(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(last_layer2_upsample): Upsample(scale_factor=2.0, mode=nearest)

(last_layer2): ModuleList(

(0): Sequential(

(conv): Conv2d(384, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(1): Sequential(

(conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(2): Sequential(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(3): Sequential(

(conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(4): Sequential(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(5): Sequential(

(conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): LeakyReLU(negative_slope=0.1)

)

(6): Conv2d(256, 18, kernel_size=(1, 1), stride=(1, 1))

)

)

Loading weights into state dict...

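Process finished with exit code -1

Network architecture diagram generated with Netron

- This diagram was generated from the Keras model (with the PyTorch model the connections between nodes are not drawn; the model needs to be converted to ONNX format first)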
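A minimal sketch of the ONNX conversion mentioned above, assuming PyTorch with ONNX export support is installed and that `model` is an already constructed network such as the `YoloBody` printed earlier. The file name, the input/output names and the 416×416 input size are placeholders chosen for this example.

```python
def export_to_onnx(model, onnx_path="yolov3.onnx", input_size=416):
    """Export a PyTorch model to ONNX so that Netron can draw its graph."""
    import torch  # imported here so the helper can be defined without PyTorch

    model.eval()
    # Dummy batch of one RGB image; only its shape matters for tracing.
    dummy_input = torch.randn(1, 3, input_size, input_size)
    torch.onnx.export(
        model,
        dummy_input,
        onnx_path,
        input_names=["images"],
        output_names=["out_13", "out_26", "out_52"],  # assumed three heads
        opset_version=11,
    )
    return onnx_path
```

The resulting .onnx file can then be opened in Netron in the same way as the Keras model.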