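Lightweight crawler

- No login required

- Static pages: the data is not loaded asynchronously

Crawler: a program that automatically fetches information from the internet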

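URL manager

What it manages

- URLs waiting to be crawled

- URLs that have already been crawled

Purpose

- Prevent crawling the same URL twice

- Prevent crawling in a loop

Implementation options:

1. In memory

Python in-process memory

URLs to crawl: set()

Crawled URLs: set()

2. Relational database

MySQL

Table urls(url, is_crawled)

3. Cache database

Redis

URLs to crawl: set

Crawled URLs: set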
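The relational option can be sketched with the urls(url, is_crawled) table. This is only a minimal sketch: it uses the standard-library sqlite3 module with an in-memory database in place of MySQL, and the helper names add_new_url and get_new_url are assumptions chosen for illustration.

```python
import sqlite3

# An in-memory SQLite database stands in for MySQL here (an assumption of this sketch).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE urls (url TEXT PRIMARY KEY, is_crawled INTEGER NOT NULL DEFAULT 0)"
)

def add_new_url(url):
    # The PRIMARY KEY plus INSERT OR IGNORE silently drops URLs that are already known.
    conn.execute("INSERT OR IGNORE INTO urls (url) VALUES (?)", (url,))

def get_new_url():
    # Pick any URL that has not been crawled yet and mark it as crawled.
    row = conn.execute("SELECT url FROM urls WHERE is_crawled = 0 LIMIT 1").fetchone()
    if row is None:
        return None
    conn.execute("UPDATE urls SET is_crawled = 1 WHERE url = ?", (row[0],))
    return row[0]

add_new_url("/item/a")
add_new_url("/item/a")  # duplicate, ignored
add_new_url("/item/b")
first = get_new_url()
add_new_url(first)      # already crawled, still ignored
remaining = conn.execute("SELECT COUNT(*) FROM urls WHERE is_crawled = 0").fetchone()[0]
total = conn.execute("SELECT COUNT(*) FROM urls").fetchone()[0]
print(first, remaining, total)
```

A single table with a flag column plays the role of both sets: a row's existence prevents duplicates, and is_crawled separates the two groups.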

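Web page downloader

A tool that downloads the fetched pages to the local machine for analysis

Types

1. urllib2

A basic module from Python's official standard library (Python 2 only; in Python 3 the same functionality lives in urllib.request)

2. requests

A third-party package, more powerful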

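Downloading a page with urllib2

1. Method 1: the simplest approach

import urllib2

# Request the URL directly
response = urllib2.urlopen('http://www.baidu.com')

# Get the status code; 200 means the request succeeded
print response.getcode()

# Read the content
cont = response.read()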

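2. Method 2: add data and an HTTP header

import urllib2

url = 'http://www.baidu.com'

# Create a Request object
request = urllib2.Request(url)

# Add data as one URL-encoded string; this turns the request into a POST
request.add_data('a=1')

# Add an HTTP header to imitate a Mozilla browser
request.add_header('User-Agent', 'Mozilla/5.0')

# Send the request and get the response
response = urllib2.urlopen(request)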
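In Python 3 the same kind of request can be built with urllib.request. The sketch below only constructs the Request object and inspects it, so it runs without network access; the form field a=1 is an illustrative value.

```python
from urllib.parse import urlencode
from urllib.request import Request

url = "http://www.baidu.com"

# URL-encode the form fields and convert them to bytes, as urllib.request expects.
data = urlencode({"a": "1"}).encode("utf-8")

# Passing data makes this a POST request; headers can be supplied up front.
request = Request(url, data=data, headers={"User-Agent": "Mozilla/5.0"})

method = request.get_method()
# Request stores header names capitalized, so the lookup key is "User-agent".
user_agent = request.get_header("User-agent")
print(method, request.data, user_agent)
```

Passing the resulting object to urllib.request.urlopen(request) would send it.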

3. Method 3: add handlers for special scenarios

- HTTPCookieProcessor: for pages that require the user to log in

- ProxyHandler: for pages that can only be reached through a proxy

- HTTPSHandler: for pages served over HTTPS

- HTTPRedirectHandler: for pages that redirect automatically

import urllib2, cookielib

# Create a cookie container
cj = cookielib.CookieJar()

# Create an opener
opener = urllib2.build_opener(urllib2.HTTPCookieProcessor(cj))

# Install the opener into urllib2
urllib2.install_opener(opener)

# Fetch the page with the cookie-aware urllib2
response = urllib2.urlopen("http://www.baidu.com")
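Several handlers can be combined in one opener. The following is a rough Python 3 sketch that only builds the opener and lists its handlers, without sending a request; the proxy address is a hypothetical placeholder.

```python
import http.cookiejar
import urllib.request

# Hypothetical proxy address, used only for illustration.
proxy = urllib.request.ProxyHandler({"http": "http://127.0.0.1:8080"})
cookies = urllib.request.HTTPCookieProcessor(http.cookiejar.CookieJar())

# build_opener accepts any number of handlers and adds its default ones as well.
opener = urllib.request.build_opener(proxy, cookies)

handler_names = [type(h).__name__ for h in opener.handlers]
print(handler_names)
```

The printed list should also contain HTTPRedirectHandler, which build_opener adds by default, so redirects are followed without any extra setup.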

Example code

# coding:utf8
import urllib2, cookielib

url = "http://www.baidu.com"

print("Method 1:")
response1 = urllib2.urlopen(url)
print(response1.getcode())
print(len(response1.read()))

print('Method 2:')
request = urllib2.Request(url)
request.add_header("user-agent", 'Mozilla/5.0')
# Pass the Request object, not the bare URL, so the header is actually sent
response2 = urllib2.urlopen(request)
print(response2.getcode())
print(len(response2.read()))

print('Method 3:')
cj = cookielib.CookieJar()
opener = urllib2.build_opener(urllib2.HTTPCookieProcessor(cj))
urllib2.install_opener(opener)
response3 = urllib2.urlopen(request)
print(response3.getcode())
print(cj)
print(response3.read())

Note: the code above is written for Python 2; the Python 3 version follows

# coding:utf8
import urllib.request
import http.cookiejar

url = "http://www.baidu.com"

print("Method 1:")
response1 = urllib.request.urlopen(url)
print(response1.getcode())
print(len(response1.read()))

print('Method 2:')
request = urllib.request.Request(url)
request.add_header("user-agent", 'Mozilla/5.0')
# Pass the Request object, not the bare URL, so the header is actually sent
response2 = urllib.request.urlopen(request)
print(response2.getcode())
print(len(response2.read()))

print('Method 3:')
cj = http.cookiejar.CookieJar()
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cj))
urllib.request.install_opener(opener)
response3 = urllib.request.urlopen(request)
print(response3.getcode())
print(cj)
print(response3.read())

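Web page parser

A tool that parses a page and extracts the valuable data from it

Web page parser (BeautifulSoup)

Types

1. Regular expressions (fuzzy matching)

2. html.parser (structured parsing)

3. Beautiful Soup (structured parsing)

4. lxml (structured parsing)

Structured parsing: the page is parsed into a DOM (Document Object Model) tree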
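The difference between fuzzy matching and structured parsing can be sketched with the standard library alone. The HTML fragment and the LinkCollector class below are made up for illustration. Note that html.parser reports tags as events rather than building a full DOM tree, so this shows only the "parser understands tags" half of the idea.

```python
import re
from html.parser import HTMLParser

html_doc = '<p>See <a href="/item/a">A</a> and <a class="x" href="/item/b">B</a>.</p>'

# Fuzzy matching: treat the page as one string and pattern-match on it.
regex_links = re.findall(r'href="([^"]+)"', html_doc)

# Structured parsing: let a parser walk the tags and report each one.
class LinkCollector(HTMLParser):
    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the current tag.
        if tag == "a":
            for name, value in attrs:
                if name == "href":
                    self.links.append(value)

collector = LinkCollector()
collector.feed(html_doc)

print(regex_links)
print(collector.links)
```

Both approaches find the same two links here. The regular expression depends on the exact quoting and attribute layout, whereas the parser only cares that an a tag carries an href attribute.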

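Installing and using Beautiful Soup 4

1. Installation

pip install beautifulsoup4

2. Usage

- Create a BeautifulSoup object

- Search for nodes (by node name, attribute, or text)

  - find_all

  - find

- Access a node's

  - name

  - attributes

  - text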

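(1) Create a BeautifulSoup object

from bs4 import BeautifulSoup

# Create a BeautifulSoup object from an HTML document string
soup = BeautifulSoup(
    html_doc,              # the HTML document string
    'html.parser',         # the HTML parser
    from_encoding='utf8'   # the document's encoding (only used when html_doc is bytes)
)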

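(2) Search for nodes (find_all, find)

import re

# Method: find_all(name, attrs, string)

# Find all nodes whose tag is a
soup.find_all('a')

# Find all a nodes whose link is exactly /view/123.htm, or has the form /view/<digits>.htm
soup.find_all('a', href='/view/123.htm')
soup.find_all('a', href=re.compile(r'/view/\d+\.htm'))

# Find all nodes whose tag is div, whose class is abc, and whose text is Python
soup.find_all('div', class_='abc', string='Python')

- class_ is the keyword used to query the class attribute: class is a reserved word in Python, so a trailing underscore is added to tell them apart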
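The regular expression for links of the form /view/<digits>.htm needs its backslashes to work as intended. A small standalone check, using made-up href values:

```python
import re

# \d+ means "one or more digits" and \. means a literal dot.
pattern = re.compile(r"/view/\d+\.htm")

# Without the backslashes, d+ matches the letter d and . matches any character.
loose_pattern = re.compile(r"/view/d+.htm")

hrefs = ["/view/123.htm", "/view/abc.htm", "/view/d.htm", "/item/123.htm"]
matched = [h for h in hrefs if pattern.search(h)]
loosely_matched = [h for h in hrefs if loose_pattern.search(h)]
print(matched)
print(loosely_matched)
```

The strict pattern accepts only /view/123.htm, while the pattern without backslashes accepts only /view/d.htm, which is not a numeric entry link at all.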

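(3) Access node information

# Given the node: <a href="1.html">Python</a>

# Get the tag name of the node that was found
node.name

# Get the href attribute of the a node that was found
node['href']

# Get the link text of the a node that was found
node.get_text()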

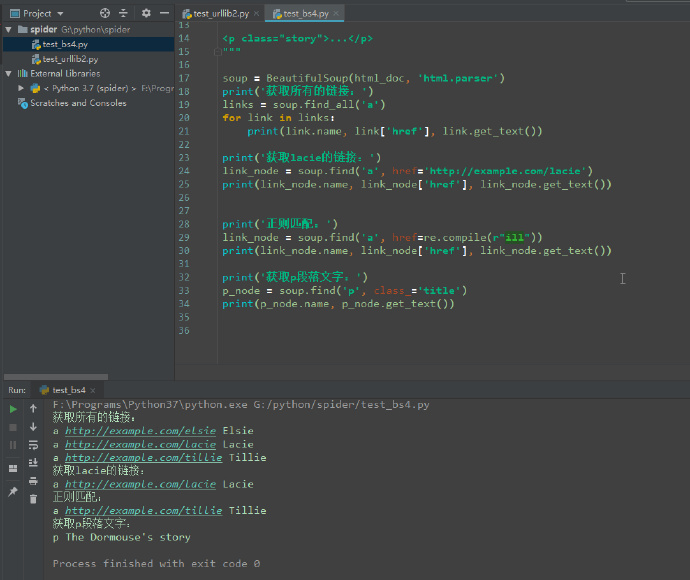

3. Example

# coding:utf8
import re

from bs4 import BeautifulSoup

html_doc = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="title"><b>The Dormouse's story</b></p>
<p class="story">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
and they lived at the bottom of a well.</p>
<p class="story">...</p>
"""

soup = BeautifulSoup(html_doc, 'html.parser')

print('All links:')
links = soup.find_all('a')
for link in links:
    print(link.name, link['href'], link.get_text())

print('The lacie link:')
link_node = soup.find('a', href='http://example.com/lacie')
print(link_node.name, link_node['href'], link_node.get_text())

print('Regular expression match:')
link_node = soup.find('a', href=re.compile(r"ill"))
print(link_node.name, link_node['href'], link_node.get_text())

print('Text of the p paragraph:')
p_node = soup.find('p', class_='title')
print(p_node.name, p_node.get_text())

Output after running:

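Developing the crawler

Analyzing the target

- URL format

- Data format

- Page encoding

1. Target: the Baidu Baike entry for Python and the entries related to it; extract each page's title and summary

2. Entry page

https://baike.baidu.com/item/Python/407313

3. URL format:

- Entry page URL:

/item/****

4. Data format:

- Title:

<dd class="lemmaWgt-lemmaTitle-title"><h1>...</h1></dd>

- Summary:

<div class="lemma-summary" label-module="lemmaSummary">...</div>

5. Page encoding: UTF-8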
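Because entry links appear in the page as relative paths of the form /item/..., they have to be resolved against the URL of the page they were found on. A minimal sketch with urljoin follows; the two href values are hypothetical.

```python
from urllib.parse import urljoin

page_url = "https://baike.baidu.com/item/Python/407313"

# Hypothetical href values, as they might appear in <a> tags on the page.
hrefs = ["/item/Guido", "https://baike.baidu.com/item/Perl"]

# Relative paths are resolved against page_url; absolute URLs pass through unchanged.
full_urls = [urljoin(page_url, href) for href in hrefs]
print(full_urls)
```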

Project directory layout

Main scheduler

# coding:utf8
from baike_spider import url_manager, html_downloader, html_parser, html_outputer


class SpiderMain(object):
    def __init__(self):
        # URL manager
        self.urls = url_manager.UrlManager()
        # Downloader
        self.downloader = html_downloader.HtmlDownloader()
        # Parser
        self.parser = html_parser.HtmlParser()
        # Outputer
        self.outputer = html_outputer.HtmlOutputer()

    # The crawler's scheduling loop
    def craw(self, root_url):
        count = 1
        self.urls.add_new_url(root_url)
        while self.urls.has_new_url():
            try:
                # Stop once the counter reaches 1000
                if count == 1000:
                    break
                new_url = self.urls.get_new_url()
                print('craw %d : %s' % (count, new_url))
                html_cont = self.downloader.download(new_url)
                new_urls, new_data = self.parser.parse(new_url, html_cont)
                self.urls.add_new_urls(new_urls)
                self.outputer.collect_data(new_data)
                count = count + 1
            except Exception as e:
                # Report the failed page and carry on with the next URL
                print('craw failed: %s' % e)
        # Write everything that was collected once the loop ends
        self.outputer.output_html()


if __name__ == "__main__":
    root_url = "https://baike.baidu.com/item/Python/407313"
    obj_spider = SpiderMain()
    obj_spider.craw(root_url)
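The scheduling loop can be tried without network access by replacing the downloader and parser with a lookup table. In this sketch fake_web is a hypothetical three-page site whose pages link to each other in a cycle, and the two sets play the role of the URL manager.

```python
# A hypothetical three-page "site": each URL maps to the links found on it.
fake_web = {
    "/item/a": ["/item/b", "/item/c"],
    "/item/b": ["/item/a"],             # links back to a: a cycle
    "/item/c": ["/item/a", "/item/b"],
}

new_urls = {"/item/a"}   # URLs waiting to be crawled
old_urls = set()         # URLs already crawled
order = []               # the order in which pages were visited

while new_urls:
    url = new_urls.pop()
    old_urls.add(url)
    order.append(url)
    for link in fake_web[url]:
        # Only queue links that have never been seen before.
        if link not in new_urls and link not in old_urls:
            new_urls.add(link)

print(len(order), sorted(order))
```

Every page is visited exactly once and the loop terminates even though the pages reference each other, which is the job of the URL manager.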

URL manager

# coding:utf8
class UrlManager(object):
    def __init__(self):
        # URLs waiting to be crawled
        self.new_urls = set()
        # URLs already crawled
        self.old_urls = set()

    def add_new_url(self, url):
        if url is None:
            return
        # Skip URLs that are already queued or already crawled
        if url not in self.new_urls and url not in self.old_urls:
            self.new_urls.add(url)

    def add_new_urls(self, urls):
        if urls is None or len(urls) == 0:
            return
        for url in urls:
            self.add_new_url(url)

    def has_new_url(self):
        return len(self.new_urls) != 0

    def get_new_url(self):
        # Move one URL from the "to crawl" set to the "crawled" set
        new_url = self.new_urls.pop()
        self.old_urls.add(new_url)
        return new_url

Web page downloader

# coding:utf8
import urllib.request


class HtmlDownloader(object):
    def download(self, url):
        if url is None:
            return None
        # request = urllib.request.Request(url)
        # request.add_header("user-agent", 'Mozilla/5.0')
        response = urllib.request.urlopen(url)
        # Anything other than 200 is treated as a failed download
        if response.getcode() != 200:
            return None
        return response.read()
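A slightly more defensive variant of the download step might look like the sketch below. The standalone function name, the 10-second timeout, and the User-Agent value are assumptions made for illustration, not part of the original project.

```python
import urllib.error
import urllib.request

def download(url, timeout=10):
    """Return the page body as bytes, or None if the download fails."""
    if url is None:
        return None
    try:
        request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
        with urllib.request.urlopen(request, timeout=timeout) as response:
            if response.getcode() != 200:
                return None
            return response.read()
    except (urllib.error.URLError, ValueError, OSError):
        # URLError covers HTTP and network errors, ValueError malformed URLs,
        # and OSError low-level socket problems such as timeouts.
        return None

print(download(None))
print(download("not-a-url"))
```

Returning None on failure keeps one bad page from stopping the whole crawl; the caller only has to check for None.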

Web page parser

# coding:utf8
import re
from urllib.parse import urljoin

from bs4 import BeautifulSoup


class HtmlParser(object):
    def _get_new_urls(self, page_url, soup):
        new_urls = set()
        # Entry links have the form /item/...
        links = soup.find_all('a', href=re.compile(r"/item/"))
        for link in links:
            new_url = link['href']
            # Turn the relative link into an absolute URL
            new_full_url = urljoin(page_url, new_url)
            new_urls.add(new_full_url)
        return new_urls

    def _get_new_data(self, page_url, soup):
        res_data = {}
        res_data['url'] = page_url
        # <dd class="lemmaWgt-lemmaTitle-title"><h1>...</h1></dd>
        title_node = soup.find('dd', class_='lemmaWgt-lemmaTitle-title').find('h1')
        res_data['title'] = title_node.get_text()
        # <div class="lemma-summary" label-module="lemmaSummary">...</div>
        summary_node = soup.find('div', class_='lemma-summary')
        res_data['summary'] = summary_node.get_text()
        return res_data

    def parse(self, page_url, html_cont):
        if page_url is None or html_cont is None:
            # Return an empty result so the caller can still unpack two values
            return set(), None
        soup = BeautifulSoup(html_cont, 'html.parser')
        new_urls = self._get_new_urls(page_url, soup)
        new_data = self._get_new_data(page_url, soup)
        return new_urls, new_data

HTML outputer

# coding:utf8
class HtmlOutputer(object):
    def __init__(self):
        self.datas = []

    def collect_data(self, data):
        if data is None:
            return
        self.datas.append(data)

    def output_html(self):
        # In Python 3, open the file as UTF-8 text and write str values directly;
        # calling .encode('utf-8') here would write b'...' literals into the page
        with open('output.html', 'w', encoding='utf-8') as fout:
            fout.write('<html>')
            fout.write('<body>')
            fout.write('<table>')
            for data in self.datas:
                fout.write('<tr>')
                fout.write('<td>%s</td>' % data['url'])
                fout.write('<td>%s</td>' % data['title'])
                fout.write('<td>%s</td>' % data['summary'])
                fout.write('</tr>')
            fout.write('</table>')
            fout.write('</body>')
            fout.write('</html>')
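Scraped text can contain characters such as < or & that would break the generated table. One possible refinement is sketched below: it escapes every cell with html.escape and declares the page encoding. The render_table helper and the sample record are hypothetical.

```python
import html
import os
import tempfile

def render_table(datas):
    """Build the HTML page as one string, escaping every cell value."""
    rows = []
    for data in datas:
        cells = "".join(
            "<td>%s</td>" % html.escape(data[key]) for key in ("url", "title", "summary")
        )
        rows.append("<tr>%s</tr>" % cells)
    return (
        '<html><head><meta charset="utf-8"></head><body><table>'
        + "".join(rows)
        + "</table></body></html>"
    )

# Hypothetical record, shaped like the dictionaries collected by the outputer.
datas = [{"url": "/item/a", "title": "A & B", "summary": "<b>编程语言</b>"}]
page = render_table(datas)

# Write to a temporary directory so the sketch does not touch the working directory.
path = os.path.join(tempfile.mkdtemp(), "output.html")
with open(path, "w", encoding="utf-8") as fout:
    fout.write(page)

print(page)
```

The meta charset tag tells the browser to read the file as UTF-8, which matters because the titles and summaries are Chinese text.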

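Advanced crawlers:

- Login

- CAPTCHAs

- Ajax

- Server-side anti-crawler measures

- Multithreading

- Distributed crawling

Learning resource: imooc (慕课网), "Python开发简单爬虫" (Developing a Simple Crawler in Python)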