References:

https://www.jianshu.com/p/c6d560d12d50

https://www.cnblogs.com/linuxk/p/9783510.html

Server IPs and roles

Test-01 172.16.119.214 kubernetes node

Test-02 172.16.119.223 kubernetes node

Test-03 172.16.119.224 kubernetes node

Test-04 172.16.119.225 kubernetes master

Software installation

Master node:

1. Install etcd

wget https://github.com/etcd-io/etcd/releases/download/v3.2.24/etcd-v3.2.24-linux-amd64.tar.gz

tar zxvf etcd-v3.2.24-linux-amd64.tar.gz

mv etcd-v3.2.24-linux-amd64 /etc/etcd/

cp /etc/etcd/etcd* /usr/bin/

To secure communication, traffic between clients (such as etcdctl) and the etcd cluster, and between etcd cluster members, must be encrypted with TLS.

Create the etcd security certificates

1) Download the cfssl tools

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl*

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

2) Create the CA certificate

mkdir -p /etc/etcd/ssl && cd /etc/etcd/ssl/

cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "8760h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

Create the CA certificate signing request file

cat > ca-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

Generate the CA certificate and private key

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

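This produces three files: ca.csr, ca-key.pem and ca.pem

Create the etcd certificate signing request

The hosts field lists the IPs of the etcd nodes authorized to use this certificate; include the IP address of every etcd node. JSON does not allow comments, so keep such notes out of the file itself.

cat > etcd-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "172.16.119.225"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

Generate the etcd certificate and private key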

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

This produces three files: etcd.csr, etcd-key.pem and etcd.pem

If etcd runs as a cluster, copy etcd.pem, etcd-key.pem and ca.pem to every etcd node.

3) Configure the etcd service file

useradd -s /sbin/nologin etcd    # add the account that runs etcd

vim /lib/systemd/system/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
# The etcd working directory and data directory are both /var/lib/etcd; create it before starting the service.
# Replace "hostname" in --name with this machine's host name.
# For a multi-node cluster also pass --initial-cluster-token=etcd-cluster-0,
# --initial-cluster=${ETCD_NODES} and --initial-cluster-state=new; a single node does not need them.
# When --initial-cluster-state is new (bootstrapping a cluster),
# the --name value must appear in the --initial-cluster list.
WorkingDirectory=/var/lib/etcd/
User=etcd
Group=etcd
ExecStart=/usr/bin/etcd \
  --name=hostname \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --initial-advertise-peer-urls=https://172.16.119.225:2380 \
  --listen-peer-urls=https://172.16.119.225:2380 \
  --listen-client-urls=https://172.16.119.225:2379,http://127.0.0.1:2379 \
  --advertise-client-urls=https://172.16.119.225:2379 \
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

mkdir -p /var/lib/etcd/

chown -R etcd.etcd /etc/etcd && chmod -R 500 /etc/etcd/

chown -R etcd.etcd /var/lib/etcd/

Start etcd

systemctl restart etcd && systemctl enable etcd

4) Verify

Edit ~/.bashrc and add

alias etcdctl='etcdctl --endpoints=https://172.16.119.225:2379 --ca-file=/etc/etcd/ssl/ca.pem --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem'

source ~/.bashrc

etcdctl cluster-health

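2. Prepare the environment

First set up the local hosts file: edit /etc/hosts and add the following:

172.16.119.225 test-04

Modify kernel parameters. Running sysctl -p without a file name only reloads /etc/sysctl.conf, so pass the new file explicitly. If the net.bridge keys are reported as missing, load the br_netfilter module first (modprobe br_netfilter).

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

sysctl -p /etc/sysctl.d/k8s.conf

Disable swap. Since Kubernetes 1.8, swap must be disabled; otherwise kubelet will not start with its default configuration.

swapoff -a

Comment out the swap entry so the swap partition is not mounted again at boot:

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab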
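Before editing /etc/fstab in place, it can help to preview the change. The following is a minimal sketch of one way to do that, not part of the original procedure: the function name comment_swap_entries is hypothetical, and it assumes swap entries contain the word swap surrounded by whitespace, as in a typical fstab. It prints the modified content to standard output and leaves the file untouched.

```bash
# comment_swap_entries FILE
# Hypothetical helper: print FILE with every active swap entry commented out.
# Lines that are already comments are left unchanged; FILE itself is not modified.
comment_swap_entries() {
  sed -e '/^[[:space:]]*#/!{' \
      -e '/[[:space:]]swap[[:space:]]/s/^/#/' \
      -e '}' "$1"
}

# Usage (preview only, nothing is written):
#   comment_swap_entries /etc/fstab | diff /etc/fstab -
```

If the diff shows only the expected swap line, the same change can then be applied with sed -i.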

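Enable ipvs

This is optional but recommended. Pod load balancing is implemented by kube-proxy, which has two modes: the default iptables mode and ipvs mode. ipvs performs better than iptables.

What ipvs is and why to use it: https://blog.csdn.net/fanren224/article/details/86548398

Master high availability and load balancing of cluster services will use ipvs later, so load the following kernel modules.

The modules to enable are: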

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

Check whether they are loaded

cut -f1 -d " " /proc/modules | grep -e ip_vs -e nf_conntrack_ipv4

If not, load them with the following commands

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4
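The check and the load step can be combined so that only the missing modules are reported. The sketch below assumes the module list given above; the function name missing_modules and the variable REQUIRED_MODULES are hypothetical. It reads a file in /proc/modules format, which also makes it easy to try against a saved copy. On newer kernels (4.19 and later, as far as I know) nf_conntrack_ipv4 was merged into nf_conntrack, so the list may need adjusting there.

```bash
# Modules named in this guide; adjust for your kernel if needed (assumption).
REQUIRED_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# missing_modules [FILE]
# Hypothetical helper: print each required module that is not listed in FILE
# (default /proc/modules), one per line. Prints nothing if all are loaded.
missing_modules() {
  modules_file="${1:-/proc/modules}"
  for m in $REQUIRED_MODULES; do
    if ! cut -f1 -d ' ' "$modules_file" | grep -qx -- "$m"; then
      echo "$m"
    fi
  done
}

# Usage:
#   for m in $(missing_modules); do modprobe -- "$m"; done
```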

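ipvs also needs ipset. Check whether it is installed, and install it if not

yum install ipset -y

Disable the firewall and SELinux

Edit /etc/selinux/config and set SELINUX=disabled (this takes effect after a reboot), then stop and disable firewalld

vi /etc/selinux/config

systemctl disable firewalld

systemctl stop firewalld

Configure the yum repository and install the Kubernetes packages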

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet-1.13.5 kubeadm-1.13.5 kubectl-1.13.5

curl -fsSL https://get.docker.com/ | sh    # install the latest Docker

Alternatively, install a specific Docker version

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum list docker-ce --showduplicates | sort -r    # list available Docker versions

yum install -y docker-ce-18.06.3.ce-3.el7    # install

Start docker and kubelet

systemctl start docker && systemctl enable docker

systemctl start kubelet && systemctl enable kubelet

kubeadm: manages cluster nodes (for example initializing the master, joining worker nodes to the cluster, removing nodes).

kubectl: manages Kubernetes resources (for example viewing logs, rs, deploy, ds).

kubelet: the Kubernetes node service.

3. Configure kubeadm-config.yaml

vim /etc/kubernetes/kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.13.3
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
controlPlaneEndpoint: 172.16.119.225:6443    # cluster access address of the API server
apiServer:
  certSANs:
  - "172.16.119.225"    # extra IPs or domain names to include in the API server certificate
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.254.0.0/16
  dnsDomain: cluster.local
etcd:
  external:
    endpoints:
    - https://172.16.119.225:2379
    caFile: /etc/etcd/ssl/ca.pem
    certFile: /etc/etcd/ssl/etcd.pem
    keyFile: /etc/etcd/ssl/etcd-key.pem

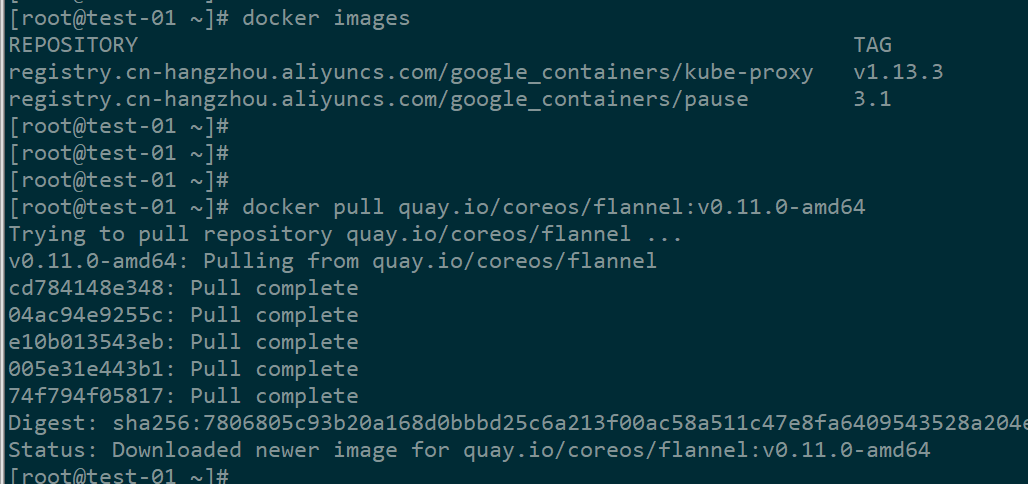

Pull the Kubernetes images (run from /etc/kubernetes, where kubeadm-config.yaml was saved)

kubeadm config images pull --config kubeadm-config.yaml

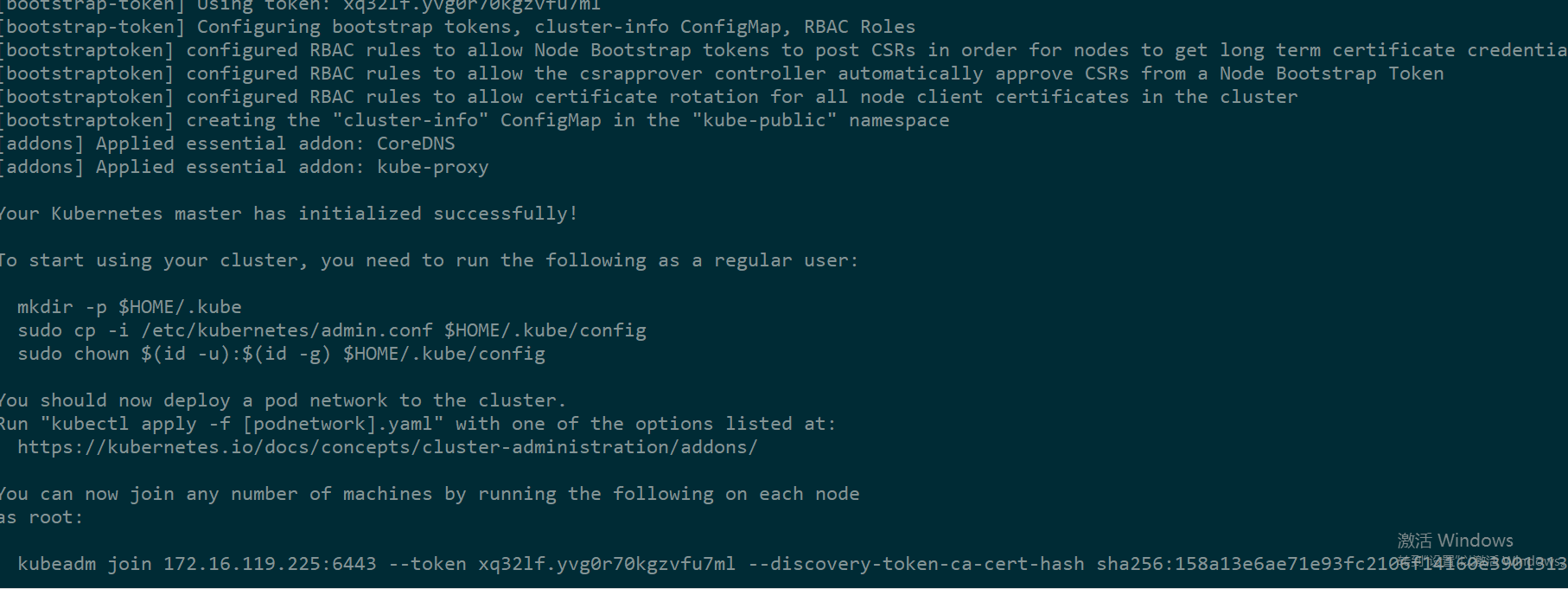

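Initialize the master node

kubeadm init --config kubeadm-config.yaml

Note: Kubernetes expects at least 2 CPU cores by default, so if the host has only 1 core, add --ignore-preflight-errors=all or initialization fails. (--ignore-preflight-errors=NumCPU skips only the CPU check.)

kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=all

Initialization may fail. The most common error message is as follows:

In that case, follow the hints and use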

docker ps -a | grep kube | grep -v pause

docker logs CONTAINERID

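to investigate, or check other logs to find the cause

If initialization fails, kubeadm reset restores the previous state.

On success, output like the following is shown:

As the output suggests, run the commands below to copy the configuration file into the regular user's home directory so that kubectl can use it

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Make a note of the last line of the output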

kubeadm join 172.16.119.225:6443 --token xq32lf.yvg0r70kgzvfu7ml --discovery-token-ca-cert-hash sha256:158a13e6ae71e93fc2106f14160e3901313ab156b674c386838fe262d674a4a3

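Nodes joining later use this command.

This completes the Kubernetes installation on the master node, but the cluster still has no usable worker nodes and no container network configured.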

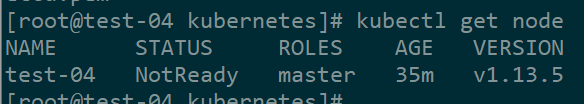

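4. Install the flannel network plugin

After Kubernetes is installed, listing the nodes shows STATUS NotReady. This is because no network plugin such as flannel or calico is installed yet. Here flannel is used as the cluster network plugin.

Install flannel

wget https://raw.githubusercontent.com/coreos/flannel/v0.12.0/Documentation/kube-flannel.yml

The complete YAML file is as follows: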

--- apiVersion: policy/v1beta1 kind: PodSecurityPolicy metadata: name: psp.flannel.unprivileged annotations: seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default spec: privileged: false volumes: - configMap - secret - emptyDir - hostPath allowedHostPaths: - pathPrefix: "/etc/cni/net.d" - pathPrefix: "/etc/kube-flannel" - pathPrefix: "/run/flannel" readOnlyRootFilesystem: false # Users and groups runAsUser: rule: RunAsAny supplementalGroups: rule: RunAsAny fsGroup: rule: RunAsAny # Privilege Escalation allowPrivilegeEscalation: false defaultAllowPrivilegeEscalation: false # Capabilities allowedCapabilities: ['NET_ADMIN'] defaultAddCapabilities: [] requiredDropCapabilities: [] # Host namespaces hostPID: false hostIPC: false hostNetwork: true hostPorts: - min: 0 max: 65535 # SELinux seLinux: # SELinux is unused in CaaSP rule: 'RunAsAny' --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: flannel rules: - apiGroups: ['extensions'] resources: ['podsecuritypolicies'] verbs: ['use'] resourceNames: ['psp.flannel.unprivileged'] - apiGroups: - "" resources: - pods verbs: - get - apiGroups: - "" resources: - nodes verbs: - list - watch - apiGroups: - "" resources: - nodes/status verbs: - patch --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: flannel roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: flannel subjects: - kind: ServiceAccount name: flannel namespace: kube-system --- apiVersion: v1 kind: ServiceAccount metadata: name: flannel namespace: kube-system --- kind: ConfigMap apiVersion: v1 metadata: name: kube-flannel-cfg namespace: kube-system labels: tier: node app: flannel data: cni-conf.json: | { "name": "cbr0", "cniVersion": "0.3.1", 
"plugins": [ { "type": "flannel", "delegate": { "hairpinMode": true, "isDefaultGateway": true } }, { "type": "portmap", "capabilities": { "portMappings": true } } ] } net-conf.json: | { "Network": "10.244.0.0/16", "Backend": { "Type": "vxlan" } } --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-amd64 namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/os operator: In values: - linux - key: beta.kubernetes.io/arch operator: In values: - amd64 hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.12.0-amd64 command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.12.0-amd64 command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-arm64 namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: 
affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/os operator: In values: - linux - key: beta.kubernetes.io/arch operator: In values: - arm64 hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.12.0-arm64 command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.12.0-arm64 command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-arm namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/os operator: In values: - linux - key: beta.kubernetes.io/arch operator: In values: - arm hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.12.0-arm command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - 
/etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.12.0-arm command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-ppc64le namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/os operator: In values: - linux - key: beta.kubernetes.io/arch operator: In values: - ppc64le hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.12.0-ppc64le command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.12.0-ppc64le command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: 
fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-s390x namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/os operator: In values: - linux - key: beta.kubernetes.io/arch operator: In values: - s390x hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.12.0-s390x command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.12.0-s390x command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg

Modify kube-flannel.yml

The Network value in kube-flannel.yml must match podSubnet in kubeadm-config.yaml.

kube-flannel.yml defaults to 10.244.0.0/16, so if podSubnet in the init configuration is not in the 10.244 range, change Network to the configured range
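A quick way to confirm that the two values agree before applying the manifest is to extract both and compare them. The sketch below is only illustrative: the function names pod_subnet, flannel_network and subnets_match are hypothetical, and the extraction relies on simple text matching of the two files as laid out in this guide rather than on a real YAML parser.

```bash
# Hypothetical helpers; they use plain text matching, not a YAML parser.

# pod_subnet FILE: print the podSubnet value from a kubeadm configuration file.
pod_subnet() {
  awk '$1 == "podSubnet:" { print $2; exit }' "$1"
}

# flannel_network FILE: print the "Network" value from net-conf.json in kube-flannel.yml.
flannel_network() {
  sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# subnets_match KUBEADM_CONFIG FLANNEL_MANIFEST
# Succeeds only if podSubnet was found and equals flannel's Network.
subnets_match() {
  [ -n "$(pod_subnet "$1")" ] && [ "$(pod_subnet "$1")" = "$(flannel_network "$2")" ]
}

# Usage:
#   subnets_match /etc/kubernetes/kubeadm-config.yaml /etc/kubernetes/kube-flannel.yml \
#     && echo "subnets match" || echo "subnets differ"
```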

Then apply it

kubectl create -f /etc/kubernetes/kube-flannel.yml

Once flannel has started successfully, it creates /etc/cni/net.d/10-flannel.conf on every node, with identical content

{ "name": "cbr0", "type": "flannel", "delegate": { "isDefaultGateway": true } }

It also creates /run/flannel/subnet.env, in which each node has a different subnet

master node:
[root@master ~]# cat /run/flannel/subnet.env
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true

slave-01 node:
[root@slave-01 ~]# cat /run/flannel/subnet.env
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.1.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true

slave-02 node:
[root@slave-02 ~]# cat /run/flannel/subnet.env
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.2.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
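When comparing nodes it is usually only the per-node subnet that matters. As a small sketch, the hypothetical helper flannel_subnet below prints just that value from a subnet.env file; it assumes the KEY=value layout shown in the listings above.

```bash
# flannel_subnet [FILE]
# Hypothetical helper: print the FLANNEL_SUBNET value from a subnet.env file
# (default /run/flannel/subnet.env).
flannel_subnet() {
  sed -n 's/^FLANNEL_SUBNET=//p' "${1:-/run/flannel/subnet.env}"
}

# Usage on a node:
#   flannel_subnet
```

Two nodes reporting the same subnet would point to a flannel allocation problem.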

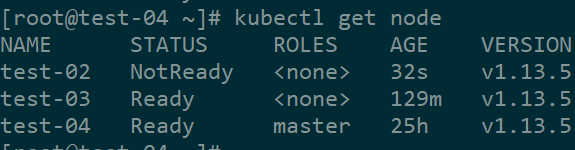

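Joining nodes

Prepare the environment the same way as on the master

Configure the repository and install the software

yum install -y kubelet-1.13.5 kubeadm-1.13.5 kubectl-1.13.5

curl -fsSL https://get.docker.com/ | sh    # install the latest Docker

Start docker and kubelet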

systemctl start docker && systemctl enable docker

systemctl start kubelet && systemctl enable kubelet

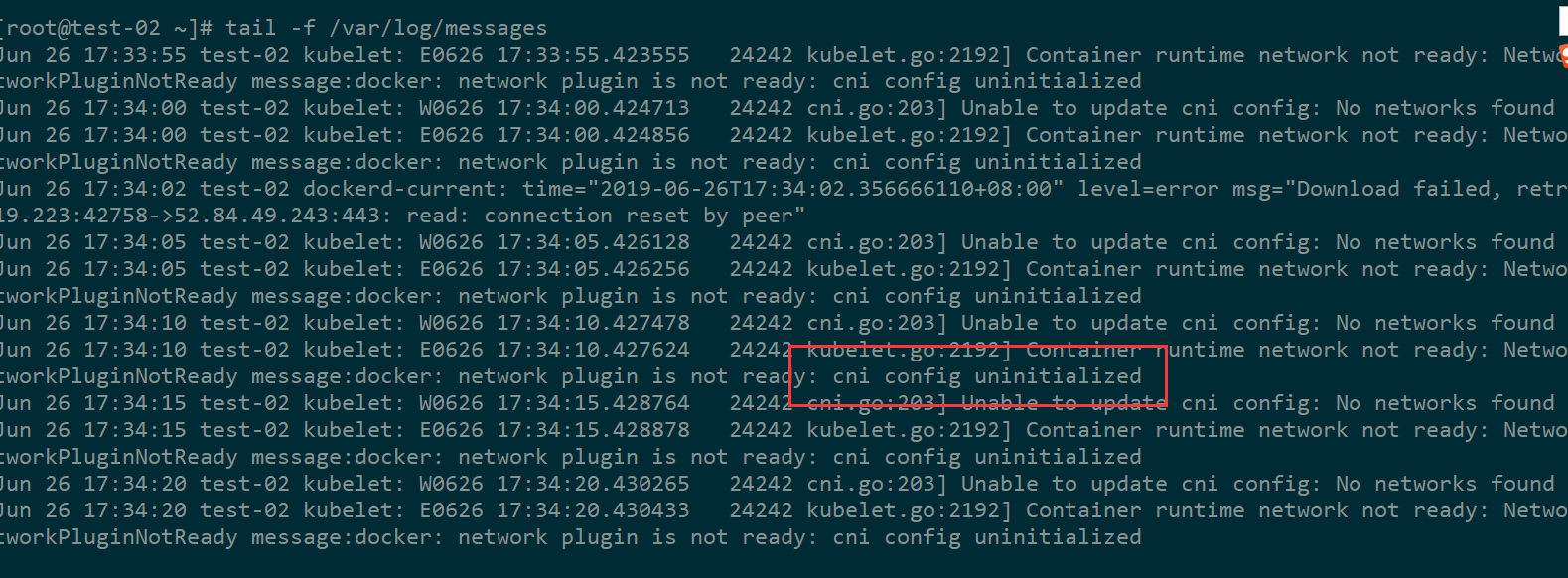

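If joining fails and the log shows cni config uninitialized, the most likely cause is that the node could not pull the flannel image (confirm with kubectl describe and docker images). Pull the flannel image manually on that node; the image name can be found with docker images on the master.

If the joining node is a master (control plane) node:

On the new node, create the /etc/etcd/ssl directory and copy ca.pem, etcd.pem and etcd-key.pem over from the master

Copy ca.crt, ca.key, sa.key, sa.pub, front-proxy-ca.crt and front-proxy-ca.key from /etc/kubernetes/pki on the master to /etc/kubernetes/pki on the new node

Run kubeadm join to join the cluster, using the command printed on the last line of the master installation output and adding the --experimental-control-plane flag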

kubeadm join 172.16.119.225:6443 --token xq32lf.yvg0r70kgzvfu7ml --discovery-token-ca-cert-hash sha256:158a13e6ae71e93fc2106f14160e3901313ab156b674c386838fe262d674a4a3 --experimental-control-plane

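If the joining node is a worker node, just run join directly; there is no need to copy certificates or to add --experimental-control-plane

kubeadm join 172.16.119.225:6443 --token xq32lf.yvg0r70kgzvfu7ml --discovery-token-ca-cert-hash sha256:158a13e6ae71e93fc2106f14160e3901313ab156b674c386838fe262d674a4a3

If the following error appears when joining, the kubeadm and kubelet versions on the node do not match the cluster. Find the package with the wrong version, uninstall it and install the matching version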

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace

error execution phase kubelet-start: configmaps "kubelet-config-1.15" is forbidden: User "system:bootstrap:xq32lf" cannot get resource "configmaps" in API group "" in the namespace "kube-system"

After a successful join, the following message appears

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.
* The Kubelet was informed of the new secure connection details.
* Master label and taint were applied to the new node.
* The Kubernetes control plane instances scaled up.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

Likewise, configure kubectl as the message instructs

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Then verify on the master node

If the node shows NotReady after joining and /var/log/messages on that node shows cni config uninitialized

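### Solution

Edit /var/lib/kubelet/kubeadm-flags.env, delete --network-plugin=cni, and restart the kubelet service. This only treats the symptom: the usual root cause is that the flannel image could not be pulled or failed to start, so the underlying cause still needs to be investigated.

In my case the node had failed to pull the flannel image; pulling it manually on that node fixed the problem

There is another possible cause that I have not run into; for reference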

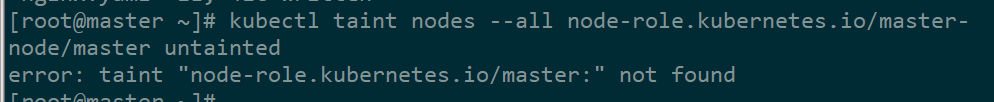

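kubeadm also installs kubelet on the master node, but by default the master does not run workloads. To let the master also act as a worker node, run the following command to remove the taint "node-role.kubernetes.io/master" from the nodes

kubectl taint nodes --all node-role.kubernetes.io/master-

The output error: taint "node-role.kubernetes.io/master:" not found can be ignored; it is printed for nodes that do not carry the taint

Prevent pods from being scheduled on the master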

kubectl taint nodes k8s node-role.kubernetes.io/master=true:NoSchedule

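Removing a node

kubectl drain test-03 --delete-local-data --force --ignore-daemonsets

kubectl delete node test-03

Then run kubeadm reset on the removed node

kubeadm reset does not clean up the flannel network. It can be removed manually (this is not needed if the cluster network stays the same)

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/

rm -f /etc/cni/net.d/*

systemctl restart kubelet

To rejoin, run kubeadm join again

Note that the token expires after 24 hours. If it has expired or been forgotten, there are two ways to create a new one

Simple method:

kubeadm token create --print-join-command

Second method (--ttl=0 creates a token that never expires):

token=$(kubeadm token generate)

kubeadm token create $token --print-join-command --ttl=0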
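Join failures are sometimes caused by nothing more than a mangled token after copy and paste. Bootstrap tokens have the shape of six characters, a dot, and sixteen characters, all lowercase letters or digits, as in xq32lf.yvg0r70kgzvfu7ml above. The sketch below checks only that shape; the function name is_bootstrap_token is hypothetical, and it cannot tell whether a token is still valid on the cluster.

```bash
# is_bootstrap_token TOKEN
# Hypothetical helper: succeed if TOKEN looks like "abcdef.0123456789abcdef"
# (6 characters, a dot, 16 characters, all in [a-z0-9]). Format check only.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Usage:
#   is_bootstrap_token "xq32lf.yvg0r70kgzvfu7ml" && echo "format ok"
```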

See which node each pod is running on

kubectl get pod --all-namespaces -o wide

Installing Dashboard

1. Download the manifest

wget http://mirror.faasx.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

2. Copy kubernetes-dashboard.yaml and configure token authentication on the copy, which is more secure

cp kubernetes-dashboard.yaml kubernetes-token-dashboard.yaml

3. Change the Service to type NodePort with a fixed access port

vim kubernetes-token-dashboard.yaml

Before:

# ------------------- Dashboard Service ------------------- #
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

After:

# ------------------- Dashboard Service ------------------- #
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort          # add this line
  ports:
    - port: 443
      nodePort: 32000     # add this line; the port must be in the range 30000-32767 or an error is reported
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
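The port range mentioned above is easy to get wrong when picking a value by hand. A minimal sketch of a range check follows; the function name valid_nodeport is hypothetical, and the bounds 30000-32767 are the range stated in this guide (the API server's default, which can be changed with its --service-node-port-range flag).

```bash
# valid_nodeport PORT
# Hypothetical helper: succeed if PORT is a whole number in 30000-32767,
# the NodePort range assumed in this guide.
valid_nodeport() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # empty or contains a non-digit
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Usage:
#   valid_nodeport 32000 && echo "ok" || echo "out of range"
```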

4. Create the kubernetes-dashboard administrator account

vim /etc/kubernetes/kubernetes-dashboard-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: dashboard-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

5. Apply the administrator account

kubectl create -f kubernetes-dashboard-admin.yaml

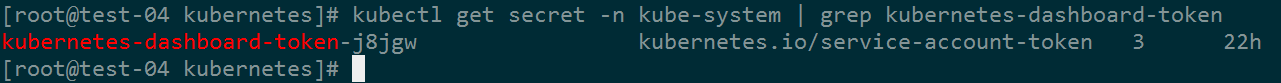

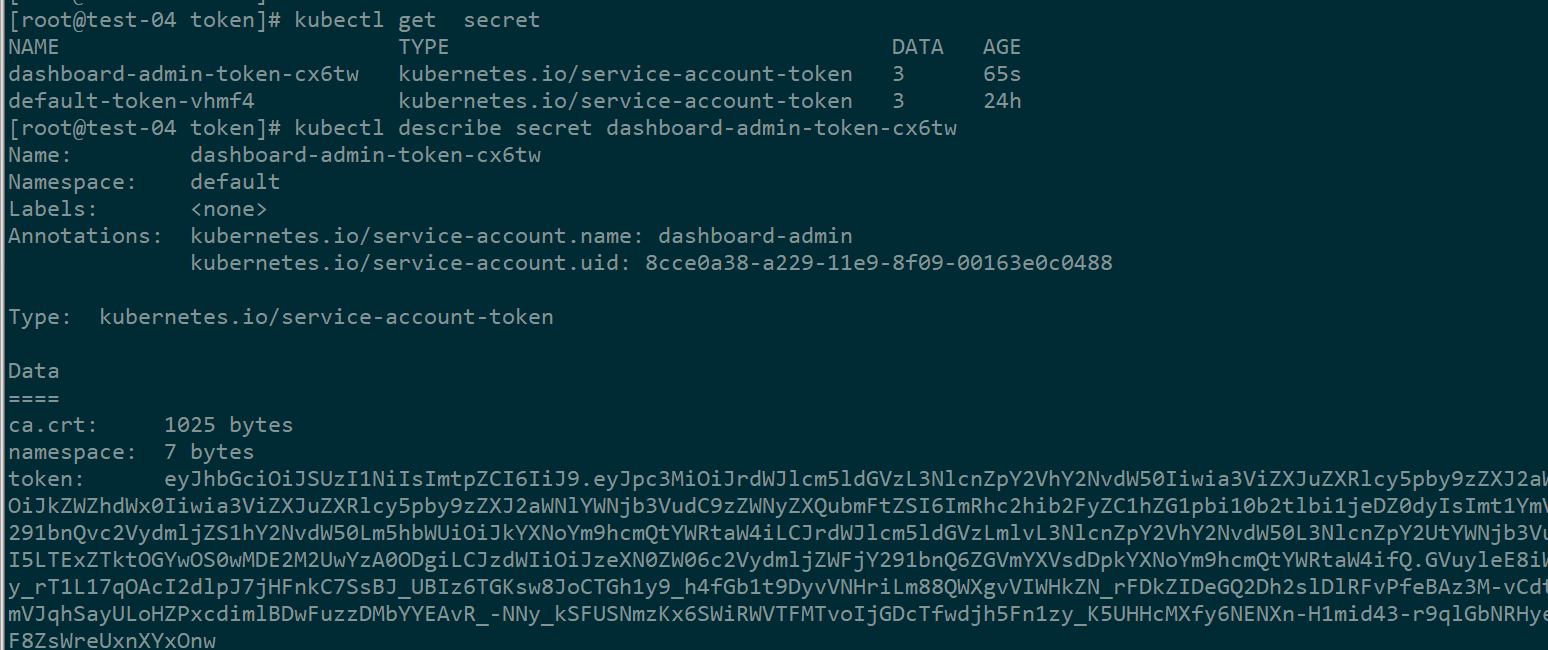

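6. Get the token of the dashboard administrator account

List the secrets to find the one created for the dashboard-admin service account

kubectl get secret -n kube-system | grep dashboard-admin-token

Show the token (the suffix of the secret name differs in every cluster)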

kubectl describe secret -n kube-system dashboard-admin-token-pt7zq
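The describe output contains several fields, while only the token value is needed for the login page. As a sketch, the hypothetical helper extract_token below filters that output; it assumes the usual layout in which the value follows a "token:" label on the same line.

```bash
# extract_token
# Hypothetical helper: read `kubectl describe secret` output on standard input
# and print only the value of the "token:" field.
extract_token() {
  awk '$1 == "token:" { print $2; exit }'
}

# Usage:
#   kubectl describe secret -n kube-system dashboard-admin-token-pt7zq | extract_token
```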

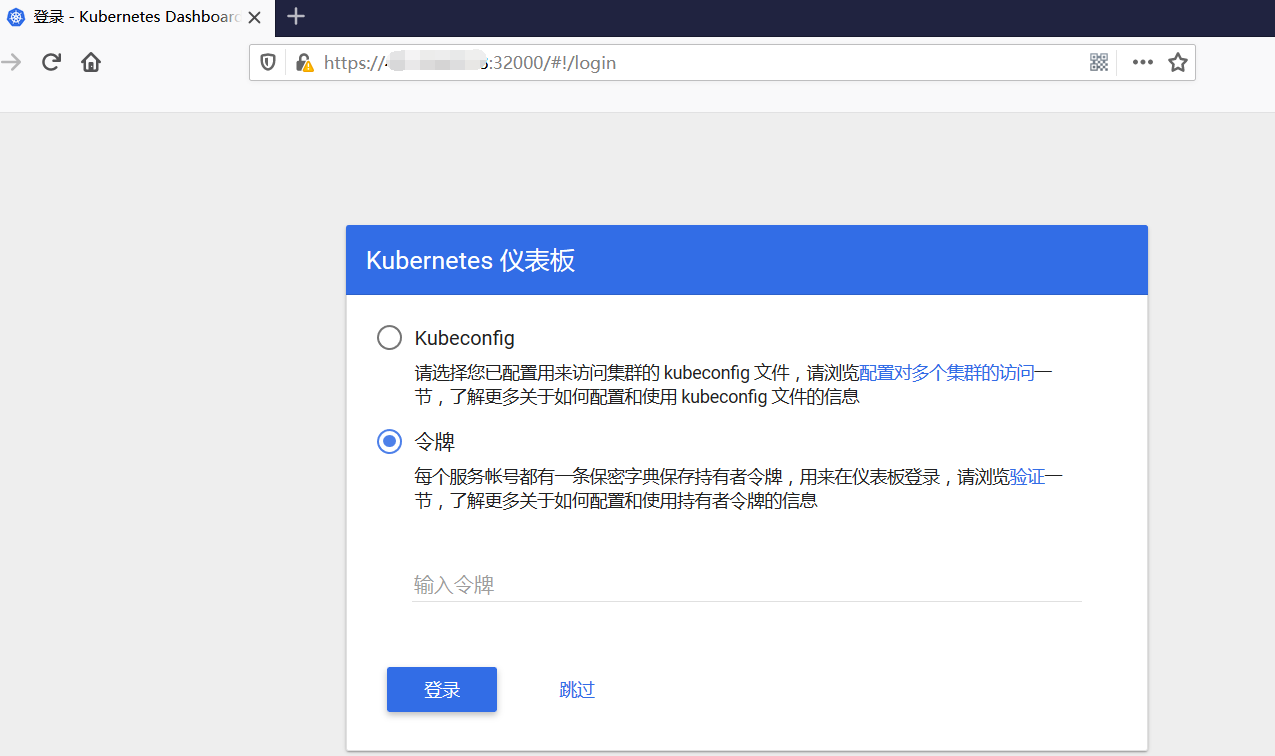

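7. Deploy the dashboard, then open it in Firefox and log in with the token copied above

kubectl create -f kubernetes-token-dashboard.yaml

The Kubernetes dashboard is now deployed

-------- The following covers certificate-based login and can be skipped --------

1. Generate the certificate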

cd /etc/kubernetes/pki

openssl genrsa -out dashboard-token.key 2048

openssl req -new -key dashboard-token.key -out dashboard-token.csr -subj "/CN=172.16.119.225"

openssl x509 -req -in dashboard-token.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out dashboard-token.crt -days 2048

2. Set up token-based access

Create the secret

kubectl create secret generic dashboard-cert -n kube-system --from-file=dashboard-token.crt --from-file=dashboard.key=dashboard-token.key

Create the serviceaccount

kubectl create serviceaccount dashboard-admin -n kube-system

Bind the serviceaccount to the cluster role admin

kubectl create rolebinding dashboard-admin --clusterrole=admin --serviceaccount=kube-system:dashboard-admin

View the token of the dashboard-admin serviceaccount

-----------------------end-----------------

---------------------------------------------

Troubleshooting

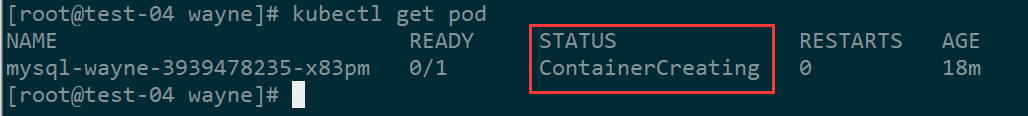

1、kubectl create -f mysql.yaml 后pod无法启动,用kubectl get pod 发现该pod处于ContainerCreating状态

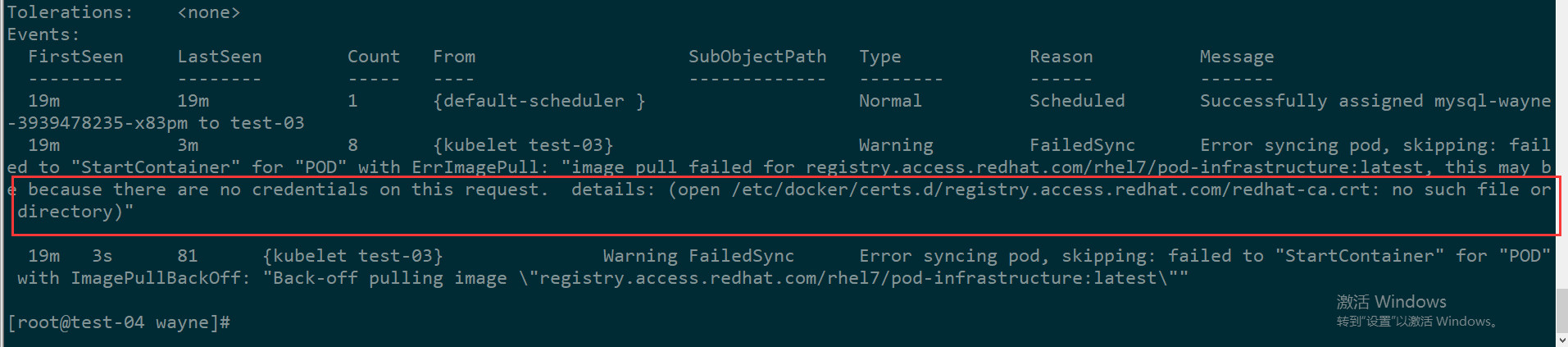

使用kubectl describe pod mysql-wayne-3939478235-x83pm 查看具体信息时发现报错如下:

解决办法:

各个node节点上都需要安装

yum install *rhsm*

docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest

如果还报错则进行如下步骤

wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm rpm2cpio python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm | cpio -iv --to-stdout ./etc/rhsm/ca/redhat-uep.pem | tee /etc/rhsm/ca/redhat-uep.pem docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest

然后删除pod 重新创建

kubectl delete -f mysql.yaml

kubectl create -f mysql.yaml

2. Troubleshooting a pod that cannot be deleted

https://www.58jb.com/html/155.html

3. /var/log/messages reports plugin flannel does not support config version

Solution: https://github.com/coreos/flannel/issues/1178

Edit /etc/cni/net.d/10-flannel.conf, add "cniVersion": "0.2.0", then restart kubelet

4. Error Failed to get system container stats for "/system.slice/kubelet.service"

Solution:

Add the following to /lib/systemd/system/kubelet.service

ExecStart=/usr/bin/kubelet --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice

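Upgrading the Kubernetes cluster

The installed version is 1.13.5, while the latest release is already 1.18.5.

The gap is too large to go from 1.13.x to 1.18.x directly: kubeadm does not support skipping minor versions, so from 1.13 the cluster can only be upgraded to 1.14, and then from 1.14 to 1.15.

From 1.16 onward the author found the upgrade simpler. Note, however, that the kubeadm documentation describes skipping minor versions as unsupported for every release, so keep upgrading one minor version at a time.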
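To plan the intermediate steps, the sequence of minor versions between the current and the target release can be listed mechanically. The sketch below assumes upgrades proceed one minor version at a time within the same major version; the function name upgrade_path is hypothetical, and it prints only major.minor because the patch release to install at each step still has to be chosen by hand.

```bash
# upgrade_path CURRENT TARGET
# Hypothetical helper: print every minor version to step through, one per line,
# when upgrading from CURRENT to TARGET (e.g. 1.13.5 and 1.18.5).
# Assumes both versions share the same major version; patch numbers are ignored.
upgrade_path() {
  major=${1%%.*}
  cur=${1#*.}; cur=${cur%%.*}
  tgt=${2#*.}; tgt=${tgt%%.*}
  next=$((cur + 1))
  while [ "$next" -le "$tgt" ]; do
    printf '%s.%s\n' "$major" "$next"
    next=$((next + 1))
  done
}

# Usage:
#   upgrade_path 1.13.5 1.18.5
```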

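Master host

1. Back up the existing kubeadm configuration first

kubeadm config view >1.13.3.yaml

2. Upgrade kubeadm

yum install -y kubeadm-1.14.5-0 kubectl-1.14.5-0 kubelet-1.14.5-0

3. Run kubeadm upgrade plan to check whether the upgrade is possible. If the output contains no errors, it is. Skipping versions, for example upgrading 1.13 directly to 1.17, produces an error such as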

FATAL: this version of kubeadm only supports deploying clusters with the control plane version >= 1.16.0. Current version: v1.13.3

kubeadm upgrade plan

External components that should be upgraded manually before you upgrade the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Etcd 3.2.24 3.3.10

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 3 x v1.13.5 v1.14.5

Upgrade to the latest version in the v1.13 series:

COMPONENT CURRENT AVAILABLE

API Server v1.13.3 v1.14.5

Controller Manager v1.13.3 v1.14.5

Scheduler v1.13.3 v1.14.5

Kube Proxy v1.13.3 v1.14.5

CoreDNS 1.2.6 1.3.1

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.14.5

_____________________________________________________________________

[root@master kubernetes]#

4. Upgrade

kubeadm upgrade apply v1.14.5 --config kubeadm-config.yaml

After a while, the following output indicates that the upgrade succeeded

[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy
[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.14.5". Enjoy!
[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

5. Check the result

kubectl version
Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.5", GitCommit:"0e9fcb426b100a2aea5ed5c25b3d8cfbb01a8acf", GitTreeState:"clean", BuildDate:"2019-08-05T09:21:30Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.5", GitCommit:"0e9fcb426b100a2aea5ed5c25b3d8cfbb01a8acf", GitTreeState:"clean", BuildDate:"2019-08-05T09:13:08Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

6. Update kubelet

yum install -y kubelet-1.14.5-0

[root@master ~]# kubelet --version
Kubernetes v1.14.5
[root@master ~]# kubectl get node
NAME       STATUS     ROLES    AGE    VERSION
master     Ready      master   112m   v1.14.5
slave-01   NotReady   <none>   108m   v1.13.5
slave-02   NotReady   <none>   48m    v1.13.5
[root@master ~]#

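Then upgrade in turn to 1.15.5 and 1.16.5

Worker nodes

Upgrade kubeadm, kubelet and kubectl directly and then restart kubelet; in the author's experience the nodes are not bound by the one-minor-version-at-a-time restriction. Make sure the control plane has already reached the target version first, since the kubelet must not be newer than the API server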

yum install -y kubeadm-1.18.5-0 kubectl-1.18.5-0 kubelet-1.18.5-0