Without further ado, let's get straight to the practical stuff!

For this problem, first work out whether your Hive and HBase are both CDH builds, or whether one is an Apache release and the other a CDH build.
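To check this quickly, you can compare jar file names. This is a minimal sketch. It assumes, as in this article's layout, that CDH builds carry a "-cdh" tag in the file name (for example hbase-client-0.98.6-cdh5.3.0.jar) and Apache releases do not (for example hive-exec-1.0.0.jar). The function names and default paths are illustrative and are not part of either distribution.

```shell
#!/bin/sh
# Classify a jar by file name: CDH builds carry a "-cdh<version>" tag
# (e.g. hbase-client-0.98.6-cdh5.3.0.jar); Apache releases do not.
distro_of() {
  case "$(basename "$1")" in
    *-cdh[0-9]*) echo "cdh" ;;
    *)           echo "apache" ;;
  esac
}

# Print the first file in directory $1 matching glob $2, or fail.
first_jar() {
  for f in "$1"/$2; do
    if [ -e "$f" ]; then
      printf '%s\n' "$f"
      return 0
    fi
  done
  return 1
}

# Compare the HBase client jar with the Hive exec jar.
# $1 = HBase lib dir, $2 = Hive lib dir (defaults follow this article's layout).
compare_distros() {
  hb_jar=$(first_jar "${1:-/opt/modules/hbase-0.98.6-cdh5.3.0/lib}" 'hbase-client-*.jar') || {
    echo "hbase-client jar not found" >&2; return 1; }
  hv_jar=$(first_jar "${2:-/opt/modules/apache-hive-1.0.0-bin/lib}" 'hive-exec-*.jar') || {
    echo "hive-exec jar not found" >&2; return 1; }
  hb=$(distro_of "$hb_jar")
  hv=$(distro_of "$hv_jar")
  if [ "$hb" = "$hv" ]; then
    echo "same: $hb"
  else
    echo "mixed: hbase=$hb hive=$hv"
  fi
}
```

With this article's default paths, compare_distros should report "mixed: hbase=cdh hive=apache". That is the case covered in the second half of this post.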

Problem details

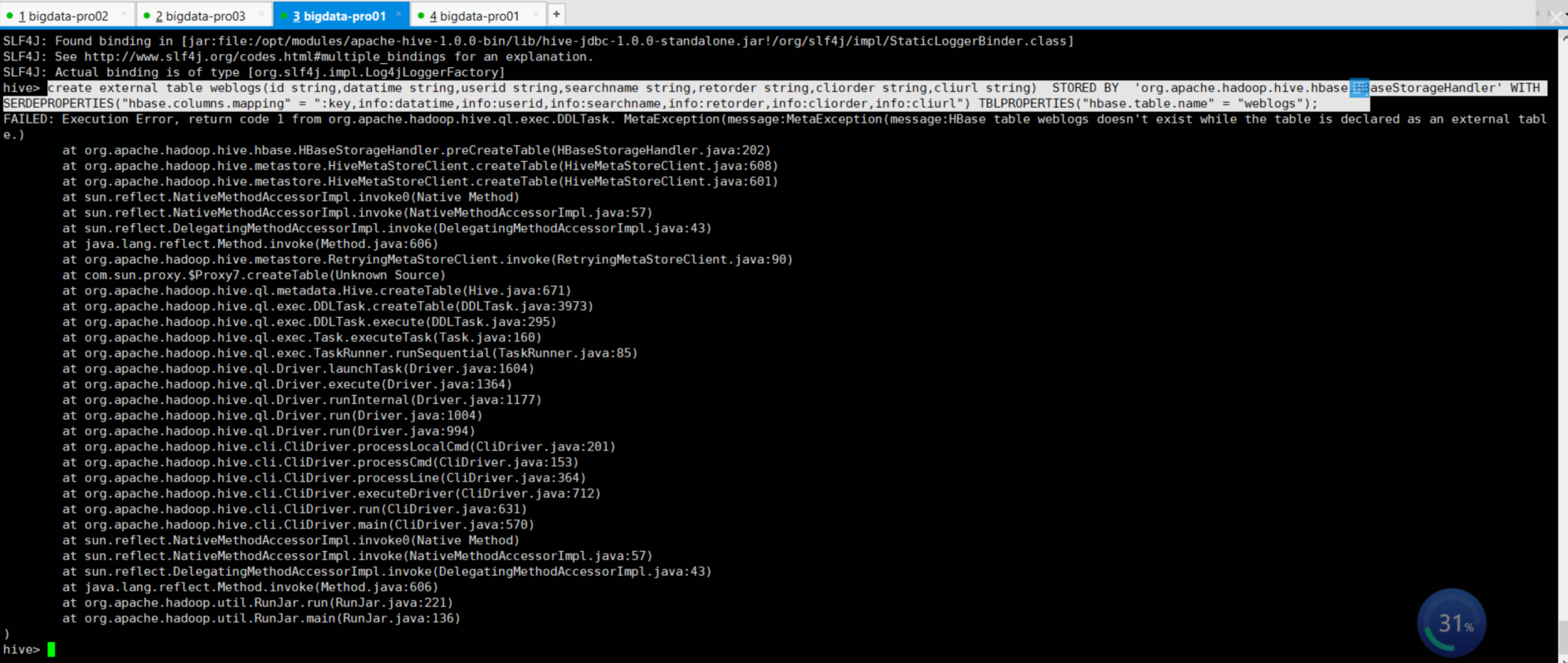

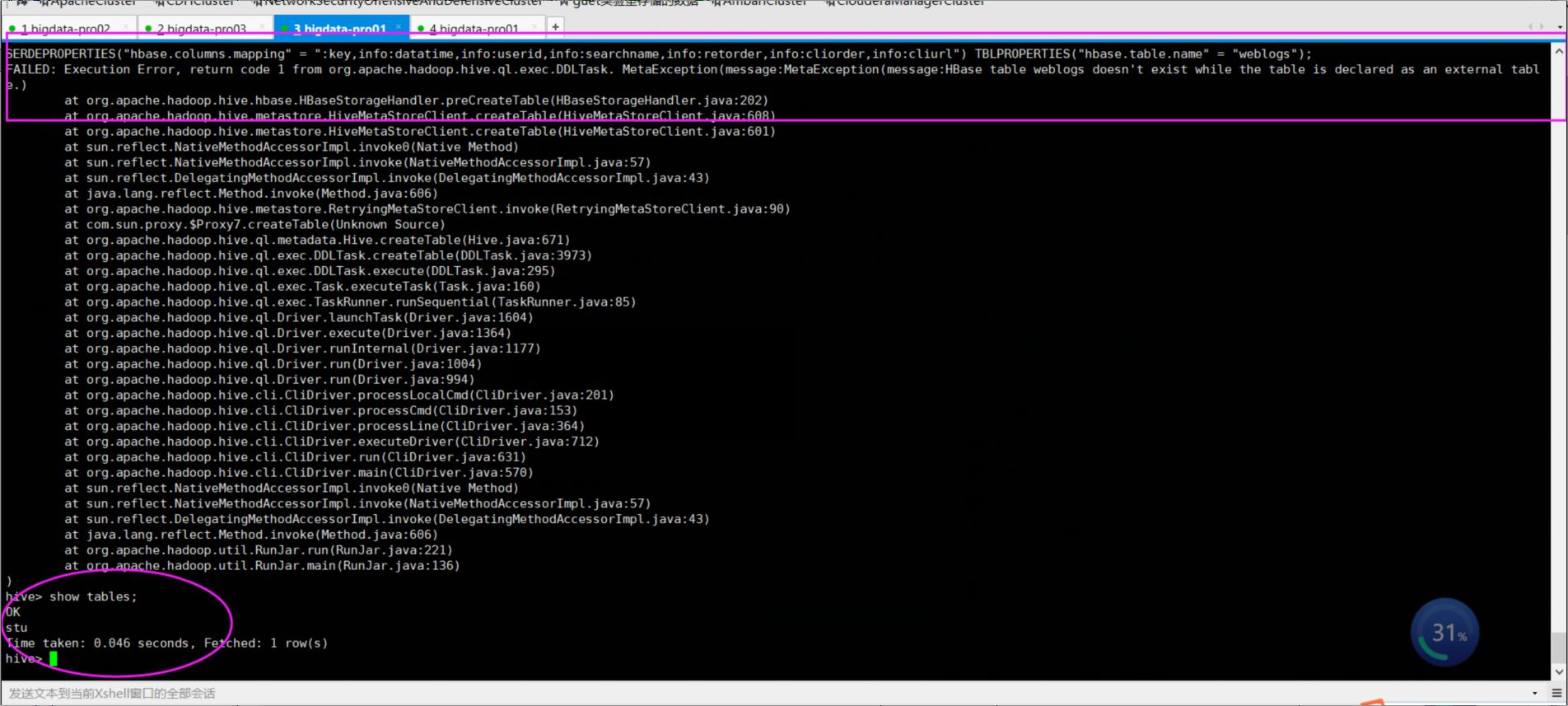

    [kfk@bigdata-pro01 apache-hive-1.0.0-bin]$ bin/hive
    Logging initialized using configuration in file:/opt/modules/apache-hive-1.0.0-bin/conf/hive-log4j.properties
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/modules/apache-hive-1.0.0-bin/lib/hive-jdbc-1.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    hive> create external table weblogs(id string,datatime string,userid string,searchname string,retorder string,cliorder string,cliurl string) STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' WITH SERDEPROPERTIES("hbase.columns.mapping" = ":key,info:datatime,info:userid,info:searchname,info:retorder,info:cliorder,info:cliurl") TBLPROPERTIES("hbase.table.name" = "weblogs");
    FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:MetaException(message:HBase table weblogs doesn't exist while the table is declared as an external table.)
        at org.apache.hadoop.hive.hbase.HBaseStorageHandler.preCreateTable(HBaseStorageHandler.java:202)
        at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:608)
        at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:601)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:90)
        at com.sun.proxy.$Proxy7.createTable(Unknown Source)
        at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:671)
        at org.apache.hadoop.hive.ql.exec.DDLTask.createTable(DDLTask.java:3973)
        at org.apache.hadoop.hive.ql.exec.DDLTask.execute(DDLTask.java:295)
        at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:160)
        at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:85)
        at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:1604)
        at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1364)
        at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1177)
        at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1004)
        at org.apache.hadoop.hive.ql.Driver.run(Driver.java:994)
        at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:201)
        at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:153)
        at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:364)
        at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:712)
        at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:631)
        at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:570)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
        at org.apache.hadoop.util.RunJar.main(RunJar.java:136)
    )

This means the table was not created.
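Read literally, the message says HBaseStorageHandler could not find an HBase table named weblogs. For an EXTERNAL table, Hive does not create the HBase table itself. Before chasing classpath issues, it is therefore worth confirming in the HBase shell (with list) that the table really exists. Dropping EXTERNAL instead makes the table a managed one, which Hive then creates in HBase itself.

The helper below is a minimal sketch that reads the hbase.columns.mapping string used above. It extracts the column families and prints the matching HBase shell create statement. Both function names are hypothetical, and the sketch only understands ":key" and "family:qualifier" entries.

```shell
#!/bin/sh
# List the distinct column families named in an hbase.columns.mapping value.
# Handles ":key" and "family:qualifier" entries only (no :timestamp, no #b suffixes).
families_from_mapping() {
  printf '%s\n' "$1" | tr ',' '\n' | grep -v '^:key$' | cut -d: -f1 | awk '!seen[$0]++'
}

# Print the HBase shell statement that creates table $1 with the families in mapping $2.
hbase_create_stmt() {
  families_from_mapping "$2" | {
    stmt="create '$1'"
    while IFS= read -r fam; do
      [ -n "$fam" ] && stmt="$stmt, '$fam'"
    done
    printf '%s\n' "$stmt"
  }
}
```

For this article's mapping, hbase_create_stmt weblogs ":key,info:datatime,..." prints create 'weblogs', 'info'. You can paste that into hbase shell, or pipe it in with echo.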

Solution when Hive and HBase are both CDH builds

First, restart Hive with debug logging sent to the console:

    ./hive -hiveconf hive.root.logger=DEBUG,console
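The DEBUG console output is very long. A small filter helps surface the lines that usually name the root cause. This is a minimal sketch: the pattern list is my own choice, and filter_debug_log is a hypothetical helper, not part of Hive.

```shell
#!/bin/sh
# Read a Hive DEBUG console log on stdin and keep only the lines that usually
# point at the root cause: exceptions, errors and "Caused by" chains.
# "Error" also catches java.lang.NoClassDefFoundError, a typical missing-jar symptom.
filter_debug_log() {
  grep -E 'Exception|Error|ERROR|Caused by'
}
```

One way to use it is to put the CREATE TABLE statement in a file. Call it create_weblogs.sql here; the name is hypothetical. Then run bin/hive -hiveconf hive.root.logger=DEBUG,console -f create_weblogs.sql > hive-debug.log 2>&1 and read the result with filter_debug_log < hive-debug.log.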

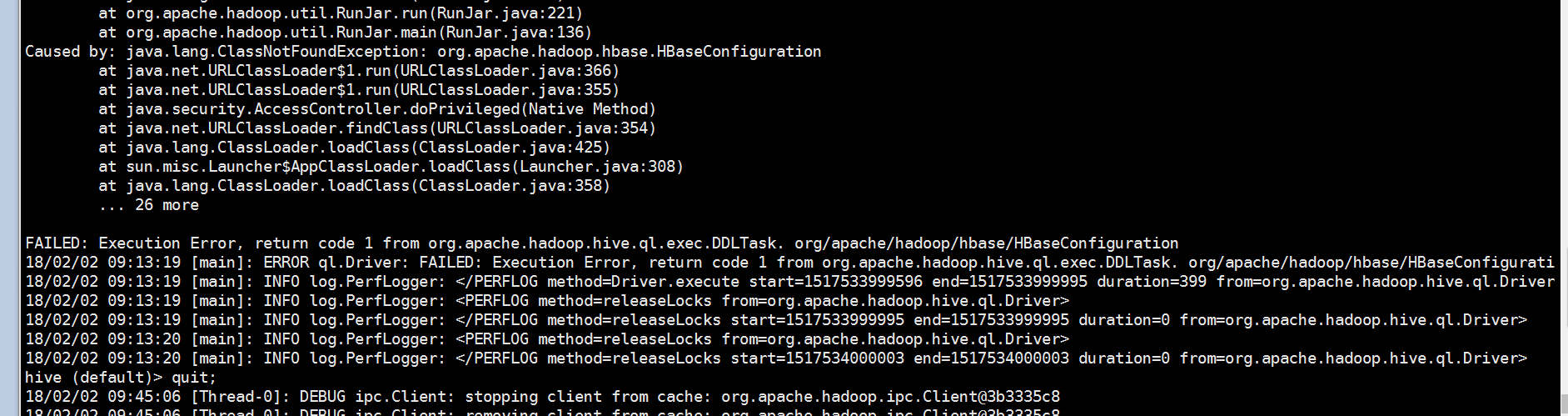

The debug output shows the cause of the error:

Hive fails when calling into the HBase jars, and the configuration file cannot be loaded.

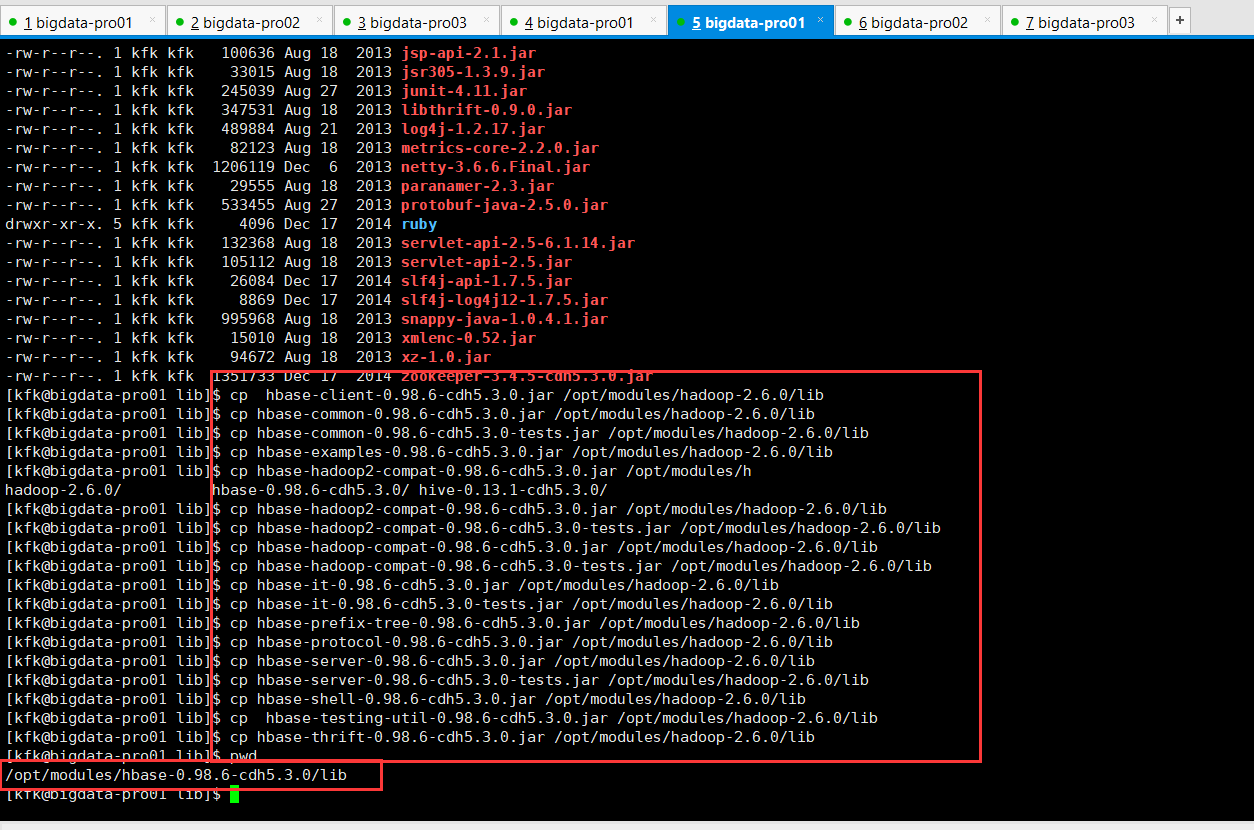

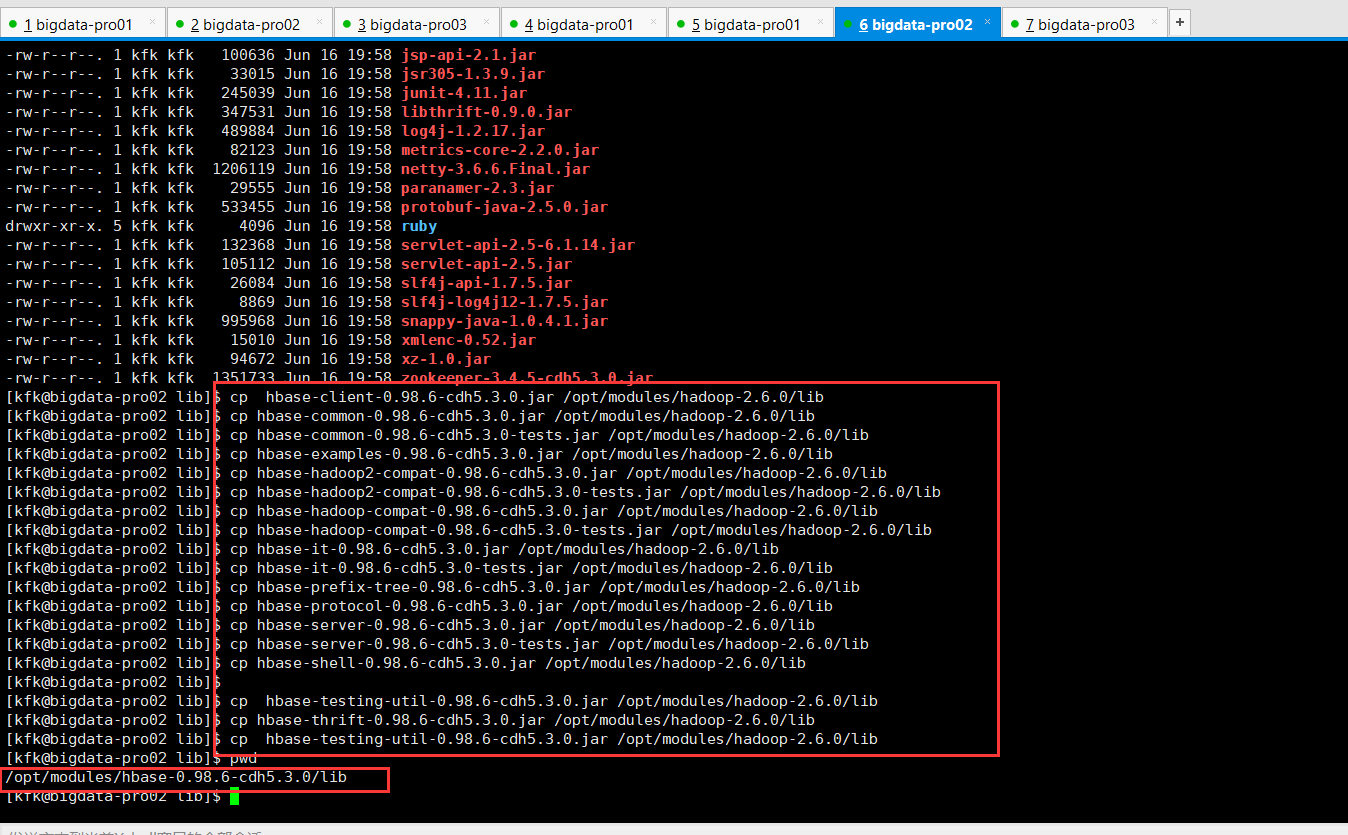

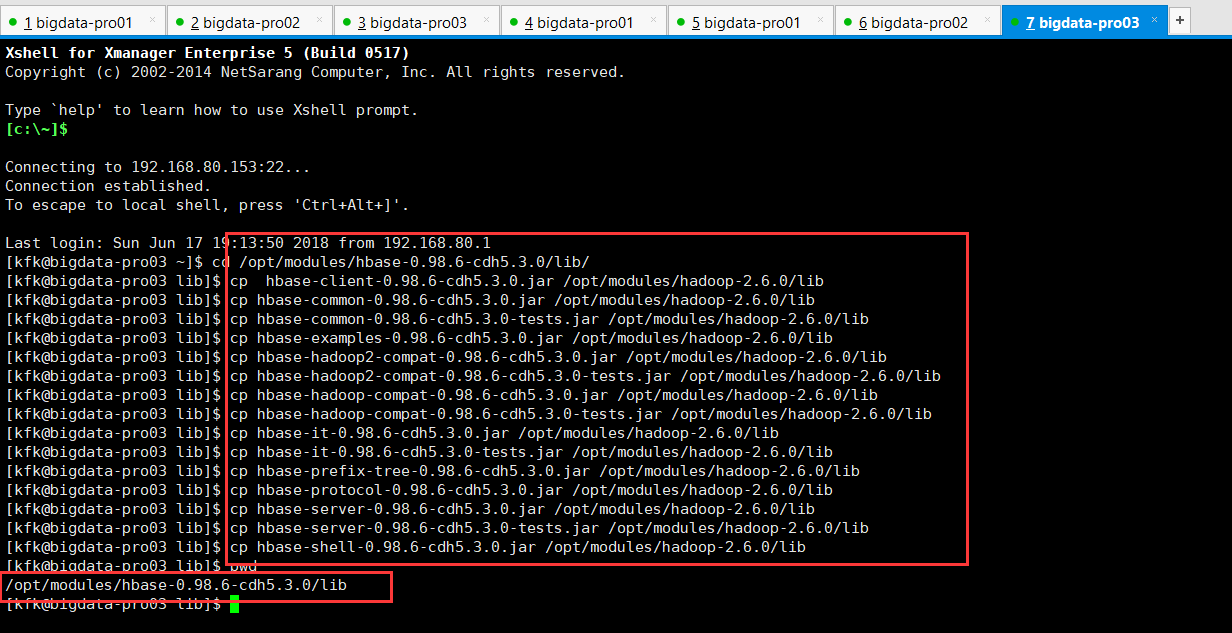

Copy the jars from HBase's lib directory into hadoop/lib. (Note: to be on the safe side, I did this on all 3 nodes.)

    [kfk@bigdata-pro01 lib]$ cp hbase-client-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-common-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-common-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-examples-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-hadoop2-compat-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-hadoop2-compat-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-hadoop-compat-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-hadoop-compat-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-it-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-it-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-prefix-tree-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-protocol-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-server-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-server-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-shell-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-testing-util-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ cp hbase-thrift-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro01 lib]$ pwd
    /opt/modules/hbase-0.98.6-cdh5.3.0/lib
    [kfk@bigdata-pro01 lib]$

    [kfk@bigdata-pro02 lib]$ cp hbase-client-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-common-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-common-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-examples-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-hadoop2-compat-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-hadoop2-compat-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-hadoop-compat-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-hadoop-compat-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-it-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-it-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-prefix-tree-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-protocol-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-server-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-server-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-shell-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-testing-util-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ cp hbase-thrift-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro02 lib]$ pwd
    /opt/modules/hbase-0.98.6-cdh5.3.0/lib
    [kfk@bigdata-pro02 lib]$

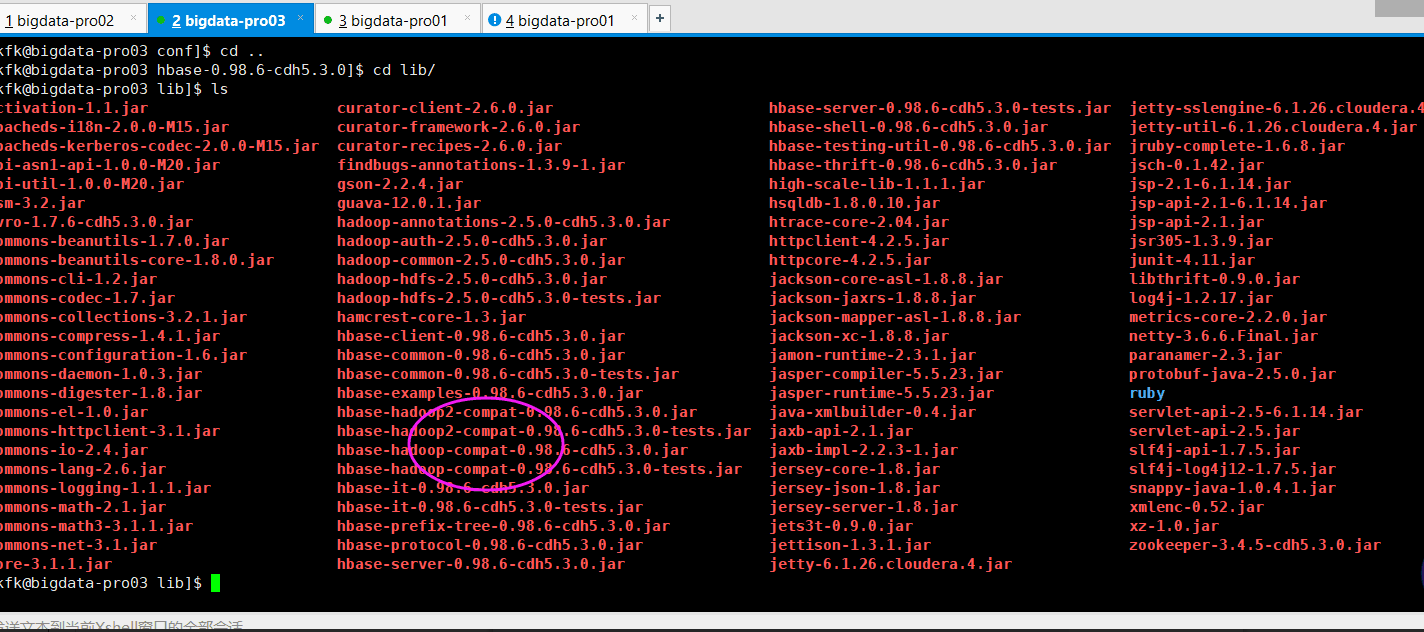

    [kfk@bigdata-pro03 ~]$ cd /opt/modules/hbase-0.98.6-cdh5.3.0/lib/
    [kfk@bigdata-pro03 lib]$ cp hbase-client-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-common-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-common-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-examples-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-hadoop2-compat-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-hadoop2-compat-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-hadoop-compat-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-hadoop-compat-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-it-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-it-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-prefix-tree-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-protocol-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-server-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-server-0.98.6-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ cp hbase-shell-0.98.6-cdh5.3.0.jar /opt/modules/hadoop-2.6.0/lib
    [kfk@bigdata-pro03 lib]$ pwd
    /opt/modules/hbase-0.98.6-cdh5.3.0/lib
    [kfk@bigdata-pro03 lib]$

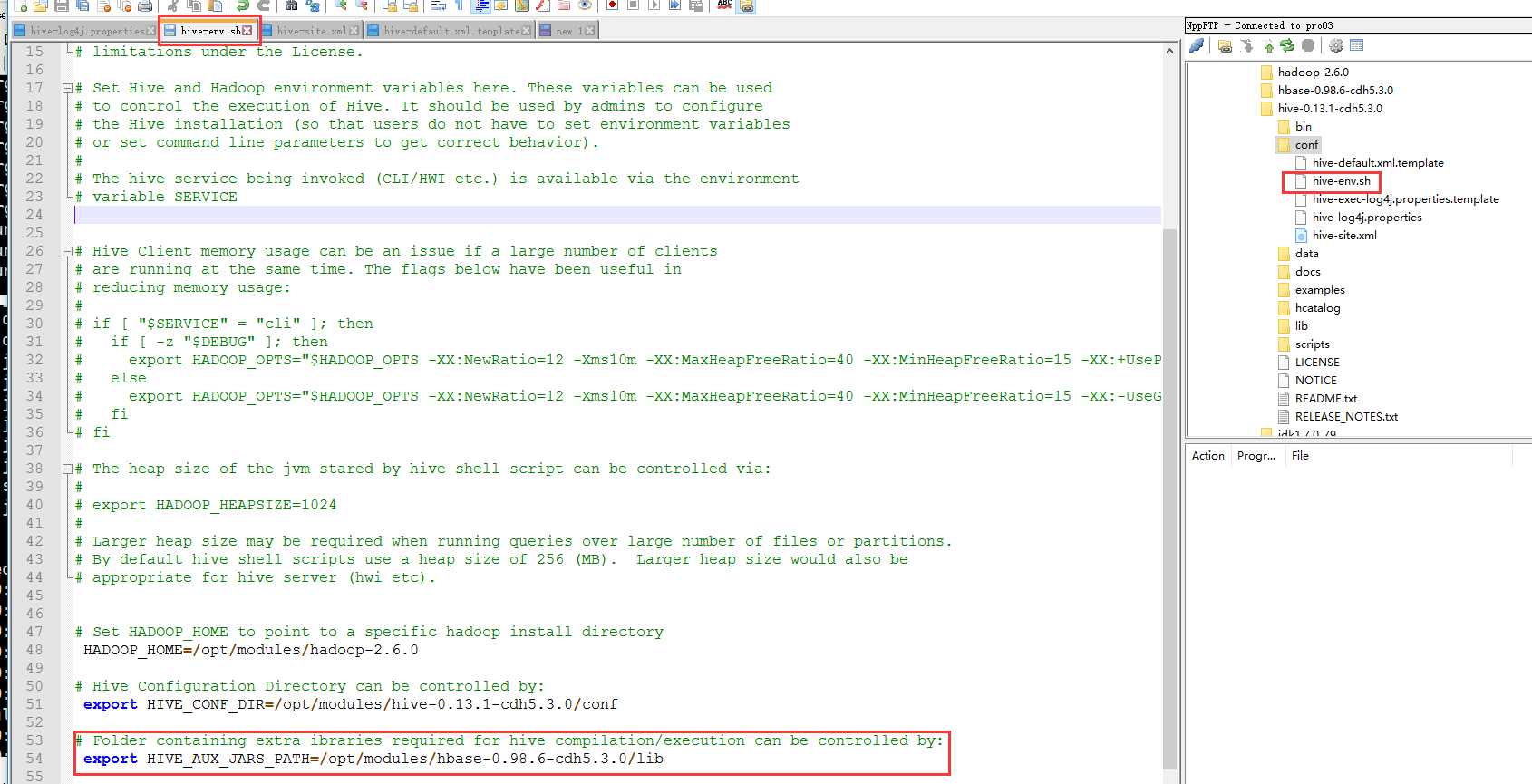
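The per-jar cp commands above can be collapsed into one loop. This is a minimal sketch that assumes the directory layout used in this article. copy_hbase_jars is a hypothetical helper. Note that the glob hbase-*.jar picks up every HBase jar in the lib directory, which may be a few more jars than the hand-picked list above.

```shell
#!/bin/sh
# Copy every hbase-*.jar from HBase's lib directory into Hadoop's lib directory.
# $1 = source dir, $2 = destination dir (defaults follow this article's layout).
copy_hbase_jars() {
  src="${1:-/opt/modules/hbase-0.98.6-cdh5.3.0/lib}"
  dst="${2:-/opt/modules/hadoop-2.6.0/lib}"
  count=0
  for jar in "$src"/hbase-*.jar; do
    [ -e "$jar" ] || continue   # the glob matched nothing
    cp "$jar" "$dst"/ || return 1
    count=$((count + 1))
  done
  echo "copied $count jar(s) to $dst"
}
```

As with the manual commands, run it on each of the three nodes.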

Next, edit Hive's configuration file so that the HBase lib jars take effect:

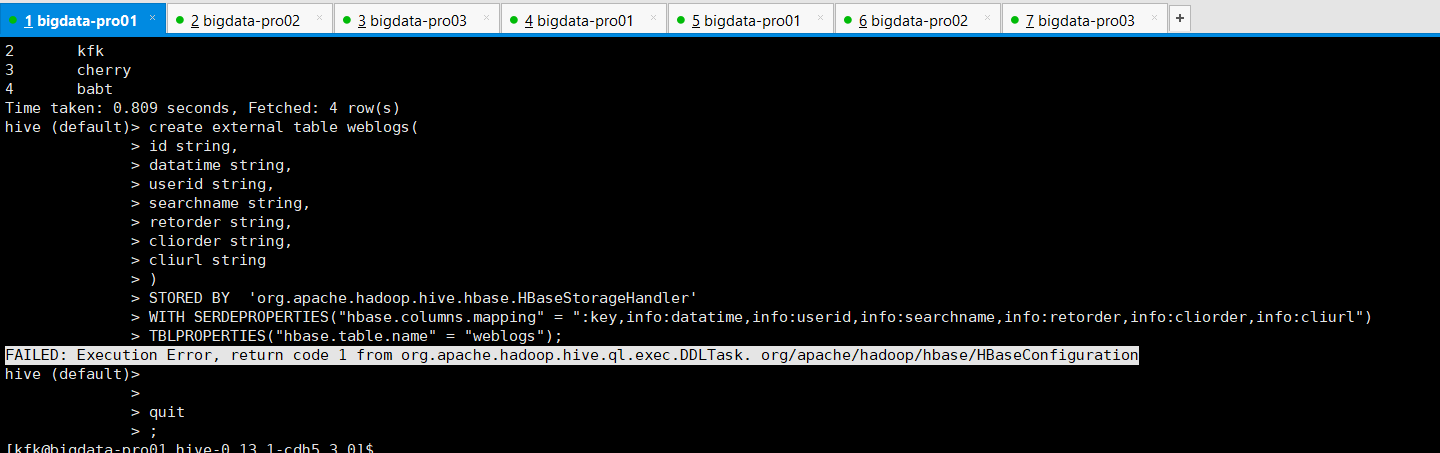

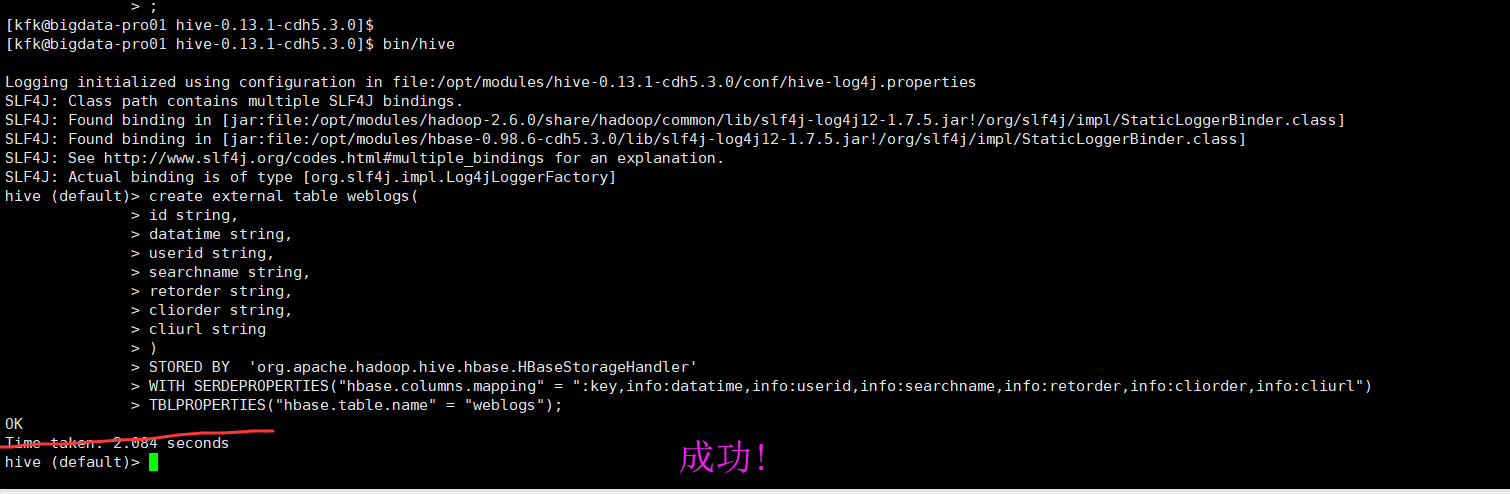
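The screenshots above are not reproduced in text. One common way to make Hive pick up the HBase jars is the hive.aux.jars.path property in hive-site.xml, or HIVE_AUX_JARS_PATH in hive-env.sh. That is an assumption here, not necessarily what the screenshots show.

The sketch below builds a comma-separated list of file:// URIs for that property from the HBase lib directory and skips the test jars. Both function names are hypothetical.

```shell
#!/bin/sh
# Join the non-test hbase-*.jar files in a directory into a comma-separated
# list of file:// URIs, a form commonly used for hive.aux.jars.path.
aux_jars_path() {
  dir="${1:-/opt/modules/hbase-0.98.6-cdh5.3.0/lib}"
  out=""
  for jar in "$dir"/hbase-*.jar; do
    [ -e "$jar" ] || continue
    case "$jar" in *-tests.jar) continue ;; esac   # skip test jars
    out="${out:+$out,}file://$jar"
  done
  printf '%s\n' "$out"
}

# Print a hive-site.xml <property> block holding that list.
aux_jars_property() {
  printf '<property>\n  <name>hive.aux.jars.path</name>\n  <value>%s</value>\n</property>\n' \
    "$(aux_jars_path "$1")"
}
```

The printed property would go inside the configuration element of hive-site.xml, followed by a Hive restart.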

Restart Hive and create the table again:

    [kfk@bigdata-pro01 hive-0.13.1-cdh5.3.0]$ bin/hive
    Logging initialized using configuration in file:/opt/modules/hive-0.13.1-cdh5.3.0/conf/hive-log4j.properties
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/modules/hbase-0.98.6-cdh5.3.0/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    hive (default)> create external table weblogs(
                  > id string,
                  > datatime string,
                  > userid string,
                  > searchname string,
                  > retorder string,
                  > cliorder string,
                  > cliurl string
                  > )
                  > STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'
                  > WITH SERDEPROPERTIES("hbase.columns.mapping" = ":key,info:datatime,info:userid,info:searchname,info:retorder,info:cliorder,info:cliurl")
                  > TBLPROPERTIES("hbase.table.name" = "weblogs");
    OK
    Time taken: 2.084 seconds
    hive (default)>

Solution when Hive and HBase are not both CDH builds

(2) Try restarting the HBase service.

(3) Don't only check Hive; check your HBase processes too.

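Work through clock synchronization, the firewall, whether MySQL is running, whether hive-site.xml is configured correctly, and whether privileges are granted correctly.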
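For item (3), running jps on every node shows whether the HBase daemons are actually up. This is a minimal sketch. The daemon list is an assumption for a node that runs ZooKeeper, HMaster and HRegionServer, so adjust it to each node's role. missing_daemons is a hypothetical helper.

```shell
#!/bin/sh
# Read `jps` output ("<pid> <MainClass>" per line) on stdin and print each
# expected daemon that is not in it. Pass the expected daemon names as arguments.
missing_daemons() {
  running=$(awk '{print $2}')
  for d in "$@"; do
    printf '%s\n' "$running" | grep -qx "$d" || echo "$d"
  done
}
```

For example, jps | missing_daemons QuorumPeerMain HMaster HRegionServer prints nothing when all three are running. A missing HRegionServer matches the two problems listed next. In that case, restarting HBase with $HBASE_HOME/bin/stop-hbase.sh and start-hbase.sh is item (2).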

The "java.lang.RuntimeException: HRegionServer Aborted" problem

The HRegionServer on one node disappearing on its own after HBase starts on a distributed cluster

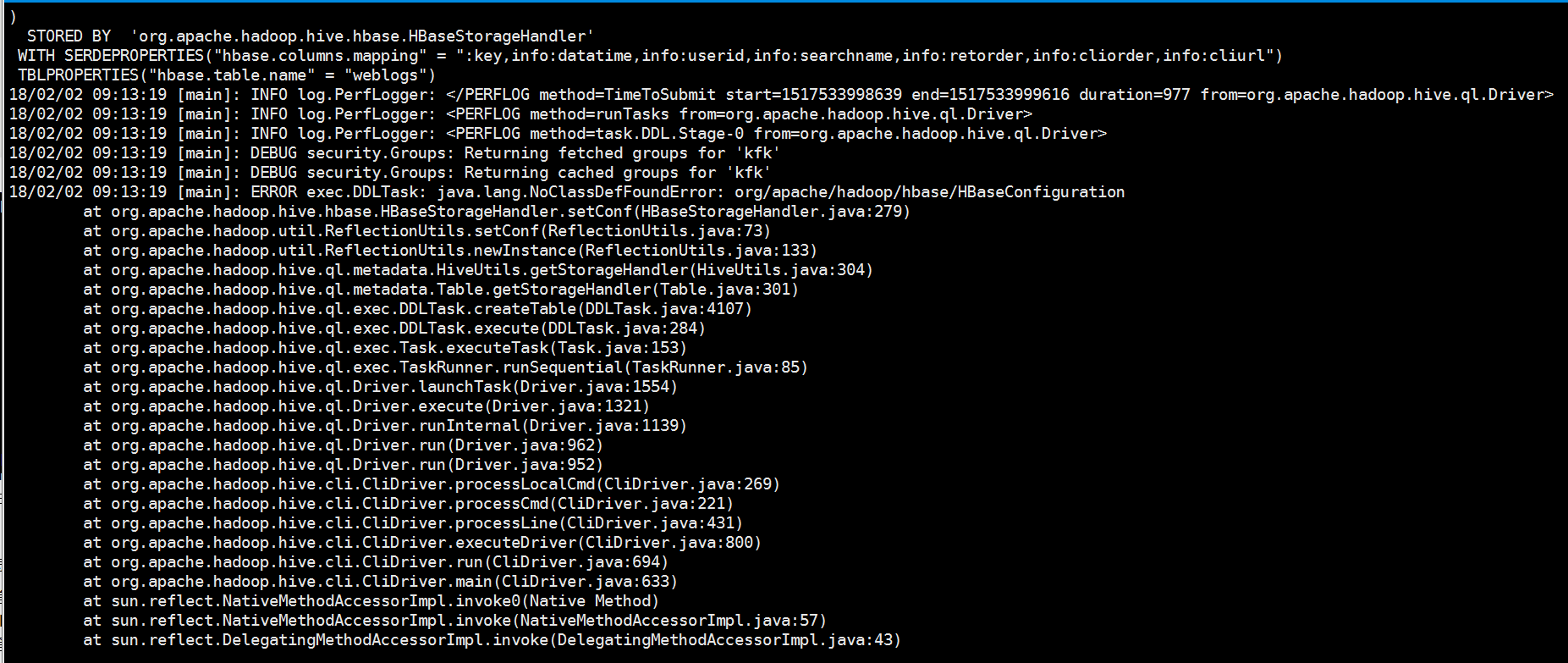

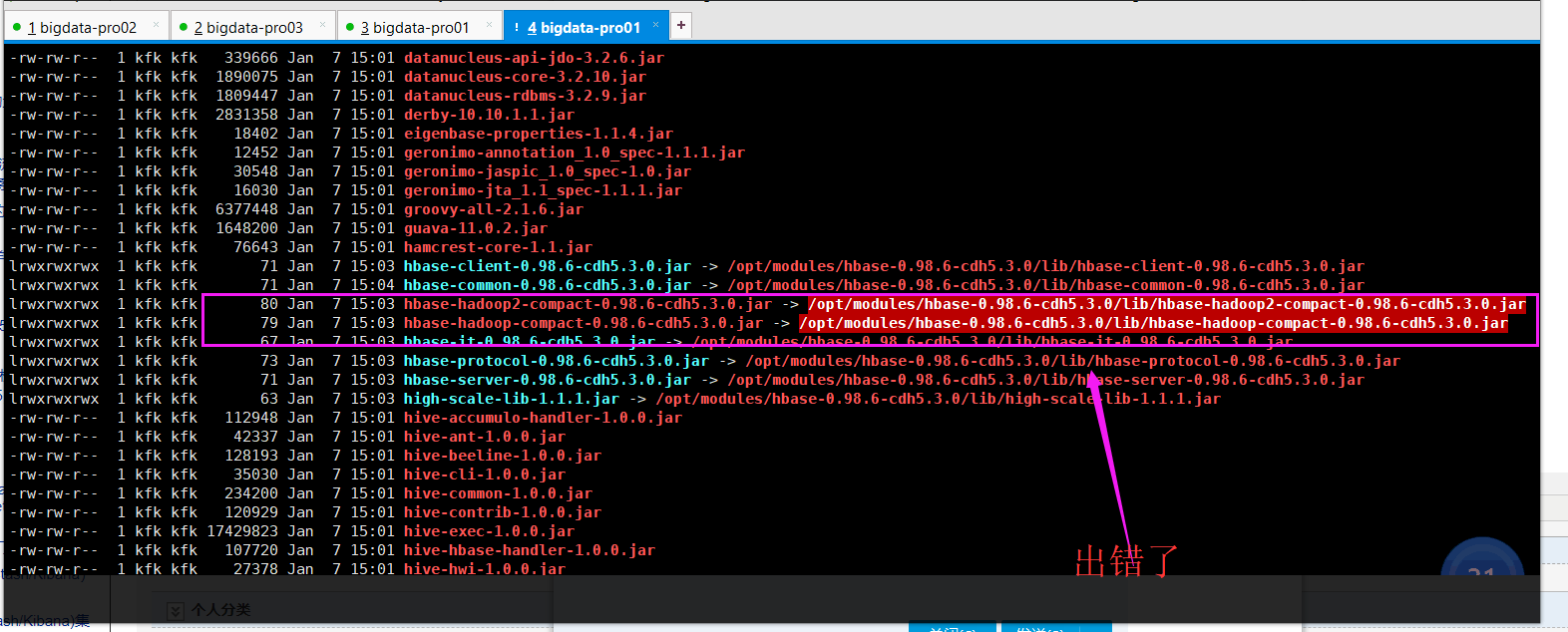

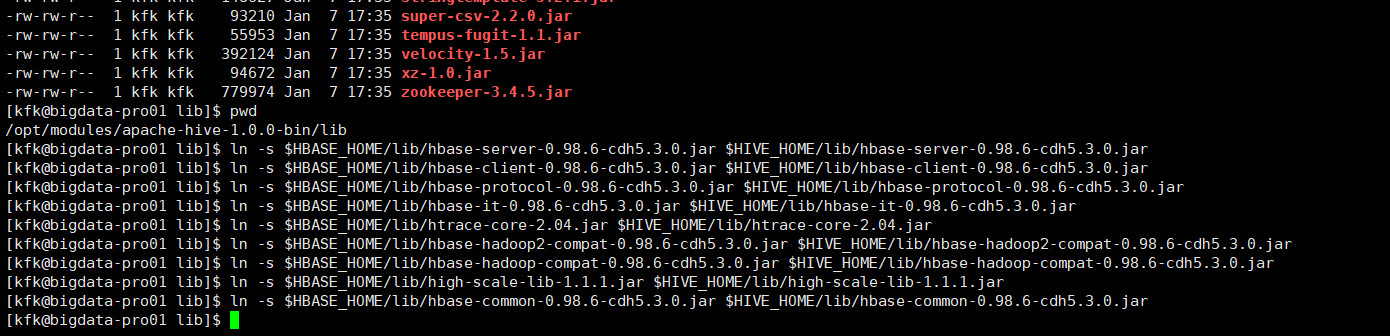

(4) Jars are missing: the Hive and HBase integration step went wrong.

Official documentation: https://cwiki.apache.org/confluence/display/Hive/HBaseIntegration

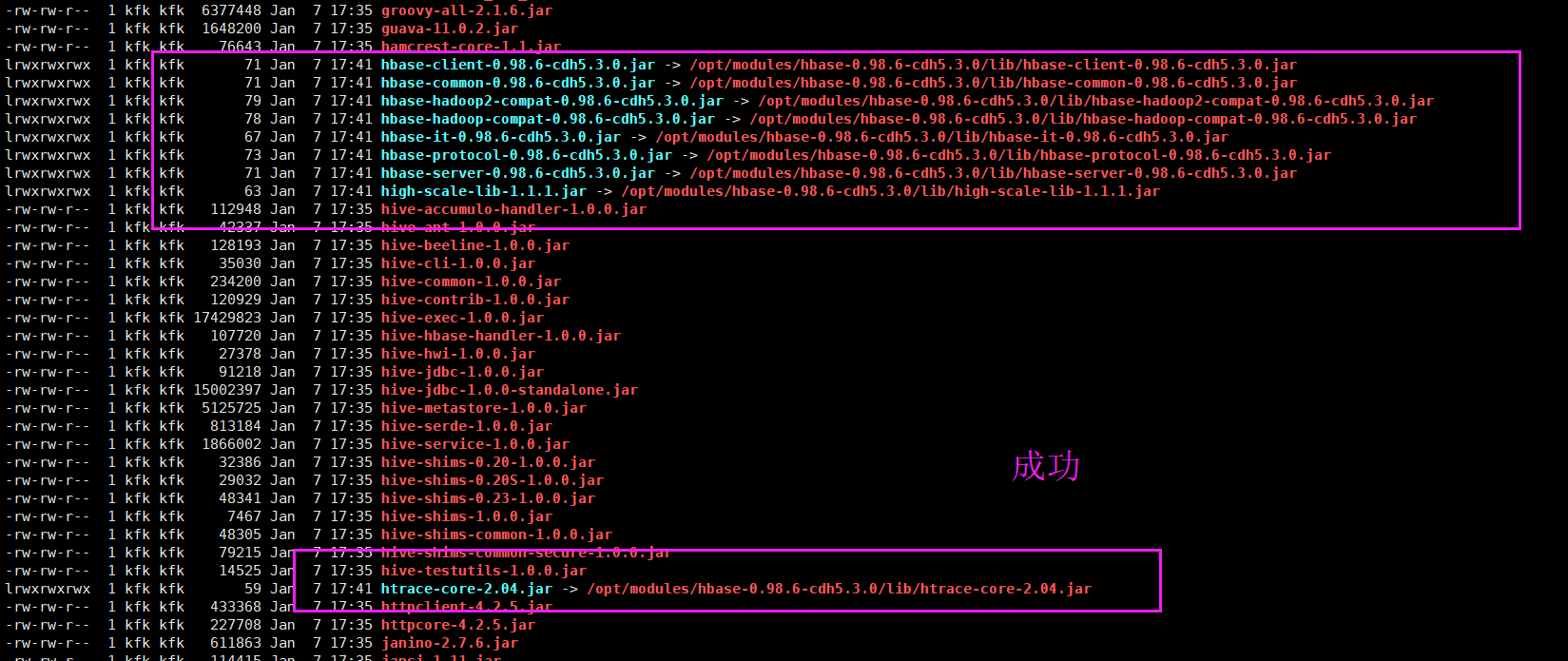

    export HBASE_HOME=/opt/modules/hbase-0.98.6-cdh5.3.0
    export HIVE_HOME=/opt/modules/apache-hive-1.0.0-bin
    ln -s $HBASE_HOME/lib/hbase-server-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-server-0.98.6-cdh5.3.0.jar
    ln -s $HBASE_HOME/lib/hbase-client-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-client-0.98.6-cdh5.3.0.jar
    ln -s $HBASE_HOME/lib/hbase-protocol-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-protocol-0.98.6-cdh5.3.0.jar
    ln -s $HBASE_HOME/lib/hbase-it-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-it-0.98.6-cdh5.3.0.jar
    ln -s $HBASE_HOME/lib/htrace-core-2.04.jar $HIVE_HOME/lib/htrace-core-2.04.jar
    ln -s $HBASE_HOME/lib/hbase-hadoop2-compat-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-hadoop2-compat-0.98.6-cdh5.3.0.jar
    ln -s $HBASE_HOME/lib/hbase-hadoop-compat-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-hadoop-compat-0.98.6-cdh5.3.0.jar
    ln -s $HBASE_HOME/lib/high-scale-lib-1.1.1.jar $HIVE_HOME/lib/high-scale-lib-1.1.1.jar
    ln -s $HBASE_HOME/lib/hbase-common-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-common-0.98.6-cdh5.3.0.jar

    [kfk@bigdata-pro01 lib]$ ll
    total 82368
    -rw-rw-r-- 1 kfk kfk  4368200 Jan 7 17:35 accumulo-core-1.6.0.jar
    -rw-rw-r-- 1 kfk kfk   102069 Jan 7 17:35 accumulo-fate-1.6.0.jar
    -rw-rw-r-- 1 kfk kfk    57420 Jan 7 17:35 accumulo-start-1.6.0.jar
    -rw-rw-r-- 1 kfk kfk   117409 Jan 7 17:35 accumulo-trace-1.6.0.jar
    -rw-rw-r-- 1 kfk kfk    62983 Jan 7 17:35 activation-1.1.jar
    -rw-rw-r-- 1 kfk kfk  1997485 Jan 7 17:35 ant-1.9.1.jar
    -rw-rw-r-- 1 kfk kfk    18336 Jan 7 17:35 ant-launcher-1.9.1.jar
    -rw-rw-r-- 1 kfk kfk   445288 Jan 7 17:35 antlr-2.7.7.jar
    -rw-rw-r-- 1 kfk kfk   164368 Jan 7 17:35 antlr-runtime-3.4.jar
    -rw-rw-r-- 1 kfk kfk    32693 Jan 7 17:35 asm-commons-3.1.jar
    -rw-rw-r-- 1 kfk kfk    21879 Jan 7 17:35 asm-tree-3.1.jar
    -rw-rw-r-- 1 kfk kfk   400680 Jan 7 17:35 avro-1.7.5.jar
    -rw-rw-r-- 1 kfk kfk   110600 Jan 7 17:35 bonecp-0.8.0.RELEASE.jar
    -rw-rw-r-- 1 kfk kfk    89044 Jan 7 17:35 calcite-avatica-0.9.2-incubating.jar
    -rw-rw-r-- 1 kfk kfk  3156089 Jan 7 17:35 calcite-core-0.9.2-incubating.jar
    -rw-rw-r-- 1 kfk kfk   188671 Jan 7 17:35 commons-beanutils-1.7.0.jar
    -rw-rw-r-- 1 kfk kfk   206035 Jan 7 17:35 commons-beanutils-core-1.8.0.jar
    -rw-rw-r-- 1 kfk kfk    41123 Jan 7 17:35 commons-cli-1.2.jar
    -rw-rw-r-- 1 kfk kfk    58160 Jan 7 17:35 commons-codec-1.4.jar
    -rw-rw-r-- 1 kfk kfk   575389 Jan 7 17:35 commons-collections-3.2.1.jar
    -rw-rw-r-- 1 kfk kfk    30595 Jan 7 17:35 commons-compiler-2.7.6.jar
    -rw-rw-r-- 1 kfk kfk   241367 Jan 7 17:35 commons-compress-1.4.1.jar
    -rw-rw-r-- 1 kfk kfk   298829 Jan 7 17:35 commons-configuration-1.6.jar
    -rw-rw-r-- 1 kfk kfk   160519 Jan 7 17:35 commons-dbcp-1.4.jar
    -rw-rw-r-- 1 kfk kfk   143602 Jan 7 17:35 commons-digester-1.8.jar
    -rw-rw-r-- 1 kfk kfk   279781 Jan 7 17:35 commons-httpclient-3.0.1.jar
    -rw-rw-r-- 1 kfk kfk   185140 Jan 7 17:35 commons-io-2.4.jar
    -rw-rw-r-- 1 kfk kfk   284220 Jan 7 17:35 commons-lang-2.6.jar
    -rw-rw-r-- 1 kfk kfk    62050 Jan 7 17:35 commons-logging-1.1.3.jar
    -rw-rw-r-- 1 kfk kfk   832410 Jan 7 17:35 commons-math-2.1.jar
    -rw-rw-r-- 1 kfk kfk    96221 Jan 7 17:35 commons-pool-1.5.4.jar
    -rw-rw-r-- 1 kfk kfk   415578 Jan 7 17:35 commons-vfs2-2.0.jar
    -rw-rw-r-- 1 kfk kfk    68866 Jan 7 17:35 curator-client-2.6.0.jar
    -rw-rw-r-- 1 kfk kfk   185245 Jan 7 17:35 curator-framework-2.6.0.jar
    -rw-rw-r-- 1 kfk kfk   339666 Jan 7 17:35 datanucleus-api-jdo-3.2.6.jar
    -rw-rw-r-- 1 kfk kfk  1890075 Jan 7 17:35 datanucleus-core-3.2.10.jar
    -rw-rw-r-- 1 kfk kfk  1809447 Jan 7 17:35 datanucleus-rdbms-3.2.9.jar
    -rw-rw-r-- 1 kfk kfk  2831358 Jan 7 17:35 derby-10.10.1.1.jar
    -rw-rw-r-- 1 kfk kfk    18402 Jan 7 17:35 eigenbase-properties-1.1.4.jar
    -rw-rw-r-- 1 kfk kfk    12452 Jan 7 17:35 geronimo-annotation_1.0_spec-1.1.1.jar
    -rw-rw-r-- 1 kfk kfk    30548 Jan 7 17:35 geronimo-jaspic_1.0_spec-1.0.jar
    -rw-rw-r-- 1 kfk kfk    16030 Jan 7 17:35 geronimo-jta_1.1_spec-1.1.1.jar
    -rw-rw-r-- 1 kfk kfk  6377448 Jan 7 17:35 groovy-all-2.1.6.jar
    -rw-rw-r-- 1 kfk kfk  1648200 Jan 7 17:35 guava-11.0.2.jar
    -rw-rw-r-- 1 kfk kfk    76643 Jan 7 17:35 hamcrest-core-1.1.jar
    lrwxrwxrwx 1 kfk kfk       71 Jan 7 17:41 hbase-client-0.98.6-cdh5.3.0.jar -> /opt/modules/hbase-0.98.6-cdh5.3.0/lib/hbase-client-0.98.6-cdh5.3.0.jar
    lrwxrwxrwx 1 kfk kfk       71 Jan 7 17:41 hbase-common-0.98.6-cdh5.3.0.jar -> /opt/modules/hbase-0.98.6-cdh5.3.0/lib/hbase-common-0.98.6-cdh5.3.0.jar
    lrwxrwxrwx 1 kfk kfk       79 Jan 7 17:41 hbase-hadoop2-compat-0.98.6-cdh5.3.0.jar -> /opt/modules/hbase-0.98.6-cdh5.3.0/lib/hbase-hadoop2-compat-0.98.6-cdh5.3.0.jar
    lrwxrwxrwx 1 kfk kfk       78 Jan 7 17:41 hbase-hadoop-compat-0.98.6-cdh5.3.0.jar -> /opt/modules/hbase-0.98.6-cdh5.3.0/lib/hbase-hadoop-compat-0.98.6-cdh5.3.0.jar
    lrwxrwxrwx 1 kfk kfk       67 Jan 7 17:41 hbase-it-0.98.6-cdh5.3.0.jar -> /opt/modules/hbase-0.98.6-cdh5.3.0/lib/hbase-it-0.98.6-cdh5.3.0.jar
    lrwxrwxrwx 1 kfk kfk       73 Jan 7 17:41 hbase-protocol-0.98.6-cdh5.3.0.jar -> /opt/modules/hbase-0.98.6-cdh5.3.0/lib/hbase-protocol-0.98.6-cdh5.3.0.jar
    lrwxrwxrwx 1 kfk kfk       71 Jan 7 17:41 hbase-server-0.98.6-cdh5.3.0.jar -> /opt/modules/hbase-0.98.6-cdh5.3.0/lib/hbase-server-0.98.6-cdh5.3.0.jar
    lrwxrwxrwx 1 kfk kfk       63 Jan 7 17:41 high-scale-lib-1.1.1.jar -> /opt/modules/hbase-0.98.6-cdh5.3.0/lib/high-scale-lib-1.1.1.jar
    -rw-rw-r-- 1 kfk kfk   112948 Jan 7 17:35 hive-accumulo-handler-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk    42337 Jan 7 17:35 hive-ant-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk   128193 Jan 7 17:35 hive-beeline-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk    35030 Jan 7 17:35 hive-cli-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk   234200 Jan 7 17:35 hive-common-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk   120929 Jan 7 17:35 hive-contrib-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk 17429823 Jan 7 17:35 hive-exec-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk   107720 Jan 7 17:35 hive-hbase-handler-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk    27378 Jan 7 17:35 hive-hwi-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk    91218 Jan 7 17:35 hive-jdbc-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk 15002397 Jan 7 17:35 hive-jdbc-1.0.0-standalone.jar
    -rw-rw-r-- 1 kfk kfk  5125725 Jan 7 17:35 hive-metastore-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk   813184 Jan 7 17:35 hive-serde-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk  1866002 Jan 7 17:35 hive-service-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk    32386 Jan 7 17:35 hive-shims-0.20-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk    29032 Jan 7 17:35 hive-shims-0.20S-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk    48341 Jan 7 17:35 hive-shims-0.23-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk     7467 Jan 7 17:35 hive-shims-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk    48305 Jan 7 17:35 hive-shims-common-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk    79215 Jan 7 17:35 hive-shims-common-secure-1.0.0.jar
    -rw-rw-r-- 1 kfk kfk    14525 Jan 7 17:35 hive-testutils-1.0.0.jar
    lrwxrwxrwx 1 kfk kfk       59 Jan 7 17:41 htrace-core-2.04.jar -> /opt/modules/hbase-0.98.6-cdh5.3.0/lib/htrace-core-2.04.jar
    -rw-rw-r-- 1 kfk kfk   433368 Jan 7 17:35 httpclient-4.2.5.jar
    -rw-rw-r-- 1 kfk kfk   227708 Jan 7 17:35 httpcore-4.2.5.jar
    -rw-rw-r-- 1 kfk kfk   611863 Jan 7 17:35 janino-2.7.6.jar
    -rw-rw-r-- 1 kfk kfk   114415 Jan 7 17:35 jansi-1.11.jar
    -rw-rw-r-- 1 kfk kfk    60527 Jan 7 17:35 jcommander-1.32.jar
    -rw-rw-r-- 1 kfk kfk   201124 Jan 7 17:35 jdo-api-3.0.1.jar
    -rw-rw-r-- 1 kfk kfk  1681148 Jan 7 17:35 jetty-all-7.6.0.v20120127.jar
    -rw-rw-r-- 1 kfk kfk  1683027 Jan 7 17:35 jetty-all-server-7.6.0.v20120127.jar
    -rw-rw-r-- 1 kfk kfk    87325 Jan 7 17:35 jline-0.9.94.jar
    -rw-rw-r-- 1 kfk kfk    12131 Jan 7 17:35 jpam-1.1.jar
    -rw-rw-r-- 1 kfk kfk    33015 Jan 7 17:35 jsr305-1.3.9.jar
    -rw-rw-r-- 1 kfk kfk    15071 Jan 7 17:35 jta-1.1.jar
    -rw-rw-r-- 1 kfk kfk   245039 Jan 7 17:35 junit-4.11.jar
    -rw-rw-r-- 1 kfk kfk   275186 Jan 7 17:35 libfb303-0.9.0.jar
    -rw-rw-r-- 1 kfk kfk   347531 Jan 7 17:35 libthrift-0.9.0.jar
    -rw-rw-r-- 1 kfk kfk   489213 Jan 7 17:35 linq4j-0.4.jar
    -rw-rw-r-- 1 kfk kfk   481535 Jan 7 17:35 log4j-1.2.16.jar
    -rw-rw-r-- 1 kfk kfk   447676 Jan 7 17:35 mail-1.4.1.jar
    -rw-rw-r-- 1 kfk kfk    94421 Jan 7 17:35 maven-scm-api-1.4.jar
    -rw-rw-r-- 1 kfk kfk    40066 Jan 7 17:35 maven-scm-provider-svn-commons-1.4.jar
    -rw-rw-r-- 1 kfk kfk    69858 Jan 7 17:35 maven-scm-provider-svnexe-1.4.jar
    -rw-rw-r-- 1 kfk kfk   827942 Jan 7 17:36 mysql-connector-java-5.1.21.jar
    -rw-rw-r-- 1 kfk kfk    19827 Jan 7 17:35 opencsv-2.3.jar
    -rw-rw-r-- 1 kfk kfk    65261 Jan 7 17:35 oro-2.0.8.jar
    -rw-rw-r-- 1 kfk kfk    29555 Jan 7 17:35 paranamer-2.3.jar
    -rw-rw-r-- 1 kfk kfk    48528 Jan 7 17:35 pentaho-aggdesigner-algorithm-5.1.3-jhyde.jar
    drwxrwxr-x 6 kfk kfk     4096 Jan 7 17:35 php
    -rw-rw-r-- 1 kfk kfk   250546 Jan 7 17:35 plexus-utils-1.5.6.jar
    drwxrwxr-x 10 kfk kfk    4096 Jan 7 17:35 py
    -rw-rw-r-- 1 kfk kfk    37325 Jan 7 17:35 quidem-0.1.1.jar
    -rw-rw-r-- 1 kfk kfk    25429 Jan 7 17:35 regexp-1.3.jar
    -rw-rw-r-- 1 kfk kfk   105112 Jan 7 17:35 servlet-api-2.5.jar
    -rw-rw-r-- 1 kfk kfk  1251514 Jan 7 17:35 snappy-java-1.0.5.jar
    -rw-rw-r-- 1 kfk kfk   236660 Jan 7 17:35 ST4-4.0.4.jar
    -rw-rw-r-- 1 kfk kfk    26514 Jan 7 17:35 stax-api-1.0.1.jar
    -rw-rw-r-- 1 kfk kfk   148627 Jan 7 17:35 stringtemplate-3.2.1.jar
    -rw-rw-r-- 1 kfk kfk    93210 Jan 7 17:35 super-csv-2.2.0.jar
    -rw-rw-r-- 1 kfk kfk    55953 Jan 7 17:35 tempus-fugit-1.1.jar
    -rw-rw-r-- 1 kfk kfk   392124 Jan 7 17:35 velocity-1.5.jar
    -rw-rw-r-- 1 kfk kfk    94672 Jan 7 17:35 xz-1.0.jar
    -rw-rw-r-- 1 kfk kfk   779974 Jan 7 17:35 zookeeper-3.4.5.jar
    [kfk@bigdata-pro01 lib]$
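The individual ln -s commands can also be written as one loop over the same jar list. This is a minimal sketch. link_jars and the variables V and HBASE_HIVE_JARS are hypothetical names. The list holds exactly the jars linked above for HBase 0.98.6-cdh5.3.0. The function uses ln -sf so that re-running it replaces existing links instead of failing. It stops early if a jar is missing from the HBase lib directory.

```shell
#!/bin/sh
# Symlink each named jar from HBase's lib directory into Hive's lib directory,
# the same effect as the individual ln -s commands above.
# $1 = HBase lib dir, $2 = Hive lib dir, remaining args = jar file names.
link_jars() {
  hbase_lib="$1"; hive_lib="$2"; shift 2
  for name in "$@"; do
    if [ ! -e "$hbase_lib/$name" ]; then
      echo "missing: $hbase_lib/$name" >&2
      return 1
    fi
    ln -sf "$hbase_lib/$name" "$hive_lib/$name" || return 1
  done
}

# The jars linked in this article (HBase 0.98.6-cdh5.3.0).
V=0.98.6-cdh5.3.0
HBASE_HIVE_JARS="hbase-server-$V.jar hbase-client-$V.jar hbase-protocol-$V.jar
hbase-it-$V.jar htrace-core-2.04.jar hbase-hadoop2-compat-$V.jar
hbase-hadoop-compat-$V.jar high-scale-lib-1.1.1.jar hbase-common-$V.jar"

# Example (word splitting of the list is intentional):
# link_jars "$HBASE_HOME/lib" "$HIVE_HOME/lib" $HBASE_HIVE_JARS
```

Afterwards, ll in $HIVE_HOME/lib should show one lrwxrwxrwx entry per jar.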

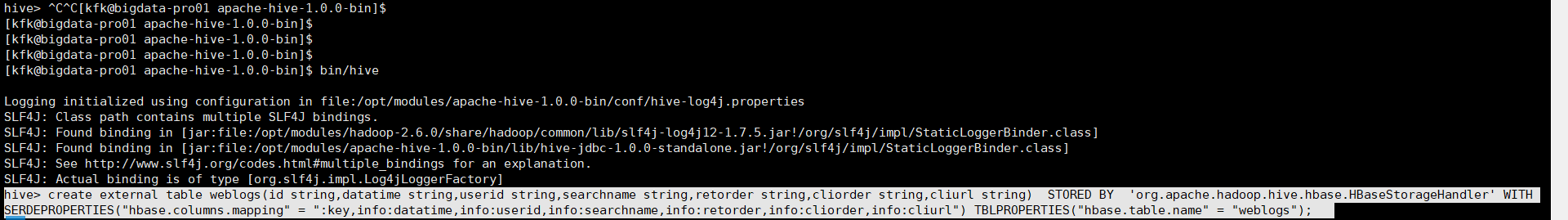

Then run the statement again:

    [kfk@bigdata-pro01 apache-hive-1.0.0-bin]$ bin/hive
    Logging initialized using configuration in file:/opt/modules/apache-hive-1.0.0-bin/conf/hive-log4j.properties
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/modules/apache-hive-1.0.0-bin/lib/hive-jdbc-1.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    hive> create external table weblogs(
        > id string,
        > datatime string,
        > userid string,
        > searchname string,
        > retorder string,
        > cliorder string,
        > cliurl string
        > )
        > STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'
        > WITH SERDEPROPERTIES("hbase.columns.mapping" = ":key,info:datatime,info:userid,info:searchname,info:retorder,info:cliorder,info:cliurl")
        > TBLPROPERTIES("hbase.table.name" = "weblogs");

Or:

    [kfk@bigdata-pro01 apache-hive-1.0.0-bin]$ bin/hive
    Logging initialized using configuration in file:/opt/modules/apache-hive-1.0.0-bin/conf/hive-log4j.properties
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/modules/apache-hive-1.0.0-bin/lib/hive-jdbc-1.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    hive> create external table weblogs(id string,datatime string,userid string,searchname string,retorder string,cliorder string,cliurl string) STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' WITH SERDEPROPERTIES("hbase.columns.mapping" = ":key,info:datatime,info:userid,info:searchname,info:retorder,info:cliorder,info:cliurl") TBLPROPERTIES("hbase.table.name" = "weblogs");

The statement above creates a Hive external table backed by HBase.
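The hbase.columns.mapping string follows a simple pattern. The first Hive column is the HBase row key (:key), and every other column maps to family:column. For a table with many columns, it can be generated rather than typed by hand. This is a minimal sketch that assumes all non-key columns share one column family, as info does in this article. columns_mapping is a hypothetical helper.

```shell
#!/bin/sh
# Build an hbase.columns.mapping value from Hive column names:
# the first column becomes the HBase row key (:key), every other column
# becomes <family>:<column>. One shared family is an assumption.
# $1 = column family, remaining args = Hive column names in table order.
columns_mapping() {
  family="$1"; shift
  out=""
  for col in "$@"; do
    if [ -z "$out" ]; then
      out=":key"
    else
      out="$out,$family:$col"
    fi
  done
  printf '%s\n' "$out"
}
```

Running columns_mapping info id datatime userid searchname retorder cliorder cliurl prints exactly the mapping used in the CREATE TABLE statement above.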

You are also welcome to follow my personal blogs:

http://www.cnblogs.com/zlslch/, http://www.cnblogs.com/lchzls/ and http://www.cnblogs.com/sunnyDream/

For details, see: http://www.cnblogs.com/zlslch/p/7473861.html

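Life is short, and I'm happy to share. This public account follows the open-source spirit of lifelong learning and open exchange. It collects practical knowledge from the internet and from my own study and work: everything comes from the internet and goes back to it.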

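Current research areas: big data, machine learning, deep learning, artificial intelligence, data mining and data analysis. Languages covered: Java, Scala, Python, Shell, Linux and more. It also covers tips, problems and useful software for everyday phones, computers and the internet. Stick around in the group and you will learn something every day.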

QQ group for discussion and Q&A on this platform: Big Data and AI Pitfalls (main group) (161156071)