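Earlier posts

Logstash installation and setup (illustrated) (in a multi-node ELK cluster, installing it on one node is enough)

Filebeat watches data according to its input and delivers data according to its output.

See:

Filebeat input and output (covering Elasticsearch Output, Logstash Output, Redis Output, File Output, and Console Output)

Logstash works the same way: it watches data according to its input and delivers data according to its output.

A guided walk through the official documentation (Logstash inputs and Logstash outputs)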

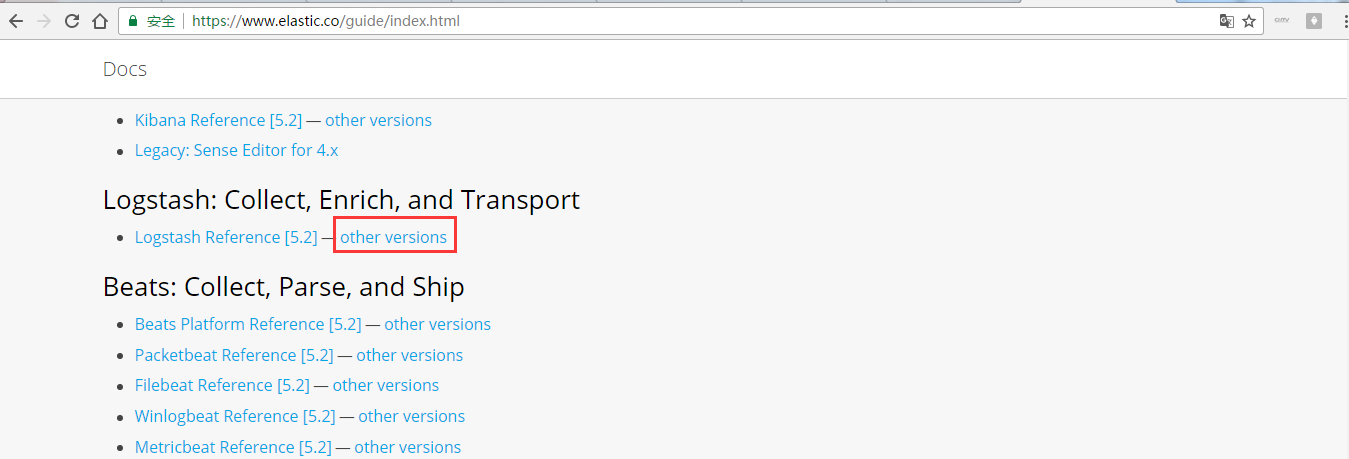

https://www.elastic.co/guide/index.html

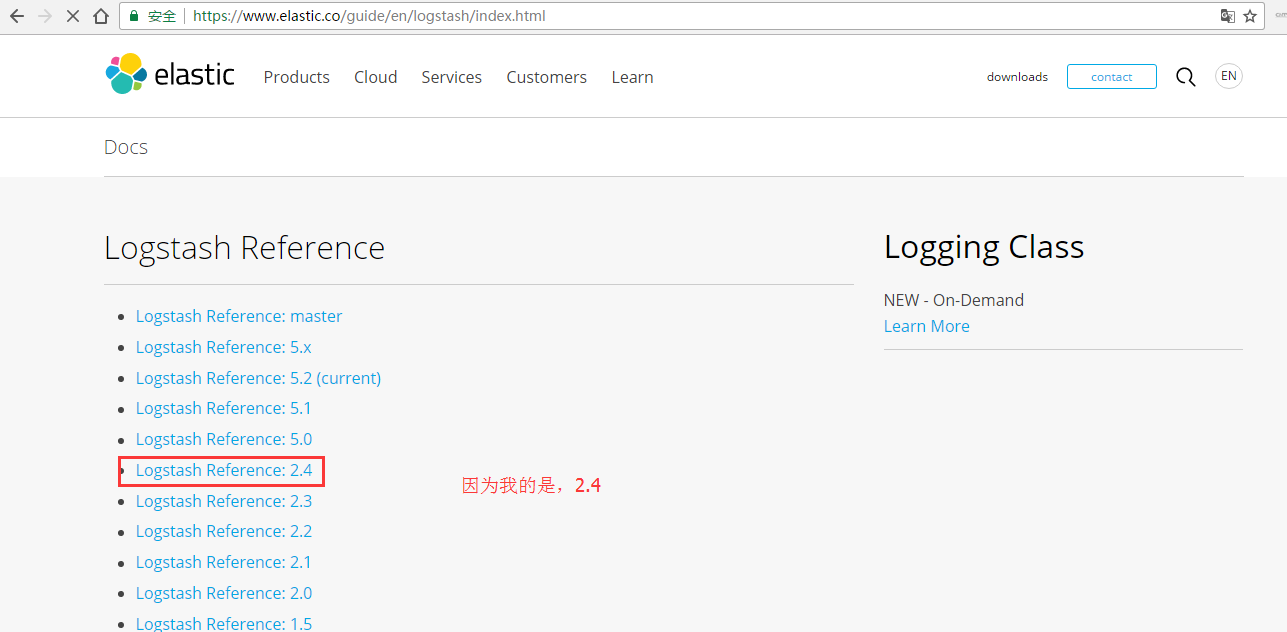

https://www.elastic.co/guide/en/logstash/index.html

https://www.elastic.co/guide/en/logstash/2.4/index.html

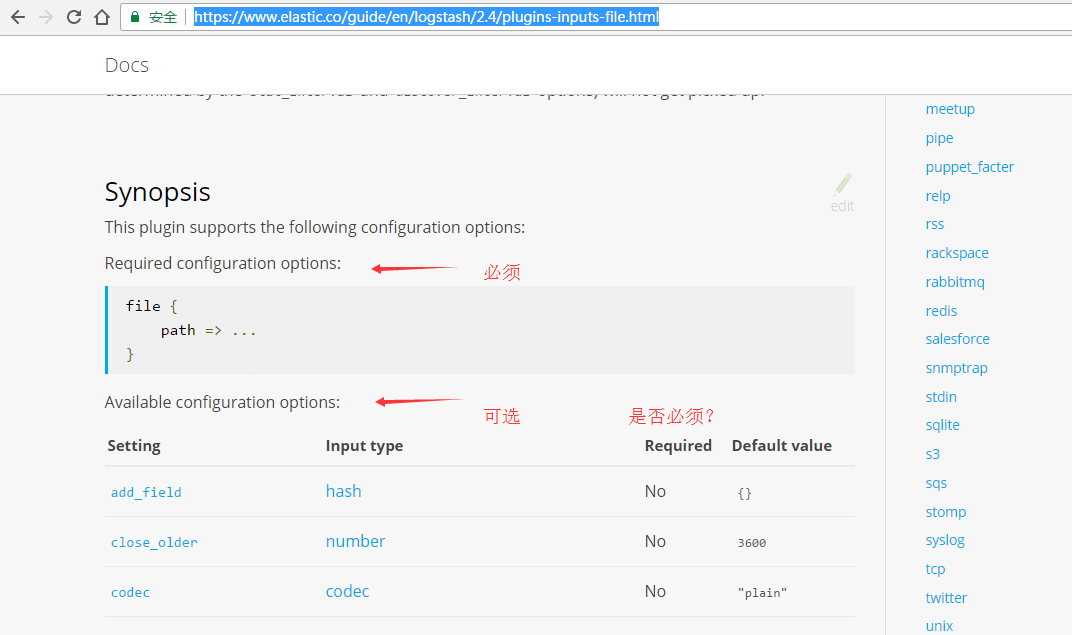

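There are a great many Logstash inputs, Logstash outputs, and filter plugins. See the official site for the full list; I will not go through them all here and will only demonstrate the ones below.

Logstash inputs

file input

The most commonly used input plugin is file.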

https://www.elastic.co/guide/en/logstash/2.4/plugins-inputs-file.html

    [hadoop@HadoopMaster logstash-2.4.1]$ pwd
    /home/hadoop/app/logstash-2.4.1
    [hadoop@HadoopMaster logstash-2.4.1]$ ll
    total 164
    drwxrwxr-x. 2 hadoop hadoop   4096 Mar 27 03:58 bin
    -rw-rw-r--. 1 hadoop hadoop 102879 Nov 14 10:04 CHANGELOG.md
    -rw-rw-r--. 1 hadoop hadoop   2249 Nov 14 10:04 CONTRIBUTORS
    -rw-rw-r--. 1 hadoop hadoop   5084 Nov 14 10:07 Gemfile
    -rw-rw-r--. 1 hadoop hadoop  23015 Nov 14 10:04 Gemfile.jruby-1.9.lock
    drwxrwxr-x. 4 hadoop hadoop   4096 Mar 27 03:58 lib
    -rw-rw-r--. 1 hadoop hadoop    589 Nov 14 10:04 LICENSE
    -rw-rw-r--. 1 hadoop hadoop     46 Mar 27 05:30 logstash-simple.conf
    -rw-rw-r--. 1 hadoop hadoop    149 Nov 14 10:04 NOTICE.TXT
    drwxrwxr-x. 4 hadoop hadoop   4096 Mar 27 03:58 vendor
    [hadoop@HadoopMaster logstash-2.4.1]$ vim file_stdout.conf

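The path is configurable. Here I use

    path => "/home/hadoop/app.log"

or, to watch several paths (glob patterns are allowed),

    path => [ "/home/hadoop/app", "/home/hadoop/*.log" ]

The full file_stdout.conf is:

    input {
      file {
        path => "/home/hadoop/app.log"
      }
    }
    filter {
    }
    output {
      stdout {}
    }

With this configuration, Logstash watches /home/hadoop/app.log for changes.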

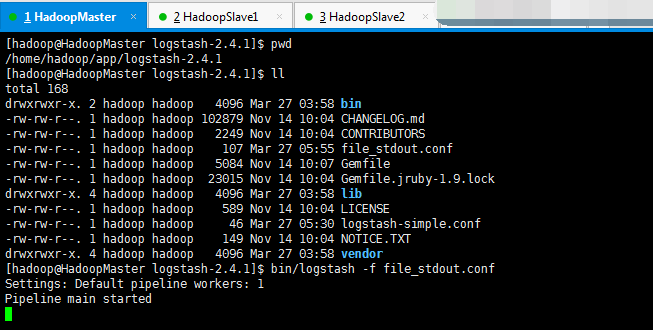

    [hadoop@HadoopMaster logstash-2.4.1]$ pwd
    /home/hadoop/app/logstash-2.4.1
    [hadoop@HadoopMaster logstash-2.4.1]$ ll
    total 168
    drwxrwxr-x. 2 hadoop hadoop   4096 Mar 27 03:58 bin
    -rw-rw-r--. 1 hadoop hadoop 102879 Nov 14 10:04 CHANGELOG.md
    -rw-rw-r--. 1 hadoop hadoop   2249 Nov 14 10:04 CONTRIBUTORS
    -rw-rw-r--. 1 hadoop hadoop    107 Mar 27 05:55 file_stdout.conf
    -rw-rw-r--. 1 hadoop hadoop   5084 Nov 14 10:07 Gemfile
    -rw-rw-r--. 1 hadoop hadoop  23015 Nov 14 10:04 Gemfile.jruby-1.9.lock
    drwxrwxr-x. 4 hadoop hadoop   4096 Mar 27 03:58 lib
    -rw-rw-r--. 1 hadoop hadoop    589 Nov 14 10:04 LICENSE
    -rw-rw-r--. 1 hadoop hadoop     46 Mar 27 05:30 logstash-simple.conf
    -rw-rw-r--. 1 hadoop hadoop    149 Nov 14 10:04 NOTICE.TXT
    drwxrwxr-x. 4 hadoop hadoop   4096 Mar 27 03:58 vendor
    [hadoop@HadoopMaster logstash-2.4.1]$ bin/logstash -f file_stdout.conf
    Settings: Default pipeline workers: 1
    Pipeline main started

Open a second terminal session on HadoopMaster and append a line to the watched file.

    [hadoop@HadoopMaster ~]$ pwd
    /home/hadoop
    [hadoop@HadoopMaster ~]$ ll
    total 48
    drwxrwxr-x. 12 hadoop hadoop 4096 Mar 27 03:59 app
    -rw-rw-r--.  1 hadoop hadoop   18 Mar 26 19:59 app.log
    drwxrwxr-x.  7 hadoop hadoop 4096 Mar 25 06:34 data
    drwxr-xr-x.  2 hadoop hadoop 4096 Oct 31 17:19 Desktop
    drwxr-xr-x.  2 hadoop hadoop 4096 Oct 31 17:19 Documents
    drwxr-xr-x.  2 hadoop hadoop 4096 Oct 31 17:19 Downloads
    drwxr-xr-x.  2 hadoop hadoop 4096 Oct 31 17:19 Music
    drwxr-xr-x.  2 hadoop hadoop 4096 Mar 26 20:35 mybeat
    drwxr-xr-x.  2 hadoop hadoop 4096 Oct 31 17:19 Pictures
    drwxr-xr-x.  2 hadoop hadoop 4096 Oct 31 17:19 Public
    drwxr-xr-x.  2 hadoop hadoop 4096 Oct 31 17:19 Templates
    drwxr-xr-x.  2 hadoop hadoop 4096 Oct 31 17:19 Videos
    [hadoop@HadoopMaster ~]$ echo bbbbbbb >> app.log
    [hadoop@HadoopMaster ~]$

    [hadoop@HadoopMaster logstash-2.4.1]$ pwd
    /home/hadoop/app/logstash-2.4.1
    [hadoop@HadoopMaster logstash-2.4.1]$ ll
    total 168
    drwxrwxr-x. 2 hadoop hadoop   4096 Mar 27 03:58 bin
    -rw-rw-r--. 1 hadoop hadoop 102879 Nov 14 10:04 CHANGELOG.md
    -rw-rw-r--. 1 hadoop hadoop   2249 Nov 14 10:04 CONTRIBUTORS
    -rw-rw-r--. 1 hadoop hadoop    107 Mar 27 05:55 file_stdout.conf
    -rw-rw-r--. 1 hadoop hadoop   5084 Nov 14 10:07 Gemfile
    -rw-rw-r--. 1 hadoop hadoop  23015 Nov 14 10:04 Gemfile.jruby-1.9.lock
    drwxrwxr-x. 4 hadoop hadoop   4096 Mar 27 03:58 lib
    -rw-rw-r--. 1 hadoop hadoop    589 Nov 14 10:04 LICENSE
    -rw-rw-r--. 1 hadoop hadoop     46 Mar 27 05:30 logstash-simple.conf
    -rw-rw-r--. 1 hadoop hadoop    149 Nov 14 10:04 NOTICE.TXT
    drwxrwxr-x. 4 hadoop hadoop   4096 Mar 27 03:58 vendor
    [hadoop@HadoopMaster logstash-2.4.1]$ bin/logstash -f file_stdout.conf
    Settings: Default pipeline workers: 1
    Pipeline main started
    2017-03-26T22:24:35.897Z HadoopMaster bbbbbbb
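The event printed by the run above shows only the timestamp, the host, and the message. As a small sketch for inspecting every field of an event, the stdout output can be given the rubydebug codec. This variant is an assumption added for illustration and was not part of the run above, so no output is shown for it.

```
input {
  file {
    path => "/home/hadoop/app.log"
  }
}
filter {
}
output {
  # rubydebug pretty-prints all fields of each event, not only the message
  stdout {
    codec => rubydebug
  }
}
```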

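In fact, the read position is also recorded in a file, here .sincedb_8f3299d0a5bdb7df6154f681fc150341.

Note:

The first time a new file is read there is no .sincedb entry for it, so Logstash falls back to start_position. If start_position is end, reading starts at the end of the file; if it is beginning, reading starts at the top.

If the file has been read before and Logstash is restarted, the .sincedb file already exists and Logstash resumes from the position recorded there. Whatever start_position is set to, it has no effect.

start_position: specifies where to start reading the file. The default is the end of the file; it can also be set to start from the beginning.

Note: start_position only takes effect for a file that has never been watched before. While reading, Logstash writes a .sincedb file to track its position in each file, so once a file has been read, the next run resumes from the position recorded in .sincedb and start_position is ignored. By default the .sincedb file is created in the user's home directory.

A pitfall to watch for: the ignore_older option tells the file input to skip old files. Its default value is 86400 (seconds), meaning files last modified more than 24 hours ago are ignored. If you start watching an old file that has not been modified for more than 24 hours, you will not get its existing contents even with start_position set to beginning.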
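The three options discussed above can be combined in one file input. The following is a minimal sketch, not the configuration used in this post: the ignore_older value and the sincedb_path location are assumptions chosen for illustration.

```
input {
  file {
    path => "/home/hadoop/app.log"
    # Only honored the first time the file is seen (no sincedb entry yet)
    start_position => "beginning"
    # Illustrative value: accept files modified within the last 7 days (in seconds)
    ignore_older => 604800
    # Hypothetical location; if omitted, the file is created in the home directory
    sincedb_path => "/home/hadoop/.sincedb_app_log"
  }
}
filter {
}
output {
  stdout {}
}
```

Pinning sincedb_path to a known location makes it easy to find and delete the file when you want a test run to re-read the log from the start.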

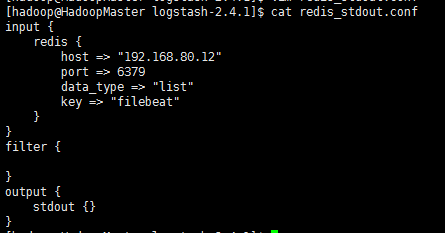

redis input

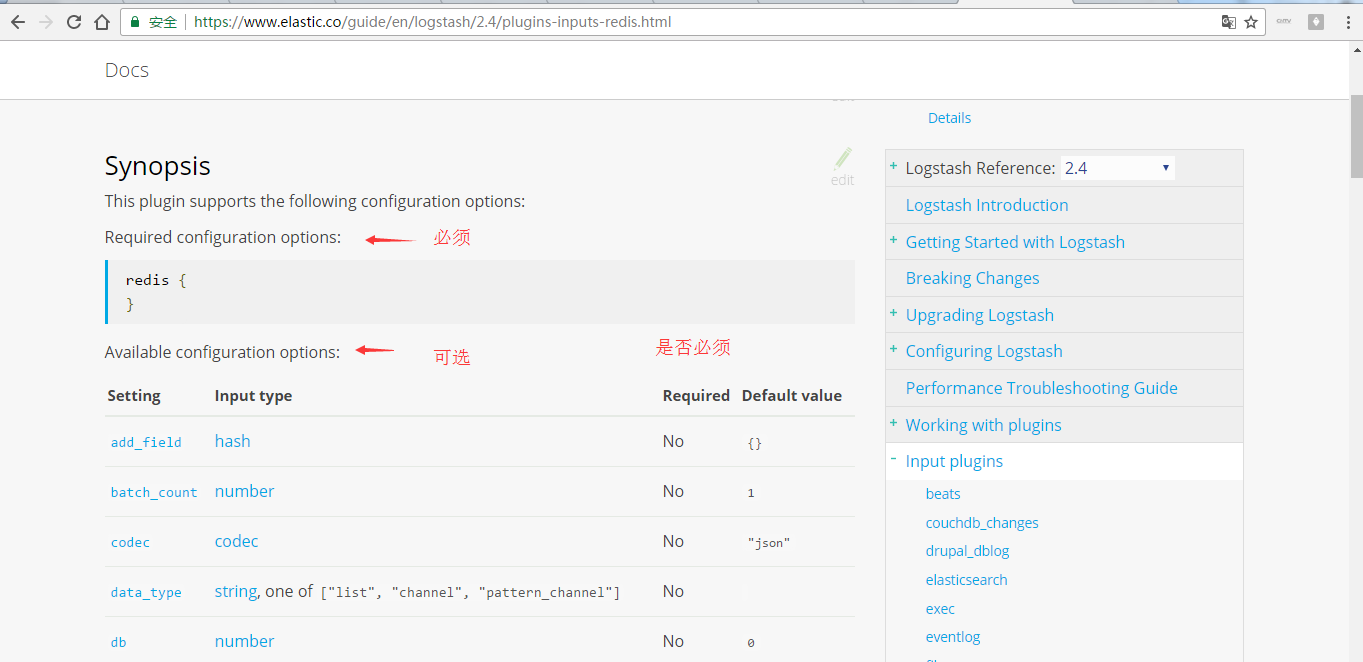

https://www.elastic.co/guide/en/logstash/2.4/plugins-inputs-redis.html

    [hadoop@HadoopMaster logstash-2.4.1]$ pwd
    /home/hadoop/app/logstash-2.4.1
    [hadoop@HadoopMaster logstash-2.4.1]$ ll
    total 168
    drwxrwxr-x. 2 hadoop hadoop   4096 Mar 27 03:58 bin
    -rw-rw-r--. 1 hadoop hadoop 102879 Nov 14 10:04 CHANGELOG.md
    -rw-rw-r--. 1 hadoop hadoop   2249 Nov 14 10:04 CONTRIBUTORS
    -rw-rw-r--. 1 hadoop hadoop    107 Mar 27 05:55 file_stdout.conf
    -rw-rw-r--. 1 hadoop hadoop   5084 Nov 14 10:07 Gemfile
    -rw-rw-r--. 1 hadoop hadoop  23015 Nov 14 10:04 Gemfile.jruby-1.9.lock
    drwxrwxr-x. 4 hadoop hadoop   4096 Mar 27 03:58 lib
    -rw-rw-r--. 1 hadoop hadoop    589 Nov 14 10:04 LICENSE
    -rw-rw-r--. 1 hadoop hadoop     46 Mar 27 05:30 logstash-simple.conf
    -rw-rw-r--. 1 hadoop hadoop    149 Nov 14 10:04 NOTICE.TXT
    drwxrwxr-x. 4 hadoop hadoop   4096 Mar 27 03:58 vendor
    [hadoop@HadoopMaster logstash-2.4.1]$ vim redis_stdout.conf

    input {
      redis {
        host => "192.168.80.12"
        port => 6379
        data_type => "list"
        key => "filebeat"
      }
    }
    filter {
    }
    output {
      stdout {}
    }

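This example depends on Redis. Readers who have not used it yet, please see

Redis installation (illustrated)

I will come back to this part once I have Redis installed.

Logstash outputs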

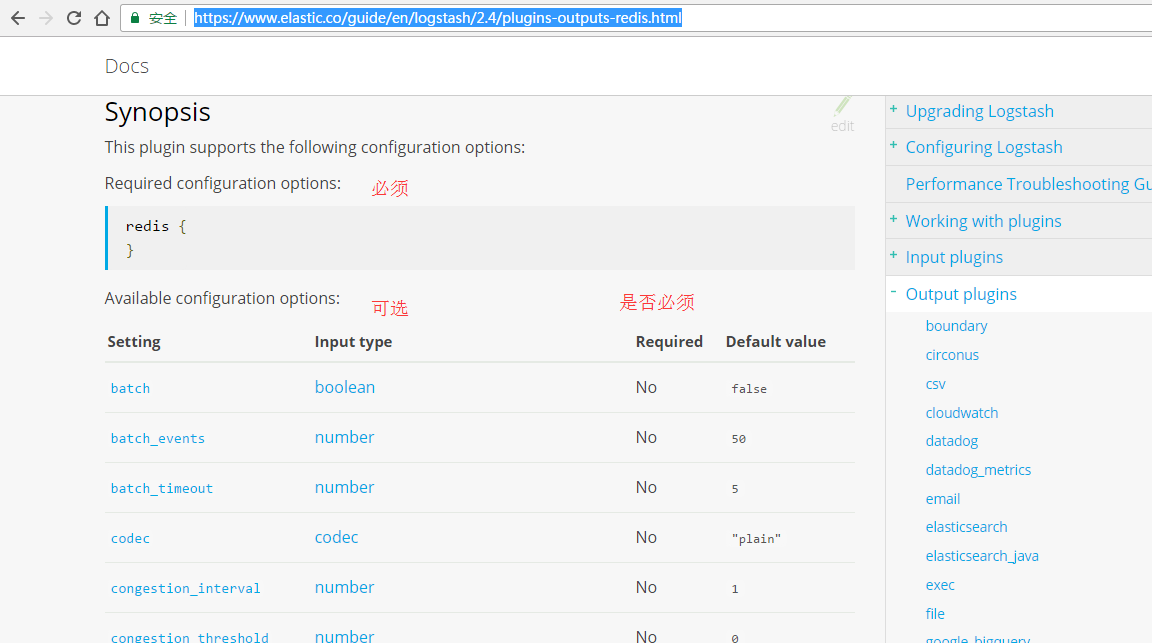

redis output

https://www.elastic.co/guide/en/logstash/2.4/plugins-outputs-redis.html

    [hadoop@HadoopMaster logstash-2.4.1]$ pwd
    /home/hadoop/app/logstash-2.4.1
    [hadoop@HadoopMaster logstash-2.4.1]$ ll
    total 172
    drwxrwxr-x. 2 hadoop hadoop   4096 Mar 27 03:58 bin
    -rw-rw-r--. 1 hadoop hadoop 102879 Nov 14 10:04 CHANGELOG.md
    -rw-rw-r--. 1 hadoop hadoop   2249 Nov 14 10:04 CONTRIBUTORS
    -rw-rw-r--. 1 hadoop hadoop    107 Mar 27 05:55 file_stdout.conf
    -rw-rw-r--. 1 hadoop hadoop   5084 Nov 14 10:07 Gemfile
    -rw-rw-r--. 1 hadoop hadoop  23015 Nov 14 10:04 Gemfile.jruby-1.9.lock
    drwxrwxr-x. 4 hadoop hadoop   4096 Mar 27 03:58 lib
    -rw-rw-r--. 1 hadoop hadoop    589 Nov 14 10:04 LICENSE
    -rw-rw-r--. 1 hadoop hadoop     46 Mar 27 05:30 logstash-simple.conf
    -rw-rw-r--. 1 hadoop hadoop    149 Nov 14 10:04 NOTICE.TXT
    -rw-rw-r--. 1 hadoop hadoop    155 Mar 27 06:43 redis_stdout.conf
    drwxrwxr-x. 4 hadoop hadoop   4096 Mar 27 03:58 vendor
    [hadoop@HadoopMaster logstash-2.4.1]$ vim stdin_es.conf

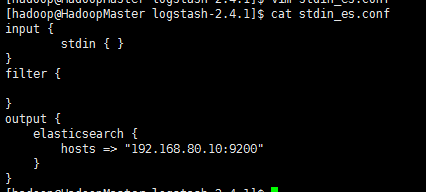
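No redis output configuration is written out in the text of this section, so here is a minimal sketch of what one might look like. It reuses the Redis host and port from the redis input example; the stdin input and the key name are assumptions for illustration only, and the key is deliberately different from the one the redis input reads.

```
input {
  stdin { }
}
filter {
}
output {
  redis {
    host => ["192.168.80.12"]
    port => 6379
    # "list" pushes each event onto a Redis list named by key
    data_type => "list"
    # Hypothetical key name, chosen only for this sketch
    key => "logstash"
  }
}
```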

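elasticsearch output

This writes the data passing through Logstash to the Elasticsearch cluster (here, the node 192.168.80.10).

    hosts => "192.168.80.10"

or

    hosts => ["192.168.80.10:9200","192.168.80.11:9200","192.168.80.12:9200"]

In Logstash 1.x this option was named host.

By default the index created in ES is named logstash-%{+YYYY.MM.dd}. You can use ES index templates to define base settings for these indices.

The full stdin_es.conf is:

    input {
      stdin { }
    }
    filter {
    }
    output {
      elasticsearch {
        hosts => "192.168.80.10:9200"
      }
    }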
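The default index name mentioned above can also be set explicitly with the index option of the elasticsearch output. A minimal sketch follows, assuming all three nodes listed earlier are reachable; the index name applog is hypothetical.

```
input {
  stdin { }
}
filter {
}
output {
  elasticsearch {
    hosts => ["192.168.80.10:9200", "192.168.80.11:9200", "192.168.80.12:9200"]
    # Hypothetical index name; omit this line to get logstash-%{+YYYY.MM.dd}
    index => "applog-%{+YYYY.MM.dd}"
  }
}
```

Keep in mind that an index template applies only to index names matching its pattern, so a renamed index may need a template of its own.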

To be continued.

Filter plugins

To be continued.