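Without further ado, let's get straight to the practical content.

How to download historical versions from the official Maven website

Creating a Maven project in Eclipse with automatically resolved dependency jars (plain projects and web projects)

A complete fix for the index.jsp error when creating a Maven web project in Eclipse (war packaging)

HBase development environment setup (Eclipse/MyEclipse + Maven)

ZooKeeper project development environment setup (Eclipse/MyEclipse + Maven)

Hive project development environment setup (Eclipse/MyEclipse + Maven)

MapReduce development environment setup (Eclipse/MyEclipse + Maven)

Hadoop project development environment setup (Eclipse/MyEclipse + Maven)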

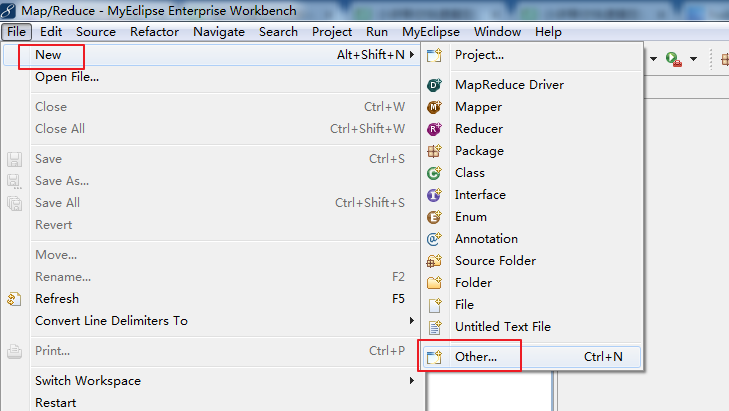

Step 1:

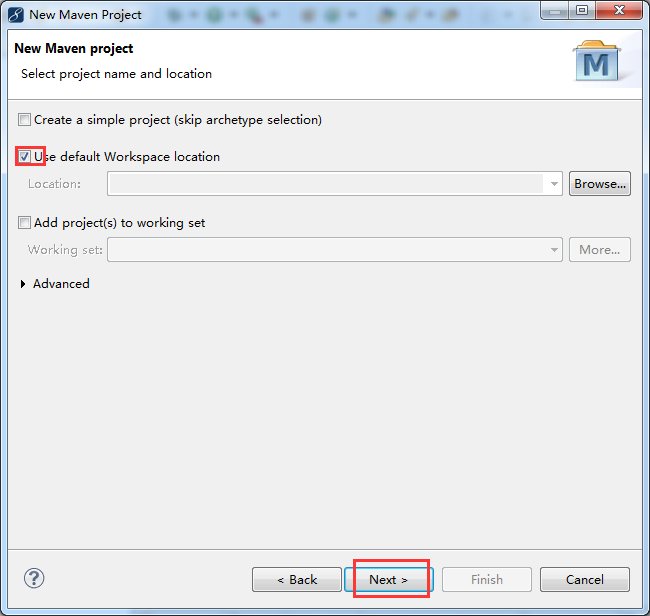

Step 2:

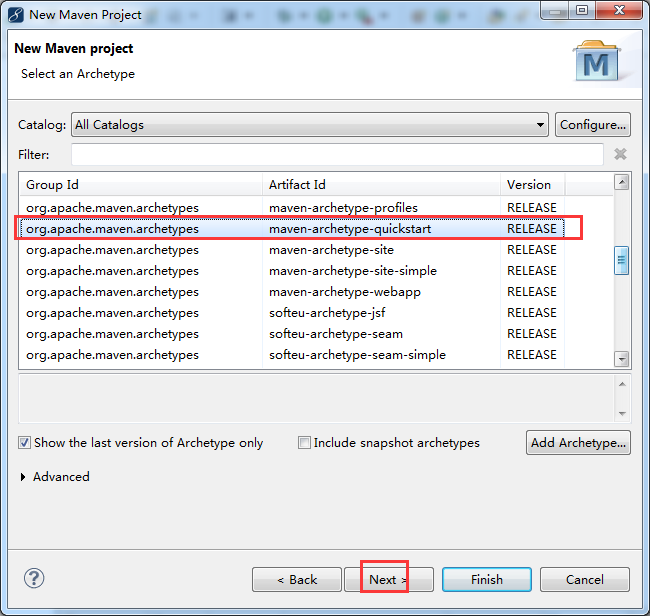

Step 3:

Step 4:

Step 5:

Step 6:

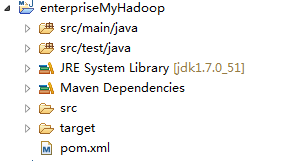

Step 7: The default pom.xml

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
  xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>zhouls.bigdata</groupId>
  <artifactId>enterpriseMyHadoop</artifactId>
  <version>0.0.1-SNAPSHOT</version>
  <packaging>jar</packaging>

  <name>enterpriseMyHadoop</name>
  <url>http://maven.apache.org</url>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
  </properties>

  <dependencies>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>3.8.1</version>
      <scope>test</scope>
    </dependency>
  </dependencies>
</project>
```

Step 8: Modify it as follows

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
  xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>zhouls.bigdata.enterpriseMyHadoop</groupId>
  <artifactId>enterpriseMyHadoop</artifactId>
  <version>1.0-SNAPSHOT</version>
  <packaging>jar</packaging>

  <name>test</name>
  <url>http://maven.apache.org</url>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <hadoop.version>2.6.0</hadoop.version>
  </properties>

  <dependencies>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>3.8.1</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-common</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-hdfs</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
  </dependencies>
  <build>
    <plugins>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-shade-plugin</artifactId>
        <version>2.4.1</version>
        <executions>
          <!-- Run shade goal on package phase -->
          <execution>
            <phase>package</phase>
            <goals>
              <goal>shade</goal>
            </goals>
            <configuration>
              <transformers>
                <!-- add Main-Class to manifest file -->
                <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                  <mainClass>zhouls.bigdata.enterpriseMyHadoop.MyDriver</mainClass>
                </transformer>
              </transformers>
              <createDependencyReducedPom>false</createDependencyReducedPom>
            </configuration>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </build>
</project>
```

Step 9: Write the code

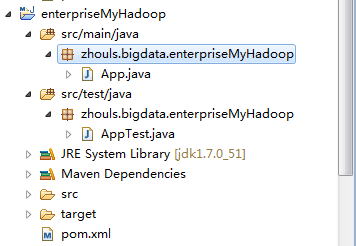

WordCount.java

```java
package zhouls.bigdata.enterpriseMyHadoop;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.apache.hadoop.util.Tool;

@SuppressWarnings("unused") // suppress some compiler warnings (e.g. for the unused imports)
public class WordCount extends Configured implements Tool {

    // Mapper is a generic type with four type parameters: the input key, input value,
    // output key and output value types of the map function. Hadoop does not use Java's
    // built-in types directly; it provides its own basic types, optimized for network
    // serialization, in the org.apache.hadoop.io package.
    // In this example, Object is suitable when the field may hold several types, Text
    // corresponds to Java's String, and IntWritable corresponds to Java's Integer.
    public static class TokenizerMapper extends
            Mapper<Object, Text, Text, IntWritable> {
        // Two reusable fields.
        // The 1 means each word occurrence counts once; it is the map output value.
        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        // Context is an inner class of Mapper. Put simply, the top-level interface exists
        // to track the task's status inside a map or reduce task, and MapContext records
        // the context in which the map executes. Inside the Mapper, the context can carry
        // job configuration information such as runtime parameters, which the map function
        // can read; this is a classic way of passing parameters in Hadoop. The context also
        // acts as a bridge between the functions of the map and reduce phases, a design
        // similar to the session and application objects in Java web development.
        // In short, the context object holds the job's runtime context: job configuration,
        // InputSplit information, task ID, and so on.
        // Here the most direct use of the context is its write method.
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            // StringTokenizer splits a string into tokens. You can specify the delimiters
            // (',' or a space, for example); the default delimiter set is " \t\n\r\f":
            // the space, tab, newline, carriage-return and form-feed characters.
            // value.toString() converts the Text value into a String.
            StringTokenizer itr = new StringTokenizer(value.toString());
            // hasMoreTokens() tests whether more tokens are available from this tokenizer's string.
            while (itr.hasMoreTokens()) {
                // nextToken() returns the next token from the tokenizer.
                word.set(itr.nextToken());
                context.write(word, one);
            }
        }
    }

    public static class IntSumReducer extends
            Reducer<Text, IntWritable, Text, IntWritable> {
        private IntWritable result = new IntWritable();

        public void reduce(Text key, Iterable<IntWritable> values,
                Context context) throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            result.set(sum);
            context.write(key, result);
        }
    }

    // Note: the input and output paths are hard-coded below; command-line arguments are ignored.
    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // Configuration represents the job's configuration. It loads configuration files
        // such as mapred-site.xml, hdfs-site.xml and core-site.xml.
        // Delete the output directory if it already exists.
        Path mypath = new Path("hdfs://djt002:9000/outData/wordcount-out"); // output path
        FileSystem hdfs = mypath.getFileSystem(conf); // get the file system
        // If the output path exists in the file system, delete it so that the
        // output directory does not exist before the job starts.
        if (hdfs.isDirectory(mypath)) {
            hdfs.delete(mypath, true);
        }
        // The Job object specifies how the job is executed and controls the whole run.
        // Pass conf so that the Configuration created above is actually used.
        Job job = Job.getInstance(conf, "word count");
        // To run a job on a Hadoop cluster, the code is packaged into a jar and uploaded
        // to the cluster. The jar name does not have to be given explicitly: pass a class
        // to setJarByClass() and Hadoop locates the jar that contains that class.
        job.setJarByClass(WordCount.class);

        job.setMapperClass(TokenizerMapper.class);
        job.setCombinerClass(IntSumReducer.class);
        // The reducer must be set too; a combiner alone does not guarantee a global sum per word.
        job.setReducerClass(IntSumReducer.class);

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        // Usually the mapper and reducer output types are the same, so the two calls above
        // are enough. If they differ, set the mapper output key/value types separately:
        //job.setMapOutputKeyClass(Text.class);
        //job.setMapOutputValueClass(IntWritable.class);
        // TextInputFormat and TextOutputFormat are Hadoop's defaults, so these are optional:
        //job.setInputFormatClass(TextInputFormat.class);
        //job.setOutputFormatClass(TextOutputFormat.class);

        // The path given to FileInputFormat.addInputPath() can be a single file, a directory,
        // or a set of files matching a file pattern. As the method name suggests, it can be
        // called several times to add multiple input paths.
        FileInputFormat.addInputPath(job, new Path(
                "hdfs://djt002:9000/inputData/wordcount/wc.txt"));
        // There can be only one output path: the directory the reduce output files are written to.
        // Important: the output directory must not exist beforehand, otherwise Hadoop reports an
        // error and refuses to run the job. This prevents data loss, since nobody wants the
        // results of a long-running job to be overwritten by accident.
        FileOutputFormat.setOutputPath(job, new Path(
                "hdfs://djt002:9000/outData/wordcount-out"));
        // job.waitForCompletion() submits the job and waits for it to finish. It returns a
        // boolean indicating success or failure, which is converted to exit code 0 or 1.
        // The boolean argument is a verbose flag, so the job reports progress to the console.
        // After submission, waitForCompletion() polls the job's progress once per second and
        // prints it when it has changed since the last report. When the job finishes, the job
        // counters are shown on success; on failure, the error that caused it is printed.
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }

    @Override
    public int run(String[] args) throws Exception {
        // Auto-generated stub; not used, because main() configures and submits the job itself.
        return 0;
    }
}

// TextInputFormat is Hadoop's default input format and extends FileInputFormat. With it,
// each file is handled separately as map input, each line becomes one record, and each
// record is represented as a <key, value> pair.
// The key is the byte offset of the line within the file, of type LongWritable.
// The value is the content of the line, of type Text.
//
// InputFormat is what produces the <key, value> pairs that a map can process.
// InputSplit is how Hadoop hands input data to each individual map (one split per map).
// An InputSplit does not store the data itself, only a split length and an array of data locations.
// How InputSplits are generated is determined by the InputFormat.
// When data is passed to a map, the input split is handed to the InputFormat, which calls
// getRecordReader() to create a RecordReader; the RecordReader then uses createKey() and
// createValue() to build the <key, value> pairs for the map. (These are the method names of
// the old mapred API; the new mapreduce API uses createRecordReader().)
//
// OutputFormat
// The default output format is TextOutputFormat. Like the default input format, it writes each
// record as one line of a text file. Keys and values can be of any type, because toString()
// is called on them before they are written.
```
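To make the data flow of the mapper and reducer above easier to follow, here is a minimal plain-Java sketch of the same logic without any Hadoop classes. The class name LocalWordCountSketch and the method countWords are made up for illustration; the sketch only mimics what TokenizerMapper and IntSumReducer compute together and says nothing about how Hadoop distributes the work.

```java
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical illustration class: plain Java, no Hadoop dependency.
class LocalWordCountSketch {

    // Splits the text on the default StringTokenizer delimiters (like the mapper)
    // and sums one count per occurrence of each word (like the reducer).
    static Map<String, Integer> countWords(String text) {
        Map<String, Integer> counts = new TreeMap<>();
        StringTokenizer itr = new StringTokenizer(text);
        while (itr.hasMoreTokens()) {
            // Equivalent to emitting (word, 1) and adding it to the running sum.
            counts.merge(itr.nextToken(), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // prints {hadoop=1, hello=2, maven=1}
        System.out.println(countWords("hello hadoop\nhello maven"));
    }
}
```

In the real job the (word, 1) pairs are grouped by key across many map tasks before the reducer sums them; the single map used here stands in for that grouping step.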

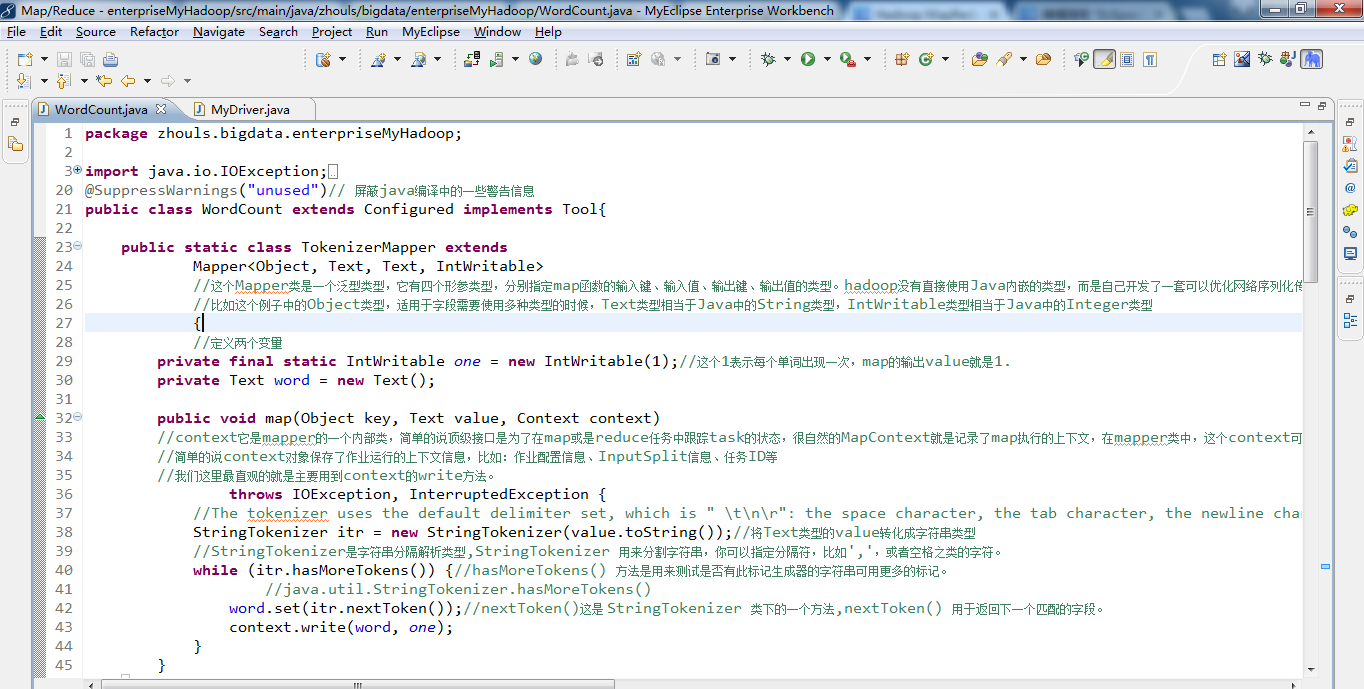

MyDriver.java

```java
package zhouls.bigdata.enterpriseMyHadoop;

import org.apache.hadoop.util.ProgramDriver;

/*
 * Manages all MapReduce programs.
 */
public class MyDriver {
    public static void main(String argv[]) {
        int exitCode = -1;
        ProgramDriver pgd = new ProgramDriver();
        try {
            // Register WordCount under the alias "wordcount".
            pgd.addClass("wordcount", WordCount.class,
                    "A map/reduce program that counts the words in the input files.");

            exitCode = pgd.run(argv);
        } catch (Throwable e) {
            e.printStackTrace();
        }

        System.exit(exitCode);
    }
}
```

Note that as the business grows, more programs can be registered here, for example:

```java
pgd.addClass("wordcount1", WordCount1.class,
        "A map/reduce program that counts the words in the input files.");
pgd.addClass("wordcount2", WordCount2.class,
        "A map/reduce program that counts the words in the input files.");
```

and so on.

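Here wordcount1 is an alias for WordCount1.class. For example, once the jar has been uploaded to the Hadoop cluster, we can run the following from any directory:

```shell
$HADOOP_HOME/bin/hadoop jar enterpriseMyHadoop-1.0-SNAPSHOT.jar wordcount1 hdfs://djt002:9000/inputData/wordcount/wc.txt hdfs://djt002:9000/outData/wordcount-out
```

enterpriseMyHadoop-1.0-SNAPSHOT.jar is the name of the jar we built.

wordcount1 is the alias of our class, which saves typing the long fully qualified class name.

hdfs://djt002:9000/inputData/wordcount/wc.txt is the input path.

hdfs://djt002:9000/outData/wordcount-out is the output path.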
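The alias mechanism described above can be pictured with a small plain-Java sketch: the first command-line argument selects a registered program and the remaining arguments are forwarded to it. This is not Hadoop's ProgramDriver implementation; AliasDriverSketch, Program and addProgram are hypothetical names chosen for illustration only.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration of alias-based dispatch; not the Hadoop ProgramDriver.
class AliasDriverSketch {

    // A program receives the arguments after the alias and returns an exit code.
    interface Program {
        int run(String[] programArgs);
    }

    private final Map<String, Program> programs = new LinkedHashMap<>();

    void addProgram(String alias, Program program) {
        programs.put(alias, program);
    }

    // Looks up argv[0] as an alias and forwards the rest; returns -1 if the alias is unknown.
    int run(String[] argv) {
        if (argv.length == 0 || !programs.containsKey(argv[0])) {
            System.err.println("Valid program names are: " + programs.keySet());
            return -1;
        }
        String[] rest = Arrays.copyOfRange(argv, 1, argv.length);
        return programs.get(argv[0]).run(rest);
    }

    public static void main(String[] args) {
        AliasDriverSketch driver = new AliasDriverSketch();
        driver.addProgram("wordcount", rest -> {
            System.out.println("wordcount received " + rest.length + " argument(s)");
            return 0;
        });
        int exitCode = driver.run(new String[] {"wordcount", "in-path", "out-path"});
        System.out.println("exit code: " + exitCode);
    }
}
```

Registering every job under a short alias in one driver class means a single jar with a single Main-Class can expose many programs.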

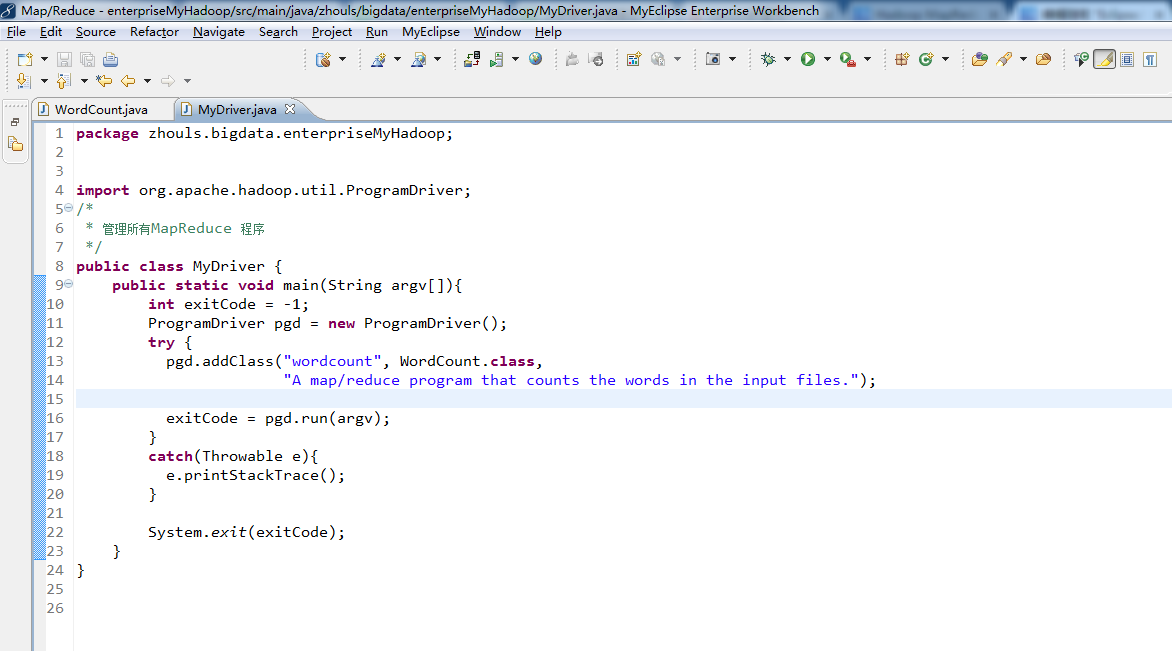

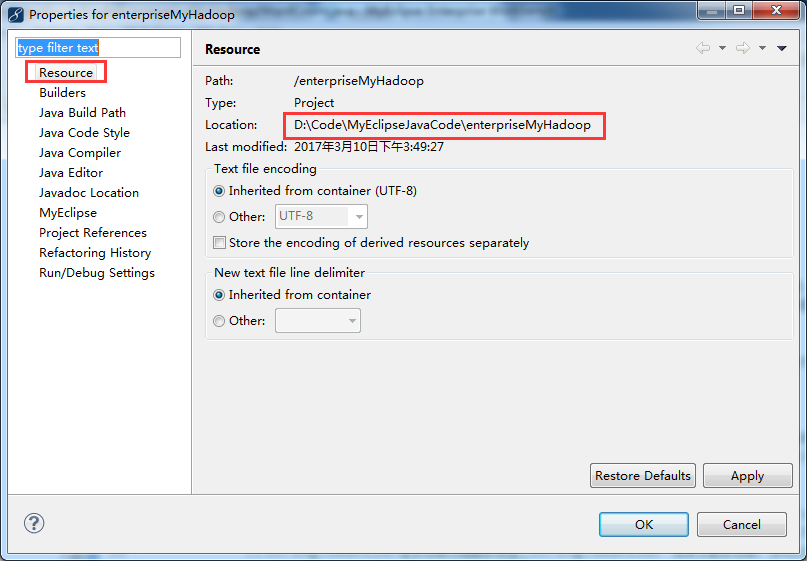

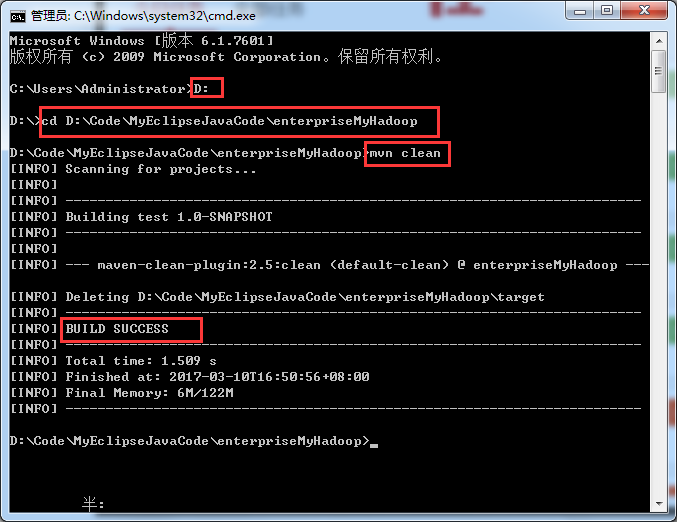

Step 10: Build the jar

```
Microsoft Windows [Version 6.1.7601]
Copyright (c) 2009 Microsoft Corporation. All rights reserved.

C:\Users\Administrator>D:

D:\>cd D:\Code\MyEclipseJavaCode\enterpriseMyHadoop

D:\Code\MyEclipseJavaCode\enterpriseMyHadoop>mvn clean
[INFO] Scanning for projects...
[INFO]
[INFO] ------------------------------------------------------------------------
[INFO] Building test 1.0-SNAPSHOT
[INFO] ------------------------------------------------------------------------
[INFO]
[INFO] --- maven-clean-plugin:2.5:clean (default-clean) @ enterpriseMyHadoop ---
[INFO] Deleting D:\Code\MyEclipseJavaCode\enterpriseMyHadoop\target
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 1.509 s
[INFO] Finished at: 2017-03-10T16:50:56+08:00
[INFO] Final Memory: 6M/122M
[INFO] ------------------------------------------------------------------------

D:\Code\MyEclipseJavaCode\enterpriseMyHadoop>
```

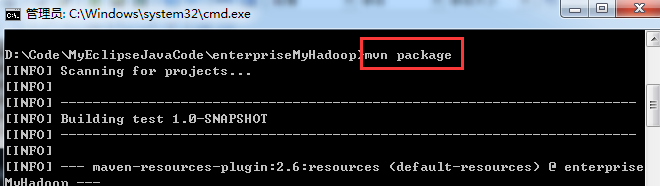

```
D:\Code\MyEclipseJavaCode\enterpriseMyHadoop>mvn package
[INFO] Scanning for projects...
[INFO]
[INFO] ------------------------------------------------------------------------
[INFO] Building test 1.0-SNAPSHOT
[INFO] ------------------------------------------------------------------------
[INFO]
[INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ enterpriseMyHadoop ---
[INFO] Using 'UTF-8' encoding to copy filtered resources.
[INFO] skip non existing resourceDirectory D:\Code\MyEclipseJavaCode\enterpriseMyHadoop\src\main\resources
[INFO]
[INFO] --- maven-compiler-plugin:3.1:compile (default-compile) @ enterpriseMyHadoop ---
[INFO] Changes detected - recompiling the module!
[INFO] Compiling 2 source files to D:\Code\MyEclipseJavaCode\enterpriseMyHadoop\target\classes
[INFO]
[INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ enterpriseMyHadoop ---
[INFO] Using 'UTF-8' encoding to copy filtered resources.
[INFO] skip non existing resourceDirectory D:\Code\MyEclipseJavaCode\enterpriseMyHadoop\src\test\resources
[INFO]
[INFO] --- maven-compiler-plugin:3.1:testCompile (default-testCompile) @ enterpriseMyHadoop ---
[INFO] Changes detected - recompiling the module!
[INFO] Compiling 1 source file to D:\Code\MyEclipseJavaCode\enterpriseMyHadoop\target\test-classes
[INFO]
[INFO] --- maven-surefire-plugin:2.12.4:test (default-test) @ enterpriseMyHadoop ---
[INFO] Surefire report directory: D:\Code\MyEclipseJavaCode\enterpriseMyHadoop\target\surefire-reports

-------------------------------------------------------
 T E S T S
-------------------------------------------------------
Running zhouls.bigdata.enterpriseMyHadoop.AppTest
Tests run: 1, Failures: 0, Errors: 0, Skipped: 0, Time elapsed: 0.008 sec

Results :

Tests run: 1, Failures: 0, Errors: 0, Skipped: 0

[INFO]
[INFO] --- maven-jar-plugin:2.4:jar (default-jar) @ enterpriseMyHadoop ---
[INFO] Building jar: D:\Code\MyEclipseJavaCode\enterpriseMyHadoop\target\enterpriseMyHadoop-1.0-SNAPSHOT.jar
[INFO]
[INFO] --- maven-shade-plugin:2.4.1:shade (default) @ enterpriseMyHadoop ---
[INFO] Including org.apache.hadoop:hadoop-common:jar:2.6.0 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-annotations:jar:2.6.0 in the shaded jar.
[INFO] Including com.google.guava:guava:jar:11.0.2 in the shaded jar.
[INFO] Including commons-cli:commons-cli:jar:1.2 in the shaded jar.
[INFO] Including org.apache.commons:commons-math3:jar:3.1.1 in the shaded jar.
[INFO] Including xmlenc:xmlenc:jar:0.52 in the shaded jar.
[INFO] Including commons-httpclient:commons-httpclient:jar:3.1 in the shaded jar.
[INFO] Including commons-codec:commons-codec:jar:1.4 in the shaded jar.
[INFO] Including commons-io:commons-io:jar:2.4 in the shaded jar.
[INFO] Including commons-net:commons-net:jar:3.1 in the shaded jar.
[INFO] Including commons-collections:commons-collections:jar:3.2.1 in the shaded jar.
[INFO] Including javax.servlet:servlet-api:jar:2.5 in the shaded jar.
[INFO] Including org.mortbay.jetty:jetty:jar:6.1.26 in the shaded jar.
[INFO] Including org.mortbay.jetty:jetty-util:jar:6.1.26 in the shaded jar.
[INFO] Including com.sun.jersey:jersey-core:jar:1.9 in the shaded jar.
[INFO] Including com.sun.jersey:jersey-json:jar:1.9 in the shaded jar.
[INFO] Including org.codehaus.jettison:jettison:jar:1.1 in the shaded jar.
[INFO] Including com.sun.xml.bind:jaxb-impl:jar:2.2.3-1 in the shaded jar.
[INFO] Including javax.xml.bind:jaxb-api:jar:2.2.2 in the shaded jar.
[INFO] Including javax.xml.stream:stax-api:jar:1.0-2 in the shaded jar.
[INFO] Including javax.activation:activation:jar:1.1 in the shaded jar.
[INFO] Including org.codehaus.jackson:jackson-jaxrs:jar:1.8.3 in the shaded jar.
[INFO] Including org.codehaus.jackson:jackson-xc:jar:1.8.3 in the shaded jar.
[INFO] Including com.sun.jersey:jersey-server:jar:1.9 in the shaded jar.
[INFO] Including asm:asm:jar:3.1 in the shaded jar.
[INFO] Including tomcat:jasper-compiler:jar:5.5.23 in the shaded jar.
[INFO] Including tomcat:jasper-runtime:jar:5.5.23 in the shaded jar.
[INFO] Including javax.servlet.jsp:jsp-api:jar:2.1 in the shaded jar.
[INFO] Including commons-el:commons-el:jar:1.0 in the shaded jar.
[INFO] Including commons-logging:commons-logging:jar:1.1.3 in the shaded jar.
[INFO] Including log4j:log4j:jar:1.2.17 in the shaded jar.
[INFO] Including net.java.dev.jets3t:jets3t:jar:0.9.0 in the shaded jar.
[INFO] Including org.apache.httpcomponents:httpclient:jar:4.1.2 in the shaded jar.
[INFO] Including org.apache.httpcomponents:httpcore:jar:4.1.2 in the shaded jar.
[INFO] Including com.jamesmurty.utils:java-xmlbuilder:jar:0.4 in the shaded jar.
[INFO] Including commons-lang:commons-lang:jar:2.6 in the shaded jar.
[INFO] Including commons-configuration:commons-configuration:jar:1.6 in the shaded jar.
[INFO] Including commons-digester:commons-digester:jar:1.8 in the shaded jar.
[INFO] Including commons-beanutils:commons-beanutils:jar:1.7.0 in the shaded jar.
[INFO] Including commons-beanutils:commons-beanutils-core:jar:1.8.0 in the shaded jar.
[INFO] Including org.slf4j:slf4j-api:jar:1.7.5 in the shaded jar.
[INFO] Including org.slf4j:slf4j-log4j12:jar:1.7.5 in the shaded jar.
[INFO] Including org.codehaus.jackson:jackson-core-asl:jar:1.9.13 in the shaded jar.
[INFO] Including org.codehaus.jackson:jackson-mapper-asl:jar:1.9.13 in the shaded jar.
[INFO] Including org.apache.avro:avro:jar:1.7.4 in the shaded jar.
[INFO] Including com.thoughtworks.paranamer:paranamer:jar:2.3 in the shaded jar.
[INFO] Including org.xerial.snappy:snappy-java:jar:1.0.4.1 in the shaded jar.
[INFO] Including com.google.protobuf:protobuf-java:jar:2.5.0 in the shaded jar.
[INFO] Including com.google.code.gson:gson:jar:2.2.4 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-auth:jar:2.6.0 in the shaded jar.
[INFO] Including org.apache.directory.server:apacheds-kerberos-codec:jar:2.0.0-M15 in the shaded jar.
[INFO] Including org.apache.directory.server:apacheds-i18n:jar:2.0.0-M15 in the shaded jar.
[INFO] Including org.apache.directory.api:api-asn1-api:jar:1.0.0-M20 in the shaded jar.
[INFO] Including org.apache.directory.api:api-util:jar:1.0.0-M20 in the shaded jar.
[INFO] Including org.apache.curator:curator-framework:jar:2.6.0 in the shaded jar.
[INFO] Including com.jcraft:jsch:jar:0.1.42 in the shaded jar.
[INFO] Including org.apache.curator:curator-client:jar:2.6.0 in the shaded jar.
[INFO] Including org.apache.curator:curator-recipes:jar:2.6.0 in the shaded jar.
[INFO] Including com.google.code.findbugs:jsr305:jar:1.3.9 in the shaded jar.
[INFO] Including org.htrace:htrace-core:jar:3.0.4 in the shaded jar.
[INFO] Including org.apache.zookeeper:zookeeper:jar:3.4.6 in the shaded jar.
[INFO] Including org.apache.commons:commons-compress:jar:1.4.1 in the shaded jar.
[INFO] Including org.tukaani:xz:jar:1.0 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-hdfs:jar:2.6.0 in the shaded jar.
[INFO] Including commons-daemon:commons-daemon:jar:1.0.13 in the shaded jar.
[INFO] Including io.netty:netty:jar:3.6.2.Final in the shaded jar.
[INFO] Including xerces:xercesImpl:jar:2.9.1 in the shaded jar.
[INFO] Including xml-apis:xml-apis:jar:1.3.04 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-client:jar:2.6.0 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-mapreduce-client-app:jar:2.6.0 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-mapreduce-client-common:jar:2.6.0 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-yarn-client:jar:2.6.0 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-yarn-server-common:jar:2.6.0 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-mapreduce-client-shuffle:jar:2.6.0 in the shaded jar.
[INFO] Including org.fusesource.leveldbjni:leveldbjni-all:jar:1.8 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-yarn-api:jar:2.6.0 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-mapreduce-client-core:jar:2.6.0 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-yarn-common:jar:2.6.0 in the shaded jar.
[INFO] Including com.sun.jersey:jersey-client:jar:1.9 in the shaded jar.
[INFO] Including org.apache.hadoop:hadoop-mapreduce-client-jobclient:jar:2.6.0 in the shaded jar.
[WARNING] hadoop-yarn-common-2.6.0.jar, hadoop-yarn-client-2.6.0.jar define 2 overlapping classes:
[WARNING]   - org.apache.hadoop.yarn.client.api.impl.package-info
[WARNING]   - org.apache.hadoop.yarn.client.api.package-info
[WARNING] jasper-compiler-5.5.23.jar, jasper-runtime-5.5.23.jar define 1 overlapping classes:
[WARNING]   - org.apache.jasper.compiler.Localizer
[WARNING] commons-beanutils-core-1.8.0.jar, commons-beanutils-1.7.0.jar, commons-collections-3.2.1.jar define 10 overlapping classes:
[WARNING]   - org.apache.commons.collections.FastHashMap$EntrySet
[WARNING]   - org.apache.commons.collections.ArrayStack
[WARNING]   - org.apache.commons.collections.FastHashMap$1
[WARNING]   - org.apache.commons.collections.FastHashMap$KeySet
[WARNING]   - org.apache.commons.collections.FastHashMap$CollectionView
[WARNING]   - org.apache.commons.collections.BufferUnderflowException
[WARNING]   - org.apache.commons.collections.Buffer
[WARNING]   - org.apache.commons.collections.FastHashMap$CollectionView$CollectionViewIterator
[WARNING]   - org.apache.commons.collections.FastHashMap$Values
[WARNING]   - org.apache.commons.collections.FastHashMap
[WARNING] hadoop-yarn-common-2.6.0.jar, hadoop-yarn-api-2.6.0.jar define 3 overlapping classes:
[WARNING]   - org.apache.hadoop.yarn.factories.package-info
[WARNING]   - org.apache.hadoop.yarn.util.package-info
[WARNING]   - org.apache.hadoop.yarn.factory.providers.package-info
[WARNING] commons-beanutils-core-1.8.0.jar, commons-beanutils-1.7.0.jar define 82 overlapping classes:
[WARNING]   - org.apache.commons.beanutils.WrapDynaBean
[WARNING]   - org.apache.commons.beanutils.Converter
[WARNING]   - org.apache.commons.beanutils.converters.IntegerConverter
[WARNING]   - org.apache.commons.beanutils.locale.LocaleBeanUtilsBean
[WARNING]   - org.apache.commons.beanutils.locale.converters.DecimalLocaleConverter
[WARNING]   - org.apache.commons.beanutils.locale.converters.DoubleLocaleConverter
[WARNING]   - org.apache.commons.beanutils.converters.ShortConverter
[WARNING]   - org.apache.commons.beanutils.converters.StringArrayConverter
[WARNING]   - org.apache.commons.beanutils.locale.LocaleConvertUtilsBean
[WARNING]   - org.apache.commons.beanutils.LazyDynaClass
[WARNING]   - 72 more...
[WARNING] maven-shade-plugin has detected that some class files are
[WARNING] present in two or more JARs. When this happens, only one
[WARNING] single version of the class is copied to the uber jar.
[WARNING] Usually this is not harmful and you can skip these warnings,
[WARNING] otherwise try to manually exclude artifacts based on
[WARNING] mvn dependency:tree -Ddetail=true and the above output.
[WARNING] See http://docs.codehaus.org/display/MAVENUSER/Shade+Plugin
[INFO] Replacing original artifact with shaded artifact.
[INFO] Replacing D:\Code\MyEclipseJavaCode\enterpriseMyHadoop\target\enterpriseMyHadoop-1.0-SNAPSHOT.jar with D:\Code\MyEclipseJavaCode\enterpriseMyHadoop\target\enterpriseMyHadoop-1.0-SNAPSHOT-shaded.jar
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 01:17 min
[INFO] Finished at: 2017-03-10T16:55:33+08:00
[INFO] Final Memory: 40M/224M
[INFO] ------------------------------------------------------------------------

D:\Code\MyEclipseJavaCode\enterpriseMyHadoop>
```
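The overlapping-classes warnings in the log above mean that the same class name appears in more than one dependency jar, so only one copy ends up in the uber jar. The idea behind such a check can be sketched in plain Java as follows. This is not the shade plugin's implementation; OverlapSketch and findOverlaps are hypothetical names, and the jar contents in main are a hand-written subset chosen to resemble the log, not data read from real jars.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration: finds class names that are defined by two or more jars.
class OverlapSketch {

    // Input: jar name -> class names it contains.
    // Output: class name -> jars defining it, restricted to classes found in 2+ jars.
    static Map<String, List<String>> findOverlaps(Map<String, List<String>> jarContents) {
        Map<String, List<String>> owners = new TreeMap<>();
        for (Map.Entry<String, List<String>> jar : jarContents.entrySet()) {
            for (String className : jar.getValue()) {
                owners.computeIfAbsent(className, k -> new ArrayList<>()).add(jar.getKey());
            }
        }
        // Keep only the classes that more than one jar defines.
        owners.values().removeIf(jars -> jars.size() < 2);
        return owners;
    }

    public static void main(String[] args) {
        // Hand-written sample contents (assumption for the example only).
        Map<String, List<String>> jars = new LinkedHashMap<>();
        jars.put("commons-beanutils-1.7.0.jar", Arrays.asList(
                "org.apache.commons.beanutils.Converter",
                "org.apache.commons.collections.FastHashMap"));
        jars.put("commons-collections-3.2.1.jar", Arrays.asList(
                "org.apache.commons.collections.FastHashMap",
                "org.apache.commons.collections.ArrayStack"));
        findOverlaps(jars).forEach((cls, owners) ->
                System.out.println(cls + " is defined by " + owners));
    }
}
```

As the warning text itself says, such overlaps are usually harmless; when they are not, mvn dependency:tree helps decide which artifact to exclude.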

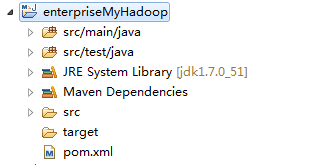

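Before this, the target directory was empty.

After running the packaging command mvn package, refresh the target directory in MyEclipse and the built jar appears there.

Success!

Finally, upload the jar to the Hadoop or Spark cluster; I won't go into detail here.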