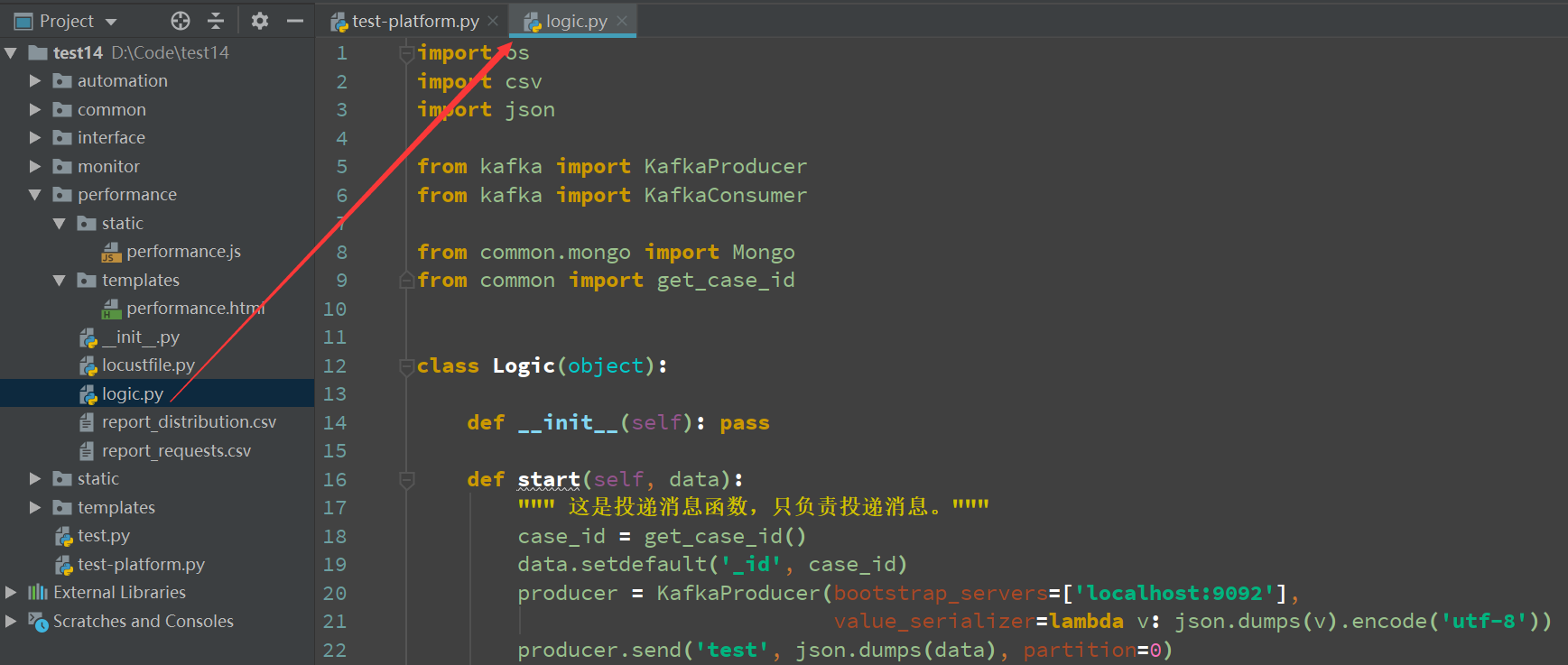

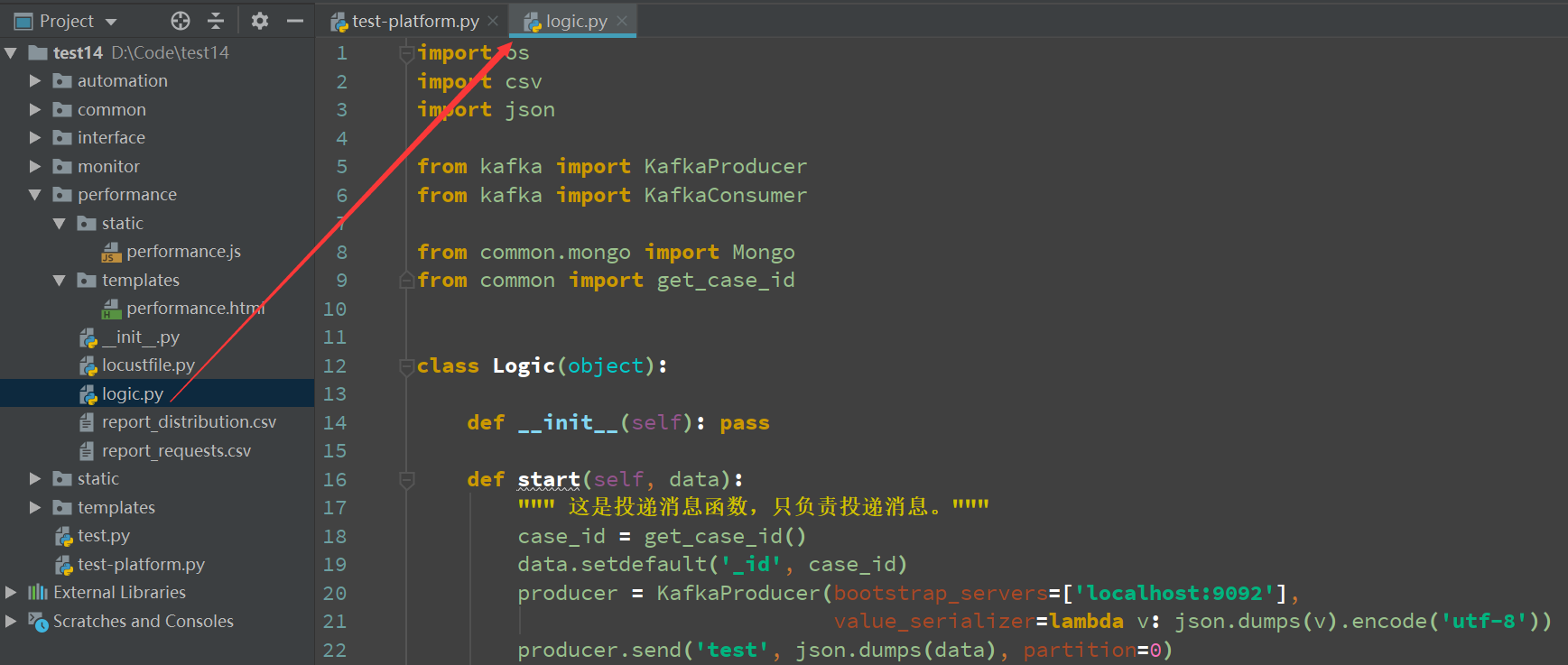

import csv
import json
import subprocess

from kafka import KafkaConsumer, KafkaProducer

from common import get_case_id
from common.mongo import Mongo


class Logic(object):

    def __init__(self):
        pass

    def start(self, data):
        """Publish a test task to Kafka. This method only enqueues the message."""
        case_id = get_case_id()
        data.setdefault('_id', case_id)
        producer = KafkaProducer(
            bootstrap_servers=['localhost:9092'],
            value_serializer=lambda v: json.dumps(v).encode('utf-8'))
        # The serializer already JSON-encodes the value, so pass the dict itself
        # (calling json.dumps here as well would encode the payload twice).
        producer.send('test', data, partition=0)
        producer.flush()
        producer.close()
        return case_id

    def service(self):
        """Consume tasks and run them.

        This worker should be deployed on its own server, dedicated to
        running API, UI, and performance tests.
        """
        print("Entering consume mode")
        consumer = KafkaConsumer('test', bootstrap_servers=['localhost:9092'])
        print("Kafka consumer created")
        for msg in consumer:
            print("Processing next message")
            recv = "%s:%d:%d: key=%s value=%s" % (
                msg.topic, msg.partition, msg.offset, msg.key, msg.value)
            print(recv)

            # Parse the message bytes into a Python dict
            data = json.loads(msg.value.decode('utf-8'))

            # Fields sent by the front end
            code = data.get('code')          # locustfile source code
            host = data.get('host')          # target host
            user = data.get('user', 10)      # number of simulated users
            rate = data.get('rate', 10)      # hatch rate: users spawned per second (not QPS)
            run_time = data.get('time', 60)  # test duration

            # Write the code to locustfile.py
            with open('locustfile.py', 'w', encoding='utf-8') as f:
                f.write(code)

            # Build and run the locust command in no-web mode, writing CSV reports.
            # An argument list avoids passing front-end input through a shell.
            cmd = ['locust', '-f', 'locustfile.py', '--host={0}'.format(host),
                   '--no-web', '-c', str(user), '-r', str(rate),
                   '-t', str(run_time), '--csv=report']
            subprocess.run(cmd)

            # Parse the test results in report_requests.csv
            report_id = get_case_id()
            result = {
                '_id': report_id,
                'requests': []
            }
            with open('report_requests.csv', newline='') as f:
                # Locust writes standard comma-separated CSV, so use the default dialect
                for line in csv.reader(f):
                    result['requests'].append(line)

            # Write the results to MongoDB
            Mongo().insert("2019", "performance", result)

if __name__ == '__main__':
    logic = Logic()
    logic.service()
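
The message that `start` publishes and `service` consumes is a JSON object with the keys `code`, `host`, `user`, `rate`, and `time`. As a minimal sketch of that round trip without a running broker, the snippet below encodes a task once and decodes it once, filling in the same defaults the consumer uses. The helper names `encode_task` and `decode_task`, the `DEFAULTS` dict, and the example payload values are assumptions for illustration, not part of the original code.

```python
import json

# Hypothetical helpers: they mirror the producer's value_serializer and the
# consumer's parsing step, so the wire format can be checked without Kafka.
DEFAULTS = {"user": 10, "rate": 10, "time": 60}


def encode_task(task):
    """Serialize a task dict to the UTF-8 JSON bytes sent to Kafka."""
    return json.dumps(task).encode("utf-8")


def decode_task(raw):
    """Parse message bytes back into a dict and fill in missing defaults."""
    task = json.loads(raw.decode("utf-8"))
    return {**DEFAULTS, **task}


payload = {
    "_id": "example-case-id",              # placeholder; the real id comes from get_case_id()
    "code": "# locustfile source goes here\n",
    "host": "http://localhost:8080",       # assumed target host
    "user": 50,
}

task = decode_task(encode_task(payload))
print(task["user"], task["rate"], task["time"])
```

Encoding exactly once on the producer side and decoding exactly once on the consumer side keeps the two ends symmetric, which makes it easier to replace either one later.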