I. Using logging in Scrapy:

1. Settings in settings.py:

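    # Only records at WARNING level and above are shown; lower levels are dropped.
    # There are five standard levels: DEBUG, INFO, WARNING, ERROR, CRITICAL.
    LOG_LEVEL = "WARNING"

    # Save the log for later inspection. "./log.log" is the path of the log file
    # (a file, not a directory). With LOG_FILE set, Scrapy writes the log to this
    # file instead of the terminal.
    LOG_FILE = "./log.log"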
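The filtering effect of a WARNING threshold can be reproduced with the standard library alone. The sketch below is not Scrapy code: the logger name `demo` and the in-memory stream are assumptions made for illustration, and it only shows that a WARNING threshold drops DEBUG and INFO records.

```python
import io
import logging

# In-memory stream standing in for the terminal (an assumption for this demo).
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s:%(message)s"))

demo_logger = logging.getLogger("demo")  # hypothetical logger name
demo_logger.setLevel(logging.WARNING)    # same threshold as LOG_LEVEL = "WARNING"
demo_logger.addHandler(handler)
demo_logger.propagate = False            # keep the demo isolated from the root logger

# The five standard levels, lowest to highest.
demo_logger.debug("debug message")
demo_logger.info("info message")
demo_logger.warning("warning message")
demo_logger.error("error message")
demo_logger.critical("critical message")

shown = stream.getvalue().splitlines()
print(shown)
```

Only the last three calls reach the stream; the DEBUG and INFO records are discarded before any handler sees them.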

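2. In spider.py or pipelines.py, import logging, then either call the module-level functions directly or create a logger with logging.getLogger(__name__) and use it to output messages: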

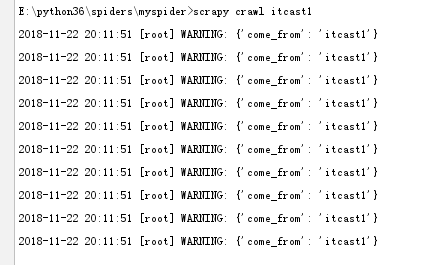

    # -*- coding: utf-8 -*-
    import scrapy
    import logging


    class Itcast1Spider(scrapy.Spider):
        name = 'itcast1'
        allowed_domains = ['itcast.cn']
        start_urls = ['http://itcast.cn/']

        def parse(self, response):
            for i in range(10):
                item = {}
                item["come_from"] = "itcast1"
                logging.warning(item)

    # -*- coding: utf-8 -*-
    import scrapy
    import logging

    logger = logging.getLogger(__name__)


    class Itcast2Spider(scrapy.Spider):
        name = 'itcast2'
        allowed_domains = ['itcast.cn']
        start_urls = ['http://itcast.cn/']

        def parse(self, response):
            for i in range(10):
                item = {}
                item["come_from"] = "itcast1"
                logger.warning(item)
                yield item
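The practical difference between the two spiders is the logger name attached to each record. The following sketch uses only the standard library; `ListHandler` and the module path `myspider.spiders.itcast2` are hypothetical names introduced here, the latter standing in for what `__name__` would be inside a spider module.

```python
import logging


class ListHandler(logging.Handler):
    """Collects formatted records in a list so they can be inspected."""

    def __init__(self):
        super().__init__()
        self.lines = []

    def emit(self, record):
        self.lines.append(self.format(record))


handler = ListHandler()
handler.setFormatter(logging.Formatter("[%(name)s] %(levelname)s: %(message)s"))

root = logging.getLogger()
root.addHandler(handler)
root.setLevel(logging.WARNING)

# Module-level call, as in the first spider: the record is attributed to "root".
logging.warning({"come_from": "itcast1"})

# Named logger, as in the second spider: the record carries the module path.
named = logging.getLogger("myspider.spiders.itcast2")  # hypothetical path
named.warning({"come_from": "itcast1"})

root.removeHandler(handler)
print(handler.lines)
```

With a named logger, each line in the log shows which module produced it, which makes a shared log file much easier to trace.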

    # pipelines.py
    import logging

    logger = logging.getLogger(__name__)


    class MyspiderPipeline(object):
        def process_item(self, item, spider):
            logger.warning("*" * 10)
            # item["hello"] = "world"
            # print(item)
            # Return the item so that it is passed on to the next pipeline.
            return item

II. Using logging in an ordinary project:

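    import logging

    # Configure the log output style and format (formats used in other projects
    # can serve as a reference). basicConfig returns None, so its result is not
    # assigned to a variable.
    logging.basicConfig(...)

    # Create a logger for this module.
    logger = logging.getLogger(__name__)

    # Any .py file can now log through a logger obtained this way.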
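As a concrete illustration of the configuration step, here is a minimal sketch. The format string, date format, INFO threshold, and the logger name `myapp.worker` are all assumptions chosen for the example, not values from the original; the in-memory buffer simply stands in for a terminal or log file.

```python
import io
import logging

# Illustrative format: timestamp, logger name, level, message.
LOG_FORMAT = "%(asctime)s [%(name)s] %(levelname)s: %(message)s"

buffer = io.StringIO()  # stands in for a terminal or a log file in this demo

# basicConfig configures the root logger and returns None.
logging.basicConfig(
    level=logging.INFO,
    format=LOG_FORMAT,
    datefmt="%Y-%m-%d %H:%M:%S",
    stream=buffer,
    force=True,  # Python 3.8+: replace handlers already attached to the root logger
)

# Hypothetical module name; in a real file this would normally be __name__.
logger = logging.getLogger("myapp.worker")
logger.debug("not shown: below the INFO threshold")
logger.info("job started")

output = buffer.getvalue()
print(output)
```

Because basicConfig sets up the root logger once, every module that obtains its own logger through logging.getLogger inherits the same handler and format.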