server1:

yum install pssh-2.3.1-2.1.x86_64.rpm crmsh-1.2.6-0.rc2.2.1.x86_64.rpm -y

yum install -y pacemaker corosync

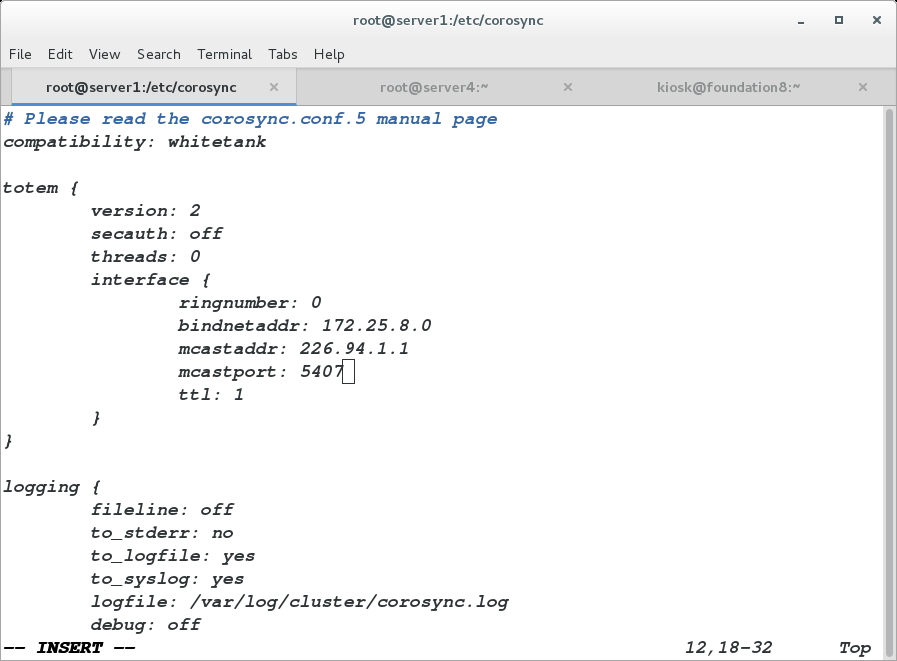

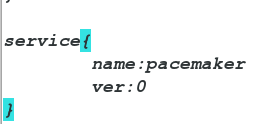

cd /etc/corosync/

cp corosync.conf.example corosync.conf

vim corosync.conf
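
The text does not show the edits made to corosync.conf. The cluster later reports `cluster-infrastructure="classic openais (with plugin)"`, so one possible corosync 1.x configuration loads Pacemaker as a plugin through a `service` block. The sketch below writes such a file. The helper name `write_corosync_conf`, the bind network 172.25.8.0 and the multicast address/port 226.94.1.1:5405 are assumptions; adjust them to your lab.

```shell
#!/bin/bash
# Sketch only: write a corosync 1.x config that starts Pacemaker as a plugin.
# ASSUMPTIONS: bindnetaddr 172.25.8.0 (this lab's subnet) and the multicast
# address/port 226.94.1.1:5405; change them to match your network.

# write_corosync_conf <output-file> [bindnetaddr]  (hypothetical helper)
write_corosync_conf() {
    local out=${1:?usage: write_corosync_conf <file> [bindnetaddr]}
    local bindnet=${2:-172.25.8.0}
    cat > "$out" <<EOF
compatibility: whitetank

totem {
    version: 2
    secauth: off
    threads: 0
    interface {
        ringnumber: 0
        bindnetaddr: $bindnet
        mcastaddr: 226.94.1.1
        mcastport: 5405
        ttl: 1
    }
}

logging {
    to_logfile: yes
    logfile: /var/log/cluster/corosync.log
    to_syslog: yes
    timestamp: on
}

# ver: 0 makes corosync start Pacemaker itself through the plugin
service {
    name: pacemaker
    ver: 0
}
EOF
}
```

For example, run `write_corosync_conf /etc/corosync/corosync.conf` on server1. Copy the same file to server4 before starting corosync there, for example with `scp /etc/corosync/corosync.conf server4:/etc/corosync/`.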

/etc/init.d/corosync start

server4:

yum install pssh-2.3.1-2.1.x86_64.rpm crmsh-1.2.6-0.rc2.2.1.x86_64.rpm -y

yum install -y pacemaker corosync

/etc/init.d/corosync start
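
Before you configure any resources, check that both nodes have joined the cluster. `crm_mon -1` prints the cluster status once and exits. Its node summary should contain a line such as `Online: [ server1 server4 ]`. The sketch below parses that line; it assumes the line has exactly that shape. The function names are hypothetical.

```shell
#!/bin/bash
# nodes_online: read `crm_mon -1` output on stdin and print the node names
# from its "Online: [ ... ]" line (hypothetical helper)
nodes_online() {
    sed -n 's/^Online: \[ \(.*\) ]$/\1/p'
}

# all_online <node>...: read `crm_mon -1` output on stdin and succeed only
# if every given node appears on the Online line
all_online() {
    local online node
    online=" $(nodes_online) "
    for node in "$@"; do
        [[ $online == *" $node "* ]] || return 1
    done
}
```

Usage: `crm_mon -1 | all_online server1 server4 && echo "both nodes are online"`.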

server1:

[root@server1 ~]# crm

crm(live)# configure

crm(live)configure# show

node server1

node server4

property $id="cib-bootstrap-options"

dc-version="1.1.10-14.el6-368c726"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

crm(live)configure# property stonith-enabled=false

crm(live)configure# commit

crm(live)configure# primitive vip ocf:heartbeat:IPaddr2 params ip=172.25.8.100 cidr_netmask=32 op monitor interval=1min ##add the VIP and a monitor operation for it

crm(live)configure# commit

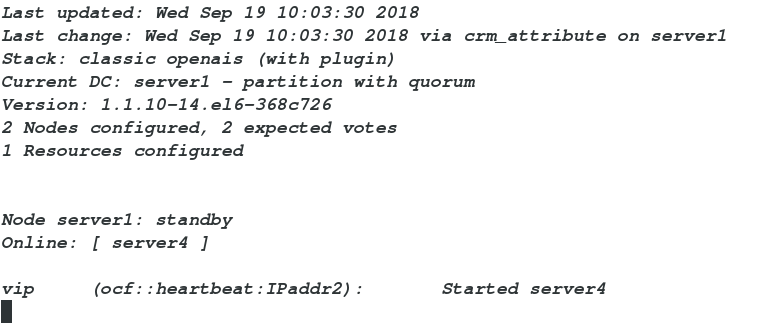
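
IPaddr2 adds the VIP to a network interface with the `ip` command. On the node that runs `vip`, the address should therefore appear in `ip -o addr show`. The sketch below is a quick check of that output. The helper name is hypothetical.

```shell
#!/bin/bash
# has_ip <address>: read `ip -o addr show` output on stdin and succeed if
# <address> is configured (hypothetical helper; -F makes the dots literal)
has_ip() {
    local addr=${1:?usage: has_ip <address>}
    grep -qF "inet $addr/"
}
```

Usage: `ip -o addr show | has_ip 172.25.8.100 && echo "VIP is on $(hostname)"`. Run it on each node; it should succeed on exactly one of them.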

server4: monitor the cluster

crm_mon

server4:

crm(live)configure# cd

crm(live)# node

crm(live)node# show

server1: normal

server4: normal

crm(live)node# standby ##put this node in standby (it stops hosting resources)

crm(live)node# online ##bring this node back online

crm(live)node# bye
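
You can also run the same standby/online test from the shell. `crm node standby server1` and `crm node online server1` are the one-shot forms of the commands above. `crm_resource --resource vip --locate` reports where the VIP runs, in a line of the form `resource vip is running on: server1`. The sketch below extracts the node name from that line; the helper name is hypothetical.

```shell
#!/bin/bash
# running_on: read `crm_resource --resource <rsc> --locate` output on stdin
# and print the node name (hypothetical helper); anything after the node
# name, such as a trailing space or role, is ignored
running_on() {
    sed -n 's/^resource .* is running on: \([^ ]*\).*$/\1/p'
}
```

For example, run `crm_resource --resource vip --locate | running_on` before and after `crm node standby server1`. The printed node should change from server1 to server4.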

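At this point, the node that comes back online does not take the resources back from the node currently running them.

However, this is a two-node cluster. When the cluster service on one node stops, the surviving node holds only one of the two expected votes and loses quorum.

By default it then stops its resources, so the service goes down. To avoid this, change the policy: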

server1:

crm

crm(live)# configure

crm(live)configure# property no-quorum-policy=ignore ##ignore the quorum (node count) check

crm(live)configure# commit

Now, after one node is stopped, the service keeps running on the remaining node.
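
The service stopped earlier because of simple arithmetic: a partition has quorum only when it holds more than half of the expected votes. With `expected-quorum-votes="2"`, a lone survivor holds 1 vote, and 1 is not more than 2/2. The sketch below encodes that majority rule. The helper name is hypothetical.

```shell
#!/bin/bash
# has_quorum <votes> <expected>: majority rule, votes > expected/2,
# written as 2*votes > expected to stay in integer arithmetic
has_quorum() {
    local votes=${1:?votes} expected=${2:?expected}
    (( 2 * votes > expected ))
}

for n in 2 3 5; do
    # smallest vote count that still has quorum: floor(n/2) + 1
    echo "expected=$n needs $(( n / 2 + 1 )) votes"
done
```

In a two-node cluster, one node alone can never have quorum, which is why `no-quorum-policy=ignore` is set here. Ignoring quorum means both halves of a split cluster may try to run the resources. Fencing, configured below with fence_xvm, is what keeps such a cluster safe.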

Start the fence_virtd service on the physical host.
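
fence_virtd on the host and the fence_xvm agent on the cluster nodes authenticate with a shared key file, by default /etc/cluster/fence_xvm.key. A common way to create a random key is sketched below. The helper name is hypothetical, and the 128-byte size is an assumed, commonly used value.

```shell
#!/bin/bash
# make_fence_key <path>: write 128 random bytes to <path> and restrict its
# permissions (hypothetical helper; the usual path is /etc/cluster/fence_xvm.key)
make_fence_key() {
    local key=${1:?usage: make_fence_key <path>}
    mkdir -p "$(dirname "$key")"
    dd if=/dev/urandom of="$key" bs=128 count=1 2>/dev/null
    chmod 600 "$key"
}
```

Follow these steps:

1. On the physical host, run `make_fence_key /etc/cluster/fence_xvm.key`.
2. Copy the file to /etc/cluster/ on server1 and server4, for example with `scp`.
3. Restart fence_virtd.
4. Running `fence_xvm -o list` on a node should then list the virtual machines.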

server1:

[root@server1 corosync]# crm

crm(live)# configure

crm(live)configure# primitive web <Tab> ##pressing Tab lists the available resource classes

lsb: ocf: service: stonith:

crm(live)configure# primitive web lsb:httpd op monitor interval=1min

crm(live)configure# commit

crm(live)configure# group webgroup vip web ##create a resource group

crm(live)configure# commit

crm(live)configure# bye
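
This sketch assumes your crmsh version accepts commands on standard input (batch mode). In that case, the interactive session above can be replayed from a script. The hypothetical function below only prints the same commands typed above, so you can review them before piping them into `crm`.

```shell
#!/bin/bash
# web_group_cmds [monitor-interval]: print the crm commands that add the
# httpd resource and group it with the VIP (hypothetical helper)
web_group_cmds() {
    local interval=${1:-1min}
    cat <<EOF
configure
primitive web lsb:httpd op monitor interval=$interval
group webgroup vip web
commit
EOF
}
```

Usage: `web_group_cmds | crm`. The member order in `group` matters. Members run on the same node and start in the listed order (vip, then web), and they stop in reverse order.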

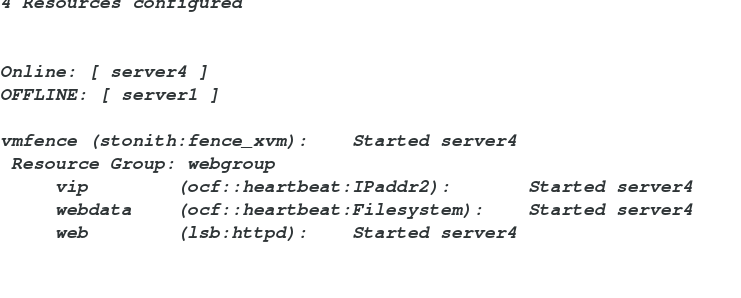

server4:

crm_mon

server1:

[root@server1 ~]# crm

crm(live)# configure

crm(live)configure# primitive vmfence stonith:fence_xvm params pcmk_host_map="server1:vm1;server4:vm4" op monitor interval=1min ##add the fence device (first make sure the fence service on the physical host is running)

crm(live)configure# commit

crm(live)configure# property stonith-enabled=true

crm(live)configure# commit

crm(live)configure# bye
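
`pcmk_host_map` tells Pacemaker which libvirt domain (vm1, vm4) belongs to which cluster node (server1, server4). It is written as `node:domain` pairs separated by `;`. If the node list grows, a hypothetical helper can assemble the string from `node=domain` arguments:

```shell
#!/bin/bash
# host_map node=domain...: build a pcmk_host_map value such as
# "server1:vm1;server4:vm4" (hypothetical helper)
host_map() {
    local pair map=""
    for pair in "$@"; do
        # add ";" only between pairs, then "node:domain"
        map+="${map:+;}${pair%%=*}:${pair#*=}"
    done
    printf '%s\n' "$map"
}
```

`host_map server1=vm1 server4=vm4` prints the value used in the `primitive vmfence` command above.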

server4:

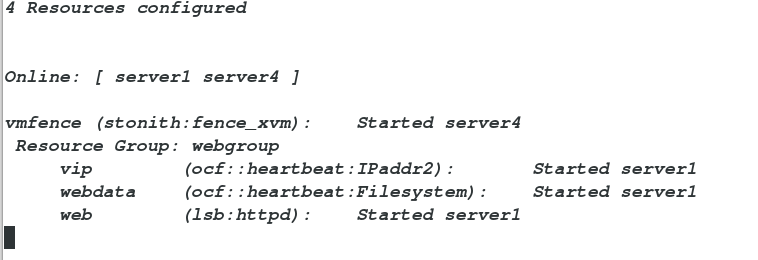

crm_mon

(screenshot)

server2:

/etc/init.d/tgtd start
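
The target definition on server2 is not shown. With scsi-target-utils, targets are usually declared in /etc/tgt/targets.conf before tgtd is started. The sketch below writes one such declaration. Everything specific in it is an assumption: the IQN, the backing device /dev/vdb, the initiator addresses 172.25.8.1 and 172.25.8.4, and the helper name.

```shell
#!/bin/bash
# write_target_conf <file> <iqn> <device> [initiator-ip...]
# Writes (overwrites) <file> with one tgt target stanza (hypothetical helper).
write_target_conf() {
    local out=${1:?file} iqn=${2:?iqn} dev=${3:?device} ip
    shift 3
    {
        echo "<target $iqn>"
        echo "    backing-store $dev"
        for ip in "$@"; do
            # only these initiators may log in to the target
            echo "    initiator-address $ip"
        done
        echo "</target>"
    } > "$out"
}
```

Example: `write_target_conf /etc/tgt/targets.conf iqn.2018-01.com.example:server.target1 /dev/vdb 172.25.8.1 172.25.8.4`. Back up the original file first, because the helper overwrites it. After `/etc/init.d/tgtd start`, `tgt-admin -s` shows the exported LUN.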

server1:

iscsiadm -m discovery -t st -p 172.25.8.2

iscsiadm -m node -l

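mkfs.ext4 /dev/sda1 ##assumes the iSCSI disk /dev/sda has already been partitioned (e.g. with fdisk) so that /dev/sda1 exists

Test that the shared disk mounts correctly: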

server1:

mount /dev/sda1 /var/www/html/

[root@server1 ~]# cd /var/www/html/

[root@server1 html]# vim index.html

cd

umount /var/www/html/

server4:

iscsiadm -m discovery -t st -p 172.25.8.2

iscsiadm -m node -l

##do not run mkfs.ext4 /dev/sda1 here: the filesystem was already created on server1, and formatting it again would erase the file written there

mount /dev/sda1 /mnt

ls /mnt ##check that the file written on server1 is here; if it is, the shared storage works

umount /mnt

server1:

[root@server1 ~]# crm

crm(live)# resource

crm(live)resource# show

Resource Group: webgroup

vip (ocf::heartbeat:IPaddr2): Started

web (lsb:httpd): Started

vmfence (stonith:fence_xvm): Started

crm(live)resource# stop webgroup

crm(live)resource# show

Resource Group: webgroup

vip (ocf::heartbeat:IPaddr2): Stopped

web (lsb:httpd): Stopped

vmfence (stonith:fence_xvm): Started

crm(live)resource# cd

crm(live)# configure

crm(live)configure# primitive webdata ocf:heartbeat:Filesystem params device=/dev/sda1 directory=/var/www/html fstype=ext4 op monitor interval=1min

crm(live)configure# edit

crm(live)configure# commit

crm(live)configure# delete webgroup

crm(live)configure# commit

crm(live)configure# group webgroup vip webdata web

crm(live)configure# commit
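
Once the new group starts, the Filesystem resource mounts /dev/sda1 on /var/www/html on whichever node runs webgroup. ext4 is not a cluster filesystem, so the disk must never be mounted on both nodes at once. That is why the mount is managed as a resource inside the group. The hypothetical check below reads a mount table such as /proc/mounts.

```shell
#!/bin/bash
# is_mounted <device> <dir>: read a mount table (e.g. /proc/mounts) on stdin
# and succeed if <device> is mounted on <dir> (hypothetical helper)
is_mounted() {
    local dev=${1:?device} dir=${2:?dir}
    awk -v d="$dev" -v m="$dir" '$1 == d && $2 == m { found = 1 } END { exit !found }'
}
```

Usage: `is_mounted /dev/sda1 /var/www/html < /proc/mounts && echo "webdata is active on $(hostname)"`. It should succeed only on the node running webgroup.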

server4:

crm_mon

Test:

/etc/init.d/corosync stop

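After the cluster service on server1 is stopped, server4 automatically takes over the services.

However, when server1 is started again, the resources move back to server1: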

/etc/init.d/corosync start
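
Failover takes a few seconds, so a scripted version of this test should poll rather than check once. The sketch below uses `crm_resource --resource vip --locate`, whose output line has the form `resource vip is running on: server4`. The names `locate_vip` and `wait_for_node` are hypothetical. `locate_vip` is kept as a separate function so it can be swapped out when trying the logic without a cluster.

```shell
#!/bin/bash
# locate_vip: ask Pacemaker where the vip resource is running
locate_vip() {
    crm_resource --resource vip --locate 2>/dev/null
}

# wait_for_node <node> [timeout-seconds]: poll once per second until vip runs
# on <node>; fail after the timeout (hypothetical helper). grep -w keeps
# "server1" from matching "server10".
wait_for_node() {
    local node=${1:?node} timeout=${2:-60} i
    for ((i = 0; i < timeout; i++)); do
        if locate_vip | grep -qw "is running on: $node"; then
            return 0
        fi
        sleep 1
    done
    return 1
}
```

Example: after `/etc/init.d/corosync stop` on server1, run `wait_for_node server4 60 && echo "server4 took over"` on server4.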

To keep the resources from moving back like this, add one more policy:

server1:

[root@server1 ~]# crm

crm(live)# configure

crm(live)configure# rsc_defaults resource-stickiness=100

crm(live)configure# commit

crm(live)configure# bye

Now stop the service on server1 again and start it once more. The resources no longer float back to server1; they stay on server4.
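
The configuration built step by step above can also be kept as a single crm-syntax file, which is handy for rebuilding the lab. The sketch below writes that file. The helper name and the file path you pass it are hypothetical; every object and value in the file comes from the commands above. To apply it, run `load update <file>` inside `crm configure` to merge the file into the current configuration, then `commit`.

```shell
#!/bin/bash
# write_cluster_crm <file>: write the final crm configuration from this
# walkthrough (hypothetical helper); the quoted 'EOF' keeps the backslash
# line continuations literal
write_cluster_crm() {
    local out=${1:?usage: write_cluster_crm <file>}
    cat > "$out" <<'EOF'
primitive vip ocf:heartbeat:IPaddr2 \
    params ip=172.25.8.100 cidr_netmask=32 \
    op monitor interval=1min
primitive webdata ocf:heartbeat:Filesystem \
    params device=/dev/sda1 directory=/var/www/html fstype=ext4 \
    op monitor interval=1min
primitive web lsb:httpd \
    op monitor interval=1min
primitive vmfence stonith:fence_xvm \
    params pcmk_host_map="server1:vm1;server4:vm4" \
    op monitor interval=1min
group webgroup vip webdata web
property stonith-enabled=true no-quorum-policy=ignore
rsc_defaults resource-stickiness=100
EOF
}
```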