版权声明:本文为博主原创文章,欢迎转载,并请注明出处。联系方式:460356155@qq.com

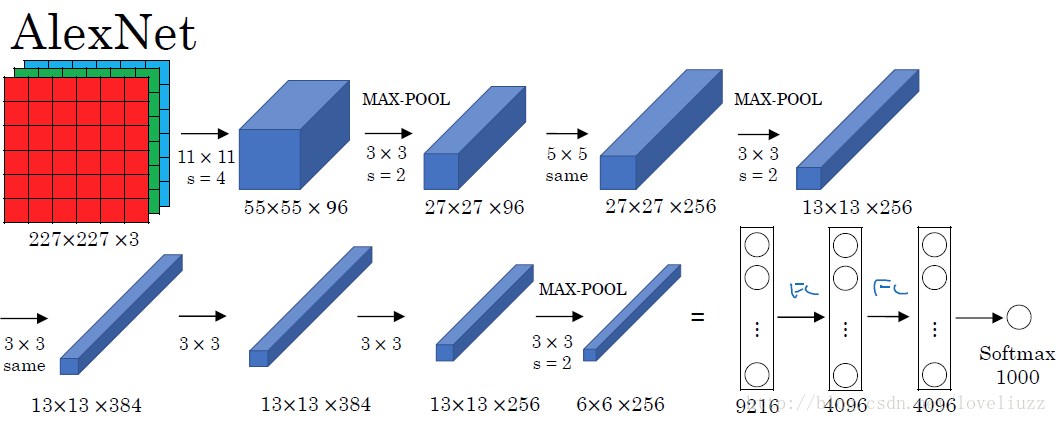

AlexNet在2012年ImageNet图像分类任务竞赛中获得冠军。网络结构如下图所示:

对CIFAR10,图片是32*32,尺寸远小于227*227,因此对网络结构和参数需做微调:

卷积层1:核大小7*7,步长2,填充2

最后一个max-pool层删除

网络定义代码如下:

1 class AlexNet(nn.Module): 2 def __init__(self): 3 super(AlexNet, self).__init__() 4 5 self.cnn = nn.Sequential( 6 # 卷积层1,3通道输入,96个卷积核,核大小7*7,步长2,填充2 7 # 经过该层图像大小变为32-7+2*2 / 2 +1,15*15 8 # 经3*3最大池化,2步长,图像变为15-3 / 2 + 1, 7*7 9 nn.Conv2d(3, 96, 7, 2, 2), 10 nn.ReLU(inplace=True), 11 nn.MaxPool2d(3, 2, 0), 12 13 # 卷积层2,96输入通道,256个卷积核,核大小5*5,步长1,填充2 14 # 经过该层图像变为7-5+2*2 / 1 + 1,7*7 15 # 经3*3最大池化,2步长,图像变为7-3 / 2 + 1, 3*3 16 nn.Conv2d(96, 256, 5, 1, 2), 17 nn.ReLU(inplace=True), 18 nn.MaxPool2d(3, 2, 0), 19 20 # 卷积层3,256输入通道,384个卷积核,核大小3*3,步长1,填充1 21 # 经过该层图像变为3-3+2*1 / 1 + 1,3*3 22 nn.Conv2d(256, 384, 3, 1, 1), 23 nn.ReLU(inplace=True), 24 25 # 卷积层3,384输入通道,384个卷积核,核大小3*3,步长1,填充1 26 # 经过该层图像变为3-3+2*1 / 1 + 1,3*3 27 nn.Conv2d(384, 384, 3, 1, 1), 28 nn.ReLU(inplace=True), 29 30 # 卷积层3,384输入通道,256个卷积核,核大小3*3,步长1,填充1 31 # 经过该层图像变为3-3+2*1 / 1 + 1,3*3 32 nn.Conv2d(384, 256, 3, 1, 1), 33 nn.ReLU(inplace=True) 34 ) 35 36 self.fc = nn.Sequential( 37 # 256个feature,每个feature 3*3 38 nn.Linear(256*3*3, 1024), 39 nn.ReLU(), 40 nn.Linear(1024, 512), 41 nn.ReLU(), 42 nn.Linear(512, 10) 43 ) 44 45 def forward(self, x): 46 x = self.cnn(x) 47 48 # x.size()[0]: batch size 49 x = x.view(x.size()[0], -1) 50 x = self.fc(x) 51 52 return x

其余代码同深度学习识别CIFAR10:pytorch训练LeNet、AlexNet、VGG19实现及比较(一)。运行结果如下:

Files already downloaded and verified

AlexNet(

(cnn): Sequential(

(0): Conv2d(3, 96, kernel_size=(7, 7), stride=(2, 2), padding=(2, 2))

(1): ReLU(inplace)

(2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)

(3): Conv2d(96, 256, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(4): ReLU(inplace)

(5): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)

(6): Conv2d(256, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(7): ReLU(inplace)

(8): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(9): ReLU(inplace)

(10): Conv2d(384, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace)

)

(fc): Sequential(

(0): Linear(in_features=2304, out_features=1024, bias=True)

(1): ReLU()

(2): Linear(in_features=1024, out_features=512, bias=True)

(3): ReLU()

(4): Linear(in_features=512, out_features=10, bias=True)

)

)

Train Epoch: 1 [6400/50000 (13%)] Loss: 2.303003 Acc: 10.000000

Train Epoch: 1 [12800/50000 (26%)] Loss: 2.302847 Acc: 9.000000

Train Epoch: 1 [19200/50000 (38%)] Loss: 2.302748 Acc: 9.000000

Train Epoch: 1 [25600/50000 (51%)] Loss: 2.302349 Acc: 10.000000

Train Epoch: 1 [32000/50000 (64%)] Loss: 2.301069 Acc: 10.000000

Train Epoch: 1 [38400/50000 (77%)] Loss: 2.275476 Acc: 12.000000

Train Epoch: 1 [44800/50000 (90%)] Loss: 2.231073 Acc: 13.000000

one epoch spend: 0:00:06.866484

EPOCH:1, ACC:25.06

Train Epoch: 2 [6400/50000 (13%)] Loss: 1.848806 Acc: 25.000000

Train Epoch: 2 [12800/50000 (26%)] Loss: 1.808251 Acc: 27.000000

Train Epoch: 2 [19200/50000 (38%)] Loss: 1.774210 Acc: 29.000000

Train Epoch: 2 [25600/50000 (51%)] Loss: 1.744809 Acc: 31.000000

Train Epoch: 2 [32000/50000 (64%)] Loss: 1.714098 Acc: 32.000000

Train Epoch: 2 [38400/50000 (77%)] Loss: 1.684451 Acc: 34.000000

Train Epoch: 2 [44800/50000 (90%)] Loss: 1.654931 Acc: 35.000000

one epoch spend: 0:00:06.941943

EPOCH:2, ACC:46.64

Train Epoch: 3 [6400/50000 (13%)] Loss: 1.418345 Acc: 45.000000

Train Epoch: 3 [12800/50000 (26%)] Loss: 1.368839 Acc: 47.000000

Train Epoch: 3 [19200/50000 (38%)] Loss: 1.349170 Acc: 48.000000

Train Epoch: 3 [25600/50000 (51%)] Loss: 1.326504 Acc: 49.000000

Train Epoch: 3 [32000/50000 (64%)] Loss: 1.316630 Acc: 50.000000

Train Epoch: 3 [38400/50000 (77%)] Loss: 1.300982 Acc: 51.000000

Train Epoch: 3 [44800/50000 (90%)] Loss: 1.288368 Acc: 52.000000

one epoch spend: 0:00:07.031582

EPOCH:3, ACC:56.72

Train Epoch: 4 [6400/50000 (13%)] Loss: 1.078210 Acc: 60.000000

Train Epoch: 4 [12800/50000 (26%)] Loss: 1.083730 Acc: 60.000000

Train Epoch: 4 [19200/50000 (38%)] Loss: 1.085976 Acc: 60.000000

Train Epoch: 4 [25600/50000 (51%)] Loss: 1.080863 Acc: 61.000000

Train Epoch: 4 [32000/50000 (64%)] Loss: 1.076230 Acc: 61.000000

Train Epoch: 4 [38400/50000 (77%)] Loss: 1.067998 Acc: 61.000000

Train Epoch: 4 [44800/50000 (90%)] Loss: 1.058093 Acc: 62.000000

one epoch spend: 0:00:06.908232

EPOCH:4, ACC:65.4

Train Epoch: 5 [6400/50000 (13%)] Loss: 0.911678 Acc: 67.000000

Train Epoch: 5 [12800/50000 (26%)] Loss: 0.904799 Acc: 67.000000

Train Epoch: 5 [19200/50000 (38%)] Loss: 0.914306 Acc: 67.000000

Train Epoch: 5 [25600/50000 (51%)] Loss: 0.906587 Acc: 67.000000

Train Epoch: 5 [32000/50000 (64%)] Loss: 0.902747 Acc: 67.000000

Train Epoch: 5 [38400/50000 (77%)] Loss: 0.896548 Acc: 68.000000

Train Epoch: 5 [44800/50000 (90%)] Loss: 0.895071 Acc: 68.000000

one epoch spend: 0:00:06.868743

EPOCH:5, ACC:66.47

Train Epoch: 6 [6400/50000 (13%)] Loss: 0.769778 Acc: 72.000000

Train Epoch: 6 [12800/50000 (26%)] Loss: 0.770126 Acc: 73.000000

Train Epoch: 6 [19200/50000 (38%)] Loss: 0.775755 Acc: 72.000000

Train Epoch: 6 [25600/50000 (51%)] Loss: 0.775044 Acc: 72.000000

Train Epoch: 6 [32000/50000 (64%)] Loss: 0.772686 Acc: 72.000000

Train Epoch: 6 [38400/50000 (77%)] Loss: 0.765352 Acc: 73.000000

Train Epoch: 6 [44800/50000 (90%)] Loss: 0.768808 Acc: 73.000000

one epoch spend: 0:00:06.868047

EPOCH:6, ACC:68.26

Train Epoch: 7 [6400/50000 (13%)] Loss: 0.641943 Acc: 77.000000

Train Epoch: 7 [12800/50000 (26%)] Loss: 0.643955 Acc: 77.000000

Train Epoch: 7 [19200/50000 (38%)] Loss: 0.642063 Acc: 77.000000

Train Epoch: 7 [25600/50000 (51%)] Loss: 0.647976 Acc: 77.000000

Train Epoch: 7 [32000/50000 (64%)] Loss: 0.648042 Acc: 77.000000

Train Epoch: 7 [38400/50000 (77%)] Loss: 0.652435 Acc: 77.000000

Train Epoch: 7 [44800/50000 (90%)] Loss: 0.655997 Acc: 77.000000

one epoch spend: 0:00:06.962986

EPOCH:7, ACC:72.21

Train Epoch: 8 [6400/50000 (13%)] Loss: 0.541914 Acc: 80.000000

Train Epoch: 8 [12800/50000 (26%)] Loss: 0.543631 Acc: 81.000000

Train Epoch: 8 [19200/50000 (38%)] Loss: 0.551045 Acc: 80.000000

Train Epoch: 8 [25600/50000 (51%)] Loss: 0.551447 Acc: 80.000000

Train Epoch: 8 [32000/50000 (64%)] Loss: 0.554876 Acc: 80.000000

Train Epoch: 8 [38400/50000 (77%)] Loss: 0.560712 Acc: 80.000000

Train Epoch: 8 [44800/50000 (90%)] Loss: 0.561110 Acc: 80.000000

one epoch spend: 0:00:07.025618

EPOCH:8, ACC:74.15

Train Epoch: 9 [6400/50000 (13%)] Loss: 0.452407 Acc: 84.000000

Train Epoch: 9 [12800/50000 (26%)] Loss: 0.462235 Acc: 83.000000

Train Epoch: 9 [19200/50000 (38%)] Loss: 0.476642 Acc: 83.000000

Train Epoch: 9 [25600/50000 (51%)] Loss: 0.478906 Acc: 83.000000

Train Epoch: 9 [32000/50000 (64%)] Loss: 0.476015 Acc: 83.000000

Train Epoch: 9 [38400/50000 (77%)] Loss: 0.477935 Acc: 83.000000

Train Epoch: 9 [44800/50000 (90%)] Loss: 0.480251 Acc: 83.000000

one epoch spend: 0:00:06.840690

EPOCH:9, ACC:74.49

Train Epoch: 10 [6400/50000 (13%)] Loss: 0.383466 Acc: 87.000000

Train Epoch: 10 [12800/50000 (26%)] Loss: 0.376466 Acc: 87.000000

Train Epoch: 10 [19200/50000 (38%)] Loss: 0.386534 Acc: 86.000000

Train Epoch: 10 [25600/50000 (51%)] Loss: 0.394657 Acc: 86.000000

Train Epoch: 10 [32000/50000 (64%)] Loss: 0.394315 Acc: 86.000000

Train Epoch: 10 [38400/50000 (77%)] Loss: 0.395472 Acc: 86.000000

Train Epoch: 10 [44800/50000 (90%)] Loss: 0.399573 Acc: 86.000000

one epoch spend: 0:00:06.866040

EPOCH:10, ACC:73.13

Train Epoch: 11 [6400/50000 (13%)] Loss: 0.297959 Acc: 89.000000

Train Epoch: 11 [12800/50000 (26%)] Loss: 0.305871 Acc: 89.000000

Train Epoch: 11 [19200/50000 (38%)] Loss: 0.315880 Acc: 89.000000

Train Epoch: 11 [25600/50000 (51%)] Loss: 0.322634 Acc: 88.000000

Train Epoch: 11 [32000/50000 (64%)] Loss: 0.326418 Acc: 88.000000

Train Epoch: 11 [38400/50000 (77%)] Loss: 0.333330 Acc: 88.000000

Train Epoch: 11 [44800/50000 (90%)] Loss: 0.337955 Acc: 88.000000

one epoch spend: 0:00:06.884786

EPOCH:11, ACC:73.79

Train Epoch: 12 [6400/50000 (13%)] Loss: 0.242202 Acc: 91.000000

Train Epoch: 12 [12800/50000 (26%)] Loss: 0.250616 Acc: 91.000000

Train Epoch: 12 [19200/50000 (38%)] Loss: 0.265347 Acc: 90.000000

Train Epoch: 12 [25600/50000 (51%)] Loss: 0.271456 Acc: 90.000000

Train Epoch: 12 [32000/50000 (64%)] Loss: 0.273988 Acc: 90.000000

Train Epoch: 12 [38400/50000 (77%)] Loss: 0.280836 Acc: 90.000000

Train Epoch: 12 [44800/50000 (90%)] Loss: 0.281419 Acc: 90.000000

one epoch spend: 0:00:06.906915

EPOCH:12, ACC:75.89

Train Epoch: 13 [6400/50000 (13%)] Loss: 0.228122 Acc: 92.000000

Train Epoch: 13 [12800/50000 (26%)] Loss: 0.228350 Acc: 92.000000

Train Epoch: 13 [19200/50000 (38%)] Loss: 0.227151 Acc: 92.000000

Train Epoch: 13 [25600/50000 (51%)] Loss: 0.228918 Acc: 92.000000

Train Epoch: 13 [32000/50000 (64%)] Loss: 0.232642 Acc: 91.000000

Train Epoch: 13 [38400/50000 (77%)] Loss: 0.237782 Acc: 91.000000

Train Epoch: 13 [44800/50000 (90%)] Loss: 0.242339 Acc: 91.000000

one epoch spend: 0:00:06.869576

EPOCH:13, ACC:74.39

Train Epoch: 14 [6400/50000 (13%)] Loss: 0.179683 Acc: 93.000000

Train Epoch: 14 [12800/50000 (26%)] Loss: 0.182840 Acc: 93.000000

Train Epoch: 14 [19200/50000 (38%)] Loss: 0.182861 Acc: 93.000000

Train Epoch: 14 [25600/50000 (51%)] Loss: 0.189549 Acc: 93.000000

Train Epoch: 14 [32000/50000 (64%)] Loss: 0.193639 Acc: 93.000000

Train Epoch: 14 [38400/50000 (77%)] Loss: 0.196073 Acc: 93.000000

Train Epoch: 14 [44800/50000 (90%)] Loss: 0.198425 Acc: 93.000000

one epoch spend: 0:00:06.927269

EPOCH:14, ACC:75.63

Train Epoch: 15 [6400/50000 (13%)] Loss: 0.123262 Acc: 95.000000

Train Epoch: 15 [12800/50000 (26%)] Loss: 0.136458 Acc: 95.000000

Train Epoch: 15 [19200/50000 (38%)] Loss: 0.141503 Acc: 95.000000

Train Epoch: 15 [25600/50000 (51%)] Loss: 0.147542 Acc: 94.000000

Train Epoch: 15 [32000/50000 (64%)] Loss: 0.149795 Acc: 94.000000

Train Epoch: 15 [38400/50000 (77%)] Loss: 0.154987 Acc: 94.000000

Train Epoch: 15 [44800/50000 (90%)] Loss: 0.157952 Acc: 94.000000

one epoch spend: 0:00:07.015382

EPOCH:15, ACC:74.6

Train Epoch: 16 [6400/50000 (13%)] Loss: 0.144001 Acc: 94.000000

Train Epoch: 16 [12800/50000 (26%)] Loss: 0.141813 Acc: 94.000000

Train Epoch: 16 [19200/50000 (38%)] Loss: 0.139413 Acc: 95.000000

Train Epoch: 16 [25600/50000 (51%)] Loss: 0.136546 Acc: 95.000000

Train Epoch: 16 [32000/50000 (64%)] Loss: 0.138039 Acc: 95.000000

Train Epoch: 16 [38400/50000 (77%)] Loss: 0.139393 Acc: 95.000000

Train Epoch: 16 [44800/50000 (90%)] Loss: 0.142776 Acc: 95.000000

one epoch spend: 0:00:06.883968

EPOCH:16, ACC:75.54

Train Epoch: 17 [6400/50000 (13%)] Loss: 0.080704 Acc: 97.000000

Train Epoch: 17 [12800/50000 (26%)] Loss: 0.098754 Acc: 96.000000

Train Epoch: 17 [19200/50000 (38%)] Loss: 0.104385 Acc: 96.000000

Train Epoch: 17 [25600/50000 (51%)] Loss: 0.107634 Acc: 96.000000

Train Epoch: 17 [32000/50000 (64%)] Loss: 0.112148 Acc: 96.000000

Train Epoch: 17 [38400/50000 (77%)] Loss: 0.113687 Acc: 96.000000

Train Epoch: 17 [44800/50000 (90%)] Loss: 0.114508 Acc: 96.000000

one epoch spend: 0:00:06.905244

EPOCH:17, ACC:74.9

Train Epoch: 18 [6400/50000 (13%)] Loss: 0.085284 Acc: 97.000000

Train Epoch: 18 [12800/50000 (26%)] Loss: 0.087985 Acc: 97.000000

Train Epoch: 18 [19200/50000 (38%)] Loss: 0.096691 Acc: 96.000000

Train Epoch: 18 [25600/50000 (51%)] Loss: 0.102257 Acc: 96.000000

Train Epoch: 18 [32000/50000 (64%)] Loss: 0.103708 Acc: 96.000000

Train Epoch: 18 [38400/50000 (77%)] Loss: 0.103074 Acc: 96.000000

Train Epoch: 18 [44800/50000 (90%)] Loss: 0.106078 Acc: 96.000000

one epoch spend: 0:00:06.909887

EPOCH:18, ACC:74.86

Train Epoch: 19 [6400/50000 (13%)] Loss: 0.074644 Acc: 97.000000

Train Epoch: 19 [12800/50000 (26%)] Loss: 0.072871 Acc: 97.000000

Train Epoch: 19 [19200/50000 (38%)] Loss: 0.075573 Acc: 97.000000

Train Epoch: 19 [25600/50000 (51%)] Loss: 0.079646 Acc: 97.000000

Train Epoch: 19 [32000/50000 (64%)] Loss: 0.081056 Acc: 97.000000

Train Epoch: 19 [38400/50000 (77%)] Loss: 0.084256 Acc: 97.000000

Train Epoch: 19 [44800/50000 (90%)] Loss: 0.086415 Acc: 97.000000

one epoch spend: 0:00:07.215059

EPOCH:19, ACC:75.69

Train Epoch: 20 [6400/50000 (13%)] Loss: 0.062469 Acc: 97.000000

Train Epoch: 20 [12800/50000 (26%)] Loss: 0.061595 Acc: 97.000000

Train Epoch: 20 [19200/50000 (38%)] Loss: 0.062788 Acc: 97.000000

Train Epoch: 20 [25600/50000 (51%)] Loss: 0.065734 Acc: 97.000000

Train Epoch: 20 [32000/50000 (64%)] Loss: 0.067006 Acc: 97.000000

Train Epoch: 20 [38400/50000 (77%)] Loss: 0.066818 Acc: 97.000000

Train Epoch: 20 [44800/50000 (90%)] Loss: 0.068419 Acc: 97.000000

one epoch spend: 0:00:07.187726

EPOCH:20, ACC:74.23

CIFAR10 pytorch LeNet Train: EPOCH:20, BATCH_SZ:64, LR:0.01, ACC:75.89

train spend time: 0:02:30.334005

Process finished with exit code 0

准确率达到75%,对比LeNet-5的63%,有大幅提升。