1. Introduction to Ceph

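Ceph is a distributed object store designed to deliver strong performance, reliability, and scalability. It provides object, block, and file storage in a single unified system, which few storage systems do, and it scales to thousands of clients accessing petabytes to exabytes of data. It is well suited to unstructured data, and clients can use both current and legacy object interfaces at the same time, which is why it is often called the future of storage.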

2. Characteristics of Ceph

2.1 Advantages of Ceph

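- CRUSH algorithm: Ceph abandons the traditional approach of locating data through centralized metadata lookups and computes data locations with the CRUSH algorithm instead. Building on consistent hashing, CRUSH takes failure-domain isolation into account and can implement replica placement rules for many kinds of workloads, such as cross-datacenter placement and rack awareness. Ceph assigns a CRUSH rule set to each storage pool. When a Ceph client stores or retrieves data in a pool, Ceph identifies the CRUSH rule set and the top-level bucket that the rule uses for storing and retrieving data. While processing the CRUSH rule, Ceph identifies the primary OSD that holds a given PG, so the client can connect directly to the primary OSD to read and write data.

- High availability: The number of replicas is defined by the administrator, and CRUSH can pin the physical location of each replica so that failure domains are separated. The cluster therefore tolerates many failure scenarios and attempts parallel recovery automatically. Ceph supports strongly consistent replicas, and replicas can be placed across hosts, racks, server rooms, and datacenters. Storage nodes manage and repair themselves, there is no single point of failure, and fault tolerance is strong.

- High scalability: Unlike Swift, where every client read and write must pass through a proxy node that easily becomes a bottleneck as concurrency grows, Ceph has no central control node. It is therefore easy to scale out, and in theory its performance grows linearly with the number of disks.

- Rich features: Ceph supports three interfaces: object storage, block storage, and file-system mounts, and all three can be used together. Although Ceph is often thought of as a distributed file system, the object and block interfaces are built on the same underlying layer, which is what makes it unified storage.

- Unified storage: Ceph provides object storage, file storage, and block storage at the same time.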
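The failure-domain idea in the CRUSH bullet can be illustrated with a toy sketch. This is not the CRUSH algorithm: it uses rendezvous-style hashing with `cksum` as a stand-in hash, and the OSD names and rack layout in `OSDS` are hypothetical. It only shows how a placement can be computed deterministically from a PG ID, without a lookup table, while keeping every replica in a different rack.

```shell
#!/bin/sh
# Hypothetical topology, written as "osd:rack" pairs (not from a real cluster).
OSDS="osd.0:rackA osd.1:rackA osd.2:rackB osd.3:rackB osd.4:rackC osd.5:rackC"

# Stand-in hash: CRC from cksum. Real CRUSH uses its own hash and bucket types.
score() { printf '%s' "$1" | cksum | cut -d' ' -f1; }

# place_pg <pg-id> <replica-count>
# Rank every OSD by hash(pg-id/osd), then take the best-ranked OSDs,
# skipping any OSD whose rack already holds a replica.
place_pg() {
  ranked=$(for entry in $OSDS; do
             osd=${entry%%:*}
             echo "$(score "$1/$osd") $entry"
           done | sort -rn)
  chosen=""; used=" "; count=0
  while read -r _score entry; do
    osd=${entry%%:*}; rack=${entry##*:}
    case "$used" in *" $rack "*) continue ;; esac
    chosen="$chosen $osd"; used="$used$rack "; count=$((count + 1))
    [ "$count" -ge "$2" ] && break
  done <<EOF
$ranked
EOF
  echo $chosen   # unquoted on purpose: trims the leading space
}

# The first OSD in the list plays the role of the primary.
place_pg 1.2a 3
```

Calling `place_pg` twice with the same PG ID returns the same OSD list, which is the property that lets any client work out the location on its own instead of asking a central metadata server.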

2.2 Disadvantages of Ceph

Please ignore Ceph's shortcomings for now...

3. Architecture and Components

Official documentation: https://docs.ceph.com/en/nautilus/architecture/

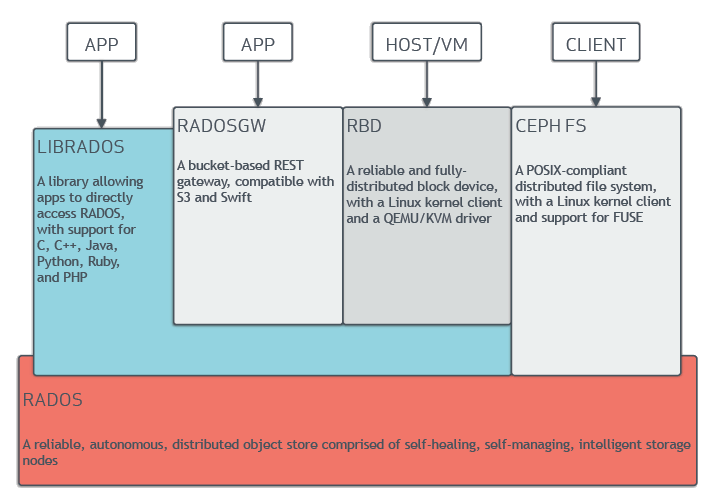

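3.1 Ceph architecture diagram:

- At the bottom of Ceph is RADOS, which is itself a distributed storage system; all of Ceph's storage features are built on it. RADOS is written in C++, and its native librados API is available for C and C++. Upper-layer applications call the librados API on the local machine, which then communicates over sockets with the other nodes in the RADOS cluster to carry out operations.

- RADOS exposes its interface to the outside world through librados; an application only needs to call librados to operate on Ceph. RADOS GW (object storage) and RBD (block storage) are both built on librados. CephFS exposes a POSIX interface and, through its kernel client, can be mounted directly by users.

- RADOS Gateway and RBD provide higher-level interfaces on top of the librados library that are easier for applications and clients to use. RADOS GW is a gateway offering RESTful APIs compatible with Amazon S3 and Swift for object storage applications. RBD provides a standard block device interface and is commonly used to create volumes for virtual machines. Red Hat has integrated the RBD driver into KVM/QEMU to improve virtual machine access performance. These two interfaces are currently the most widely used in cloud computing.

- CephFS provides a POSIX interface that users mount directly from a client. Its kernel client runs in kernel space, so it does not call the user-space librados library; it talks to RADOS through the kernel's networking module.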

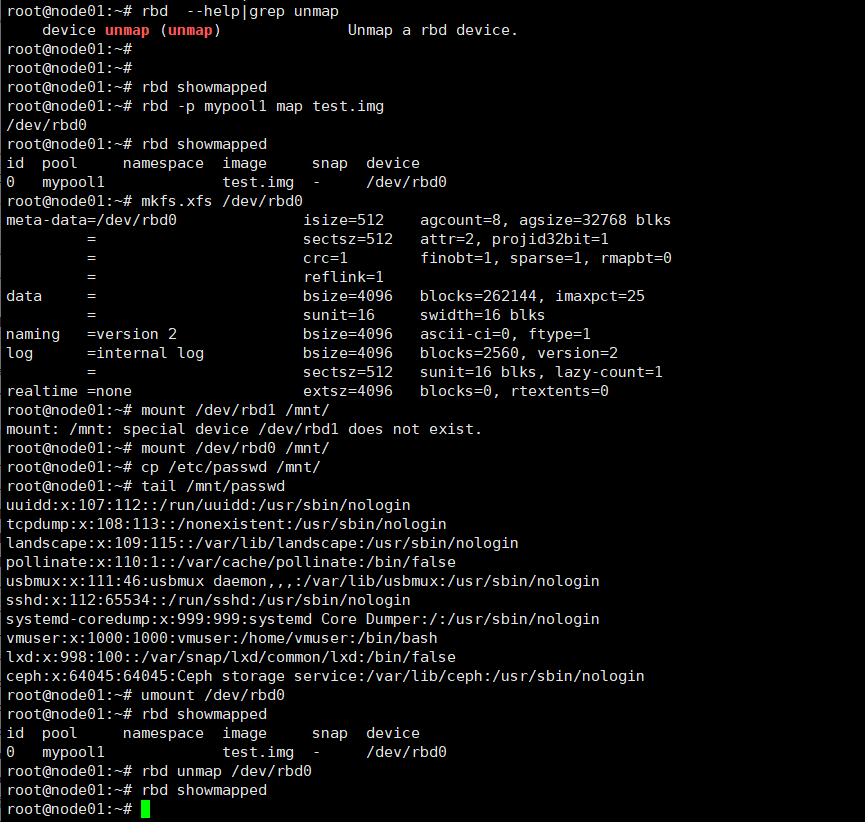

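- RBD block device: provides block storage to clients. An RBD image can be mapped, formatted, and mounted on a server just like a disk, and it supports snapshots.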
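As a rough sketch of the map, format, mount, and snapshot workflow for an RBD image, the function below only prints the commands rather than running them. The pool name `rbd`, the image name `disk01`, the 1024 MB size, the mount point, and the device path `/dev/rbd0` are all assumptions; `rbd map` prints the actual device path on a real system.

```shell
#!/bin/sh
# All of these values are placeholders chosen for illustration.
POOL=rbd
IMAGE=disk01
DEV=/dev/rbd0      # assumed; use the path that `rbd map` prints
MNT=/mnt/rbd

# Print the typical command sequence without executing anything.
rbd_workflow() {
  echo "rbd create $POOL/$IMAGE --size 1024"   # size is in MB by default
  echo "rbd map $POOL/$IMAGE"                  # exposes the image as a block device
  echo "mkfs.ext4 $DEV"                        # format it like any other disk
  echo "mount $DEV $MNT"
  echo "rbd snap create $POOL/$IMAGE@snap1"    # take a snapshot
}

rbd_workflow
```

Printing first makes it easy to review the sequence before running it by hand against a test cluster.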

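3.2 How Ceph stores data:

Without further ado, here is the diagram:

- Whichever storage interface is used (object, block, or file-system mount), the stored data is split into objects. The object size can be adjusted by the administrator and is typically 2 MB or 4 MB. Each object has a unique OID built from an ino and an ono. The terms look complicated but are simple: the ino is the file's File ID, which identifies each file globally, and the ono is the fragment number. For example, if a file with File ID A is split into two objects numbered 0 and 1, their OIDs are A0 and A1. An OID uniquely identifies each object and also records which file the object belongs to. Because all data in Ceph is turned into uniform objects, reads and writes are fairly efficient. Objects are not stored directly on OSDs, however. Objects are small, so a large cluster may hold millions to tens of millions of them, and merely traversing that many objects to locate one would be slow. In addition, if objects were mapped to OSDs by a fixed hash function, an object could not migrate automatically to another OSD when its OSD failed, because the mapping function would not allow it. To solve these problems, Ceph introduces the placement group (PG).

- A PG is a logical concept: on a Linux system you can see objects directly, but you cannot see PGs. In data addressing it plays a role similar to an index in a database. Every object maps to exactly one PG, so to find an object you first locate its PG and then search only that PG, without traversing every object. Data migration also uses the PG as its basic unit; Ceph does not move individual objects directly. How is an object mapped to a PG? Recall the OID: a static hash function is applied to the OID to produce a hash value, and that value modulo the number of PGs gives the PG ID. Because of this design, the number of PGs directly determines how evenly data is distributed, so a well-chosen PG count improves cluster performance and keeps the data balanced.

- Finally, each PG is replicated according to the replica count set by the administrator and placed on different OSD nodes by the CRUSH algorithm (in practice, all objects in the PG are stored on those nodes). The first OSD is the primary, and the rest are replicas.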
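The file, object, and PG steps described above can be sketched in a few lines of shell. This is a toy model under stated assumptions: `cksum` stands in for Ceph's real hash function, the PG count of 128 is hypothetical, and the OID is simply the file ID followed by the fragment number, as in the A0/A1 example.

```shell
#!/bin/sh
OBJECT_SIZE=$((4 * 1024 * 1024))   # 4 MB objects
PG_NUM=128                          # hypothetical number of PGs in the pool

# Number of objects a file of <size-bytes> is split into (rounded up).
object_count() { echo $(( ($1 + OBJECT_SIZE - 1) / OBJECT_SIZE )); }

# OID = file ID (ino) followed by fragment number (ono).
make_oid() { printf '%s%s\n' "$1" "$2"; }

# PG ID = hash(OID) mod PG_NUM; the CRC from cksum is only a stand-in hash.
pg_for_oid() {
  h=$(printf '%s' "$1" | cksum | cut -d' ' -f1)
  echo $(( h % PG_NUM ))
}

# map_file <file-id> <size-bytes>: list the PG for every object of a file.
map_file() {
  n=$(object_count "$2")
  i=0
  while [ "$i" -lt "$n" ]; do
    oid=$(make_oid "$1" "$i")
    echo "$oid -> pg $(pg_for_oid "$oid")"
    i=$((i + 1))
  done
}

# A 6 MB file with File ID "A" becomes objects A0 and A1.
map_file A $((6 * 1024 * 1024))
```

Changing `PG_NUM` changes the result of the modulo for most OIDs, which is one way to see why the PG count affects how data is spread.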

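4. Environment Preparation, Repository Setup, Installation, and Verification

This walkthrough uses virtual machines for deployment (Ubuntu 18.04) and testing (CentOS 7). The Ceph release is Pacific (the "P" release), the latest at the time of writing. The plan is as follows (because the laptop has limited resources, several roles are combined on each node):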

| Node | Roles | IP (11.x: public network; 22.x: cluster network) | CPU | Memory | Disks | Notes |
|---|---|---|---|---|---|---|
| node01 | deploy, mon, mgr, osd | 192.168.11.210, 192.168.22.210 | 2C | 2G | two 40G OSD disks | Ubuntu18.04 |
| node02 | mon, mgr, osd | 192.168.11.220, 192.168.22.220 | 2C | 2G | two 40G OSD disks | Ubuntu18.04 |
| node03 | mon, mgr, osd | 192.168.11.230, 192.168.22.230 | 2C | 2G | two 40G OSD disks | Ubuntu18.04 |
| client | only the ceph-common package is installed | 192.168.11.128 | | | | CentOS7 |

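4.1 Environment preparation (I won't go into detail here; these are just the points to watch):

- Keep time synchronized across all nodes.

- Set hostnames and hosts-file resolution.

- Set up passwordless SSH login from the deploy node to every node.

- Configure static network interfaces on each node.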
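Two of these points, hosts resolution and passwordless SSH, can be sketched as follows. The node names and public IPs come from the planning table; the helper names, the key type, and the use of the `root` account are assumptions, and the SSH commands are only printed so they can be reviewed before being run on the deploy node.

```shell
#!/bin/sh
# "name:public-ip" pairs taken from the planning table.
NODES="node01:192.168.11.210 node02:192.168.11.220 node03:192.168.11.230"

# Print the lines to append to /etc/hosts on every node.
hosts_entries() {
  for n in $NODES; do
    printf '%s %s\n' "${n#*:}" "${n%%:*}"
  done
}

# Print (not run) the commands for passwordless SSH from the deploy node.
ssh_setup_commands() {
  echo "ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa"
  for n in $NODES; do
    echo "ssh-copy-id root@${n%%:*}"
  done
}

hosts_entries
ssh_setup_commands
```

One possible use is `hosts_entries >> /etc/hosts` on each node, followed by running the printed SSH commands one by one on node01.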

4.2 Configure the operating system and Ceph repositories:

All nodes use the Tsinghua University or Alibaba mirrors:

```shell
wget -q -O- 'https://download.ceph.com/keys/release.asc' | sudo apt-key add -
cat > /etc/apt/sources.list <<EOF
# Source mirrors are commented out by default to speed up apt update; uncomment them if needed
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse
# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse
# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse
# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse
# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic main
EOF
```

After changing the sources, be sure to run: # apt update
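After `apt update`, it can be worth confirming that the Pacific repository is the one offering the Ceph packages. The sketch below filters `apt-cache madison` output for entries served from the `debian-pacific` repository. The function name `pacific_versions` is hypothetical, and the two sample lines are in the format that `apt-cache madison ceph-mon` prints for this setup.

```shell
#!/bin/sh
# Read `apt-cache madison <pkg>` output on stdin and print the versions
# that come from the debian-pacific repository.
pacific_versions() {
  awk -F'|' '/debian-pacific/ { gsub(/ /, "", $2); print $2 }' | sort -u
}

# Demo with sample lines; on a real node use:
#   apt-cache madison ceph-mon | pacific_versions
pacific_versions <<'EOF'
ceph-mon | 16.2.5-1bionic | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 Packages
ceph-mon | 12.2.4-0ubuntu1 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 Packages
EOF
# prints 16.2.5-1bionic
```

If the function prints nothing for a Ceph package, the Ceph line in `/etc/apt/sources.list` or the repository key is the first thing to recheck.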

4.3 Installation steps:

1. Install the ceph-deploy tool on node01 (many files are generated later, so create a cephCluster directory to hold them):

```
root@node01:~# apt-cache madison ceph-deploy
ceph-deploy | 2.0.1 | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 Packages
ceph-deploy | 2.0.1 | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main i386 Packages
ceph-deploy | 1.5.38-0ubuntu1 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 Packages
ceph-deploy | 1.5.38-0ubuntu1 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe i386 Packages
root@node01:~#
root@node01:~# ls
root@node01:~# mkdir cephCluster
root@node01:~# cd cephCluster/
root@node01:~/cephCluster# ls
root@node01:~/cephCluster# apt install ceph-deploy
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following additional packages will be installed:
  libpython-stdlib libpython2.7-minimal libpython2.7-stdlib python python-minimal python-pkg-resources python-setuptools python2.7 python2.7-minimal
Suggested packages:
  python-doc python-tk python-setuptools-doc python2.7-doc binutils binfmt-support
The following NEW packages will be installed:
  ceph-deploy libpython-stdlib libpython2.7-minimal libpython2.7-stdlib python python-minimal python-pkg-resources python-setuptools python2.7 python2.7-minimal
0 upgraded, 10 newly installed, 0 to remove and 157 not upgraded.
Need to get 4,521 kB of archives.
After this operation, 19.4 MB of additional disk space will be used.
Do you want to continue? [Y/n] y
Get:1 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 libpython2.7-minimal amd64 2.7.17-1~18.04ubuntu1.6 [335 kB]
Get:2 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 python2.7-minimal amd64 2.7.17-1~18.04ubuntu1.6 [1,291 kB]
Get:3 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 python-minimal amd64 2.7.15~rc1-1 [28.1 kB]
Get:4 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 libpython2.7-stdlib amd64 2.7.17-1~18.04ubuntu1.6 [1,917 kB]
Get:5 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 python2.7 amd64 2.7.17-1~18.04ubuntu1.6 [248 kB]
Get:6 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 libpython-stdlib amd64 2.7.15~rc1-1 [7,620 B]
Get:7 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 python amd64 2.7.15~rc1-1 [140 kB]
Get:8 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 python-pkg-resources all 39.0.1-2 [128 kB]
Get:9 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 python-setuptools all 39.0.1-2 [329 kB]
Get:10 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 ceph-deploy all 2.0.1 [97.2 kB]
Fetched 4,521 kB in 1s (3,953 kB/s)
Selecting previously unselected package libpython2.7-minimal:amd64.
(Reading database ... 67125 files and directories currently installed.)
Preparing to unpack .../0-libpython2.7-minimal_2.7.17-1~18.04ubuntu1.6_amd64.deb ...
Unpacking libpython2.7-minimal:amd64 (2.7.17-1~18.04ubuntu1.6) ...
Selecting previously unselected package python2.7-minimal.
Preparing to unpack .../1-python2.7-minimal_2.7.17-1~18.04ubuntu1.6_amd64.deb ...
Unpacking python2.7-minimal (2.7.17-1~18.04ubuntu1.6) ...
Selecting previously unselected package python-minimal.
Preparing to unpack .../2-python-minimal_2.7.15~rc1-1_amd64.deb ...
Unpacking python-minimal (2.7.15~rc1-1) ...
Selecting previously unselected package libpython2.7-stdlib:amd64.
Preparing to unpack .../3-libpython2.7-stdlib_2.7.17-1~18.04ubuntu1.6_amd64.deb ...
Unpacking libpython2.7-stdlib:amd64 (2.7.17-1~18.04ubuntu1.6) ...
Selecting previously unselected package python2.7.
Preparing to unpack .../4-python2.7_2.7.17-1~18.04ubuntu1.6_amd64.deb ...
Unpacking python2.7 (2.7.17-1~18.04ubuntu1.6) ...
Selecting previously unselected package libpython-stdlib:amd64.
Preparing to unpack .../5-libpython-stdlib_2.7.15~rc1-1_amd64.deb ...
Unpacking libpython-stdlib:amd64 (2.7.15~rc1-1) ...
Setting up libpython2.7-minimal:amd64 (2.7.17-1~18.04ubuntu1.6) ...
Setting up python2.7-minimal (2.7.17-1~18.04ubuntu1.6) ...
Linking and byte-compiling packages for runtime python2.7...
Setting up python-minimal (2.7.15~rc1-1) ...
Selecting previously unselected package python.
(Reading database ... 67873 files and directories currently installed.)
Preparing to unpack .../python_2.7.15~rc1-1_amd64.deb ...
Unpacking python (2.7.15~rc1-1) ...
Selecting previously unselected package python-pkg-resources.
Preparing to unpack .../python-pkg-resources_39.0.1-2_all.deb ...
Unpacking python-pkg-resources (39.0.1-2) ...
Selecting previously unselected package python-setuptools.
Preparing to unpack .../python-setuptools_39.0.1-2_all.deb ...
Unpacking python-setuptools (39.0.1-2) ...
Selecting previously unselected package ceph-deploy.
Preparing to unpack .../ceph-deploy_2.0.1_all.deb ...
Unpacking ceph-deploy (2.0.1) ...
Setting up libpython2.7-stdlib:amd64 (2.7.17-1~18.04ubuntu1.6) ...
Setting up python2.7 (2.7.17-1~18.04ubuntu1.6) ...
Setting up libpython-stdlib:amd64 (2.7.15~rc1-1) ...
Setting up python (2.7.15~rc1-1) ...
Setting up python-pkg-resources (39.0.1-2) ...
Setting up python-setuptools (39.0.1-2) ...
Setting up ceph-deploy (2.0.1) ...
Processing triggers for mime-support (3.60ubuntu1) ...
Processing triggers for man-db (2.8.3-2ubuntu0.1) ...
root@node01:~/cephCluster#
```

2. Initialize the cluster (the first mon node, node01):

```
root@node01:~/cephCluster# ceph-deploy new --cluster-network 192.168.22.0/24 --public-network 192.168.11.0/24 node01
[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy new --cluster-network 192.168.22.0/24 --public-network 192.168.11.0/24 node01
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f2a355a4e60>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] ssh_copykey : True
[ceph_deploy.cli][INFO ] mon : ['node01']
[ceph_deploy.cli][INFO ] func : <function new at 0x7f2a3285dad0>
[ceph_deploy.cli][INFO ] public_network : 192.168.11.0/24
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster_network : 192.168.22.0/24
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] fsid : None
[ceph_deploy.new][DEBUG ] Creating new cluster named ceph
[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds
[node01][DEBUG ] connected to host: node01
[node01][DEBUG ] detect platform information from remote host
[node01][DEBUG ] detect machine type
[node01][DEBUG ] find the location of an executable
[node01][INFO ] Running command: /bin/ip link show
[node01][INFO ] Running command: /bin/ip addr show
[node01][DEBUG ] IP addresses found: [u'192.168.22.210', u'192.168.11.210']
[ceph_deploy.new][DEBUG ] Resolving host node01
[ceph_deploy.new][DEBUG ] Monitor node01 at 192.168.11.210
[ceph_deploy.new][DEBUG ] Monitor initial members are ['node01']
[ceph_deploy.new][DEBUG ] Monitor addrs are [u'192.168.11.210']
[ceph_deploy.new][DEBUG ] Creating a random mon key...
[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...
[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...
root@node01:~/cephCluster#
root@node01:~/cephCluster# ls
ceph.conf ceph-deploy-ceph.log ceph.mon.keyring
root@node01:~/cephCluster#
```
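Before moving on, it may help to confirm that the generated `ceph.conf` picked up the two networks passed on the command line. The sketch below is a small helper for reading a `key = value` line from such a file. The helper name `conf_value` is hypothetical, and the sample file is an assumption about what the relevant lines look like; the real file also contains an fsid and other keys not shown here.

```shell
#!/bin/sh
# conf_value <key> <file>: print the value of the first "key = value" line.
# Ceph accepts both "public_network" and "public network" spellings,
# so underscores in the key match either an underscore or a space.
conf_value() {
  pattern=$(printf '%s' "$1" | sed 's/_/[ _]/g')
  sed -n "s/^[[:space:]]*${pattern}[[:space:]]*=[[:space:]]*//p" "$2" | head -n 1
}

# Hypothetical sample standing in for ~/cephCluster/ceph.conf.
sample=$(mktemp)
cat > "$sample" <<'EOF'
[global]
public_network = 192.168.11.0/24
cluster_network = 192.168.22.0/24
EOF

conf_value public_network "$sample"    # prints 192.168.11.0/24
conf_value cluster_network "$sample"   # prints 192.168.22.0/24
rm -f "$sample"
```

On node01 the same check would be `conf_value public_network ~/cephCluster/ceph.conf`, compared against the values in the planning table.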

3. Install python2 on all three mon nodes; if it is missing, the mon initialization below will prompt you to install it (only node02's installation is shown):

```
root@node02:~# apt install python2.7 -y
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following additional packages will be installed:
  python2.7-minimal
Suggested packages:
  python2.7-doc binfmt-support
The following NEW packages will be installed:
  python2.7 python2.7-minimal
0 upgraded, 2 newly installed, 0 to remove and 157 not upgraded.
Need to get 1,539 kB of archives.
After this operation, 4,163 kB of additional disk space will be used.
Get:1 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 python2.7-minimal amd64 2.7.17-1~18.04ubuntu1.6 [1,291 kB]
Get:2 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 python2.7 amd64 2.7.17-1~18.04ubuntu1.6 [248 kB]
Fetched 1,539 kB in 1s (2,341 kB/s)
Selecting previously unselected package python2.7-minimal.
(Reading database ... 69833 files and directories currently installed.)
Preparing to unpack .../python2.7-minimal_2.7.17-1~18.04ubuntu1.6_amd64.deb ...
Unpacking python2.7-minimal (2.7.17-1~18.04ubuntu1.6) ...
Selecting previously unselected package python2.7.
Preparing to unpack .../python2.7_2.7.17-1~18.04ubuntu1.6_amd64.deb ...
Unpacking python2.7 (2.7.17-1~18.04ubuntu1.6) ...
Setting up python2.7-minimal (2.7.17-1~18.04ubuntu1.6) ...
Linking and byte-compiling packages for runtime python2.7...
Setting up python2.7 (2.7.17-1~18.04ubuntu1.6) ...
Processing triggers for mime-support (3.60ubuntu1) ...
Processing triggers for man-db (2.8.3-2ubuntu0.1) ...
root@node02:~# which python2
root@node02:~# python
python2.7 python3 python3.6 python3.6m python3m
root@node02:~# ln -sv /usr/bin/python2.7 /usr/bin/python2
'/usr/bin/python2' -> '/usr/bin/python2.7'
root@node02:~#
```

4. Install the ceph-mon package on all three mon nodes (only node01's installation is shown):

root@node01:~# apt-cache madison ceph-mon ceph-mon | 16.2.5-1bionic | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 Packages ceph-mon | 12.2.13-0ubuntu0.18.04.8 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 Packages ceph-mon | 12.2.13-0ubuntu0.18.04.4 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-security/main amd64 Packages ceph-mon | 12.2.4-0ubuntu1 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 Packages root@node01:~# root@node01:~# apt install ceph-mon Reading package lists... Done Building dependency tree Reading state information... Done The following additional packages will be installed: binutils binutils-common binutils-x86-64-linux-gnu ceph-base ceph-common ceph-fuse ceph-mds guile-2.0-libs ibverbs-providers libaio1 libbabeltrace1 libbinutils libcephfs2 libdw1 libgc1c2 libgoogle-perftools4 libgsasl7 libibverbs1 libjaeger libkyotocabinet16v5 libleveldb1v5 libltdl7 liblttng-ust-ctl4 liblttng-ust0 liblua5.3-0 libmailutils5 libmysqlclient20 libnl-route-3-200 libntlm0 liboath0 libopts25 libpython2.7 librabbitmq4 librados2 libradosstriper1 librbd1 librdkafka1 librdmacm1 librgw2 libsnappy1v5 libtcmalloc-minimal4 liburcu6 mailutils mailutils-common mysql-common ntp nvme-cli postfix python3-ceph-argparse python3-ceph-common python3-cephfs python3-prettytable python3-rados python3-rbd python3-rgw smartmontools sntp ssl-cert Suggested packages: binutils-doc mailutils-mh mailutils-doc ntp-doc procmail postfix-mysql postfix-pgsql postfix-ldap postfix-pcre postfix-lmdb postfix-sqlite sasl2-bin dovecot-common resolvconf postfix-cdb postfix-doc gsmartcontrol smart-notifier openssl-blacklist The following NEW packages will be installed: binutils binutils-common binutils-x86-64-linux-gnu ceph-base ceph-common ceph-fuse ceph-mds ceph-mon guile-2.0-libs ibverbs-providers libaio1 libbabeltrace1 libbinutils libcephfs2 libdw1 libgc1c2 libgoogle-perftools4 libgsasl7 libibverbs1 libjaeger 
libkyotocabinet16v5 libleveldb1v5 libltdl7 liblttng-ust-ctl4 liblttng-ust0 liblua5.3-0 libmailutils5 libmysqlclient20 libnl-route-3-200 libntlm0 liboath0 libopts25 libpython2.7 librabbitmq4 librados2 libradosstriper1 librbd1 librdkafka1 librdmacm1 librgw2 libsnappy1v5 libtcmalloc-minimal4 liburcu6 mailutils mailutils-common mysql-common ntp nvme-cli postfix python3-ceph-argparse python3-ceph-common python3-cephfs python3-prettytable python3-rados python3-rbd python3-rgw smartmontools sntp ssl-cert 0 upgraded, 59 newly installed, 0 to remove and 157 not upgraded. Need to get 60.9 MB of archives. After this operation, 273 MB of additional disk space will be used. Do you want to continue? [Y/n] y Get:1 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 libopts25 amd64 1:5.18.12-4 [58.2 kB] Get:2 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/universe amd64 ntp amd64 1:4.2.8p10+dfsg-5ubuntu7.3 [640 kB] Get:3 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 binutils-common amd64 2.30-21ubuntu1~18.04.5 [197 kB] Get:4 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 libbinutils amd64 2.30-21ubuntu1~18.04.5 [489 kB] Get:5 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 binutils-x86-64-linux-gnu amd64 2.30-21ubuntu1~18.04.5 [1,839 kB] Get:6 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 binutils amd64 2.30-21ubuntu1~18.04.5 [3,388 B] Get:7 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 libjaeger amd64 16.2.5-1bionic [3,780 B] Get:8 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 libnl-route-3-200 amd64 3.2.29-0ubuntu3 [146 kB] Get:9 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 libibverbs1 amd64 17.1-1ubuntu0.2 [44.4 kB] Get:10 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 liburcu6 amd64 0.10.1-1ubuntu1 [52.2 kB] Get:11 https://mirrors.tuna.tsinghua.edu.cn/ubuntu 
bionic/universe amd64 liblttng-ust-ctl4 amd64 2.10.1-1 [80.8 kB] Get:12 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 liblttng-ust0 amd64 2.10.1-1 [154 kB] Get:13 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 librdmacm1 amd64 17.1-1ubuntu0.2 [56.1 kB] Get:14 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 librados2 amd64 16.2.5-1bionic [3,175 kB] Get:15 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 libaio1 amd64 0.3.110-5ubuntu0.1 [6,476 B] Get:16 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 librbd1 amd64 16.2.5-1bionic [3,125 kB] Get:17 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 libcephfs2 amd64 16.2.5-1bionic [671 kB] Get:18 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 python3-rados amd64 16.2.5-1bionic [339 kB] Get:19 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 python3-ceph-argparse all 16.2.5-1bionic [21.9 kB] Get:20 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 python3-cephfs amd64 16.2.5-1bionic [177 kB] Get:21 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 python3-ceph-common all 16.2.5-1bionic [30.8 kB] Get:22 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 python3-prettytable all 0.7.2-3 [19.7 kB] Get:23 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 python3-rbd amd64 16.2.5-1bionic [336 kB] Get:24 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 liblua5.3-0 amd64 5.3.3-1ubuntu0.18.04.1 [115 kB] Get:25 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/universe amd64 librabbitmq4 amd64 0.8.0-1ubuntu0.18.04.2 [33.9 kB] Get:26 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 librdkafka1 amd64 0.11.3-1build1 [293 kB] Get:27 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 
librgw2 amd64 16.2.5-1bionic [3,394 kB] Get:28 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 python3-rgw amd64 16.2.5-1bionic [99.4 kB] Get:29 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 libdw1 amd64 0.170-0.4ubuntu0.1 [203 kB] Get:30 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 libbabeltrace1 amd64 1.5.5-1 [154 kB] Get:31 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 libtcmalloc-minimal4 amd64 2.5-2.2ubuntu3 [91.6 kB] Get:32 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 libgoogle-perftools4 amd64 2.5-2.2ubuntu3 [190 kB] Get:33 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 libsnappy1v5 amd64 1.1.7-1 [16.0 kB] Get:34 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 libleveldb1v5 amd64 1.20-2 [136 kB] Get:35 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 liboath0 amd64 2.6.1-1 [44.7 kB] Get:36 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 libradosstriper1 amd64 16.2.5-1bionic [415 kB] Get:37 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 ceph-common amd64 16.2.5-1bionic [21.3 MB] Get:38 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 ceph-base amd64 16.2.5-1bionic [5,630 kB] Get:39 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 ceph-fuse amd64 16.2.5-1bionic [777 kB] Get:40 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 ceph-mds amd64 16.2.5-1bionic [2,159 kB] Get:41 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 ceph-mon amd64 16.2.5-1bionic [6,680 kB] Get:42 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 libgc1c2 amd64 1:7.4.2-8ubuntu1 [81.8 kB] Get:43 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 libltdl7 amd64 2.4.6-2 [38.8 kB] Get:44 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 guile-2.0-libs 
amd64 2.0.13+1-5ubuntu0.1 [2,218 kB] Get:45 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 ibverbs-providers amd64 17.1-1ubuntu0.2 [160 kB] Get:46 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 libntlm0 amd64 1.4-8 [13.6 kB] Get:47 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 libgsasl7 amd64 1.8.0-8ubuntu3 [118 kB] Get:48 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 libkyotocabinet16v5 amd64 1.2.76-4.2 [292 kB] Get:49 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 mailutils-common all 1:3.4-1 [269 kB] Get:50 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 mysql-common all 5.8+1.0.4 [7,308 B] Get:51 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 libmysqlclient20 amd64 5.7.35-0ubuntu0.18.04.1 [691 kB] Get:52 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 libpython2.7 amd64 2.7.17-1~18.04ubuntu1.6 [1,053 kB] Get:53 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 libmailutils5 amd64 1:3.4-1 [457 kB] Get:54 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/main amd64 ssl-cert all 1.0.39 [17.0 kB] Get:55 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 postfix amd64 3.3.0-1ubuntu0.3 [1,148 kB] Get:56 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 mailutils amd64 1:3.4-1 [140 kB] Get:57 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 nvme-cli amd64 1.5-1ubuntu1.1 [184 kB] Get:58 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 smartmontools amd64 6.5+svn4324-1ubuntu0.1 [477 kB] Get:59 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/universe amd64 sntp amd64 1:4.2.8p10+dfsg-5ubuntu7.3 [86.5 kB] Fetched 60.9 MB in 7s (8,850 kB/s) Extracting templates from packages: 100% Preconfiguring packages ... Selecting previously unselected package libopts25:amd64. (Reading database ... 
68224 files and directories currently installed.) Preparing to unpack .../00-libopts25_1%3a5.18.12-4_amd64.deb ... Unpacking libopts25:amd64 (1:5.18.12-4) ... Selecting previously unselected package ntp. Preparing to unpack .../01-ntp_1%3a4.2.8p10+dfsg-5ubuntu7.3_amd64.deb ... Unpacking ntp (1:4.2.8p10+dfsg-5ubuntu7.3) ... Selecting previously unselected package binutils-common:amd64. Preparing to unpack .../02-binutils-common_2.30-21ubuntu1~18.04.5_amd64.deb ... Unpacking binutils-common:amd64 (2.30-21ubuntu1~18.04.5) ... Selecting previously unselected package libbinutils:amd64. Preparing to unpack .../03-libbinutils_2.30-21ubuntu1~18.04.5_amd64.deb ... Unpacking libbinutils:amd64 (2.30-21ubuntu1~18.04.5) ... Selecting previously unselected package binutils-x86-64-linux-gnu. Preparing to unpack .../04-binutils-x86-64-linux-gnu_2.30-21ubuntu1~18.04.5_amd64.deb ... Unpacking binutils-x86-64-linux-gnu (2.30-21ubuntu1~18.04.5) ... Selecting previously unselected package binutils. Preparing to unpack .../05-binutils_2.30-21ubuntu1~18.04.5_amd64.deb ... Unpacking binutils (2.30-21ubuntu1~18.04.5) ... Selecting previously unselected package libjaeger. Preparing to unpack .../06-libjaeger_16.2.5-1bionic_amd64.deb ... Unpacking libjaeger (16.2.5-1bionic) ... Selecting previously unselected package libnl-route-3-200:amd64. Preparing to unpack .../07-libnl-route-3-200_3.2.29-0ubuntu3_amd64.deb ... Unpacking libnl-route-3-200:amd64 (3.2.29-0ubuntu3) ... Selecting previously unselected package libibverbs1:amd64. Preparing to unpack .../08-libibverbs1_17.1-1ubuntu0.2_amd64.deb ... Unpacking libibverbs1:amd64 (17.1-1ubuntu0.2) ... Selecting previously unselected package liburcu6:amd64. Preparing to unpack .../09-liburcu6_0.10.1-1ubuntu1_amd64.deb ... Unpacking liburcu6:amd64 (0.10.1-1ubuntu1) ... Selecting previously unselected package liblttng-ust-ctl4:amd64. Preparing to unpack .../10-liblttng-ust-ctl4_2.10.1-1_amd64.deb ... Unpacking liblttng-ust-ctl4:amd64 (2.10.1-1) ... 
Selecting previously unselected package liblttng-ust0:amd64. Preparing to unpack .../11-liblttng-ust0_2.10.1-1_amd64.deb ... Unpacking liblttng-ust0:amd64 (2.10.1-1) ... Selecting previously unselected package librdmacm1:amd64. Preparing to unpack .../12-librdmacm1_17.1-1ubuntu0.2_amd64.deb ... Unpacking librdmacm1:amd64 (17.1-1ubuntu0.2) ... Selecting previously unselected package librados2. Preparing to unpack .../13-librados2_16.2.5-1bionic_amd64.deb ... Unpacking librados2 (16.2.5-1bionic) ... Selecting previously unselected package libaio1:amd64. Preparing to unpack .../14-libaio1_0.3.110-5ubuntu0.1_amd64.deb ... Unpacking libaio1:amd64 (0.3.110-5ubuntu0.1) ... Selecting previously unselected package librbd1. Preparing to unpack .../15-librbd1_16.2.5-1bionic_amd64.deb ... Unpacking librbd1 (16.2.5-1bionic) ... Selecting previously unselected package libcephfs2. Preparing to unpack .../16-libcephfs2_16.2.5-1bionic_amd64.deb ... Unpacking libcephfs2 (16.2.5-1bionic) ... Selecting previously unselected package python3-rados. Preparing to unpack .../17-python3-rados_16.2.5-1bionic_amd64.deb ... Unpacking python3-rados (16.2.5-1bionic) ... Selecting previously unselected package python3-ceph-argparse. Preparing to unpack .../18-python3-ceph-argparse_16.2.5-1bionic_all.deb ... Unpacking python3-ceph-argparse (16.2.5-1bionic) ... Selecting previously unselected package python3-cephfs. Preparing to unpack .../19-python3-cephfs_16.2.5-1bionic_amd64.deb ... Unpacking python3-cephfs (16.2.5-1bionic) ... Selecting previously unselected package python3-ceph-common. Preparing to unpack .../20-python3-ceph-common_16.2.5-1bionic_all.deb ... Unpacking python3-ceph-common (16.2.5-1bionic) ... Selecting previously unselected package python3-prettytable. Preparing to unpack .../21-python3-prettytable_0.7.2-3_all.deb ... Unpacking python3-prettytable (0.7.2-3) ... Selecting previously unselected package python3-rbd. 
Preparing to unpack .../22-python3-rbd_16.2.5-1bionic_amd64.deb ... Unpacking python3-rbd (16.2.5-1bionic) ... Selecting previously unselected package liblua5.3-0:amd64. Preparing to unpack .../23-liblua5.3-0_5.3.3-1ubuntu0.18.04.1_amd64.deb ... Unpacking liblua5.3-0:amd64 (5.3.3-1ubuntu0.18.04.1) ... Selecting previously unselected package librabbitmq4:amd64. Preparing to unpack .../24-librabbitmq4_0.8.0-1ubuntu0.18.04.2_amd64.deb ... Unpacking librabbitmq4:amd64 (0.8.0-1ubuntu0.18.04.2) ... Selecting previously unselected package librdkafka1:amd64. Preparing to unpack .../25-librdkafka1_0.11.3-1build1_amd64.deb ... Unpacking librdkafka1:amd64 (0.11.3-1build1) ... Selecting previously unselected package librgw2. Preparing to unpack .../26-librgw2_16.2.5-1bionic_amd64.deb ... Unpacking librgw2 (16.2.5-1bionic) ... Selecting previously unselected package python3-rgw. Preparing to unpack .../27-python3-rgw_16.2.5-1bionic_amd64.deb ... Unpacking python3-rgw (16.2.5-1bionic) ... Selecting previously unselected package libdw1:amd64. Preparing to unpack .../28-libdw1_0.170-0.4ubuntu0.1_amd64.deb ... Unpacking libdw1:amd64 (0.170-0.4ubuntu0.1) ... Selecting previously unselected package libbabeltrace1:amd64. Preparing to unpack .../29-libbabeltrace1_1.5.5-1_amd64.deb ... Unpacking libbabeltrace1:amd64 (1.5.5-1) ... Selecting previously unselected package libtcmalloc-minimal4. Preparing to unpack .../30-libtcmalloc-minimal4_2.5-2.2ubuntu3_amd64.deb ... Unpacking libtcmalloc-minimal4 (2.5-2.2ubuntu3) ... Selecting previously unselected package libgoogle-perftools4. Preparing to unpack .../31-libgoogle-perftools4_2.5-2.2ubuntu3_amd64.deb ... Unpacking libgoogle-perftools4 (2.5-2.2ubuntu3) ... Selecting previously unselected package libsnappy1v5:amd64. Preparing to unpack .../32-libsnappy1v5_1.1.7-1_amd64.deb ... Unpacking libsnappy1v5:amd64 (1.1.7-1) ... Selecting previously unselected package libleveldb1v5:amd64. Preparing to unpack .../33-libleveldb1v5_1.20-2_amd64.deb ... 
Unpacking libleveldb1v5:amd64 (1.20-2) ... Selecting previously unselected package liboath0. Preparing to unpack .../34-liboath0_2.6.1-1_amd64.deb ... Unpacking liboath0 (2.6.1-1) ... Selecting previously unselected package libradosstriper1. Preparing to unpack .../35-libradosstriper1_16.2.5-1bionic_amd64.deb ... Unpacking libradosstriper1 (16.2.5-1bionic) ... Selecting previously unselected package ceph-common. Preparing to unpack .../36-ceph-common_16.2.5-1bionic_amd64.deb ... Unpacking ceph-common (16.2.5-1bionic) ... Selecting previously unselected package ceph-base. Preparing to unpack .../37-ceph-base_16.2.5-1bionic_amd64.deb ... Unpacking ceph-base (16.2.5-1bionic) ... Selecting previously unselected package ceph-fuse. Preparing to unpack .../38-ceph-fuse_16.2.5-1bionic_amd64.deb ... Unpacking ceph-fuse (16.2.5-1bionic) ... Selecting previously unselected package ceph-mds. Preparing to unpack .../39-ceph-mds_16.2.5-1bionic_amd64.deb ... Unpacking ceph-mds (16.2.5-1bionic) ... Selecting previously unselected package ceph-mon. Preparing to unpack .../40-ceph-mon_16.2.5-1bionic_amd64.deb ... Unpacking ceph-mon (16.2.5-1bionic) ... Selecting previously unselected package libgc1c2:amd64. Preparing to unpack .../41-libgc1c2_1%3a7.4.2-8ubuntu1_amd64.deb ... Unpacking libgc1c2:amd64 (1:7.4.2-8ubuntu1) ... Selecting previously unselected package libltdl7:amd64. Preparing to unpack .../42-libltdl7_2.4.6-2_amd64.deb ... Unpacking libltdl7:amd64 (2.4.6-2) ... Selecting previously unselected package guile-2.0-libs:amd64. Preparing to unpack .../43-guile-2.0-libs_2.0.13+1-5ubuntu0.1_amd64.deb ... Unpacking guile-2.0-libs:amd64 (2.0.13+1-5ubuntu0.1) ... Selecting previously unselected package ibverbs-providers:amd64. Preparing to unpack .../44-ibverbs-providers_17.1-1ubuntu0.2_amd64.deb ... Unpacking ibverbs-providers:amd64 (17.1-1ubuntu0.2) ... Selecting previously unselected package libntlm0:amd64. Preparing to unpack .../45-libntlm0_1.4-8_amd64.deb ... 
Unpacking libntlm0:amd64 (1.4-8) ... Selecting previously unselected package libgsasl7:amd64. Preparing to unpack .../46-libgsasl7_1.8.0-8ubuntu3_amd64.deb ... Unpacking libgsasl7:amd64 (1.8.0-8ubuntu3) ... Selecting previously unselected package libkyotocabinet16v5:amd64. Preparing to unpack .../47-libkyotocabinet16v5_1.2.76-4.2_amd64.deb ... Unpacking libkyotocabinet16v5:amd64 (1.2.76-4.2) ... Selecting previously unselected package mailutils-common. Preparing to unpack .../48-mailutils-common_1%3a3.4-1_all.deb ... Unpacking mailutils-common (1:3.4-1) ... Selecting previously unselected package mysql-common. Preparing to unpack .../49-mysql-common_5.8+1.0.4_all.deb ... Unpacking mysql-common (5.8+1.0.4) ... Selecting previously unselected package libmysqlclient20:amd64. Preparing to unpack .../50-libmysqlclient20_5.7.35-0ubuntu0.18.04.1_amd64.deb ... Unpacking libmysqlclient20:amd64 (5.7.35-0ubuntu0.18.04.1) ... Selecting previously unselected package libpython2.7:amd64. Preparing to unpack .../51-libpython2.7_2.7.17-1~18.04ubuntu1.6_amd64.deb ... Unpacking libpython2.7:amd64 (2.7.17-1~18.04ubuntu1.6) ... Selecting previously unselected package libmailutils5:amd64. Preparing to unpack .../52-libmailutils5_1%3a3.4-1_amd64.deb ... Unpacking libmailutils5:amd64 (1:3.4-1) ... Selecting previously unselected package ssl-cert. Preparing to unpack .../53-ssl-cert_1.0.39_all.deb ... Unpacking ssl-cert (1.0.39) ... Selecting previously unselected package postfix. Preparing to unpack .../54-postfix_3.3.0-1ubuntu0.3_amd64.deb ... Unpacking postfix (3.3.0-1ubuntu0.3) ... Selecting previously unselected package mailutils. Preparing to unpack .../55-mailutils_1%3a3.4-1_amd64.deb ... Unpacking mailutils (1:3.4-1) ... Selecting previously unselected package nvme-cli. Preparing to unpack .../56-nvme-cli_1.5-1ubuntu1.1_amd64.deb ... Unpacking nvme-cli (1.5-1ubuntu1.1) ... Selecting previously unselected package smartmontools. 
Preparing to unpack .../57-smartmontools_6.5+svn4324-1ubuntu0.1_amd64.deb ...
Unpacking smartmontools (6.5+svn4324-1ubuntu0.1) ...
Selecting previously unselected package sntp.
Preparing to unpack .../58-sntp_1%3a4.2.8p10+dfsg-5ubuntu7.3_amd64.deb ...
Unpacking sntp (1:4.2.8p10+dfsg-5ubuntu7.3) ...
Setting up librdkafka1:amd64 (0.11.3-1build1) ...
Setting up libdw1:amd64 (0.170-0.4ubuntu0.1) ...
Setting up python3-ceph-argparse (16.2.5-1bionic) ...
Setting up mysql-common (5.8+1.0.4) ...
update-alternatives: using /etc/mysql/my.cnf.fallback to provide /etc/mysql/my.cnf (my.cnf) in auto mode
Setting up libgc1c2:amd64 (1:7.4.2-8ubuntu1) ...
Setting up libnl-route-3-200:amd64 (3.2.29-0ubuntu3) ...
Setting up ssl-cert (1.0.39) ...
Setting up smartmontools (6.5+svn4324-1ubuntu0.1) ...
Created symlink /etc/systemd/system/multi-user.target.wants/smartd.service → /lib/systemd/system/smartd.service.
Setting up liburcu6:amd64 (0.10.1-1ubuntu1) ...
Setting up nvme-cli (1.5-1ubuntu1.1) ...
Setting up python3-prettytable (0.7.2-3) ...
Setting up binutils-common:amd64 (2.30-21ubuntu1~18.04.5) ...
Setting up liblttng-ust-ctl4:amd64 (2.10.1-1) ...
Setting up libtcmalloc-minimal4 (2.5-2.2ubuntu3) ...
Setting up libntlm0:amd64 (1.4-8) ...
Setting up python3-ceph-common (16.2.5-1bionic) ...
Setting up libgoogle-perftools4 (2.5-2.2ubuntu3) ...
Setting up libaio1:amd64 (0.3.110-5ubuntu0.1) ...
Setting up libsnappy1v5:amd64 (1.1.7-1) ...
Setting up libltdl7:amd64 (2.4.6-2) ...
Setting up libpython2.7:amd64 (2.7.17-1~18.04ubuntu1.6) ...
Setting up libopts25:amd64 (1:5.18.12-4) ...
Setting up libjaeger (16.2.5-1bionic) ...
Setting up libmysqlclient20:amd64 (5.7.35-0ubuntu0.18.04.1) ...
Setting up liboath0 (2.6.1-1) ...
Setting up librabbitmq4:amd64 (0.8.0-1ubuntu0.18.04.2) ...
Setting up liblttng-ust0:amd64 (2.10.1-1) ...
Setting up liblua5.3-0:amd64 (5.3.3-1ubuntu0.18.04.1) ...
Setting up libkyotocabinet16v5:amd64 (1.2.76-4.2) ...
Setting up libbabeltrace1:amd64 (1.5.5-1) ...
Setting up postfix (3.3.0-1ubuntu0.3) ...
Created symlink /etc/systemd/system/multi-user.target.wants/postfix.service → /lib/systemd/system/postfix.service.
Adding group `postfix' (GID 116) ...
Done.
Adding system user `postfix' (UID 111) ...
Adding new user `postfix' (UID 111) with group `postfix' ...
Not creating home directory `/var/spool/postfix'.
Creating /etc/postfix/dynamicmaps.cf
Adding group `postdrop' (GID 117) ...
Done.
setting myhostname: node01
setting alias maps
setting alias database
mailname is not a fully qualified domain name. Not changing /etc/mailname.
setting destinations: $myhostname, node01, localhost.localdomain, , localhost
setting relayhost:
setting mynetworks: 127.0.0.0/8 [::ffff:127.0.0.0]/104 [::1]/128
setting mailbox_size_limit: 0
setting recipient_delimiter: +
setting inet_interfaces: all
setting inet_protocols: all
/etc/aliases does not exist, creating it.
WARNING: /etc/aliases exists, but does not have a root alias.
Postfix (main.cf) is now set up with a default configuration. If you need to make changes, edit /etc/postfix/main.cf (and others) as needed. To view Postfix configuration values, see postconf(1).
After modifying main.cf, be sure to run 'service postfix reload'.
Running newaliases
Setting up mailutils-common (1:3.4-1) ...
Setting up libgsasl7:amd64 (1.8.0-8ubuntu3) ...
Setting up libibverbs1:amd64 (17.1-1ubuntu0.2) ...
Setting up sntp (1:4.2.8p10+dfsg-5ubuntu7.3) ...
Setting up libbinutils:amd64 (2.30-21ubuntu1~18.04.5) ...
Setting up ntp (1:4.2.8p10+dfsg-5ubuntu7.3) ...
Created symlink /etc/systemd/system/network-pre.target.wants/ntp-systemd-netif.path → /lib/systemd/system/ntp-systemd-netif.path.
Created symlink /etc/systemd/system/multi-user.target.wants/ntp.service → /lib/systemd/system/ntp.service.
ntp-systemd-netif.service is a disabled or a static unit, not starting it.
Setting up librdmacm1:amd64 (17.1-1ubuntu0.2) ...
Setting up libleveldb1v5:amd64 (1.20-2) ...
Setting up librados2 (16.2.5-1bionic) ...
Setting up libcephfs2 (16.2.5-1bionic) ...
Setting up ibverbs-providers:amd64 (17.1-1ubuntu0.2) ...
Setting up guile-2.0-libs:amd64 (2.0.13+1-5ubuntu0.1) ...
Setting up python3-rados (16.2.5-1bionic) ...
Setting up binutils-x86-64-linux-gnu (2.30-21ubuntu1~18.04.5) ...
Setting up libmailutils5:amd64 (1:3.4-1) ...
Setting up libradosstriper1 (16.2.5-1bionic) ...
Setting up python3-cephfs (16.2.5-1bionic) ...
Setting up librgw2 (16.2.5-1bionic) ...
Setting up ceph-fuse (16.2.5-1bionic) ...
Created symlink /etc/systemd/system/remote-fs.target.wants/ceph-fuse.target → /lib/systemd/system/ceph-fuse.target.
Created symlink /etc/systemd/system/ceph.target.wants/ceph-fuse.target → /lib/systemd/system/ceph-fuse.target.
Setting up librbd1 (16.2.5-1bionic) ...
Setting up mailutils (1:3.4-1) ...
update-alternatives: using /usr/bin/frm.mailutils to provide /usr/bin/frm (frm) in auto mode
update-alternatives: using /usr/bin/from.mailutils to provide /usr/bin/from (from) in auto mode
update-alternatives: using /usr/bin/messages.mailutils to provide /usr/bin/messages (messages) in auto mode
update-alternatives: using /usr/bin/movemail.mailutils to provide /usr/bin/movemail (movemail) in auto mode
update-alternatives: using /usr/bin/readmsg.mailutils to provide /usr/bin/readmsg (readmsg) in auto mode
update-alternatives: using /usr/bin/dotlock.mailutils to provide /usr/bin/dotlock (dotlock) in auto mode
update-alternatives: using /usr/bin/mail.mailutils to provide /usr/bin/mailx (mailx) in auto mode
Setting up binutils (2.30-21ubuntu1~18.04.5) ...
Setting up python3-rgw (16.2.5-1bionic) ...
Setting up python3-rbd (16.2.5-1bionic) ...
Setting up ceph-common (16.2.5-1bionic) ...
Adding group ceph....done
Adding system user ceph....done
Setting system user ceph properties....done
chown: cannot access '/var/log/ceph/*.log*': No such file or directory
Created symlink /etc/systemd/system/multi-user.target.wants/ceph.target → /lib/systemd/system/ceph.target.
Created symlink /etc/systemd/system/multi-user.target.wants/rbdmap.service → /lib/systemd/system/rbdmap.service.
Setting up ceph-base (16.2.5-1bionic) ...
Created symlink /etc/systemd/system/ceph.target.wants/ceph-crash.service → /lib/systemd/system/ceph-crash.service.
Setting up ceph-mds (16.2.5-1bionic) ...
Created symlink /etc/systemd/system/multi-user.target.wants/ceph-mds.target → /lib/systemd/system/ceph-mds.target.
Created symlink /etc/systemd/system/ceph.target.wants/ceph-mds.target → /lib/systemd/system/ceph-mds.target.
Setting up ceph-mon (16.2.5-1bionic) ...
Created symlink /etc/systemd/system/multi-user.target.wants/ceph-mon.target → /lib/systemd/system/ceph-mon.target.
Created symlink /etc/systemd/system/ceph.target.wants/ceph-mon.target → /lib/systemd/system/ceph-mon.target.
Processing triggers for systemd (237-3ubuntu10.42) ...
Processing triggers for man-db (2.8.3-2ubuntu0.1) ...
Processing triggers for rsyslog (8.32.0-1ubuntu4) ...
Processing triggers for ufw (0.36-0ubuntu0.18.04.1) ...
Processing triggers for ureadahead (0.100.0-21) ...
Processing triggers for libc-bin (2.27-3ubuntu1.2) ...
root@node01:~#

5. Initialize and install the three node hosts. All of the commands are run from the deploy node, as follows: